Celery best practices

2022-07-06 18:25:00 【Full stack programmer webmaster】

Hello everyone, we meet again. I'm the king of the full stack.

As a heavy user of Celery, I came across the article "Celery Best Practices" and couldn't resist translating it. Along the way I'll also add the practical Celery experience from our own projects.

As for what Celery is, see here: Celery.

When using Django, you often need to run long background tasks, and you may want a task queue that supports ordering. Celery is a very good choice for that.

Having used Celery as a task queue in many projects, the author has accumulated some best practices: how to use Celery properly, and some features Celery provides that are still underused.

1. Don't use the database as your AMQP broker

Databases are not inherently designed to be AMQP brokers. In a production environment, a database used this way is very likely to fall over at some point (PS: I don't think any system can guarantee it will never fail!).

The author guesses that many people use a database as the broker mainly because they already have one providing data storage for their web app, so they simply reuse it. Setting it as Celery's broker is easy, and there is no need to install other components (for example RabbitMQ).

Consider the following scenario: you have 4 backend workers fetching and processing tasks stored in the database. That means 4 processes polling the database frequently to get the latest tasks, and each worker may also run many concurrent threads of its own doing the same thing.

One day you find that, with so many tasks, 4 workers are no longer enough: tasks are being processed far more slowly than they are produced, so you keep adding workers. Suddenly your database responds slowly because of all the processes polling for tasks, disk I/O stays at its peak, and your web application starts to suffer too. All of this happens because the workers are effectively DDoSing the database.
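To get a feel for how quickly polling load grows as workers are added, here is a rough back-of-the-envelope sketch. The thread count per worker and the polling interval are assumptions made purely for illustration; the article gives no concrete figures, and real polling behaviour depends on the transport and its configuration.

```python
def polls_per_minute(workers: int, threads_per_worker: int, interval_s: float) -> int:
    """Queries per minute hitting the broker database from polling alone,
    even when there is not a single new task to pick up."""
    polls_per_thread = round(60 / interval_s)
    return workers * threads_per_worker * polls_per_thread


# Assumed for illustration: 8 threads per worker, one poll every 0.5 seconds.
for workers in (4, 16, 64):
    load = polls_per_minute(workers, threads_per_worker=8, interval_s=0.5)
    print(f"{workers} workers: {load} polling queries per minute")
```

Under these assumed numbers, 4 workers already issue 3,840 queries a minute, and the figure scales linearly with every worker added. That is the effect described above.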

None of this happens when you use a proper AMQP broker such as RabbitMQ. Taking RabbitMQ as an example: first, it keeps the task queue in memory, so you don't have to hit the hard disk. Second, consumers (the workers above) don't need to poll frequently, because RabbitMQ pushes new tasks to consumers.

Of course, if RabbitMQ itself does run into trouble, at least it won't affect your web application.

That is the author's reasoning for not using a database as the broker. Prebuilt RabbitMQ images are also available in many places and can be used directly, for example these.

I deeply agree with this point. Our system uses Celery heavily for asynchronous tasks, averaging millions of tasks a day. We used to use MySQL as the broker, and task processing delays were always too severe; even adding workers didn't help. So we switched to Redis, and performance improved a lot. As for why MySQL was so slow, we didn't dig into it; perhaps we really did run into the DDoS problem.
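For reference, pointing Celery at a dedicated broker is a one-line setting. The sketch below uses the old-style uppercase setting name to match the rest of this article (newer Celery versions prefer lowercase `broker_url`). The hosts, ports and credentials are assumptions for a local test setup, not values from the original article.

```python
# celeryconfig.py (settings sketch)

# RabbitMQ over AMQP. guest/guest on localhost:5672 is what a fresh local
# RabbitMQ install accepts; treat it as a placeholder, not a production value.
BROKER_URL = "amqp://guest:guest@localhost:5672//"

# Redis alternative, which is what the translator's team moved to.
# Host, port and database number 0 are likewise assumptions.
# BROKER_URL = "redis://localhost:6379/0"
```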

2. Use more queues (don't just use the default one)

Celery is very easy to set up, and by default it uses a single default queue to store tasks (unless you specify another queue). The usual way of writing tasks looks like this:

    @app.task()
    def my_taskA(a, b, c):
        print("doing something here...")

    @app.task()
    def my_taskB(x, y):
        print("doing something here...")

These two tasks will run in the same queue. Writing tasks this way is tempting, because a single decorator is all it takes to create an asynchronous task. The author's concern is that taskA and taskB may be doing two completely different things, or one may be far more important than the other, so why put them in the same basket? (You shouldn't put all your eggs in one basket, right?) Perhaps taskB isn't very important but there is a lot of it, so the important taskA ends up waiting and can't get picked up by a worker. Adding workers doesn't solve the problem, because taskA and taskB still run in the same queue.
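The blocking effect can be illustrated with a toy model that does not involve Celery at all. The sketch below treats a queue as a plain FIFO and counts how many tasks a worker has to pull before it reaches the important one. The backlog of 1000 `taskB` items is an assumed figure chosen for illustration.

```python
from collections import deque


def position_of_task_a(queue):
    """Return how many tasks a single worker must pull before it handles taskA."""
    for handled, name in enumerate(queue, start=1):
        if name == "taskA":
            return handled
    return None


# One shared queue: 1000 unimportant taskB items arrive first, then one taskA.
shared = deque(["taskB"] * 1000 + ["taskA"])

# Separate queues: taskA sits alone in its own queue.
queue_a = deque(["taskA"])
queue_b = deque(["taskB"] * 1000)

print("shared queue:", position_of_task_a(shared))
print("dedicated queue:", position_of_task_a(queue_a))
```

In the shared queue the important task is the 1001st item a worker gets to; with its own queue it is the first.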

3. Use priority workers

To solve the problem from point 2, we let taskA run in one queue, Q1, and taskB in another queue, Q2. We then assign x workers to process queue Q1 and use the other workers to process queue Q2. This way taskB gets enough workers, while the dedicated priority workers can handle taskA without a long wait.

First, define the queues manually:

    from kombu import Exchange, Queue

    CELERY_QUEUES = (
        Queue('default', Exchange('default'), routing_key='default'),
        Queue('for_task_A', Exchange('for_task_A'), routing_key='for_task_A'),
        Queue('for_task_B', Exchange('for_task_B'), routing_key='for_task_B'),
    )

Then define routes that decide which queue each task goes to:

    CELERY_ROUTES = {
        'my_taskA': {'queue': 'for_task_A', 'routing_key': 'for_task_A'},
        'my_taskB': {'queue': 'for_task_B', 'routing_key': 'for_task_B'},
    }

Finally, start a separate worker for each queue:

    celery worker -E -l INFO -n workerA -Q for_task_A
    celery worker -E -l INFO -n workerB -Q for_task_B

Our project involves a large amount of file conversion. There are many conversions of files smaller than 1 MB, along with a few conversions of files close to 20 MB. Small-file conversions have the highest priority and take little time, whereas large-file conversions are very time-consuming. If all conversion tasks were put in one queue, large-file conversions would likely hog the workers and delay the small-file conversions.

So we set up 3 priority queues according to file size, each with its own workers, which solved the file conversion problem nicely.
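A size-based split like this might be sketched as a small helper that picks the queue name before the task is sent. The queue names and the 1 MB / 10 MB thresholds below are hypothetical; the article only says that three queues were chosen by file size.

```python
MB = 1024 * 1024

# Hypothetical thresholds and queue names, for illustration only.
SIZE_QUEUES = (
    (1 * MB, "convert_small"),
    (10 * MB, "convert_medium"),
)
LARGE_QUEUE = "convert_large"


def queue_for_file(size_bytes: int) -> str:
    """Pick the queue name for a conversion task from the file size."""
    for limit, queue_name in SIZE_QUEUES:
        if size_bytes < limit:
            return queue_name
    return LARGE_QUEUE


print(queue_for_file(300 * 1024))
print(queue_for_file(5 * MB))
print(queue_for_file(20 * MB))
```

The returned name could then be passed as the `queue` option when the task is sent, for example `convert_file.apply_async(args=[file_id], queue=queue_for_file(size))`, where `convert_file` stands for whatever conversion task the project defines.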

4. Use Celery's error handling mechanism

Most tasks don't use any error handling: if the task fails, it simply fails. In some cases that's fine. But most of the failed tasks the author has seen were calling a third-party API and hit a network error or an unavailable resource. For errors like these the simplest remedy is to retry: the third-party API may have had a temporary service or network failure and may recover shortly, so why not try again?

    @app.task(bind=True, default_retry_delay=300, max_retries=5)
    def my_task_A(self):
        try:
            print("doing stuff here...")
        except SomeNetworkException as e:
            print("maybe do some cleanup here....")
            # Re-queue the task: wait default_retry_delay seconds, at most max_retries times
            raise self.retry(exc=e)

The author likes to define a default retry delay and a maximum number of retries for each task. There are more detailed parameters as well; see the documentation.
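To make the meaning of the two parameters concrete, here is a plain-Python sketch of the same retry policy. It is not how Celery implements retries (Celery re-queues the task through the broker rather than sleeping in a loop). The names `run_with_retries` and `flaky_api_call` are made up for this illustration.

```python
import time


class SomeNetworkException(Exception):
    """Stand-in for a transient failure such as a third-party API timeout."""


def run_with_retries(func, max_retries=5, retry_delay=300, sleep=time.sleep):
    """Call func(); on SomeNetworkException wait retry_delay seconds and retry.

    Re-raises the last error once max_retries retries have been used up,
    so func is called at most max_retries + 1 times.
    """
    attempt = 0
    while True:
        try:
            return func()
        except SomeNetworkException:
            if attempt >= max_retries:
                raise
            attempt += 1
            sleep(retry_delay)


calls = []


def flaky_api_call():
    """Hypothetical API call that fails twice and then succeeds."""
    calls.append(1)
    if len(calls) < 3:
        raise SomeNetworkException("temporary outage")
    return "ok"


# sleep is swapped for a no-op so the demo does not really wait 300 seconds.
result = run_with_retries(flaky_api_call, sleep=lambda seconds: None)
print(result, "after", len(calls), "calls")
```

The two failed attempts are absorbed by the retry policy and the third call succeeds. A task that fails on every attempt would still surface its error after the fifth retry.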

As for error handling in our case, our usage scenario is special: if a file conversion fails, it will fail no matter how many times you retry, so we did not add a retry mechanism.

5. Use Flower

Flower is a very powerful tool for monitoring Celery tasks and workers.

We don't use it much, because most of the time we connect directly to Redis to look at Celery-related information. That's admittedly rather clumsy, especially since the data Celery stores in Redis is not easy to extract.

6. Don't pay too much attention to task exit status

A task's status is the success-or-failure information recorded when the task ends. It can be useful for some statistics, but keep in mind that the exit status is not the result of the task's work. The results that actually matter to the program are usually written to the database (for example, updating a user's friend list).

Most projects the author has seen store the task exit status in SQLite or in their own database. But is it really necessary to keep it? Doing so may affect your web service, so the author usually sets CELERY_IGNORE_RESULT = True to discard it.

For us, since these are asynchronous tasks, knowing the status after a task has run is of no use, so we discard it without hesitation.

7. Don't pass Database/ORM objects to tasks

The point here is not to pass database objects (for example, a user instance) to a task, because the serialized data may already be stale by the time the task runs.

It's better to pass a user id directly and fetch the record from the database when the task runs.

We do the same: we pass only the relevant id to the task. For example, when converting a file we pass just the file id, and fetch the rest of the file information from the database using that id.
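The staleness problem is easy to reproduce without Celery or a real database. In the sketch below a dict stands in for the database and `json.dumps` stands in for the serialization that happens when a task is queued. The record layout and function names are hypothetical.

```python
import json

# Toy in-memory "database"; the table layout is made up for this example.
USERS = {42: {"id": 42, "email": "old@example.com"}}


def enqueue_with_object(user):
    """Serialize the whole record, as happens when an object is passed to a task."""
    return json.dumps(user)


def enqueue_with_id(user_id):
    """Serialize only the primary key."""
    return json.dumps({"user_id": user_id})


def task_using_object(message):
    # Works on the snapshot taken at enqueue time.
    return json.loads(message)["email"]


def task_using_id(message):
    # Reads the current row when the task actually runs.
    user_id = json.loads(message)["user_id"]
    return USERS[user_id]["email"]


msg_object = enqueue_with_object(USERS[42])
msg_id = enqueue_with_id(42)

# The record changes while both messages are still waiting in the queue.
USERS[42]["email"] = "new@example.com"

print("task given the object sees:", task_using_object(msg_object))
print("task given the id sees:", task_using_id(msg_id))
```

The task that received the serialized object still works with the old email address, while the task that received only the id picks up the current value.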

Finally

Now for our own impressions. The ways of using Celery that the author describes above really are good practice. At least for now our Celery setup has no major problems, though there are still a few small pitfalls. As for RabbitMQ, we really haven't used it and don't know how well it works; at least it should be easier to use than MySQL.

Finally, here is a Celery talk by the author: https://denibertovic.com/talks/celery-best-practices/.

Publisher: Full Stack Programmer webmaster. When reprinting, please credit the source: https://javaforall.cn/117403.html. Link to the original text: https://javaforall.cn

边栏推荐

- MSF horizontal MSF port forwarding + routing table +socks5+proxychains

- 文档编辑之markdown语法(typora)

- On time and parameter selection of asemi rectifier bridge db207

- The difference between parallelism and concurrency

- Maixll dock camera usage

- declval(指导函数返回值范例)

- SQL优化问题的简述

- Penetration test information collection - CDN bypass

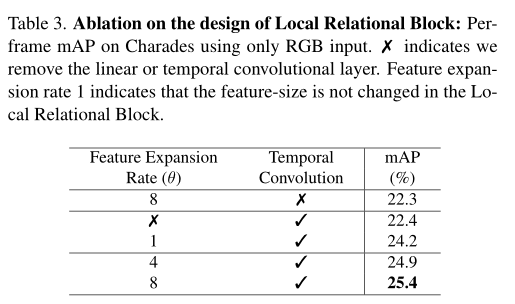

- MS-TCT:Inria&SBU提出用于动作检测的多尺度时间Transformer,效果SOTA!已开源!(CVPR2022)...

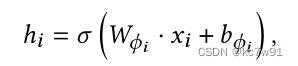

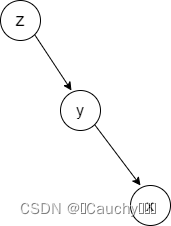

- Tree-LSTM的一些理解以及DGL代码实现

猜你喜欢

Self-supervised Heterogeneous Graph Neural Network with Co-contrastive Learning 论文阅读

Splay

![Jerry's updated equipment resource document [chapter]](/img/6c/17bd69b34c7b1bae32604977f6bc48.jpg)

Jerry's updated equipment resource document [chapter]

Blue Bridge Cup real question: one question with clear code, master three codes

微信为什么使用 SQLite 保存聊天记录?

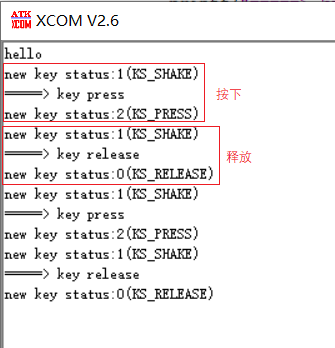

STM32 key state machine 2 - state simplification and long press function addition

Ms-tct: INRIA & SBU proposed a multi-scale time transformer for motion detection. The effect is SOTA! Open source! (CVPR2022)...

F200 - UAV equipped with domestic open source flight control system based on Model Design

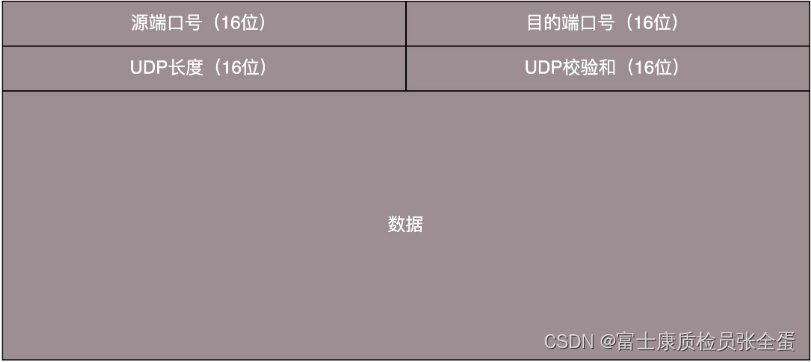

UDP协议:因性善而简单,难免碰到“城会玩”

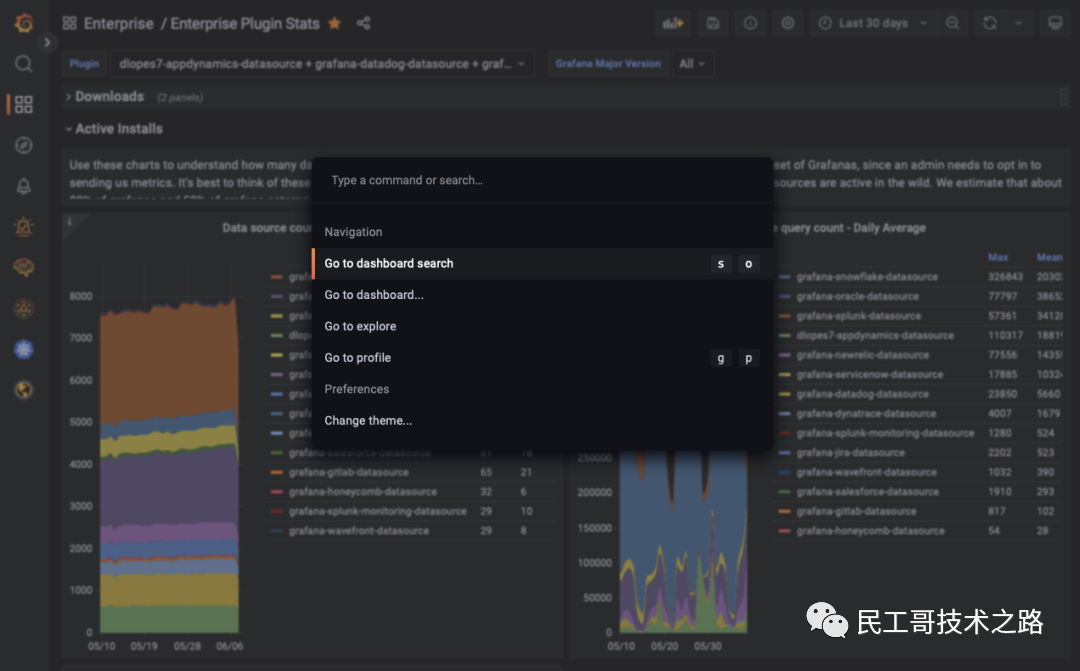

Grafana 9.0 is officially released! It's the strongest!

随机推荐

具体说明 Flume介绍、安装和配置

TCP packet sticking problem

Running the service with systemctl in the container reports an error: failed to get D-Bus connection: operation not permitted (solution)

Unity资源顺序加载的一个方法

2022 Summer Project Training (I)

bonecp使用数据源

STM32+ESP8266+MQTT协议连接OneNet物联网平台

用友OA漏洞学习——NCFindWeb 目录遍历漏洞

从交互模型中蒸馏知识!中科大&美团提出VIRT,兼具双塔模型的效率和交互模型的性能,在文本匹配上实现性能和效率的平衡!...

2022暑期项目实训(一)

Open source and safe "song of ice and fire"

D binding function

POJ 2208 已知边四面体六个长度,计算体积

Easy to use PDF to SVG program

Wchars, coding, standards and portability - wchars, encodings, standards and portability

模板于泛型编程之declval

The third season of Baidu online AI competition is coming in midsummer, looking for you who love AI!

Heavy! Ant open source trusted privacy computing framework "argot", flexible assembly of mainstream technologies, developer friendly layered design

重磅硬核 | 一文聊透对象在 JVM 中的内存布局,以及内存对齐和压缩指针的原理及应用

虚拟机VirtualBox和Vagrant安装