Audio and video development interview questions

2022-07-06 16:41:00 【Dog egg L】

Interview questions 1

Why can a huge raw video be encoded into a much smaller one? What techniques make this possible?

Raw video contains several kinds of redundancy that an encoder can remove:

1) Spatial redundancy: adjacent pixels within an image are strongly correlated.

2) Temporal redundancy: adjacent images in a video sequence have similar content.

3) Coding redundancy: different pixel values occur with different probabilities, so frequent values can be given shorter codes.

4) Visual redundancy: the human visual system is insensitive to certain details.

5) Knowledge redundancy: regular structure can be inferred from prior and background knowledge.
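As a rough illustration of temporal redundancy, the sketch below compresses a frame on its own and then compresses only its difference from the previous frame. The synthetic frames and the use of zlib as a stand-in for a real entropy coder are assumptions made for the example; no real video codec works exactly like this.

```python
import random
import zlib

def compressed_sizes(prev_frame: bytes, cur_frame: bytes):
    """Return (size of cur_frame compressed alone, size of its delta vs prev_frame)."""
    delta = bytes((c - p) % 256 for c, p in zip(cur_frame, prev_frame))
    return len(zlib.compress(cur_frame)), len(zlib.compress(delta))

rng = random.Random(0)
frame1 = bytes(rng.randrange(256) for _ in range(64 * 64))  # noisy 64x64 "image"
changed = bytearray(frame1)
for i in range(200):                 # only a small region changes between frames
    changed[i] = (changed[i] + 1) % 256
frame2 = bytes(changed)

alone, as_delta = compressed_sizes(frame1, frame2)
print("frame alone:", alone, "bytes; delta from previous frame:", as_delta, "bytes")
```

Because most of the delta is zero, it compresses far better than the frame itself, which is the intuition behind inter-frame prediction.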

Interview questions 2

How do you optimize a live stream so that it opens within a second?

- Slow DNS resolution: to reduce the impact of DNS resolution on startup, resolve the playback domain name to an IP address in advance and cache the result. When playback starts, pass in the playback URL that already contains the IP address, which saves the DNS resolution time. To support playback by IP address, you need to modify the underlying ffmpeg source code.

- Playback strategy: many on-demand players buffer a certain amount of data before sending it to the decoder, in order to reduce stalls. To speed up startup, adjust this buffering strategy: if the first frame has not been rendered yet, do not buffer at all and feed the data straight into the decoder. This ensures that no first-frame delay is introduced by "voluntary" buffering.

- Playback parameter settings: every ffmpeg-based player runs into the problem that avformat_find_stream_info takes a long time, which increases the startup time. The function reads a certain amount of stream data in order to analyze basic stream information such as codec, duration, bit rate and frame rate. Two parameters control how much data it reads and for how long: probesize and analyzeduration.

Reducing probesize and analyzeduration effectively shortens the time spent in avformat_find_stream_info and so speeds up startup. Be careful, though: if the values are too small, too little data may be read to parse the stream information, which causes playback failure, or playback with audio but no video, or video but no audio.

- Server optimization:

Server-side key frame caching

A nearest-node CDN strategy
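The DNS pre-resolution idea can be sketched as a small cache that is filled before the user taps play. The class name `DnsPrefetchCache`, the TTL value and the injectable `resolver` callable are all hypothetical choices for this sketch; in a real app the resolver could be something like `socket.gethostbyname`.

```python
import time
from typing import Callable, Dict, Tuple

class DnsPrefetchCache:
    """Caches hostname -> IP so playback can skip the DNS round trip."""

    def __init__(self, resolver: Callable[[str], str], ttl_seconds: float = 300.0):
        self._resolver = resolver
        self._ttl = ttl_seconds
        self._cache: Dict[str, Tuple[str, float]] = {}

    def prefetch(self, host: str) -> None:
        # Resolve ahead of time and remember when the entry expires.
        self._cache[host] = (self._resolver(host), time.monotonic() + self._ttl)

    def lookup(self, host: str) -> str:
        entry = self._cache.get(host)
        if entry is None or entry[1] < time.monotonic():
            self.prefetch(host)          # cache miss or expired: resolve now
            entry = self._cache[host]
        return entry[0]
```

A caller would run `prefetch` when the stream list is shown and `lookup` when playback starts, so the lookup is served from memory.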

Interview questions 3

What is the most important role of the histogram in image processing?

Definition of the gray-level histogram: a function of gray level that gives, for each gray level, the number of pixels in the image with that level, or the frequency with which that level occurs. It reflects the gray-level distribution of the image.

The gray-level histogram only reflects the gray-level distribution; it says nothing about where the pixels are, so all spatial information is lost.

Applications of the histogram:

a. Digitization parameters: check whether an image makes reasonable use of the full range of allowed gray levels. In general an image should use all, or almost all, of the possible gray levels; otherwise the effective quantization interval is larger than necessary, and the information lost that way cannot be recovered.

b. Boundary threshold selection (choosing the threshold for image binarization): if the gray-level histogram has two peaks, the brighter and darker regions of the image can be separated well. Taking the valley between the two peaks as the threshold gives a good binarization result (separating objects from the background).

c. When the gray values of an object are larger than those of the rest of the image, the histogram can be used to measure the object's area in the image.

d. Computing the information content (entropy) H of the image.
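A minimal sketch of the histogram and of the entropy H, assuming the image is given as a flat list of gray levels (the function names are illustrative only):

```python
import math
from collections import Counter

def gray_histogram(pixels):
    """Count how many pixels have each gray level (positions are discarded)."""
    return Counter(pixels)

def entropy_bits(pixels):
    """Shannon entropy H = -sum(p * log2(p)) over the gray-level frequencies."""
    hist = gray_histogram(pixels)
    total = len(pixels)
    return -sum((n / total) * math.log2(n / total) for n in hist.values())

image = [0, 0, 255, 255, 0, 255, 0, 255]   # flattened 8-pixel image, two gray levels
print(gray_histogram(image))
print(entropy_bits(image))
```

Shuffling `image` leaves both results unchanged, which shows concretely that the histogram carries no spatial information.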

Interview questions 4

What are the methods of digital image filtering?

Mean filtering (neighborhood averaging), median filtering (removes isolated noise points), Gaussian filtering (a linear smoothing filter that suppresses Gaussian noise by taking a weighted average over the image: each pixel's value is replaced by the weighted average of itself and the other pixels in its neighborhood), KNN filtering, high-pass filtering, low-pass filtering, and so on.
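To make the difference between mean and median filtering concrete, here is a small one-dimensional sketch. A 1-D signal and clamped edges are simplifying assumptions; real image filters work on 2-D neighborhoods.

```python
from statistics import median

def filter_1d(signal, size, reducer):
    """Slide a window of odd `size` over `signal`; edges are handled by clamping."""
    half = size // 2
    last = len(signal) - 1
    out = []
    for i in range(len(signal)):
        window = [signal[min(max(j, 0), last)] for j in range(i - half, i + half + 1)]
        out.append(reducer(window))
    return out

def mean(values):
    return sum(values) / len(values)

noisy = [10, 10, 10, 200, 10, 10, 10]      # one isolated impulse noise sample
print(filter_1d(noisy, 3, median))         # the impulse is removed entirely
print(filter_1d(noisy, 3, mean))           # the impulse is smeared over its neighbors
```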

Interview questions 5

What features can be extracted from an image?

Color, texture (coarseness, directionality, contrast), shape (curvature, eccentricity, principal-axis direction), and so on.

Interview questions 6

What are the criteria for measuring the quality of image reconstruction? How are they calculated?

SNR (signal-to-noise ratio)

PSNR (peak signal-to-noise ratio): $\mathrm{PSNR} = 10 \log_{10}\left(\frac{(2^n - 1)^2}{\mathrm{MSE}}\right)$, where n is the number of bits per sample and MSE is the mean squared error between the original image and the processed image, so computing PSNR requires the data of both images.

SSIM (structural similarity measures the similarity of two images in three respects: luminance, contrast and structure)
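The PSNR formula can be sketched directly in code. The flat-list image representation and the function names are assumptions for the example; `bit_depth` plays the role of n.

```python
import math

def mse(original, processed):
    """Mean squared error between two images given as equal-length sample lists."""
    if len(original) != len(processed):
        raise ValueError("images must have the same number of samples")
    return sum((a - b) ** 2 for a, b in zip(original, processed)) / len(original)

def psnr(original, processed, bit_depth=8):
    """PSNR in dB; identical images give infinity because MSE is zero."""
    error = mse(original, processed)
    if error == 0:
        return math.inf
    peak = (1 << bit_depth) - 1          # 2^n - 1, e.g. 255 for 8-bit samples
    return 10 * math.log10(peak ** 2 / error)

print(psnr([10, 20, 30, 40], [11, 19, 31, 39]))   # MSE of 1 on 8-bit data
```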

Interview questions 7

What is the difference between AAC and PCM?

PCM is raw, uncompressed sample data. AAC is compressed audio, and AAC data is preceded by a header carrying parameters such as the sampling rate, the channel count and the sample size.
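One common AAC header is the ADTS header that precedes each frame in a raw .aac stream. The sketch below reads the sampling rate and channel configuration from it, assuming ADTS framing (AAC inside MP4 stores these parameters elsewhere); the function name is illustrative. A PCM buffer has no such header, so its parameters must be supplied out of band.

```python
ADTS_SAMPLE_RATES = [96000, 88200, 64000, 48000, 44100, 32000, 24000,
                     22050, 16000, 12000, 11025, 8000, 7350]

def parse_adts_header(header: bytes):
    """Return (sample_rate, channel_configuration) from the start of an ADTS frame."""
    # The first 12 bits are the syncword 0xFFF.
    if len(header) < 4 or header[0] != 0xFF or (header[1] & 0xF0) != 0xF0:
        raise ValueError("not an ADTS syncword")
    rate_index = (header[2] >> 2) & 0x0F                      # 4-bit frequency index
    channels = ((header[2] & 0x01) << 2) | (header[3] >> 6)   # 3-bit channel config
    if rate_index >= len(ADTS_SAMPLE_RATES):
        raise ValueError("reserved sampling frequency index")
    return ADTS_SAMPLE_RATES[rate_index], channels

print(parse_adts_header(bytes([0xFF, 0xF1, 0x50, 0x80])))
```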

Interview questions 8

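What are the two storage forms of H264?

a. Annex B:

Start code: each NALU is preceded by a start code, usually 0x00000001 or 0x000001.

Emulation prevention byte: so that payload data cannot be mistaken for a start code, an 0x03 byte is inserted after two consecutive zero bytes in the payload; for example, 00 00 01 is written as 00 00 03 01.

It is mostly used for streamed byte streams, for example raw .h264 elementary streams and MPEG-TS. (RTMP, by contrast, carries length-prefixed NALUs inside FLV tags.)

b. AVCC:

Each NALU is preceded by a length prefix, which may occupy 4, 2 or 1 bytes and stores the length of the NALU.

Emulation prevention bytes are used here as well.

It is mostly used in file storage formats such as mp4.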
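The relationship between the two forms can be sketched by splitting an Annex B byte stream on its start codes and re-emitting each NALU with a length prefix. This is a simplified sketch: it assumes a 4-byte big-endian length and ignores the separate configuration record that mp4 also needs; the function names are illustrative.

```python
import struct
from typing import List

START_CODE = b"\x00\x00\x01"   # a 4-byte start code is this preceded by one more 0x00

def split_annexb(stream: bytes) -> List[bytes]:
    """Return the NALUs found between start codes in an Annex B byte stream."""
    nalus = []
    pos = stream.find(START_CODE)
    while pos != -1:
        start = pos + len(START_CODE)
        nxt = stream.find(START_CODE, start)
        end = len(stream) if nxt == -1 else nxt
        # A NALU never ends in 0x00, so trailing zeros belong to the next start code.
        nalu = stream[start:end].rstrip(b"\x00")
        if nalu:
            nalus.append(nalu)
        pos = nxt
    return nalus

def annexb_to_avcc(stream: bytes) -> bytes:
    """Prefix every NALU with its length as a 4-byte big-endian integer."""
    return b"".join(struct.pack(">I", len(n)) + n for n in split_annexb(stream))

sample = b"\x00\x00\x00\x01\x67\x42" + b"\x00\x00\x01\x68\xce"
print(annexb_to_avcc(sample).hex())
```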

Interview questions 9

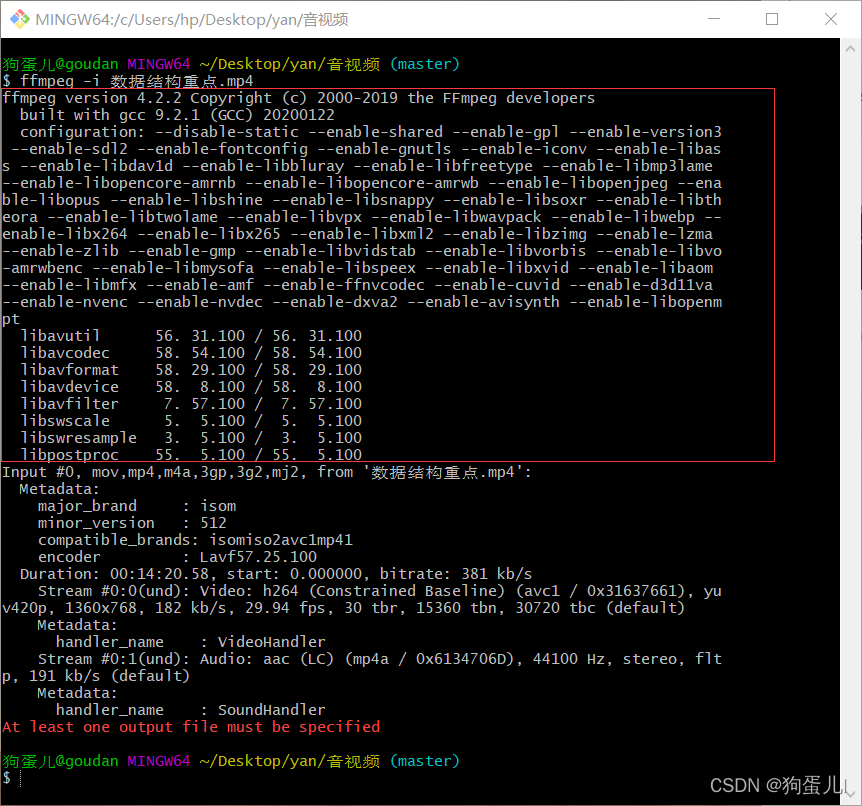

FFMPEG: how are pictures composed into a video?

Encoding process:

av_register_all (deprecated since FFmpeg 4.0, where registration is automatic)

Allocate memory for the AVFormatContext

Open the output file

Create an output stream, AVStream

Find the encoder

Open the encoder

Write the file header (skip this step if the format has none)

Loop: encode video pixel data -> compressed video data

Loop: encode audio sample data -> compressed audio data (an AVFrame becomes an AVPacket)

Write the encoded stream to the file (the AVPacket is passed to the libavformat write functions)

Close the encoder

Write the file trailer

Close the resources and the file

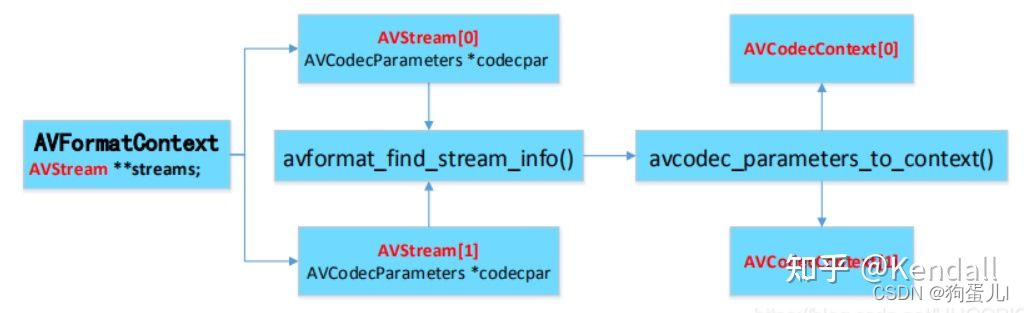

Decoding process:

av_register_all (deprecated since FFmpeg 4.0)

Create the AVFormatContext

Open the file

avformat_find_stream_info

Find the decoder

Create the AVCodecContext

Open the decoder

av_read_frame: read an AVPacket, which the decoder then turns into an AVFrame

Rendering notes: glUniform1i() sets the texture unit that a sampler uses. glTexSubImage2D() differs from glTexImage2D() in that it replaces the contents of an existing texture instead of allocating a new one.

Interview questions 10

What are the common audio and video formats?

- MPEG is short for Motion Picture Experts Group. This family includes MPEG-1, MPEG-2, MPEG-4 and other video formats.

- AVI is short for Audio Video Interleaved. Released by Microsoft, it is one of the oldest formats in the video field.

- MOV: anyone who has used a Mac will have come across QuickTime. QuickTime was originally Apple's image and video processing software for Mac computers.

- ASF (Advanced Streaming Format) is a file compression format that Microsoft introduced to compete with RealPlayer, so that video programs can be watched directly over the Internet.

- WMV is a technical standard, based on an independent encoding method, for distributing multimedia in real time over the Internet. Microsoft hoped it would replace technical standards such as QuickTime and file extensions such as WAV and AVI.

- nAVI: if your usual playback software suddenly cannot open an AVI file, consider whether it is an nAVI file. nAVI is short for New AVI, a video format developed by an underground group called Shadow Realm.

- 3GP is a video format for 3G streaming media, developed mainly to take advantage of the high transmission speed of 3G networks. It is also the most common video format on mobile phones.

- REAL VIDEO (RA, RAM) was positioned for streaming video from the start, and can be regarded as the originator of video streaming technology.

- MKV: video files with the MKV suffix appear frequently on the Internet. A single file can hold multiple audio tracks and subtitle tracks of different types, and the choice of video codec is very free: it can be the common DivX, XviD or 3IVX, or even streaming video such as RealVideo, QuickTime or WMV.

- FLV is short for FLASH VIDEO. The files are very small and load very quickly, which makes watching video online practical. It solved the problem that importing video into Flash produced SWF files too large to be used well on the network.

- F4V is smaller, clearer and better suited to network distribution. It has gradually replaced the traditional FLV and is supported by most mainstream players without complicated steps such as conversion.

Interview questions 11

What does "1080p" mean?

Interview questions 12

Please explain the nature of color and the principle of recording it digitally, and name a few color gamuts you know.

Interview questions 13

Please explain the difference between a vector graphic and a bitmap.

Interview questions 14

Choose two of "aperture", "shutter speed", "ISO sensitivity", "white balance" and "depth of field" and describe them.

Interview questions 15

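What do the YUV video components mean, and what digital formats are there?

Y is the luminance component; U and V are the chrominance components. Common sampling formats include 4:2:0, 4:1:1, 4:2:2 and 4:4:4, among others.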
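The practical effect of each sampling format is the size of a frame. The sketch below computes it for 8-bit samples; the table of average bytes per pixel follows from how much of the chroma is kept, and the function name is illustrative.

```python
# Average bytes per pixel for 8-bit samples, as (numerator, denominator):
# one luma sample per pixel plus the subsampled U and V samples.
BYTES_PER_PIXEL = {
    "4:4:4": (3, 1),   # full-resolution U and V
    "4:2:2": (2, 1),   # chroma halved horizontally
    "4:2:0": (3, 2),   # chroma halved horizontally and vertically
    "4:1:1": (3, 2),   # chroma quartered horizontally
}

def frame_bytes(width: int, height: int, sampling: str) -> int:
    """Size in bytes of one uncompressed 8-bit frame in the given sampling format."""
    numerator, denominator = BYTES_PER_PIXEL[sampling]
    return width * height * numerator // denominator

for fmt in BYTES_PER_PIXEL:
    print(fmt, frame_bytes(1920, 1080, fmt))
```

A 1080p frame in 4:2:0 therefore needs half the bytes of the same frame in 4:4:4.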

Interview questions 16

What are the picture types in the MPEG standard?

I-frame pictures, P-frame pictures and B-frame pictures.

Interview questions 17

List some common implementation schemes for audio codecs.

The first option is to use a dedicated audio chip to acquire and process the voice signal, with the audio codec algorithm integrated in hardware, for example MP3 codec chips and speech synthesis and analysis chips. The advantages are fast processing and a short design cycle; the disadvantages are that it is limited and inflexible, and the system is hard to upgrade.

The second option is to build the hardware platform from an A/D acquisition card and a computer, and to implement the audio codec algorithm in software on the computer. The advantages are low cost, flexible development and easy system upgrades; the disadvantages are slower processing and greater development difficulty.

The third option is to use a high-precision, high-speed A/D acquisition chip to capture the voice signal, implement the speech-processing algorithm on a programmable chip with strong data processing capability, and use an ARM core for control. The advantages are that the system is easy to upgrade, can support a variety of audio compression formats, even future ones, and has a low system cost; the disadvantage is that development is difficult, because designers must port the audio codec algorithm to the corresponding ARM chip.

Interview questions 18

Please describe the basic bitstream structure of MPEG video.

Sequence Header

Sequence Extension

Group of Pictures Header

Picture Header

Picture Coding Extension

Interview questions 19

What is the difference between SPS and PPS?

SPS is the sequence parameter set (its NALU header byte is typically 0x67); PPS is the picture parameter set (typically 0x68).

The SPS can be parsed to obtain information such as the image width, height and frame rate. In an h264 file, the first two NALUs are usually the SPS and the PPS, and a simple h264 file often contains just one SPS and one PPS.
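The values 0x67 and 0x68 come from the one-byte NALU header, whose low five bits give the NALU type. A small sketch of decoding that byte (the function name and the short name table are illustrative):

```python
NAL_TYPE_NAMES = {1: "non-IDR slice", 5: "IDR slice", 6: "SEI", 7: "SPS", 8: "PPS"}

def parse_nal_header(byte: int):
    """Split the H.264 NALU header byte into its three fields."""
    forbidden_zero_bit = byte >> 7        # must be 0 in a valid stream
    nal_ref_idc = (byte >> 5) & 0x03      # 2 bits: importance as a reference
    nal_unit_type = byte & 0x1F           # 5 bits: 7 = SPS, 8 = PPS, 5 = IDR slice
    return forbidden_zero_bit, nal_ref_idc, nal_unit_type

for header in (0x67, 0x68, 0x65):
    _, ref_idc, unit_type = parse_nal_header(header)
    print(hex(header), ref_idc, NAL_TYPE_NAMES.get(unit_type, "other"))
```

Because nal_ref_idc can vary, an SPS is identified by type 7 rather than by the exact byte 0x67.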

Interview questions 20

Please describe the basic bitstream structure of AMR.

An AMR file consists of a file header followed by data frames. The file header magic number occupies 6 bytes and is followed by the audio frames.

The format is as follows:

| File header (6 bytes) | Speech frame 1 | Speech frame 2 | … |

- File header: the header differs between the single-channel and multi-channel cases. In the single-channel case it contains only a magic number. In the multi-channel case it contains a magic number followed by a 32-bit channel description field, in which the first 28 bits are reserved and must be set to 0, and the last 4 bits give the number of channels used.

- Speech data: after the file header come the speech frame blocks in time order. Each frame block contains one 8-bit-aligned speech frame per channel, arranged in order starting from the first channel. Every speech frame begins with an 8-bit frame header, in which the padding bits P must be set to 0, and every frame is 8-bit aligned.
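A small sketch of reading these two pieces, assuming the single-channel narrowband AMR storage format, whose 6-byte magic number is `#!AMR\n` and whose frame header bits are laid out as P, FT (4 bits), Q, P, P. The function names are illustrative.

```python
AMR_NB_MAGIC = b"#!AMR\n"           # 6-byte single-channel AMR file header

def is_amr_nb(data: bytes) -> bool:
    """True if the data starts with the single-channel AMR magic number."""
    return data.startswith(AMR_NB_MAGIC)

def parse_amr_frame_header(byte: int):
    """Split the 8-bit frame header P|FT(4)|Q|P|P into (frame_type, quality_bit)."""
    if byte & 0x83:                 # the three padding bits must all be 0
        raise ValueError("padding bits must be zero")
    frame_type = (byte >> 3) & 0x0F
    quality_bit = (byte >> 2) & 0x01
    return frame_type, quality_bit

print(is_amr_nb(b"#!AMR\n\x3c"))
print(parse_amr_frame_header(0x3C))
```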

Interview questions 21

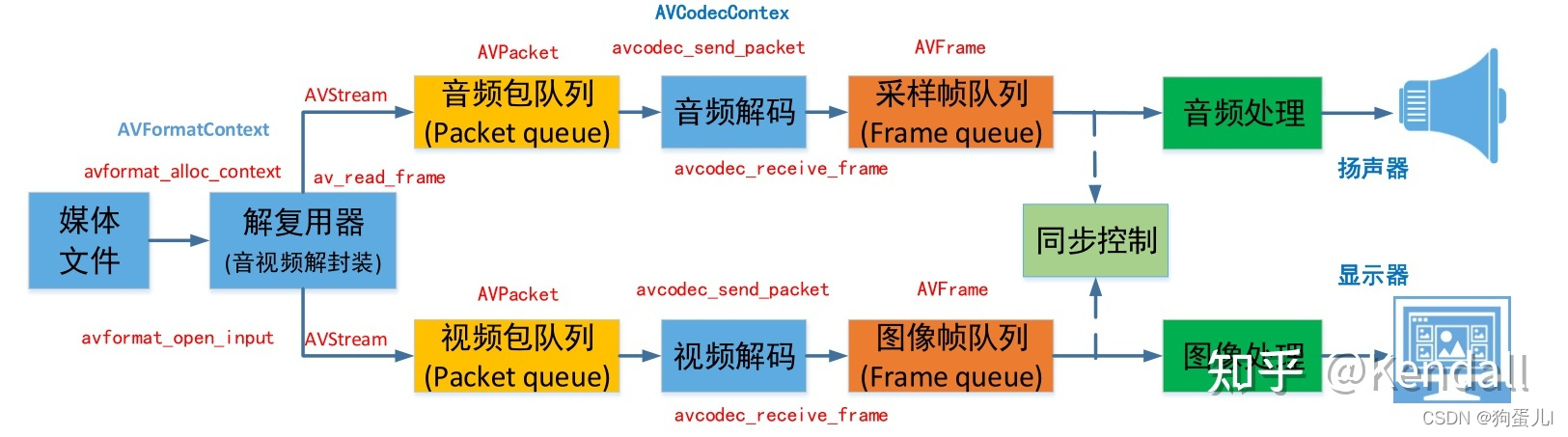

Describe the data structures of ffmpeg.

The data structures of ffmpeg can be divided into the following categories:

(1) Protocol handling (http, rtsp, rtmp, mms): AVIOContext, URLProtocol and URLContext mainly store the type and state of the protocol used by the audio and video. URLProtocol describes the protocol used by the input; each protocol corresponds to one URLProtocol structure. (Note: in FFMPEG, a local file is also treated as a protocol, "file".)

(2) Demuxing (flv, avi, rmvb, mp4): AVFormatContext mainly stores the information contained in the container format. ffmpeg supports many audio and video input and output file formats (for example FLV, MKV, MP4, AVI), and the AVInputFormat and AVOutputFormat structures hold the information for these formats along with some general settings.

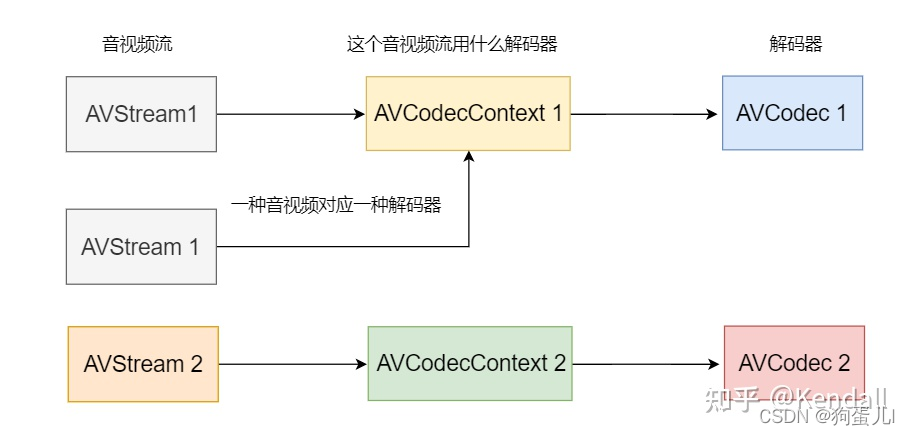

(3) Decoding (h264, mpeg2, aac, mp3): AVStream is the structure that stores the information for each video or audio stream. AVCodecContext is the codec context structure; it stores the parameters that the video or audio stream uses for decoding. AVCodec: each video or audio codec (for example the H.264 decoder) corresponds to one such structure.

The relationship between the three is shown in the figure below:

(4) Data storage: for video, each structure usually stores one frame; for audio, it may hold several frames.

- Data before decoding: AVPacket

- Data after decoding: AVFrame

Interview questions 22

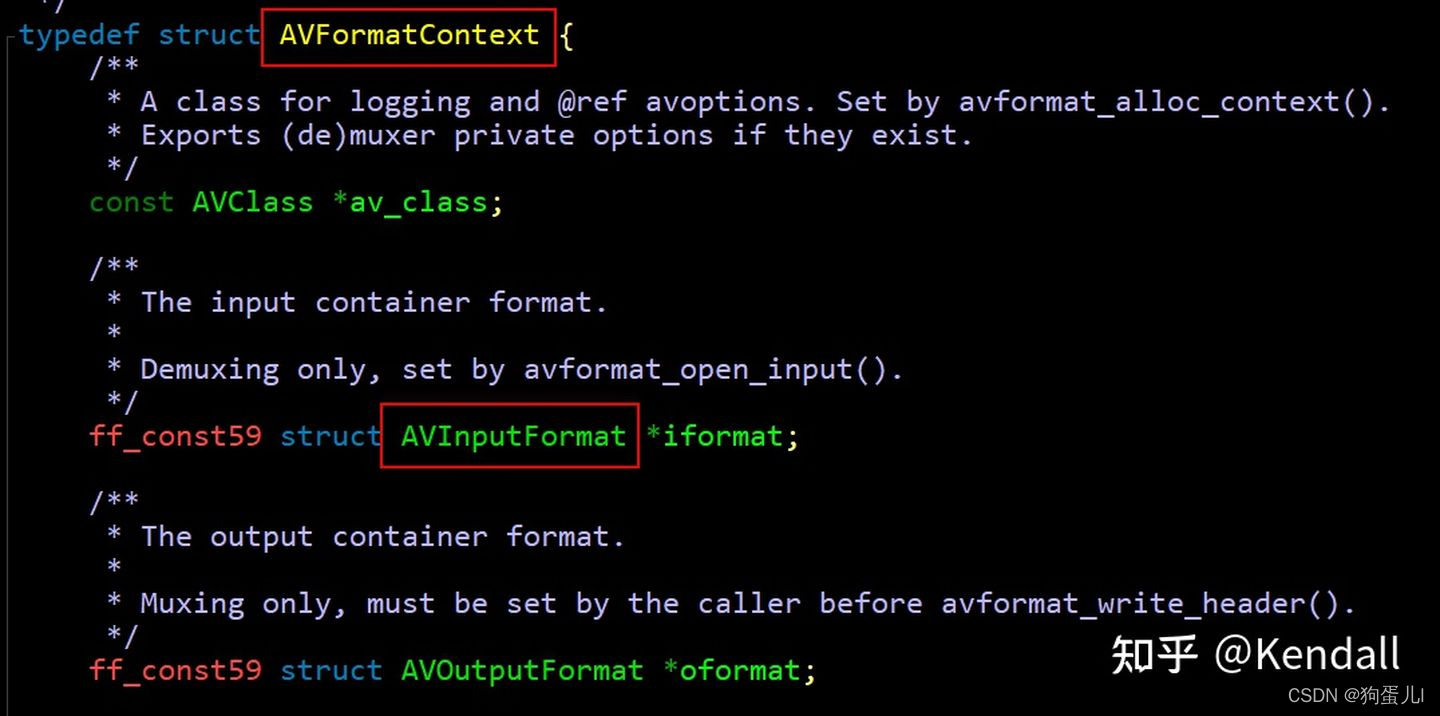

Describe the relationship between AVFormatContext and AVInputFormat.

AVInputFormat is encapsulated in AVFormatContext.

AVFormatContext is exposed as part of the API and is called from outside.

AVInputFormat is mainly called internally by FFmpeg.

AVFormatContext holds the information about the container format of the media file; it is the structure responsible for storing data. AVInputFormat represents the individual container formats and supplies the methods; together they form an object-oriented style of encapsulation.

The demuxer is loaded through the function int avformat_open_input(AVFormatContext **ps, const char *filename, AVInputFormat *fmt, AVDictionary **options).

Interview questions 23

Describe the relationship between AVFormatContext, AVStream and AVCodecContext.

AVStream and AVPacket each carry an index field (index and stream_index respectively) that distinguishes the different streams (video, audio, subtitles and so on). AVFormatContext contains an array of the input AVStreams that records each stream, and nb_streams records the number of input streams. AVCodecContext records which decoder is needed to decode an AVStream.

Interview questions 24

Describe the processing steps of video splicing. (Give details, for example how to handle different resolutions and timestamps.)

Demux, decode, decide on the output resolution, encode, process the timestamps, mux.

Interview questions 25

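How do you convert NV21 to I420?

First, understand why NV21 needs to be converted to I420: x264 is normally given I420 data to encode. The conversion is really one between YUV420sp and YUV420p. Both layouts start with the full Y plane; NV21 (YUV420sp) then stores the chroma as interleaved V, U pairs, whereas I420 (YUV420p) stores the whole U plane followed by the whole V plane. Converting therefore means copying the Y plane and de-interleaving the VU data into separate U and V planes. For YUV420p and YUV420sp, see "Basic knowledge of audio and video: YUV images".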
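A minimal sketch of the conversion on a raw byte buffer, assuming even width and height and tightly packed planes with no row padding (the function name is illustrative):

```python
def nv21_to_i420(nv21: bytes, width: int, height: int) -> bytes:
    """Convert NV21 (Y plane + interleaved VU) to I420 (Y plane + U plane + V plane)."""
    y_size = width * height
    chroma_size = y_size // 2                 # V and U together, 4:2:0 subsampling
    if len(nv21) < y_size + chroma_size:
        raise ValueError("buffer too small for the given dimensions")
    y_plane = nv21[:y_size]
    vu = nv21[y_size:y_size + chroma_size]
    v_plane = vu[0::2]                        # NV21 stores V first in each pair
    u_plane = vu[1::2]
    return y_plane + u_plane + v_plane

# 4x2 frame: 8 luma bytes, then V0 U0 V1 U1.
frame = bytes([1, 2, 3, 4, 5, 6, 7, 8, 50, 60, 51, 61])
print(list(nv21_to_i420(frame, 4, 2)))
```

Production code would typically use an optimized library routine instead; the slicing here only shows the memory layout.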

Interview questions 26

What do DTS and PTS have in common?

PTS is the Presentation Time Stamp: when this frame should be shown on the display.

DTS is the Decode Time Stamp: when this frame should be handed to the decoder. When there are no B-frames, the DTS order and the PTS order are the same.
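The effect of B-frames on the two orderings can be shown with a toy example. The timestamps below are made-up values in frame units, chosen so that every DTS is less than or equal to its PTS; `Packet` is a hypothetical stand-in for a real packet structure.

```python
from collections import namedtuple

Packet = namedtuple("Packet", "frame_type dts pts")

# Packets in decode (DTS) order for the display sequence I B B P.
# The P frame must be decoded before the B frames that reference it.
decode_order = [
    Packet("I", dts=-1, pts=0),
    Packet("P", dts=0, pts=3),
    Packet("B", dts=1, pts=1),
    Packet("B", dts=2, pts=2),
]

def display_order(packets):
    """Frames are presented in increasing PTS order."""
    return sorted(packets, key=lambda p: p.pts)

print([p.frame_type for p in decode_order])                 # I P B B
print([p.frame_type for p in display_order(decode_order)])  # I B B P
```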

Interview questions 27

What indicators affect video clarity?

Frame rate, bit rate, resolution and quantization parameter (compression ratio).

Interview questions 28

What difficulties are encountered in codec processing?

Encoding and decoding with ffmpeg:

Use ffmpeg's libavcodec and libavformat libraries, plus any codecs that may be needed.

Encoding process:

- The captured video and audio data are compressed with ffmpeg.

- The continuous video and audio are split into groups and packetized. To tell the packets apart, each packet gets a header containing the presentation timestamp (PTS) and the decoding timestamp (DTS), and the packets are then sent to the player over the network.

Decoding process:

- The media stream is received over TCP, then demuxed and decoded by FFmpeg.

- The result is raw video in YUV format and raw audio in PCM format, which the player then plays.

Interview questions 29

How do you make video open within a second? What is "second-open" video?

What is second-open video? It means that the time from the user tapping play to seeing the picture is very short, within 1 second.

Why is second-open needed? The mainstream live streaming protocols today, RTMP, HTTP-FLV and HLS, are all based on long-lived TCP connections. If the player's network is in good condition, playback is very smooth. If the network is unstable and jitter occurs frequently, then without special handling on the playback side there may be frequent stalls, and in severe cases a black screen. Mobile live streaming is convenient because users can start and watch a stream anytime, anywhere, but the user's network cannot be guaranteed to be good all the time, so keeping playback smooth on an unstable network is very important.

Solutions

3.1 Display as soon as a key frame arrives

Rewrite the player logic so that the player displays the first key frame as soon as it receives it. The first frame of a GOP is usually a key frame, and because little data has to be loaded, this achieves "first frame within a second". If the live server supports GOP caching, the player can get data immediately after connecting to the server, which saves the cross-region and cross-carrier back-to-origin transmission time.

The GOP reflects the key frame period, that is, the distance between two key frames, or the maximum number of frames in one group of pictures. Suppose a video has a constant frame rate of 24 fps (24 images per second) and a key frame period of 2 s; then one GOP is 48 images. Generally speaking, every second of video needs at least one key frame.

Increasing the number of key frames can improve picture quality (the GOP length is usually a multiple of the FPS), but it also increases bandwidth use and network load. The client player has to download a whole GOP, and a GOP has a certain data volume; if the player's network is poor, it may not be able to download the GOP within a second, which hurts the viewing experience.

If the player's logic cannot be changed to display the first frame immediately, the live server can also help, for example by caching only two key frames instead of a whole GOP (fewer images), which greatly reduces the amount of data the player has to load.

3.2 App business logic optimization

For example, do DNS resolution in advance (saving tens of milliseconds) and do speed testing and route selection in advance (choosing the best route). After this preparation, download performance improves considerably when the user taps the play button.

On the one hand, performance can be optimized around the transport layer; on the other hand, business logic can be optimized around the user's playback behavior. The two complement each other and together form the optimization space for second-open.

Second-open video schemes

4.1 Optimize the server strategy

The frame the server is sending at the moment the player connects and requests data is not necessarily a key frame, so the player has to wait for the next key frame. If the key frame period is 2 s, the wait may be anywhere from 0 to 2 s, and this wait affects the first-screen loading time. If the server keeps a cache, then when the player connects, the server can find the most recent key frame and send from there, which removes the wait and greatly reduces the first-screen loading time.

4.2 Optimize the player strategy

The first frame the player needs is a key frame, because a key frame can be decoded using intra-frame prediction alone. The player can therefore start decoding and displaying as soon as it receives the first key frame, instead of waiting until a certain number of video frames have been buffered, which also reduces the first-screen time.
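The server-side cache can be sketched as a buffer that restarts at every key frame, so a newly connected player always receives data beginning with a key frame. `GopCache` and `gop_length` are hypothetical names for this sketch, and frames are reduced to a type letter plus a payload.

```python
def gop_length(fps: int, keyframe_period_s: int) -> int:
    """Frames per GOP at a constant frame rate, e.g. 24 fps x 2 s = 48."""
    return fps * keyframe_period_s

class GopCache:
    """Keeps the frames of the most recent GOP, starting at its key frame."""

    def __init__(self):
        self._frames = []

    def push(self, frame_type: str, payload: bytes) -> None:
        if frame_type == "I":
            self._frames = []            # a new key frame starts a new GOP
        if self._frames or frame_type == "I":
            # Frames seen before the first key frame are not decodable, so skip them.
            self._frames.append((frame_type, payload))

    def snapshot(self):
        """Frames a newly connected player should receive first."""
        return list(self._frames)
```

A server would call `push` for every incoming frame and send `snapshot()` to each new connection before switching to the live feed.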

Optimizing first-screen time on the playback side

First-screen time is the time from entering the live room to seeing the live picture for the first time. If it is too long, users easily lose patience with the stream, which lowers user retention. Game live streaming, however, demands high picture quality and continuity, so the amount of data encoded on the publishing side is much larger than for other kinds of live streaming, and reducing the first-screen time is a hard problem.

During the first screen, the player performs three main operations: loading the live room UI (including the player itself), downloading the live stream data (not yet decoded), and decoding and playing the data. Decoding and playback break down into the following steps:

Detect the transport protocol type (RTMP, RTSP, HTTP and so on) and connect to the server to receive data;

Demultiplex the stream to obtain the encoded audio and video data (H.264/H.265, AAC and so on);

Decode the audio and video data, send the audio to the output device in sync, and render the video to the screen. At this point the video starts playing and the first-screen time ends.

Summary: first, the UI can be loaded as a singleton, which improves first-screen speed to some extent; second, the decoding type can be preset to save the time spent detecting the data type; third, set a reasonable download buffer size to minimize the amount of data downloaded, and as soon as I-frame data is detected, start decoding that single frame for display, which shortens the first-screen time.

Interview questions 30

How do you reduce delay? How do you ensure smoothness? How do you deal with stalls and network jitter?

- Causes

Ensuring smooth live streaming means making sure playback does not stall. A stall is a freeze of sound and picture during playback, and it seriously harms the user experience. Stalls have several causes:

Network jitter on the publishing side prevents data from reaching the server, so the playback side stalls. Network jitter on the playback side leaves data piling up on the server because it cannot be pulled, which also causes stalls. The network between server and player is complex, especially on 3G and on WiFi with poor bandwidth, where jitter and delay are common and playback is not smooth; a side effect of unsmooth playback is increased delay. Keeping playback smooth and real-time under network jitter is the hard part of guaranteeing live streaming performance.

- Smoothness optimization

The mainstream live streaming protocols today, RTMP, HTTP-FLV and HLS, are all based on long-lived TCP connections. If the player's network is in good condition, playback is very smooth. If the network is unstable and jitter occurs frequently, then without special handling in the player there may be frequent stalls, and in severe cases a black screen. Because the user's network cannot be guaranteed to be good all the time, keeping playback smooth on an unstable network is very important.

To solve this, the player first needs to separate the stream-pulling thread from the decoding thread and set up a buffer queue for audio and video data. The pulling thread puts the audio and video data received from the server into the queue, and the decoding thread takes data from the queue to decode and play; the queue length is adjustable. When the network jitters and the player receives no data, or receives it slowly, the data already in the queue bridges the gap so that users do not notice the jitter.

Of course, this strategy only addresses network jitter. If the playback-side network does not recover for a long time, or the server's edge node goes down, the application layer needs to reconnect or reschedule.
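How a buffer queue absorbs jitter can be seen in a small simulation. It is deliberately single-threaded so that the result is deterministic; in a real player the producer and consumer would be the pulling and decoding threads. The arrival pattern and the function name are invented for the example.

```python
def count_stalls(arrivals, prebuffer):
    """Simulate playback of one frame per tick and count the ticks that stall.

    arrivals[i] is the number of frames received during tick i; playback
    starts once `prebuffer` frames (at least one) are queued.
    """
    queued = 0
    started = False
    stalls = 0
    for received in arrivals:
        queued += received
        if not started:
            if queued < max(prebuffer, 1):
                continue                 # still filling the initial buffer
            started = True
        if queued:
            queued -= 1                  # the decoder consumes one frame
        else:
            stalls += 1                  # the queue ran dry: visible stutter
    return stalls

jittery = [2, 2, 0, 0, 0, 2, 2, 1]       # three ticks with no data in the middle
print(count_stalls(jittery, prebuffer=1))
print(count_stalls(jittery, prebuffer=3))
```

The larger prebuffer removes the stall but delays the start of playback by a tick, which is the trade-off between smoothness and latency.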

Interview questions 31

What is the basic principle of predictive coding?

Predictive coding is an important branch of data compression theory. Exploiting the correlation between discrete signal samples, it uses one or more previous samples to predict the next one, and then encodes the difference between the actual value and the predicted value (the prediction error). The more accurate the prediction, the smaller the error signal, and the fewer bits are needed to encode it, which achieves data compression.

- Principle: predict the new sample from past samples, subtract the prediction from the actual value of the new sample to get the error, encode the error, and transmit that code. In theory, if the data source could be described exactly by a mathematical model, so that its output always matched the model's output, the data could be predicted exactly; in practice the predictor cannot find such a perfect model. Prediction itself introduces no distortion. The error can be encoded with either a lossless or a lossy compression method.
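The simplest instance of this principle is differential coding (DPCM) with the previous sample as the prediction. The sketch below assumes that predictor and leaves the residuals unquantized, so it is lossless; the function names are illustrative.

```python
def dpcm_encode(samples):
    """Replace each sample by its difference from the prediction (the previous sample)."""
    residuals = []
    previous = 0                         # the prediction for the first sample is 0
    for sample in samples:
        residuals.append(sample - previous)
        previous = sample
    return residuals

def dpcm_decode(residuals):
    """Rebuild the samples by adding each residual to the running prediction."""
    samples = []
    previous = 0
    for residual in residuals:
        previous += residual
        samples.append(previous)
    return samples

signal = [100, 102, 101, 105, 107]
encoded = dpcm_encode(signal)
print(encoded)
print(dpcm_decode(encoded))
```

After the first value, the residuals are much smaller than the samples, so an entropy coder can represent them with fewer bits.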

Interview questions 32

You need to save a video from the network (for example as an mp4). Please implement this and explain your method. (Note: the first video can only be accessed through a proxy that bypasses the firewall.)

Interview questions 33

You need to save an audio clip from the network (for example as an mp3). Please implement this and explain your method.

Interview questions 34

Why does YUV data exist? (What are the advantages of YUV over RGB?)

RGB refers to the three optical primary colors red, green and blue. Varying the three values (0 to 255) produces other colors: all 0 is black, and all 255 is white. RGB is a device-dependent color space: different devices detect and reproduce a given RGB value differently, because the color materials (phosphors or dyes) and their individual responses to red, green and blue vary from manufacturer to manufacturer, and even the same device changes over time.

YUV is a color encoding method commonly used in video processing components. The three letters stand for the luminance signal Y and two color-difference signals, B-Y (U) and R-Y (V), which describe the hue and saturation and, together with Y, specify the color of a pixel. Y'UV was invented during the transition from black-and-white to color television. Black-and-white video carries only Y, that is, the gray-scale value. Like the familiar RGB, YUV is a color encoding method, used mainly in television systems and analog video. It separates the luminance information (Y) from the color information (UV); without the UV information a complete image can still be displayed, just in black and white. This design solved the compatibility problem between color and black-and-white television. In addition, unlike RGB, YUV does not require three full-bandwidth signals to be transmitted at the same time, because the color components can be sent at reduced resolution, so YUV transmission takes less bandwidth.

YUV and RGB can be converted into each other. Many image algorithms operate on YUV, while display panels generally accept RGB data.
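One direction of the conversion can be sketched with the classic BT.601 analog weights. The coefficients below are those commonly quoted for Y'UV; digital video formats use scaled and offset variants (YCbCr), so treat the exact numbers as an assumption of this example.

```python
def rgb_to_yuv(r, g, b):
    """Analog-style BT.601 conversion: U and V are scaled color differences."""
    y = 0.299 * r + 0.587 * g + 0.114 * b    # luminance, weighted toward green
    u = 0.492 * (b - y)                      # blue color difference
    v = 0.877 * (r - y)                      # red color difference
    return y, u, v

print(rgb_to_yuv(128, 128, 128))   # gray: U and V are (nearly) zero
print(rgb_to_yuv(255, 0, 0))       # pure red: V is strongly positive
```

A gray pixel produces no chroma at all, which is why a black-and-white receiver can simply ignore U and V.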

Interview questions 35

What is the difference between H264 and H265?

At the same picture quality, H.265 takes roughly 50% less storage space than H.264 (a theoretical figure). Conversely, with the same storage space, that is, at the same bit rate, H.265 should give better picture quality than H.264; the theoretical improvement is 30% to 40%.

Compared with H.264, H.265 provides more tools for reducing the bit rate. Its coding units range from a minimum of 8x8 to a maximum of 64x64. Regions with little information (where the color changes little) are divided into larger blocks and need fewer code words after encoding, while regions with a lot of detail are divided into smaller and more numerous blocks and need more code words. This amounts to encoding the image with emphasis where it matters, which lowers the overall bit rate and raises coding efficiency accordingly.

The H.265 standard builds on the existing H.264 video coding standard: it retains some of the original techniques and improves others to optimize the trade-off among bit rate, coding quality, delay and algorithm complexity. The main goals of H.265 include improving compression efficiency, improving robustness and error resilience, reducing real-time delay, reducing channel acquisition time and random access delay, and reducing complexity.

Interview questions 36

For video or audio transmission, would you choose TCP or UDP? Why?

Choose UDP, because it has good real-time behavior. TCP guarantees that a lost packet is retransmitted so that the other side is sure to receive it. In video playback, however, if a second of signal is missing and causes a small flaw in the picture, the most appropriate response is to fill the gap with any signal: the picture has a small flaw, but viewing is not affected. With TCP, the missing data would be retransmitted until the receiver acknowledges it, which is not what audio and video playback wants. UDP suits this situation well, because it does not retransmit lost packets.

Interview questions 37

What are the "soft decoding" and "hard decoding" that people usually talk about?

Hard decoding is hardware decoding: the GPU partially takes over decoding from the CPU. Soft decoding is software decoding: software running on the CPU does the decoding. The specific differences are as follows:

Hard decoding: part of the video data that would otherwise all be processed by the CPU is handed to the GPU. The GPU's parallel computing ability is much higher than the CPU's, so this greatly reduces the CPU load, and with lower CPU usage other programs can run at the same time. Of course, for a reasonably good processor, such as an i5 2320 or any AMD quad-core processor, the choice between hard and soft decoding is just a matter of personal preference.

Soft decoding: the CPU decodes the video in software. Historically, hard decoding meant completing the video decoding task independently on a dedicated add-in card without the CPU's help; the old VCD/DVD decoder cards and video compression cards belong to this category. Today no extra card is needed for HD decoding, because the hard decoding module is integrated into the GPU, so current mainstream graphics cards (and integrated graphics) support hard decoding.

Interview questions 38

What is live streaming? What is video on demand (VOD)?

Live streaming: a three-way interaction (the anchor, the server, the audience) that happens in real time. There is some delay depending on the protocol chosen, but we still consider it live. The anchor sends local audio and video to the server (push streaming), and the audience fetches it from the server (pull streaming) and decodes it in real time to watch and listen to the live content. In short, live streaming is VOD without fast forward.

VOD: first, be clear that VOD has no push-streaming step. It would be wrong to say the stream has already been pushed to the server; rather, the audio and video files have already been uploaded to the server, and viewers only need to watch them online. Because the files are already on the server, the audience can fast forward, rewind, and drag the progress bar.

Interview questions 39

Briefly describe the push-stream and pull-stream workflows.

Reference answer

Push streaming: in a live broadcast, one party sends a request to the server and pushes the data it is broadcasting to the server. The content travels to the server as a "stream", so sending (uploading) audio and video data to the server is called "push streaming". The pusher's raw audio and video tend to be large, so they are first compressed with AAC audio encoding and H.264 video encoding, then muxed into MP4 or FLV format, then packaged according to the live streaming protocol, and finally sent to the server.

Pull streaming: the reverse of push streaming. It is the process by which users obtain the audio and video from the server. The steps also run in reverse: the client unpacks the protocol data, demuxes the MP4 or FLV container, decodes the AAC audio and H.264 video, and finally presents the result to the audience.
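The push and pull steps above can be sketched with the `ffmpeg` command line. The helper names, the file names, and the URL `rtmp://example.com/live/stream` are hypothetical, and the sketch assumes an `ffmpeg` build that includes the `libx264` encoder. The functions only build argument lists; running them requires a reachable RTMP server.

```python
from typing import List


def build_push_command(input_path: str, rtmp_url: str) -> List[str]:
    """Encode with H.264 + AAC, mux as FLV, and push to an RTMP server."""
    return [
        "ffmpeg",
        "-re",              # read input at its native frame rate, like a live source
        "-i", input_path,
        "-c:v", "libx264",  # H.264 video encoder
        "-c:a", "aac",      # AAC audio encoder
        "-f", "flv",        # FLV container, the format carried over RTMP
        rtmp_url,
    ]


def build_pull_command(rtmp_url: str, output_path: str) -> List[str]:
    """Pull a stream from the server and save it without re-encoding."""
    return ["ffmpeg", "-i", rtmp_url, "-c", "copy", output_path]


push_cmd = build_push_command("demo.mp4", "rtmp://example.com/live/stream")
pull_cmd = build_pull_command("rtmp://example.com/live/stream", "saved.flv")
print(" ".join(push_cmd))
print(" ".join(pull_cmd))
```

To actually run one of these, pass the list to `subprocess.run(push_cmd, check=True)`.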

Interview questions 40

In live streaming, how do you set the I-frame interval and choose the frame rate and resolution?

Interview questions 41

Live streaming push I Frame and tweet I What is the difference between frames ?

Interview questions 42

What are the common live streaming protocols? What are the differences between them?

Interview questions 43

What are the common data transfer protocols in VOD?

Interview questions 44

What are the default port numbers of RTMP and HLS?

Interview questions 45

Briefly describe the RTMP protocol. How is an RTMP packet encapsulated?

Interview questions 46

What does an m3u8 consist of? In live streaming, how are the m3u8 and ts files updated in real time?

Interview questions 47

What is audio and video synchronization? What is the standard for audio and video synchronization?

Interview questions 48

How does a player implement pause, fast forward and rewind, seek, frame-by-frame stepping, and variable-speed playback?

Interview questions 49

Describe the optimization work you usually do for playback.

Interview questions 50

Which streaming media servers have you studied in depth? Have you done any secondary development on them?

Interview questions 51

What is a GOP?

A GOP (Group of Pictures) is a continuous group of pictures made up of one I-frame and several B/P-frames. It is the basic unit that video encoders and decoders access. In other words, a GOP is the group of frames that one key frame (I-frame) belongs to, and each GOP contains only one I-frame.

The length and structure of the GOP also determine the size of the bitstream. The larger the GOP, the more P-frames and B-frames sit between I-frames, so at a given bitrate the decoded video quality can be higher. The cost is that I-frames arrive less often, which affects coding efficiency and makes a player wait longer for the next key frame.
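As a small illustration of the definition above, the following sketch splits a sequence of frame types into GOPs and converts a GOP length into a key frame interval. The function names and the sample frame string are assumptions made for the example. Each GOP is taken to start at an I-frame, matching the "one I-frame per GOP" description.

```python
from typing import List


def split_into_gops(frame_types: str) -> List[str]:
    """Split a string such as "IBBPIBBP" into GOPs, each starting at an I-frame.

    If the input does not begin with "I", the leading frames form a partial group.
    """
    gops: List[str] = []
    current = ""
    for frame_type in frame_types:
        if frame_type == "I" and current:
            gops.append(current)  # a new I-frame closes the previous GOP
            current = ""
        current += frame_type
    if current:
        gops.append(current)
    return gops


def keyframe_interval_seconds(gop_length: int, fps: float) -> float:
    """Time between consecutive I-frames for a fixed GOP length."""
    return gop_length / fps


gops = split_into_gops("IBBPBBPIBBP")
print(gops)
print(keyframe_interval_seconds(50, 25))
```

With a GOP length of 50 frames at 25 fps, an I-frame arrives every 2 seconds, so a viewer who joins mid-GOP may wait up to that long for a decodable picture.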

Interview questions 52

What are the test points for audio testing? How do you test audio delay?

Test points: functionality, performance, compatibility, power consumption, security, stress testing, objective sound quality (POLQA score), subjective sound quality, anchor-to-audience delay, audience-to-audience delay, and 3A effects (echo cancellation, noise suppression, automatic gain control).

Audio delay: connect the 2 devices under test to a computer and use a PESQ script to calculate the audio delay.
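The PESQ script mentioned above is not shown in the source. As a separate, generic illustration of how a delay can be estimated from two recordings, the sketch below uses plain cross-correlation: it finds the lag at which the recorded signal best matches the reference. The function names and the tiny sample signals are assumptions made for the example, not the author's tooling.

```python
from typing import Sequence


def estimate_delay_samples(reference: Sequence[float],
                           recorded: Sequence[float],
                           max_lag: int) -> int:
    """Return the lag (in samples) that maximizes the cross-correlation."""
    best_lag = 0
    best_score = float("-inf")
    for lag in range(max_lag + 1):
        score = 0.0
        for i, ref_sample in enumerate(reference):
            j = i + lag
            if j >= len(recorded):
                break
            score += ref_sample * recorded[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def samples_to_ms(lag: int, sample_rate: int) -> float:
    """Convert a lag in samples to milliseconds."""
    return lag * 1000 / sample_rate


reference = [0.0, 1.0, 0.0, -1.0, 0.5, 0.0]
recorded = [0.0] * 3 + reference + [0.0] * 2  # same signal, 3 samples late
lag = estimate_delay_samples(reference, recorded, max_lag=5)
print(lag, samples_to_ms(lag, sample_rate=1000))
```

For real recordings, a library routine such as an FFT-based correlation would be far faster than this double loop.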

Interview questions 53

What is the principle behind the beauty filter, and what are the specific implementation steps?

Interview questions 54

How do you capture packets from a live streaming app, and how do you filter for the uplink, downlink, and total bitrate?

- Uplink: ip.src == 192.168.x.x

- Downlink: ip.dst == 192.168.x.x

- Total bitrate: ip.src == 192.168.x.x or ip.dst == 192.168.x.x
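Once the packets are filtered by source or destination address as above, the bitrate is the filtered bytes times 8, divided by the capture duration. A minimal sketch, assuming the capture has been exported to simple records: the `Packet` type, the function name, and the IP addresses are hypothetical.

```python
from typing import Iterable, NamedTuple


class Packet(NamedTuple):
    timestamp: float  # capture time in seconds
    src: str          # source IP
    dst: str          # destination IP
    length: int       # packet size in bytes


def bitrate_kbps(packets: Iterable[Packet], device_ip: str, direction: str) -> float:
    """Average bitrate in kbit/s for "up", "down", or "total" traffic of device_ip."""
    if direction == "up":
        selected = [p for p in packets if p.src == device_ip]
    elif direction == "down":
        selected = [p for p in packets if p.dst == device_ip]
    else:  # total: traffic in either direction
        selected = [p for p in packets if device_ip in (p.src, p.dst)]
    if len(selected) < 2:
        return 0.0
    duration = max(p.timestamp for p in selected) - min(p.timestamp for p in selected)
    if duration <= 0:
        return 0.0
    total_bits = sum(p.length for p in selected) * 8
    return total_bits / duration / 1000


phone = "192.168.1.10"   # hypothetical device under test
server = "10.0.0.1"      # hypothetical streaming server
capture = [
    Packet(0.0, phone, server, 1000),
    Packet(1.0, phone, server, 1000),
    Packet(2.0, phone, server, 1000),
    Packet(0.0, server, phone, 500),
    Packet(2.0, server, phone, 500),
]
print(bitrate_kbps(capture, phone, "up"))
print(bitrate_kbps(capture, phone, "down"))
print(bitrate_kbps(capture, phone, "total"))
```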

Interview questions 55

How to test a beauty pendant ?

Interview questions 56

Why use FLV?

Because the transport protocol requires it: RTMP only carries streams in FLV format.

Interview questions 57

How to test a beauty pendant ?

Interview questions 58

What are the common video formats?

MP4, RMVB, FLV, AVI, MOV, MKV, etc.

Interview questions 59

What is Homebrew? What have you installed with it? What are the common commands?

Homebrew is a package manager for macOS. Live streaming needs rtmp and nginx, which are complicated to install by hand. With Homebrew, you enter a single command in the terminal to install the corresponding package, and Homebrew takes care of the complex steps. I have installed many things with it, such as nginx for setting up a streaming media server. Common commands: brew install, brew uninstall, brew search, brew list, brew update, brew help, etc.

Interview questions 60

What are the default port numbers of RTMP and HLS?

- RTMP port: 1935

- HLS port: HLS runs over HTTP, so the default is 80 (443 for HTTPS); 8080 is a common choice on self-hosted servers

边栏推荐

- 第6章 Rebalance详解

- Submit several problem records of spark application (sparklauncher with cluster deploy mode)

- Double specific tyrosine phosphorylation regulated kinase 1A Industry Research Report - market status analysis and development prospect prediction

- Codeforces Round #802(Div. 2)A~D

- Research Report on market supply and demand and strategy of China's four flat leadless (QFN) packaging industry

- Research Report on market supply and demand and strategy of China's four seasons tent industry

- China tetrabutyl urea (TBU) market trend report, technical dynamic innovation and market forecast

- Research Report on market supply and demand and strategy of double drum magnetic separator industry in China

- 计算时间差

- Calculate the time difference

猜你喜欢

ffmpeg命令行使用

Chapter 5 namenode and secondarynamenode

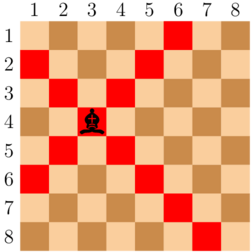

Codeforces Round #799 (Div. 4)A~H

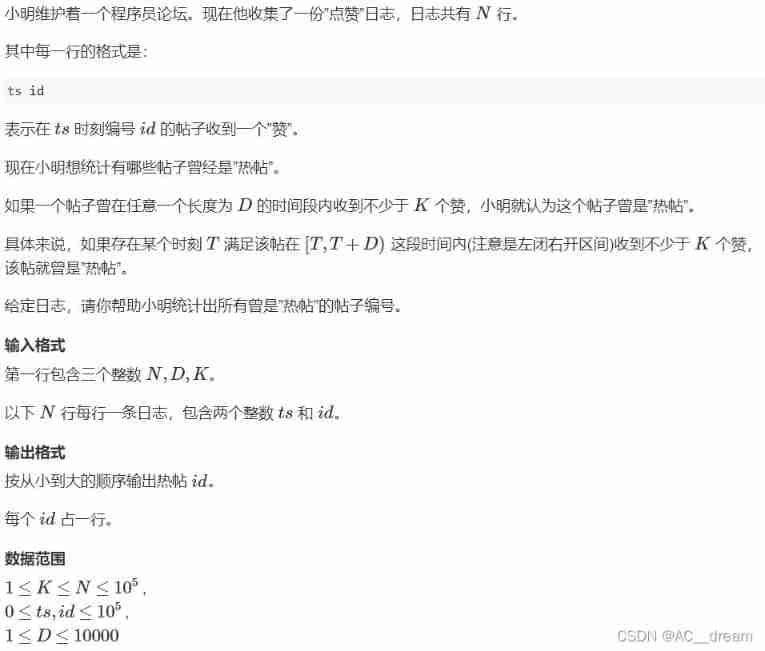

Log statistics (double pointer)

Business system compatible database oracle/postgresql (opengauss) /mysql Trivia

antd upload beforeUpload中禁止触发onchange

Chapter 7__ consumer_ offsets topic

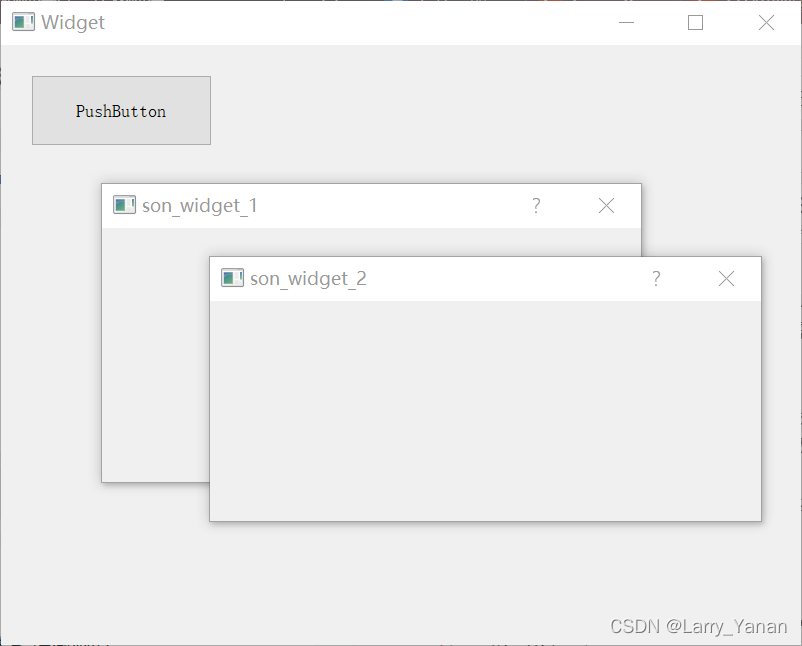

QT realizes window topping, topping state switching, and multi window topping priority relationship

Codeforces Round #797 (Div. 3)无F

软通乐学-js求字符串中字符串当中那个字符出现的次数多 -冯浩的博客

随机推荐

第6章 Rebalance详解

js时间函数大全 详细的讲解 -----阿浩博客

第三章 MapReduce框架原理

Date plus 1 day

原生js实现全选和反选的功能 --冯浩的博客

Problem - 1646C. Factorials and Powers of Two - Codeforces

第 300 场周赛 - 力扣(LeetCode)

MariaDB的安装与配置

业务系统兼容数据库Oracle/PostgreSQL(openGauss)/MySQL的琐事

Remove the border when input is focused

(POJ - 3186) treatments for the cows (interval DP)

简单尝试DeepFaceLab(DeepFake)的新AMP模型

SQL快速入门

CMake速成

Bisphenol based CE Resin Industry Research Report - market status analysis and development prospect forecast

sublime text 代码格式化操作

第一章 MapReduce概述

SF smart logistics Campus Technology Challenge (no T4)

Educational Codeforces Round 130 (Rated for Div. 2)A~C

JS time function Daquan detailed explanation ----- AHAO blog