
Deep Learning Theory - Initialization, Parameter Adjustment

2022-08-04 06:18:00 【Learning adventure】

Initialization

Training a deep learning model essentially means repeatedly updating the parameters w, so every parameter needs an initial value to start from.

Why initialization?

A neural network is a highly complex nonlinear model, and optimizing it generally comes with no guarantee of reaching a global optimum, so initialization plays a very important role.

□ The selection of the initial point can sometimes determine whether the algorithm converges;

□ When it converges, the initial point can determine how fast the learning converges and whether it converges to a point with high or low cost;

□ An initialization that is too large leads to exploding gradients, and one that is too small leads to vanishing gradients.
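To make the last point concrete, here is a minimal sketch (standard library only, no framework assumed) that pushes one random vector through a deep stack of purely linear layers. The width, depth, and the `weight_scale` knob are illustrative assumptions: weights are drawn with standard deviation `weight_scale / sqrt(width)`, so a scale above 1 makes the signal grow geometrically with depth and a scale below 1 makes it shrink. In a linear stack the backpropagated gradients pass through the transposes of the same matrices, so they grow or shrink in the same geometric fashion.

```python
import math
import random

def forward_std(weight_scale, width=64, depth=20, seed=0):
    """Push one random input through `depth` linear layers whose weights are
    drawn from N(0, (weight_scale / sqrt(width))**2); return the output std."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    sigma = weight_scale / math.sqrt(width)
    for _ in range(depth):
        W = [[rng.gauss(0.0, sigma) for _ in range(width)] for _ in range(width)]
        x = [sum(w * xi for w, xi in zip(row, x)) for row in W]  # x <- W x
    mean = sum(x) / width
    return math.sqrt(sum((v - mean) ** 2 for v in x) / width)

for scale in (0.5, 1.0, 1.5):
    print(f"scale={scale}: output std after 20 layers = {forward_std(scale):.3e}")
```

With these settings the output standard deviation typically lands many orders of magnitude below 1 for `scale=0.5` and far above 1 for `scale=1.5`, while `scale=1.0` stays roughly of order 1.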

What is a good initialization?

A good initialization should meet the following two conditions:

□ The activations of each layer do not saturate;

□ The activations of each layer are not 0.
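The two conditions can be checked numerically. The sketch below is an illustration under stated assumptions, not a standard diagnostic: it forwards one random input through a tanh network and reports, over all layers, the worst fraction of saturated units (|a| > 0.99) and of near-zero units (|a| < 1e-3); both thresholds are arbitrary choices. `weight_scale=1.0` gives Var(W) = 1/width, which for square layers coincides with the Glorot/Xavier variance 2/(fan_in + fan_out); Xavier initialization is a swapped-in example and is not discussed in the text above.

```python
import math
import random

def activation_health(weight_scale, width=64, depth=10, seed=0,
                      sat_thresh=0.99, zero_thresh=1e-3):
    """Forward one random input through a tanh MLP and report, over all layers,
    the worst fraction of saturated units (|a| > sat_thresh) and the worst
    fraction of near-zero units (|a| < zero_thresh)."""
    rng = random.Random(seed)
    a = [rng.gauss(0.0, 1.0) for _ in range(width)]
    sigma = weight_scale / math.sqrt(width)  # weight_scale=1.0 -> Var(W) = 1/width
    worst_sat = worst_zero = 0.0
    for _ in range(depth):
        W = [[rng.gauss(0.0, sigma) for _ in range(width)] for _ in range(width)]
        a = [math.tanh(sum(w * ai for w, ai in zip(row, a))) for row in W]
        worst_sat = max(worst_sat, sum(abs(v) > sat_thresh for v in a) / width)
        worst_zero = max(worst_zero, sum(abs(v) < zero_thresh for v in a) / width)
    return worst_sat, worst_zero

for scale in (0.1, 1.0, 3.0):
    sat, zero = activation_health(scale)
    print(f"scale={scale}: saturated={sat:.2f}, near-zero={zero:.2f}")
```

Typically the small scale fails the second condition (activations collapse toward 0 in deeper layers), the large scale fails the first (a large share of units saturate), and the Xavier-like scale passes both.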

All-zero initialization: every parameter is initialized to 0.

Disadvantage: neurons in the same layer learn the same features, because the symmetry between different neurons is never broken.

If all the weights are initialized to 0, every neuron in a layer produces the same output: apart from the output layer, every hidden-layer node receives a weighted input of zero. Because the network structure is symmetric, the first round of error backpropagation updates all of these parameters identically, and in every later update they stay identical, so the neurons cannot learn to extract different, useful features. For this reason, deep learning models never initialize all parameters to 0.
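The symmetry argument can be reproduced with a tiny network trained by hand-written gradient descent. Everything here is a hypothetical setup chosen for illustration: 2 inputs, 4 tanh hidden units, one linear output, no biases, and a toy target `sin(x1) + 0.5 * x2`. With `"zeros"`, the hidden activations are tanh(0) = 0 and every gradient that reaches the weights is zero, so nothing moves at all. With any other constant (`"const"`, here 0.5) the weights do change, but every hidden unit receives exactly the same update, so all rows of `W1` stay equal. Small random values break the symmetry.

```python
import math
import random

def train_two_layer(init, steps=200, lr=0.1, hidden=4, seed=0):
    """Train a tiny 2-input -> `hidden` tanh -> 1 linear network (no biases)
    with full-batch gradient descent on a toy regression task; return W1."""
    rng = random.Random(seed)
    pts = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(32)]
    data = [((x1, x2), math.sin(x1) + 0.5 * x2) for x1, x2 in pts]
    if init == "zeros":
        W1 = [[0.0, 0.0] for _ in range(hidden)]
        W2 = [0.0] * hidden
    elif init == "const":
        W1 = [[0.5, 0.5] for _ in range(hidden)]
        W2 = [0.5] * hidden
    else:  # small random values break the symmetry
        W1 = [[rng.gauss(0, 0.5), rng.gauss(0, 0.5)] for _ in range(hidden)]
        W2 = [rng.gauss(0, 0.5) for _ in range(hidden)]
    for _ in range(steps):
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gW2 = [0.0] * hidden
        for (x1, x2), y in data:
            h = [math.tanh(w[0] * x1 + w[1] * x2) for w in W1]
            err = sum(v * hj for v, hj in zip(W2, h)) - y  # dL/dy_hat for L = err**2 / 2
            for j in range(hidden):
                gW2[j] += err * h[j]
                dz = err * W2[j] * (1.0 - h[j] ** 2)  # backprop through tanh
                gW1[j][0] += dz * x1
                gW1[j][1] += dz * x2
        for j in range(hidden):
            W2[j] -= lr * gW2[j] / len(data)
            W1[j][0] -= lr * gW1[j][0] / len(data)
            W1[j][1] -= lr * gW1[j][1] / len(data)
    return W1

def rows_identical(rows):
    """True if every hidden unit ended up with exactly the same weights."""
    return all(r == rows[0] for r in rows)

for init in ("zeros", "const", "random"):
    print(init, "identical hidden rows:", rows_identical(train_two_layer(init)))
```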

Parameter adjustment

Batch size: choose a power of 2 (e.g., 32, 64, 128) so that it matches the computer's memory.
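One way to apply this rule is to take the largest power of 2 that still fits in the available memory. The helper below is hypothetical: `bytes_per_sample` is an assumed per-sample footprint estimate, and in practice memory use also depends on model size, optimizer state, and framework overhead, so the result should be confirmed empirically.

```python
def largest_pow2_batch(memory_budget_bytes, bytes_per_sample, max_batch=4096):
    """Return the largest power-of-2 batch size whose estimated footprint
    (batch * bytes_per_sample) fits in memory_budget_bytes, or 0 if none fits."""
    batch = 0
    candidate = 1
    while candidate <= max_batch and candidate * bytes_per_sample <= memory_budget_bytes:
        batch = candidate
        candidate *= 2  # only powers of 2 are considered
    return batch

# Hypothetical numbers: an 8 GiB budget and roughly 50 MiB per sample.
print(largest_pow2_batch(8 * 2**30, 50 * 2**20))  # 128
```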

Hyperparameter tuning methods

Trial and error, grid search, random search, Bayesian optimization (commonly using a Gaussian process as the surrogate model)
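A minimal sketch contrasting two of the listed methods, grid search and random search, on the same budget of 9 trials. `toy_validation_loss` is a hypothetical stand-in for "train a model and return its validation loss", built so that the learning rate matters much more than weight decay. A 3x3 grid only ever tries 3 distinct learning rates, while random search (sampled on a log scale) tries 9.

```python
import itertools
import math
import random

def toy_validation_loss(lr, weight_decay):
    """Hypothetical objective: minimum at lr = 10**-2.5; weight decay barely matters."""
    return (math.log10(lr) + 2.5) ** 2 + 0.01 * (math.log10(weight_decay) + 4) ** 2

def grid_search(objective, lrs, wds):
    """Evaluate every (lr, wd) combination; return (loss, lr, wd) of the best one."""
    return min((objective(lr, wd), lr, wd) for lr, wd in itertools.product(lrs, wds))

def random_search(objective, n_trials, seed=0):
    """Sample lr and wd log-uniformly; return (loss, lr, wd) of the best trial."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-5, -1)
        wd = 10 ** rng.uniform(-6, -2)
        trials.append((objective(lr, wd), lr, wd))
    return min(trials)

print("grid:  ", grid_search(toy_validation_loss, [1e-4, 1e-3, 1e-2], [1e-6, 1e-4, 1e-2]))
print("random:", random_search(toy_validation_loss, 9))
```

On this toy objective the grid's best loss is stuck at 0.25 because neither 1e-3 nor 1e-2 is close to the optimum, whereas random search has more chances to land near it; that is the usual argument for random search when only a few hyperparameters really matter.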

边栏推荐

- 腾讯、网易纷纷出手,火到出圈的元宇宙到底是个啥?

- Android foundation [Super detailed android storage method analysis (SharedPreferences, SQLite database storage)]

- [CV-Learning] Semantic Segmentation

- Golang环境变量设置(二)--GOMODULE&GOPROXY

- TensorFlow2学习笔记:7、优化器

- 【CV-Learning】线性分类器(SVM基础)

- [Deep Learning 21 Days Learning Challenge] 2. Complex sample classification and recognition - convolutional neural network (CNN) clothing image classification

- YOLOV5 V6.1 详细训练方法

- Learning curve learning_curve function in sklearn

- 学习资料re-id

猜你喜欢

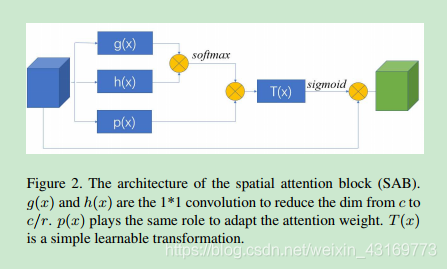

【论文阅读】Further Non-local and Channel Attention Networks for Vehicle Re-identification

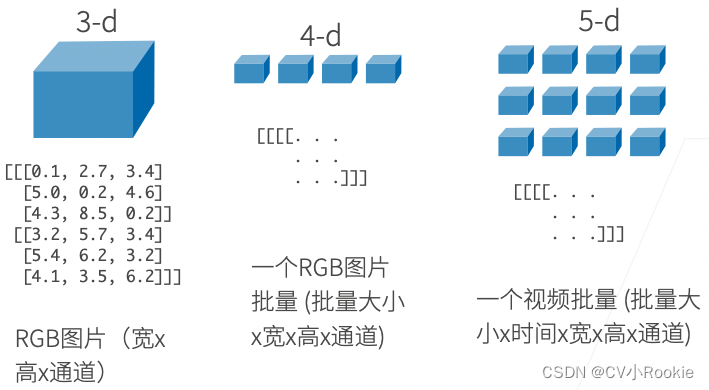

动手学深度学习__数据操作

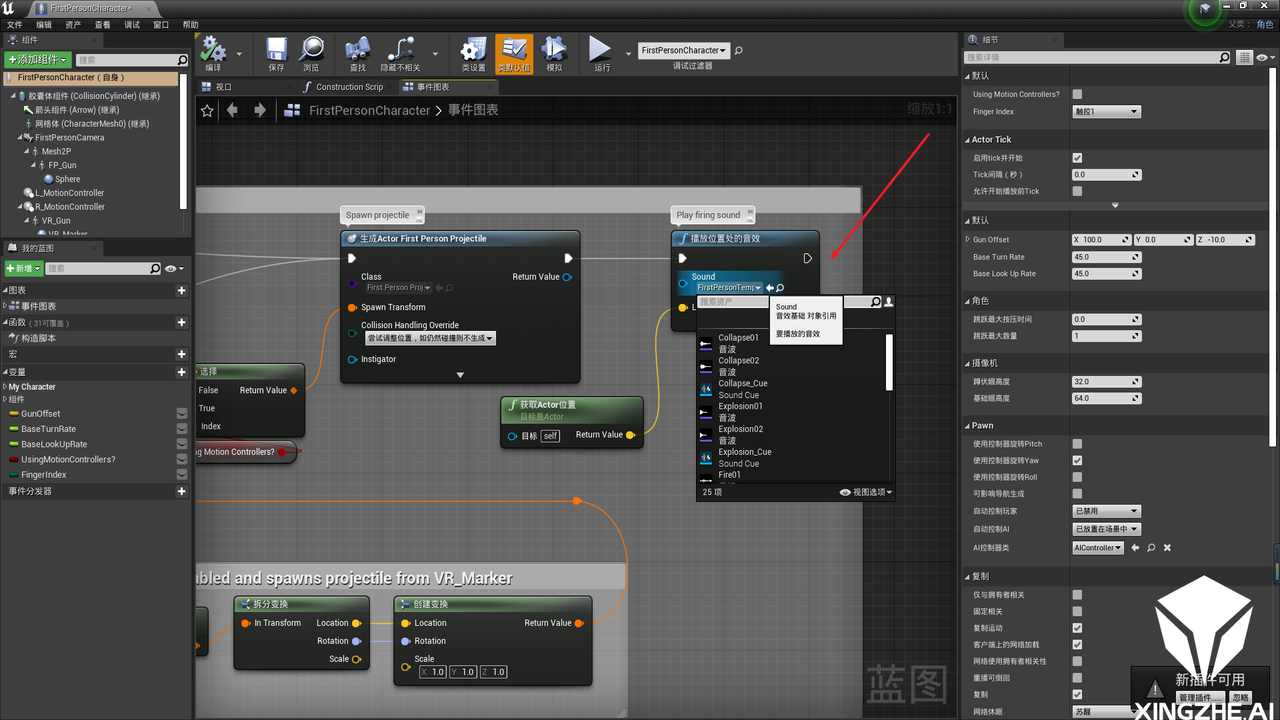

浅谈游戏音效测试点

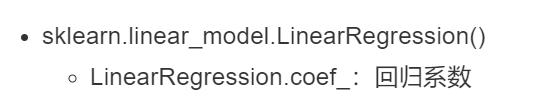

Introduction of linear regression 01 - API use cases

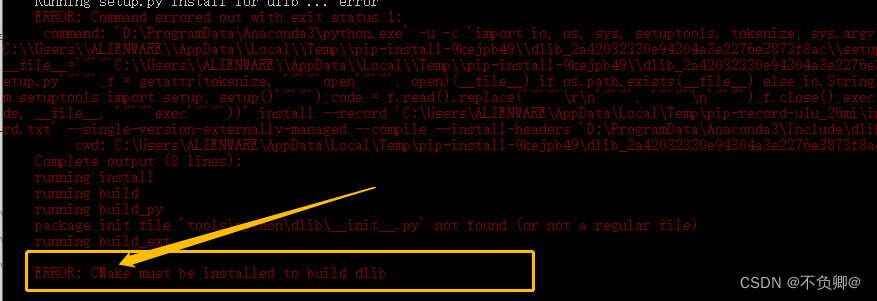

安装dlib踩坑记录,报错:WARNING: pip is configured with locations that require TLS/SSL

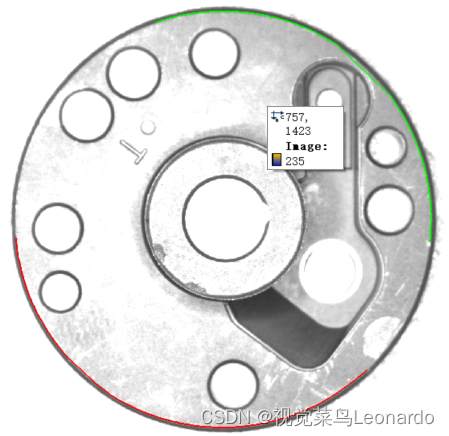

Halcon缺陷检测

Copy攻城狮信手”粘“来 AI 对对联

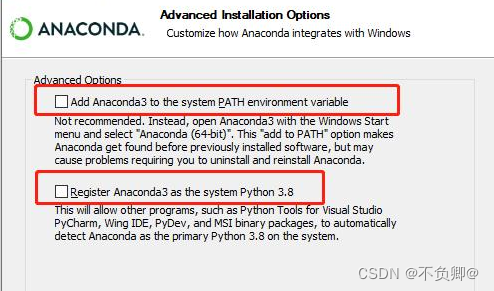

0, deep learning 21 days learning challenge 】 【 set up learning environment

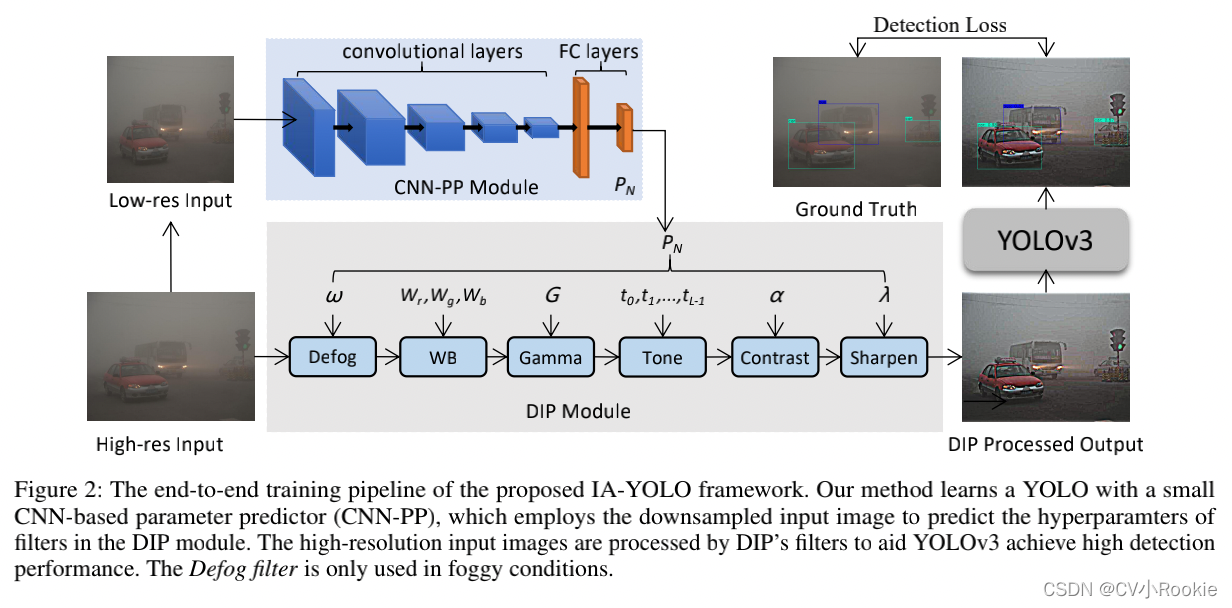

Image-Adaptive YOLO for Object Detection in Adverse Weather Conditions

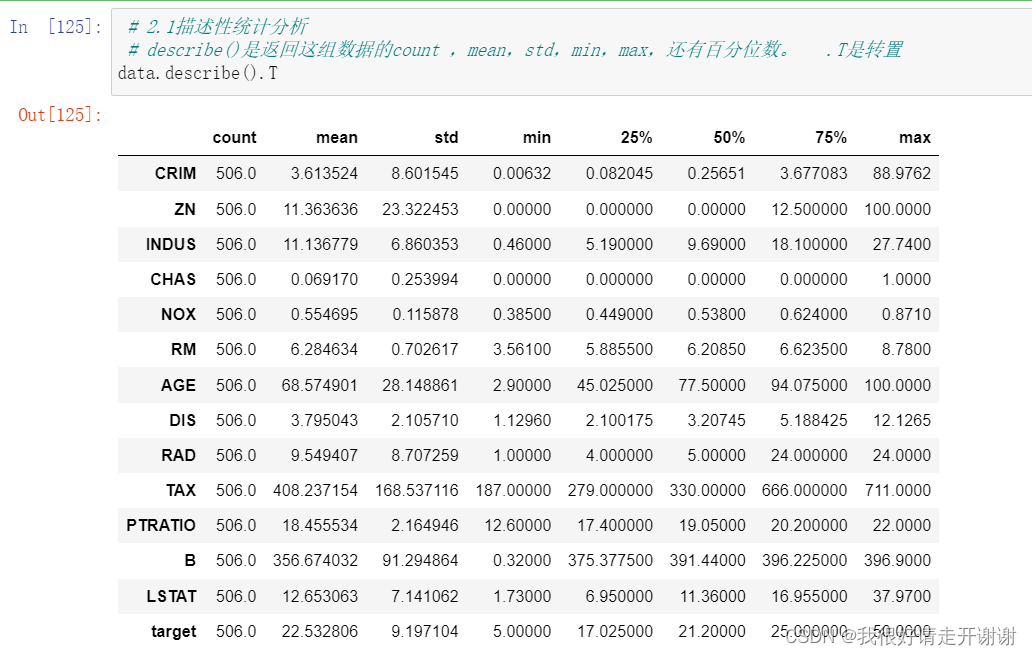

Linear Regression 02---Boston Housing Price Prediction

随机推荐

JPEG2jpg

PostgreSQL schema (Schema)

动手学深度学习_softmax回归

动手学深度学习_线性回归

ValueError: Expected 96 from C header, got 88 from PyObject

Pytorch语义分割理解

【深度学习21天学习挑战赛】3、使用自制数据集——卷积神经网络(CNN)天气识别

【Copy攻城狮日志】“一分钟”跑通MindSpore的LeNet模型

浅谈游戏音效测试点

[CV-Learning] Semantic Segmentation

动手学深度学习__张量

No matching function for call to ‘RCTBridgeModuleNameForClass‘

MFC 打开与保存点云PCD文件

【深度学习21天学习挑战赛】0、搭建学习环境

光条提取中的连通域筛除

度量学习(Metric learning)—— 基于分类损失函数(softmax、交叉熵、cosface、arcface)

MNIST手写数字识别 —— Lenet-5首个商用级别卷积神经网络

【CV-Learning】线性分类器(SVM基础)

【go语言入门笔记】13、 结构体(struct)

YOLOV5 V6.1 详细训练方法

Batch batchsize choose 2 exponential times with computerMemory match

Batch batchsize choose 2 exponential times with computerMemory match