[Deep Learning] A Three-Minute Introduction

2022-07-07 17:42:00 【SmartBrain】

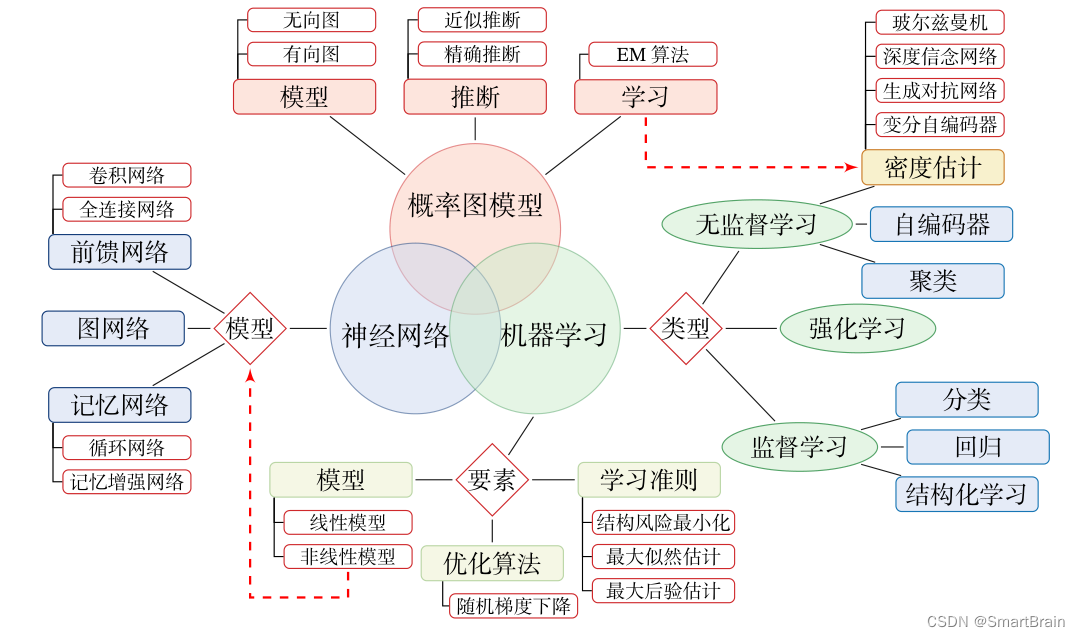

First, let's place AI, machine learning, and deep learning relative to each other. AI is the big circle: it includes computer vision, natural language processing, data mining, and more. Machine learning is a smaller circle inside it, and deep learning is in turn a part of machine learning.

Machine learning (Machine Learning, ML) learns (or "infers") general rules from a limited amount of observed data, and then uses those rules to make predictions on unseen data.
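The following minimal sketch is not from the original post. It makes the definition concrete by "learning a rule from limited observations": it fits a straight line y = w·x + b to three made-up points by ordinary least squares, then uses the learned rule on an input it has not seen. The data points and the helper name `fit_line` are illustrative assumptions.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = w * x + b for a single input feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the intercept makes the line pass through the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b

# Limited observations (hypothetical data).
xs = [1.0, 2.0, 3.0]
ys = [3.0, 5.0, 7.0]

w, b = fit_line(xs, ys)   # learned "law": y = 2x + 1
print(w, b)               # 2.0 1.0
print(w * 4.0 + b)        # prediction for the unseen input x = 4: 9.0
```

Real tasks have many features and noisy data. The pattern stays the same, though: estimate parameters from observed data, then apply them to new inputs.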

Machine learning is an important branch of artificial intelligence, and it has gradually become a key driver of AI's development. Traditional machine learning focuses mainly on how to learn a prediction model. Typically, you first represent the data as a set of features (Feature), which may be continuous numbers, discrete symbols, or other forms. These features are then fed into the prediction model, which outputs a prediction. This kind of machine learning can be regarded as shallow learning (Shallow Learning). An important characteristic of shallow learning is that it does not involve feature learning. Its features are extracted mainly by hand from human experience, or by feature transformation methods.

When we use machine learning to solve practical tasks, we face many forms of data, such as audio, images, and text, and the way features are constructed differs greatly between them. Image data can naturally be expressed as a continuous vector. There are many ways to do this: for example, concatenate all of the image's pixel values (grayscale or RGB values) into one vector. Text data is harder. It is made up of discrete symbols, and each symbol is stored inside the computer as a code that carries no meaning of its own, so it is usually difficult to find a suitable representation. In practice, therefore, applying a machine learning model generally involves the following steps:

(1) Data preprocessing: clean the raw data, for example by removing noise. In text classification, this includes removing stop words;

(2) Feature extraction: extract useful features from the raw data. In image classification, for example, this includes edge extraction and Scale Invariant Feature Transform (SIFT) features;

(3) Feature transformation: process the features further, for example by reducing or increasing their dimensionality. Many feature transformation methods are themselves machine learning methods. Dimensionality reduction can be done in two ways: feature extraction (Feature Extraction) and feature selection (Feature Selection). Commonly used feature transformation methods include principal component analysis (Principal Components Analysis, PCA) and linear discriminant analysis (Linear Discriminant Analysis, LDA).
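The steps above can be sketched in a few lines of plain Python. This is a simplified illustration, not the author's code. Images are flattened into pixel vectors. Text is preprocessed by dropping stop words, turned into bag-of-words count vectors (feature extraction), and then reduced by keeping only the most frequent words (a very simple form of feature selection). The tiny stop-word list, the sample sentences, and all function names are hypothetical.

```python
from collections import Counter

# Hypothetical stop-word list; real projects use a much larger one.
STOP_WORDS = {"the", "a", "is", "of", "and"}

def flatten_image(pixels):
    """Represent a grayscale image (rows of pixel values) as one continuous vector."""
    return [float(p) for row in pixels for p in row]

def preprocess(text):
    """Step (1): lowercase, split on whitespace, and drop stop words."""
    return [tok for tok in text.lower().split() if tok not in STOP_WORDS]

def build_vocabulary(docs):
    """Every distinct token across all documents, in alphabetical order."""
    return sorted({tok for doc in docs for tok in doc})

def bag_of_words(tokens, vocab):
    """Step (2), feature extraction: count how often each vocabulary word occurs."""
    counts = Counter(tokens)
    return [counts[word] for word in vocab]

def select_top_k(vectors, vocab, k):
    """Step (3), feature selection: keep the k columns with the largest total count."""
    totals = [sum(v[j] for v in vectors) for j in range(len(vocab))]
    keep = sorted(range(len(vocab)), key=lambda j: (-totals[j], j))[:k]
    keep.sort()  # keep the selected columns in their original order
    return [vocab[j] for j in keep], [[v[j] for j in keep] for v in vectors]

print(flatten_image([[0, 255], [128, 64]]))  # [0.0, 255.0, 128.0, 64.0]

docs = [preprocess(t) for t in ["The cat is on the mat", "A dog and a cat"]]
vocab = build_vocabulary(docs)                   # ['cat', 'dog', 'mat', 'on']
vectors = [bag_of_words(d, vocab) for d in docs]  # [[1, 0, 1, 1], [1, 1, 0, 0]]
print(select_top_k(vectors, vocab, 2))           # (['cat', 'dog'], [[1, 0], [1, 1]])
```

Every choice here is made by hand: which stop words to drop, how to count, and how many features to keep. That manual work is exactly what the later part of the post says deep learning tries to automate.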

So what does deep learning do? A deep neural network is better thought of not as one more algorithm, but as a method for feature extraction. In machine learning, data mining, and other intelligent tasks, what is the hardest part? It is usually not the algorithm; it is the data. Think of someone who wants to cook a good meal: can they do it without good ingredients? Certainly not. The point is that features matter a great deal: which features, and which kind of data, suit our model best. This is currently one of the more important questions in the field. To summarize, here is the basic workflow:

Step 1: collect the data;

Step 2: do feature engineering;

Step 3: choose an algorithm and build the model;

Step 4: evaluate and apply the model.
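The four steps can be walked through end to end in this small sketch (not from the original post). The data are hypothetical [height_cm, weight_kg] measurements. Feature engineering is a hand-chosen rescaling. The model is a nearest-centroid classifier, a deliberately simple stand-in for "choose an algorithm". All names and numbers are illustrative assumptions.

```python
from statistics import mean

# Step 1: collect data (hypothetical measurements: [height_cm, weight_kg] -> label).
raw_data = [([150.0, 50.0], "small"), ([155.0, 55.0], "small"),
            ([180.0, 80.0], "large"), ([185.0, 85.0], "large")]

def extract_features(raw):
    """Step 2 (feature engineering): rescale each measurement to a comparable range."""
    height_cm, weight_kg = raw
    return [height_cm / 200.0, weight_kg / 100.0]

def train_nearest_centroid(samples):
    """Step 3 (modeling): store the mean feature vector of each class."""
    by_label = {}
    for feats, label in samples:
        by_label.setdefault(label, []).append(feats)
    return {label: [mean(col) for col in zip(*rows)] for label, rows in by_label.items()}

def predict(model, feats):
    """Return the class whose centroid is closest (squared Euclidean distance)."""
    def dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(feats, centroid))
    return min(model, key=lambda label: dist(model[label]))

model = train_nearest_centroid([(extract_features(x), y) for x, y in raw_data])

# Step 4: evaluate on held-out samples (also hypothetical), then apply to new inputs.
test_set = [([158.0, 52.0], "small"), ([182.0, 88.0], "large")]
accuracy = sum(predict(model, extract_features(x)) == y for x, y in test_set) / len(test_set)
print(accuracy)                                        # 1.0
print(predict(model, extract_features([160.0, 58.0])))  # small
```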

How do deep learning and traditional machine learning differ in how they handle feature engineering? Given some data, I have to decide which features to choose and how to combine them. I also have to work out how to extract information from those features, and how to transform the data so it yields more valuable inputs. While thinking about such problems, have you ever wondered whether some algorithm, once it receives the data, could select the features by itself? It would learn which features are good and which are bad, gradually discover useful features, and work out which ones belong together and which should be decomposed or fused. If such an algorithm did this work for us, wouldn't our job be much easier? Deep learning can do this.

Why do I say that? Deep learning is the branch most closely tied to artificial intelligence, because to some extent it solves the "intelligence" part of the problem. In traditional machine learning, you select the data manually, select the features manually, choose the algorithm manually, and obtain a result manually. Where is the intelligence in that? It feels as though we are doing the task ourselves, and the model merely evaluates a mathematical formula. That is my view of traditional machine learning.

Deep learning is different: given the raw data, the network itself learns which features are most suitable and how best to combine them. That is what deep learning can achieve. Deep learning is a part of machine learning, and in practice it has become its core. What is the biggest problem it solves? Feature engineering. How does it do that?

In one sentence: the data and its features determine the upper limit of the model you can build. That is why data preprocessing and feature extraction always come first and are always the core. Then there is the choice of algorithm, say SVM, logistic regression, or random forest, and how you tune its parameters. Whatever you choose, these only determine how closely you approach that upper limit. So keep in mind that feature engineering matters more, and is more central, than algorithms and parameters. You may then ask how to choose features. For numerical features, this is fairly well understood. But if you are given text data or image data, how do you choose features? That is very, very difficult.
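The claim that features set the upper limit can be illustrated with a toy example (not from the original post). Here the hypothetical label is 1 whenever |x| > 1. A one-threshold rule (a "decision stump") on the raw value x cannot separate the classes, however carefully the threshold is tuned. The same rule applied to the engineered feature x² separates them perfectly. The data and the helper `best_stump_accuracy` are illustrative assumptions.

```python
def best_stump_accuracy(values, labels):
    """Best accuracy of a single-threshold rule 'predict 1 if v > t' (or its flipped version)."""
    points = sorted(set(values))
    # Candidate thresholds: one below the minimum, plus every midpoint between neighbours.
    candidates = [points[0] - 1.0] + [(a + b) / 2 for a, b in zip(points, points[1:])]
    best = 0
    for t in candidates:
        correct = sum((v > t) == bool(y) for v, y in zip(values, labels))
        # The flipped rule 'predict 1 if v <= t' gets exactly the other samples right.
        best = max(best, correct, len(values) - correct)
    return best / len(values)

# Hypothetical data: the label is 1 when |x| > 1.
x = [-3.0, -2.0, -0.5, 0.0, 0.5, 2.0, 3.0]
y = [1, 1, 0, 0, 0, 1, 1]

raw_acc = best_stump_accuracy(x, y)                         # tuning t cannot exceed 5/7
engineered_acc = best_stump_accuracy([v * v for v in x], y)  # x**2 makes it separable: 1.0
print(raw_acc, engineered_acc)
```

Tuning the threshold (the "algorithm and parameters") only moves you toward the ceiling that the chosen feature allows. Changing the feature raises the ceiling itself.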

We should think about the features as carefully as possible. Say we have an input X with features such as x1 and x2. One may be easy to interpret, while another is hard to obtain. Which features do we actually want? Disentangling their individual influence is also difficult. Finding good features is hard, and that is the core difficulty. So how does deep learning handle it? For now, everyone can treat it as a black box.

A neural network is a black box. How does it work? After it receives the raw data, it applies all kinds of complex transformations to that data. For now, just paint it black and treat it as a black box: a lot happens inside, and we will go through the details one by one later. At this point you only need to understand its overall behavior.

Given input data, the black box of a neural network automatically extracts features from it, producing a variety of features that let the computer recognize the input. For example, how does a computer recognize the digit 3? It extracts from the image a set of features that it knows. This is a genuine learning process: the computer learns which features suit it best. That is the core of deep learning, and you will see it again as you work through deep learning problems later. If you search online (on Baidu, for example), you will find all sorts of explanations of deep learning. I think some of them are one-sided. I suggest understanding it the way I describe here: deep learning learns which features are most appropriate. What comes after the features? You can feed them into logistic regression for classification, or even pass them to an SVM. So the core of the problem is how to characterize the features.
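Here is a small illustration of "the network produces features, and a simple linear model sits on top" (not from the original post). It uses the classic XOR example. The four XOR inputs cannot be separated by any linear rule on the raw inputs. A hidden layer of two ReLU units maps them to new features, and a plain linear output then computes XOR exactly. The weights here are hand-picked for clarity. In a real network they would be learned by training, and the final linear layer could just as well be logistic regression or an SVM.

```python
def relu(z):
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)

def hidden_features(x1, x2):
    """Hidden layer: two ReLU units with hand-picked (not learned) weights."""
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    return h1, h2

def linear_output(h1, h2):
    """Output layer: an ordinary linear function of the hidden features."""
    return 1.0 * h1 - 2.0 * h2

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    h = hidden_features(x1, x2)
    # (0, 1) and (1, 0) map to the same feature vector, so a linear rule can now separate XOR.
    print((x1, x2), "->", h, "->", linear_output(*h))
```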

By the way, what can deep learning do? Take self-driving cars. A self-driving car needs no human driver; it drives itself. While driving, it has to check whether there is a car in front of it and whether anyone is behind it. For example, the car ahead may be traveling at 80 km/h while you are traveling at 100 km/h. Tesla's self-driving system was involved in a fatal accident. A car was driving on the highway, and a tractor-trailer was in front of it. The trailer was painted with something like a blue-sky-and-white-clouds pattern. Tesla's system did not recognize it as a truck, mistook it for sky and clouds, and crashed straight into it. Isn't that a real problem?

So where is deep learning mainly used? It is used less in some traditional data mining tasks, though there is no hard limit. Its main use is computer vision, and it is also used to model text data. Face detection and face recognition are common examples. At the airport, staff used to check your ticket and stare at you for a long time. Now they barely need to look at you at check-in. You show your ID card and look into the camera, and once the system says you have been recognized, you can pass.

Deep learning is not particularly well suited to mobile devices. Why? Its main drawback is the amount of computation. A logistic regression or random forest model might get by with a few dozen parameters. A neural network, by contrast, may have tens of millions of parameters, which may sound scary. Why can it learn features automatically? Because of this enormous number of parameters. Tens of millions is actually on the small side; hundreds of millions of parameters are very common.

Now suppose we use an optimization algorithm to train this neural network. Will it be very, very slow? Every update has to adjust tens of millions of parameters instead of, say, 50, so the speed cannot possibly be the same. The biggest problem of deep learning is therefore that it can be too slow. Suppose you want to port a product to mobile phones. A deep learning algorithm will certainly be much stronger than a traditional model, but it may simply not run fast enough. This may be its biggest problem.
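The gap in parameter counts can be made concrete with a little arithmetic (not from the original post). A fully connected layer from n_in to n_out units has n_in·n_out weights plus n_out biases. The sketch compares logistic regression on 50 features with a hypothetical fully connected network on a flattened 224×224 RGB image. The layer sizes are illustrative assumptions, not any specific published model. A gradient-descent step updates every parameter, so the cost of each step grows with these counts.

```python
def dense_param_count(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Logistic regression on 50 features: one layer with a single output unit.
print(dense_param_count([50, 1]))                              # 51

# Hypothetical fully connected net on a flattened 224x224x3 image, two 4096-unit hidden layers.
print(dense_param_count([224 * 224 * 3, 4096, 4096, 1000]))    # 637445096
```

Even this simple (and wasteful) design lands in the "hundreds of millions" range mentioned above. Convolutional networks share weights to keep the count lower, but they still need far more parameters than a classic shallow model.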

Deep learning is also very popular in medicine right now, for example in cell-level testing, where detecting cancer cells is a common task. It is also used to detect changes in cells and to carry out other complex analyses.

Face-swapping is another example. It looks like a complicated process, but it is not hard: a neural network can do it relatively easily. What else? Consider old films that have been re-released in new versions. When I first entered university, Titanic was re-released in 3D. What is the difference between the 3D version and the original? The 3D version looks clearer. It is essentially a higher-resolution version, that is, a resolution reconstruction. Neural networks can colorize old footage automatically, and they can also perform super-resolution reconstruction automatically.

Neural networks come in many variants. Basically, as long as you can think of something, a network can get you about 80% of the way there. Every year major conferences present all kinds of neural network variants, far too many derived versions to list. Together they cover nearly every aspect of life, but the core applications are still images and text. The figure here can be seen as marking the rise of deep learning. First, some history. Fei-Fei Li, who specializes in computer vision, wondered whether she could build a dataset. Over six years, she called on many universities in the United States to label images. What does collection and annotation mean here? The machine learning and deep learning we practice are usually supervised problems, and all the neural network algorithms discussed next are supervised as well. So in tasks like the ones above, what labels do you need? For example, the true location of a face. If you want to do facial keypoint detection, you need to know the true keypoint locations during training. Don't underestimate this kind of labeling work.

The result was a dataset called ImageNet, which still exists today. Its data consists of publicly available photos, and anyone can download them. A copy is available on Baidu Netdisk, but it is very, very large, and I don't recommend playing with it. It is simply too big: unless you are doing research on this dataset or working on a large project, you probably won't need it for now.

As you can see, ImageNet contains about 14 million (14M) images in about 22,000 (22k) categories. By data volume, it was arguably the largest image classification dataset of its kind. It covers more than 20,000 kinds of objects, including just about everything you can think of in daily life. After creating the dataset, she launched a competition around it, the ImageNet image classification challenge. Back then, around 2009, deep learning was hardly mentioned, and nobody was using it. Why? First, it was slow. Second, its effectiveness had not been demonstrated: people had not really tried it, and the results might well have been mediocre. That remained the case until 2012.

In 2012, Alex Krizhevsky won the image classification competition using a deep neural network. His first-place result beat the second place by more than ten percentage points. What did the runner-up use? A traditional machine learning approach built on hand-crafted features, the kind of method discussed earlier. Suddenly everyone realized that neural networks could achieve excellent results in image classification, and in computer vision more broadly. Deep learning officially took off in 2012 and became widely recognized. Since then, more and more researchers and practitioners have entered the field.

Why have computer vision and natural language processing developed so rapidly? Mainly because of the 2012 turning point. Every year, major conferences publish many excellent papers, and major competitions produce strong write-ups. Everyone picks these papers up and experiments with them. Follow-up practical projects, including companies' business projects, are built on them. That was the starting point.

You should also know the following figure, which shows when deep learning gives better results. It comes from a Baidu report. Baidu has many AI products. When the data scale is small, is there any difference between deep learning and traditional AI algorithms? No. Deep learning algorithms are very slow, while traditional algorithms are quite fast. Should you use deep learning when the data scale is small? Not at all. When does deep learning have room to shine? The larger the data, the better. A dataset of only a few thousand samples is usually too small. Generally, you need tens of thousands or even millions of samples before deep learning is worthwhile.

边栏推荐

猜你喜欢

Robot engineering lifelong learning and work plan-2022-

本周小贴士#136:无序容器

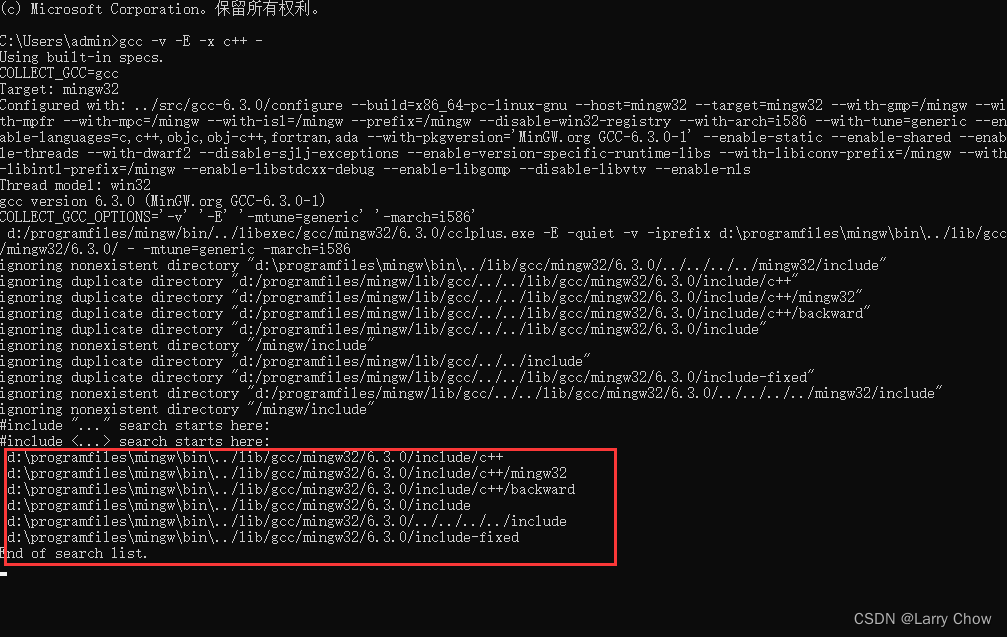

Vscode three configuration files about C language

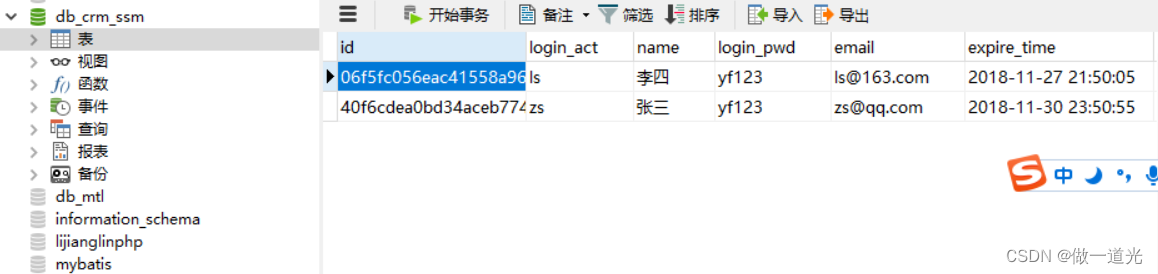

第2章搭建CRM项目开发环境(数据库设计)

![[distributed theory] (II) distributed storage](/img/51/473a8f6a0d109277eab54d72156539.png)

[distributed theory] (II) distributed storage

js拉下帷幕js特效显示层

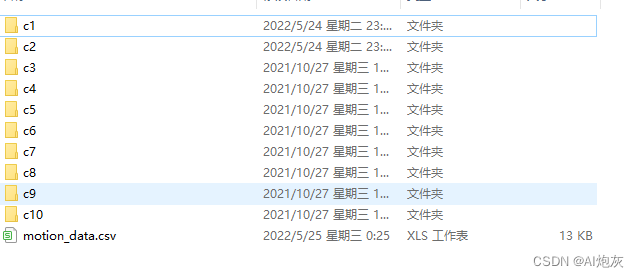

Pytorch中自制数据集进行Dataset重写

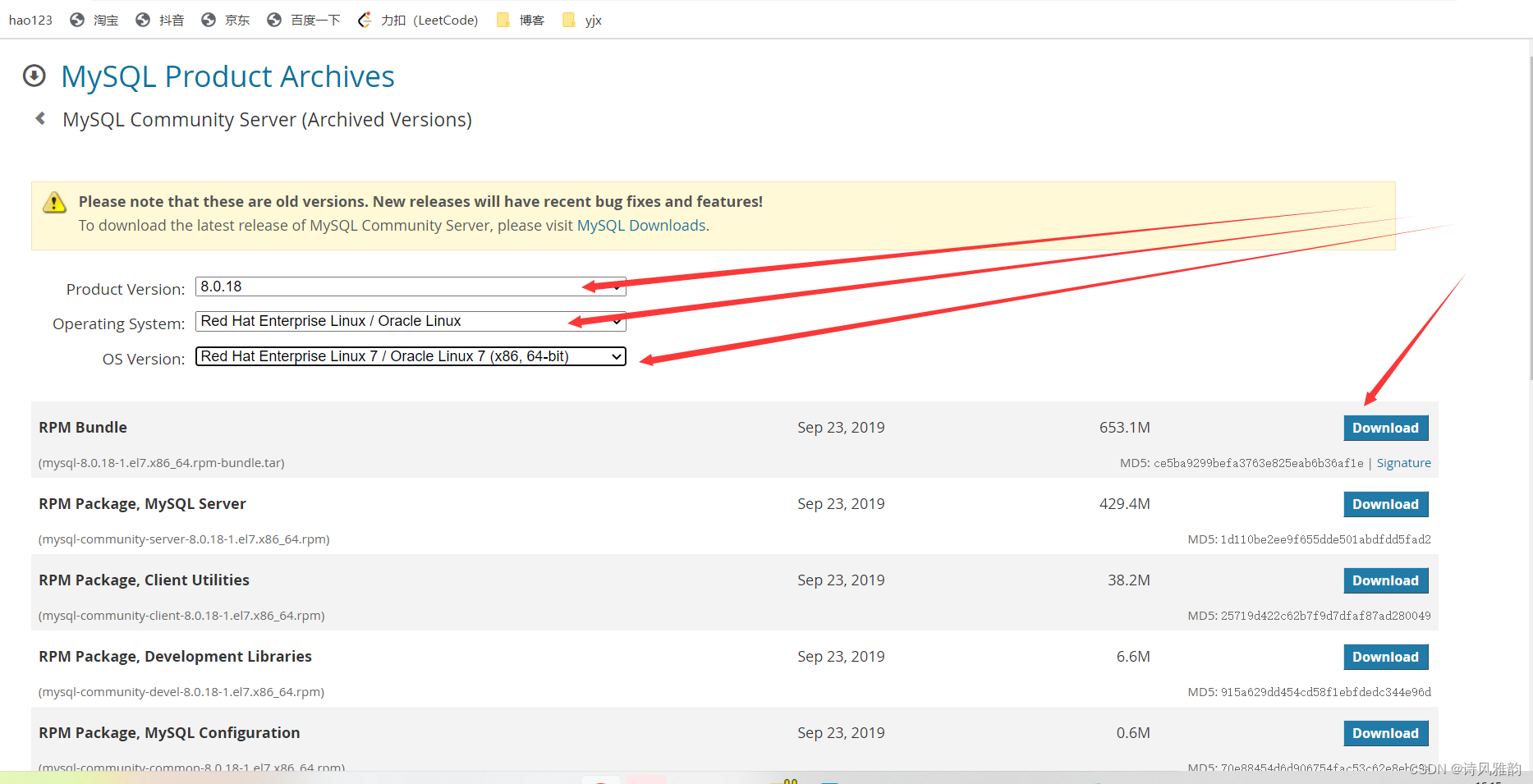

mysql官网下载:Linux的mysql8.x版本(图文详解)

【TPM2.0原理及应用指南】 12、13、14章

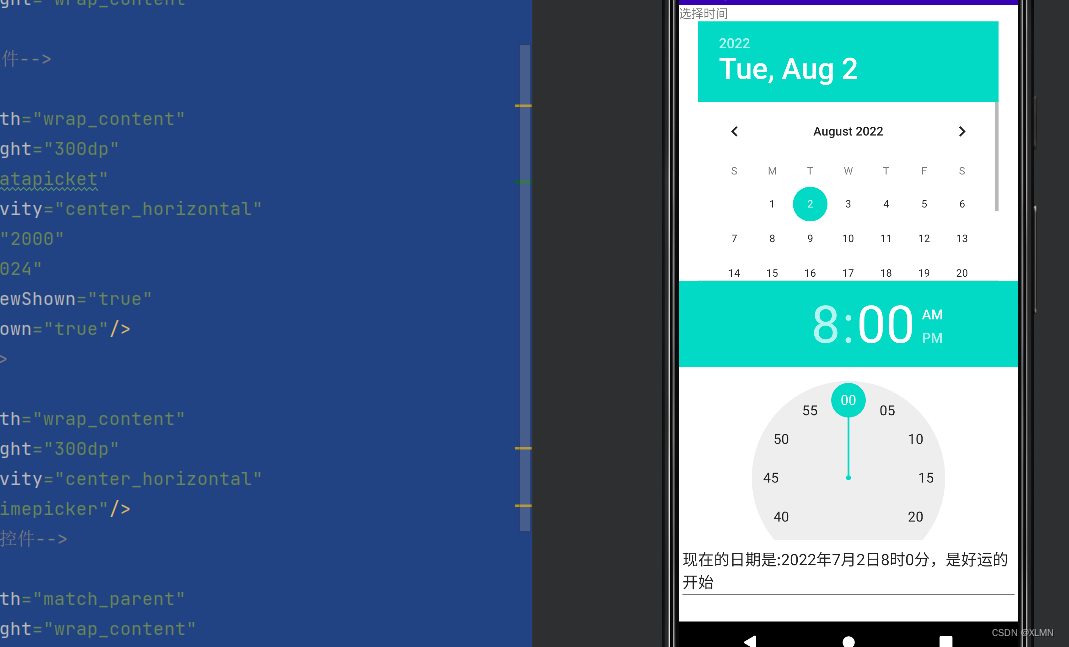

datepicket和timepicket,日期、时间选择器的功能和用法

随机推荐

本周小贴士#140:常量:安全习语

大笨钟(Lua)

Functions and usage of imageswitch

serachview的功能和用法

Functions and usage of ratingbar

【解惑】App处于前台,Activity就不会被回收了?

Use onedns to perfectly solve the optimization problem of office network

什么是敏捷测试

Function and usage of textswitch text switcher

第2章搭建CRM项目开发环境(数据库设计)

yolo训练过程中批量导入requirments.txt中所需要的包

Problems encountered in Jenkins' release of H5 developed by uniapp

Establishment of solid development environment

到底有多二(Lua)

Functions and usage of tabhost tab

Ratingbar的功能和用法

Solid function learning

深度学习-制作自己的数据集

简单的loading动画

MySQL index hit level analysis