Restore backup data on S3 compatible storage with br

2022-07-06 08:03:00 【Tianxiang shop】

This document describes how to restore SST backup data stored on S3-compatible storage to a TiDB cluster in an AWS Kubernetes environment.

The restore method described here is implemented with the Custom Resource Definition (CRD) of TiDB Operator and uses BR under the hood. BR, short for Backup & Restore, is a command-line tool for distributed backup and restore of TiDB cluster data.

Usage scenarios

After backing up TiDB cluster data to Amazon S3 with BR, if you need to restore the backed-up SST (key-value pair) files from Amazon S3 to a TiDB cluster, follow this document to restore the data with BR.

Note

- BR supports only TiDB v3.1 and later.

- Data restored by BR cannot be replicated to a downstream cluster, because BR imports SST files directly and the downstream cluster currently has no way to obtain the upstream SST files.

This document assumes that the backup data stored under the spec.s3.prefix folder of the spec.s3.bucket bucket on Amazon S3 is restored to the TiDB cluster demo2 in namespace test2. The procedure is as follows.

Step 1: Prepare the restore environment

Before using BR to restore backup data from S3-compatible storage to TiDB, take the following steps to prepare the restore environment.

1. Download the file backup-rbac.yaml, and run the following command to create the RBAC resources required for the restore in the test2 namespace:

       kubectl apply -f backup-rbac.yaml -n test2

2. Grant permissions to the remote storage.

   - If the data to be restored is on Amazon S3, there are three ways to grant permissions. See the document AWS account authorization.

   - If the data to be restored is on other S3-compatible storage, such as Ceph or MinIO, you can grant permissions with AccessKey and SecretKey. See the document Grant permissions by AccessKey and SecretKey.

3. If your TiDB version is earlier than v4.0.8, you also need to complete the following steps. If your TiDB version is v4.0.8 or later, skip this step.

   1. Make sure that you have the SELECT and UPDATE privileges on the mysql.tidb table of the database to be restored. These privileges are used to adjust the GC time before and after the restore.

   2. Create the restore-demo2-tidb-secret secret, which stores the password of the root account used to access the TiDB cluster:

          kubectl create secret generic restore-demo2-tidb-secret --from-literal=password=${password} --namespace=test2
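For the AccessKey and SecretKey authorization mentioned above, the credentials are typically stored in a Kubernetes Secret that the Restore CR references through `secretName`. The sketch below assumes the Secret is named `s3-secret` (the name used in the Restore CR in Step 2) and that its keys are called `access_key` and `secret_key`; confirm the key names against the authorization document before relying on them. The function name `create_s3_secret` and the environment variables in the example are illustrative only.

```shell
#!/usr/bin/env bash
# Sketch: store S3 credentials in a Secret for the Restore CR to reference.
# Assumptions: Secret name "s3-secret", key names access_key / secret_key.
create_s3_secret() {
  local namespace="$1" access_key="$2" secret_key="$3"
  kubectl create secret generic s3-secret \
    --from-literal=access_key="${access_key}" \
    --from-literal=secret_key="${secret_key}" \
    --namespace="${namespace}"
}

# Example (placeholder variables; substitute your own credentials):
# create_s3_secret test2 "${AWS_ACCESS_KEY_ID}" "${AWS_SECRET_ACCESS_KEY}"
```

Passing the credentials as arguments keeps them out of the script itself, although they may still appear in shell history if typed literally.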

Step 2: Restore the specified backup data to the TiDB cluster

Depending on the remote storage authorization method chosen in the previous step, use the corresponding method below to restore the backup data to TiDB:

Method 1: If you grant permissions with accessKey and secretKey, create the Restore CR to restore the cluster data:

    kubectl apply -f restore-aws-s3.yaml

The content of restore-aws-s3.yaml is as follows:

    ---
    apiVersion: pingcap.com/v1alpha1
    kind: Restore
    metadata:
      name: demo2-restore-s3
      namespace: test2
    spec:
      br:
        cluster: demo2
        clusterNamespace: test2
        # logLevel: info
        # statusAddr: ${status_addr}
        # concurrency: 4
        # rateLimit: 0
        # timeAgo: ${time}
        # checksum: true
        # sendCredToTikv: true
      # # Only needed for TiDB Operator < v1.1.10 or TiDB < v4.0.8
      # to:
      #   host: ${tidb_host}
      #   port: ${tidb_port}
      #   user: ${tidb_user}
      #   secretName: restore-demo2-tidb-secret
      s3:
        provider: aws
        secretName: s3-secret
        region: us-west-1
        bucket: my-bucket
        prefix: my-folder

Method 2: If you grant permissions by binding IAM to the Pod, create the Restore CR to restore the cluster data:

    kubectl apply -f restore-aws-s3.yaml

The content of restore-aws-s3.yaml is as follows:

    ---
    apiVersion: pingcap.com/v1alpha1
    kind: Restore
    metadata:
      name: demo2-restore-s3
      namespace: test2
      annotations:
        iam.amazonaws.com/role: arn:aws:iam::123456789012:role/user
    spec:
      br:
        cluster: demo2
        sendCredToTikv: false
        clusterNamespace: test2
        # logLevel: info
        # statusAddr: ${status_addr}
        # concurrency: 4
        # rateLimit: 0
        # timeAgo: ${time}
        # checksum: true
      # Only needed for TiDB Operator < v1.1.10 or TiDB < v4.0.8
      to:
        host: ${tidb_host}
        port: ${tidb_port}
        user: ${tidb_user}
        secretName: restore-demo2-tidb-secret
      s3:
        provider: aws
        region: us-west-1
        bucket: my-bucket
        prefix: my-folder

Method 3: If you grant permissions by binding IAM to the ServiceAccount, create the Restore CR to restore the cluster data:

    kubectl apply -f restore-aws-s3.yaml

The content of restore-aws-s3.yaml is as follows:

    ---
    apiVersion: pingcap.com/v1alpha1
    kind: Restore
    metadata:
      name: demo2-restore-s3
      namespace: test2
    spec:
      serviceAccount: tidb-backup-manager
      br:
        cluster: demo2
        sendCredToTikv: false
        clusterNamespace: test2
        # logLevel: info
        # statusAddr: ${status_addr}
        # concurrency: 4
        # rateLimit: 0
        # timeAgo: ${time}
        # checksum: true
      # Only needed for TiDB Operator < v1.1.10 or TiDB < v4.0.8
      to:
        host: ${tidb_host}
        port: ${tidb_port}
        user: ${tidb_user}
        secretName: restore-demo2-tidb-secret
      s3:
        provider: aws
        region: us-west-1
        bucket: my-bucket
        prefix: my-folder

When configuring restore-aws-s3.yaml, refer to the following information:

- For the configuration of S3-compatible storage, see the S3 storage field description.

- Some parameters in .spec.br are optional, such as logLevel, statusAddr, concurrency, rateLimit, checksum, timeAgo, and sendCredToTikv. For more details on the .spec.br fields, see the BR field description.

- If your TiDB version is v4.0.8 or later, BR automatically adjusts the tikv_gc_life_time parameter, so you do not need to configure the spec.to field in the Restore CR.

- For more details on the Restore CR fields, see the Restore CR field description.

After creating the Restore CR, check the restore status with the following command:

    kubectl get rt -n test2 -o wide
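If the restore does not progress as expected, the same resource can be inspected in more detail. The following is a minimal sketch, assuming the Restore CR is named `demo2-restore-s3` in namespace `test2` as in the examples above. The helper name `inspect_restore` is hypothetical, and only standard kubectl subcommands are used.

```shell
#!/usr/bin/env bash
# Sketch: show the summary row, then the detailed status of one Restore CR.
inspect_restore() {
  local name="$1" namespace="$2"
  # Summary row, the same view as `kubectl get rt`, limited to one resource.
  kubectl get restore "${name}" -n "${namespace}" -o wide
  # Full status and recent events recorded for the same resource.
  kubectl describe restore "${name}" -n "${namespace}"
}

# Example:
# inspect_restore demo2-restore-s3 test2
```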

边栏推荐

- 649. Dota2 Senate

- From monomer structure to microservice architecture, introduction to microservices

- ESP系列引脚說明圖匯總

- Transformer principle and code elaboration

- P3047 [usaco12feb]nearby cows g (tree DP)

- (lightoj - 1410) consistent verbs (thinking)

- Artcube information of "designer universe": Guangzhou implements the community designer system to achieve "great improvement" of urban quality | national economic and Information Center

- 在 uniapp 中使用阿里图标

- Esrally domestic installation and use pit avoidance Guide - the latest in the whole network

- Flash return file download

猜你喜欢

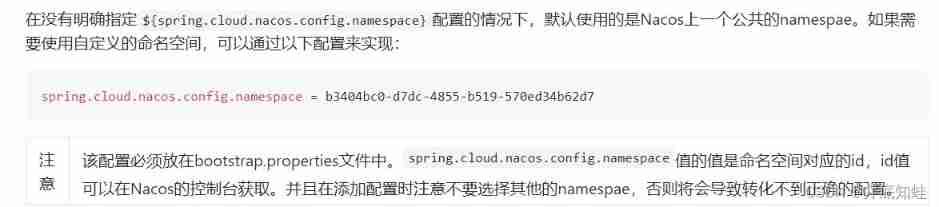

Nacos Development Manual

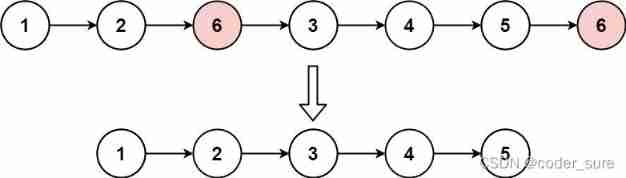

Leetcode question brushing record | 203_ Remove linked list elements

IP lab, the first weekly recheck

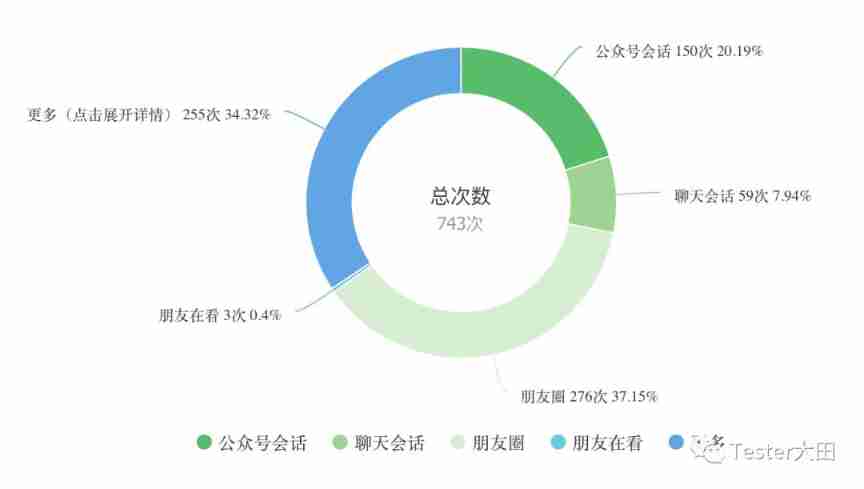

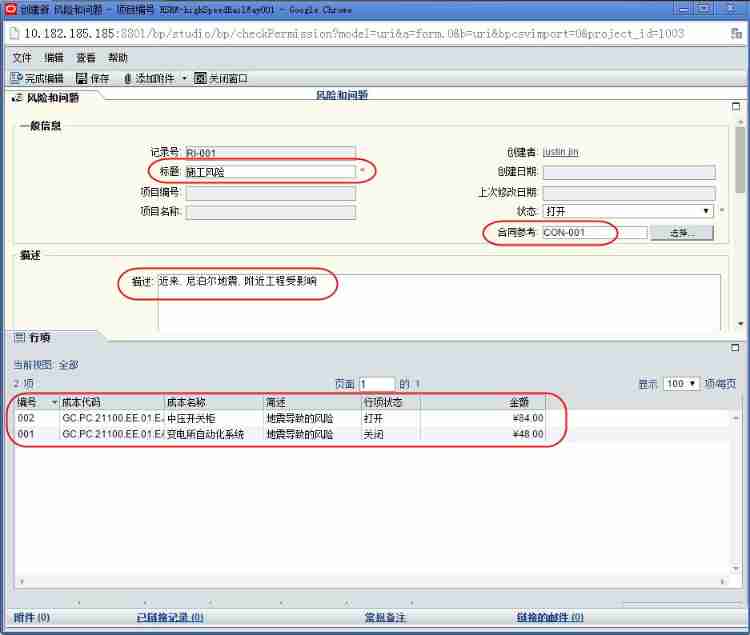

Qualitative risk analysis of Oracle project management system

继电反馈PID控制器参数自整定

Inspiration from the recruitment of bioinformatics analysts in the Department of laboratory medicine, Zhujiang Hospital, Southern Medical University

![[Yugong series] February 2022 U3D full stack class 011 unity section 1 mind map](/img/c3/1b6013bfb2441219bf621c3f0726ea.jpg)

[Yugong series] February 2022 U3D full stack class 011 unity section 1 mind map

![07- [istio] istio destinationrule (purpose rule)](/img/be/fa0ad746a79ec3a0d4dacd2896235f.jpg)

07- [istio] istio destinationrule (purpose rule)

![[nonlinear control theory]9_ A series of lectures on nonlinear control theory](/img/a8/03ed363659a0a067c2f1934457c106.png)

[nonlinear control theory]9_ A series of lectures on nonlinear control theory

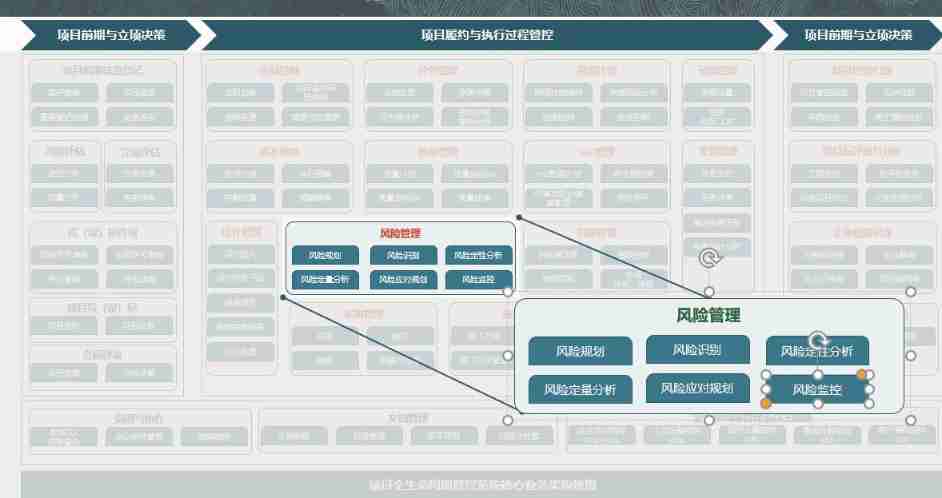

Risk planning and identification of Oracle project management system

随机推荐

Go learning notes (3) basic types and statements (2)

备份与恢复 CR 介绍

Circuit breaker: use of hystrix

Hackathon ifm

[research materials] 2022 China yuancosmos white paper - Download attached

Transformer principle and code elaboration

Nft智能合约发行,盲盒,公开发售技术实战--拼图篇

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

Notes on software development

【T31ZL智能视频应用处理器资料】

你想知道的ArrayList知识都在这

onie支持pice硬盘

Understanding of law of large numbers and central limit theorem

1204 character deletion operation (2)

图像融合--挑战、机遇与对策

从 SQL 文件迁移数据到 TiDB

Migrate data from CSV files to tidb

shu mei pai

【云原生】手把手教你搭建ferry开源工单系统

Hcip day 16