
Image fusion -- challenges, opportunities and countermeasures

2022-07-06 08:04:00 【Timer-419】

Posts in this image fusion blog series:

- For the most complete collection of image fusion papers and code, see: The most complete collection of image fusion papers and code

- For a summary of image fusion papers, see: Summary of image fusion

- For image fusion evaluation metrics, see: Evaluation metrics for infrared and visible image fusion

- For commonly used image fusion datasets, see: Commonly used image fusion datasets

- For papers and code on general image fusion frameworks, see: General image fusion framework papers and code

- For deep-learning-based infrared and visible image fusion papers and code, see: Deep-learning-based infrared and visible image fusion papers and code

- For more detailed infrared and visible image fusion code, see: Infrared and visible image fusion papers and code

- For deep-learning-based multi-exposure image fusion papers and code, see: Deep-learning-based multi-exposure image fusion papers and code

- For deep-learning-based multi-focus image fusion papers and code, see: Deep-learning-based multi-focus image fusion (Multi-focus Image Fusion) papers and code

- For deep-learning-based panchromatic image sharpening papers and code, see: Deep-learning-based panchromatic image sharpening (Pansharpening) papers and code

- For deep-learning-based medical image fusion papers and code, see: Deep-learning-based medical image fusion (Medical image fusion) papers and code

- For color image fusion programs, see: Color image fusion

- For SeAFusion, the first image fusion framework that incorporates high-level vision tasks, see: SeAFusion: The first image fusion framework combined with high-level vision tasks


As an important image enhancement technique, image fusion plays a vital role in areas such as object detection, semantic segmentation, clinical diagnosis, remote sensing monitoring, video surveillance and military reconnaissance. In recent years, with the continuous progress of deep learning, deep-learning-based image fusion algorithms have sprung up. Although deep learning has achieved great success in image fusion, several serious challenges and problems remain to be overcome.

Unregistered image fusion:

Existing image fusion algorithms require the source images to be strictly aligned in space. In practice, however, because of lens distortion, scale differences, parallax and shooting position, neither images captured by different sensors nor images captured by a digital camera under different settings are strictly aligned. Usually, mature image registration algorithms or manual annotation are needed to register the source images before fusion. Existing registration algorithms can successfully register images of the same modality, but for multimodal images there is currently no registration algorithm that is robust on large-scale multimodal data. In fact, multimodal image fusion can weaken the modal differences in multimodal data and reduce the impact of redundant information on the registration process. Therefore, in fusion scenarios with pronounced modal differences (such as infrared and visible image fusion and medical image fusion), robust algorithms in which image registration and image fusion reinforce each other are worth developing.
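To make the same-modality case concrete, here is a minimal NumPy sketch of phase correlation, a classic technique for recovering a pure global translation between two images. It is not the registration method discussed in the post, and the function name `estimate_translation` is hypothetical. It assumes the misalignment is an integer, circular shift with no rotation, scaling or modality gap, which is exactly why such simple methods break down on multimodal pairs.

```python
import numpy as np


def estimate_translation(ref: np.ndarray, mov: np.ndarray) -> tuple[int, int]:
    """Estimate the integer shift (dy, dx) such that mov ~= np.roll(ref, (dy, dx), axis=(0, 1)).

    Assumes a pure circular translation between two same-modality images.
    """
    f_ref = np.fft.fft2(ref)
    f_mov = np.fft.fft2(mov)
    cross = f_mov * np.conj(f_ref)
    cross /= np.abs(cross) + 1e-12          # keep only the phase difference
    corr = np.fft.ifft2(cross).real         # ideally a single peak at the shift
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = ref.shape
    # Peaks past the midpoint correspond to negative shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.random((64, 64))
    mov = np.roll(ref, shift=(5, -3), axis=(0, 1))
    print(estimate_translation(ref, mov))  # (5, -3)
```

Once the shift is known, `np.roll(mov, (-dy, -dx), axis=(0, 1))` brings the moving image back onto the reference grid before fusion.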

High-level vision task-driven image fusion:

Image fusion can fully integrate the complementary information in the source images to characterize the imaging scene comprehensively, which makes it possible to improve the performance of subsequent vision tasks. However, most existing fusion algorithms ignore the actual needs of these downstream tasks and pursue only better visual quality and evaluation metrics. Although SeAFusion and TarDAL have made preliminary explorations, they connect image fusion and high-level vision tasks only through the loss function. In the future, the requirements of high-level vision tasks should be modeled more deeply into the whole image fusion process to further improve their performance.
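The loss-function coupling mentioned above can be illustrated with a NumPy sketch of a joint loss loosely in the spirit of SeAFusion: an intensity term pulls the fused image toward the element-wise maximum of the sources, a texture term does the same for Sobel gradient magnitudes, and a pixel-wise segmentation cross-entropy is added with weight `beta`. The function names, the weights `alpha` and `beta`, and the exact form of each term are assumptions for illustration, not the published formulation; the fusion and segmentation networks that would produce `fused` and `seg_logits` are omitted.

```python
import numpy as np


def sobel_magnitude(img: np.ndarray) -> np.ndarray:
    """L1 gradient magnitude |Gx| + |Gy| from 3x3 Sobel filters (edge padding)."""
    kx = np.array([[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]])
    ky = kx.T
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))
    for i in range(3):
        for j in range(3):
            window = p[i:i + h, j:j + w]
            gx += kx[i, j] * window
            gy += ky[i, j] * window
    return np.abs(gx) + np.abs(gy)


def fusion_loss(fused, ir, vis, alpha=10.0):
    """Intensity term + alpha * texture term (alpha is a hypothetical weight)."""
    l_int = np.mean(np.abs(fused - np.maximum(ir, vis)))
    target_grad = np.maximum(sobel_magnitude(ir), sobel_magnitude(vis))
    l_tex = np.mean(np.abs(sobel_magnitude(fused) - target_grad))
    return l_int + alpha * l_tex


def segmentation_loss(logits, labels):
    """Pixel-wise cross-entropy; logits: (C, H, W), labels: (H, W) integer class ids."""
    z = logits - logits.max(axis=0, keepdims=True)  # numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=0, keepdims=True))
    h, w = labels.shape
    picked = log_probs[labels, np.arange(h)[:, None], np.arange(w)[None, :]]
    return -picked.mean()


def joint_loss(fused, ir, vis, seg_logits, seg_labels, beta=1.0):
    """The only link between fusion and the downstream task is this weighted sum."""
    return fusion_loss(fused, ir, vis) + beta * segmentation_loss(seg_logits, seg_labels)
```

Because the segmentation task influences the fusion network only through the gradient of this sum, the task's requirements never shape the fusion architecture itself, which is the limitation the paragraph points out.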

Image fusion based on imaging principles:

Different types of sensors, or sensors with different settings, usually follow different imaging principles. Although these differences complicate the design of network architectures and loss functions, they also provide additional prior information for designing fusion algorithms. Analyzing the imaging principles of different sensor types or imaging settings and modeling them in the fusion process should help further improve fusion performance. In particular, modeling the defocus spread effect in multi-focus images from an imaging perspective deserves further exploration.
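As a rough illustration of why the defocus spread effect matters, the sketch below contrasts two ways of synthesizing multi-focus training pairs from an all-in-focus image. It assumes defocus can be approximated by a Gaussian blur with a fixed `sigma`, which is a common simplification rather than a physical camera model. The hard-mask version treats each pixel as either sharp or blurred, so the blur stops exactly at the object boundary. The layered version blurs the foreground layer together with its matte, so the defocused foreground spreads across the boundary onto the in-focus background, which is closer to what a real lens does. All function names here are hypothetical.

```python
import numpy as np


def gaussian_kernel1d(sigma: float) -> np.ndarray:
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with reflect padding; output has the input's shape."""
    k = gaussian_kernel1d(sigma)
    r = len(k) // 2
    p = np.pad(img.astype(float), r, mode="reflect")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, p)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def synth_pair_hard_mask(sharp, mask, sigma=2.0):
    """Naive synthesis: each pixel is either sharp or blurred according to mask (1 = near)."""
    blurred = gaussian_blur(sharp, sigma)
    near_focused = mask * sharp + (1 - mask) * blurred
    far_focused = mask * blurred + (1 - mask) * sharp
    return near_focused, far_focused


def far_focused_with_spread(sharp, mask, sigma=2.0):
    """Layered approximation: the defocused near layer and its matte spread across
    the boundary and partially cover the in-focus background."""
    blurred_layer = gaussian_blur(mask * sharp, sigma)
    blurred_matte = gaussian_blur(mask.astype(float), sigma)
    return blurred_layer + (1 - blurred_matte) * sharp
```

Near the boundary the two far-focused images differ; a fusion method trained only on hard-mask pairs never sees that transition zone.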

Image fusion under extreme conditions:

Existing image fusion algorithms are designed for normal imaging scenes. In practical applications, however, we often have to deal with extreme conditions such as underexposure, overexposure and severe noise. In infrared and visible image fusion, for example, the information in the infrared and visible images often has to be combined to perceive a night-time scene comprehensively. At night, however, the information in the visible image is often buried in darkness and accompanied by severe noise. It is therefore important to design effective fusion algorithms that mine the information hidden in darkness and noise while aggregating complementary information. Moreover, most existing multi-exposure image fusion algorithms are not designed for extreme exposure, and their performance often degrades severely when they are applied to such cases. How to fully mine the information in extremely underexposed images while effectively suppressing the adverse effects of extremely overexposed images will therefore be a major challenge.
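One way to see the extreme-exposure problem is with a hand-crafted weighting rule. The sketch below uses a Gaussian "well-exposedness" weight centered at mid-gray, the kind of weight used in classic exposure fusion, but applies it as a single-scale per-pixel average rather than the multi-scale pyramid blending of the full method. The function names and the `sigma = 0.2` default are assumptions for illustration. When both inputs are extreme, the weights carry almost no information about which image holds the useful detail.

```python
import numpy as np


def well_exposedness(img: np.ndarray, sigma: float = 0.2) -> np.ndarray:
    """Gaussian weight centered at mid-gray 0.5 (inputs assumed in [0, 1])."""
    return np.exp(-((img - 0.5) ** 2) / (2 * sigma ** 2))


def naive_exposure_fusion(images, sigma=0.2, eps=1e-12):
    """Single-scale per-pixel weighted average; returns (fused, normalized weights)."""
    stack = np.stack([np.asarray(im, dtype=float) for im in images])  # (N, H, W)
    w = well_exposedness(stack, sigma)
    w = w / (w.sum(axis=0, keepdims=True) + eps)
    return (w * stack).sum(axis=0), w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    scene = rng.random((4, 4))
    under = 0.02 + 0.01 * scene  # extremely dark: detail squeezed into a tiny range
    over = 0.98 - 0.01 * scene   # extremely bright: detail squeezed likewise
    fused, w = naive_exposure_fusion([under, over])
    print(fused.round(3))
    print(w[0].round(3))
```

In this symmetric toy case both inputs sit equally far from mid-gray, so each receives a weight of about 0.5, the opposite-signed detail cancels, and the result is essentially flat gray. Recovering the detail would require something beyond per-pixel exposure weighting.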

Cross-resolution image fusion:

Because of differences in imaging principles, images captured by different types of sensors often have different resolutions. Overcoming this resolution gap while fully integrating the useful information in the different source images is a severe challenge. Although some researchers have proposed algorithms for cross-resolution image fusion, open problems remain, such as which upsampling strategy to adopt and where to place the upsampling layer in the network. One way to address these problems is to organically combine image super-resolution with image fusion when designing the network architecture and loss function.
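The "which upsampling strategy, and where" question can be made concrete with the simplest option: upsample the low-resolution source at the very start, then fuse at the high resolution. The sketch below implements nearest-neighbor and bilinear upsampling in NumPy (bilinear uses the half-pixel-center sampling convention) and uses an element-wise maximum as a placeholder fusion rule. The function names, the integer-factor assumption, and the max rule are all illustrative assumptions; a learned approach would replace both steps with trainable layers.

```python
import numpy as np


def upsample_nearest(img: np.ndarray, factor: int) -> np.ndarray:
    return np.repeat(np.repeat(img, factor, axis=0), factor, axis=1)


def upsample_bilinear(img: np.ndarray, factor: int) -> np.ndarray:
    """Bilinear upsampling by an integer factor, sampling at pixel centers."""
    h, w = img.shape
    ys = np.clip((np.arange(h * factor) + 0.5) / factor - 0.5, 0, h - 1)
    xs = np.clip((np.arange(w * factor) + 0.5) / factor - 0.5, 0, w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[:, None]
    wx = (xs - x0)[None, :]
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


def fuse_cross_resolution(low_res, high_res, mode="bilinear"):
    """Early upsampling followed by a placeholder max-rule fusion."""
    factor = high_res.shape[0] // low_res.shape[0]
    up = upsample_bilinear(low_res, factor) if mode == "bilinear" else upsample_nearest(low_res, factor)
    return np.maximum(up, high_res)
```

Upsampling first keeps the fusion step simple but spends computation on interpolated pixels that contain no new information; moving the upsampling later in a network trades that cost against fusing coarser features.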

Real-time image fusion:

Image fusion is usually used either as a preprocessing step for high-level vision tasks or as a post-processing step in photographic devices. High-level vision tasks often impose strict real-time requirements on preprocessing. For photographic devices, users expect multiple input images to be converted into a single fused image in an imperceptible amount of time, yet the hardware of such devices is often limited. Therefore, developing lightweight real-time image fusion algorithms without sacrificing fusion performance is vital for broadening the application scenarios of image fusion.
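As a back-of-the-envelope illustration of what "lightweight" can mean, the sketch below compares parameter counts and multiply-accumulates (MACs) of a standard 3x3 convolution with a depthwise separable one, a building block popularized by MobileNet-style networks. This is one common way to slim a network, chosen here for illustration; the post does not prescribe it. The function names and the 64-channel, 640x480 example are assumptions, and MAC counts assume stride 1 with "same" padding.

```python
def conv_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Weights (plus optional bias) of a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def separable_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Depthwise k x k conv (one filter per input channel) + 1 x 1 pointwise conv."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


def conv_macs(k: int, c_in: int, c_out: int, h: int, w: int) -> int:
    """MACs of a stride-1, 'same'-padded standard conv on an h x w feature map."""
    return k * k * c_in * c_out * h * w


def separable_macs(k: int, c_in: int, c_out: int, h: int, w: int) -> int:
    return (k * k * c_in + c_in * c_out) * h * w


if __name__ == "__main__":
    print(conv_params(3, 64, 64), separable_params(3, 64, 64))  # 36928 4800
    ratio = conv_macs(3, 64, 64, 480, 640) / separable_macs(3, 64, 64, 480, 640)
    print(f"{ratio:.1f}x fewer MACs")  # 7.9x fewer MACs
```

Fewer parameters and MACs do not automatically mean lower latency on a given device (memory access patterns matter too), so measured runtime on the target hardware remains the real test.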

Color image fusion:

Most existing image fusion algorithms convert color images to the YCbCr space, feed only the luminance (Y) channel into the deep network to obtain the fused luminance channel, and fuse the chrominance channels (Cb and Cr) with simple traditional strategies. In fact, the chrominance channels also contain useful information for characterizing the imaging scene, so considering color information during fusion would provide the network with more complementary information. Adaptively adjusting the color of the fusion result with a deep network would help produce more vivid results, which is particularly important for the visual quality of digital photographic image fusion.
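The conventional pipeline described above can be sketched in NumPy: convert both inputs to full-range YCbCr (the BT.601/JPEG coefficients), fuse Y with a caller-supplied function that stands in for the deep network, fuse Cb and Cr with a traditional hand-crafted rule, and convert back. The chroma rule here weights each input by its distance from the neutral value tau = 128, so more saturated colors dominate; it is one widely used heuristic, picked for illustration rather than taken from any specific paper. The function names are hypothetical and 8-bit value ranges are assumed.

```python
import numpy as np


def rgb_to_ycbcr(rgb: np.ndarray) -> np.ndarray:
    """Full-range BT.601 (JPEG) RGB -> YCbCr for values in [0, 255]."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return np.stack([y, cb, cr], axis=-1)


def ycbcr_to_rgb(ycc: np.ndarray) -> np.ndarray:
    y, cb, cr = ycc[..., 0], ycc[..., 1] - 128, ycc[..., 2] - 128
    r = y + 1.402 * cr
    g = y - 0.344136 * cb - 0.714136 * cr
    b = y + 1.772 * cb
    return np.stack([r, g, b], axis=-1)


def fuse_chroma(c1, c2, tau=128.0, eps=1e-12):
    """Weight each chroma value by its distance from neutral gray (tau)."""
    w1, w2 = np.abs(c1 - tau), np.abs(c2 - tau)
    den = w1 + w2
    fused = (c1 * w1 + c2 * w2) / np.maximum(den, eps)
    return np.where(den > eps, fused, tau)  # both neutral -> stay neutral


def fuse_color(rgb1, rgb2, fuse_y):
    """fuse_y stands in for the luminance-fusion network (hypothetical hook)."""
    a = rgb_to_ycbcr(np.asarray(rgb1, dtype=float))
    b = rgb_to_ycbcr(np.asarray(rgb2, dtype=float))
    y = fuse_y(a[..., 0], b[..., 0])
    cb = fuse_chroma(a[..., 1], b[..., 1])
    cr = fuse_chroma(a[..., 2], b[..., 2])
    return np.clip(ycbcr_to_rgb(np.stack([y, cb, cr], axis=-1)), 0, 255)
```

For example, `fuse_color(img_a, img_b, np.maximum)` fuses luminance with a max rule. Because the chroma rule never sees image content, only per-pixel saturation, it cannot adapt colors to the scene, which is the gap the paragraph suggests a network could fill.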

Comprehensive evaluation metrics:

Because most image fusion tasks (multimodal image fusion and digital photographic image fusion) lack reference images, comprehensively evaluating the fusion performance of different algorithms is a major challenge. Each existing metric evaluates fusion performance from only one particular angle, and a single fusion algorithm rarely scores well on all metrics at once. It is therefore very important for the field to design a no-reference metric with stronger representational ability that can comprehensively evaluate different fusion algorithms. First, a comprehensive metric can assess the strengths and weaknesses of different fusion results more fairly and thus better guide follow-up research. Second, a no-reference metric that comprehensively reflects fusion performance would help construct better loss functions for optimizing deep networks.
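To show how narrowly each existing no-reference metric looks at a result, here is a NumPy sketch of three common ones for 8-bit grayscale images: entropy (EN) measures the information in the fused image alone, standard deviation (SD) measures its contrast, and the mutual-information metric (MI) sums how much information the fused image shares with each source. These follow the usual textbook definitions with 256-bin histograms; the function names are hypothetical and published toolboxes may differ in details such as binning or normalization.

```python
import numpy as np


def entropy(img: np.ndarray, bins: int = 256) -> float:
    """Shannon entropy (bits) of the gray-level histogram of an 8-bit image."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())


def standard_deviation(img: np.ndarray) -> float:
    return float(np.std(img))


def mutual_information(a: np.ndarray, b: np.ndarray, bins: int = 256) -> float:
    """Mutual information (bits) between two 8-bit images via their joint histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins,
                                 range=[[0, 256], [0, 256]])
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of a, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)   # marginal of b, shape (1, bins)
    nz = pxy > 0
    return float((pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])).sum())


def fusion_mi(fused: np.ndarray, ir: np.ndarray, vis: np.ndarray) -> float:
    """MI metric: information the fused image shares with both sources."""
    return mutual_information(ir, fused) + mutual_information(vis, fused)
```

Each function sees a different slice of quality: a noisy fused image can score high on EN and SD while sharing little with the sources, which is why no single number here settles which result is better.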

This is a first draft; I will keep improving it later.

Original article by Timer. Do not reprint without permission.

Author's QQ: 2458707789. When sending a friend request, please include your full name and school so I can add a note.

边栏推荐

- The State Economic Information Center "APEC industry +" Western Silicon Valley will invest 2trillion yuan in Chengdu Chongqing economic circle, which will surpass the observation of Shanghai | stable

- Qualitative risk analysis of Oracle project management system

- Position() function in XPath uses

- onie支持pice硬盘

- PHP - Common magic method (nanny level teaching)

- "Designer universe": "benefit dimension" APEC public welfare + 2022 the latest slogan and the new platform will be launched soon | Asia Pacific Financial Media

- The Vice Minister of the Ministry of industry and information technology of "APEC industry +" of the national economic and information technology center led a team to Sichuan to investigate the operat

- Uibehavior, a comprehensive exploration of ugui source code

- Vit (vision transformer) principle and code elaboration

- How to prevent Association in cross-border e-commerce multi account operations?

猜你喜欢

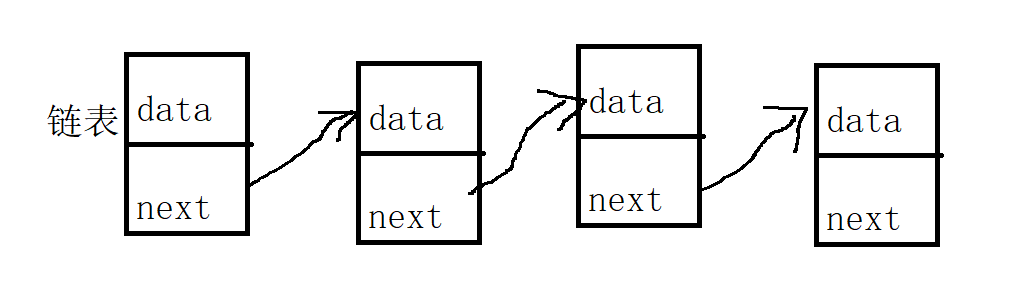

你想知道的ArrayList知识都在这

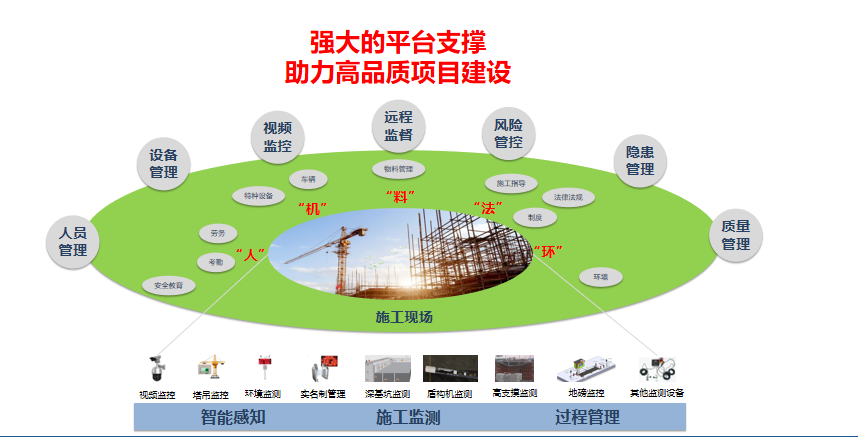

Solution: intelligent site intelligent inspection scheme video monitoring system

Machine learning - decision tree

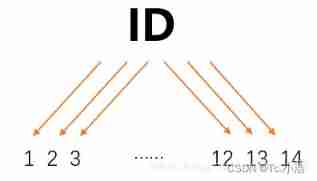

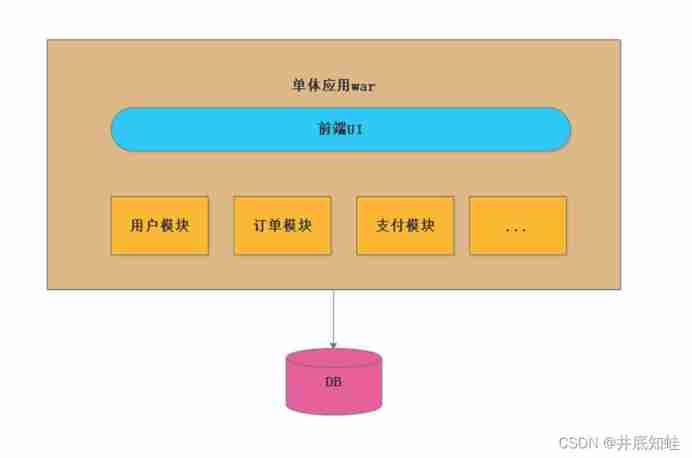

From monomer structure to microservice architecture, introduction to microservices

. Net 6 learning notes: what is NET Core

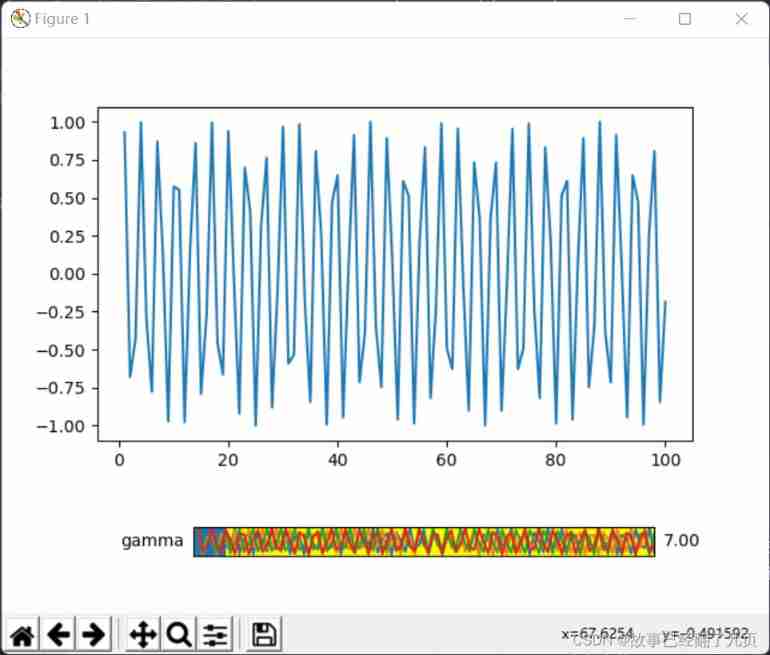

Pyqt5 development tips - obtain Manhattan distance between coordinates

matplotlib. Widgets are easy to use

How to use information mechanism to realize process mutual exclusion, process synchronization and precursor relationship

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

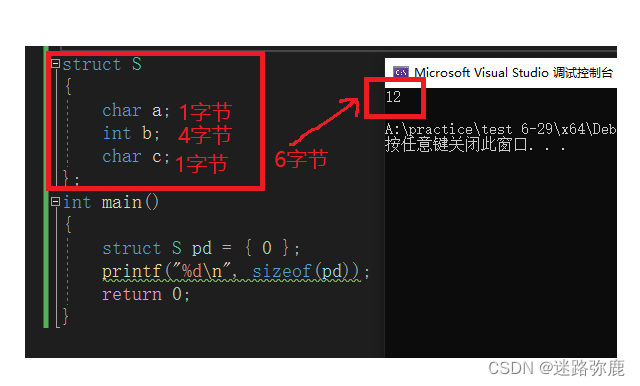

C语言自定义类型:结构体

随机推荐

Golang DNS write casually

Wireshark grabs packets to understand its word TCP segment

MES, APS and ERP are essential to realize fine production

Description of octomap averagenodecolor function

[research materials] 2022 China yuancosmos white paper - Download attached

【T31ZL智能视频应用处理器资料】

Parameter self-tuning of relay feedback PID controller

二叉树创建 & 遍历

升级 TiDB Operator

07- [istio] istio destinationrule (purpose rule)

ESP series pin description diagram summary

图像融合--挑战、机遇与对策

CAD ARX 获取当前的视口设置

数据治理:微服务架构下的数据治理

TiDB备份与恢复简介

让学指针变得更简单(三)

How to estimate the number of threads

从 CSV 文件迁移数据到 TiDB

Data governance: 3 characteristics, 4 transcendence and 3 28 principles of master data

将 NFT 设置为 ENS 个人资料头像的分步指南