
Lane change detection

2020-11-06 01:28:00 【Artificial intelligence meets pioneer】

Author: Hitesh Valecha | Compiled by: VK | Source: Towards Data Science

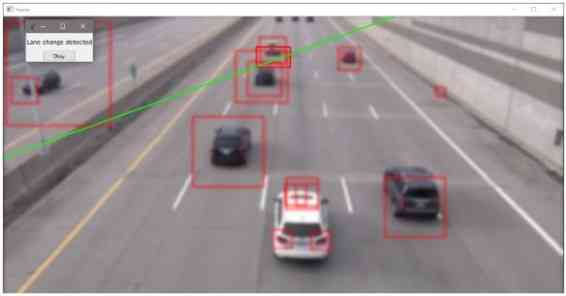

In this tutorial, we will learn how to use computer vision and image processing to detect whether a car is changing lanes on the road.

You have probably heard that OpenCV Haar cascades can detect faces, eyes, or vehicles such as cars and buses. This time, let's use this simple detection method to build something cool.

1. Dataset

In this tutorial, a video file of cars on the road is used as the dataset. Vehicles could also be detected in still images, but here we want to raise a pop-up alert when a vehicle changes lanes. That is dynamic information, so video input is the more practical choice.

2. Input

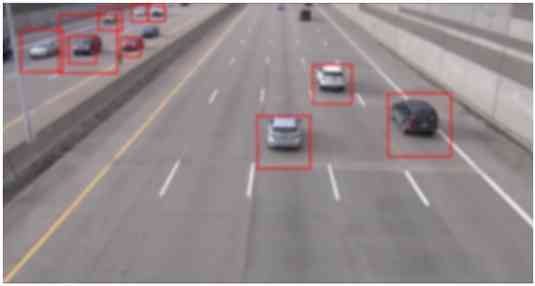

We use an OpenCV Haar cascade to detect the coordinates of the cars. The input is a video file of cars on the road.

    import cv2
    import numpy as np

    cascade_src = 'cascade/cars.xml'  # trained Haar cascade for cars
    video_src = 'dataset/cars.mp4'    # input video of cars on the road

    cap = cv2.VideoCapture(video_src)
    car_cascade = cv2.CascadeClassifier(cascade_src)

The cv2.VideoCapture() method is used to capture the input video; a video typically runs at around 25 frames per second (fps). After capturing the input, we extract the frames in a loop, detect the cars with the Haar cascade, and draw a rectangle around each detected car. Drawing inside the same loop keeps the boxes consistent with the other operations performed on each captured frame.

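    while True:
        # Get every frame; stop when the video ends
        ret, frame = cap.read()
        if not ret:
            break
        # Positional arguments are scaleFactor=1.1 and minNeighbors=1
        cars = car_cascade.detectMultiScale(frame, 1.1, 1)
        for (x, y, w, h) in cars:
            # Region of interest: a red box around each detected car
            roi = cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 0, 255), 2)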

OpenCV uses BGR rather than RGB channel order, so (0,0,255) draws a red rectangle around the car, not a blue one.
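The channel order can be checked without OpenCV at all. The following is a minimal NumPy sketch; the variable names are illustrative and do not come from the article.

```python
import numpy as np

# One pixel with the rectangle color used in the detection loop
bgr_pixel = np.array([0, 0, 255], dtype=np.uint8)  # (blue, green, red)

# Reversing the channel axis gives the RGB view of the same pixel
rgb_pixel = bgr_pixel[::-1]

blue, green, red = (int(v) for v in bgr_pixel)
rgb_tuple = tuple(int(v) for v in rgb_pixel)

print("BGR:", (blue, green, red))
print("RGB:", rgb_tuple)
```

The red channel holds the only nonzero value, which is why the box appears red on screen.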

3. Image processing

We work on individual frames, but very high-resolution frames slow down processing. Frames also contain noise, which can be reduced by blurring. Here we use a Gaussian blur.
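To make the blur less of a black box, here is a small NumPy sketch of the weights a 21x21 Gaussian kernel would contain. It assumes the default sigma rule documented for cv2.getGaussianKernel when sigma is passed as 0; the function name gaussian_kernel_1d is hypothetical, and this is not the code OpenCV runs internally.

```python
import numpy as np

def gaussian_kernel_1d(ksize, sigma=0.0):
    """Normalised 1-D Gaussian weights for an odd kernel size."""
    if sigma <= 0:
        # Default rule documented for cv2.getGaussianKernel (assumed here)
        sigma = 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8
    offsets = np.arange(ksize) - (ksize - 1) / 2.0
    weights = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    return weights / weights.sum()

k1d = gaussian_kernel_1d(21)
# A Gaussian is separable, so the 2-D kernel is the outer product
kernel_2d = np.outer(k1d, k1d)

center = np.unravel_index(np.argmax(kernel_2d), kernel_2d.shape)
print(kernel_2d.shape, center, round(float(kernel_2d.sum()), 6))
```

The weights sum to 1, so blurring spreads each pixel's intensity over its neighbours without changing the overall brightness.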

Now let's look at some image processing concepts.

3.1 HSV frame

In this article, each frame captured by cv2.VideoCapture() is converted to HSV so that only the points where a vehicle is turning are highlighted, while the rest of the road and the vehicles driving straight are masked out. Lower and upper limits define the HSV color range that shows where the car changes lanes, and the result is used as a mask for the frame. Here is the code snippet:

    # Blur to reduce noise in the video frame
    frame = cv2.GaussianBlur(frame, (21, 21), 0)
    # Convert BGR to HSV
    hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)
    # Define the HSV color range that shows where the vehicle changes angle
    lower_limit = np.array([0, 150, 150])
    upper_limit = np.array([10, 255, 255])
    # Threshold the HSV image to get the mask
    mask = cv2.inRange(hsv, lower_limit, upper_limit)
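The range test can be tried on single pixels. This sketch assumes OpenCV's 8-bit HSV scaling (H in 0-179, S and V in 0-255) and uses the standard-library colorsys module as a stand-in for cv2.cvtColor; bgr_to_opencv_hsv and in_range are hypothetical helper names, not OpenCV functions.

```python
import colorsys
import numpy as np

def bgr_to_opencv_hsv(b, g, r):
    """Convert one 8-bit BGR pixel to OpenCV-style HSV values."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return int(round(h * 180)) % 180, int(round(s * 255)), int(round(v * 255))

def in_range(hsv_pixel, lower, upper):
    """Return 255 if every channel lies within [lower, upper], else 0."""
    hsv_pixel = np.asarray(hsv_pixel)
    return 255 if np.all((hsv_pixel >= lower) & (hsv_pixel <= upper)) else 0

lower_limit = np.array([0, 150, 150])
upper_limit = np.array([10, 255, 255])

red_hsv = bgr_to_opencv_hsv(0, 0, 255)    # pure red in BGR
blue_hsv = bgr_to_opencv_hsv(255, 0, 0)   # pure blue in BGR

print(red_hsv, in_range(red_hsv, lower_limit, upper_limit))
print(blue_hsv, in_range(blue_hsv, lower_limit, upper_limit))
```

With these limits a saturated red pixel passes the test and a saturated blue pixel does not, so the limits select strongly saturated, bright pixels with a low hue.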

3.2 Erosion and dilation

Erosion and dilation are two basic morphological operations in image processing. Erosion computes a local minimum over the area covered by the kernel, where the kernel is a template or mask; it is used to reduce speckle noise in an image. Dilation convolves the image with the kernel and acts as a local maximum operator; it adds pixels to the boundaries of objects and is applied to recover some of the area lost to erosion.

The mask generated from the HSV frame in the first step is now processed with these basic morphological operations (erosion and dilation). The resulting frame, which contains only the ROI (region of interest), is obtained by a bitwise AND between the frame and the mask.

    kernel = np.ones((3, 3), np.uint8)
    kernel_lg = np.ones((15, 15), np.uint8)
    # Erosion reduces noise in the mask
    mask = cv2.erode(mask, kernel, iterations=1)
    # Dilation recovers some of the lost area
    mask = cv2.dilate(mask, kernel_lg, iterations=1)
    # Everything outside the region of interest turns black
    result = cv2.bitwise_and(frame, frame, mask=mask)
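The local minimum and local maximum behavior described above can be reproduced in plain NumPy. This is a simplified sketch for a square kernel of ones, not the OpenCV implementation; morph is a hypothetical helper name.

```python
import numpy as np

def morph(mask, ksize, op):
    """Apply a ksize x ksize local minimum ('erode') or maximum ('dilate')."""
    pad = ksize // 2
    # Pad with a value that can never win the min/max at the border
    fill = 255 if op == "erode" else 0
    padded = np.pad(mask, pad, constant_values=fill)
    reduce_fn = np.min if op == "erode" else np.max
    out = np.empty_like(mask)
    for r in range(mask.shape[0]):
        for c in range(mask.shape[1]):
            out[r, c] = reduce_fn(padded[r:r + ksize, c:c + ksize])
    return out

mask = np.zeros((9, 9), np.uint8)
mask[2:7, 2:7] = 255   # a 5x5 blob
mask[0, 8] = 255       # one isolated speckle pixel

eroded = morph(mask, 3, "erode")       # speckle disappears, blob shrinks to 3x3
restored = morph(eroded, 3, "dilate")  # blob grows back to 5x5

print(int((eroded == 255).sum()), int((restored == 255).sum()))
```

The speckle is gone after erosion and does not come back after dilation, while the blob recovers its original size. The article uses a larger kernel for dilation than for erosion, so its mask grows beyond the original blobs.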

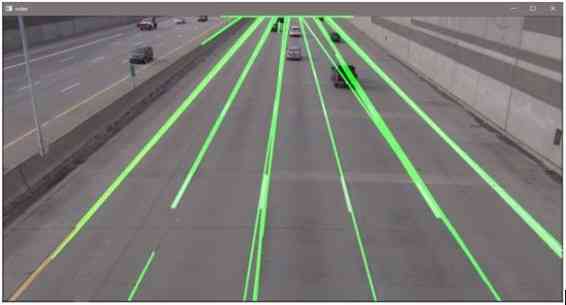

3.3 Lane detection

The Canny edge detector combined with the Hough line transform is used for lane detection.

    # Lane detection
    def canny(frame):
        # Frames from cv2.VideoCapture() are BGR, so convert from BGR
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        blur = cv2.GaussianBlur(gray, (5, 5), 0)
        return cv2.Canny(blur, 50, 150)

    def region_of_interest(frame):
        height = frame.shape[0]
        # Polygon covering the road area; the coordinates depend on the video.
        # cv2.fillPoly expects 32-bit integer points.
        polygons = np.array([
            [(0, height), (500, 0), (800, 0), (1300, 550), (1100, height)]
        ], dtype=np.int32)
        mask = np.zeros_like(frame)
        cv2.fillPoly(mask, polygons, 255)
        return cv2.bitwise_and(frame, mask)

    def display_lines(frame, lines):
        line_image = np.zeros_like(frame)
        if lines is not None:
            for line in lines:
                x1, y1, x2, y2 = line.reshape(4)
                cv2.line(line_image, (x1, y1), (x2, y2), (0, 255, 0), 3)
        return line_image

    lane_image = np.copy(frame)
    # Stored as edges so that the canny() function is not overwritten
    edges = canny(lane_image)
    cropped_image = region_of_interest(edges)
    lines = cv2.HoughLinesP(cropped_image, 2, np.pi/180, 100, np.array([]),
                            minLineLength=5, maxLineGap=300)
    line_image = display_lines(lane_image, lines)
    frame = cv2.addWeighted(lane_image, 0.8, line_image, 1, 1)
    cv2.imshow('video', frame)
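cv2.HoughLinesP is a probabilistic variant that returns line segments. The voting idea behind it can be illustrated with the classic Hough accumulator, sketched here in NumPy under simplifying assumptions (1-degree angle steps, 1-pixel rho steps). The function hough_peak and the synthetic points are illustrative and not part of the article's code.

```python
import numpy as np

def hough_peak(points, diag):
    """Vote in (rho, theta) space and return the strongest line.

    diag must be at least the largest distance of any point from the origin.
    """
    thetas = np.deg2rad(np.arange(180))
    acc = np.zeros((2 * diag + 1, len(thetas)), dtype=int)
    cols = np.arange(len(thetas))
    for x, y in points:
        # Each edge pixel votes for every line that could pass through it
        rhos = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        acc[rhos + diag, cols] += 1
    rho_idx, theta_idx = np.unravel_index(np.argmax(acc), acc.shape)
    return int(rho_idx) - diag, int(theta_idx), int(acc.max())

# Synthetic edge pixels on the horizontal line y = 5
points = [(x, 5) for x in range(100)]
rho, theta_deg, votes = hough_peak(points, diag=150)
print(rho, theta_deg, votes)
```

All 100 points agree on a single cell of the accumulator: the line at distance 5 from the origin whose normal points along the y axis (theta of 90 degrees).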

4. contour

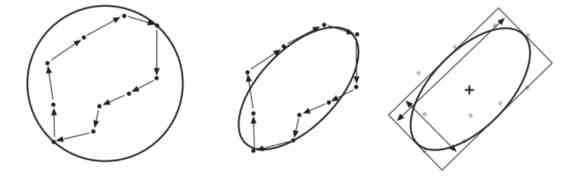

canny Edge detector and other algorithms are used to find edge boundaries in edge pixel images , But it doesn't tell us how to find points and edges where objects or entities can't combine , Here we can use OpenCV Realized cv2.findContours() As a concept of outline .

Definition -“ The outline represents a list of the points of the curve in the image .”

The outline is represented by a sequence , Each sequence encodes the location of the next point . We are roi Run many times in the cv2.findConteurs() To get the entity , And then use cv2.drawContours() Draw the outline area . The outline can be a dot 、 edge 、 Polygon, etc , So when you draw a contour line , We do polygonal approximation , Find the length of the edge and the area of the region .
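For a polygonal contour, the edge length and enclosed area mentioned above can be computed by hand. The sketch below uses the shoelace formula in pure Python as a stand-in for cv2.arcLength and cv2.contourArea; the two squares and the helper names are made up for illustration.

```python
import math

def polygon_area(points):
    """Area of a closed polygon via the shoelace formula."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def polygon_perimeter(points):
    """Sum of the edge lengths of a closed polygon."""
    return sum(math.hypot(x2 - x1, y2 - y1)
               for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]))

big = [(0, 0), (40, 0), (40, 40), (0, 40)]     # 40x40 square
small = [(0, 0), (10, 0), (10, 10), (0, 10)]   # 10x10 square

# Keep only the contours above a minimum area, as a filtering step would
min_area = 1000
kept = [c for c in (big, small) if polygon_area(c) > min_area]

print(polygon_area(big), polygon_perimeter(big), len(kept))
```

Only the large square survives the area filter, which is the same idea as discarding small, noisy contours before measuring angles.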

The function cv2.drawContours() works by drawing a tree (the data structure) starting from the root node, and then joining the subsequent points, the bounding box, and the Freeman chain code.

    thresh = mask
    # OpenCV 4.x returns (contours, hierarchy); OpenCV 3.x returns three values
    contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
    # Minimum contour area (anything smaller is ignored)
    min_area = 1000
    # Keep only the contours above the minimum area
    cont_filtered = [cont for cont in contours if cv2.contourArea(cont) > min_area]
    # Assumes at least one contour passed the filter; an empty list raises IndexError
    cnt = cont_filtered[0]
    # Draw a rotated rectangle around the contour
    rect = cv2.minAreaRect(cnt)
    box = cv2.boxPoints(rect)
    box = box.astype(np.int32)  # np.int0 was removed in NumPy 2.0
    cv2.drawContours(frame, [box], 0, (0, 0, 255), 2)
    # Fit a line through the contour and extend it across the frame
    rows, cols = thresh.shape[:2]
    vx, vy, x, y = cv2.fitLine(cnt, cv2.DIST_L2, 0, 0.01, 0.01).ravel()
    lefty = int((-x*vy/vx) + y)
    righty = int(((cols-x)*vy/vx) + y)
    cv2.line(frame, (cols-1, righty), (0, lefty), (0, 255, 0), 2)
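The lefty and righty values are the heights at which the fitted line crosses the left and right borders of the frame. The sketch below reproduces that arithmetic with NumPy. It takes the principal direction of the points as the line direction, which is an assumption and may not match cv2.fitLine numerically; fit_line and border_intercepts are hypothetical names. It also assumes the line is not vertical (vx is nonzero).

```python
import numpy as np

def fit_line(points):
    """Return (vx, vy, x0, y0): a unit direction and a point on the line."""
    pts = np.asarray(points, dtype=float)
    centre = pts.mean(axis=0)
    # The first right singular vector is the principal direction of the points
    _, _, vt = np.linalg.svd(pts - centre)
    vx, vy = vt[0]
    return vx, vy, centre[0], centre[1]

def border_intercepts(vx, vy, x0, y0, cols):
    """y values where the line meets x = 0 and x = cols."""
    slope = vy / vx
    lefty = int(round(y0 - x0 * slope))
    righty = int(round(y0 + (cols - x0) * slope))
    return lefty, righty

# Synthetic contour points on the line y = 2x + 1
points = [(x, 2 * x + 1) for x in range(10)]
vx, vy, x0, y0 = fit_line(points)
lefty, righty = border_intercepts(vx, vy, x0, y0, cols=100)
print(lefty, righty)
```

The sketch rounds instead of truncating with int(), so a value such as 0.9999999 that arises from floating-point error still maps to the intended pixel.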

Another important task after finding the contours is matching them. Matching contours means either comparing two separately computed contours with each other, or comparing a contour with an abstract template.

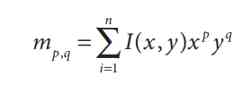

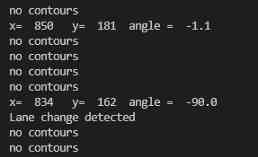

5. Moments

We can compare two contours by computing contour moments. "A moment is a gross characteristic of the contour, computed by summing over all the pixels of the contour."

Types of moments

Spatial moments: m00, m10, m01, m20, m11, m02, m30, m21, m12, m03.

Central moments: mu20, mu11, mu02, mu30, mu21, mu12, mu03.

Hu moments: there are seven Hu moments, written either as (h0-h6) or as (h1-h7); both notations are in use.
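As a rough illustration of what the spatial and central moments encode, this NumPy sketch computes the centroid and the principal-axis orientation of a binary shape by summing over its pixels. It is not cv2.moments, which works on contours as well as images; the helper name and test shapes are made up.

```python
import numpy as np

def centroid_and_orientation(img):
    """Centroid (cx, cy) and principal-axis angle in degrees of a binary image."""
    ys, xs = np.nonzero(img)
    m00 = float(len(xs))            # spatial moment m00: number of pixels
    cx = xs.sum() / m00             # m10 / m00
    cy = ys.sum() / m00             # m01 / m00
    mu20 = np.sum((xs - cx) ** 2)   # central moments
    mu02 = np.sum((ys - cy) ** 2)
    mu11 = np.sum((xs - cx) * (ys - cy))
    angle = 0.5 * np.degrees(np.arctan2(2 * mu11, mu20 - mu02))
    return float(cx), float(cy), float(angle)

# A wide rectangle: the principal axis is horizontal
rect_img = np.zeros((8, 8), np.uint8)
rect_img[2:5, 1:7] = 1
rect_result = centroid_and_orientation(rect_img)

# A diagonal stroke: the principal axis is tilted by 45 degrees
diag_result = centroid_and_orientation(np.eye(5, dtype=np.uint8))

print(rect_result, diag_result)
```

The angle is measured in image coordinates, where y grows downward, so the diagonal stroke from top left to bottom right comes out at 45 degrees.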

We compute the moments and fit an ellipse to the contour points with cv2.fitEllipse(). The angle is obtained from the contour and the moments. Because changing lanes requires a rotation of about 45 degrees, this value is taken as the threshold for the car's turning angle.

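    M = cv2.moments(cnt)
    # Centroid of the contour from the spatial moments
    cx = int(M['m10']/M['m00'])
    cy = int(M['m01']/M['m00'])
    (x, y), (MA, ma), angle = cv2.fitEllipse(cnt)
    # The reported angle is rect[2], the rotation of the cv2.minAreaRect() box.
    # The threshold assumes rect[2] lies in [-90, 0), as in older OpenCV
    # releases; check the angle range for your version.
    print('x= ', cx, ' y= ', cy, ' angle = ', round(rect[2], 2))
    if round(rect[2], 2) < -45:
        # print('Lane change detected')
        popupmsg('Lane change detected')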
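To see how the threshold behaves over several frames, the check can be applied to a list of per-frame angles. The angle values below are invented for illustration, and lane_change_frames is a hypothetical helper, not part of the article's code.

```python
def lane_change_frames(angles, threshold=-45.0):
    """Indices of the frames whose box angle is below the threshold."""
    return [i for i, a in enumerate(angles) if round(a, 2) < threshold]

# Hypothetical box angles for six consecutive frames
angles = [-5.0, -12.5, -30.0, -47.3, -60.1, -20.0]
alerts = lane_change_frames(angles)
print(alerts)
```

Only the frames whose angle drops below -45 degrees would trigger the alert.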

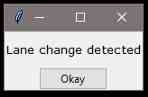

Now we can use Tkinter to create a simple pop-up window that warns of the lane change, instead of just printing the detection result.

    import tkinter as tk
    from tkinter import ttk

    NORM_FONT = ("Helvetica", 10)  # assumed value; the article does not define NORM_FONT

    def popupmsg(msg):
        popup = tk.Tk()
        popup.wm_title("Message")
        label = ttk.Label(popup, text=msg, font=NORM_FONT)
        label.pack(side="top", fill="x", pady=10)
        B1 = ttk.Button(popup, text="Okay", command=popup.destroy)
        B1.pack()
        popup.mainloop()

    if round(rect[2], 2) < -45:
        popupmsg('Lane change detected')

A bounding rectangle is drawn on each frame, and the green line is used to measure the angle.

6. Summary and outlook

In this tutorial, we explored a small demonstration of smart car navigation using a lane change detection method.

Computer vision is developing rapidly. Its applications are not limited to car navigation on Earth: it is also advancing in navigation on Mars and in product inspection, and in medicine it is being developed and used for the early detection of cancer and tumors in X-ray images.

The source code is available on GitHub: https://github.com/Hitesh-Valecha/Car_Opencv

References

- Bradski, Gary and Kaehler, Adrian, Learning OpenCV: Computer Vision in C++ with the OpenCV Library, O’Reilly Media, Inc., 2nd edition, 2013, @10.5555/2523356, ISBN 1449314651.

- Laganiere, Robert, OpenCV Computer Vision Application Programming Cookbook, Packt Publishing, 2nd edition, 2014, @10.5555/2692691, ISBN 1782161481.

Original article: https://towardsdatascience.com/lane-change-detection-computer-vision-at-next-stage-914973f96f4b

