当前位置:网站首页>Yolov5 Lite: yolov5, which is lighter, faster and easy to deploy

Yolov5 Lite: yolov5, which is lighter, faster and easy to deploy

2022-07-08 02:19:00 【pogg_】

QQ Communication group :993965802

The copyright of this article belongs to GiantPandaCV, Please do not reprint without permission

Preface : Part of Bi Shi , Some time ago , stay yolov5 A series of ablation experiments were carried out on , Make him lighter (Flops smaller , Lower memory usage , Fewer parameters ), faster ( Join in shuffle channel,yolov5 head Perform channel clipping , stay 320 Of input_size At least in raspberry pie 4B Reasoning last second 10 frame ), Easier to deploy ( Enucleation Focus Layer and four slice operation , Make the quantization accuracy of the model drop within an acceptable range ).

2021-08-26 to update ---------------------------------------

We try to improve the resolution and then lower it for training (train in 640-1280-640, The first round 640 Training 150 individual epochs, The second round 1280 Training 50 individual epochs, The third round 640 Training 100 individual epochs), It is found that the model still has a certain learning ability ,map Still improving

# evaluate in 640×640:

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.271

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.457

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.274

# evaluate in 416×416:

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.244

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.413

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.246

# evaluate in 320×320:

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.208

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.362

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.206

One 、 Comparison of ablation experimental results

| ID | Model | Input_size | Flops | Params | Size(M) | M a p @ 0.5 Map^{@0.5} Map@0.5 | M a p @ 0.5 : 0.95 Map^{@0.5:0.95} Map@0.5:0.95 |

|---|---|---|---|---|---|---|---|

| 001 | yolo-faster | 320×320 | 0.25G | 0.35M | 1.4 | 24.4 | - |

| 002 | nanodet-m | 320×320 | 0.72G | 0.95M | 1.8 | - | 20.6 |

| 003 | yolo-faster-xl | 320×320 | 0.72G | 0.92M | 3.5 | 34.3 | - |

| 004 | yolov5-lite | 320×320 | 1.43G | 1.62M | 3.3 | 36.2 | 20.8 |

| 005 | yolov3-tiny | 416×416 | 6.96G | 6.06M | 23.0 | 33.1 | 16.6 |

| 006 | yolov4-tiny | 416×416 | 5.62G | 8.86M | 33.7 | 40.2 | 21.7 |

| 007 | nanodet-m | 416×416 | 1.2G | 0.95M | 1.8 | - | 23.5 |

| 008 | yolov5-lite | 416×416 | 2.42G | 1.62M | 3.3 | 41.3 | 24.4 |

| 009 | yolov5-lite | 640×640 | 2.42G | 1.62M | 3.3 | 45.7 | 27.1 |

| 010 | yolov5s | 640×640 | 17.0G | 7.3M | 14.2 | 55.4 | 36.7 |

notes :yolov5 primary FLOPS The calculation script has bug, Please use thop Library calculation :

input = torch.randn(1, 3, 416, 416)

flops, params = thop.profile(model, inputs=(input,))

print('flops:', flops / 900000000*2)

print('params:', params)

Two 、 Detection effect

P y t o r c h @ 640 × 640 : Pytorch^{@640×640}: Pytorch@640×640:

N C N N 640 × 640 @ F P 16 NCNN^{@FP16}_{640\times640} NCNN640×640@FP16

N C N N 640 × 640 @ I n t 8 NCNN^{@Int8}_{640\times640} NCNN640×640@Int8

3、 ... and 、Relect Work

YOLOv5-Lite The network structure of is actually very simple ,backbone Mainly used are shuffle channel Of shuffle block, The head is still used yolov5 head, But it's a castrated version yolov5 head

shuffle block:

yolov5 head:

yolov5 backbone:

In the beginning U Version of yolov5 backbone in , The author used four times in the superstructure of feature extraction slice Operations consist of Focus layer

about Focus layer , Every... In a square 4 Adjacent pixels , And generate a with 4 Times the number of channels feature map, It is similar to four down sampling operations on the upper layer , Then the result concat together , The main function is not to reduce the ability of feature extraction , Reduce and accelerate the model .

1.7.0+cu101 cuda _CudaDeviceProperties(name='Tesla T4', major=7, minor=5, total_memory=15079MB, multi_processor_count=40)

Params FLOPS forward (ms) backward (ms) input output

7040 23.07 62.89 87.79 (16, 3, 640, 640) (16, 64, 320, 320)

7040 23.07 15.52 48.69 (16, 3, 640, 640) (16, 64, 320, 320)

1.7.0+cu101 cuda _CudaDeviceProperties(name='Tesla T4', major=7, minor=5, total_memory=15079MB, multi_processor_count=40)

Params FLOPS forward (ms) backward (ms) input output

7040 23.07 11.61 79.72 (16, 3, 640, 640) (16, 64, 320, 320)

7040 23.07 12.54 42.94 (16, 3, 640, 640) (16, 64, 320, 320)

As can be seen from the above figure ,Focus When the layer parameters are reduced , The model is accelerated .

but ! This acceleration is conditional , Must be in GPU This advantage can only be reflected under the use of , For cloud deployment, this method ,GPU It is not necessary to consider the cache occupation , The method of "take and process" makes Focus Layer in GPU The equipment is very work.

For your chip , Especially without GPU、NPU Accelerated chips , Frequent slice The operation will only make the cache seriously occupied , Increase the burden of calculation and processing . meanwhile , When the chip is deployed ,Focus Layer transformation is extremely unfriendly to novices .

Four 、 Lightweight concept

shufflenetv2 Design concept of , On the chip side where resources are scarce , It has many reference meanings , It proposes four criteria for model lightweight :

(G1) The same channel size minimizes memory access

(G2) Overuse of group convolution increases MAC

(G3) The network is too fragmented ( Especially multi-channel ) Will reduce parallelism

(G4) Element level operations cannot be ignored ( such as shortcut and Add)

YOLOv5-Lite

Design concept :

(G1) Enucleation Focus layer , Avoid multiple use of slice operation

(G2) Avoid multiple use C3 Leyer And high channel C3 Layer

C3 Leyer yes YOLOv5 Proposed by the author CSPBottleneck Improved version , It's simpler 、 faster 、 Lighter , Better results can be achieved at nearly similar losses . but C3 Layer Using demultiplexing convolution , Test certificate , Frequent use C3 Layer And those with a high number of channels C3 Layer, Take up more cache space , Reduce the running speed .

( Why the higher the number of channels C3 Layer Would be right cpu Not very friendly , Mainly because shufflenetv2 Of G1 Rules , The higher the number of channels ,hidden channels And c1、c2 The step gap is larger , An inappropriate metaphor , Imagine jumping one step and ten steps , Although you can jump ten steps at a time , But you need a run-up , adjustment , Only by accumulating strength can you jump onto , It may take longer )

class C3(nn.Module):

# CSP Bottleneck with 3 convolutions

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansion

super(C3, self).__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c1, c_, 1, 1)

self.cv3 = Conv(2 * c_, c2, 1)

self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])

# self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])

(G3) Yes yolov5 head Conduct channel pruning , Pruning details reference G1

(G4) Enucleation shufflenetv2 backbone Of 1024 conv and 5×5 pooling

This is for imagenet A module designed to make a list , When there are not so many classes in the actual business scenario , It can be removed properly , Accuracy will not have much effect , But it's a big boost for speed , This was also confirmed in ablation experiments .

5、 ... and 、What can be used for?

(G1) Training

Isn't that bullshit ... It's really a little nonsense ,YOLOv5-Lite be based on yolov5 The fifth edition ( The latest version ) Ablation experiments performed on , So you can continue all the functions of the fifth edition without modification , such as :

Export heat map :

Derive confusion matrix for data analysis :

export PR curve :

(G2) export onnx No other modifications are required after ( For deployment )

(G3)DNN or ort The call no longer requires additional pairs Focus Layer by layer ( Before playing yolov5 Stuck here for a long time , Although it can be called, the accuracy also drops a lot ):

(G4)ncnn Conduct int8 Quantification can ensure the continuity of accuracy ( In the next chapter, I will talk about )

(G5) stay 0.1T Play with the raspberry pie of Suanli yolov5 Can also be real-time

I used to run on raspberry pie yolov5, It's something you can't even think of , It is necessary to detect only one frame 1000ms about , Even 160*120 You need to input 200ms about , I can't chew it .

But now YOLOv5-Lite Did it , The detection scene is in the space like elevator car and corridor corner , The actual detection distance only needs to be guaranteed 3m that will do , Adjust the resolution to 160*120 Under the circumstances ,YOLOv5-Lite Up to 18 frame , In addition, post-processing can basically stabilize in 15 Around the frame .

Remove the first three preheating , The temperature of the equipment is stable at 45° above , The forward reasoning framework is ncnn, Record twice benchmark contrast :

# The fourth time

[email protected]pi:~/Downloads/ncnn/build/benchmark $ ./benchncnn 8 4 0

loop_count = 8

num_threads = 4

powersave = 0

gpu_device = -1

cooling_down = 1

YOLOv5-Lite min = 90.86 max = 93.53 avg = 91.56

YOLOv5-Lite-int8 min = 83.15 max = 84.17 avg = 83.65

YOLOv5-Lite-416 min = 154.51 max = 155.59 avg = 155.09

yolov4-tiny min = 298.94 max = 302.47 avg = 300.69

nanodet_m min = 86.19 max = 142.79 avg = 99.61

squeezenet min = 59.89 max = 60.75 avg = 60.41

squeezenet_int8 min = 50.26 max = 51.31 avg = 50.75

mobilenet min = 73.52 max = 74.75 avg = 74.05

mobilenet_int8 min = 40.48 max = 40.73 avg = 40.63

mobilenet_v2 min = 72.87 max = 73.95 avg = 73.31

mobilenet_v3 min = 57.90 max = 58.74 avg = 58.34

shufflenet min = 40.67 max = 41.53 avg = 41.15

shufflenet_v2 min = 30.52 max = 31.29 avg = 30.88

mnasnet min = 62.37 max = 62.76 avg = 62.56

proxylessnasnet min = 62.83 max = 64.70 avg = 63.90

efficientnet_b0 min = 94.83 max = 95.86 avg = 95.35

efficientnetv2_b0 min = 103.83 max = 105.30 avg = 104.74

regnety_400m min = 76.88 max = 78.28 avg = 77.46

blazeface min = 13.99 max = 21.03 avg = 15.37

googlenet min = 144.73 max = 145.86 avg = 145.19

googlenet_int8 min = 123.08 max = 124.83 avg = 123.96

resnet18 min = 181.74 max = 183.07 avg = 182.37

resnet18_int8 min = 103.28 max = 105.02 avg = 104.17

alexnet min = 162.79 max = 164.04 avg = 163.29

vgg16 min = 867.76 max = 911.79 avg = 889.88

vgg16_int8 min = 466.74 max = 469.51 avg = 468.15

resnet50 min = 333.28 max = 338.97 avg = 335.71

resnet50_int8 min = 239.71 max = 243.73 avg = 242.54

squeezenet_ssd min = 179.55 max = 181.33 avg = 180.74

squeezenet_ssd_int8 min = 131.71 max = 133.34 avg = 132.54

mobilenet_ssd min = 151.74 max = 152.67 avg = 152.32

mobilenet_ssd_int8 min = 85.51 max = 86.19 avg = 85.77

mobilenet_yolo min = 327.67 max = 332.85 avg = 330.36

mobilenetv2_yolov3 min = 221.17 max = 224.84 avg = 222.60

# The eighth

[email protected]:~/Downloads/ncnn/build/benchmark $ ./benchncnn 8 4 0

loop_count = 8

num_threads = 4

powersave = 0

gpu_device = -1

cooling_down = 1

nanodet_m min = 81.15 max = 81.71 avg = 81.33

nanodet_m-416 min = 143.89 max = 145.06 avg = 144.67

YOLOv5-Lite min = 84.30 max = 86.34 avg = 85.79

YOLOv5-Lite-int8 min = 80.98 max = 82.80 avg = 81.25

YOLOv5-Lite-416 min = 142.75 max = 146.10 avg = 144.34

yolov4-tiny min = 276.09 max = 289.83 avg = 285.99

squeezenet min = 59.37 max = 61.19 avg = 60.35

squeezenet_int8 min = 49.30 max = 49.66 avg = 49.43

mobilenet min = 72.40 max = 74.13 avg = 73.37

mobilenet_int8 min = 39.92 max = 40.23 avg = 40.07

mobilenet_v2 min = 71.57 max = 73.07 avg = 72.29

mobilenet_v3 min = 54.75 max = 56.00 avg = 55.40

shufflenet min = 40.07 max = 41.13 avg = 40.58

shufflenet_v2 min = 29.39 max = 30.25 avg = 29.86

mnasnet min = 59.54 max = 60.18 avg = 59.96

proxylessnasnet min = 61.06 max = 62.63 avg = 61.75

efficientnet_b0 min = 91.86 max = 95.01 avg = 92.84

efficientnetv2_b0 min = 101.03 max = 102.61 avg = 101.71

regnety_400m min = 76.75 max = 78.58 avg = 77.60

blazeface min = 13.18 max = 14.67 avg = 13.79

googlenet min = 136.56 max = 138.05 avg = 137.14

googlenet_int8 min = 118.30 max = 120.17 avg = 119.23

resnet18 min = 164.78 max = 166.80 avg = 165.70

resnet18_int8 min = 98.58 max = 99.23 avg = 98.96

alexnet min = 155.06 max = 156.28 avg = 155.56

vgg16 min = 817.64 max = 832.21 avg = 827.37

vgg16_int8 min = 457.04 max = 465.19 avg = 460.64

resnet50 min = 318.57 max = 323.19 avg = 320.06

resnet50_int8 min = 237.46 max = 238.73 avg = 238.06

squeezenet_ssd min = 171.61 max = 173.21 avg = 172.10

squeezenet_ssd_int8 min = 128.01 max = 129.58 avg = 128.84

mobilenet_ssd min = 145.60 max = 149.44 avg = 147.39

mobilenet_ssd_int8 min = 82.86 max = 83.59 avg = 83.22

mobilenet_yolo min = 311.95 max = 374.33 avg = 330.15

mobilenetv2_yolov3 min = 211.89 max = 286.28 avg = 228.01

(G6)YOLOv5-Lite And yolov5s Comparison of

notes : Randomly select 100 pictures for reasoning , Round off to calculate the average time per .

Afterword :

I have used my own data set before yolov3-tiny,yolov4-tiny,nanodet,efficientnet-lite And other lightweight Networks , But the results did not meet expectations , Instead, use yolov5 It has achieved better results than I expected , But it's true ,yolov5 Not in the lightweight network design concept , So, right yolov5 Modified idea, Hope to be able to enhance its strong data and positive and negative anchor Satisfactory results can be achieved under the mechanism . in general ,YOLOv5-Lite Based on yolov5 Platform for training , For a small sample data set, it is still very work Of .

There is not much complicated interleaved parallel structure , Try to ensure the simplicity of the network model ,YOLOv5-Lite Designed purely for industrial landing , Better fit Arm Architecture's processor , But you use this thing to run GPU, Low cost performance .

Optimize this thing , Part of it is based on feelings , After all, a lot of early work is based on yolov5 Carried out by , Part of it is true that this thing is very important for my personal data set work( To be exact , It should be for me who is extremely short of data set resources ,yolov5 The various mechanisms of are indeed robust to small sample data sets ).

Project address :

https://github.com/ppogg/YOLOv5-Lite

in addition , This project will be continuously updated and iterated , welcome star and fork!

Finally, a digression , In fact, I have been paying attention to YOLOv5 The dynamics of the , lately U The update frequency of version great God is much faster , It is estimated that soon YOLOv5 Will usher in the Sixth Edition ~

边栏推荐

- leetcode 869. Reordered Power of 2 | 869. 重新排序得到 2 的幂(状态压缩)

- JVM memory and garbage collection-3-direct memory

- 生命的高度

- Height of life

- LeetCode精选200道--链表篇

- [knowledge map paper] attnpath: integrate the graph attention mechanism into knowledge graph reasoning based on deep reinforcement

- Force buckle 5_ 876. Intermediate node of linked list

- 电路如图,R1=2kΩ,R2=2kΩ,R3=4kΩ,Rf=4kΩ。求输出与输入关系表达式。

- 关于TXE和TC标志位的小知识

- "Hands on learning in depth" Chapter 2 - preparatory knowledge_ 2.1 data operation_ Learning thinking and exercise answers

猜你喜欢

Learn face detection from scratch: retinaface (including magic modified ghostnet+mbv2)

Monthly observation of internet medical field in May 2022

leetcode 866. Prime Palindrome | 866. 回文素数

分布式定时任务之XXL-JOB

银行需要搭建智能客服模块的中台能力,驱动全场景智能客服务升级

The bank needs to build the middle office capability of the intelligent customer service module to drive the upgrade of the whole scene intelligent customer service

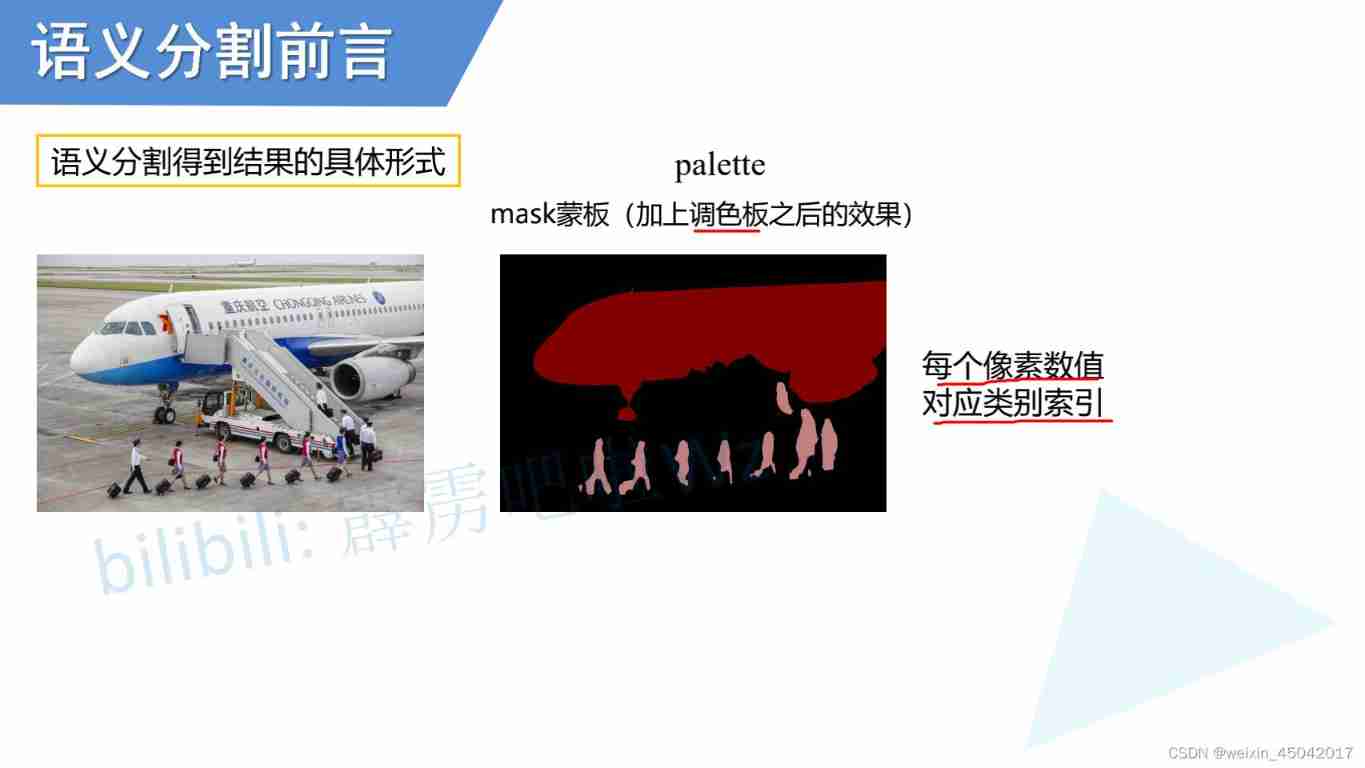

Semantic segmentation | learning record (1) semantic segmentation Preface

Master go game through deep neural network and tree search

Kwai applet guaranteed payment PHP source code packaging

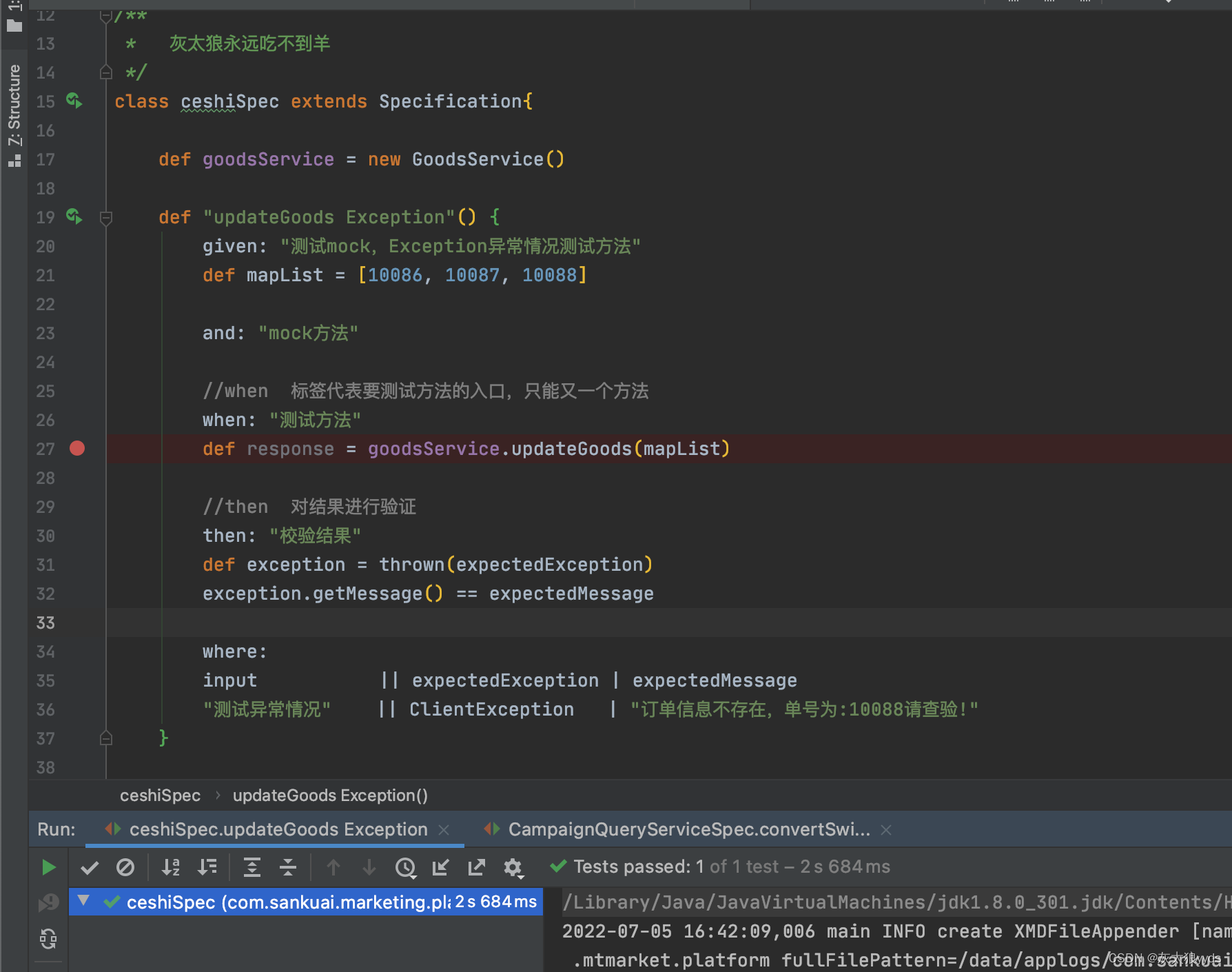

Spock单元测试框架介绍及在美团优选的实践_第四章(Exception异常处理mock方式)

随机推荐

【每日一题】736. Lisp 语法解析

How to use diffusion models for interpolation—— Principle analysis and code practice

Thread deadlock -- conditions for deadlock generation

Introduction to QT: video player

科普 | 什么是灵魂绑定代币SBT?有何价值?

Infrared dim small target detection: common evaluation indicators

LeetCode精选200道--数组篇

实现前缀树

分布式定时任务之XXL-JOB

Master go game through deep neural network and tree search

Gaussian filtering and bilateral filtering principle, matlab implementation and result comparison

cv2-drawline

VIM use

Installing and using mpi4py

th:include的使用

Random walk reasoning and learning in large-scale knowledge base

leetcode 873. Length of Longest Fibonacci Subsequence | 873. 最长的斐波那契子序列的长度

力扣6_1342. 将数字变成 0 的操作次数

[recommendation system paper reading] recommendation simulation user feedback based on Reinforcement Learning

#797div3 A---C