200 Classic Machine Learning Interview Questions (with Reference Answers)

2022-07-07 11:46:00 【samll-guo】

Practicing problems is a must before an interview. This article collects machine learning interview questions asked at BAT (Baidu, Alibaba, Tencent) in previous years. It is packed with practical material and worth bookmarking.

Getting a job at a big tech company is like thousands of troops trying to cross a single-log bridge.

To pass the screening, working through practice questions is essential. This collection was compiled from the "BAT Machine Learning Interview 1000 Questions" series published online.

1. Please briefly introduce SVM.

SVM stands for support vector machine. It is a classification algorithm whose goal is to find a separating hyperplane that divides the different classes of data.

Further reading:

Support vector machine methods range from simple to complex models: the linearly separable SVM, the linear SVM, and the nonlinear SVM. When the training data is linearly separable, hard-margin maximization learns a linear classifier, the linearly separable SVM, also called the hard-margin SVM. When the training data is approximately linearly separable, soft-margin maximization also learns a linear classifier, the linear SVM, also called the soft-margin SVM. When the training data is not linearly separable, the kernel trick together with soft-margin maximization learns a nonlinear SVM.

A general introduction to support vector machines (the three levels of understanding SVM): https://www.cnblogs.com/v-July-v/archive/2012/06/01/2539022.html

An in-depth understanding of SVM in machine learning: http://blog.csdn.net/sinat_35512245/article/details/54984251

2. Please briefly introduce TensorFlow's computational graph.

@Han Xiaoyang: TensorFlow is a programming system that expresses computation as a computational graph, also called a dataflow graph. You can think of it as a directed graph: each computation in TensorFlow is a node in the graph, and the edges between nodes describe the dependencies between computations.
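To make the node-and-edge idea concrete, here is a minimal pure-Python sketch of a dataflow graph. It is not TensorFlow's API: the `Node` class and the op names `const`, `add`, and `mul` are hypothetical and exist only for illustration.

```python
class Node:
    """One operation in a toy dataflow graph (illustrative, not TensorFlow)."""

    def __init__(self, op, inputs=(), value=None):
        self.op = op                # name of the computation at this node
        self.inputs = list(inputs)  # incoming edges: the nodes this one depends on
        self.value = value          # only used by "const" nodes

    def evaluate(self):
        if self.op == "const":
            return self.value
        # Evaluate dependencies first, following the edges of the graph.
        args = [node.evaluate() for node in self.inputs]
        if self.op == "add":
            return args[0] + args[1]
        if self.op == "mul":
            return args[0] * args[1]
        raise ValueError(f"unknown op: {self.op}")


a = Node("const", value=2.0)
b = Node("const", value=3.0)
c = Node("add", [a, b])   # c depends on a and b
d = Node("mul", [c, b])   # d depends on c and b
result = d.evaluate()     # computes (2.0 + 3.0) * 3.0
```

Building the graph (the `Node(...)` calls) is separate from running it (`evaluate()`), which is the key property of the graph-based style described above.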

3. What is the difference between GBDT and XGBoost?

@Xijun LI: XGBoost can be seen as an optimized version of GBDT, with improvements in both accuracy and efficiency. Compared with GBDT, its specific advantages are:

The loss function is approximated with a second-order Taylor expansion, whereas GBDT uses only the first derivative. The tree structure is regularized, which keeps the model from becoming too complex and reduces the risk of overfitting. Nodes are split differently: according to the original answer, GBDT uses the Gini coefficient, while XGBoost uses a split gain derived from its optimization objective.

Related link: a summary of ensemble learning https://xijunlee.github.io/

4. In k-means or kNN, we use Euclidean distance to measure the distance between nearest neighbors. Why not Manhattan distance?

Manhattan distance only measures movement along the horizontal and vertical axes, so it is constrained by the coordinate dimensions. Euclidean distance, on the other hand, can measure distance in any direction in any space. Because data points can lie anywhere in the space, Euclidean distance is the more practical choice. For example, on a chessboard the movement of a rook is measured with Manhattan distance, because it moves only horizontally and vertically.
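A short sketch of the two distances may help when comparing them. The function names `euclidean` and `manhattan` and the sample points are chosen here for illustration.

```python
import math


def euclidean(p, q):
    """Straight-line distance: square root of the summed squared differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def manhattan(p, q):
    """Axis-aligned distance: sum of the absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(p, q))


p, q = (0, 0), (3, 4)
d_euclid = euclidean(p, q)   # length of the diagonal
d_manhat = manhattan(p, q)   # 3 steps along one axis plus 4 along the other
```

For these two points the Euclidean distance is shorter because it cuts across the grid, while the Manhattan distance follows the axes.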

5. Baidu 2015 campus recruitment: machine learning written test questions.

Related link: Baidu 2015 campus recruitment machine learning written test questions

http://www.itmian4.com/thread-7042-1-1.html

6. Describe feature engineering.

7. About LR.

@rickjin: Cover LR from head to toe: modeling, on-the-spot mathematical derivation, the principle behind each solution method, regularization, how LR relates to the maxent model, and why LR is preferred over linear regression. Many candidates can recite the answers, so ask about the logical details. They know the principles? Then ask about engineering: how to parallelize it, how many ways there are to parallelize, and whether they have read any open source implementations. If they can answer all of that, be prepared to hire them, and along the way ask about the history of the LR model.

Related link: Logistic regression in machine learning

http://blog.csdn.net/sinat_35512245/article/details/54881672

8. How do you deal with overfitting?

dropout, regularization, batch normalization

9. What are the connections and differences between LR and SVM?

@The sun is in sight. Connections:

1. Both LR and SVM handle classification problems, and both are generally used for linear binary classification (with modifications, they can handle multiclass problems).

2. Both methods can add different regularization terms, such as L1 or L2. As a result, the two algorithms produce very similar results in many experiments.

Differences:

1. LR is a parametric model, while SVM is a nonparametric model.

2. In terms of the objective function, logistic regression uses logistic loss, while SVM uses hinge loss. Both loss functions aim to increase the weight of data points that strongly affect classification and reduce the weight of points that matter less.

3. SVM considers only the support vectors, the few points most relevant to classification, when learning the classifier. Logistic regression uses a nonlinear mapping to greatly reduce the weight of points far from the decision boundary, which relatively increases the weight of the points most relevant to classification.

4. The logistic regression model is relatively simple and easy to understand, and it is especially convenient for large-scale linear classification. SVM is more complicated to understand and optimize, but once it is converted to its dual problem, classification only requires computing against a few support vectors. This is a clear advantage with complex kernel functions and can greatly simplify the model and the computation.

5. What logistic regression can do, SVM can also do, though possibly with some difference in accuracy; some things SVM can do, logistic regression cannot.
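The second difference, logistic loss versus hinge loss, can be illustrated with a small sketch. It assumes labels y in {-1, +1} and a raw classifier score; the function names are chosen here for illustration.

```python
import math


def logistic_loss(y, score):
    """Logistic loss for a label y in {-1, +1} and a raw score."""
    return math.log(1.0 + math.exp(-y * score))


def hinge_loss(y, score):
    """Hinge loss: exactly zero once the margin y * score reaches 1."""
    return max(0.0, 1.0 - y * score)


# A confidently correct point (margin 2): hinge loss ignores it entirely,
# while logistic loss still assigns it a small positive penalty.
hinge_far = hinge_loss(1, 2.0)
logistic_far = logistic_loss(1, 2.0)

# A misclassified point (margin -1) is penalized by both losses.
hinge_wrong = hinge_loss(1, -1.0)
logistic_wrong = logistic_loss(1, -1.0)
```

This matches the third point in the list: points beyond the margin contribute nothing to the SVM objective, whereas in logistic regression their influence shrinks but never reaches zero.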

Source of the answer: Common machine learning interview questions (Part 1)

http://blog.csdn.net/timcompp/article/details/62237986

10. What are the differences and connections between LR and linear regression?

@nishizhen: First, both logistic regression and linear regression are generalized linear models. Second, the classical linear model optimizes a least-squares objective, while logistic regression optimizes a likelihood function. In addition, linear regression predicts over the whole range of real numbers with uniform sensitivity, whereas a classification output needs to lie in [0,1]. Logistic regression is a regression model that restricts the predicted value to [0,1], so for this kind of problem it is more robust than linear regression.

@A good mangy dog: The logistic regression model is essentially a linear regression model, and it is supported by linear regression theory. But a linear regression model cannot express the nonlinearity of the sigmoid, and the sigmoid handles 0/1 classification problems easily.

11. Why does XGBoost use a Taylor expansion, and what are the advantages?

@AntZ: XGBoost uses both first-order and second-order partial derivatives, and the second derivative helps gradient descent converge faster and more accurately. Using a Taylor expansion to obtain the second-derivative form means the algorithm's optimization can be analyzed without choosing a specific loss function. In essence, this separates the choice of loss function from the optimization of the model and its parameters. This decoupling broadens XGBoost's applicability.
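For reference, the second-order approximation described above is usually written as follows. The notation is assumed here rather than taken from the answer: l is the loss, f_t is the tree added at round t, the prediction from the previous round is written with a hat, and Ω is the regularization term.

```latex
\mathcal{L}^{(t)} \approx \sum_{i=1}^{n}
  \left[ l\big(y_i, \hat{y}_i^{(t-1)}\big) + g_i\, f_t(x_i) + \tfrac{1}{2}\, h_i\, f_t(x_i)^2 \right]
  + \Omega(f_t),
\qquad
g_i = \frac{\partial\, l\big(y_i, \hat{y}_i^{(t-1)}\big)}{\partial\, \hat{y}_i^{(t-1)}},
\quad
h_i = \frac{\partial^2 l\big(y_i, \hat{y}_i^{(t-1)}\big)}{\partial \big(\hat{y}_i^{(t-1)}\big)^2}
```

The loss enters only through g_i and h_i, so any twice-differentiable loss can be plugged in without changing the tree-building procedure, which is the decoupling the answer refers to.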

12. How does XGBoost find the best features? Does it sample with or without replacement?

@AntZ: During training, XGBoost assigns a score to each feature that indicates its importance to the model. XGBoost is a gradient-based boosting algorithm, so samples are drawn without replacement (imagine a sample being drawn over and over: would you want to step back and forth on the same gradient?). However, XGBoost supports subsampling, so each round does not have to use all samples.

13. Describe discriminative models and generative models.

Discriminative approach: learn the decision function Y = f(X) or the conditional probability distribution P(Y|X) directly from the data and use it as the prediction model. This is a discriminative model.

Generative approach: learn the joint probability distribution P(X,Y) from the data, then derive the conditional probability distribution P(Y|X) as the prediction model. This is a generative model.

A discriminative model can be derived from a generative model, but a generative model cannot be derived from a discriminative model.

Common discriminative models: k-nearest neighbors, SVM, decision trees, the perceptron, linear discriminant analysis (LDA), linear regression, traditional neural networks, logistic regression, boosting, conditional random fields

Common generative models: naive Bayes, hidden Markov models, Gaussian mixture models, the latent Dirichlet allocation topic model (LDA), restricted Boltzmann machines

14. The difference between L1 and L2.

The L1 norm is the sum of the absolute values of the elements of a vector. It is also known as the "sparse regularization operator" (Lasso regularization).

For example, for the vector A=[1,-1,3], the L1 norm of A is |1|+|-1|+|3|.

To summarize:

L1 norm: the sum of the absolute values of the elements of the vector x.

L2 norm: the square root of the sum of the squares of the elements of the vector x. The L2 norm is also called the Euclidean norm; its matrix analogue is the Frobenius norm.

Lp norm: the sum of the p-th powers of the absolute values of the elements of the vector x, raised to the power 1/p.
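The three definitions above fit in a single helper. This is a minimal sketch using the vector A from the text; the function name `lp_norm` is chosen here for illustration.

```python
def lp_norm(x, p):
    """Lp norm: (sum of |x_i|^p) raised to the power 1/p."""
    return sum(abs(v) ** p for v in x) ** (1.0 / p)


A = [1, -1, 3]
l1 = lp_norm(A, 1)  # |1| + |-1| + |3|
l2 = lp_norm(A, 2)  # square root of 1 + 1 + 9
```

Setting p=1 and p=2 recovers the L1 and L2 norms as special cases of the Lp norm.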

In support vector machine learning, the L1 norm is part of solving for the optimal cost function. L1 norm regularization adds the L1 norm to the cost function so that the learned result is sparse, which makes feature extraction easier.

The L1 norm makes the weights sparse, which is convenient for feature extraction.

The L2 norm prevents overfitting and improves the model's generalization ability.

15. What prior distributions do L1 and L2 regularization correspond to?

@Classmate Qi: This comes up in interviews. L1 regularization corresponds to a Laplace prior, and L2 regularization corresponds to a Gaussian prior.

16. CNN's most successful applications are in CV, so why can many NLP and speech problems also be solved with CNNs? Why did AlphaGo also use a CNN? What do these seemingly unrelated problems have in common, and by what means does a CNN capture this commonality?

@Xu Han

Related link (answer analysis): Deep learning job interview questions, organized notes

https://zhuanlan.zhihu.com/p/25005808

17. Describe AdaBoost and its weight update formula. When the weak classifiers are Gm and the sample weights are w1, w2…, write the final decision formula.

Answer analysis: http://www.360doc.com/content/14/1109/12/20290918_423780183.shtml
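As a reference sketch, the standard binary AdaBoost formulas are given below. They assume labels y_i in {-1, +1} and sample weights normalized to sum to 1; the symbols e_m (weighted error), α_m (classifier coefficient), and Z_m (normalization factor) are notation introduced here, not taken from the question.

```latex
e_m = \sum_{i=1}^{N} w_{m,i}\, I\big(G_m(x_i) \neq y_i\big),
\qquad
\alpha_m = \frac{1}{2} \ln \frac{1 - e_m}{e_m}

w_{m+1,i} = \frac{w_{m,i}}{Z_m} \exp\big(-\alpha_m\, y_i\, G_m(x_i)\big),
\qquad
Z_m = \sum_{i=1}^{N} w_{m,i} \exp\big(-\alpha_m\, y_i\, G_m(x_i)\big)

G(x) = \operatorname{sign}\left( \sum_{m=1}^{M} \alpha_m\, G_m(x) \right)
```

Misclassified samples have y_i G_m(x_i) = -1, so their weights grow by a factor of exp(α_m) before normalization, and the final decision is a weighted vote of the weak classifiers.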

18. Derive the LSTM structure. Why is it better than a plain RNN?

Derive how the forget gate, input gate, cell state, hidden information, and so on change. In an LSTM, the incoming and outgoing information and the current cell information are combined by addition after being controlled by the gates, whereas an RNN combines them by repeated multiplication. As a result, LSTM helps prevent vanishing or exploding gradients.

19. Anyone who searches the web often knows that when you accidentally type a word that does not exist, the search engine suggests the word you probably meant. For example, when you type "Julw" into Google, the system guesses your intention: did you mean "July"?

This is called spell checking. According to the article How to Write a Spelling Corrector, written by a Google employee, Google's approach is based on Bayesian methods. Explain your understanding of how Google uses Bayesian methods to implement spell checking.

When a user enters a word, it may be spelled correctly or incorrectly. Denote the correct spelling by c (for correct) and the misspelling by w (for wrong). The job of spell checking is then to infer c given that w occurred. In other words, given w, find the most likely c among the candidates, that is, the c that maximizes P(c|w). By Bayes' theorem:

P(c|w) = P(w|c)P(c) / P(w)

Because w is the same for every candidate c, P(w) is the same for all of them, so we only need to maximize P(w|c)P(c), where:

P(c) is the probability that the correct word c appears, which can be approximated by its frequency. If we have a large enough text corpus, the frequency of each word in that corpus is equivalent to its probability of occurrence. The more frequently a word appears, the larger P(c) is. For example, when you type the wrong word "Julw", the system is more likely to guess that you meant "July" rather than "Jult", because "July" is more common.

P(w|c) is the probability of typing the misspelling w when trying to spell c. To simplify the problem, assume that the closer two words are in form, the more likely one is mistyped as the other, and the larger P(w|c) is. For example, a misspelling that differs by one letter is more likely than one that differs by two. If you want to spell July, mistyping it as Julw (one letter off) is more likely than mistyping it as Jullw (two letters off). This measure is generally called "edit distance"; see The Art of Programming, chapters 28 to 29: maximum continuous product substring, string edit distance.

http://blog.csdn.net/v_july_v/article/details/8701148#t4

So we compare the frequencies of all similarly spelled words in the text corpus and pick the one with the highest frequency, which is the word the user most likely meant to type. For the detailed calculation and the shortcomings of this method, see How to Write a Spelling Corrector.

http://norvig.com/spell-correct.html
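The procedure above can be sketched in a few lines, loosely following the structure of the linked article. Everything specific here is an assumption made for illustration: the toy corpus, the names `WORDS`, `prior`, `edits1`, and `correct`, and the simplification that all candidates one edit away are equally likely, so that P(w|c) is constant and only P(c) decides.

```python
from collections import Counter

# Toy corpus (assumption); a real system would count words in a large text collection.
WORDS = Counter("july july july jult june july june".split())
LETTERS = "abcdefghijklmnopqrstuvwxyz"


def prior(word):
    """P(c): relative frequency of the word in the corpus."""
    return WORDS[word] / sum(WORDS.values())


def edits1(word):
    """All strings one edit (delete, transpose, replace, insert) away from word."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [l + r[1:] for l, r in splits if r]
    transposes = [l + r[1] + r[0] + r[2:] for l, r in splits if len(r) > 1]
    replaces = [l + c + r[1:] for l, r in splits if r for c in LETTERS]
    inserts = [l + c + r for l, r in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)


def correct(word):
    """Return the known word one edit away with the highest P(c), if any."""
    word = word.lower()
    if word in WORDS:
        return word  # already a known word
    candidates = [w for w in edits1(word) if w in WORDS]
    return max(candidates, key=prior) if candidates else word


suggestion = correct("Julw")
```

With this corpus, both "july" and "jult" are one edit away from "julw", and "july" wins because it is more frequent, mirroring the example in the text.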

20. Why is naive Bayes so "naive"?

Because it assumes that all features in the data set are equally important and independent of one another. This assumption rarely holds in the real world, which is why naive Bayes really is "naive".

21. In machine learning, why is data often normalized?

@zhanlijun

Source and analysis of this question: Why do some machine learning models need normalized data?

http://www.cnblogs.com/LBSer/p/4440590.html

22. Describe normalization in deep learning.

See this video for details: Normalization in deep learning

http://www.julyedu.com/video/play/69/686

23. Briefly describe the workflow of a complete machine learning project.

1. Abstract the task into a mathematical problem

Defining the problem is the first step in machine learning. Training is usually very time-consuming, so the cost of random trial and error is very high.

Abstracting into a mathematical problem means establishing what data we can obtain and whether the goal is a classification, regression, or clustering problem; if it is none of these, try to reduce it to one of them.

2. Get the data

The data determines the upper limit of what machine learning can achieve, and the algorithm only approaches that limit as closely as possible. The data should be representative; otherwise the model will overfit. For classification problems, the data should not be too skewed: the class sizes should not differ by several orders of magnitude.

You should also assess the scale of the data: how many samples and how many features. From this you can estimate memory consumption and judge whether the data fits in memory during training. If it does not fit, consider improving the algorithm or applying dimensionality reduction. If the data volume is too large, consider a distributed approach.

3. Feature preprocessing and feature selection

Good data only pays off once good features are extracted from it.

Feature preprocessing and data cleaning are key steps that can significantly improve an algorithm's results and performance. Normalization, discretization, factorization, missing value handling, collinearity removal, and so on take up a large share of the time in data mining. This work is simple and reproducible, and its benefits are stable and predictable, so it is a basic, necessary step in machine learning.

Selecting the significant features and discarding the insignificant ones requires machine learning engineers to understand the business thoroughly. This has a decisive effect on many results. With good feature selection, even a very simple algorithm can produce good, stable results. This calls for feature-validity analysis techniques such as the correlation coefficient, the chi-square test, average mutual information, conditional entropy, posterior probability, and logistic regression weights.

4. Model training and tuning

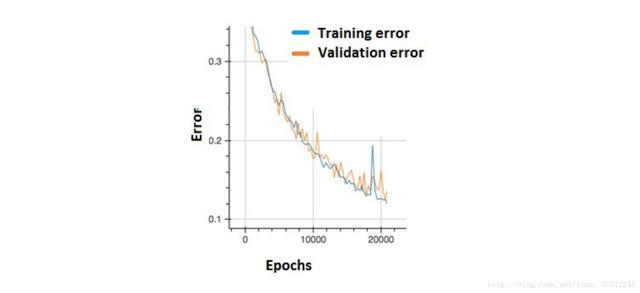

Only at this step do we train with the algorithms mentioned above. Many algorithms are now packaged as black boxes for people to use. But the real test is tuning their (hyper)parameters to improve the results. This requires a deep understanding of how the algorithms work: the deeper the understanding, the easier it is to find the crux of a problem and propose a good optimization plan.

5. Model diagnosis

How do we decide the direction for optimizing the model? This requires model diagnosis techniques.

Judging overfitting and underfitting is a crucial step in model diagnosis. Common methods include cross-validation and plotting learning curves. The basic remedies for overfitting are to increase the amount of data and reduce model complexity. The basic remedies for underfitting are to increase the number and quality of features and increase model complexity.

Error analysis is also a crucial step in machine learning. By examining the misclassified samples, analyze the causes of the errors thoroughly: is it a parameter problem or an algorithm selection problem, a feature problem or a problem with the data itself?

A diagnosed model needs tuning, and the tuned model needs to be diagnosed again. This is an iterative process of gradual approximation that requires repeated attempts to reach the best state.

6. Model fusion

Generally speaking, model fusion improves results to some extent, and it works well.

In engineering practice, the main ways to improve accuracy are to work on the front end of the model (feature cleaning and preprocessing, different sampling schemes) and the back end (model fusion), because this work is more standardized and reproducible and its effect is relatively stable. Direct parameter tuning is done less, since training on large data sets is too slow and the effect is hard to guarantee.

7. Go live

This part is mainly about engineering implementation. Engineering is results-oriented: how the model performs online directly determines its success or failure. That covers not only accuracy and error, but also whether its running speed (time complexity), resource consumption (space complexity), and stability are acceptable.

These steps are mostly experience distilled from engineering practice. Not every project includes the complete process. This is only a guide; deeper understanding comes only from practicing more and accumulating project experience.

For this reason, every session of the July Online ML algorithm class includes courses on feature engineering, model tuning, and related topics, for example the open class video "Feature Processing and Feature Selection".

24. What is the difference between new and malloc?

Related link: The difference between new and malloc

https://www.cnblogs.com/fly1988happy/archive/2012/04/26/2470542.html

25. Hash collisions and how to resolve them

@Sommer_Xia

Elements with different keys may be mapped to the same address in the hash table, which causes a hash collision. Solutions:

1) Open addressing: when a collision occurs, use some probing technique to form a probe sequence in the hash table. Search along this sequence slot by slot until either the given key is found or an open address (an empty slot) is reached. For insertion, the new node can be stored in the open address that the search reaches. Reaching an open address during lookup means the key is not in the table, that is, the lookup fails.

2) Rehashing: construct several different hash functions at the same time.

3) Separate chaining: link all elements whose hash address is i into a singly linked list called a synonym chain, and store the list's head pointer in slot i of the hash table. Lookups, insertions, and deletions are then performed mainly within the synonym chain. Separate chaining suits workloads with frequent insertions and deletions.

4) Common overflow area: divide the hash table into a base table and an overflow table; any element that collides with an entry in the base table goes into the overflow table.
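Of the four strategies, separate chaining (method 3) is the easiest to sketch. The class below is a minimal illustration, not a production hash table: the name `ChainedHashTable` is hypothetical, Python lists stand in for the singly linked synonym chains, and there is no resizing.

```python
class ChainedHashTable:
    """Hash table that resolves collisions by chaining entries in each slot."""

    def __init__(self, size=8):
        # One chain (a list of (key, value) pairs) per slot.
        self.buckets = [[] for _ in range(size)]

    def _index(self, key):
        return hash(key) % len(self.buckets)

    def put(self, key, value):
        bucket = self.buckets[self._index(key)]
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)  # key already present: overwrite
                return
        bucket.append((key, value))       # new key (possibly a collision): extend the chain

    def get(self, key, default=None):
        for k, v in self.buckets[self._index(key)]:
            if k == key:
                return v
        return default


# With only two slots, the integer keys 0..4 are forced to collide.
table = ChainedHashTable(size=2)
for i in range(5):
    table.put(i, i * i)
```

Colliding keys simply share a chain, so insertion never fails; the cost is that lookups within a long chain degrade toward a linear scan.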

26. How do you address vanishing and exploding gradients?

(1) Vanishing gradients:

By the chain rule, if the partial derivative of each layer's output with respect to the previous layer's output, multiplied by the weight, is less than 1, then even if the value is 0.99, after enough layers the partial derivative of the error with respect to the input layer tends to 0. Using the ReLU activation function can effectively mitigate vanishing gradients.

(2) Exploding gradients:

By the chain rule, if the partial derivative of each layer's output with respect to the previous layer's output, multiplied by the weight, is greater than 1, then after enough layers the partial derivative of the error with respect to the input layer tends to infinity.

It can be addressed through the choice of activation function; gradient clipping is another common remedy.
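The chain-rule argument is easy to check numerically. This sketch treats the per-layer factor as a constant, which is a simplifying assumption; the function name `gradient_scale` and the layer count of 1000 are chosen here for illustration.

```python
def gradient_scale(per_layer_factor, num_layers):
    """Product of identical per-layer factors accumulated by the chain rule."""
    return per_layer_factor ** num_layers


vanishing = gradient_scale(0.99, 1000)  # factor slightly below 1 at every layer
exploding = gradient_scale(1.01, 1000)  # factor slightly above 1 at every layer
```

Even a factor as close to 1 as 0.99 shrinks the gradient by several orders of magnitude over 1000 layers, and 1.01 inflates it by a comparable amount.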

27. Which of the following is NOT an advantage of the CRF model over the HMM and MEMM models? ( )

A. Flexible features

B. Fast

C. Can accommodate more context information

D. Global optimum

Answer: First, CRF, HMM (hidden Markov model), and MEMM (maximum entropy Markov model) are all commonly used for sequence labeling.

One of the biggest shortcomings of the hidden Markov model is its output independence assumption, which prevents it from taking contextual features into account and limits feature selection.

The maximum entropy Markov model solves this problem of the HMM and allows arbitrary features. But because it normalizes at every node, it can only find a local optimum, and it also introduces the label bias problem: situations that do not appear in the training corpus are all ignored.

Conditional random fields solve this problem well. A CRF does not normalize at every node; it normalizes over all features globally, so it can obtain the global optimum.

The answer is B.

28. Briefly, what is the difference between supervised and unsupervised learning?

Supervised learning: learn from labeled training samples in order to classify or predict data outside the training set as well as possible. (LR, SVM, BP, RF, GBDT)

Unsupervised learning: train on unlabeled samples in order to discover structure in them. (KMeans, DL)

29. What do you know about regularization?

Regularization is proposed to counter overfitting. Ordinarily, the optimal model is taken to be the one that minimizes empirical risk. Regularization adds a model complexity term to the empirical risk (the regularization term is a norm of the model parameter vector) and uses a rate coefficient to balance model complexity against the empirical risk. The more complex the model, the larger the structural risk. The goal then becomes minimizing the structural risk, which keeps the model from becoming too complex during training and effectively reduces the risk of overfitting.

Occam's razor: the best model is one that explains the known data well and is also very simple.

30. What is the difference between covariance and correlation?

Correlation is the standardized form of covariance. Covariances are hard to compare on their own. For example, if we compute the covariance of salary ($) and age (years), the two variables are measured in different units, so the resulting covariance cannot be compared with covariances of other variable pairs. To solve this, we compute the correlation, which is a value between -1 and 1 that does not depend on the units of measurement.
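A small sketch shows the unit dependence directly. The age and salary figures are made-up illustrative data, and the function names are chosen here; sample covariance (dividing by n - 1) is used.

```python
import math


def covariance(xs, ys):
    """Sample covariance of two equally long sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def correlation(xs, ys):
    """Pearson correlation: covariance divided by both standard deviations."""
    return covariance(xs, ys) / math.sqrt(covariance(xs, xs) * covariance(ys, ys))


age = [25, 35, 45, 55]                             # years (made-up data)
salary_usd = [30000, 42000, 50000, 61000]          # dollars (made-up data)
salary_kusd = [s / 1000 for s in salary_usd]       # same salaries in thousands of dollars

cov_usd = covariance(age, salary_usd)
cov_kusd = covariance(age, salary_kusd)
corr_usd = correlation(age, salary_usd)
corr_kusd = correlation(age, salary_kusd)
```

Changing the salary unit rescales the covariance by a factor of 1000 but leaves the correlation unchanged, which is why correlation is the quantity that can be compared across variable pairs.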

31. What is the difference between linear and nonlinear classifiers, and what are their advantages and disadvantages?

If the model is a linear function of its parameters and has a linear decision surface, it is a linear classifier; otherwise it is not.

Common linear classifiers: LR, Bayesian classification, single-layer perceptron, linear regression.

Common nonlinear classifiers: decision trees, RF, GBDT, multilayer perceptron.

SVM can be either (depending on whether it uses a linear kernel or a Gaussian kernel).

Linear classifiers are fast and easy to program, but may not fit well.

Nonlinear classifiers are more complex to program, but have strong fitting ability.

32. The logical storage structure of data (arrays, queues, trees, and so on) has a very important influence on software development. Briefly analyze the storage structures you know in terms of running speed, storage efficiency, and applicable scenarios.

33. What is a distributed database?

A distributed database system builds on the mature technology of centralized database systems, but it is not simply a centralized database implemented in a decentralized way; it has its own nature and characteristics. Many concepts and techniques of centralized database systems, such as data independence, data sharing and redundancy reduction, concurrency control, integrity, security, and recovery, take on different and richer content in distributed database systems.

34. Explain Bayes' theorem.

Before introducing Bayes' theorem, let's go over a few definitions:

Conditional probability (also called posterior probability) is the probability that event A occurs given that another event B has occurred. It is written P(A|B) and read as "the probability of A given B".

For example, let A and B be events (subsets) of the same sample space Ω. If an element chosen at random from Ω belongs to B, then the probability that this element also belongs to A is defined as the conditional probability of A given B: P(A|B) = |A∩B|/|B|. Dividing the numerator and denominator by |Ω| gives:

P(A|B) = P(A∩B) / P(B)

Joint probability is the probability that two events occur together. The joint probability of A and B is written P(A∩B) or P(A,B).

Marginal probability (also called prior probability) is the probability that a single event occurs. It is obtained as follows: in the joint probability, the events not needed in the final result are merged into their total probability and eliminated (summing for discrete random variables, integrating for continuous ones). This is called marginalization. For example, the marginal probability of A is written P(A) and that of B is written P(B).

Next, consider the question: P(A|B) is the probability that A occurs given that B has occurred.

1) First, before event B occurs, we have a basic estimate of the probability of event A, called the prior probability of A and written P(A);

2) Second, after event B occurs, we reassess the probability of event A, called the posterior probability of A and written P(A|B);

3) Similarly, before event A occurs, we have a basic estimate of the probability of event B, called the prior probability of B and written P(B);

4) Likewise, after event A occurs, we reassess the probability of event B, called the posterior probability of B and written P(B|A).

The formula for Bayes' theorem:

P(A|B) = P(B|A)P(A) / P(B)
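A worked numeric example may make the prior-to-posterior update concrete. The scenario and all numbers are assumptions made up for illustration: A is "has a condition" with prior 0.01, and B is "test is positive", with P(B|A) = 0.9 and P(B|not A) = 0.05. The function name `posterior` is also chosen here.

```python
def posterior(prior_a, p_b_given_a, p_b_given_not_a):
    """P(A|B) via Bayes' theorem: P(B|A) * P(A) / P(B).

    P(B) is obtained by marginalizing over A and not-A.
    """
    evidence = p_b_given_a * prior_a + p_b_given_not_a * (1.0 - prior_a)
    return p_b_given_a * prior_a / evidence


# Made-up numbers: prior P(A) = 0.01, P(B|A) = 0.9, P(B|not A) = 0.05.
p_a_given_b = posterior(0.01, 0.9, 0.05)
```

Observing B raises the probability of A well above its prior, yet it stays far below P(B|A), because the prior P(A) is small. This is the reassessment from prior to posterior described in steps 1) and 2).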

35. What is the difference between #include <filename.h> and #include "filename.h"?

Related link: What is the difference between #include <filename.h> and #include "filename.h"?

http://blog.csdn.net/u010339647/article/details/77825788

36. A supermarket studying its sales records found that customers who buy beer are also likely to buy diapers. What kind of data mining problem is this? (A)

A. Association rule discovery B. Clustering C. Classification D. Natural language processing

37. Integrating raw data, transforming it, and applying dimensionality reduction and numerosity reduction are tasks in which of the following steps? (C)

A. Frequent pattern mining B. Classification and prediction C. Data preprocessing D. Data stream mining

38. Which of the following is not a data preprocessing method? (D)

A. Variable substitution B. Discretization C. Aggregation D. Estimating missing values

39. What is KDD? (A)

A. Data mining and knowledge discovery B. Domain knowledge discovery C. Document knowledge discovery D. Dynamic knowledge discovery

40. When you do not know the labels of the data, what technique can separate data with similar labels from data with other labels? (B)

A. Classification B. Clustering C. Association analysis D. Hidden Markov chain

41. Building a model that predicts the value of another variable from the values of known variables belongs to which kind of data mining task? (C)

A. Search B. Descriptive modeling

C. Predictive modeling D. Finding patterns and rules

42. Which of the following is not a standard feature selection method? (D)

A. Embedded B. Filter C. Wrapper D. Sampling

43. Write a Python function find_string that searches text and prints the matches, with support for the wildcard asterisk and question mark.

    find_string('hello\nworld\n', 'wor')
    ['wor']
    find_string('hello\nworld\n', 'l*d')
    ['ld']
    find_string('hello\nworld\n', 'o.')
    ['or']

Answer:

    import re

    def find_string(text, pat):
        # The pattern is passed to re as a regular expression;
        # re.I makes the match case-insensitive.
        return re.findall(pat, text, re.I)

Author: qinjianhuang. Source: CSDN. Original text: https://huangqinjian.blog.csdn.net/article/details/78796328

44. Describe the five properties of red-black trees.

A beginner's introduction to red-black trees

http://blog.csdn.net/v_july_v/article/details/6105630

45. Under the simple said sigmoid Activation function .

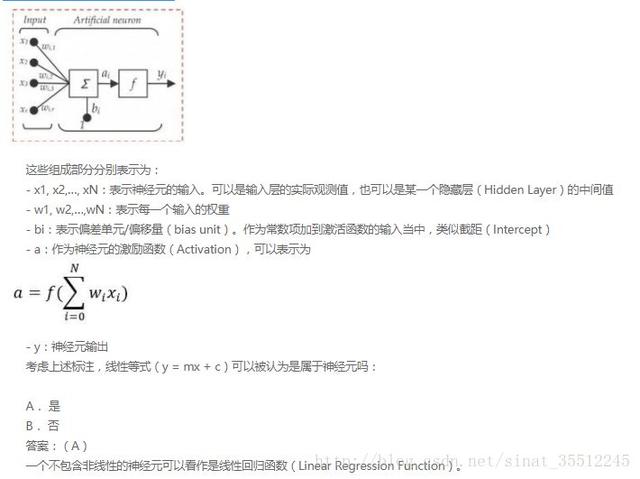

The commonly used nonlinear activation functions are sigmoid、tanh、relu wait , The first two sigmoid/tanh More common in the full connection layer , the latter relu Common in convolutions . Here is a brief introduction to the most basic sigmoid function (btw, In this blog SVM It was mentioned at the beginning of the article ).

Sigmoid The function expression of is as follows :

in other words ,Sigmoid The function is equivalent to compressing a real number to 0 To 1 Between . When z It's a very large positive number ,g(z) It will approach to 1, and z When it's a very small negative number , be g(z) It will approach to 0.

Compression - 0 To 1 What's the use ? The utility is that the activation function can be regarded as a kind of “ The probability of classification ”, For example, the output of the activation function is 0.9 That would be interpreted as 90% The probability of is a positive sample .
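The squashing behaviour described above can be checked with a few lines of Python. This is a minimal sketch, not part of the original answer; the helper name `sigmoid` and the sample inputs are chosen here for illustration, and the sign branch is only there so that `exp` never receives a large positive argument.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid g(z) = 1 / (1 + e^{-z})."""
    # Branch on the sign of z so exp() is only ever called on a non-positive value.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

for z in (-10.0, 0.0, 10.0):
    print(z, sigmoid(z))
```

An output such as `sigmoid(2.2)`, which is roughly 0.9, would be read as "about a 90% chance of the positive class".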

For example, see the figure below (from the Stanford machine learning open course):

46. What is convolution?

Taking the inner product (element-wise multiplication followed by summation) of the image (the data in successive data windows) with a filter matrix (a set of fixed weights; because the weights of each neuron are fixed, it can be seen as a constant filter) is called the "convolution" operation, and it is where convolutional neural networks get their name.

Loosely speaking, the part in the red frame in the figure below can be understood as a filter, that is, a neuron with a set of fixed weights. Several filters stacked together form a convolutional layer.

Take a concrete example. In the figure below, the left part is the original input data, the middle part is the filter, and the right part is the new two-dimensional output.

Breaking it down:
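The sliding inner product can be sketched in plain Python as follows. This is an illustration under stated assumptions: no padding, stride 1, a single channel, and a made-up 4x4 input and 2x2 filter (the values in the original figure are not available here). As in most CNN libraries, the filter is not flipped, so strictly this is cross-correlation.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 sliding inner product of kernel over image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the current window element-wise with the filter, then sum.
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out

# Hypothetical input and filter, chosen only for illustration.
image = [[1, 2, 3, 0],
         [0, 1, 2, 3],
         [3, 0, 1, 2],
         [2, 3, 0, 1]]
kernel = [[1, 0],
          [0, 1]]
result = conv2d(image, kernel)
print(result)  # [[2, 4, 6], [0, 2, 4], [6, 0, 2]]
```

Each output cell is one window of the input weighted by the same fixed filter, which is the "constant filter" idea in the text.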

47. What is pooling in the pooling layer of a CNN?

Pooling, in short, means taking the average or the maximum over a region, as shown in the figure below (from cs231n):

The figure shows max pooling. In the left part of the figure, the maximum of the upper-left 2x2 block is 6, the maximum of the upper-right 2x2 block is 8, the maximum of the lower-left 2x2 block is 3, and the maximum of the lower-right 2x2 block is 4, which gives the result on the right: 6 8 3 4. Simple, isn't it?
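The 2x2 max pooling step can be reproduced with a short sketch. The input matrix below is an assumption: it is one 4x4 input that yields the stated block maxima 6, 8, 3, 4, since the figure itself is not reproduced here. The function name `max_pool2d` is likewise chosen for illustration.

```python
def max_pool2d(x, size=2, stride=2):
    """Take the maximum of each size x size window, moving by `stride`."""
    out = []
    for i in range(0, len(x) - size + 1, stride):
        row = []
        for j in range(0, len(x[0]) - size + 1, stride):
            row.append(max(x[i + a][j + b]
                           for a in range(size) for b in range(size)))
        out.append(row)
    return out

# Assumed input whose four 2x2 blocks have maxima 6, 8, 3 and 4.
x = [[1, 1, 2, 4],
     [5, 6, 7, 8],
     [3, 2, 1, 0],
     [1, 2, 3, 4]]
pooled = max_pool2d(x)
print(pooled)  # [[6, 8], [3, 4]]
```

Replacing `max` with an average over the window would give average pooling instead.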

48. Briefly, what is a generative adversarial network?

A GAN is called adversarial because it contains two competing parts. One is the generator, whose main job is to generate images and make them look as if they came from the training samples. The other is the discriminator, whose goal is to decide whether an input image is a real training sample.

Put more plainly, think of the generator as a counterfeiter and the discriminator as the police. The generator aims to make counterfeit money as realistic as possible so that it can fool the discriminator, that is, to generate samples that look as if they came from the real training set.

See the left and right scenes in the figure below:

For more, see this course: Generative adversarial networks

https://www.julyedu.com/course/getDetail/83

49. What is the principle behind painting in the style of Van Gogh?

Here is an experimental tutorial on how to do Van Gogh style painting: "Teach you to paint like Van Gogh with DL from start to finish: GTX 1070, CUDA 8.0, TensorFlow GPU version". For the principle, please watch this video: "NeuralStyle artistic images (learn the principles behind Van Gogh style painting)".

http://blog.csdn.net/v_july_v/article/details/52658965

http://www.julyedu.com/video/play/42/523

50. There are 26 elements, a to z. Write a program that prints every combination of 3 elements chosen from a to z (for example, print a b c, d y z, and so on).

An interview question for a Baidu machine learning engineer position

http://blog.csdn.net/lvonve/article/details/53320680
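One possible answer to question 50, as a minimal sketch rather than the linked author's solution, is to let the standard library enumerate the combinations. The helper name `three_letter_combinations` is made up here.

```python
from itertools import combinations
from string import ascii_lowercase

def three_letter_combinations():
    """All unordered choices of 3 distinct letters from a..z, in lexicographic order."""
    return [" ".join(c) for c in combinations(ascii_lowercase, 3)]

combos = three_letter_combinations()
for combo in combos:
    print(combo)
```

There are C(26, 3) = 2600 such combinations; `itertools.combinations` emits them in sorted order, so the first line is `a b c` and the last is `x y z`.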

51. Which machine learning algorithms do not need normalization?

Probabilistic models do not need normalization, because they do not depend on the values of the variables but on their distributions and on the conditional probabilities between variables; decision trees and random forests are examples. Optimization-based or distance-based methods such as SVM, LR, KNN and KMeans do need normalization. Adaboost, GBDT and XGBoost are often listed with this second group, although with tree base learners their splits are insensitive to feature scaling.

52. Explain gradient descent.

@LeftNotEasy

Mathematics in machine learning (1): regression and gradient descent

53. Does gradient descent always find the direction of fastest descent?

The gradient descent direction is not necessarily the fastest direction of descent. It is only the steepest descent direction on the tangent plane of the objective function at the current point (for a high-dimensional problem it is of course not literally a plane). In practical implementations, the Newton direction (which takes the Hessian matrix into account) is generally regarded as the fastest descent direction and can achieve a superlinear convergence rate. The convergence rate of gradient descent is generally linear, or even sublinear (in some problems with complex constraints).
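The difference in convergence speed can be seen on a one-dimensional toy problem. The sketch below is an illustration under assumptions made here: the objective f(x) = e^x - 2x (minimum at x = ln 2), the learning rate 0.1, the starting point 0 and the tolerance are all arbitrary choices, not taken from the source.

```python
import math

def grad(x):
    # f(x) = e^x - 2x, so f'(x) = e^x - 2
    return math.exp(x) - 2.0

def hess(x):
    # f''(x) = e^x
    return math.exp(x)

def gradient_descent(x, lr=0.1, tol=1e-10, max_iter=10_000):
    """Fixed-step gradient descent; returns (x, number of update steps)."""
    for k in range(max_iter):
        g = grad(x)
        if abs(g) < tol:
            return x, k
        x -= lr * g
    return x, max_iter

def newton(x, tol=1e-10, max_iter=100):
    """Newton's method: the gradient step is rescaled by the second derivative."""
    for k in range(max_iter):
        g = grad(x)
        if abs(g) < tol:
            return x, k
        x -= g / hess(x)
    return x, max_iter

x_gd, steps_gd = gradient_descent(0.0)
x_nt, steps_nt = newton(0.0)
print("gradient descent:", x_gd, steps_gd)
print("newton:          ", x_nt, steps_nt)
```

Both runs end near ln 2, but Newton's method needs only a handful of steps while fixed-step gradient descent needs on the order of a hundred, which is the linear versus superlinear contrast described above.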

Knowledge link: A clear explanation of the gradient descent algorithm in machine learning (including its variants)

http://blog.csdn.net/wemedia/details.html?id=45460

54. What is the difference between Newton's method and gradient descent?

@wtq1993, knowledge link: Common optimization algorithms in machine learning

http://blog.csdn.net/wtq1993/article/details/51607040

55. What are quasi-Newton methods?

@wtq1993, Common optimization algorithms in machine learning

56. What are the problems and challenges of stochastic gradient descent?

57. Explain the conjugate gradient method.

@wtq1993, Common optimization algorithms in machine learning

http://blog.csdn.net/wtq1993/article/details/51607040

58. For every optimization problem, is it possible to find an algorithm better than the best one currently known?

Answer link: https://www.zhihu.com/question/41233373/answer/145404190

59. What is the least squares method?

We often say "generally speaking" or "on average". For example, on average, non-smokers are healthier than smokers. The words "on average" are added because there are exceptions to everything: there is always someone who smokes but, because he exercises regularly, may be healthier than his non-smoking friends. One of the simplest examples of least squares is the arithmetic mean.

The least squares method (also called the method of least squares) is a mathematical optimization technique. It finds the best functional fit to the data by minimizing the sum of squared errors. With least squares, unknown quantities can be obtained simply, such that the sum of squared errors between the computed values and the actual data is minimized. Expressed as a function:

$$\min \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2$$

where $y_i$ are the actual (observed) values and $\hat{y}_i$ the computed values.

The arithmetic mean is a tried and tested method, and the reasoning above shows that it is a special case of least squares. Seen from this angle, this illustrates the merit of the least squares method and gives us more confidence in it.
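The step from least squares to the arithmetic mean can be written out as a short derivation. This is a sketch under an assumption introduced here: we have $n$ measurements $x_1, \dots, x_n$ of a single unknown quantity $y$ (the symbols are chosen for illustration and do not come from the original text).

```latex
\begin{aligned}
S(y) &= \sum_{i=1}^{n} (x_i - y)^2
  && \text{sum of squared errors for a candidate value } y \\
\frac{dS}{dy} &= -2 \sum_{i=1}^{n} (x_i - y) = 0
  && \text{stationarity condition} \\
\Rightarrow\quad y &= \frac{1}{n} \sum_{i=1}^{n} x_i
  && \text{the arithmetic mean} \\
\frac{d^2 S}{dy^2} &= 2n > 0
  && \text{so the stationary point is a minimum}
\end{aligned}
```

So the value that minimizes the sum of squared errors over repeated measurements of one quantity is exactly their arithmetic mean.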

After its publication, the least squares method was quickly accepted and widely used in data analysis practice. Yet some people have historically attributed its invention to Gauss. Why is that? Gauss also published the least squares method, in 1809, and claimed to have been using it for years. Gauss devised a mathematical method for locating asteroids and used least squares in the data analysis, which accurately predicted the position of Ceres.

By the way, what is the connection between least squares and SVM? See "A popular introduction to support vector machines (understanding the three levels of SVM)".

http://blog.csdn.net/v_july_v/article/details/7624837

60. I see your T-shirt says "Life is short, I use Python". Can you say what kind of language Python is? You may compare it with other technologies or languages in your answer.

15 important Python interview questions: test whether you are suited to Python

61. How does Python manage memory?

The latest 2017 Python interview questions and answers: 16 questions

http://www.cnblogs.com/tom-gao/p/6645859.html

62. Write a piece of Python code that removes the duplicate elements from a list.

1. Use the set function: set(list).

2. Use a dictionary:

    a = [1, 2, 4, 2, 4, 5, 6, 5, 7, 8, 9, 0]
    b = {}
    b = b.fromkeys(a)      # keys are unique, so duplicates collapse
    c = list(b.keys())
    c

63. Sort the list with sort, then check for duplicates starting from the last element.

    a = [1, 2, 4, 2, 4, 5, 7, 10, 5, 5, 7, 8, 9, 0, 3]

    a.sort()
    last = a[-1]
    # Walk backwards so that deleting an item does not shift unvisited indices
    for i in range(len(a) - 2, -1, -1):
        if last == a[i]:
            del a[i]
        else:
            last = a[i]
    print(a)

64. How do you generate random numbers in Python?

@Tom_junsong

Use the random module.

Random integers: random.randint(a, b) returns a random integer x with a <= x <= b.

random.randrange(start, stop[, step]) returns a random integer from range(start, stop, step); the end value stop is not included.

Random real numbers: random.random() returns a floating-point number x with 0 <= x < 1.

random.uniform(a, b) returns a floating-point number in the specified range.
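The four calls listed above can be tried together in a short sketch. The seed value and the argument ranges are arbitrary choices made here; a private `random.Random` instance is used only so that the run is repeatable.

```python
import random

rng = random.Random(42)        # seeded generator so repeated runs give the same draws

i = rng.randint(1, 6)          # integer with 1 <= i <= 6 (both ends included)
j = rng.randrange(0, 10, 2)    # one of 0, 2, 4, 6, 8 (stop is excluded)
u = rng.random()               # float with 0.0 <= u < 1.0
v = rng.uniform(2.5, 7.5)      # float between 2.5 and 7.5
print(i, j, u, v)
```

The module-level functions `random.randint`, `random.randrange`, `random.random` and `random.uniform` behave the same way but share one global generator.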

65. Describe the common loss functions.

For a given input X, the model f gives a corresponding output f(X). This predicted value f(X) may or may not agree with the true value Y (bear in mind that loss or error is sometimes unavoidable), and a loss function is used to measure the degree of prediction error. The loss function is written L(Y, f(X)).

The common loss functions are as follows (mostly quoted from "Statistical Learning Methods"):
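As a hedged illustration, the four loss functions usually listed in that book's introductory chapter (0-1 loss, quadratic loss, absolute loss and logarithmic loss) can be written as small Python functions. The function names are chosen here, and the selection is an assumption, since the original list is not reproduced in this copy.

```python
import math

def zero_one_loss(y, fx):
    """0-1 loss: 1 if the prediction differs from the true value, else 0."""
    return 0 if y == fx else 1

def quadratic_loss(y, fx):
    """Quadratic (squared) loss: (Y - f(X))^2."""
    return (y - fx) ** 2

def absolute_loss(y, fx):
    """Absolute loss: |Y - f(X)|."""
    return abs(y - fx)

def log_loss(p_y_given_x):
    """Logarithmic loss: -log P(Y | X), where the argument is the probability
    the model assigns to the true value."""
    return -math.log(p_y_given_x)

print(zero_one_loss(1, 0), quadratic_loss(3, 1), absolute_loss(3, 5), log_loss(0.5))
```

The first three compare a predicted value with the true value directly; the last one scores a predicted probability, so it applies to probabilistic models.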

66. Briefly introduce logistic regression.

The purpose of logistic regression is to learn a 0/1 classification model from the features. The model takes a linear combination of the features as its argument. Because that argument ranges from negative infinity to positive infinity, the logistic function (also called the sigmoid function) is used to map it to (0,1), and the mapped value is taken to be the probability that y=1.

Hypothesis function:

$$h_\theta(x) = g(\theta^T x) = \frac{1}{1 + e^{-\theta^T x}}$$

where x is an n-dimensional feature vector and g is the logistic function. The graph of $g(z) = \frac{1}{1 + e^{-z}}$ is:

As you can see, it maps the whole real line to (0,1), and the hypothesis function gives the probability that the features belong to y=1.
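A minimal sketch of the prediction step follows. The weight vector `theta` and the feature vector `x` are hypothetical numbers chosen here, with the first feature fixed to 1 so that the first weight acts as a bias; no training is shown.

```python
import math

def predict_proba(theta, x):
    """P(y = 1 | x) = g(theta^T x), with g the logistic function."""
    z = sum(t * xi for t, xi in zip(theta, x))   # linear combination of the features
    return 1.0 / (1.0 + math.exp(-z))

theta = [0.5, -1.0, 2.0]   # hypothetical weights; theta[0] plays the role of a bias
x = [1.0, 2.0, 1.5]        # feature vector with a leading 1 for the bias term
p = predict_proba(theta, x)
label = 1 if p >= 0.5 else 0
print(p, label)
```

Here the linear combination is 0.5 - 2.0 + 3.0 = 1.5, which the logistic function maps to a probability of about 0.82, so the sample is assigned to class 1.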

67. I see you work in vision. Which CV frameworks are you familiar with, and how has CV developed over the last five years?

Answer analysis: https://mp.weixin.qq.com/s?__biz=MzI3MTA0MTk1MA==&mid=2651986617&idx=1&sn=fddebd0f2968d66b7f424d6a435c84af&scene=0#wechat_redirect

68. What is the frontier of deep learning in the field of vision?

@Yuanfeng, source of this answer: Frontier progress of deep learning in computer vision

https://zhuanlan.zhihu.com/p/24699780

69. What is the difference between HashMap and Hashtable?

The difference between HashMap and Hashtable

http://oznyang.iteye.com/blog/30690

70. In classification problems we often find that the numbers of positive and negative samples are unequal, for example 100,000 positive samples and only 10,000 negative samples. The most appropriate way to handle this is ( )

A. Repeat the negative samples 10 times to produce 100,000 samples, shuffle them, and train the classifier

B. Classify directly, so that the data is used as fully as possible

C. Randomly sample 10,000 of the 100,000 positive samples and train the classifier

D. Set the weight of each negative sample to 10 and of each positive sample to 1, and train with these weights

@Dr. Guan: Strictly speaking, each of these options has its own advantages and disadvantages and needs case-by-case analysis. The following article analyzes the pros and cons of the various methods well; interested readers can refer to it:

How to handle Imbalanced Classification Problems in machine learning?

https://www.analyticsvidhya.com/blog/2017/03/imbalanced-classification-problem/
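Options A, C and D of question 70 can be sketched in a few lines. This is only an illustration: the sample lists are small stand-ins for the 100,000 and 10,000 samples, and the helper names `oversample`, `undersample` and `class_weights` are made up here.

```python
import random

def oversample(minority, factor, rng):
    """Option A: repeat every minority sample `factor` times, then shuffle."""
    repeated = minority * factor
    rng.shuffle(repeated)
    return repeated

def undersample(majority, target_size, rng):
    """Option C: keep a random subset of the majority class."""
    return rng.sample(majority, target_size)

def class_weights(n_pos, n_neg):
    """Option D: weight each class inversely to its frequency."""
    return {"pos": 1.0, "neg": n_pos / n_neg}

rng = random.Random(0)
positives = [("pos", i) for i in range(1000)]   # stand-in for the 100,000 positives
negatives = [("neg", i) for i in range(100)]    # stand-in for the 10,000 negatives

over = oversample(negatives, 10, rng)
under = undersample(positives, len(negatives), rng)
weights = class_weights(len(positives), len(negatives))
print(len(over), len(under), weights)
```

Oversampling keeps all the data but repeats minority points, undersampling discards majority data, and weighting leaves the data untouched and changes the training objective instead; which trade-off is acceptable depends on the problem.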

71. Deep learning is a popular machine learning approach and involves a great deal of matrix multiplication. Suppose we need the product ABC of three dense matrices A, B and C, whose dimensions are m×n, n×p and p×q respectively, with m < n < p < q. Which of the following orders of computation is the most efficient? (A)

A. (AB)C

B. AC(B)

C. A(BC)

D. All orders are equally efficient

Correct answer: A

@BlackEyes_SGC: m*n*p < m*n*q and m*p*q < n*p*q, so (AB)C costs the least.
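The comparison in that answer can be checked numerically by counting scalar multiplications for each ordering. The concrete dimensions below are an arbitrary example satisfying m < n < p < q, and the function names are chosen here.

```python
def cost_ab_c(m, n, p, q):
    """(AB)C: AB costs m*n*p and yields an m x p matrix; times C costs m*p*q."""
    return m * n * p + m * p * q

def cost_a_bc(m, n, p, q):
    """A(BC): BC costs n*p*q and yields an n x q matrix; A times it costs m*n*q."""
    return n * p * q + m * n * q

m, n, p, q = 10, 20, 30, 40     # any values with m < n < p < q
print(cost_ab_c(m, n, p, q))    # 18000
print(cost_a_bc(m, n, p, q))    # 32000
```

Term by term, m*n*p < m*n*q and m*p*q < n*p*q, so (AB)C is cheaper for every choice of dimensions with m < n < p < q, not only for this example.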

72. Naive Bayes is a special kind of Bayes classifier. With feature variable X and class label C, one of its assumptions is: (C)

A. The prior probabilities P(C) of all classes are equal

B. A normal distribution with mean 0 and standard deviation sqrt(2)/2

C. The dimensions of the feature variable X are random variables that are conditionally independent given the class

D. P(X|C) is a Gaussian distribution

Correct answer: C

@BlackEyes_SGC: The condition for naive Bayes is that the variables are independent of one another given the class.

73. Which of the following statements about the support vector machine (SVM) is wrong? (C)

A. The L2 regularization term serves to maximize the classification margin, giving the classifier stronger generalization ability

B. The hinge loss function serves to minimize the empirical classification error

C. The classification margin is $1/\|w\|$, where $\|w\|$ is the norm of the vector

D. The smaller the parameter C, the larger the classification margin and the more misclassifications there are, tending towards underfitting

Correct answer: C

@BlackEyes_SGC:

A is correct. Consider why a regularization term is added. Imagine a perfect data set in which y>1 is the positive class, y<-1 is the negative class and the decision boundary is y=0. Add one positive-class noise sample at y=-30 and the decision boundary will be skewed considerably, the classification margin shrinks, and generalization ability drops. With the regularization term, tolerance to noisy samples is improved: in the example above the decision boundary is skewed less, the margin is larger, and generalization improves.

B is correct.

C is wrong. The margin should be $2/\|w\|$. The second half of the statement is true: the norm of a vector usually refers to the 2-norm.

D is correct. With a soft margin, the effect of C on the optimization problem is to restrict the range of the multipliers a from [0, +inf) to [0, C]. The smaller C is, the smaller a will be. Setting the derivative of the Lagrangian of the objective function to 0 gives $w = \sum_i a_i y_i x_i$; a smaller a makes w smaller, so the margin $2/\|w\|$ becomes larger.

74. In an HMM, if both the observation sequence and the state sequence that produced it are known, which of the following methods can be used directly for parameter estimation? (D)

A. EM algorithm

B. Viterbi algorithm

C. Forward-backward algorithm

D. Maximum likelihood estimation

Correct answer: D

@BlackEyes_SGC:

EM algorithm: learns the model parameters when only the observation sequence is available and there is no state sequence; this is the Baum-Welch algorithm

Viterbi algorithm: solves the HMM prediction (decoding) problem with dynamic programming; it is not parameter estimation

Forward-backward algorithm: used to compute probabilities

Maximum likelihood estimation: a supervised learning method for estimating the parameters when both the observation sequence and the corresponding state sequence are available

Note that when the model parameters are to be estimated from a given observation sequence and the corresponding state sequence, maximum likelihood estimation can be used. If only an observation sequence is given, with no corresponding state sequence, EM must be used, treating the state sequence as unobservable hidden data.
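When the states are observed, maximum likelihood estimation for an HMM reduces to counting and normalising. The sketch below assumes a single labelled sequence and made-up state and observation names; it estimates only the transition and emission probabilities (the initial distribution would need several sequences) and applies no smoothing.

```python
from collections import Counter, defaultdict

def estimate_hmm(states, observations):
    """Maximum likelihood estimates from one fully labelled sequence, by counting."""
    trans = defaultdict(Counter)   # trans[s][s_next]: count of transitions s -> s_next
    emit = defaultdict(Counter)    # emit[s][o]: count of observation o emitted in state s
    for s, o in zip(states, observations):
        emit[s][o] += 1
    for s, s_next in zip(states, states[1:]):
        trans[s][s_next] += 1

    def normalise(table):
        # Turn each row of counts into relative frequencies.
        return {s: {k: c / sum(row.values()) for k, c in row.items()}
                for s, row in table.items()}

    return normalise(trans), normalise(emit)

# Hypothetical labelled data, for illustration only.
states = ["Rainy", "Rainy", "Sunny", "Sunny", "Rainy"]
observations = ["umbrella", "umbrella", "walk", "umbrella", "umbrella"]
A, B = estimate_hmm(states, observations)
print(A)
print(B)
```

With no state labels these counts are unavailable, which is why the answer falls back on EM (Baum-Welch) in that case.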

75. Suppose a student, while using a Naive Bayes (NB) classifier, accidentally duplicates two dimensions of the training data. Which statements about NB are correct? (BD)

A. The decisive role of the duplicated feature in the model is strengthened

B. The accuracy of the model is lower than that of the model without the duplicated feature

C. If all features are duplicated, the model's predictions are the same as those of the model without duplication

D. When two feature columns are highly correlated, the conclusions drawn for two identical columns cannot be used to analyze the problem

E. NB can be used for least squares regression

F. None of the above is true

Correct answer: BD

@BlackEyes_SGC: The core of NB is the assumption that all components of the feature vector are independent of one another. In Bayesian theory there is an important conditional independence assumption: all features are assumed to be mutually independent, so that the joint probability can be factorized.

76. Which of the following methods cannot be used directly to classify text? (A)

A. Kmeans

B. Decision tree

C. Support vector machine

D. KNN

Correct answer: A. Classification is different from clustering.

@BlackEyes_SGC: A: Kmeans is a clustering method, a typical unsupervised learning method. Classification is supervised learning, and B, C and D are all common classification methods.

77. Given the covariance matrix P of a set of data, which of the following statements about principal components is wrong? (C)

A. The optimality criterion of principal component analysis is: when a set of data is decomposed on a set of orthogonal bases and only the same number of components is kept, the truncation error measured by mean squared error is the smallest

B. After principal component decomposition, the covariance matrix becomes a diagonal matrix

C. Principal component analysis is the K-L transform

D. The principal components are obtained from the eigenvalues of the covariance matrix

Correct answer: C

@BlackEyes_SGC: The K-L transform and the PCA transform are different concepts. The transformation matrix of PCA is the covariance matrix, whereas the transformation matrix of the K-L transform can be of many kinds (second-order moment matrix, covariance matrix, total within-class scatter matrix, and so on). When the K-L transformation matrix is the covariance matrix, it is equivalent to PCA.

78. What is the complexity of Kmeans?

Time complexity: O(tKmn), where t is the number of iterations, K the number of clusters, m the number of records and n the number of dimensions.

Space complexity: O((m+K)n), where K is the number of clusters, m the number of records and n the number of dimensions.

For details, see: A deep understanding of K-means in machine learning, its difference from the KNN algorithm, and code implementation

http://blog.csdn.net/sinat_35512245/article/details/55051306

79. Which statement about Logit regression and SVM is incorrect? (A)

A. Logit regression is essentially maximum likelihood estimation of the weights from the samples. The posterior probability is proportional to the product of the prior probability and the likelihood function; Logit regression only maximizes the likelihood function. It does not maximize the posterior probability, let alone minimize it. A is wrong.

B. The output of Logit regression is the probability that the sample belongs to the positive class, so probabilities can be computed. Correct.

C. The goal of SVM is to find the hyperplane that separates the training data as well as possible with the largest classification margin, which amounts to structural risk minimization.

D. SVM can control the complexity of the model through the regularization coefficient and so avoid overfitting.

@BlackEyes_SGC: The statements being judged are, in order: (A) the objective of Logit regression is to minimize the posterior probability; (B) Logit regression can be used to predict the probability of an event; (C) the goal of SVM is structural risk minimization; (D) SVM can effectively avoid overfitting.

80. The input image is 200×200. It passes in turn through a convolution layer (kernel size 5×5, padding 1, stride 2), a pooling layer (kernel size 3×3, padding 0, stride 1) and another convolution layer (kernel size 3×3, padding 1, stride 1). The size of the output feature map is: ( )

Correct answer: 97

@BlackEyes_SGC: Sizes that do not divide evenly come up only in GoogLeNet. Convolution rounds down and pooling rounds up.

For this question, (200-5+2*1)/2+1 = 99.5, which is rounded down to 99

(99-3)/1+1 = 97

(97-3+2*1)/1+1 = 97

If you have studied network architectures you will notice that with stride 1, a kernel of 3 with padding 1, or a kernel of 5 with padding 2, leaves the size unchanged, as can be seen at a glance. Sizes throughout GoogLeNet are computed in the same way.
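The three size calculations can be wrapped in two small helpers. This sketch follows the rounding convention stated in the answer (convolution rounds down, pooling rounds up); that convention is framework-dependent, and the function names are chosen here for illustration.

```python
import math

def conv_out(size, kernel, padding, stride):
    """Output size of a convolution layer, rounding down."""
    return math.floor((size - kernel + 2 * padding) / stride) + 1

def pool_out(size, kernel, padding, stride):
    """Output size of a pooling layer, rounding up."""
    return math.ceil((size - kernel + 2 * padding) / stride) + 1

s1 = conv_out(200, kernel=5, padding=1, stride=2)   # 99
s2 = pool_out(s1, kernel=3, padding=0, stride=1)    # 97
s3 = conv_out(s2, kernel=3, padding=1, stride=1)    # 97
print(s1, s2, s3)
```

The last line also shows the "size unchanged" rule: with stride 1, kernel 3 and padding 1, the output equals the input.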

81. The main factors that affect the result of a clustering algorithm are (BCD)

A. The quality of the given class samples;

B. Classification criteria;

C. Feature selection;

D. Pattern similarity measure

82. In pattern recognition, the advantages of the Mahalanobis distance over the Euclidean distance are (CD)

A. Translation invariance;

B. Rotation invariance;

C. Scale invariance;

D. It takes the distribution of the patterns into account

83. The main factors that affect the basic K-means algorithm are (ABD)

A. Sample input order;

B. Pattern similarity measure;

C. Clustering criteria;

D. The selection of the initial class centers

84. In statistical pattern classification, when the prior probabilities are unknown, one can use (BD)

A. The minimum loss criterion;

B. The minimax loss criterion;

C. The minimum probability of error criterion;

D. The N-P (Neyman-Pearson) decision

85. If the correlation coefficient of the feature vectors is used as the pattern similarity measure, the main factors that affect the result of a clustering algorithm are (BC)

A. The quality of the given class samples;

B. Classification criteria;

C. Feature selection;

D. Units of measurement

86. The Euclidean distance has (AB); the Mahalanobis distance has (ABCD).

A. Translation invariance;

B. Rotation invariance;

C. Scale invariance;

D. Independence from units of measurement
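The scale invariance claimed for the Mahalanobis distance in questions 82 and 86 can be checked on a small two-dimensional example. This is a sketch under assumptions made here: the five sample points are invented, the covariance is estimated from those same points, and the 2x2 inverse is written out by hand to avoid external libraries.

```python
import math

def covariance_2d(points):
    """Sample covariance (sxx, sxy, syy) of a list of 2-D points."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    return sxx, sxy, syy

def mahalanobis_2d(u, v, cov):
    """sqrt(d^T S^{-1} d) with the inverse of the 2x2 covariance written explicitly."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy * sxy
    dx, dy = u[0] - v[0], u[1] - v[1]
    q = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return math.sqrt(q)

def euclidean(u, v):
    return math.hypot(u[0] - v[0], u[1] - v[1])

points = [(1.0, 2.0), (2.0, 1.0), (3.0, 5.0), (4.0, 3.0), (5.0, 6.0)]
scaled = [(x * 1000.0, y) for x, y in points]   # change the unit of the first axis

d_m = mahalanobis_2d(points[0], points[4], covariance_2d(points))
d_m_scaled = mahalanobis_2d(scaled[0], scaled[4], covariance_2d(scaled))
d_e = euclidean(points[0], points[4])
d_e_scaled = euclidean(scaled[0], scaled[4])
print(d_m, d_m_scaled)   # equal up to rounding
print(d_e, d_e_scaled)   # very different
```

Changing the unit of one axis rescales the covariance along with the data, so the Mahalanobis distance is unchanged, while the Euclidean distance is dominated by whichever axis has the larger numbers.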

87. What experience do you have tuning deep learning models (RNN, CNN)?

Answer analysis, from Zhihu

https://www.zhihu.com/question/41631631

88. Briefly explain the principle of RNN.

When we were in the third year of senior high school preparing for the college entrance examination, our knowledge consisted of what we had learned up to the second year plus what we learned in the third year. In other words, our knowledge builds on what came before: there is memory. It is like seeing the movie subtitle "I am a ..." and naturally completing it as "I am Chinese".

89. What is an RNN?

@The sky of a bird, source of this answer:

An introduction to recurrent neural networks (RNN, Recurrent Neural Networks)

http://blog.csdn.net/heyongluoyao8/article/details/48636251

90. How is an RNN built up step by step from a single-layer network?

@He Zhiyuan, source of this answer:

A complete illustrated guide to RNN, RNN variants, Seq2Seq and the attention mechanism

https://zhuanlan.zhihu.com/p/28054589

101. Using deep learning (CNN, RNN, Attention) to solve large-scale text classification problems.

Using deep learning (CNN, RNN, Attention) to solve large-scale text classification problems: review and practice

https://zhuanlan.zhihu.com/p/25928551

102. How do you solve the exploding and vanishing gradient problems of RNNs?

Deep learning and natural language processing (7): Stanford cs224d, language models, RNN, LSTM and GRU

http://blog.csdn.net/han_xiaoyang/article/details/51932536

103. How do you improve the performance of deep learning?

Machine learning series (10): How to improve the performance of deep learning (and machine learning)

http://blog.csdn.net/han_xiaoyang/article/details/52654879

104. What are the differences between RNN, LSTM and GRU?

@I love big bubbles, source of this answer: Interview and written test notes 3: preparing for deep learning and machine learning interview questions (a must-read)

http://blog.csdn.net/woaidapaopao/article/details/77806273

105. When machine learning performance hits a bottleneck, how would you optimize?

You can try four directions: the data, the choice of algorithm, tuning the algorithm's parameters, and model ensembling. Of course, how far you get also depends on your experience.

Here is a reference: Machine learning series (20): a cheat sheet for improving machine learning performance

http://blog.csdn.net/han_xiaoyang/article/details/53453145

106. What machine learning projects have you done? For example, how would you build a recommendation system from scratch?

An open class on recommendation systems: http://www.julyedu.com/video/play/18/148. In addition, here is another recommended course: Machine learning project class [10 sessions of pure project walkthroughs, 100% hands-on](https://www.julyedu.com/course/getDetail/48).

107. What kinds of data sets are not suitable for deep learning?

@Abstract monkey, source:

Zhihu answer

https://www.zhihu.com/question/41233373

108. How are generalized linear models applied in deep learning?

@Xu Han, source: Zhihu answer

https://www.zhihu.com/question/41233373/answer/145404190

109. What theoretical knowledge should you have when preparing for a machine learning interview?

@Mu Wen

Zhihu answer

https://www.zhihu.com/question/62482926

The answer covers three aspects:

【Theoretical foundation】 This mainly examines the candidate's understanding of machine learning models, with questions chosen selectively. (If the candidate's research direction is something I do not know but am interested in, I am very happy, because I can take the chance to learn something.) The questions here are fairly detailed and are all things I have thought about deeply myself (rote memorization is useless, since I can follow up on any point), and everything is expected to be worked through by hand.

Overfitting and underfitting (I give a few examples for the candidate to judge; follow-ups: the purpose of cross validation, hyperparameter search methods, early stopping); how L1 and L2 regularization are done; the idea behind regularization (follow-ups: BatchNorm, covariate shift); why L1 regularization produces sparse solutions; why logistic regression is a linear model (follow-ups: how LR handles data that is not separable in low dimensions; LR, naive Bayes and unsupervised learning from the graphical model point of view); the connections and differences between the parameter estimation methods MLE, MAP and Bayesian estimation; a brief account of the support vectors of SVM (follow-ups: the KKT conditions, why, an intuitive understanding of kernels); whether GBDT and random forests can be parallelized (follow-ups: bagging and boosting); examples of generative and discriminative models; command of clustering methods (follow-ups: the EM view of Kmeans, understanding of spectral clustering and graph cut); the difference between gradient descent methods and Newton-type methods (follow-ups: the ideas behind Adam and L-BFGS); the idea of semi-supervised learning (follow-ups: how specific semi-supervised algorithms use unlabeled data, semi-supervised learning from the MAP point of view); evaluation metrics for common classification models (follow-ups: cross entropy, how to draw an ROC curve, the physical meaning of AUC, class-imbalanced samples)

a. The convolution operation and the role of the convolution kernel in CNNs; the role of max pooling; the connection between convolutional layers and fully connected layers; the concepts of exploding and vanishing gradients (follow-ups: weight initialization methods for neural networks and why they mitigate exploding and vanishing gradients, what solutions CNNs have, how LSTM solves the problem, how to clip gradients, how dropout is used in RNN-type networks, how dropout prevents overfitting); why convolution can be applied to images, speech and sentences (follow-up: what a channel means for different types of data source)

b. If the candidate works on what I do, NLP and recommendation systems, I go on to ask about: the relationship between CRF, logistic regression and the maximum entropy model; CRF optimization methods; the connection between CRF and MRF; the relationship between HMM and CRF (follow-ups: the connection between naive Bayes and HMM, the principle of LSTM+CRF for sequence labeling, CRF node and edge feature functions, the empirical distribution in CRF); several common word embedding methods and their principles (follow-ups: language models, the perplexity metric, similarities and differences between word2vec and GloVe); topic models; why CNNs can be used for text classification; examples of syntactic and semantic problems; common sentence embedding methods; the attention mechanism (follow-ups: the different variants of attention, why it was introduced, the principle of seq2seq); evaluation metrics for sequence labeling; semantic disambiguation; common word-related features; factorization machines; common matrix factorization models; how to use a classification model for product recommendation (including data set splitting, model validation and so on); sequence learning; the wide&deep model (follow-up: why wide and why deep)

【Coding ability】 This mainly examines the ability to implement algorithms and optimize code. I usually look at the candidate's GitHub repo first (if it is on the resume) to see the code style and architectural ability (when I meet a real expert I take the chance to learn something). If there is no GitHub, I avoid typical written-test questions and instead ask small algorithm problems that I have abstracted from practical problems, such as:

a. Given a matrix of node scores and a matrix of edge scores, find the path with the maximum total score (this comes from the Viterbi algorithm and is essentially dynamic programming). At the very least give the idea and pseudocode (follow-up: forward propagation and backpropagation)

b. Given an array whose elements are pairs (parent node, child node) representing a directed acyclic graph, turn it in the best way into a new ordered array whose elements are all the nodes of the graph, ordered so that every parent node comes before its child nodes (this comes from a small trick in implementing Bayesian networks)
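Both coding questions have short standard solutions, sketched below. These are illustrations, not the interviewer's reference answers: the data layout (`node_scores[t][j]` for the score of state j at step t, `edge_scores[i][j]` for moving from state i to state j), the function names and the sample inputs are all assumptions made here.

```python
from collections import defaultdict, deque

def max_path_score(node_scores, edge_scores):
    """Question a: Viterbi-style dynamic programming for the best total score."""
    best = list(node_scores[0])          # best[j]: best score of a path ending in state j
    for t in range(1, len(node_scores)):
        best = [max(best[i] + edge_scores[i][j] for i in range(len(best)))
                + node_scores[t][j]
                for j in range(len(node_scores[t]))]
    return max(best)

def topological_order(pairs):
    """Question b: Kahn's algorithm on (parent, child) pairs of a DAG."""
    children = defaultdict(list)
    indegree = defaultdict(int)
    for parent, child in pairs:
        children[parent].append(child)
        indegree[child] += 1
        indegree.setdefault(parent, 0)   # make sure root nodes are registered too
    queue = deque(n for n, d in indegree.items() if d == 0)
    order = []
    while queue:
        node = queue.popleft()
        order.append(node)
        for c in children[node]:
            indegree[c] -= 1
            if indegree[c] == 0:         # all parents of c have been emitted
                queue.append(c)
    if len(order) != len(indegree):
        raise ValueError("graph has a cycle")
    return order

score = max_path_score([[1, 2], [3, 1], [0, 4]], [[0, 1], [2, 0]])
pairs = [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d")]
order = topological_order(pairs)
print(score)   # 12
print(order)   # ['a', 'b', 'c', 'd']
```

The dynamic program costs O(T * S^2) for T steps and S states; the topological sort is linear in the number of nodes and edges.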

【Project ability】 This mainly examines the candidate's approach to solving practical problems and the ability to work through pitfalls. This part tests the interviewer's skill: you need to find the meaningful points in the candidate's exaggerated descriptions and dig deeper step by step. Much of the dirty work (data preprocessing, text cleaning, parameter tuning experience, algorithm complexity optimization, bad case analysis, modifying the loss function and so on) is also uncovered during this digging

110. What is the difference between standardization and normalization?

Simply put, standardization processes the data by columns of the feature matrix: using the z-score, it converts the feature values of the samples to a common scale. Normalization processes the data by rows of the feature matrix: its purpose is to give sample vectors a uniform standard when computing similarity by dot product or other kernel functions, in other words to turn them into "unit vectors". The L2 normalization formula is:

$$x' = \frac{x}{\sqrt{\sum_{j} x_j^2}}$$
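The column-wise versus row-wise distinction can be sketched in plain Python. The small matrix `X` and the function names are made up for illustration, and the population standard deviation is used for the z-score; a column with zero variance would need special handling that is omitted here.

```python
import math

def standardize_columns(X):
    """z-score each feature (column): subtract the column mean, divide by its std."""
    cols = list(zip(*X))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c))
            for c, m in zip(cols, means)]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]

def normalize_rows(X):
    """Scale each sample (row) to unit L2 norm."""
    out = []
    for row in X:
        norm = math.sqrt(sum(v * v for v in row))
        out.append([v / norm for v in row])
    return out

X = [[3.0, 4.0], [6.0, 8.0], [0.0, 5.0]]
Z = standardize_columns(X)
U = normalize_rows(X)
print(Z)
print(U)   # first row becomes [0.6, 0.8]
```

After standardization every column has mean 0 and unit variance; after normalization every row has length 1, so the first two rows, which point in the same direction, become identical.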

Handling missing values in feature vectors:

1. Many missing values: discard the feature directly, otherwise it may introduce considerable noise and hurt the results.

2. Few missing values (less than about 10% missing): there are several ways to handle them:

1) Treat NaN as a feature value in its own right, for example by representing it with 0;

2) Fill in with the mean;

3) Predict the missing values with an algorithm such as random forest.
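As a rough sketch of rules 1 and 2 above, the function below drops columns with too many missing values and fills the rest with the column mean. The name `impute_column_means` and the default `max_missing_ratio=0.10` are assumptions chosen to mirror the 10% figure in the text.

```python
import numpy as np


def impute_column_means(X, max_missing_ratio=0.10):
    """Drop columns with too many NaNs, mean-fill the remaining ones.

    Returns the filled matrix and a boolean mask of the columns that were kept.
    """
    X = np.asarray(X, dtype=float)
    missing = np.isnan(X)
    # Rule 1: a feature with too many missing values is discarded.
    keep = missing.mean(axis=0) <= max_missing_ratio
    X_kept = X[:, keep]
    # Rule 2: remaining gaps are filled with the mean of the observed values.
    col_means = np.nanmean(X_kept, axis=0)
    filled = np.where(np.isnan(X_kept), col_means, X_kept)
    return filled, keep
```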

111. How do random forests handle missing values?

Method 1 (na.roughfix) is simple and crude: within the training set, for data of the same class, a missing categorical variable is filled with the mode and a missing continuous variable is filled with the median.

Method 2 (rfImpute) is computationally expensive, and whether it is better than method 1 is hard to say. First fill the missing values with na.roughfix, then build the forest and compute the proximity matrix. Then go back to the missing values: for a categorical variable, vote among the non-missing observations using their proximities as weights; for a continuous variable, fill in the proximity-weighted average of the non-missing values. Iterate this 4-6 times. The idea behind this imputation is somewhat similar to KNN.

112. How do random forests assess feature importance?

There are two ways to measure variable importance, Decrease GINI and Decrease Accuracy:

1) Decrease GINI: for regression problems, argmax(Var - VarLeft - VarRight) is used directly as the criterion, that is, the variance Var of the training set at the current node minus the variance VarLeft of the left child and the variance VarRight of the right child.

2) Decrease Accuracy: for a tree Tb(x), its OOB samples give a test error, error 1. Then randomly permute column j of the OOB samples, leaving the other columns unchanged, and measure the error again to get error 2. The difference error 2 - error 1 characterizes the importance of variable j. The basic idea is that if variable j is important enough, permuting it will greatly increase the test error; conversely, if permuting it does not increase the test error, the variable is not that important.
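The Decrease Accuracy idea can be sketched without a forest at all. In the illustration below, a least-squares linear model stands in for the tree and a held-out half of the data stands in for the OOB samples; both substitutions, and the name `permutation_importance`, are assumptions made to keep the example short.

```python
import numpy as np


def permutation_importance(predict, X, y, rng):
    """Increase in mean squared error when each column is shuffled in turn."""
    base_error = np.mean((predict(X) - y) ** 2)            # "error 1"
    importances = []
    for j in range(X.shape[1]):
        X_perm = X.copy()
        # Shuffle only column j; all other columns stay unchanged.
        X_perm[:, j] = rng.permutation(X_perm[:, j])
        perm_error = np.mean((predict(X_perm) - y) ** 2)   # "error 2"
        importances.append(perm_error - base_error)
    return np.array(importances)


rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
y = 3.0 * X[:, 0] + 0.1 * rng.normal(size=500)   # only column 0 matters

# Fit on the first half, evaluate on the second half (stand-in for OOB).
w, *_ = np.linalg.lstsq(X[:250], y[:250], rcond=None)
imp = permutation_importance(lambda A: A @ w, X[250:], y[250:], rng)
```

Here `imp[0]` comes out far larger than the other two entries, matching the intuition that shuffling an important variable hurts the error most.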

113. How can K-means be optimized?

Use a kd-tree or ball tree: build all the observations into a kd-tree. Previously each cluster center had to compute its distance to every observation in turn; with the kd-tree, each cluster center only needs to compute distances within a nearby local region.

114. How should the initial cluster centers be chosen in K-means?

The basic idea behind how the K-means++ algorithm chooses its initial seeds: the initial cluster centers should be as far apart from one another as possible.

1. Randomly select one point from the input data set as the first cluster center.

2. For every point x in the data set, compute its distance D(x) to the nearest cluster center already chosen.

3. Select a new data point as the next cluster center, such that points with larger D(x) have a higher probability of being selected.

4. Repeat steps 2 and 3 until k cluster centers have been selected.

5. Run the standard k-means algorithm with these k initial cluster centers.
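Steps 1 to 4 can be sketched as follows. The text only says that a larger D(x) means a higher selection probability; this sketch assumes the usual K-means++ weighting, where the probability is proportional to D(x) squared. The function name `kmeans_pp_init` is hypothetical, and the sketch assumes the data points are not all identical.

```python
import numpy as np


def kmeans_pp_init(X, k, rng):
    """Pick k initial cluster centers from the rows of X, K-means++ style."""
    n = X.shape[0]
    # Step 1: the first center is a uniformly random data point.
    centers = [X[rng.integers(n)]]
    # Step 2: squared distance from every point to its nearest chosen center.
    d2 = np.sum((X - centers[0]) ** 2, axis=1)
    for _ in range(1, k):
        # Step 3: sample the next center with probability proportional to D(x)^2.
        idx = rng.choice(n, p=d2 / d2.sum())
        centers.append(X[idx])
        # Step 4: refresh the nearest-center distances and repeat.
        d2 = np.minimum(d2, np.sum((X - X[idx]) ** 2, axis=1))
    return np.array(centers)
```

The returned array can then be passed to a standard k-means implementation as its starting centers (step 5).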

115. Explain the concept of duality.

An optimization problem can be studied from two angles: the primal problem and the dual problem. In general (for a minimization problem), the dual problem gives a lower bound on the optimal value of the primal problem; when strong duality holds, the optimal value of the primal problem can be obtained from the dual problem. The dual problem is a convex optimization problem, so it can be solved well. In SVM, the primal problem is converted into the dual problem to be solved, which in turn leads to the idea of kernel functions.

116. How do you select features?

Feature selection is an important data preprocessing step, for two main reasons: first, it reduces the number of features (dimensionality reduction), which improves generalization and reduces overfitting; second, it improves understanding of the features and their values.

Common feature selection methods:

1. Remove features with small variance.

2. Regularization. L1 regularization produces sparse models. L2 regularization behaves more stably, because useful features tend to get non-zero coefficients.

3. Random forests. For classification, Gini impurity or information gain is usually used; for regression, variance or least-squares fit is usually used. This generally needs little in the way of feature engineering, parameter tuning and other tedious steps. It has two main problems: first, an important feature may receive a very low score (the correlated-features problem); second, the method favors features with more categories (the bias problem).

4. Stability selection. This is a newer method that combines subsampling with a selection algorithm, where the selection algorithm can be regression, SVM or something similar. The main idea is to run the feature selection algorithm on different subsets of the data and of the features, repeat this many times, and finally aggregate the selection results, for example by counting how often a feature is judged important (the number of times it is selected as important divided by the number of times a subset containing it was tested). Ideally, important features score close to 100%, somewhat weaker features get a non-zero score, and the most useless features score close to 0.

117. Data preprocessing .

1. Missing value , Fill in missing values fillna:

i. discrete :None,

ii. continuity : mean value .

iii. Too many missing values , Remove the column directly

2. Continuous value : discretization . Some models ( Such as the decision tree ) Discrete values are required

3. Binarization of quantitative features . The core is to set a threshold , Values greater than the threshold are assigned as 1, Values less than or equal to the threshold are assigned as 0. Such as image manipulation

4. Pearson correlation coefficient , Remove highly correlated columns
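Items 3 and 4 above can be sketched in a few lines of NumPy. The helper names and the 0.95 correlation cutoff are illustrative assumptions; the text does not say how high "highly correlated" is.

```python
import numpy as np


def binarize(X, threshold):
    """1 where the value is greater than the threshold, 0 otherwise."""
    return (np.asarray(X) > threshold).astype(int)


def drop_correlated_columns(X, max_abs_corr=0.95):
    """Greedily keep a column only if it is not highly correlated
    (by absolute Pearson coefficient) with any column kept so far."""
    corr = np.corrcoef(X, rowvar=False)
    keep = []
    for j in range(X.shape[1]):
        if all(abs(corr[j, i]) <= max_abs_corr for i in keep):
            keep.append(j)
    return X[:, keep], keep
```

The greedy pass keeps the first column of each correlated group, so the result depends on column order; that is a simplification of this sketch.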

118. Let's talk about feature engineering .

119. What data processing and feature engineering techniques do you know?

120. Please compare the three activation functions Sigmoid, Tanh and ReLU.

121. What are the disadvantages of the three activation functions Sigmoid, Tanh and ReLU? Are there improved activation functions?

@I love big bubbles, source: Interview and written exam notes 3: preparing for deep learning and machine learning interview questions (must know)

http://blog.csdn.net/woaidapaopao/article/details/77806273

122. How should we understand that decision trees and xgboost can handle missing values, while some models (such as svm) are sensitive to them?

See the Zhihu answer:

https://www.zhihu.com/question/58230411

123. Why introduce nonlinear activation functions?

@Begin Again, source: Zhihu answer

https://www.zhihu.com/question/29021768

If you don't use an activation function (equivalently, the activation function is f(x) = x), the output of each layer is a linear function of the previous layer's input. It is easy to verify that, no matter how many layers the network has, the output is a linear combination of the inputs, which is equivalent to having no hidden layer at all: this is the original perceptron.

For this reason, a nonlinear function is introduced as the activation function, so that deep neural networks become meaningful (the output is no longer a linear combination of the inputs and can approximate arbitrary functions). The earliest choices were the Sigmoid or Tanh function, whose output is bounded and therefore easy to use as the next layer's input (some people also give a biological interpretation).
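The "stacked linear layers collapse into one" argument is easy to check numerically. The weights below are made-up values chosen only for illustration.

```python
import numpy as np

# Made-up weights for a 2 -> 3 -> 1 network without biases.
W1 = np.array([[1.0, -1.0],
               [0.5, 2.0],
               [-1.0, 1.0]])
W2 = np.array([[1.0, 1.0, 1.0]])
x = np.array([1.0, 2.0])

# Two layers with identity activation f(x) = x ...
two_layer_linear = W2 @ (W1 @ x)
# ... equal one linear layer whose weight matrix is the product W2 @ W1.
single_layer = (W2 @ W1) @ x

# With ReLU between the layers the network is no longer a single linear map.
two_layer_relu = W2 @ np.maximum(W1 @ x, 0.0)
```

For these values the two linear versions both give 4.5, while the ReLU version gives 5.5 because the negative hidden unit is zeroed out.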

124. Why is ReLU better than the Tanh and Sigmoid functions in artificial neural networks?

@Begin Again, source: Zhihu answer

https://www.zhihu.com/question/29021768

125. Why does the LSTM model use both Sigmoid and Tanh activation functions?

Source of this analysis: Zhihu answer

https://www.zhihu.com/question/46197687

@beanfrog: The two serve different purposes. sigmoid is used on the various gates to produce a value between 0 and 1, and for that sigmoid is generally the most direct choice. tanh is used on the state and the output to transform the data, and other activation functions could be used there.

@hhhh: See also section 4.1 of A Critical Review of Recurrent Neural Networks for Sequence Learning, which says that both tanh functions can be replaced with something else.

126. Measure the quality of classifiers .

@ I love big bubbles , source : Analysis of the answer

http://blog.csdn.net/woaidapaopao/article/details/77806273

The first thing to know here is the four outcomes TP, FN (actually positive but judged negative), FP (actually negative but judged positive) and TN (you can draw a table).

Several common metrics:

Precision: precision = TP / (TP + FP) = TP / ~P (~P is the number of samples predicted positive)

Recall: recall = TP / (TP + FN) = TP / P

F1 score: 2 / F1 = 1 / recall + 1 / precision

ROC curve: ROC space is the plane defined by a two-dimensional coordinate system with the false positive rate (FPR) on the X axis and the true positive rate (TPR) on the Y axis. The true positive rate is TPR = TP / P = recall, and the false positive rate is FPR = FP / N.
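The formulas above can be sketched directly from the four counts. The function name `classification_metrics` is hypothetical, labels are assumed to be 0/1, and the sketch assumes each denominator is non-zero.

```python
def classification_metrics(y_true, y_pred):
    """Precision, recall, F1 and FPR for binary 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    precision = tp / (tp + fp)
    recall = tp / (tp + fn)            # also the true positive rate (TPR)
    # Harmonic mean, equivalent to 2 / F1 = 1 / recall + 1 / precision.
    f1 = 2 * precision * recall / (precision + recall)
    fpr = fp / (fp + tn)               # false positive rate
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}
```

A single (FPR, TPR) pair computed this way is one point in ROC space; sweeping the decision threshold of a scoring classifier traces out the full curve.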

127. What is the physical meaning of AUC in machine learning and statistics?

For details, see the Zhihu question "How should AUC in machine learning and statistics be understood?":

https://www.zhihu.com/question/39840928

128. Looking at the gain: do larger alpha and gamma make the gain smaller?

@AntZ: XGBoost's criterion for finding a split point is to maximize the gain. The traditional greedy method of enumerating every possible split point of every feature is inefficient, so XGBoost implements an approximate algorithm. The general idea is to propose a set of candidate split points by percentiles, then compute the gain for each candidate and pick the one with the maximum gain. The formula has four terms and can be adjusted through the regularization parameters (lambda is the coefficient of the sum of squared leaf weights, gamma is the coefficient of the number of leaves):

Gain = 1/2 * [ G_L^2 / (H_L + lambda) + G_R^2 / (H_R + lambda) - (G_L + G_R)^2 / (H_L + H_R + lambda) ] - gamma

Here G and H are the sums of the first- and second-order gradients of the loss over the samples in a node, with subscripts L and R for the left and right children. The first term is the score of the left child after the split, the second is that of the right child, the third is the score of the node without splitting, and the last term is the complexity cost of introducing one more leaf.

It can be seen from the formula that the larger gamma is, the smaller the gain; as lambda grows, the gain may become either smaller or larger.
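The effect of gamma and lambda can be checked numerically with the split gain from the XGBoost paper, Gain = 1/2 * [G_L^2/(H_L+lambda) + G_R^2/(H_R+lambda) - (G_L+G_R)^2/(H_L+H_R+lambda)] - gamma. The function below is a plain re-implementation of that formula for illustration, not XGBoost's own code, and the gradient sums used in the usage note are made up.

```python
def split_gain(g_left, h_left, g_right, h_right, lam, gamma):
    """Gain of a split given gradient sums G and Hessian sums H per child."""

    def score(g, h):
        # Structure score of one leaf, regularized by lambda.
        return g * g / (h + lam)

    return 0.5 * (score(g_left, h_left)
                  + score(g_right, h_right)
                  - score(g_left + g_right, h_left + h_right)) - gamma
```

Raising gamma by 1 always lowers the gain by exactly 1. Lambda is less predictable: with `(G_L, H_L, G_R, H_R) = (1, 1, -1, 1)` the gain falls from 1.0 to 0.5 as lambda goes from 0 to 1, while with `(1, 1, 100, 100)` the (negative) gain rises as lambda goes from 1 to 1000.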

The original question asks about alpha rather than lambda. The paper does not mention alpha, although the XGBoost implementation has this parameter. The answer above is based on my understanding of the paper; here is what I found on the topic:

How to tune parameters for an XGBoost model: https://zhidao.baidu.com/question/2121727290086699747.html?fr=iks&word=xgboost%20lamda&ie=gbk

129. What causes vanishing gradients? Derive it.

@Xu Han: When training a neural network, the weights of the neurons are adjusted so that the network output gets as close as possible to the labels, reducing the error. Training commonly uses the BP algorithm, whose core idea is to compute the loss between the output and the label, then compute its gradient with respect to each neuron's weights and update the weights iteratively.

Vanishing gradients make weight updates slow and the model harder to train. One cause is that many activation functions squash their output into a small range, so that over the large regions at both ends of the activation function's domain the gradient is close to 0 and learning stalls.
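Sigmoid is a convenient example of this squashing. The sketch below uses the standard identity sigmoid'(z) = sigmoid(z) * (1 - sigmoid(z)); the helper names are illustrative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_grad(z):
    """Derivative of sigmoid: s * (1 - s), which peaks at 0.25 when z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)
```

The derivative is 0.25 at z = 0 and already below 1e-4 at z = 10, so a unit operating far from 0 passes almost no gradient back.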

130. What are vanishing gradients and exploding gradients?

@Han Xiaoyang: The chain rule in backpropagation produces a product of many factors. If many of the factors are small and tend to 0, the result becomes very small (vanishing gradient); if the factors are large, the result may become very large (exploding gradient).

@A bike: Vanishing and exploding gradients in neural network training https://zhuanlan.zhihu.com/p/25631496

131. How can vanishing and exploding gradients be addressed?

(1) Vanishing gradient:

According to the chain rule, if the partial derivative of each layer's neurons with respect to the previous layer's output, multiplied by the weight, is less than 1, then even if that value is 0.99, after propagating through enough layers the partial derivative of the error with respect to the input layer tends to 0. Using the ReLU activation function can effectively alleviate vanishing gradients.

(2) Exploding gradient:

According to the chain rule, if the partial derivative of each layer's neurons with respect to the previous layer's output, multiplied by the weight, is greater than 1, then after propagating through enough layers the partial derivative of the error with respect to the input layer tends to infinity. The choice of activation function can also help mitigate this.
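The arithmetic behind both cases is just a repeated product. The sketch below makes the simplifying assumption that every layer contributes the same factor; `backprop_scale` is a hypothetical helper name.

```python
def backprop_scale(per_layer_factor, num_layers):
    """Gradient scale after num_layers layers that each multiply by the
    same factor (a simplifying assumption for illustration)."""
    return per_layer_factor ** num_layers
```

With 1000 layers, a factor of 0.99 shrinks the gradient to below 1e-4 of its original size, while a factor of 1.01 blows it up by more than 1e4.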

132. Back propagation Backpropagation.