Detailed explanation of NIN network

2022-07-02 08:30:00 【Red carp and green donkey】

1 Model introduction

Network In Network (NIN) was proposed by Min Lin et al. in 2014 and achieved the best results of its time on the CIFAR-10 and CIFAR-100 classification tasks. Its network structure is made up of three multilayer perceptron convolution layers. The paper "Network In Network" was published at ICLR 2014. NIN examines the design of the convolution kernel in convolutional neural networks from a new perspective: it replaces the linear mapping of a plain convolution with a small sub-network. This form of network structure inspired more complex convolutional neural network designs, and GoogLeNet's Inception structure is derived from this idea.

2 MLPConv

The authors argue that the linear filter (convolution kernel) used in a traditional CNN is a generalized linear model (GLM) over the local receptive field. Using a CNN for feature extraction therefore implicitly assumes that the features are linearly separable, whereas real problems are often not linearly separable. A CNN usually produces higher-level feature representations by adding more convolution filters. The authors observe that, besides adding convolutional layers as before, one can also redesign the convolutional layer itself: use a more effective nonlinear function approximator to improve the abstraction ability of the layer, so that the network extracts better features within each receptive field.

In NIN, a micro network is used inside the convolutional layer to improve the abstraction ability of the convolution. A multilayer perceptron (MLP) serves as the micro network. Because this MLP sub-network sits inside the convolutional network, the model is named "Network in Network". The figure below compares an ordinary linear convolutional layer with a multilayer perceptron convolutional layer (MLPConv layer).

Both the linear convolutional layer and the MLPConv layer map a local receptive field to an output feature vector. The MLPConv kernel is an MLP. As in a traditional CNN, the MLP shares its parameters across all local receptive fields, and sliding the MLP kernel over the input yields the output feature map. NIN is obtained by stacking multiple MLPConv layers. The complete NIN network structure is shown in the figure below.
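
The sliding of a shared MLP over local receptive fields can be sketched in NumPy as follows. This is only a minimal illustration, not the authors' implementation: the function name `mlpconv`, the use of ReLU, the single hidden layer, valid padding with stride 1, and the random weights are all assumptions chosen for the example.

```python
import numpy as np

def mlpconv(x, w1, b1, w2, b2, k=3):
    """Slide one shared two-layer MLP over every k x k patch of x.

    x  : (H, W, C_in) input feature map
    w1 : (k*k*C_in, C_mid) first MLP layer, acts like a k x k convolution
    w2 : (C_mid, C_out)    second MLP layer, acts on the per-position vector
    Assumes valid padding and stride 1 (hypothetical choices for this sketch).
    """
    h, w, _ = x.shape
    out = np.empty((h - k + 1, w - k + 1, w2.shape[1]))
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            patch = x[i:i + k, j:j + k, :].reshape(-1)  # local receptive field
            hidden = np.maximum(patch @ w1 + b1, 0.0)   # first layer + ReLU
            out[i, j] = np.maximum(hidden @ w2 + b2, 0.0)  # second layer + ReLU
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((32, 32, 3))
w1, b1 = rng.standard_normal((3 * 3 * 3, 16)), np.zeros(16)
w2, b2 = rng.standard_normal((16, 16)), np.zeros(16)

y = mlpconv(x, w1, b1, w2, b2)
print(y.shape)  # (30, 30, 16)
```

The same weights `w1`, `b1`, `w2`, `b2` are reused at every position, which is the parameter sharing described above.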

The first convolution kernel is $3\times3\times3\times16$, so the output of the convolution on one patch is a $1\times1\times16$ feature map (a 16-dimensional vector). It is followed by an MLP layer whose output is still 16-dimensional, so this MLP layer is equivalent to a $1\times1$ convolutional layer. The model configuration is listed in the table below.
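
The equivalence between the per-position MLP layer and a $1\times1$ convolution can be checked numerically. The sketch below assumes the 16-channel, $30\times30$ feature map from the configuration table and uses random weights; the variable names are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
feat = rng.standard_normal((30, 30, 16))  # assumed output of the 3x3 convolution
w = rng.standard_normal((16, 16))         # fully connected weights, shape (in, out)
b = rng.standard_normal(16)

# (a) Fully connected layer applied independently to the vector at each position.
fc_out = np.empty_like(feat)
for i in range(feat.shape[0]):
    for j in range(feat.shape[1]):
        fc_out[i, j] = feat[i, j] @ w + b

# (b) The same weights viewed as a 1x1 convolution kernel of shape (1, 1, in, out).
kernel = w.reshape(1, 1, 16, 16)
conv_out = np.einsum("hwc,co->hwo", feat, kernel[0, 0]) + b

same = np.allclose(fc_out, conv_out)
print(same)  # True
```

Because a $1\times1$ kernel never mixes neighbouring positions, it reduces to one matrix multiplication per position, which is exactly what the fully connected layer computes.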

| Layer | Input size | Kernel size / stride | Output size | Number of parameters |
|---|---|---|---|---|
| Local fully connected layer $L_{11}$ | $32\times32\times3$ | $(3\times3)\times16/1$ | $30\times30\times16$ | $(3\times3\times3+1)\times16$ |
| Fully connected layer $L_{12}$ | $30\times30\times16$ | $16\times16$ | $30\times30\times16$ | $(16+1)\times16$ |
| Local fully connected layer $L_{21}$ | $30\times30\times16$ | $(3\times3)\times64/1$ | $28\times28\times64$ | $(3\times3\times16+1)\times64$ |
| Fully connected layer $L_{22}$ | $28\times28\times64$ | $64\times64$ | $28\times28\times64$ | $(64+1)\times64$ |
| Local fully connected layer $L_{31}$ | $28\times28\times64$ | $(3\times3)\times100/1$ | $26\times26\times100$ | $(3\times3\times64+1)\times100$ |
| Fully connected layer $L_{32}$ | $26\times26\times100$ | $100\times100$ | $26\times26\times100$ | $(100+1)\times100$ |
| Global average pooling (GAP) | $26\times26\times100$ | $26\times26\times100/1$ | $1\times1\times100$ | $0$ |
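
The output sizes and parameter counts in the table can be reproduced with a few lines of arithmetic. The helper names `conv_params` and `fc_params` are hypothetical; the formulas are the ones given in the last column, assuming $3\times3$ kernels, stride 1, and no padding.

```python
def conv_params(k, c_in, c_out):
    # (k * k * c_in weights + 1 bias) per output channel
    return (k * k * c_in + 1) * c_out

def fc_params(c_in, c_out):
    # (c_in weights + 1 bias) per output unit, shared across all positions
    return (c_in + 1) * c_out

size, channels = 32, 3
rows = []
for block, c_out in [(1, 16), (2, 64), (3, 100)]:
    size = size - 3 + 1  # 3x3 kernel, stride 1, no padding
    rows.append((f"L{block}1", size, c_out, conv_params(3, channels, c_out)))
    rows.append((f"L{block}2", size, c_out, fc_params(c_out, c_out)))
    channels = c_out

for name, s, c, p in rows:
    print(name, f"{s}x{s}x{c}", p)
# L11 30x30x16 448
# L12 30x30x16 272
# L21 28x28x64 9280
# L22 28x28x64 4160
# L31 26x26x100 57700
# L32 26x26x100 10100

total = sum(p for _, _, _, p in rows)
print(total)  # 81960
```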

In NIN, the three MLPConv layers are not followed by a fully connected (FC) layer. Instead, global average pooling (GAP) is applied to the output feature maps of the last MLPConv layer. The global average pooling layer is described in detail below.

3 Global Average Pooling

A conventional CNN first extracts features with stacked convolutional layers and then feeds them into fully connected (FC) layers for classification. This structure has been followed since LeNet-5: the convolutional layers act as the feature extractor and the FC layers act as the classifier. However, FC layers have a large number of parameters and overfit easily, which hurts the generalization of the model, so Dropout is needed to improve generalization.

NIN proposes GAP as a replacement for the traditional FC layers. The main idea is that each class corresponds to one output feature map of the last MLPConv layer: each feature map is averaged, and the resulting pooled vector is passed through softmax to obtain the class probabilities. GAP has the following advantages:

- It strengthens the correspondence between feature maps and categories, which fits the convolutional structure more naturally; the feature maps can be interpreted as category confidence maps.

- The GAP layer has no parameters to optimize, so overfitting at this layer is avoided.

- GAP sums up the spatial information, so it is more robust to spatial transformations of the input.

GAP can be regarded as a structural regularizer that explicitly forces the feature maps to act as confidence maps of the concepts (categories).
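
A minimal NumPy sketch of GAP followed by softmax is given below. The $26\times26\times100$ input matches the last MLPConv output in the configuration table; the random feature maps and the function names are assumptions for illustration. The circular shift at the end is an idealized spatial transformation, used only to show that averaging discards position.

```python
import numpy as np

def global_average_pooling(feature_maps):
    # Average each (H, W) map to a single value: (H, W, C) -> (C,)
    return feature_maps.mean(axis=(0, 1))

def softmax(z):
    z = z - z.max()  # subtract the maximum for numerical stability
    e = np.exp(z)
    return e / e.sum()

rng = np.random.default_rng(0)
maps = rng.standard_normal((26, 26, 100))  # one feature map per class

scores = global_average_pooling(maps)      # shape (100,)
probs = softmax(scores)                    # class probabilities, sum to 1

# A circular shift of the feature maps leaves the pooled vector unchanged.
shifted = np.roll(maps, shift=(3, 5), axis=(0, 1))
invariant = np.allclose(global_average_pooling(shifted), scores)
print(scores.shape, invariant)  # (100,) True
```

No weights appear anywhere in `global_average_pooling`, which is why the layer adds no trainable parameters.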

4 Model characteristics

- A multilayer perceptron structure replaces the linear filtering operation of convolution. This not only mitigates the parameter inflation caused by using too many convolution kernels, but also improves the model's ability to abstract features by introducing nonlinear mappings.

- Global average pooling replaces the final fully connected layer. This greatly reduces the number of parameters (GAP has no trainable parameters). Because the pooling uses the information of the entire feature map, it is more robust to spatial transformations, and the final output can be used directly as the confidence of the corresponding category.
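
To make the parameter saving concrete, the arithmetic below compares GAP with a hypothetical fully connected classifier placed on the flattened $26\times26\times100$ output of the last MLPConv layer. The FC classifier is an assumption introduced only for comparison; it is not part of NIN.

```python
h, w, c, num_classes = 26, 26, 100, 100

# Hypothetical FC classifier: one weight per flattened input plus one bias, per class.
fc_classifier_params = (h * w * c + 1) * num_classes

# GAP only averages each feature map, so it has nothing to train.
gap_params = 0

print(fc_classifier_params)  # 6760100
print(gap_params)            # 0
```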

边栏推荐

猜你喜欢

ICMP Protocol

sqli-labs第1关

C language custom types - structure, bit segment (anonymous structure, self reference of structure, memory alignment of structure)

![[untitled]](/img/6c/df2ebb3e39d1e47b8dd74cfdddbb06.gif)

[untitled]

什么是SQL注入

When a custom exception encounters reflection

2022 Heilongjiang latest food safety administrator simulation exam questions and answers

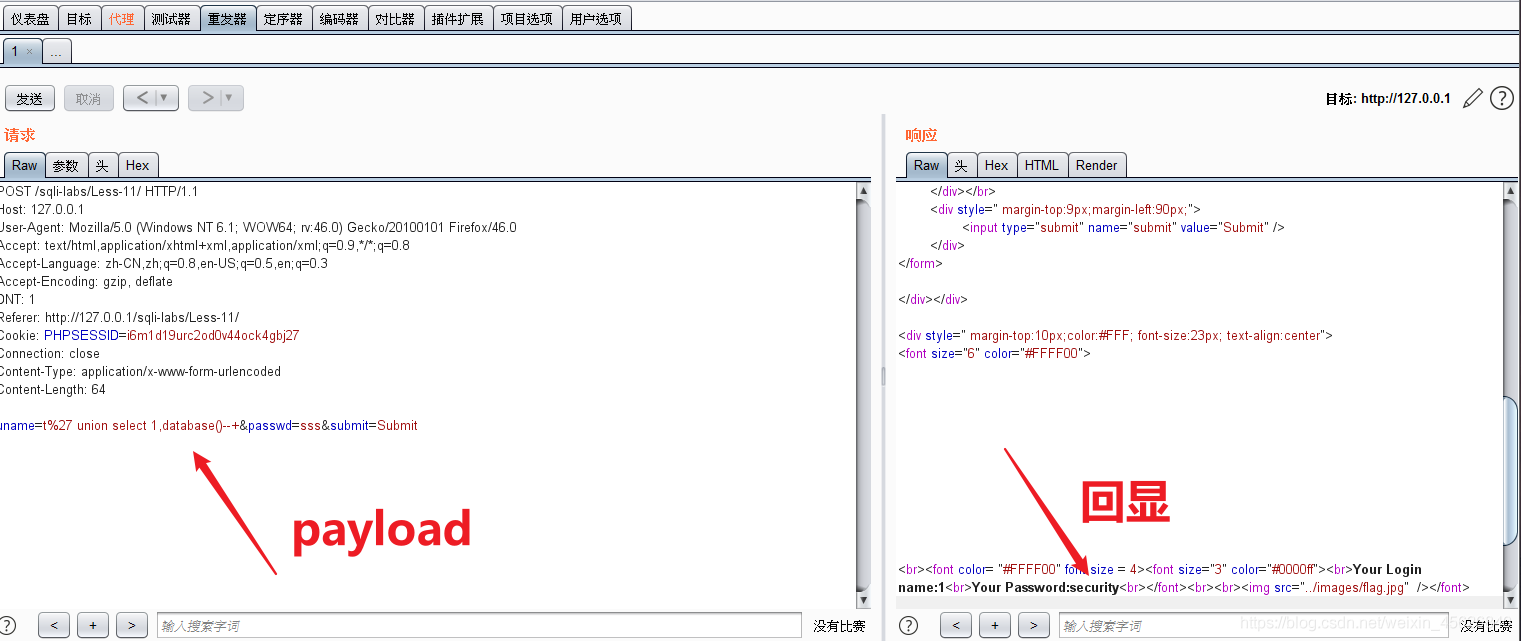

sqli-labs(POST类型注入)

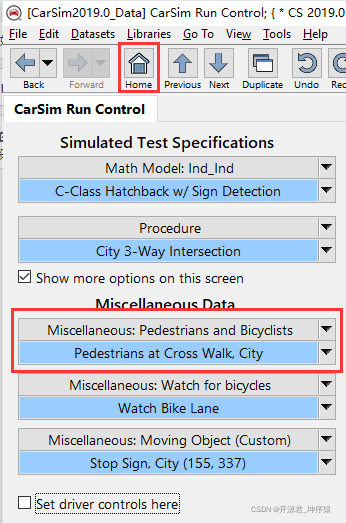

Carsim problem failed to start Solver: Path Id Obj (X) was set to y; Aucune valeur de correction de xxxxx?

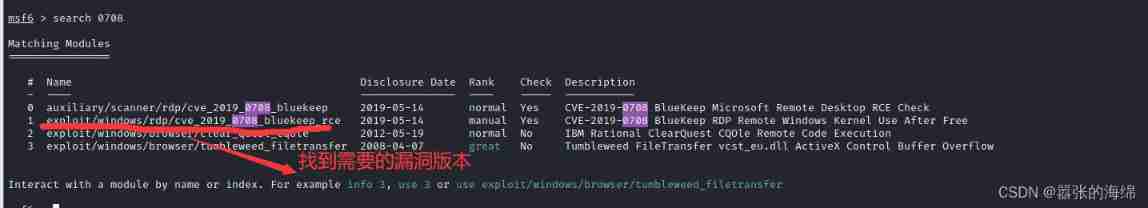

cve_ 2019_ 0708_ bluekeep_ Rce vulnerability recurrence

随机推荐

HCIA—應用層

Summary of one question per day: stack and queue (continuously updated)

ICMP协议

CarSim problem failed to start solver: path_ ID_ OBJ(X) was set to Y; no corresponding value of XXXXX?

Shortcut key to comment code and cancel code in idea

Constant pointer and pointer constant

Use Matplotlib to draw a preliminary chart

实现双向链表(带傀儡节点)

Valin cable: BI application promotes enterprise digital transformation

Summary of one question per day: String article (continuously updated)

C language replaces spaces in strings with%20

Causes of laptop jam

类和对象(类和类的实例化,this,static关键字,封装)

Vs code configuration problem

Carla-UE4Editor导入RoadRunner地图文件(保姆级教程)

Library function of C language

Use the numbers 5, 5, 5, 1 to perform four operations. Each number should be used only once, and the operation result value is required to be 24

DWORD ptr[]

Matlab-其它

Chinese garbled code under vscade