当前位置:网站首页>The worse the AI performance, the higher the bonus? Doctor of New York University offered a reward for the task of making the big model perform poorly

The worse the AI performance, the higher the bonus? Doctor of New York University offered a reward for the task of making the big model perform poorly

2022-07-06 23:16:00 【QbitAl】

Yi Pavilion From the Aofei temple

qubits | official account QbitAI

The bigger the model 、 The worse the performance, the better the prize ?

The total bonus is 25 Ten thousand dollars ( Renminbi conversion 167 ten thousand )?

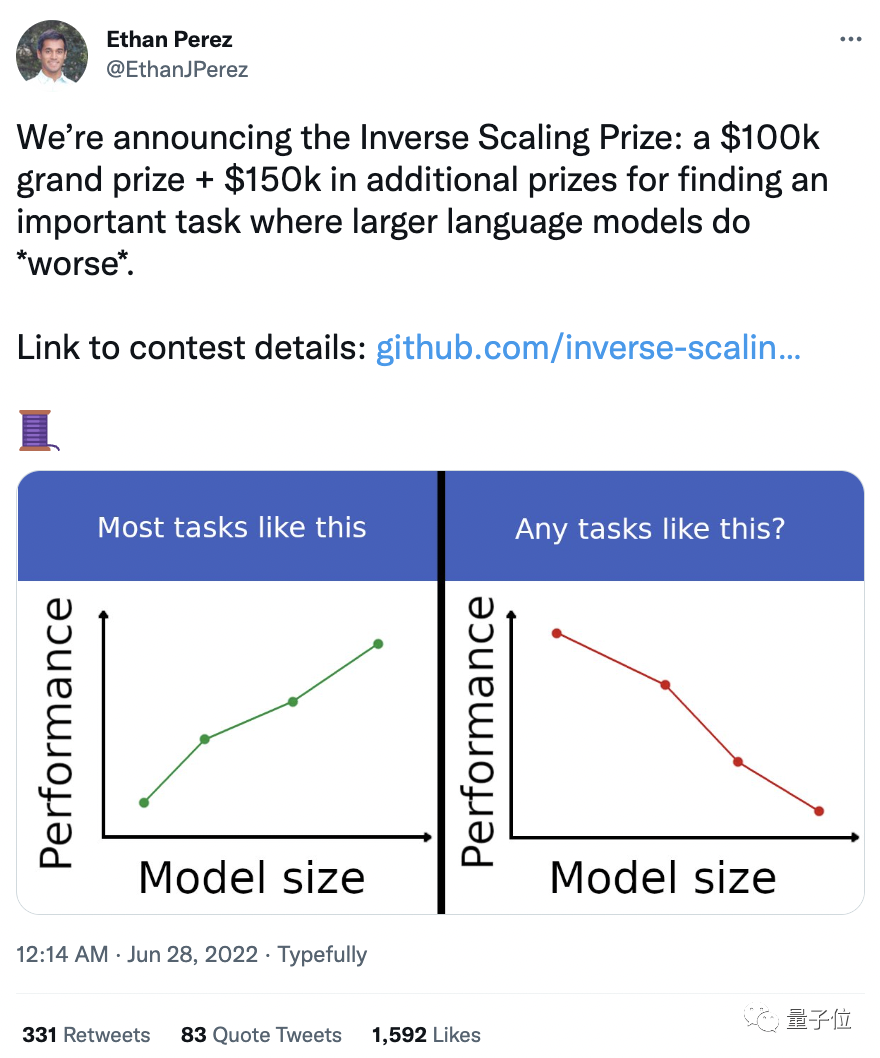

such “ Out of line ” It really happened , A man named Inverse Scaling Prize( Anti scale effect Award ) The game of caused heated discussion on twitter .

The competition was organized by New York University 7 Jointly organized by researchers .

Originator Ethan Perez Express , The main purpose of this competition , It is hoped to find out which tasks will make the large model show anti scale effect , So as to find out some problems in the current large model pre training .

Now? , The competition is receiving contributions , The first round of submissions will end 2022 year 8 month 27 Japan .

Competition motivation

People seem to acquiesce , As the language model gets bigger , The operation effect will be better and better .

However , Large language models are not without flaws , For example, race 、 Gender and religious prejudice , And produce some fuzzy error messages .

The scale effect shows , With the number of parameters 、 The amount of computation used and the size of the data set increase , The language model will get better ( In terms of test losses and downstream performance ).

We assume that some tasks have the opposite trend : With the increase of language model testing loss , Task performance becomes monotonous 、 The effect becomes worse , We call this phenomenon anti scale effect , Contrary to the scale effect .

This competition aims to find more anti scale tasks , Analyze which types of tasks are prone to show anti scale effects , Especially those tasks that require high security .

meanwhile , The anti scale effect task will also help to study the potential problems in the current language model pre training and scale paradigm .

As language models are increasingly applied to real-world applications , The practical significance of this study is also increasing .

Collection of anti scale effect tasks , It will help reduce the risk of adverse consequences of large language models , And prevent harm to real users .

Netizen disputes

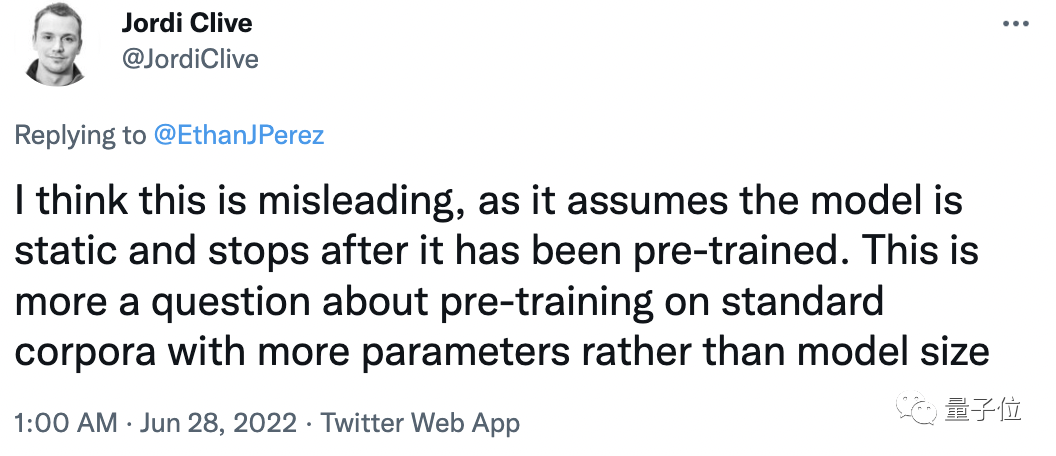

But for this competition , Some netizens put forward different views :

I think this is misleading . Because it assumes that the model is static , And stop after pre training .

This is more a problem of pre training on standard corpora with more parameters , Not the size of the model .

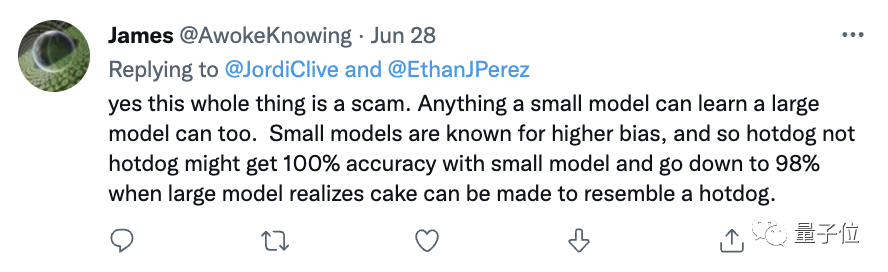

Software engineer James Agree with this view :

Yes , This whole thing is a hoax . Anything a small model can learn , Large models can also .

The deviation of the small model is larger , therefore “ Hot dogs are not hot dogs ” It may be recognized as 100% Right , When the big model realized that it could make cakes similar to hot dogs , The accuracy will drop to 98%.

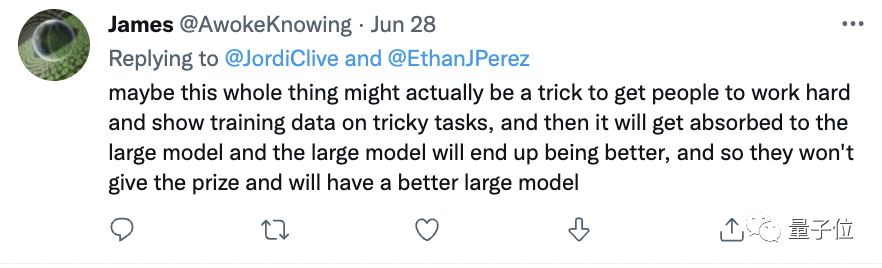

James Even further proposed “ Conspiracy theories ” View of the :

Maybe the whole thing is a hoax —— Let people work hard , And show the training data when encountering difficult tasks , This experience will be absorbed by large models , Large models will eventually be better .

So they don't need to give bonuses , You will also get a better large-scale model .

Regarding this , Originator Ethan Perez Write in the comment :

Clarify it. , The focus of this award is to find language model pre training that will lead to anti scale effect , Never or rarely seen category .

This is just a way to use large models . There are many other settings that can lead to anti scale effects , Not included in our awards .

Rules of the game

According to the task submitted by the contestant , The team will build a system that contains at least 300 Sample datasets , And use GPT-3/OPT To test .

The competition will be selected by an anonymous jury .

The judges will start from the intensity of the anti scale effect 、 generality 、 Novelty 、 Reproducibility 、 Coverage and the importance of the task 6 There are three considerations , Conduct a comprehensive review of the submitted works , Finally, the first prize was awarded 、 Second and third prizes .

The bonus is set as follows :

The first prize is the most 1 position ,10 Ten thousand dollars ;

Most second prizes 5 position , Everyone 2 Ten thousand dollars ;

The third prize is the most 10 position , Everyone 5000 dollar .

The competition was held in 6 month 27 The day begins ,8 month 27 The first round of evaluation will be conducted on the th ,10 month 27 The second round of evaluation began on the th .

Originator Ethan Perez

Originator Ethan Perez Is a scientific researcher , Has been committed to the study of large-scale language models .

Perez Received a doctorate in natural language processing from New York University , Previously in DeepMind、Facebook AI Research、Mila( Montreal Institute of learning algorithms ) Worked with Google .

Reference link :

1、https://github.com/inverse-scaling/prize

2、https://twitter.com/EthanJPerez/status/1541454949397041154

3、https://alignmentfund.org/author/ethan-perez/

— End —

「 qubits · viewpoint 」 Live registration

What is? “ Intelligent decision making ”? What is the key technology of intelligent decision ? How will it build a leading enterprise for secondary growth “ Intelligent gripper ”?

7 month 7 On Thursday , Participate in the live broadcast , Answer for you ~

Focus on me here , Remember to mark the star ~

边栏推荐

- Station B Big utilise mon monde pour faire un réseau neuronal convolutif, Le Cun Forward! Le foie a explosé pendant 6 mois, et un million de fois.

- The application of machine learning in software testing

- Les entreprises ne veulent pas remplacer un système vieux de dix ans

- 欧洲生物信息研究所2021亮点报告发布:采用AlphaFold已预测出近1百万个蛋白质

- js对JSON数组的增删改查

- 不要再说微服务可以解决一切问题了

- MySQL authentication bypass vulnerability (cve-2012-2122)

- ACL 2022 | 序列标注的小样本NER:融合标签语义的双塔BERT模型

- mysql拆分字符串作为查询条件的示例代码

- Docker mysql5.7 how to set case insensitive

猜你喜欢

On the problems of born charge and non analytical correction in phonon and heat transport calculations

Enterprises do not want to replace the old system that has been used for ten years

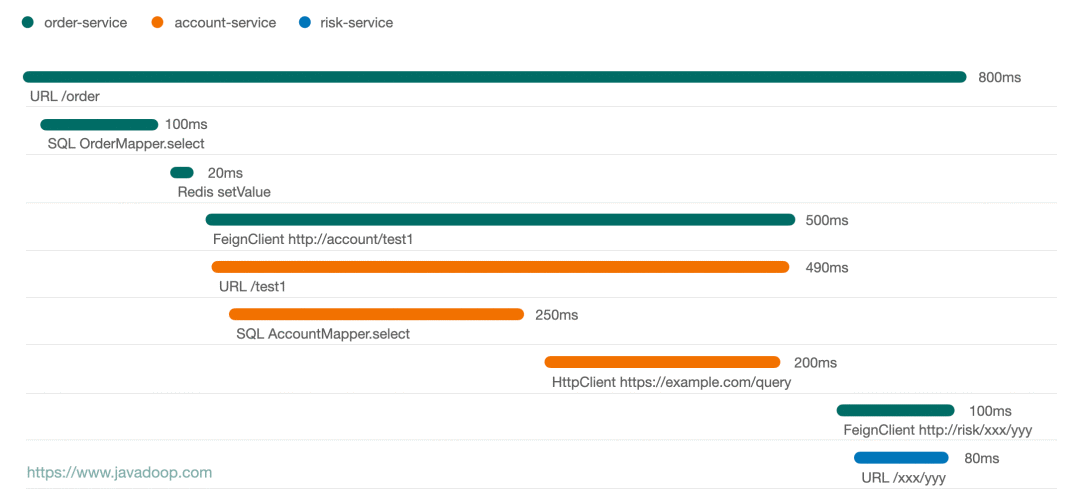

监控界的最强王者,没有之一!

CSDN 上传图片取消自动加水印的方法

Financial professionals must read book series 6: equity investment (based on the outline and framework of the CFA exam)

COSCon'22 社区召集令来啦!Open the World,邀请所有社区一起拥抱开源,打开新世界~

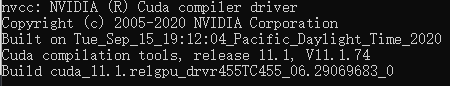

CUDA exploration

Dockermysql modifies the root account password and grants permissions

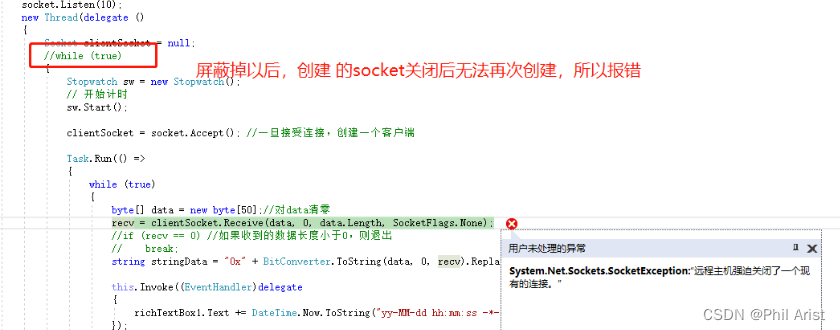

C three ways to realize socket data reception

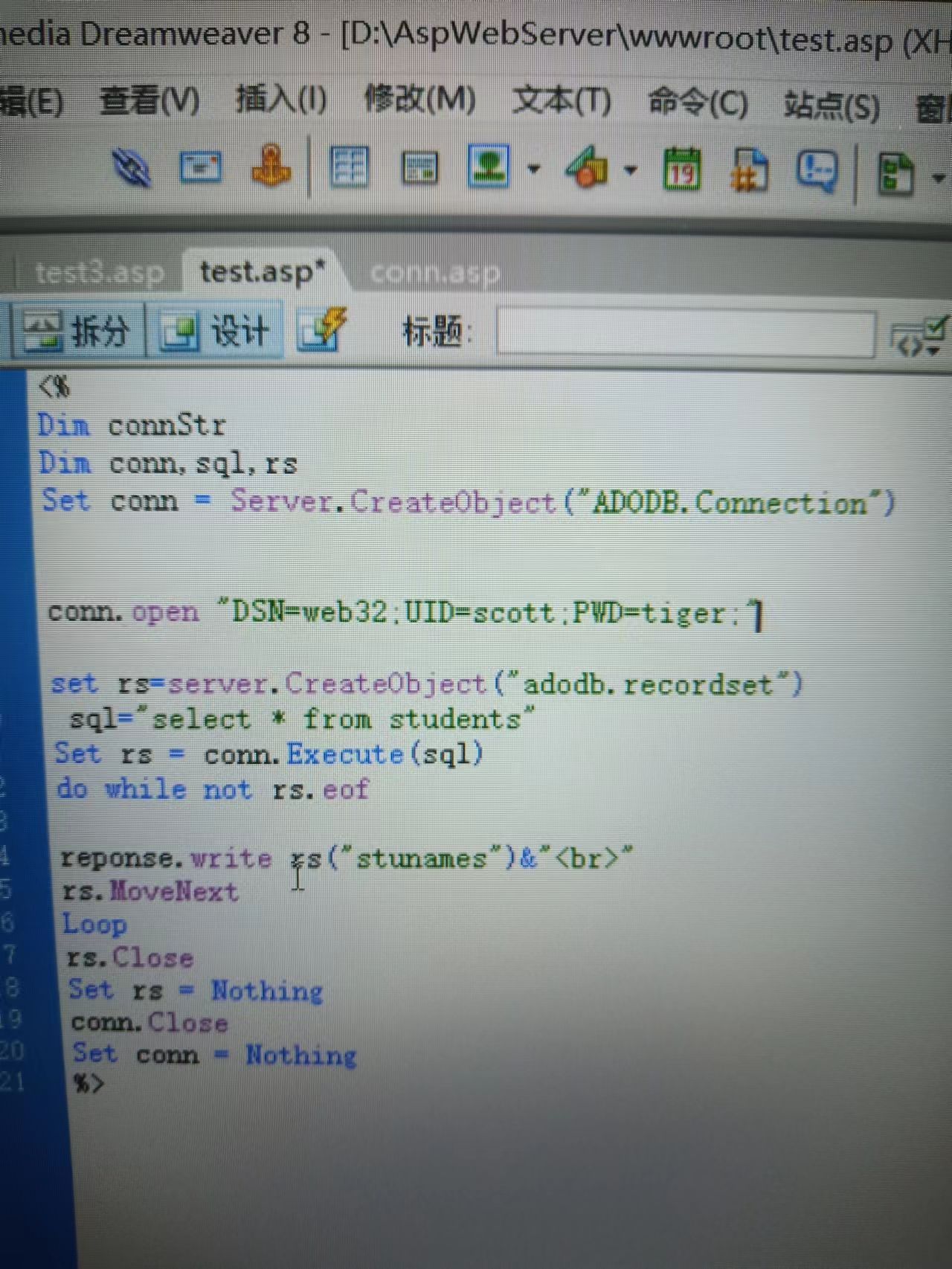

asp读取oracle数据库问题

随机推荐

Is "applet container technology" a gimmick or a new outlet?

MySQL authentication bypass vulnerability (cve-2012-2122)

Up to 5million per person per year! Choose people instead of projects, focus on basic scientific research, and scientists dominate the "new cornerstone" funded by Tencent to start the application

专为决策树打造,新加坡国立大学&清华大学联合提出快速安全的联邦学习新系统

js对JSON数组的增删改查

Station B boss used my world to create convolutional neural network, Lecun forwarding! Burst the liver for 6 months, playing more than one million

View

借助这个宝藏神器,我成为全栈了

How does crmeb mall system help marketing?

Enterprises do not want to replace the old system that has been used for ten years

COSCon'22 社区召集令来啦!Open the World,邀请所有社区一起拥抱开源,打开新世界~

spark调优(二):UDF减少JOIN和判断

AcWing 4299. Delete point

Let's see through the network i/o model from beginning to end

欧洲生物信息研究所2021亮点报告发布:采用AlphaFold已预测出近1百万个蛋白质

Dockermysql modifies the root account password and grants permissions

MySQL数据库之JDBC编程

让 Rust 库更优美的几个建议!你学会了吗?

Financial professionals must read book series 6: equity investment (based on the outline and framework of the CFA exam)

Chapter 19 using work queue manager (2)