If someone asks you about database-cache consistency, just send them this article

2022-07-06 10:01:00, Kaka's Java Architecture Notes

In a previous post, "Why Is There an Inconsistency Between the Database and the Cache", we introduced several cases in which the cache and the database end up inconsistent.

We mentioned that there are four possible orderings when operating on both the database and the cache: "write the database, then delete the cache", "write the database, then update the cache", "delete the cache, then write the database", and "update the cache, then write the database".

So should we delete the cache or update it? Should we operate on the database first or on the cache first? Which is better, and how do we choose?

This article analyzes these questions.

Delete or update

To keep the data in the database and the cache consistent, many people update the cached contents whenever they update the data. In fact, you should prefer deleting the cache over updating it.

First, let's set data consistency aside for a moment and compare how complex it is to update the cache versus delete it.

The data we put in the cache is often not a simple string value. It may be a large JSON string, a map, and so on.

For example, when deducting inventory through the cache, you may need to fetch the whole order model from the cache, deserialize it, find the inventory field, modify it, serialize the model again, and finally write it back to the cache.

As you can see, updating the cache is a much more complicated process than simply deleting it, and it is more error-prone.
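To make the contrast concrete, here is a minimal sketch using plain Python dicts as hypothetical stand-ins for the cache and the database; the key `order:1` and the `stock` field are illustrative assumptions, not taken from any real system. The update path has to round-trip the whole model, while the delete path is a single call.

```python
import json

# Hypothetical in-memory stand-ins for a real cache and database.
db = {"order:1": {"id": 1, "sku": "A", "stock": 10}}
cache = {"order:1": json.dumps(db["order:1"])}

def update_cached_stock(key, delta):
    """Update strategy: read, deserialize, modify one field, serialize, write back."""
    raw = cache.get(key)
    if raw is None:
        return                          # nothing cached, nothing to patch
    model = json.loads(raw)             # deserialize the whole model
    model["stock"] += delta             # change a single field
    cache[key] = json.dumps(model)      # serialize and overwrite the entry

def delete_cached(key):
    """Delete strategy: one step; the next read reloads from the database."""
    cache.pop(key, None)
```

Every step inside `update_cached_stock` is a place where a bug or an interleaved writer can leave the entry wrong; `delete_cached` has no such surface.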

Moreover, when it comes to keeping the database and the cache consistent, deleting the cache is somewhat easier than updating it.

In the "write concurrency" scenario described in "Why Is There an Inconsistency Between the Database and the Cache", updating both the cache and the database easily leads to inconsistency under concurrency. This happens with either ordering:

- write the database first, then update the cache;
- update the cache first, then write the database.

By contrast, if you delete the cache, concurrent writers only ever clear the cached entry, so no stale value is left behind and this kind of inconsistency does not occur.
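The following sketch replays one unlucky interleaving of two writers, A and B, for each strategy. The schedules are illustrative assumptions (the steps are written out sequentially to stand in for two threads), using dicts as a hypothetical cache and database.

```python
def write_db_then_update_cache():
    db, cache = {}, {}
    db["k"] = "A"        # A writes the database
    db["k"] = "B"        # B writes the database
    cache["k"] = "B"     # B updates the cache
    cache["k"] = "A"     # A updates the cache last, overwriting B's newer value
    return db, cache     # database says B, cache says A

def update_cache_then_write_db():
    db, cache = {}, {}
    cache["k"] = "A"     # A updates the cache
    cache["k"] = "B"     # B updates the cache
    db["k"] = "B"        # B writes the database
    db["k"] = "A"        # A writes the database last
    return db, cache     # database says A, cache says B

def write_db_then_delete_cache():
    db, cache = {}, {"k": "old"}
    db["k"] = "A"        # A writes the database
    db["k"] = "B"        # B writes the database
    cache.pop("k", None) # B deletes the cache
    cache.pop("k", None) # A deletes the cache; order no longer matters
    return db, cache     # cache is empty, next read reloads B
```

With deletion, whichever writer finishes last only removes the entry, so the next read always reloads the database's current value.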

Deleting the cache does have one small disadvantage compared with updating it: an extra cache miss. The first query after the cache is deleted will miss and have to go to the database.

Such a cache miss can, to some extent, cause cache breakdown: right after the cache is deleted, a large number of requests for the same key arrive, all of them miss, and they all hit the database at once.

However, cache breakdown is easy to solve with locking.

All in all, compared with updating the cache, deleting it is simpler and causes fewer consistency problems. So between deleting and updating the cache, I recommend choosing deletion first.

Database first or cache first

Now that we prefer deleting the cache to updating it, two options remain for combining database and cache updates: "write the database first, then delete the cache" and "delete the cache first, then write the database".

So what are the advantages and disadvantages of each, and how do we choose?

Write the database first

Because the database operation and the cache operation are two separate steps, their atomicity cannot be guaranteed, so the first step may succeed while the second fails.

In general, putting cache deletion in the second step has an advantage: cache deletion rarely fails. Unless there is a network problem or the cache server is down, it succeeds in the vast majority of cases.

Also, although writing the database first and then deleting the cache avoids the inconsistency caused by "write concurrency", it can still become inconsistent under "read-write concurrency".

When a cache is in use, a read thread queries data as follows:

1. Query the cache; if the cache holds a value, return it directly.

2. Otherwise, query the database.

3. Write the database result into the cache.
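The three read steps above can be sketched as a single function; `cache` and `db` are hypothetical dict stand-ins passed in by the caller.

```python
def read(key, cache, db):
    # 1. Query the cache; on a hit, return directly.
    if key in cache:
        return cache[key]
    # 2. Cache miss: query the database.
    value = db.get(key)
    # 3. Write the database result back into the cache.
    if value is not None:
        cache[key] = value
    return value
```

Note that step 3 is a cache write performed by a reader, which is what makes the race described next possible.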

So although a read thread never writes the database, it does update the cache, and in some special concurrent scenarios this leads to inconsistent data.

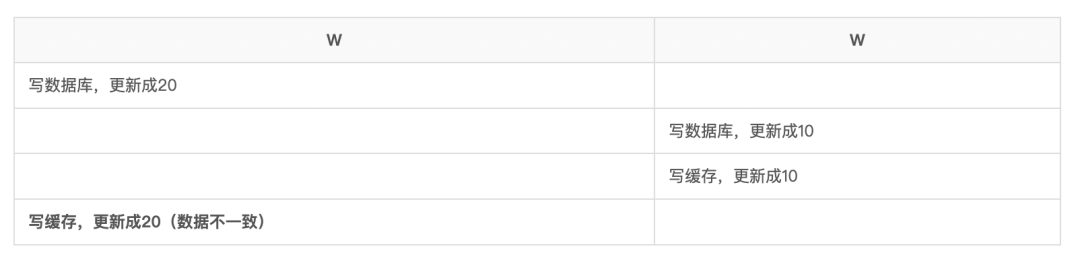

The sequence of read-write concurrency is as follows :

In other words, suppose a read thread finds nothing in the cache and queries the database. If, after it has its result but before it writes that result to the cache, a writer updates the database and deletes the cache, the read thread has no idea, and it ends up overwriting the cache with the "old value".

This leaves the cache and the database inconsistent.
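The race can be replayed deterministically by writing the reader's and writer's steps in the unlucky order; the dicts and the key `k` are hypothetical.

```python
def read_write_race_db_first():
    db = {"k": "old"}
    cache = {}                     # the cache is empty, so the reader misses
    reader_value = db["k"]         # Reader: cache miss, reads "old" from the database
    db["k"] = "new"                # Writer: writes the database
    cache.pop("k", None)           # Writer: deletes the cache (nothing to delete yet)
    cache["k"] = reader_value      # Reader: finally writes its stale result to the cache
    return db, cache
```

The window that matters is only the reader's gap between its database read and its cache write, which is why this ordering is rarely hit in practice.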

But the probability of this happening is relatively low, because a read is usually very fast: the database read plus the cache write can be completed in about ten milliseconds.

And the probability that, within that short window, another thread happens to carry out a write, which usually takes longer, is really low.

Delete the cache first

So if we delete the cache first and then write the database, is the scheme any better?

First, if you delete the cache first and then write the database, a failure in the second step is acceptable: no dirty data is produced, so you can simply retry.

However, deleting the cache first and then writing the database magnifies the "read-write concurrency" inconsistency mentioned earlier.

That is because the precondition of the "read-write concurrency" problem is that the read thread misses the cache, and deleting the cache first is exactly what makes reads miss.

So a "read-write concurrency" problem that would otherwise happen only rarely becomes much more likely when the cache is deleted first.

And the consequences are more serious: the value in the cache stays wrong, so every subsequent query that hits the cache returns a wrong result!
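Here is the same kind of replay for the "delete the cache first" ordering, again with hypothetical dicts. The reader only needs to run anywhere between the writer's two steps, which span the whole (slow) database write, so the window is much wider than before.

```python
def read_write_race_cache_first():
    db = {"k": "old"}
    cache = {"k": "old"}           # the cache starts warm and correct
    cache.pop("k", None)           # Writer step 1: delete the cache
    reader_value = cache.get("k")  # Reader: misses, because the writer just deleted it
    if reader_value is None:
        reader_value = db["k"]     # Reader: reads the not-yet-updated database ("old")
        cache["k"] = reader_value  # Reader: repopulates the cache with the old value
    db["k"] = "new"                # Writer step 2: write the database
    return db, cache               # the stale "old" entry now serves every cache hit
```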

Delayed double delete

Although writing the database first and then deleting the cache greatly reduces the probability of concurrency problems, Murphy's law says that anything that can go wrong will go wrong, and the larger the system, the more likely it is to happen.

So is there a way to fix the inconsistency caused by this situation?

In fact, there is a common scheme that many companies use: delayed double delete.

Since the "read-write concurrency" problem leaves dirty data written by the read thread in the cache, the write thread only needs to wait a while after writing the database and deleting the cache, and then delete the cache once more.

This ensures that dirty data in the cache is cleaned up, so later reads do not pick it up. The length of the delay matters, of course. How long should you wait before the second delete? A delay of 1-2 s is generally recommended.
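A minimal sketch of delayed double delete, assuming dict stand-ins and using `threading.Timer` to schedule the second delete; the function name and the 1 s default (taken from the 1-2 s recommendation above) are illustrative. A real service would more likely hand the second delete to a scheduler or delayed message than start a timer thread per write.

```python
import threading

def write_with_delayed_double_delete(key, value, db, cache, delay_seconds=1.0):
    db[key] = value                 # 1. write the database
    cache.pop(key, None)            # 2. delete the cache immediately
    # 3. after a delay, delete again to clear anything a slow reader wrote back
    timer = threading.Timer(delay_seconds, cache.pop, args=(key, None))
    timer.daemon = True
    timer.start()
    return timer                    # returned so callers or tests can join() it
```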

This scheme also has a drawback: it may delete correct data from the cache. That is not a big problem, though; as we said before, it only costs one extra cache miss.

How to choose

We have covered the specific problems and solutions for each case. So how do you choose in practice?

I think it mainly depends on the actual business situation.

For example, if traffic is low and concurrency is not high, you can delete the cache first and then update the database, because this scheme is simpler.

If traffic is large and concurrency is high, however, I recommend updating the database first and then deleting the cache, because it has fewer concurrency problems. It may, however, require extra mechanisms such as locking and delayed double delete, which make the whole scheme more complex.

In fact, operating on the database first and then on the cache is a typical design pattern: the Cache Aside Pattern.

The core of this pattern is to write the database first and then delete the cache, and the cache deletion can be performed asynchronously, off the main request path.

As discussed above, the advantage of this pattern is that it avoids inconsistency caused by "write concurrency" and greatly reduces the "read-write concurrency" problem, which is why Facebook favors it.

Optimization plan

In the Cache Aside Pattern, cache handling can be done asynchronously, off the main path. Such schemes are quite common in large companies.

The main approaches are subscribing to the database binlog or using asynchronous messages.

That is, the main code path only operates on the database, and once the database operation completes, it sends an asynchronous message.

A listener then receives the message and deletes the data from the cache asynchronously.

Alternatively, rely on the database binlog: subscribe to database changes and clear the cache asynchronously.
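A minimal sketch of the message-based variant, assuming a single process where `queue.Queue` stands in for a real message queue or binlog stream; `update_user` and `invalidation_listener` are hypothetical names.

```python
import queue
import threading

db = {}
cache = {"user:1": "stale"}
invalidation_queue = queue.Queue()   # stand-in for a message queue or binlog stream

def update_user(key, value):
    db[key] = value                  # main logic: write the database only
    invalidation_queue.put(key)      # then publish an invalidation message

def invalidation_listener():
    while True:
        key = invalidation_queue.get()
        try:
            cache.pop(key, None)     # asynchronously delete the cached entry
        finally:
            invalidation_queue.task_done()

threading.Thread(target=invalidation_listener, daemon=True).start()
```

The caller returns as soon as the database write and the `put` finish; the cache is cleared slightly later, which is the delay mentioned next.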

Both methods introduce some delay, usually at the millisecond level, so they are generally used in business scenarios that can tolerate a delay of up to about a second.

Design patterns

We have covered the Cache Aside Pattern for cache operations. There are several other caching design patterns as well, which we introduce below:

Read/Write Through Pattern

In both of these patterns, the application uses the cache as its primary data source and does not need to be aware of the database; reading from and writing to the database are delegated to the cache provider.

In the Read Through Pattern, the cache is configured with a read module that knows how to load data from the database into the cache. When requested data misses the cache, the cache loads it from the database.

In the Write Through Pattern, the cache is configured with a write module that knows how to write data to the database. When the application writes data, the cache stores it first and then calls the write module to write it to the database.

In other words, in both patterns the application does not operate on the database itself; the cache does that work.
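A minimal sketch of read/write through, assuming the "read module" and "write module" are plain callables supplied when the cache is created; the class name and the `load`/`store` parameters are hypothetical, not a specific library's API.

```python
from typing import Callable, Dict, Optional

class ReadWriteThroughCache:
    def __init__(self, load: Callable[[str], Optional[str]],
                 store: Callable[[str, str], None]):
        self._data: Dict[str, str] = {}
        self._load = load      # read module: knows how to fetch from the database
        self._store = store    # write module: knows how to write to the database

    def get(self, key: str) -> Optional[str]:
        if key not in self._data:
            value = self._load(key)    # read through on a miss
            if value is None:
                return None
            self._data[key] = value
        return self._data[key]

    def put(self, key: str, value: str) -> None:
        self._data[key] = value        # the cache stores the data first
        self._store(key, value)        # then writes it through to the database
```

For example, with a dict as the database: `ReadWriteThroughCache(load=db.get, store=db.__setitem__)`. The application only ever calls `get` and `put`.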

Write Behind Caching Pattern

In this pattern, an update writes only to the cache, not to the database; the cached data is then persisted to the database asynchronously and periodically.

Its pros and cons are obvious: reads and writes are very fast, but some data may be lost.

It suits scenarios such as counting article views or likes, where losing a small amount of data is acceptable in exchange for speed.
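A minimal write-behind sketch for a view counter, assuming a dict stands in for the database and that something else (a timer or scheduler, not shown) calls `flush` periodically; the class and method names are hypothetical.

```python
class WriteBehindCache:
    def __init__(self, db):
        self._db = db          # hypothetical database stand-in
        self._data = {}        # in-memory counters
        self._dirty = set()    # keys changed since the last flush

    def incr(self, key, amount=1):
        # Update the cache only; start from the persisted value if not cached yet.
        current = self._data.get(key, self._db.get(key, 0))
        self._data[key] = current + amount
        self._dirty.add(key)

    def get(self, key):
        return self._data.get(key, self._db.get(key, 0))

    def flush(self):
        # Persist dirty entries in one batch; a periodic job would call this.
        for key in list(self._dirty):
            self._db[key] = self._data[key]
        self._dirty.clear()
```

Anything incremented after the last `flush` lives only in memory, which is exactly the data-loss trade-off described above.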

There is no silver bullet

Fred Brooks, author of "The Mythical Man-Month", wrote a famous early essay, "No Silver Bullet", in which he said:

In software development there is no ultimate, all-purpose weapon; only a combination of methods can solve the problem. None of the theories or methods that claim to be magical is the silver bullet that can kill the werewolf of the "software crisis".

In other words, no technique or scheme applies universally. If one did, we engineers would have no reason to exist.

So every technical solution is a balancing act with many factors to weigh: business details, implementation complexity, implementation cost, acceptance by team members, maintainability, ease of understanding, and so on.

So there is no "perfect" solution, only a "suitable" one.

Choosing a suitable solution, however, takes a lot of input to support the decision. I hope this article offers some reference for your future decisions!

边栏推荐

- Hero League rotation map automatic rotation

- 《ASP.NET Core 6框架揭秘》样章发布[200页/5章]

- NLP routes and resources

- C#/. Net phase VI 01C Foundation_ 01: running environment, process of creating new C program, strict case sensitivity, meaning of class library

- vscode 常用的指令

- Configure system environment variables through bat script

- Elk project monitoring platform deployment + deployment of detailed use (II)

- Learning SCM is of great help to society

- History of object recognition

- 简单解决phpjm加密问题 免费phpjm解密工具

猜你喜欢

Carolyn Rosé博士的社交互通演讲记录

再有人问你数据库缓存一致性的问题,直接把这篇文章发给他

The 32-year-old fitness coach turned to a programmer and got an offer of 760000 a year. The experience of this older coder caused heated discussion

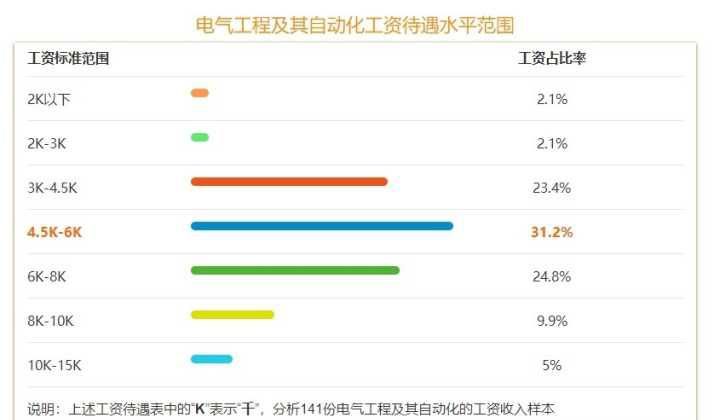

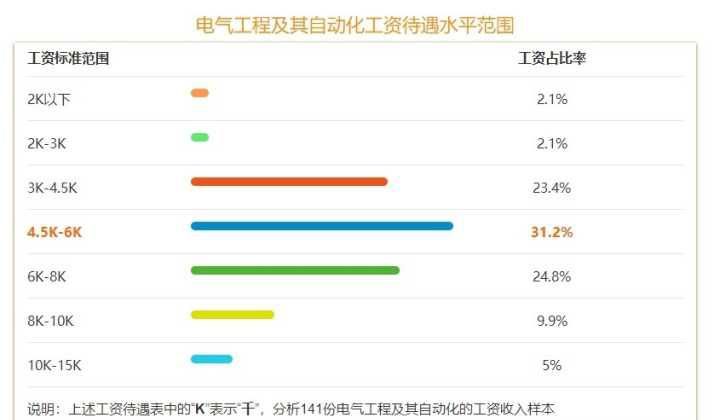

Which is the better prospect for mechanical engineer or Electrical Engineer?

大学想要选择学习自动化专业,可以看什么书去提前了解?

机械工程师和电气工程师方向哪个前景比较好?

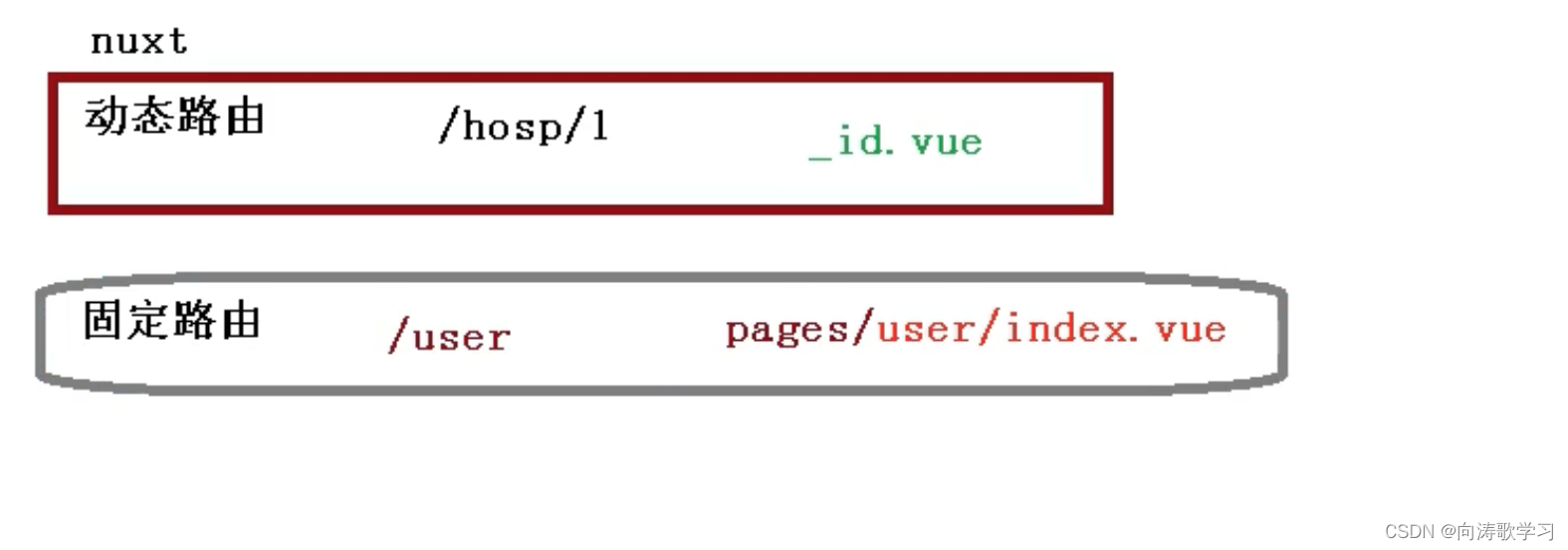

14 医疗挂号系统_【阿里云OSS、用户认证与就诊人】

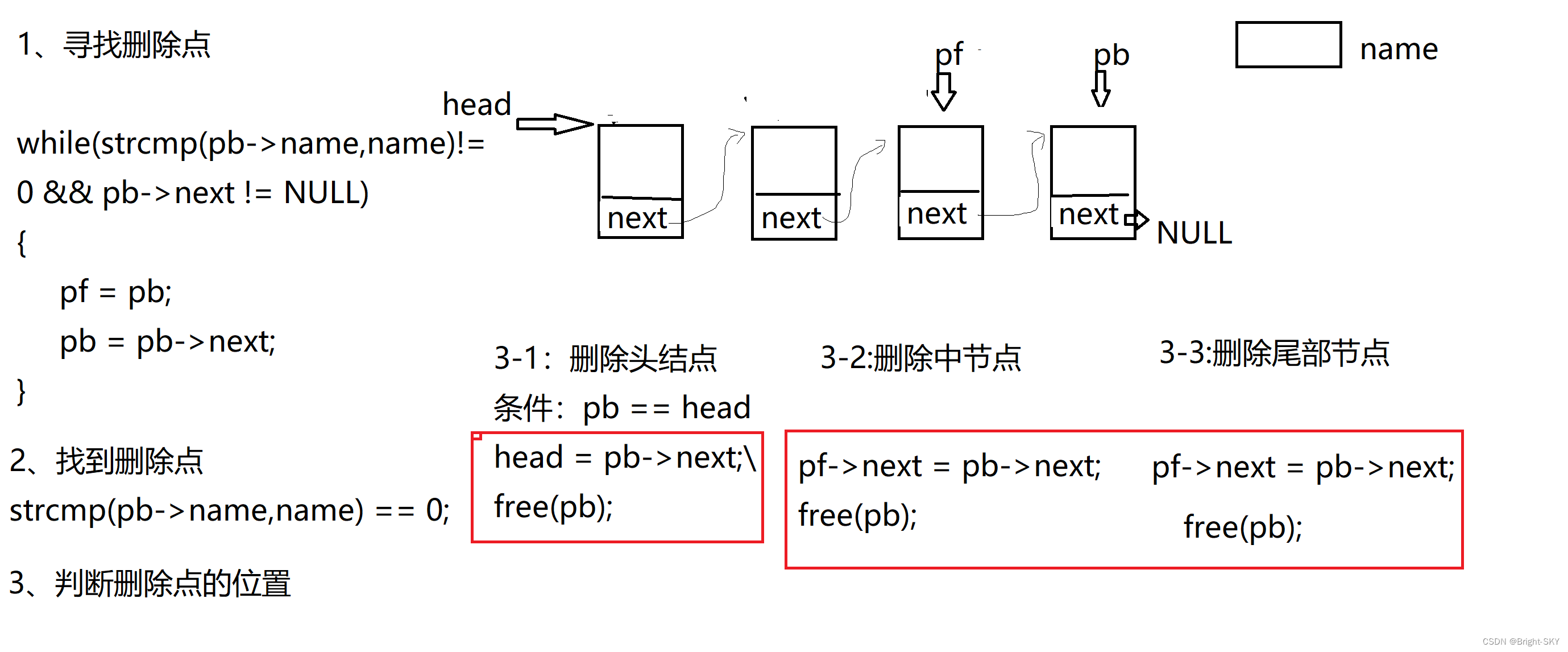

C杂讲 动态链表操作 再讲

![[NLP] bert4vec: a sentence vector generation tool based on pre training](/img/fd/8e5e1577b4a6ccc06e29350a1113ed.jpg)

[NLP] bert4vec: a sentence vector generation tool based on pre training

Hero League rotation chart manual rotation

随机推荐

vscode 常用的指令

嵌入式开发比单片机要难很多?谈谈单片机和嵌入式开发设计经历

Counter attack of noodles: redis asked 52 questions in a series, with detailed pictures and pictures. Now the interview is stable

C#/. Net phase VI 01C Foundation_ 01: running environment, process of creating new C program, strict case sensitivity, meaning of class library

Some thoughts on the study of 51 single chip microcomputer

17 医疗挂号系统_【微信支付】

NLP routes and resources

Several silly built-in functions about relative path / absolute path operation in CAPL script

[untitled]

C杂讲 动态链表操作 再讲

Selection of software load balancing and hardware load balancing

CAPL 脚本对.ini 配置文件的高阶操作

May brush question 03 - sorting

Pointer learning

Docker MySQL solves time zone problems

Safety notes

112 pages of mathematical knowledge sorting! Machine learning - a review of fundamentals of mathematics pptx

C杂讲 文件 初讲

C杂讲 双向循环链表

四川云教和双师模式