当前位置:网站首页>Five challenges of ads-npu chip architecture design

Five challenges of ads-npu chip architecture design

2022-07-06 00:52:00 【Advanced engineering intelligent vehicle】

author | Dr. Luo

brief introduction : Doctor of engineering, Southeast University , Postdoctoral Fellow, University of Bristol, UK , Furui microelectronics UK R & D Center GRUK chief AI scientists , Resident in Cambridge, England .Dr. Luo He has been engaged in scientific research and development of advanced machine vision products for a long time , Once in a 500 strong ICT The enterprise serves as the chief scientist of machine vision .

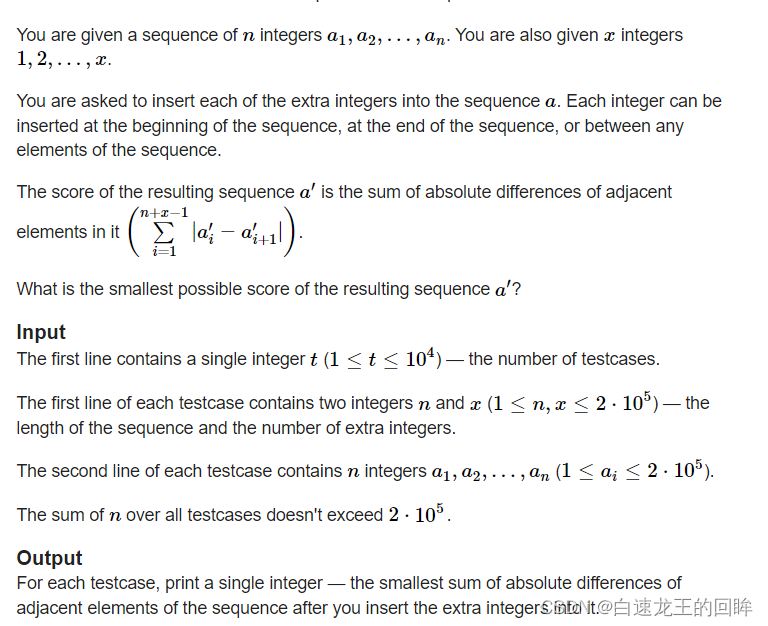

AI Algorithm in autopilot ADS Industry applications in the field , Its current evolution direction from perception to cognition , Mainly reflected in :

1) It can support multi-mode sensor sensing fusion and multi task sharing in a unified space , While improving the computational efficiency of finite computing power , Ensure that the algorithm model is suitable for extremely bad scenes in information extraction ( Rain, snow and fog 、 Low illuminance 、 High occlusion 、 Partial sensor failure 、 Active or passive scene attack ) Generalization perception of , Reduce over reliance on annotated data and high-definition maps ;

2) Joint modeling of prediction and Planning , Combine offline and online learning , Combining supervision with self supervised learning , Thus, it can deal with safe driving and effective decision-making under uncertainty , Provide interpretable questions about cognitive decision-making behavior , Solve new scene problems through continuous learning .

At present , Corresponding to ADS The evolution trend of sensor load diversification and fusion sensing decision algorithm diversification ,ADS The computing power demand and chip acceleration ability of ( Ten times the speed / Every few years ) The sustained high growth trend of .ADS Great computing power in the field NPU Current development status of chips , Really is : The age of great computing power , By perception , Four or two pounds ; The wild shuffle of cards is like the war , Only quick response , One force reduces ten meetings .

chart 1. DNN Task proportion analysis : CNN vs Transformer ( The chart analysis comes from the literature 1)

Pictured 1 Shown ,ADS Algorithm from Compute-bound towards Memory-bound evolution .ADS The mixed demand of savings and settlement , Can pass “ Embedded hardware , Algorithm iteration , Balance of computing power “ , To provide a forward compatible solution , With universal computing power NPU Designed to solve the future uncertainty of the algorithm , It is embodied in :1) The evolution of the underlying architecture : From memory computing to near memory computing , Finally, memory computing ; 2) Data channels and models : High speed data interface + data compression + The model of compression + Low precision approximation calculation + Sparse computing accelerates ; 3) Parallel top-level architecture : Model - Hardware joint design , And hard design configurable + Hardware scheduling + Soft running programmable scheduling engine .

Lao Tzu once said “ Cuddle wood , Born in the end ; Platform on the ninth floor , From the soil ; A journey , Begins with a single step .” Lao Tzu also said ” It's difficult in the world , Make it easy ; World affairs , It must be done in detail .” Deal with difficult problems from the easy , Commit to great goals and focus on micro efforts .ADS-NPU The architecture design of the chip , Also need to use 【 temperament 】 The ability of , To solve heterogeneous computing 、 Sparse computing 、 Approximation calculation 、 Memory computing and other common problems and challenges .

1. Design challenges of Heterogeneous Computing

chart 2. Systolic array architecture ( The chart analysis comes from the literature 1)

chart 3. Configurable systolic array architecture ( The chart analysis comes from the literature 1)

contrast CPU Ten hundred level parallel processing units and GPU Tens of thousands of parallel processing units ,NPU There will be millions of parallel computing units , May adopt Spatial Accelerator architecture , namely Spatial PE The space element array passes NoC, data bus , Or cross PE To realize matrix multiplication ( Full convolution calculation or full connection FC Calculation )、 High speed data flow interaction 、 And computing data sharing .

Configurable architecture of coarse granularity CGRA yes Spatial A form of accelerator , Configurable PE Array Configurable at nanosecond or microsecond level Interconnect To connect , It can support configuration driver or data flow driver .

Pictured 2 Sum graph 3 Shown , pulsation Systolic Accelerator architecture is also Spatial A kind of implementation of accelerator , Its main calculation is through 1D or 2D The calculation unit performs directional fixed flow processing on the data stream, and finally outputs the cumulative calculation result , Yes DNN Output different requirements for docking convolution layer or pooling layer , It can dynamically adjust hardware computing logic and data channels , However, the existing problems are difficult to support the sparse computing and accelerated processing of the compression model .

NPU The second type of computing unit is Vector Vector accelerator architecture , Vector oriented Element-wise Sum、Conv1x1 Convolution 、Batch Normalization、 Activate function processing and other operations , Its calculation can be realized by configurable vector kernel , The design commonly used in the industry is scalar + vector + The combination of array accelerators should be used to cope with ADS The different pre-processing requirements of multiple sensors and the hybrid storage and computing requirements of pipeline parallel processing of diversified algorithm models .

NPU SoC Multi core architecture technology can also be adopted , That is to provide thousands of accelerator physical cores to package and Chiplet On chip interconnection provides a higher degree of parallelism , Especially suitable for high parallel data load under large computing power , This requires the combination of bottom hardware scheduling and upper software scheduling , Provide a fine-grained running state call of distributed hardware computing resources .

NPU Another memory processor in evolution PIM framework , That is to reduce data movement energy consumption and improve memory bandwidth by putting computing close to storage . It can be divided into two types: near memory computing and memory computing . Near memory computing brings computing engines closer to traditional DRAM perhaps SRAM cell, Keep their design characteristics .

Memory calculation requires memory cell Add data calculation logic , Use more ReRAM perhaps STT-MRAM New technology , At present, analog or digital design is adopted , Can be realized >100TOPS/Watt Of PPA performance , But the technical problem is how to dynamically refresh the parameters of the large model in the running state , Process realization may also fall behind market expectations .

chart 4. AI Operator distribution statistics of algorithm model load ( The chart analysis comes from the literature 2)

chart 5. nVidia A100 Of TensorCore Architecture and UPCYCLE Comparison of computing efficiency of fusion architecture ( The chart analysis comes from the literature 2)

The mainstream in the current market AI chip , Common architectures have the following forms :1) GEMM Accelerated architecture (TensorCore from nVidia, Matrix Core from AMD); 2) CGRA ( Start-up company ); 3) Systolic Array (Google TPU); 4) Dataflow (Wave, Graphcore, Start-up company ); 4) Spatial Dataflow (Samba Nova, Groq); 5) Sparse framework (Inferentia).

Pictured 4 And graph 5 The case shown shows ,ADS-NPU One of the design challenges is low computational efficiency . One of the main purposes of heterogeneous computing architecture is to find a balance between hard design time optimization configurable and soft run time dynamic programmability from the perspective of design methodology , Thus, a general scheme can be provided to cover the whole design space .

Another thing worth mentioning ,UPCYCLE Fusion architecture case , involves SIMD/Short Vector, Matrix Multiply, Caching, Synchronization And other multi-core optimization strategies , This case , Description is only through short vector processing + Traditional memory cache + Traditional methods of synchronization strategy are combined , Without scalar + vector + Under the condition of microarchitecture combination of array , It can still be optimized from the top-level software architecture ( Instruction set and tool chain optimization strategy , Model - Hardware joint optimization ) To achieve 7.7x The overall computing performance is improved and 23x Improved power consumption efficiency .

2. Design challenges of sparse Computing

ADS-NPU Inefficient Computing , From the field of microarchitecture design , It can involve :1) Sparse data ( sparse DNN The Internet , Or sparse input and output data ) Lead to PE Invalid calculation of a large number of zero value data ;2)PE Due to the low efficiency of software and hardware Scheduling Algorithm ,PE Delays caused by interdependencies between ;3) The data waiting problem caused by the mismatch between the peak capacity of the data channel and the calculation channel .

The above problems 2 And questions 3 It can be effectively solved from the top-level architecture and storage and computing microarchitecture design . problem 1 Sparse data can be compressed to effectively improve the microarchitecture computing unit PE The efficiency of . Pictured 6 Sum graph 7 Shown , The case of sparse data graph coding , It can effectively improve data storage space and impact on data channels , The calculation unit is based on non-zero data NZVL Distribution map for effective screening calculation , At the cost of adding simple logical units, a 72PE The computational efficiency of is improved to 95%, Reduced data bandwidth 40%.

chart 6. Sparse computing microarchitecture case ( The chart analysis comes from the literature 3)

chart 7. Sparse data graph coding case ( The chart analysis comes from the literature 3)

3. Design challenges of approximation computation

chart 8. Examples of the relationship between algorithm model and quantitative representation ( The chart analysis comes from the literature 6)

The relationship between algorithm model and quantitative representation is shown in the figure 8 Shown , The design of approximation calculation can be characterized by the low bit parameters of the algorithm model + How to train after quantification , Without reducing the accuracy of the algorithm model , Through time and space reuse , Equivalent increase of low bits MAC PE unit .

Another advantage of approximation computation is that it can be combined with sparse computation . Low bit representation will increase the sparsity of data , similar ReLU The activation function and pooling calculation will also produce a large number of zero value data . In addition, if floating-point values are used bit-slices To characterize , There will also be a large number of high-order zero bit features .

Zero value output data means that a large number of subsequent convolution calculations can be directly skipped through pre calculation . Pictured 9 The case shown , The simple one is bit-slice Data decomposition and characterization will produce biased distribution , Can pass Signed Bit-Slice Method to solve , So that PPA The performance is effectively improved to (x4 energy consumption ,x5 performance ,x4 area ).

chart 9. Signed Bit-Slice and RLE Run length coding case ( The chart analysis comes from the literature 4)

4. Design challenges of memory computing

ADS-NPU One of the design challenges is the problem of data wall and energy consumption wall , Calculation unit PE The design of separation of storage and calculation leads to repeated movement of data , Data sharing is difficult , The mismatch between the peak capacity of the data channel and the calculation channel will lead to PE Low efficiency and SRAM/DRAM high energy-consumption .

chart 10. MRAM replace SRAM Case study ( The chart analysis comes from the literature 5)

An interesting attempt is to use new technology MRAM (STT/SOT/VGSOT-MRAM) To partially or completely replace SRAM, P0 The solution is to replace only the algorithm model parameter cache and global parameter cache ;P1 The plan is MRAM Take the place of SRAM. contrast SRAM-only framework , From the picture 10 As can be seen from the case of MRAM-P0 The solution can be >30% Energy consumption increases ,MRAM-P1 The solution is >80% Energy consumption increases , Chip area reduction >30%.

chart 11. Von Neumann Compared with the architecture of memory computing ( The chart analysis comes from the literature 6)

chart 12. Analog wall problem of memory computing ( The chart analysis comes from the literature 6)

The current memory computing architecture strategy of start-ups requires memory cell Add data calculation logic , By adopting ReRAM perhaps STT-MRAM New technology , Adopt analog or digital design to realize . Analog memory calculation IMC For those who break the tradition Von Neumann Computer architecture memory wall and energy consumption wall should have more advantages , But we need to break the simulated wall problem in the design at the same time , This is also the current digital design IMC-SRAM perhaps IMC-MRAM The reason for the majority .

Pictured 11 Sum graph 12 Shown ,IMC The main problem comes from analog-to-digital conversion ADC/DAC Interface and interface of activation function bring design redundancy . A new experimental design is based on RRAM Of RFIMC Microarchitecture (RRAM cells + CLAMP circuits + JQNL-ADCs + DTACs). Every RRAM cell representative 2 Bit memory data ,4 individual RAM cell To store 8 Weight of bits ,JQNL-ADC use 8 Bit floating point number .

From the picture 13 It can be seen that RFIMC The micro architecture of can partially solve the problem of simulation wall , Can be realized >100TOPS/Watt Of PPA performance , But the problem is , Only small-scale full vector matrix multiplication is supported , Oversized matrix multiplication , It is necessary to move the simulation data locally , Whether there is a data wall is still unknown .

chart 13. RFIMC Performance breakdown diagram of ( The chart analysis comes from the literature 6)

5. Algorithm - Common hardware design challenges

ADS The evolution trend of algorithm diversification and NPU Mixed demand for large computing power , Need algorithm -NPU Joint design to achieve the overall efficiency of the model .

Commonly used quantification and model tailoring can solve some problems , Model - Hardware federated search , It can be said that NPU The predefined hardware architecture is a template , A network model ASIC-NAS It's a typical case , That is, in the limited hardware computing space DNN Model search and model miniaturization , Seek the best combination model of calculation elements to improve the efficiency of equivalent calculation force under the same calculation complexity .

NPU Add configurable hardware and fine-grained schedulability , But there are still great performance constraints . Pictured 14 Sum graph 15 Shown ,SkyNet A case of co design of algorithm and hardware , Yes, it will NPU Fine grained PE The unit carries out Bundle Optimized encapsulation , Its value lies in that it can reduce NAS High dimensional space for architecture search , So as to reduce the dependence on the hardware infrastructure and the complexity of the optimization algorithm .

chart 14. SkyNet Algorithm and hardware design cases ( The chart analysis comes from the literature 7)

chart 15. SkyNet-Bundle-NAS Example ( The chart analysis comes from the literature 7)

author | Dr. L. Luo

reference :

【1】J. Kim, and etc., “Exploration of Systolic-Vector Architecture with Resource Scheduling for Dynamic ML Workloads”,

https://arxiv.org/pdf/2206.03060.pdf

【2】M Davies, and etc., “Understanding the limits of Conventional Hardware Architectures for Deep-Learning”,

https://arxiv.org/pdf/2112.02204.pdf

【3】C. Wu, and etc., “Reconfigurable DL accelerator Hardware Architecture Design for Sparse CNN”,

https://ieeexplore.ieee.org/document/9602959

【4】D. Im, and etc., “Energy-efficient Dense DNN Acceleration with Signed Bit-slice Architecture”,

https://arxiv.org/pdf/2203.07679.pdf

【5】V Parmar, and etc., “Memory-Oriented Design-Space Exploration of Edge-AI Hardware for XR Applications”,

https://arxiv.org/pdf/2206.06780.pdf

【6】Z Xuan,and etc., “High-Efficiency Data Conversion Interface for Reconfigurable

Function-in-MemoryComputing”,

https://ieeexplore.ieee.org/document/9795103

【7】X Zhang, and etc., “Algorithm/Accelerator Co-Design and Co-Search for Edge AI”,

https://ieeexplore.ieee.org/document/9785599

边栏推荐

- Anconda download + add Tsinghua +tensorflow installation +no module named 'tensorflow' +kernelrestart: restart failed, kernel restart failed

- Spark DF adds a column

- 【文件IO的简单实现】

- STM32按键消抖——入门状态机思维

- Ffmpeg captures RTSP images for image analysis

- Natural language processing (NLP) - third party Library (Toolkit):allenlp [library for building various NLP models; based on pytorch]

- Four dimensional matrix, flip (including mirror image), rotation, world coordinates and local coordinates

- 【线上小工具】开发过程中会用到的线上小工具合集

- Overview of Zhuhai purification laboratory construction details

- cf:C. The Third Problem【关于排列这件事】

猜你喜欢

Comment faire votre propre robot

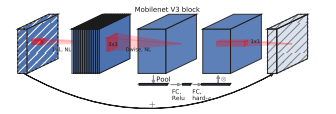

Mobilenet series (5): use pytorch to build mobilenetv3 and learn and train based on migration

激动人心,2022开放原子全球开源峰会报名火热开启

Building core knowledge points

![Cf:h. maximum and [bit operation practice + K operations + maximum and]](/img/c2/9e58f18eec2ff92e164d8d156629cf.png)

Cf:h. maximum and [bit operation practice + K operations + maximum and]

2022-02-13 work record -- PHP parsing rich text

Folding and sinking sand -- weekly record of ETF

cf:D. Insert a Progression【关于数组中的插入 + 绝对值的性质 + 贪心一头一尾最值】

After 95, the CV engineer posted the payroll and made up this. It's really fragrant

![[groovy] JSON serialization (jsonbuilder builder | generates JSON string with root node name | generates JSON string without root node name)](/img/dd/bffe27b04d830d70f30df95a82b3d2.jpg)

[groovy] JSON serialization (jsonbuilder builder | generates JSON string with root node name | generates JSON string without root node name)

随机推荐

Building core knowledge points

How to make your own robot

Calculate sha256 value of data or file based on crypto++

Beginner redis

Zhuhai laboratory ventilation system construction and installation instructions

Synchronized and reentrantlock

What is the most suitable book for programmers to engage in open source?

程序员成长第九篇:真实项目中的注意事项

Spark SQL UDF function

Model analysis of establishment time and holding time

2020.2.13

MCU通过UART实现OTA在线升级流程

Spark SQL null value, Nan judgment and processing

I'm interested in watching Tiktok live beyond concert

Interview must brush algorithm top101 backtracking article top34

Novice entry depth learning | 3-6: optimizer optimizers

【文件IO的简单实现】

The relationship between FPGA internal hardware structure and code

Questions about database: (5) query the barcode, location and reader number of each book in the inventory table

[EI conference sharing] the Third International Conference on intelligent manufacturing and automation frontier in 2022 (cfima 2022)