
PV static creation and dynamic creation

2022-07-07 19:15:00 【Silly [email protected]】

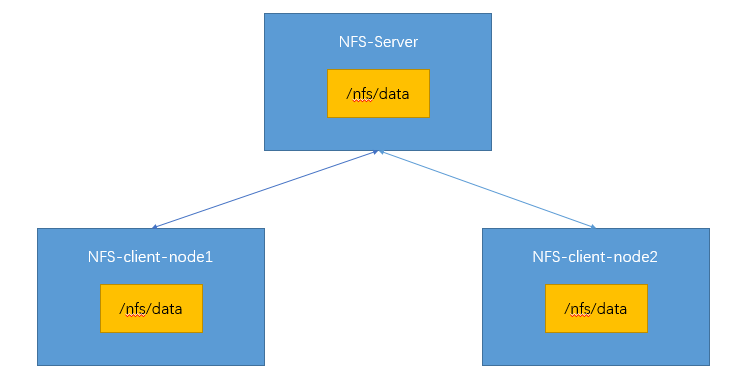

NFS(Network File System)

When working with Kubernetes, we often need storage. Its main purpose is to persist the data inside containers so that it is not lost when a container is deleted or restarted. A network file system makes this possible: Kubernetes mounts the same NFS export on every node, so the stored data can still be read after a Pod fails over to another node.

1. Install NFS

Install NFS in the Kubernetes cluster.

Run on all nodes:

yum install -y nfs-utils

Run on the NFS master node:

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports # Export the directory /nfs/data/; `*` allows any host to mount it.

mkdir -p /nfs/data

systemctl enable rpcbind --now

systemctl enable nfs-server --now

# Apply the configuration

exportfs -r

# Check the exports

[[email protected] ~]# exportfs

/nfs/data <world>

[[email protected] ~]#
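The export above uses `*`, which lets any host that can reach the server mount the directory. A minimal sketch of generating a narrower export line follows. The helper name `nfs_export_line` and the subnet `172.31.0.0/16` are assumptions for illustration and do not come from the original setup.

```shell
#!/usr/bin/env bash
# Build one /etc/exports line for a directory and a client specification.
# Hypothetical helper; the subnet used below is an assumption, not from the article.
nfs_export_line() {
  local dir="$1" clients="$2" opts="${3:-insecure,rw,sync,no_root_squash}"
  # Strip a trailing slash so the export path is normalized.
  dir="${dir%/}"
  printf '%s %s(%s)\n' "$dir" "$clients" "$opts"
}

# Print the line for review; it is not written to /etc/exports automatically.
nfs_export_line /nfs/data/ 172.31.0.0/16
```

After reviewing the printed line, it can be written to /etc/exports by hand and applied with `exportfs -r`, as in the steps above.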

Run on the NFS slave nodes:

# Show which directories 172.31.0.2 exports

showmount -e 172.31.0.2 # replace the IP with your own master node's IP

mkdir -p /nfs/data

# Mount the remote directory on the local directory

mount -t nfs 172.31.0.2:/nfs/data /nfs/data
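A mount made with the `mount` command does not survive a reboot. One common way to make it persistent is an /etc/fstab entry. The sketch below only prints such an entry; the helper name `nfs_fstab_line` is hypothetical, and the `defaults,_netdev` options are an assumption about what suits this setup.

```shell
#!/usr/bin/env bash
# Build an /etc/fstab entry for an NFS mount.
# `_netdev` marks the filesystem as network-dependent so it is mounted after networking is up.
nfs_fstab_line() {
  local server="$1" remote="$2" local_dir="$3"
  printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$server" "$remote" "$local_dir"
}

# Print the entry for review; it is not appended to /etc/fstab automatically.
nfs_fstab_line 172.31.0.2 /nfs/data /nfs/data
```

Once the entry has been added to /etc/fstab, `mount -a` mounts it without a reboot.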

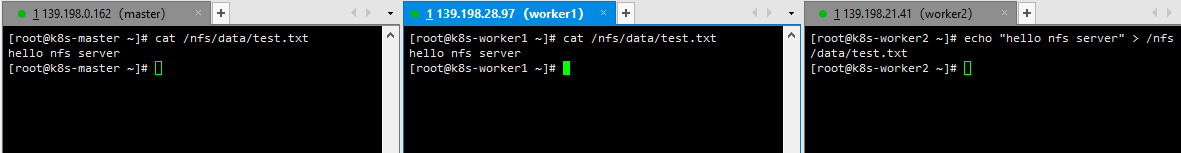

2. Verification

# Write a test file

echo "hello nfs server" > /nfs/data/test.txt

These steps show that NFS is installed and working. 172.31.0.2 acts as the master node and exports /nfs/data, and each slave node mounts the master's /nfs/data at its own /nfs/data. Within a Kubernetes cluster, any server can be chosen as the NFS server, with the other servers acting as clients.
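To confirm the share from the other side, the test file can be read back on a slave node. The following is a small sketch, assuming the file was written on the master node with the `echo` command above; the helper name `nfs_file_matches` is hypothetical.

```shell
#!/usr/bin/env bash
# Return 0 if the file exists and its content equals the expected text.
nfs_file_matches() {
  local file="$1" expected="$2"
  [ -f "$file" ] && [ "$(cat "$file")" = "$expected" ]
}

# On a slave node, check the file that was written on the master node.
if nfs_file_matches /nfs/data/test.txt "hello nfs server"; then
  echo "NFS share is readable"
else
  echo "test file missing or different"
fi
```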

PV&PVC

PV: Persistent Volume, which stores the data an application needs to persist at a specified location.

PVC: Persistent Volume Claim, which declares the specification of the persistent volume to be used.

Where is a Pod's data saved when it needs to be persisted? In a PV, and here the PV is backed by the NFS file system described above. How is a PV used? Through a PVC. If the PV is the storage itself, the PVC is the request that claims it for use.

PVs are generally created in one of two ways: statically or dynamically. With static creation, a number of PVs are created in advance to form a PV pool, and a suitable one is chosen according to the PVC's specification. With dynamic creation, nothing is created in advance; a PV of exactly the requested specification is created when a PVC asks for it. From the perspective of resource utilization, dynamic creation is better.

1. Static PV creation

Create the data storage directories.

# Run on the NFS master node

[[email protected] data]# pwd

/nfs/data

[[email protected] data]# mkdir -p /nfs/data/01

[[email protected] data]# mkdir -p /nfs/data/02

[[email protected] data]# mkdir -p /nfs/data/03

[[email protected] data]# ls

01 02 03

[[email protected] data]#

Create the PVs, pv.yml:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01-10m
spec:
  capacity:
    storage: 10M
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/01
    server: 172.31.0.2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv02-1gi
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/02
    server: 172.31.0.2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv03-3gi
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/03
    server: 172.31.0.2

[[email protected] ~]# kubectl apply -f pv.yml

persistentvolume/pv01-10m created

persistentvolume/pv02-1gi created

persistentvolume/pv03-3gi created

[[email protected] ~]# kubectl get persistentvolume

NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
pv01-10m   10M        RWX            Retain           Available           nfs                     45s
pv02-1gi   1Gi        RWX            Retain           Available           nfs                     45s
pv03-3gi   3Gi        RWX            Retain           Available           nfs                     45s

[[email protected] ~]#

The three directories correspond to three PVs with capacities of 10M, 1Gi, and 3Gi. Together these three PVs form a PV pool.

Create the PVC, pvc.yml:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
  storageClassName: nfs

[[email protected] ~]# kubectl apply -f pvc.yml

persistentvolumeclaim/nginx-pvc created

[[email protected] ~]# kubectl get pvc,pv

NAME                              STATUS   VOLUME     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/nginx-pvc   Bound    pv02-1gi   1Gi        RWX            nfs            14m

NAME                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS   REASON   AGE
persistentvolume/pv01-10m   10M        RWX            Retain           Available                       nfs                     17m
persistentvolume/pv02-1gi   1Gi        RWX            Retain           Bound       default/nginx-pvc   nfs                     17m
persistentvolume/pv03-3gi   3Gi        RWX            Retain           Available                       nfs                     17m

[[email protected] ~]#

The 200Mi PVC is bound to pv02-1gi, the smallest PV in the pool that can satisfy the request (10M is too small, and 1Gi is a closer fit than 3Gi), and its status becomes Bound. When you create a Pod or Deployment, using this PVC persists the data to the PV, and every node that mounts the NFS share sees the same data.
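The binding result shown above can be reproduced with a small sketch that picks the smallest PV whose capacity covers the request. This only illustrates the outcome and is not the Kubernetes binding controller: it ignores access modes, storage class names, and selectors, and it handles only the quantity suffixes used in this article. The function names `to_bytes` and `pick_pv` are hypothetical.

```shell
#!/usr/bin/env bash
# Convert a Kubernetes quantity with an M, Mi, G, or Gi suffix to bytes.
# Only the suffixes used in this article are handled.
to_bytes() {
  local q="$1"
  case "$q" in
    *Gi) echo $(( ${q%Gi} * 1024 * 1024 * 1024 )) ;;
    *Mi) echo $(( ${q%Mi} * 1024 * 1024 )) ;;
    *G)  echo $(( ${q%G} * 1000 * 1000 * 1000 )) ;;
    *M)  echo $(( ${q%M} * 1000 * 1000 )) ;;
    *)   echo "$q" ;;
  esac
}

# Print the name of the smallest PV whose capacity covers the request.
# Arguments: the requested size, then name=capacity pairs.
pick_pv() {
  local request best="" best_size=0 pair name size
  request="$(to_bytes "$1")"; shift
  for pair in "$@"; do
    name="${pair%%=*}"
    size="$(to_bytes "${pair#*=}")"
    if [ "$size" -ge "$request" ] && { [ -z "$best" ] || [ "$size" -lt "$best_size" ]; }; then
      best="$name"; best_size="$size"
    fi
  done
  [ -n "$best" ] && echo "$best"
}

# The pool and the request from this article.
pick_pv 200Mi pv01-10m=10M pv02-1gi=1Gi pv03-3gi=3Gi
```

With the article's pool, the 10M volume is too small for a 200Mi request, so the sketch selects pv02-1gi, matching the `kubectl get pvc,pv` output.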

2. Dynamic PV creation

Configure a default storage class for dynamic provisioning, sc.yml:

# Create a storage class
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"  ## whether to archive the PV's contents when the PV is deleted

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2
          # resources:
          #   limits:
          #     cpu: 10m
          #   requests:
          #     cpu: 10m
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 172.31.0.2  ## your own NFS server address
            - name: NFS_PATH
              value: /nfs/data  ## directory shared by the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.31.0.2
            path: /nfs/data

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

Note: change the IP addresses here to your own. The image address has been switched to an Alibaba Cloud registry so that the image pull does not fail.
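The NFS server address appears more than once in sc.yml (in the `NFS_SERVER` variable and in the `nfs` volume), so it is easy to miss one when editing by hand. The following is a minimal sketch of replacing every occurrence in one pass; the helper name `set_nfs_server` and the address `10.0.0.5` are placeholders for illustration.

```shell
#!/usr/bin/env bash
# Replace the article's example NFS server address in a manifest read from stdin.
# Dots are escaped so they match literally rather than as regex wildcards.
set_nfs_server() {
  local new_ip="$1"
  sed "s/172\.31\.0\.2/${new_ip}/g"
}

# Example with a single manifest line; 10.0.0.5 is a placeholder address.
echo "server: 172.31.0.2" | set_nfs_server 10.0.0.5
```

On a real cluster the whole file could be piped through it, for example `set_nfs_server 10.0.0.5 < sc.yml | kubectl apply -f -`.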

[[email protected] ~]# kubectl apply -f sc.yml

storageclass.storage.k8s.io/nfs-storage created

deployment.apps/nfs-client-provisioner created

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

[[email protected] ~]#

Confirm that the configuration is in effect

[[email protected] ~]# kubectl get sc

NAME                    PROVISIONER                                   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
nfs-storage (default)   k8s-sigs.io/nfs-subdir-external-provisioner   Delete          Immediate           false                  6s

[[email protected] ~]#
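A PVC that omits `storageClassName` relies on the cluster having a default storage class, so it is worth checking which class carries the `(default)` marker. The sketch below extracts that name from `kubectl get sc` output; the helper name `default_storage_class` is hypothetical, and the sample input is copied from the output above.

```shell
#!/usr/bin/env bash
# Read `kubectl get sc` output on stdin and print the name of each default class.
# kubectl marks a default class by printing "(default)" after its name.
default_storage_class() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}

# Sample input copied from the output above; on a cluster, pipe `kubectl get sc` instead.
default_storage_class <<'EOF'
NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
nfs-storage (default) k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 6s
EOF
```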

Test dynamic provisioning, pvc.yml:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi

[[email protected] ~]# kubectl apply -f pvc.yml

persistentvolumeclaim/nginx-pvc created

[[email protected] ~]# kubectl get pvc

NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
nginx-pvc   Bound    pvc-7b01bc33-826d-41d0-a990-8c1e7c997e6f   200Mi      RWX            nfs-storage    9s

[[email protected] ~]# kubectl get pv

NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS   REASON   AGE
pvc-7b01bc33-826d-41d0-a990-8c1e7c997e6f   200Mi      RWX            Delete           Bound    default/nginx-pvc   nfs-storage             16s

[[email protected] ~]#

The PVC requests 200Mi of space, so a 200Mi PV is created for it and bound, which shows the test succeeded. When you create a Pod or Deployment, using this PVC persists the data to the PV, and every node that mounts the NFS share sees the same data.
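The article does not show a workload that consumes the claim, so the following is a minimal sketch of one. It prints a Pod manifest that mounts the `nginx-pvc` claim into an nginx container. The Pod name `nginx-demo`, the `nginx` image, the volume name `html`, and the mount path are assumptions for illustration, as is the helper name `render_pvc_pod`.

```shell
#!/usr/bin/env bash
# Print a Pod manifest that mounts a PVC into an nginx container.
# The Pod name, image, volume name, and mount path are illustrative assumptions.
render_pvc_pod() {
  local pod_name="$1" claim_name="$2"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${pod_name}
spec:
  containers:
    - name: nginx
      image: nginx
      volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
  volumes:
    - name: html
      persistentVolumeClaim:
        claimName: ${claim_name}
EOF
}

# Print the manifest for the claim created above.
render_pvc_pod nginx-demo nginx-pvc
```

On a cluster, the output could be applied with `render_pvc_pod nginx-demo nginx-pvc | kubectl apply -f -`. Files the container writes under the mount path would then land in the directory that backs the bound PV on the NFS server.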

Summary

In short, NFS, PVs, and PVCs give a Kubernetes cluster its data storage support: whichever node an application is deployed on, the data written earlier can still be read.


Copyright notice

This article was created by [Silly [email protected][email protected]]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/188/202207071703098198.html

边栏推荐

- 2022年推荐免费在线接收短信平台(国内、国外)

- 嵌入式面试题(算法部分)

- 2022-07-04 matlab读取视频帧并保存

- Scientists have observed for the first time that the "electron vortex" helps to design more efficient electronic products

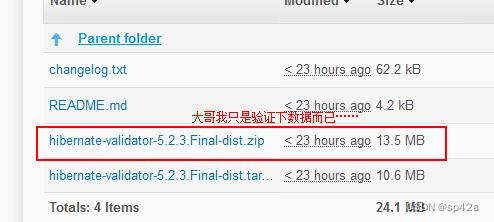

- 數據驗證框架 Apache BVal 再使用

- Complete e-commerce system

- Cloud security daily 220707: Cisco Expressway series and telepresence video communication server have found remote attack vulnerabilities and need to be upgraded as soon as possible

- Basic concepts and properties of binary tree

- Redis

- [tpm2.0 principle and Application guide] Chapter 9, 10 and 11

猜你喜欢

Former richest man, addicted to farming

Command mode - unity

![Interview vipshop internship testing post, Tiktok internship testing post [true submission]](/img/69/b27255c303150430df467ff3b5cd08.gif)

Interview vipshop internship testing post, Tiktok internship testing post [true submission]

Reuse of data validation framework Apache bval

2022上半年朋友圈都在传的10本书,找到了

抢占周杰伦

99% of people don't know that privatized deployment is also a permanently free instant messaging software!

多个kubernetes集群如何实现共享同一个存储

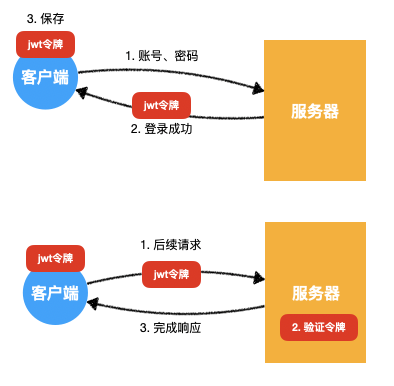

6.关于jwt

![[Tawang methodology] Tawang 3W consumption strategy - U & a research method](/img/63/a8c08ac6ec7d654159e5fc8b4423e4.png)

[Tawang methodology] Tawang 3W consumption strategy - U & a research method

随机推荐

学习open62541 --- [67] 添加自定义Enum并显示名字

Antisamy: a solution against XSS attack tutorial

【Base64笔记】「建议收藏」

POJ 1182 :食物链(并查集)[通俗易懂]

Desci: is decentralized science the new trend of Web3.0?

国内首次!这家中国企业的语言AI实力被公认全球No.2!仅次于谷歌

初识缓存以及ehcache初体验「建议收藏」

Big Ben (Lua)

Differences between rip and OSPF and configuration commands

Numpy——2.数组的形状

[tpm2.0 principle and Application guide] Chapter 16, 17 and 18

直播预约通道开启!解锁音视频应用快速上线的秘诀

Redis publishing and subscription

SlashData开发者工具榜首等你而定!!!

CVPR 2022 - learning non target knowledge for semantic segmentation of small samples

Draw squares with Obama (Lua)

POJ 2392 Space Elevator

企业展厅设计中常用的三种多媒体技术形式

嵌入式面试题(算法部分)

A hodgepodge of ICER knowledge points (attached with a large number of topics, which are constantly being updated)