
Calculation of the Loss in YOLOv3

2022-07-05 11:42:00 【Network starry sky (LUOC)】


YOLOv1 is anchor-free. YOLOv2 introduced anchors and, together with its other changes, raised mAP on VOC2007 by more than 10 percentage points. YOLOv3 still uses anchors. This article covers the loss computation in the ultralytics version of YOLOv3. This loss differs considerably from the one in the original paper, and the way it is built is selected through the arc parameter. If you want to see the original loss code, you can download the v6 source from the releases page below.

Github Address : https://github.com/ultralytics/yolov3

Github release: https://github.com/ultralytics/yolov3/releases

1. Anchor

In Faster R-CNN the sizes and aspect ratios of the anchors are designed by hand, so they may not fit the dataset and can hurt model performance. YOLOv2 and YOLOv3 instead run a clustering algorithm (k-means) over the ground-truth boxes to obtain the k best-fitting boxes. The clustering distance is defined through IoU: the larger the IoU, the smaller the distance between two boxes.

d(box,centroid)=1−IoU(box,centroid)
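The distance above can be sketched in a few lines of plain Python. This is only an illustration, assuming boxes are compared by width and height alone (aligned at a shared corner); the helper names wh_iou, cluster_distance and nearest_centroid and the sample boxes are hypothetical, not taken from the YOLO code.

```python
def wh_iou(box, centroid):
    """IoU of two (width, height) boxes, assumed to share the same corner."""
    inter = min(box[0], centroid[0]) * min(box[1], centroid[1])
    union = box[0] * box[1] + centroid[0] * centroid[1] - inter
    return inter / union


def cluster_distance(box, centroid):
    """d(box, centroid) = 1 - IoU(box, centroid)."""
    return 1.0 - wh_iou(box, centroid)


def nearest_centroid(box, centroids):
    """Index of the centroid with the smallest IoU distance to the box."""
    return min(range(len(centroids)),
               key=lambda k: cluster_distance(box, centroids[k]))


# Made-up boxes and centroids, just to exercise the assignment step of k-means.
boxes = [(10, 12), (100, 90), (35, 60)]
centroids = [(12, 12), (90, 100), (30, 60)]
assignments = [nearest_centroid(b, centroids) for b in boxes]
```

A full k-means run would alternate this assignment step with recomputing each centroid from the boxes assigned to it.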

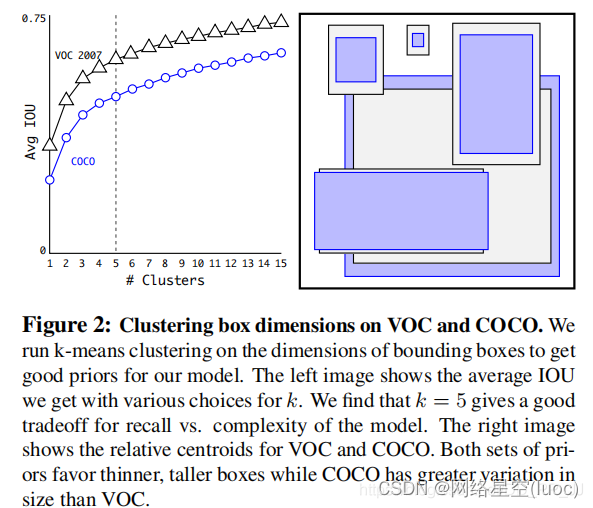

The more anchors there are, the higher the average IoU and the better the result, but the computational cost grows as well. The YOLOv2 paper plots the number of clusters against the average IoU, and YOLOv2 chose 5 anchors as a balance between accuracy and speed.

Figure: number of clustered anchors versus average IoU in YOLOv2

2. Offset formula

In Faster R-CNN, the offset formulas for the center coordinates are:

$$x = t_x \cdot w_a + x_a, \qquad y = t_y \cdot h_a + y_a$$

Here x_a and y_a are the center coordinates of the anchor, w_a and h_a are its width and height, and t_x and t_y are the offsets predicted by the model for the anchor relative to the ground truth. The computed x and y are the center coordinates of the final predicted box.

In YOLOv2 and YOLOv3 the offset is constrained. If it were unconstrained, the center of the predicted box could end up anywhere in the image, which can make training unstable.

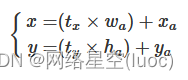

Meaning of the formula

Referring to the figure above:

cx and cy are the coordinates of the top-left corner of the grid cell that contains the center point.

pw and ph are the width and height of the anchor.

σ(tx) and σ(ty) are the distances from the center of the predicted box to the top-left corner of that cell. σ is the sigmoid function, which confines the offset to the current grid cell and helps the model converge.

tw and th are the predicted width and height offsets. The anchor's width and height are multiplied by the exponentials e^tw and e^th to adjust the anchor's size.

σ(to) is the predicted confidence: the probability that the current box contains an object multiplied by the IoU between the bounding box and the ground truth.
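The decoding described above can be written out as a small sketch. It assumes the standard YOLOv2/YOLOv3 form bx = σ(tx) + cx, by = σ(ty) + cy, bw = pw·e^tw, bh = ph·e^th, with everything expressed in grid-cell units; the function name decode_box and the sample values are illustrative only.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(t, cell, anchor):
    """Turn raw outputs (tx, ty, tw, th) into a box (bx, by, bw, bh) in grid units."""
    tx, ty, tw, th = t
    cx, cy = cell      # top-left corner of the responsible grid cell
    pw, ph = anchor    # anchor width and height in grid units
    bx = sigmoid(tx) + cx      # the center stays inside the cell
    by = sigmoid(ty) + cy
    bw = pw * math.exp(tw)     # the anchor is rescaled, never negative
    bh = ph * math.exp(th)
    return bx, by, bw, bh


# Zero offsets place the center in the middle of the cell and keep the anchor size.
box = decode_box((0.0, 0.0, 0.0, 0.0), cell=(6, 4), anchor=(3.625, 2.8125))
```

Because the sigmoid output lies in (0, 1), the predicted center can never leave the cell at (cx, cy), which is the constraint the text refers to.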

3. Loss

The original YOLOv3 has a parameter called ignore_thresh. In the ultralytics version of YOLOv3 the corresponding parameter is iou_t in train.py (default 0.225).

Positive and negative samples are determined by the following rules:

If the maximum IoU between a predicted box and all ground truths is below ignore_thresh, the predicted box is a negative sample.

If the center point of a ground truth falls inside a grid cell, that cell is responsible for detecting the object. The box with the largest IoU with the object is taken as the positive sample. (ignore_thresh is not applied here: even if the largest IoU is below ignore_thresh, that box is still a positive sample.)

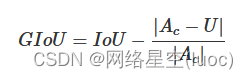

In YOLOv3 the loss consists of three parts:

- the xywh error, i.e. the loss from the bbox

- the confidence error, i.e. the loss from obj

- the classification error, i.e. the loss from class

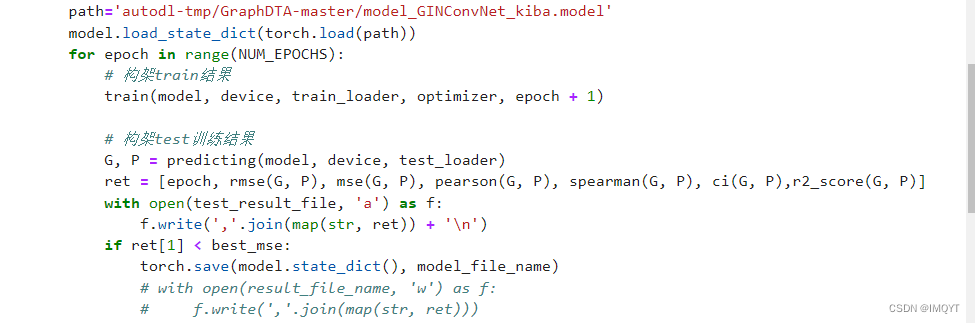

In the code these correspond to lbox, lobj and lcls. The loss formula used in YOLOv3 is as follows:

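$$
\begin{aligned}
lbox &= \lambda_{coord} \sum_{i=0}^{S^2} \sum_{j=0}^{B} 1_{i,j}^{obj} \, (2 - w_i \times h_i) \left[ (x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2 + (w_i - \hat{w}_i)^2 + (h_i - \hat{h}_i)^2 \right] \\
lcls &= \lambda_{class} \sum_{i=0}^{S^2} \sum_{j=0}^{B} 1_{i,j}^{obj} \sum_{c \in classes} p_i(c) \log(\hat{p}_i(c)) \\
lobj &= \lambda_{noobj} \sum_{i=0}^{S^2} \sum_{j=0}^{B} 1_{i,j}^{noobj} (c_i - \hat{c}_i)^2 + \lambda_{obj} \sum_{i=0}^{S^2} \sum_{j=0}^{B} 1_{i,j}^{obj} (c_i - \hat{c}_i)^2 \\
loss &= lbox + lobj + lcls
\end{aligned}
$$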

where:

S: the grid size; S² stands for 13x13, 26x26 or 52x52

B: the number of boxes predicted per grid cell

1obj i,j: equals 1 if the box at i,j contains an object, otherwise 0

1noobj i,j: equals 1 if the box at i,j contains no object, otherwise 0

BCE (binary cross entropy) is computed as follows, where x is the predicted probability and y is the target:

$$BCE(x, y) = -\left[ y \log(x) + (1 - y) \log(1 - x) \right]$$

The above is the loss from the paper, as implemented for YOLOv3 in darknet. The PyTorch version of YOLOv3 changes it substantially, and much of it can be adjusted through parameters.

It is divided into three parts for specific analysis :

1. lbox part

stay ultralytics Version of YOLOv3 in , It uses GIOU, See GIOU Explain Links .

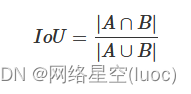

It's a simple formula ,IoU The formula is as follows :

and GIoU The formula is as follows :

among Ac

Represents the minimum closure area of two boxes , That is, the area of the smallest box containing both the prediction box and the real box .
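To make the two definitions concrete, here is a minimal pure-Python sketch for axis-aligned boxes given as (x1, y1, x2, y2). It is not the repository's bbox_iou function; the name giou and the box format are assumptions chosen for illustration.

```python
def giou(a, b):
    """GIoU of two boxes in (x1, y1, x2, y2) format with positive area."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b

    # Intersection (zero when the boxes do not overlap).
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih

    # Union U and plain IoU.
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union

    # A_c: area of the smallest box enclosing both inputs.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c_area = cw * ch

    return iou - (c_area - union) / c_area


same = giou((0, 0, 2, 2), (0, 0, 2, 2))       # identical boxes
apart = giou((0, 0, 1, 1), (2, 0, 3, 1))      # disjoint boxes with a gap between them
```

Unlike plain IoU, which is 0 for every pair of disjoint boxes, GIoU becomes negative and keeps shrinking as the boxes move apart, so the loss 1 - GIoU still provides a training signal when there is no overlap.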

The yolov3 code provides IoU, GIoU, DIoU, CIoU and other variants. Taking GIoU as an example:

    if GIoU:  # Generalized IoU https://arxiv.org/pdf/1902.09630.pdf
        c_area = cw * ch + 1e-16  # convex area (smallest enclosing box)
        return iou - (c_area - union) / c_area  # GIoU

The code matches the GIoU formula. Now look at how lbox is computed:

    giou = bbox_iou(pbox.t(), tbox[i], x1y1x2y2=False, GIoU=True)
    lbox += (1.0 - giou).sum() if red == 'sum' else (1.0 - giou).mean()

So the box loss is 1 - giou.

2. lobj part

lobj is the confidence loss, that is, the loss on the probability that the bounding box contains an object. In the yolov3 code the obj loss is selected through arc, and there are two modes:

In default mode, BCEWithLogitsLoss is used and obj loss and cls loss are computed separately:

    BCEobj = nn.BCEWithLogitsLoss(pos_weight=ft([h['obj_pw']]), reduction=red)
    if 'default' in arc:  # separate obj and cls
        lobj += BCEobj(pi[..., 4], tobj)  # obj loss
        # pi[..., 4] is the confidence that the box contains an object;
        # BCE is computed between it and the giou values stored in tobj,
        # so obj loss and cls loss are computed separately

In BCE mode, BCEWithLogitsLoss is also used, but it is applied to all class outputs:

    BCE = nn.BCEWithLogitsLoss(reduction=red)
    elif 'BCE' in arc:  # unified BCE (80 classes)
        t = torch.zeros_like(pi[..., 5:])  # targets
        if nb:
            t[b, a, gj, gi, tcls[i]] = 1.0  # set the class confidence of positive samples to 1
        lobj += BCE(pi[..., 5:], t)  # pi[..., 5:] holds all class outputs

3. lcls part

In the single-class case, cls loss = 0.

In the multi-class case there are again two modes:

In default mode, BCEWithLogitsLoss is used to compute the class loss:

    BCEcls = nn.BCEWithLogitsLoss(pos_weight=ft([h['cls_pw']]), reduction=red)
    # cls loss is only computed when there are multiple classes, not for a single class
    if 'default' in arc and model.nc > 1:
        t = torch.zeros_like(ps[:, 5:])  # targets
        t[range(nb), tcls[i]] = 1.0  # set the matched class to 1
        lcls += BCEcls(ps[:, 5:], t)  # class loss via BCE

In CE mode, CrossEntropy is used to compute obj loss and cls loss together:

    CE = nn.CrossEntropyLoss(reduction=red)
    elif 'CE' in arc:  # unified CE (1 background + 80 classes)
        t = torch.zeros_like(pi[..., 0], dtype=torch.long)  # targets
        if nb:
            t[b, a, gj, gi] = tcls[i] + 1  # classes count from zero, so +1 (0 is background)
        lcls += CE(pi[..., 4:].view(-1, model.nc + 1), t.view(-1))
        # obj loss and cls loss are computed together with CrossEntropy loss
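The two modes build their class targets differently: default mode uses a one-hot vector per positive sample, while CE mode uses a single integer label in which 0 is reserved for background. The following sketch shows just that difference in plain Python; the helper names bce_class_target and ce_class_target are hypothetical and do not appear in the repository.

```python
def bce_class_target(cls, num_classes):
    """One-hot target for the per-class BCE of default mode."""
    target = [0.0] * num_classes
    target[cls] = 1.0
    return target


def ce_class_target(cls=None):
    """Integer target for CE mode: 0 means background, class k becomes k + 1."""
    return 0 if cls is None else cls + 1


default_target = bce_class_target(2, num_classes=4)
ce_positive = ce_class_target(2)       # a positive sample of class 2
ce_background = ce_class_target(None)  # a location with no object
```

With the integer labels, a single softmax over nc + 1 outputs decides both whether an object is present and which class it belongs to, which is why CE mode needs no separate obj loss.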

The above three parts are summarized in the following figure :

4. Code

The loss in the ultralytics version of yolov3 differs quite a lot from what the paper proposes, and many parts of the code come from the author's experience. Also, the code read here is the author's version from February 2020. Anyone who follows this repository knows that the author updates it very quickly. By the time this article was written the loss had changed substantially again: label smoothing and other features were added, and the mechanism of adjusting the loss through arc was removed to simplify the loss part.

The code in this section carries many added comments, many of which come from stepping through it in a debugger. The debug configuration was:

- Single-class dataset, class=1

- batch size=2

- The model is yolov3.cfg

The loss computation code can be roughly divided into two parts: positive and negative sample selection, and the loss calculation itself.

1. Positive and negative sample selection part

The main job of this part is to match the preset anchors with the ground truths in each yolo layer to obtain positive samples. Recall the selection rules for positive and negative samples in YOLOv3 described above:

- If the maximum IoU between a predicted box and all ground truths is below ignore_thresh, the predicted box is a negative sample.

- If the center point of a ground truth falls inside a grid cell, that cell is responsible for detecting the object. The box with the largest IoU with the object is taken as the positive sample. (ignore_thresh is not applied here: even if the largest IoU is below ignore_thresh, that box is still a positive sample.)

    def build_targets(model, targets):
        # targets = [image, class, x, y, w, h]
        # image is a number: the index of the picture within the current batch
        # x, y, w, h are normalized (divided by the image width or height)
        nt = len(targets)
        tcls, tbox, indices, av = [], [], [], []
        multi_gpu = type(model) in (nn.parallel.DataParallel,
                                    nn.parallel.DistributedDataParallel)
        reject, use_all_anchors = True, True
        for i in model.yolo_layers:
            # yolov3.cfg has three yolo layers; fetch the grid size and anchor sizes of this layer
            # ng: number of grid cells, e.g. (13, 13); anchor_vec: [[w, h], [w, h], ...]
            # Note on anchor_vec: for the first yolo layer (downsample rate = 32)
            # the anchors are [116, 90], [156, 198], [373, 326]
            # anchor_vec holds them divided by 32: about [3.6, 2.8], [4.875, 6.18], [11.6, 10.1]
            # so [116, 90] on the 416x416 input corresponds to [3.6, 2.8]
            # on the 13x13 feature map after 32x downsampling
            if multi_gpu:
                ng = model.module.module_list[i].ng
                anchor_vec = model.module.module_list[i].anchor_vec
            else:
                ng = model.module_list[i].ng
                anchor_vec = model.module_list[i].anchor_vec

            # iou of targets-anchors
            # targets holds the ground truths
            t, a = targets, []
            gwh = t[:, 4:6] * ng[0]
            if nt:  # if there are targets
                # anchor_vec: shape [3, 2], 3 anchors
                # gwh: shape [2, 2], 2 ground truths
                # iou: shape [3, 2], IoU of each of the 3 anchors with each of the 2 ground truths
                iou = wh_iou(anchor_vec, gwh)  # IoU between prior boxes and GT

                if use_all_anchors:
                    na = len(anchor_vec)  # number of anchors
                    a = torch.arange(na).view((-1, 1)).repeat([1, nt]).view(-1)
                    # builds a 3x2 tensor and flattens it to 6: a = [0, 0, 1, 1, 2, 2]
                    t = targets.repeat([na, 1])
                    # targets: [image, cls, x, y, w, h], repeated 3 times: shape [2, 6] -> [6, 6]
                    gwh = gwh.repeat([na, 1])
                    # gwh shape: [6, 2]
                else:  # use best anchor only
                    iou, a = iou.max(0)  # best iou and anchor
                    # taking the max IoU is darknet's default; a is the index of the best anchor

                # reject anchors below iou_thres (OPTIONAL, increases P, lowers R)
                if reject:
                    # drop every match whose IoU is below the threshold (ignore_thresh)
                    j = iou.view(-1) > model.hyp['iou_t']  # iou threshold hyperparameter
                    t, a, gwh = t[j], a[j], gwh[j]

            # Indices
            b, c = t[:, :2].long().t()  # target image, class
            # takes [image, class] out of targets [image, class, x, y, w, h]
            gxy = t[:, 2:4] * ng[0]  # grid x, y
            gi, gj = gxy.long().t()  # grid x, y indices
            # long() truncates to integers: the top-left corner of the grid cell
            indices.append((b, a, gj, gi))
            # contents of each indices entry:
            #   b: index of the image within the batch
            #   a: index of the anchor of the selected positive sample
            #   gj, gi: coordinates of the top-left corner of the selected grid cell

            # Box
            gxy -= gxy.floor()  # xy
            # gxy now holds the offset inside the cell, which is what YOLO has to fit
            tbox.append(torch.cat((gxy, gwh), 1))  # xywh (grids)
            # stores the offset and the width/height (in 13x13 grid units)
            av.append(anchor_vec[a])  # anchor vec
            # av is short for anchor vec: the list of matched anchors

            # Class
            tcls.append(c)
            # tcls stores the classes of the matched targets
            if c.shape[0]:  # if any targets
                assert c.max() < model.nc, 'Model accepts %g classes labeled from 0-%g, however you labelled a class %g. ' \
                                           'See https://github.com/ultralytics/yolov3/wiki/Train-Custom-Data' % (
                                               model.nc, model.nc - 1, c.max())

        return tcls, tbox, indices, av

To recap the matching process in each YOLO layer:

The ground truths are matched against the anchors to obtain their IoU.

There are then two ways to match:

- Use the matching mechanism of the original yolov3 and take only the anchor with the largest IoU as the positive sample

- Use the default matching mechanism of the ultralytics version of yolov3 (use_all_anchors=True) and select all matching pairs

The matches are then filtered, which corresponds to the ignore_thresh part of the original yolo: matches with iou<ignore_thresh are screened out.

Finally the matched content is returned to the compute_loss function.
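The matching steps above can be traced with plain Python lists instead of tensors. This is a simplified sketch of the use_all_anchors=True path followed by the IoU filter, not the repository code: match_targets and the dictionary layout are hypothetical, the anchors are the ones quoted for the stride-32 layer, and 0.225 is the iou_t default mentioned earlier.

```python
def wh_iou(wh1, wh2):
    """IoU of two boxes given only by (width, height), assumed to share a corner."""
    inter = min(wh1[0], wh2[0]) * min(wh1[1], wh2[1])
    return inter / (wh1[0] * wh1[1] + wh2[0] * wh2[1] - inter)


def match_targets(targets, anchors, grid, iou_t=0.225):
    """Pair every target with every anchor, then keep pairs with IoU > iou_t.

    targets: list of (image, cls, x, y, w, h) with x, y, w, h normalized to [0, 1]
    anchors: list of (w, h) in grid units for one yolo layer
    """
    matches = []
    for a, anchor in enumerate(anchors):  # anchor-major order, like a = [0, 0, 1, 1, 2, 2]
        for image, cls, x, y, w, h in targets:
            gwh = (w * grid, h * grid)            # target size in grid units
            if wh_iou(anchor, gwh) <= iou_t:      # reject weak matches
                continue
            gx, gy = x * grid, y * grid
            gi, gj = int(gx), int(gy)             # top-left corner of the cell
            matches.append({
                "image": image, "anchor": a, "cls": cls, "gi": gi, "gj": gj,
                "box": (gx - gi, gy - gj, gwh[0], gwh[1]),  # offset in cell + size
            })
    return matches


anchors = [(116 / 32, 90 / 32), (156 / 32, 198 / 32), (373 / 32, 326 / 32)]
targets = [(0, 0, 0.5, 0.5, 0.3, 0.25)]   # one made-up ground truth
matches = match_targets(targets, anchors, grid=13)
```

In this example the target is 3.9 x 3.25 grid cells in size, so the two smaller anchors pass the threshold while the largest one is rejected, and one ground truth yields two positive samples.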

2. loss Calculation part

This part is the core loss calculation in yolov3 and should be read together with the explanation above.

    def compute_loss(p, targets, model):
        # p: (bs, anchors, grid, grid, classes + xywh)
        # predictions, targets, model
        ft = torch.cuda.FloatTensor if p[0].is_cuda else torch.Tensor
        lcls, lbox, lobj = ft([0]), ft([0]), ft([0])
        tcls, tbox, indices, anchor_vec = build_targets(model, targets)
        # Taking yolov3 with its three yolo layers as an example:
        #   tcls: a list of three tensors, each holding 6 (2 gt x 3 anchors) class numbers
        #   tbox: a list of three tensors, each of shape [6, 4]: 6 (2 gt x 3 anchors) bboxes
        #   indices: a list of three tuples, each holding 4 tensors:
        #     b: index of the image within the batch
        #     a: index of the anchor of the selected positive sample
        #     gj, gi: coordinates of the top-left corner of the selected grid cell
        #   anchor_vec: a list of three tensors, each of shape [6, 2]: 6 (2 gt x 3 anchors) anchors,
        #     with sizes relative to the feature map (e.g. 13x13)
        h = model.hyp  # hyperparameters
        arc = model.arc  # (default, uCE, uBCE) detection architectures
        # arc decides which loss functions are used
        red = 'sum'  # Loss reduction (sum or mean)

        # Define criteria
        BCEcls = nn.BCEWithLogitsLoss(pos_weight=ft([h['cls_pw']]), reduction=red)
        BCEobj = nn.BCEWithLogitsLoss(pos_weight=ft([h['obj_pw']]), reduction=red)
        # BCEWithLogitsLoss = sigmoid + BCELoss
        BCE = nn.BCEWithLogitsLoss(reduction=red)
        CE = nn.CrossEntropyLoss(reduction=red)  # weight=model.class_weights

        # class label smoothing https://arxiv.org/pdf/1902.04103.pdf eqn 3
        # cp, cn = smooth_BCE(eps=0.0)
        # label smoothing is available in the latest version; it only applies to multi-class problems

        if 'F' in arc:  # add focal loss
            g = h['fl_gamma']
            BCEcls, BCEobj, BCE, CE = FocalLoss(BCEcls, g), FocalLoss(
                BCEobj, g), FocalLoss(BCE, g), FocalLoss(CE, g)
            # focal loss can be applied to the cls loss or the obj loss

        # Compute losses
        np, ng = 0, 0  # number grid points, targets
        # the name np is an odd choice because it clashes with the usual numpy alias
        for i, pi in enumerate(p):  # layer index, layer predictions
            # in yolov3, p holds the outputs pi of the three yolo layers
            # shape: (bs, anchors, grid, grid, classes + xywh)
            b, a, gj, gi = indices[i]  # image, anchor, gridy, gridx
            tobj = torch.zeros_like(pi[..., 0])
            # tobj = target obj, shape (bs, anchors, grid, grid)
            np += tobj.numel()  # number of elements in tobj

            # Compute losses
            nb = len(b)
            if nb:
                ng += nb  # number of targets, used later to average the loss
                # (bs, anchors, grid, grid, classes + xywh)
                ps = pi[b, a, gj, gi]  # predictions at the matched positions, shape [6 (2x3), 6]

                # GIoU
                pxy = torch.sigmoid(ps[:, 0:2])  # sigmoid on x, y
                # pxy = pxy * s - (s - 1) / 2, s = 1.5 (scale_xy)
                pwh = torch.exp(ps[:, 2:4]).clamp(max=1E3) * anchor_vec[i]
                # clamp prevents overflow; multiplied by the anchors in feature-map units
                # this matches the offset formula above
                pbox = torch.cat((pxy, pwh), 1)  # predicted box
                # pbox: predicted bbox, shape [6, 4]
                giou = bbox_iou(pbox.t(), tbox[i], x1y1x2y2=False,
                                GIoU=True)  # giou computation, shape [6]
                lbox += (1.0 - giou).sum() if red == 'sum' else (1.0 - giou).mean()
                # the bbox loss is determined directly by giou
                tobj[b, a, gj, gi] = giou.detach().type(tobj.dtype)
                # target obj uses giou instead of 1 as the confidence target at this position

                # cls loss is only computed when there are multiple classes, not for a single class
                if 'default' in arc and model.nc > 1:
                    t = torch.zeros_like(ps[:, 5:])  # targets
                    t[range(nb), tcls[i]] = 1.0  # set the matched class to 1
                    lcls += BCEcls(ps[:, 5:], t)  # class loss via BCE

            if 'default' in arc:  # separate obj and cls
                lobj += BCEobj(pi[..., 4], tobj)  # obj loss
                # pi[..., 4] is the confidence that the box contains an object;
                # BCE is computed between it and the giou values in tobj,
                # so obj loss and cls loss are computed separately

            elif 'BCE' in arc:  # unified BCE (80 classes)
                t = torch.zeros_like(pi[..., 5:])  # targets
                if nb:
                    t[b, a, gj, gi, tcls[i]] = 1.0  # set the class confidence of positive samples to 1
                lobj += BCE(pi[..., 5:], t)
                # pi[..., 5:] holds all class outputs

            elif 'CE' in arc:  # unified CE (1 background + 80 classes)
                t = torch.zeros_like(pi[..., 0], dtype=torch.long)  # targets
                if nb:
                    t[b, a, gj, gi] = tcls[i] + 1  # classes count from zero, so +1
                lcls += CE(pi[..., 4:].view(-1, model.nc + 1), t.view(-1))
                # obj loss and cls loss are computed together with CrossEntropy loss

        # balance the three parts with their weights; the author found these values by random search
        lbox *= h['giou']
        lobj *= h['obj']
        lcls *= h['cls']
        if red == 'sum':
            bs = tobj.shape[0]  # batch size
            lobj *= 3 / (6300 * bs) * 2
            # 6300 = (10 ** 2 + 20 ** 2 + 40 ** 2) * 3
            # a 320x320 input yields 6300 anchors
            # 3 stands for the 3 yolo layers; 2 is a hyperparameter found by experiment
            # to skip this normalization, set red = 'mean'
            if ng:
                lcls *= 3 / ng / model.nc
                lbox *= 3 / ng

        loss = lbox + lobj + lcls
        return loss, torch.cat((lbox, lobj, lcls, loss)).detach()
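The constant 6300 in the normalization follows directly from the input size, as the comments state. The short sketch below redoes that arithmetic; the helper name num_predictions is hypothetical, and the strides 32, 16 and 8 with 3 anchors per layer are assumed from the standard yolov3.cfg layout.

```python
def num_predictions(img_size, strides=(32, 16, 8), anchors_per_layer=3):
    """Total number of anchor boxes predicted over all yolo layers."""
    return sum((img_size // s) ** 2 for s in strides) * anchors_per_layer


at_320 = num_predictions(320)   # grids of 10x10, 20x20 and 40x40
at_416 = num_predictions(416)   # grids of 13x13, 26x26 and 52x52
```

For a 320x320 input the result is 6300, matching the hard-coded value, while a 416x416 input gives 10647, so the constant implicitly assumes 320x320 training images.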

Note that the balancing weights of the three loss parts are not set as in the original yolov3; they come from hyperparameter evolution. For details see: [Learn YOLOv3 from Scratch] 4. Hyperparameter Evolution in YOLOv3

5. Add

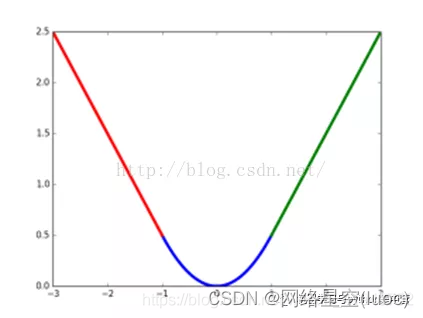

Add up BCEWithLogitsLoss Usage of , Take a look at BCELoss:

torch.nn.BCELoss The function of is the cross entropy calculation function of the binary classification task , Think of it as CrossEntropy The special case of . Its classification is limited to two categories ,y The value of must be {0,1},input It should be in the form of probability distribution . In the use of BCELoss I usually add one first sigmoid Activation layer , Commonly used in self encoder .

Calculation formula :

![ln=−wn[ynlog(xn)+(1−yn)log(1−xn)]](/img/33/50f21150d0d47b9255ad885dff964d.png)

wn It's in every category loss The weight , For class imbalance .

torch.nn.BCEWithLogitsLoss Of is equivalent to Sigmoid+BCELoss, namely input Pass by Sigmoid Activation function , take input In the form of a probability distribution .

Calculation formula :

![ln=−wn[ynlogσ(xn)+(1−yn)log(1−σ(xn))]](/img/55/9c2ea51f2d67de030c185cab20c128.png)
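The equivalence between the two formulas can be checked numerically without PyTorch. The sketch below is a scalar, pure-Python illustration; the function names are hypothetical, w plays the role of w_n, and the rewritten form max(x, 0) - x·y + log(1 + e^(-|x|)) is a standard algebraic rearrangement that avoids overflow for large |x|.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def bce(p, y, w=1.0):
    """BCE on a probability p: -w * [y*log(p) + (1-y)*log(1-p)]."""
    return -w * (y * math.log(p) + (1.0 - y) * math.log(1.0 - p))


def bce_with_logits(x, y, w=1.0):
    """Same loss computed from the raw logit x in a numerically stable form."""
    return w * (max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x))))


hard = (bce(sigmoid(0.7), 1.0), bce_with_logits(0.7, 1.0))     # target 1
soft = (bce(sigmoid(-1.2), 0.6), bce_with_logits(-1.2, 0.6))   # soft target, like a giou value
large = bce_with_logits(500.0, 0.0)   # sigmoid(500) rounds to 1.0, so bce() would fail on log(0)
```

Both pairs agree up to floating-point error. The soft target case mirrors the obj loss above, where tobj holds giou values rather than hard 0/1 labels.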

边栏推荐

- 石油化工企业安全生产智能化管控系统平台建设思考和建议

- Go language learning notes - analyze the first program

- 跨境电商是啥意思?主要是做什么的?业务模式有哪些?

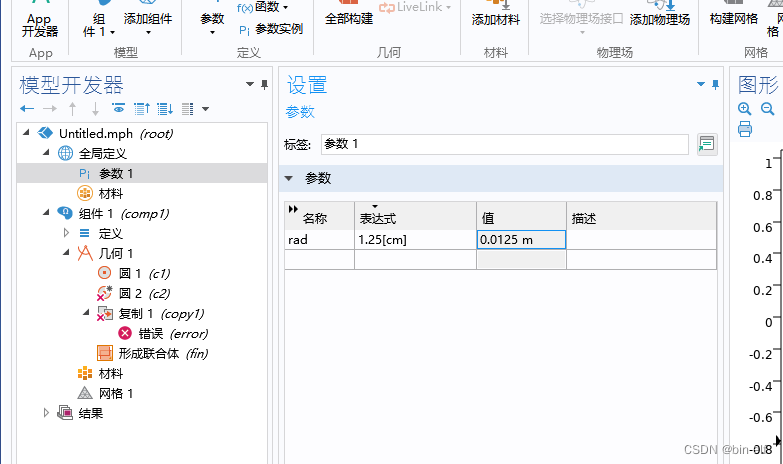

- COMSOL--建立几何模型---二维图形的建立

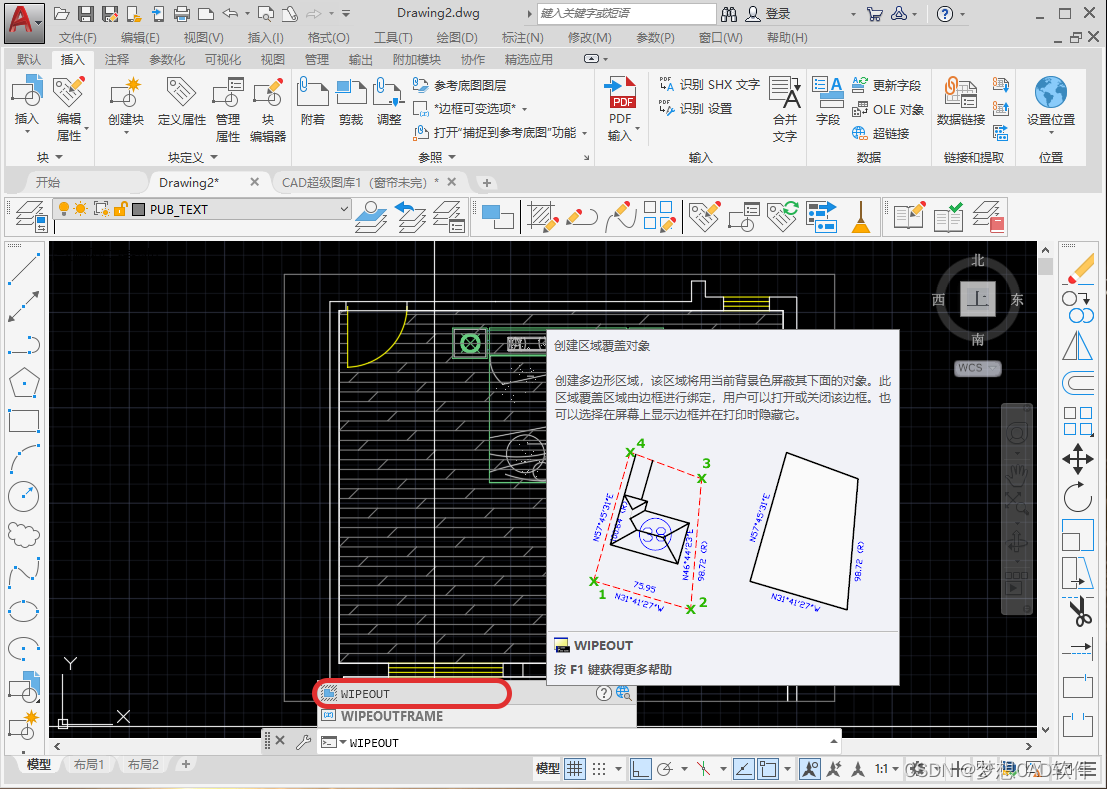

- AutoCAD -- mask command, how to use CAD to locally enlarge drawings

- C # implements WinForm DataGridView control to support overlay data binding

- 高校毕业求职难?“百日千万”网络招聘活动解决你的难题

- 15 methods in "understand series after reading" teach you to play with strings

- 12.(地图数据篇)cesium城市建筑物贴图

- SET XACT_ ABORT ON

猜你喜欢

【L1、L2、smooth L1三类损失函数】

AutoCAD -- mask command, how to use CAD to locally enlarge drawings

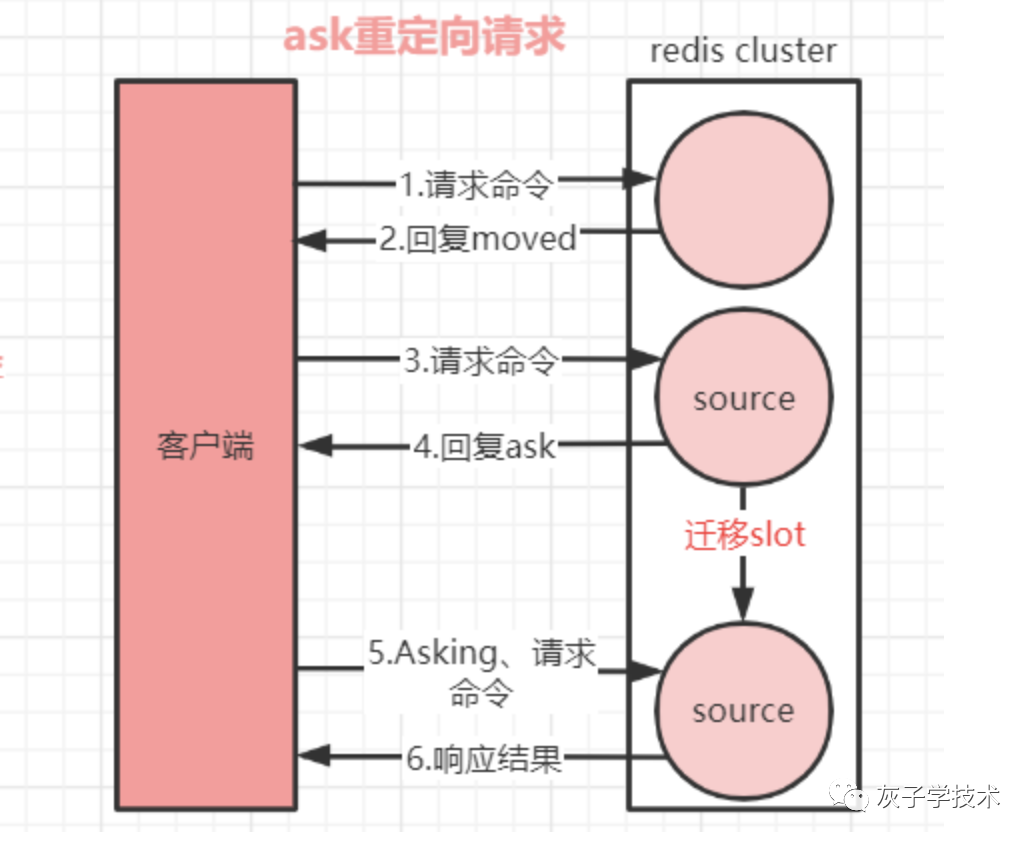

Redis集群的重定向

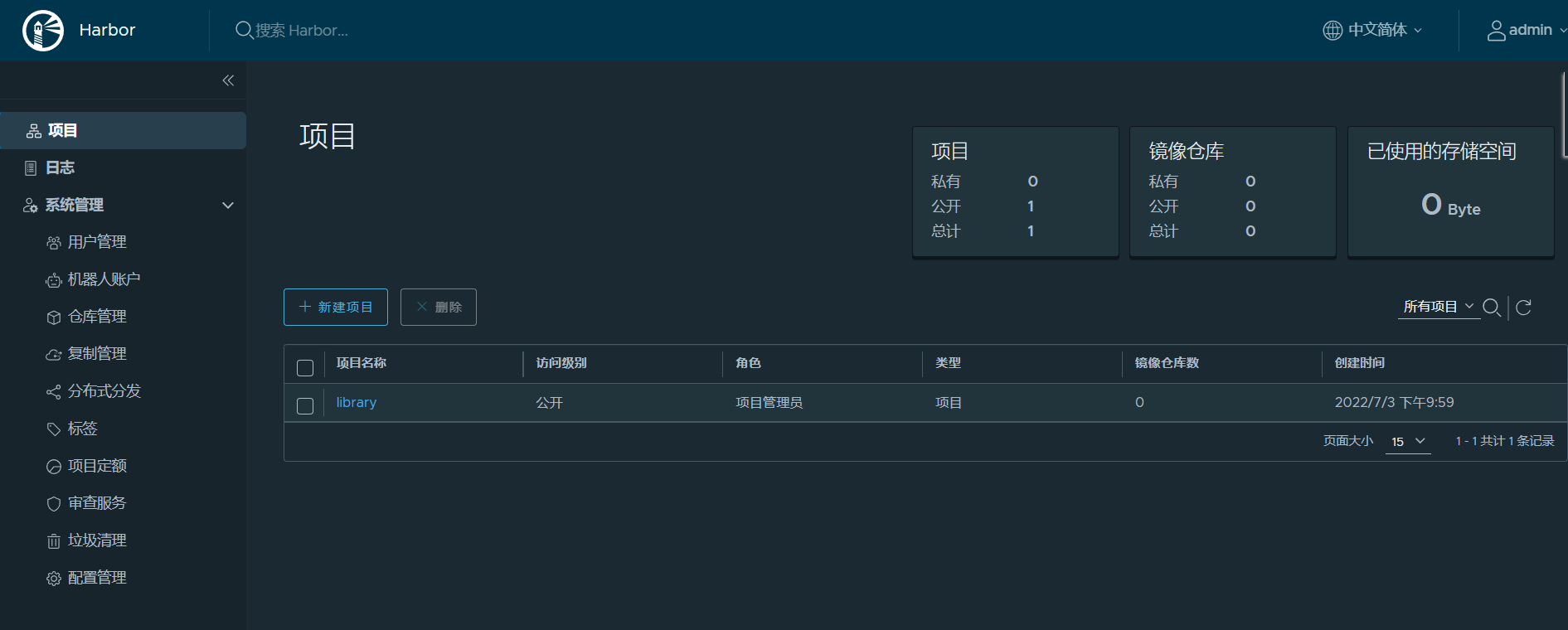

Harbor image warehouse construction

COMSOL -- establishment of geometric model -- establishment of two-dimensional graphics

The ninth Operation Committee meeting of dragon lizard community was successfully held

Ziguang zhanrui's first 5g R17 IOT NTN satellite in the world has been measured on the Internet of things

pytorch训练进程被中断了

iTOP-3568开发板NPU使用安装RKNN Toolkit Lite2

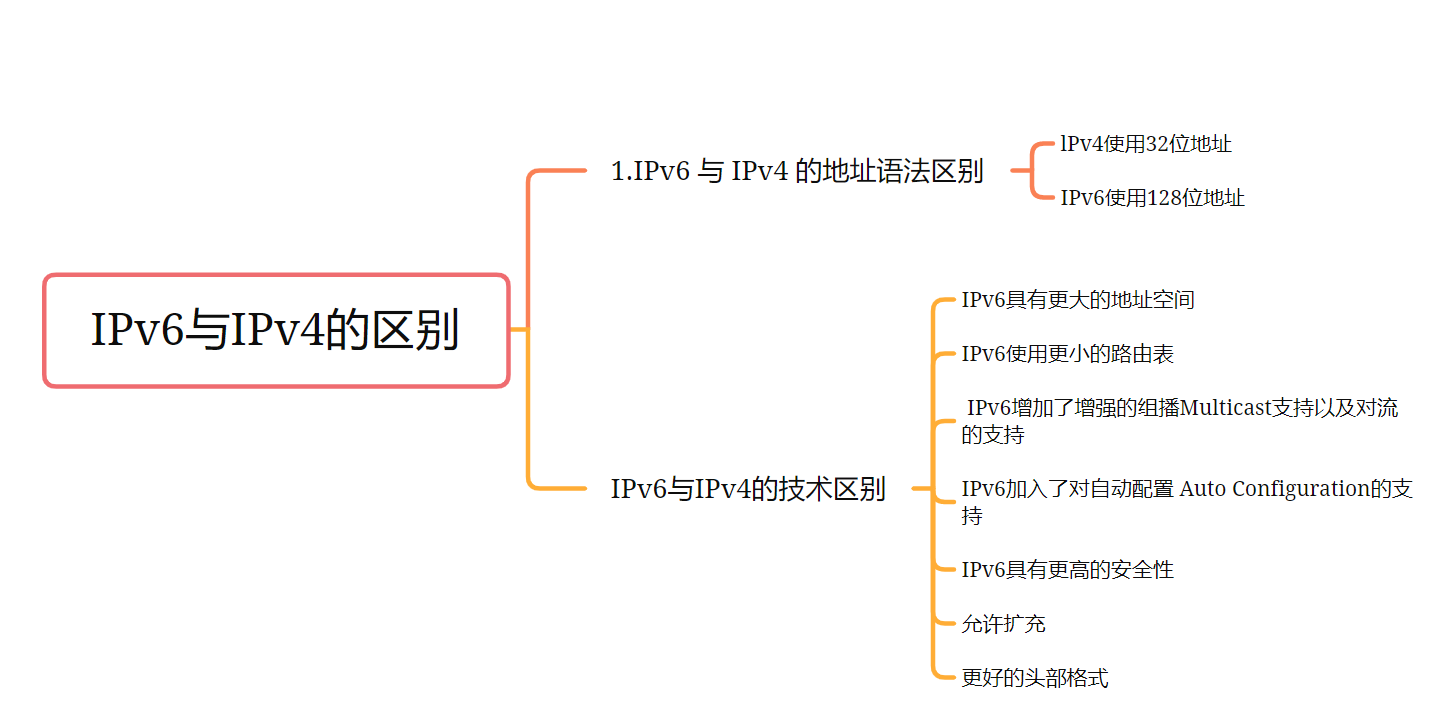

IPv6与IPv4的区别 网信办等三部推进IPv6规模部署

随机推荐

中非 钻石副石怎么镶嵌,才能既安全又好看?

Redis集群的重定向

[crawler] bugs encountered by wasm

MySQL 巨坑:update 更新慎用影响行数做判断!!!

【爬虫】wasm遇到的bug

iTOP-3568开发板NPU使用安装RKNN Toolkit Lite2

边缘计算如何与物联网结合在一起?

comsol--三维图形随便画----回转

Technology sharing | common interface protocol analysis

13. (map data) conversion between Baidu coordinate (bd09), national survey of China coordinate (Mars coordinate, gcj02), and WGS84 coordinate system

What about SSL certificate errors? Solutions to common SSL certificate errors in browsers

I used Kaitian platform to build an urban epidemic prevention policy inquiry system [Kaitian apaas battle]

[LeetCode] Wildcard Matching 外卡匹配

解决readObjectStart: expect { or n, but found N, error found in #1 byte of ...||..., bigger context ..

Mongodb replica set

IPv6与IPv4的区别 网信办等三部推进IPv6规模部署

How did the situation that NFT trading market mainly uses eth standard for trading come into being?

Implementation of array hash function in PHP

What does cross-border e-commerce mean? What do you mainly do? What are the business models?

汉诺塔问题思路的证明