当前位置:网站首页>Paper notes: limit multi label learning galaxc (temporarily stored, not finished)

Paper notes: limit multi label learning galaxc (temporarily stored, not finished)

2022-07-06 02:14:00 【Min fan】

Abstract : Share your understanding of the paper . See the original D. Saini, A. K. Jain, K. Dave, J. Jiao, A. Singh, R. Zhang and M. Varma, GalaXC: Graph neural networks with labelwise attention for extreme classification, in WWW 2021. 7 Among the authors 6 This is from Microsoft Research , Fight them , I feel like I have a funny head .

1. Contribution of thesis

- Deal with the situation that labels exist in documents : labels and documents cohabit the same space.

- Use tag text and tag relevance : label text and label correlations, label metadata.

- Tag level attention mechanism : label-wise attention mechanism.

- Hot start ( Some labels are known ) The effect is good : warm-start scenarios where predictions need to be made on data points with partially revealed label sets,

- Can handle millions of tags .

- Fast and good .

2. motivation

- Work has shown that , With the use of application independent features ( For example, traditional word bag features ) comparison , Learning intensive application specific document representation can lead to better predictions .These works have demonstrated that learning dense application-specific document representations can lead to better predictions than using application-agnostic features such as the traditional bag-of-words features.

- 5-10 Short text of tags . For example, use the title to predict relevant web pages or advertisements . Short textual descriptions with typically only 5-10 tokens. Examples include applications such as predicting related webpages or related products using only the title of a given webpage/product and predicting relevant ads/keywords/searches for

user queries. - Use a variety of metadata, such as tag text 、 Label relevance 、 Label hierarchy , Better serve the tail label . XC applications often make available label metadata in various forms such as label text, label correlations or label hierarchies.

- Label features . Contemporary XC algorithms have explored utilizing label features.

- Hot start and auxiliary data sources . Warm-start and auxiliary sources of data.

- Most of the existing work uses document diagrams instead of documents - Label map ( see Table 1). existing works mostly use document-document graphs and not joint document-label graphs at extreme scales.

2. Basic symbols

| Symbol | meaning | remarks |

|---|---|---|

| G \mathbb{G} G | Bipartite graph | G = ( D ∪ L , E ) \mathbb{G} = (\mathbb{D} \cup \mathbb{L}, \mathbb{E}) G=(D∪L,E) |

| D \mathbb{D} D | A collection of text nodes | The element is recorded as d d d, The base number is N N N |

| L \mathbb{L} L | Label node set | The element is recorded as l l l, The base number is L L L |

| y i \mathbf{y}_i yi | The first i i i A real label vector of text | The value range is { − 1 , + 1 } L \{-1, +1\}^L { −1,+1}L |

| x ^ i 0 \hat{\mathbf{x}}_i^0 x^i0 | The first i i i The eigenvector of a document | D D D dimension |

| z ^ l 0 \hat{\mathbf{z}}_l^0 z^l0 | The first l l l Eigenvectors of labels | D D D dimension |

| v ^ n 0 \hat{\mathbf{v}}_n^0 v^n0 | x ^ i 0 \hat{\mathbf{x}}_i^0 x^i0 And z ^ l 0 \hat{\mathbf{z}}_l^0 z^l0 The unified expression of | D D D dimension |

| N \mathcal{N} N | Ask neighbors to operate | V → 2 V \mathbb{V} \to 2^\mathbb{V} V→2V |

| C \mathcal{C} C | Convolution operation | |

| T \mathcal{T} T | Transformation operation | transformation |

| a ^ n k \hat{\mathbf{a}}_n^k a^nk | C k ( { v ^ m k − 1 , a ^ m k − 1 : m ∈ N ( n ) } ) \mathcal{C}_k(\{\hat{\mathbf{v}}_m^{k-1}, \hat{\mathbf{a}}_m^{k-1}: m \in \mathcal{N}(n)\}) Ck({ v^mk−1,a^mk−1:m∈N(n)}) | GNN operation |

| v ^ n k \hat{\mathbf{v}}_n^k v^nk | T k ( { v ^ n k − 1 , a ^ n k − 1 } ) \mathcal{T}_k(\{\hat{\mathbf{v}}_n^{k-1}, \hat{\mathbf{a}}_n^{k-1}\}) Tk({ v^nk−1,a^nk−1}) | GNN operation |

| W \mathbf{W} W | coefficient matrix | D × L D \times L D×L dimension |

| K K K | hop Count | |

| e l k e_{lk} elk | label l l l In the k k k individual hop scalar |

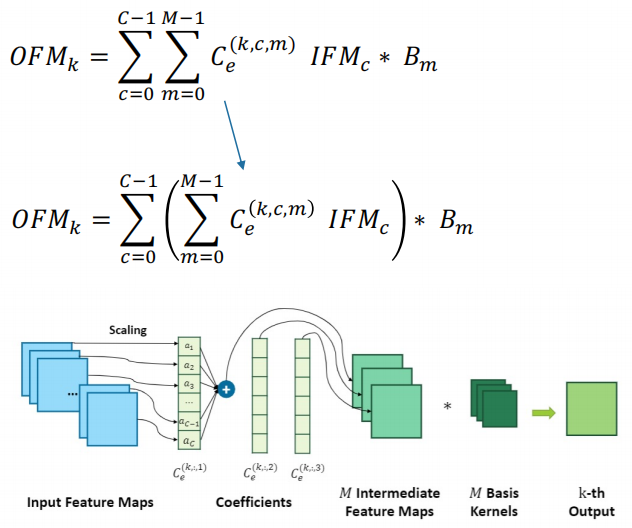

3. programme

Graph convolution block The specific operation is

a ^ n k = C k ( a ^ n k − 1 ) = ( 1 + ϵ k ) ⋅ a ^ n k − 1 + ∑ m ∈ N ( n ) a ^ m k − 1 \hat{\mathbf{a}}_n^k = \mathcal{C}_k(\hat{\mathbf{a}}_n^{k-1}) = (1 + \epsilon_k) \cdot \hat{\mathbf{a}}_n^{k-1} + \sum_{m \in \mathcal{N}(n)}\hat{\mathbf{a}}_m^{k-1} a^nk=Ck(a^nk−1)=(1+ϵk)⋅a^nk−1+m∈N(n)∑a^mk−1

Embedding The specific operation is

v ^ n k = T k ( a ^ n k ) \hat{\mathbf{v}}_n^k = \mathcal{T}_k(\hat{\mathbf{a}}_n^k) v^nk=Tk(a^nk)

Make

α l k = exp ( e l k ) / ∑ k ′ ∈ [ K ] exp e l k ′ \alpha_{lk} = \exp(e_{lk}) / \sum_{k' \in [K]} \exp e_{lk'} αlk=exp(elk)/k′∈[K]∑expelk′

It represents the first k k k individual hop Proportion of time .

The calculation formula of label embedding is

x ^ ( l ) = ∑ k ∈ [ k ] α l k ⋅ x ^ k \hat{\mathbf{x}}^{(l)} = \sum_{k \in [k]} \alpha_{lk} \cdot \hat{\mathbf{x}}^{k} x^(l)=k∈[k]∑αlk⋅x^k

Be careful : there k k k The power has not been understood .

The tag score is

s l = * w l , x ^ ( l ) * s_l = \langle \mathbf{w}_l, \hat{\mathbf{x}}^{(l)} \rangle sl=*wl,x^(l)*

4. Summary

Before reading the program , I can't understand this paper at all .

边栏推荐

- [community personas] exclusive interview with Ma Longwei: the wheel is not easy to use, so make it yourself!

- RDD creation method of spark

- 0211 embedded C language learning

- 更改对象属性的方法

- This time, thoroughly understand the deep copy

- Ali test open-ended questions

- I like Takeshi Kitano's words very much: although it's hard, I will still choose that kind of hot life

- Redis string type

- Overview of spark RDD

- Global and Chinese markets hitting traffic doors 2022-2028: Research Report on technology, participants, trends, market size and share

猜你喜欢

Social networking website for college students based on computer graduation design PHP

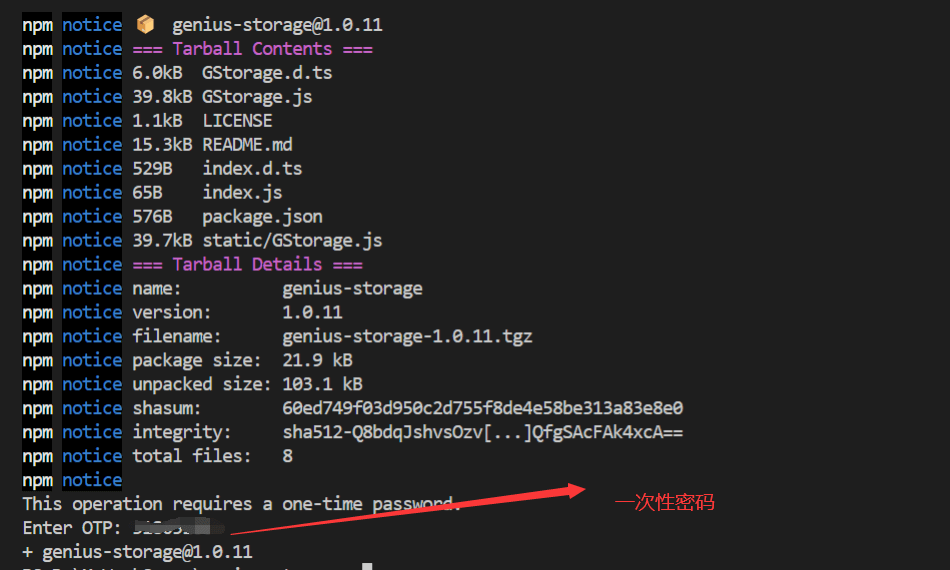

Publish your own toolkit notes using NPM

dried food! Accelerating sparse neural network through hardware and software co design

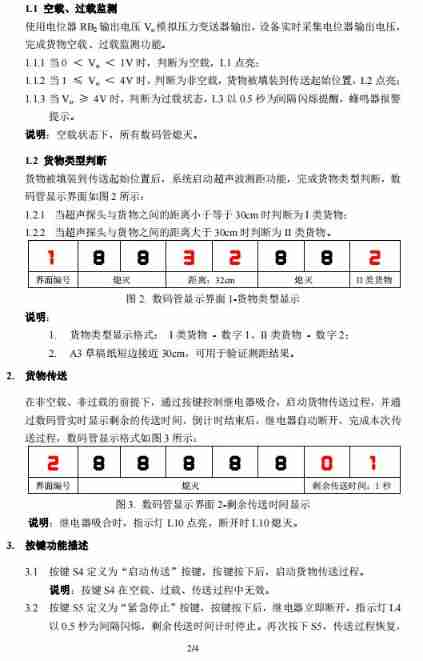

The intelligent material transmission system of the 6th National Games of the Blue Bridge Cup

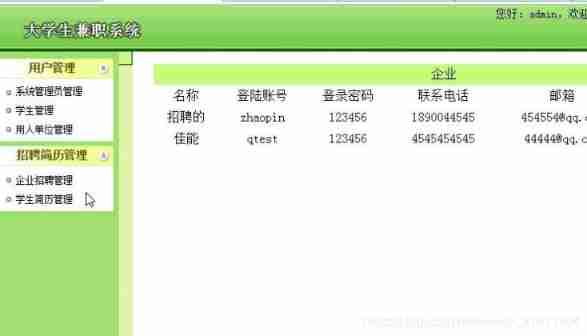

Computer graduation design PHP part-time recruitment management system for College Students

Card 4G industrial router charging pile intelligent cabinet private network video monitoring 4G to Ethernet to WiFi wired network speed test software and hardware customization

Redis-字符串类型

1. Introduction to basic functions of power query

Computer graduation design PHP campus restaurant online ordering system

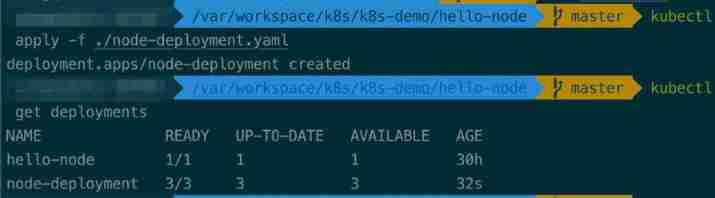

Kubernetes stateless application expansion and contraction capacity

随机推荐

Selenium waiting mode

Sword finger offer 38 Arrangement of strings

Kubernetes stateless application expansion and contraction capacity

MySQL learning notes - subquery exercise

How does redis implement multiple zones?

0211 embedded C language learning

Regular expressions: examples (1)

[solution] every time idea starts, it will build project

leetcode-2. Palindrome judgment

[ssrf-01] principle and utilization examples of server-side Request Forgery vulnerability

Using SA token to solve websocket handshake authentication

Shutter doctor: Xcode installation is incomplete

Computer graduation design PHP college classroom application management system

How to set an alias inside a bash shell script so that is it visible from the outside?

[solution] add multiple directories in different parts of the same word document

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

Install redis

Overview of spark RDD

01. Go language introduction

Concept of storage engine