当前位置:网站首页>Elk log analysis system

Elk log analysis system

2022-07-05 02:29:00 【No such person found 0330】

Catalog

2、ELK Introduction of components

filebeat combination logstash Benefits

4、 The basic characteristics of complete log system

3、 ... and 、ELK Log analysis system cluster deployment

Initialization environment ( All nodes )

ELK Elasticsearch Cluster deployment ( stay Node1、Node2 Operation on node )

install elasticsearch—rpm package

modify elasticsearch Master profile

es Performance tuning parameters

Optimize elasticsearch The memory permissions the user has

start-up elasticsearch Is it successfully opened

install Elasticsearch-head plug-in unit

Compilation and installation node

install Elasticsearch-head Data visualization tool

modify Elasticsearch Master profile

start-up elasticsearch-head service

ELK Kiabana Deploy ( stay Node1 Operation on node )

One 、ELK summary

1、ELK brief introduction

ELK The platform is a complete set of centralized log processing solutions , take ElasticSearch、Logstash and Kiabana Three open source tools are used together , Complete more powerful user query of logs 、 Sort 、 Statistical needs .

2、ELK Introduction of components

ElasticSearch

- Is based on Lucene( The architecture of a full-text search engine ) Developed distributed storage retrieval engine , Used to store all kinds of logs .

- Elasticsearch Yes, it is Java Developed , It can be done by RESTful Web Interface , So that users can communicate with Elasticsearch signal communication .

- Elasticsearch It's a real-time 、 Distributed and scalable search engine , Allow full text 、 Structured search , It is usually used to index and search large amounts of log data , It can also be used to search for many different types of documents .

Kiabana

- Kibana Usually with ElasticSearch Deploy together ,Kibana yes Elasticsearch A powerful data visualization Dashboard,Kibana Provide graphical web Interface to browse Elasticsearch Log data , Can be used to summarize 、 Analyze and search important data .

Logstash

As a data collection engine . It supports dynamic from various Data source collects data , And filter the data 、 analysis 、 Enrich 、 Unified format and other operations , Then store it in the location specified by the user . Usually sent to Elasticsearch.

Logstash from Ruby Language writing , Running on the Java virtual machine (JVM) On , It is a powerful data processing tool , Data transmission can be realized 、 Format processing 、 Format output .Logstash It has powerful plug-in function , Commonly used for log processing .

Other components that can be added

Filebeat

- Lightweight open source log file data collector . It is usually installed on the client that needs to collect data Filebeat, And specify the directory and log format ,Filebeat Can quickly collect data , And send it to logstash To analyze , Or send it directly to Elasticsearch Storage , Performance compared to JvM Upper logstash Obvious advantages , It's a replacement . Often applied to EFLK Architecture .

filebeat combination logstash Benefits

- adopt Logstash With disk based adaptive buffering system , The system will absorb the incoming throughput , To lessen Elasticsearch Pressure to keep writing data

- From other data sources ( Like databases ,s3 Object store or message delivery queue ) Extract from

- Sending data to multiple destinations , for example s3,HDFS(Hadoop distributed file system ) Or write to a file using conditional data flow logic to form a more complex processing pipeline

cache / Message queue (redis、kafka、RabbitMQ etc. ): Traffic peak shaving and buffering can be carried out for high concurrency log data , Such buffering can protect data from loss to a certain extent , You can also apply decoupling to the entire architecture .

Fluentd

- Is a popular open source data collector . because 1ogstash The disadvantage of being too heavy ,Logstash Low performance 、 More resource consumption and other problems , And then there's this Fluentd Appearance . Comparison 1ogstash,Fluentd Easier to use 、 Less resource consumption 、 Higher performance , More efficient and reliable in data processing , Welcomed by enterprises , Become logstash An alternative to , Often applied to EFK Architecture . stay Kubernetes It is also commonly used in clusters EFK As a scheme for log data collection .

- stay Kubernetes In the cluster, it is generally through DaemonSet To run the Fluentd, So that it's in every Kubernetes You can run one on a work node Pod.

- It gets the container log file 、 Filter and transform log data , And then pass the data to Elasticsearch colony , Index and store it in the cluster .

3、 Why use ELK

Logs mainly include system logs 、 Application logs and security logs . The system operation and maintenance personnel and developers can understand the software and hardware information of the server through the log 、 Check the errors in the configuration process and the causes of the errors . Regular log analysis can help you understand the load of the server , Performance security , So as to take measures to correct mistakes in time .

Often we use the log of a single machine grep、awk And other tools can basically achieve simple analysis , But when logs are distributed across different devices . If you manage hundreds of servers , You're still using the traditional method of logging in each machine in turn to look up the logs . Does this feel tedious and inefficient . We need to use centralized log management , for example : Open source syslog, Summarize the log collection on all servers . After centralized management of logs , Log statistics and retrieval has become a more cumbersome thing , Generally we use grep、awk and wc etc. Linux Command can realize retrieval and statistics , But for more demanding queries 、 Sorting, statistics and other requirements, as well as the huge number of machines, still use this method, which is inevitably a little ineffective. Generally, large-scale systems are a distributed deployment architecture , Different service modules are deployed on different servers , When problems arise , Most situations need to be based on the key information exposed by the problem , Go to specific servers and service modules , Building a centralized log system , It can improve the efficiency of location problem .

4、 The basic characteristics of complete log system

- collect : Be able to collect log data from multiple sources

- transmission : It can stably parse, filter and transmit log data to the storage system

- Storage : Store log data

- analysis : Support UI analysis

Warning : Able to provide error reports , Monitoring mechanism

Two 、ELK How it works

Deploy on all servers that need to collect logs Logstash; Or you can centralize the log management on the log server , Deploy on the log server Logstash.

Logstash Collect the logs , Format the log and output it to Elasticsearch In a crowd .

Elasticsearch Index and store the formatted data .

Kibana from ES Query data in the cluster to generate charts , And display the front-end data .

summary :logstash As a log collector , Collect data from a data source , And send it to the message queue for buffering and then filter the data , format processing , And then leave it to Elasticsearch Storage ,kibana Visualize the log

3、 ... and 、ELK Log analysis system cluster deployment

| Server type | Systems and IP Address | Components that need to be installed | Hardware aspect |

|---|---|---|---|

| node01 node | CentOS7.4(64 position ) 192.168.52.110 | Elasticsearch 、 Kibana | 2 nucleus 4G |

| node02 node | CentOS7.4(64 position ) 192.168.52.120 | Elasticsearch | 2 nucleus 4G |

| Apache node | CentOS7.4(64 position ) 192.168.52.130 | Logstash Apache | 2 nucleus 4G |

Initialization environment ( All nodes )

# Turn off firewall

systemctl stop firewalld.service

setenforce 0ELK Elasticsearch Cluster deployment ( stay Node1、Node2 Operation on node )

# Set up Java Environmental Science

java -version

# If not installed ,

yum -y install java

Deploy Elasticsearch Software

install elasticsearch—rpm package

Upload elasticsearch-5.5.0.rpm To /opt Under the table of contents

rpm -ivh elasticsearch-5.5.0.rpm

Modify hostname

# With node01 For example

hostnamectl set-hostname node01

su

vim /etc/hosts

192.168.52.110 node01

192.168.52.120 node02

modify elasticsearch Master profile

cp /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak

vim /etc/elasticsearch/elasticsearch.yml

--17-- uncomment , Specify the cluster name

cluster.name: my-elk-cluster

--23-- uncomment , Specify the node name :Node1 The node is node1,Node2 The node is node2

node.name: node1

node.master: true# whether master node ,false Why not

node.data: true# Whether the data node ,false Why not

--34-- uncomment , Specify the data storage path

path.data: /var/lib/elasticsearch

--38-- uncomment , Specify the log storage path

path.logs: /var/log/elasticsearch

--44-- uncomment , avoid es Use swap Swap partition

bootstrap.memory_lock: false

--56-- uncomment , Set listening address ,0.0.0.0 For all addresses

network.host: 0.0.0.0

--60-- uncomment ,ES The default listening port of the service is 9200

http.port: 9200 # Appoint es The cluster provides an interface for external access

transport.tcp.port: 9300 # Appoint es Internal communication interface of the cluster

--69-- uncomment , Cluster discovery is realized by unicast , Specify the nodes to discover

discovery.zen.ping.unicast.hosts: ["192.168.52.120:9300", "192.168.2.110:9300"]

grep -v "^#" /etc/elasticsearch/elasticsearch.ymles Performance tuning parameters

# Optimize the maximum memory size and the maximum number of file descriptors. Restart to take effect

vim /etc/security/limits.conf

......

* soft nofile 65536 The maximum number of file descriptors that can be opened ( Soft limit )

* hard nofile 131072 The maximum number of file descriptors that can be opened ( Hard limit )

* soft memlock unlimited The maximum number of processes available to a single user ( Soft limit )

* hard memlock unlimited The maximum number of processes available to a single user ( Hard limit )Optimize elasticsearch The memory permissions the user has

because ES Build on lucene, and lucene The power of design is lucene Can make good use of operating system memory to cache index data , To provide fast query performance .lucene The index file of segements It's stored in a single file , And immutable , about OS Come on , Be able to keep the index file in cache in , For quick access to ; therefore , It's necessary for us to leave half of our physical memory to lucene ; The other half of the physical memory is reserved for ES(JVM heap ). therefore , stay ES Memory settings , The following principles can be followed :

- When the machine memory is less than 64G when , Follow the general principles ,50% to ES,50% Leave it to the operating system , for lucene Use

- When the machine memory is greater than 64G when , Follow the principles : It is suggested to assign to ES Distribute 4~32G The memory of , Other memory is reserved for the operating system , for lucene Use

vim /etc/sysctl.conf

# The maximum number of memory mapped areas a process can have , Reference data ( Distribute 2g/262144,4g/4194304,8g/8388608)

vm.max_map_count=262144

sysctl -p Reload profile

sysctl -a | grep vm.max_map_count

start-up elasticsearch Is it successfully opened

systemctl start elasticsearch.service

systemctl enable elasticsearch.service

netstat -antp | grep 9200View node information

Browser access 2http://192.168.52.110:9200 、 2http://192.168.52.120:9200 Look at the node Node01、Node02 Information about .

Browser access 2http://192.168.52.110:9200/_cluster/health?pretty 、 http://192.168.52.120:9200/_cluster/health?pretty View the health of the cluster , You can see status The value is green( green ), Indicates that the node is running healthily .

Browser access http://192.168.52.110:9200/_cluster/state?pretty Check the cluster status information .

Viewing the status of the cluster in the above way is not user-friendly , You can install Elasticsearch-head plug-in unit , You can more easily manage clusters .

install Elasticsearch-head plug-in unit

Elasticsearch stay 5.0 After version ,Elasticsearch-head The plug-in needs to be installed as a stand-alone service , Need to use npm Tools (NodeJS Package management tools ) install .

install Elasticsearch-head The dependent software needs to be installed in advance node and phantomjs.

node: It's based on Chrome V8 Engine JavaScript Running environment .

phantomjs: It's based on webkit Of JavaScriptAPI, It can be understood as an invisible browser , Anything based on webkit What browsers do , It can do .

Compilation and installation node

# Upload package node-v8.2.1.tar.gz To /opt

yum install gcc gcc-c++ make -y

cd /opt

tar zxvf node-v8.2.1.tar.gz

cd node-v8.2.1/

./configure

make && make installinstall phantomjs

# Upload package phantomjs-2.1.1-linux-x86_64.tar.bz2 To opt

cd /opt

tar xvf phantomjs-2.1.1-linux-x86_64.tar.bz2

cd /opt/phantomjs-2.1.1-linux-x86_64/bin

ln -s phantomjs /usr/local/bin

install Elasticsearch-head Data visualization tool

# Upload package elasticsearch-head.tar.gz To /opt

cd /opt

tar zxvf elasticsearch-head.tar.gz -C /usr/local/src/

cd /usr/local/src/elasticsearch-head/

npm install

modify Elasticsearch Master profile

vim /etc/elasticsearch/elasticsearch.yml

......

-- Add the following at the end --

http.cors.enabled: true

# Enable cross domain access support , The default is false

http.cors.allow-origin: "*"

# Specify the domain names and addresses allowed for cross domain access for all

systemctl restart elasticsearch

start-up elasticsearch-head service

Must be after decompression elasticsearch-head Start the service in the directory , The process will read the gruntfile.js file , Otherwise, it may fail to start .

cd /usr/local/src/elasticsearch-head/

npm run start &

> [email protected] start /usr/local/src/elasticsearch-head

> grunt server

Running "connect:server" (connect) task

Waiting forever...

Started connect web server on http://localhost:9100

#elasticsearch-head The listening port is 9100

netstat -natp |grep 9100

adopt Elasticsearch-head see Elasticsearch Information

Access... Through a browser http://192.168.52.110:9100/ Address and connect to the cluster . If you see a cluster health value of green green , It means the cluster is healthy .

Insert Index

# Insert a test index by command , The index for index-demo, The type is test.

curl -X PUT 'localhost:9200/index-demo/test/1?pretty&pretty' -H 'content-Type: application/json' -d '{"user":"zhangsan","mesg":"hello world"}'

// The output is as follows :

{

"_index": "index-demo",

"_type" : "test",

"_id": "1",

"_version": 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 2,

"failed" : 0

},

"created" : true

}

Browser access http://192.168.52.110:9100/ View index information , You can see that the index is fragmented by default 5 individual , And there's a copy .

Click on “ Data browsing ”, Will find node1 The index created on is index-demo, The type is test Information about .

ELK Logstash Deploy ( stay Apache Operation on node )

Logstash It is generally deployed on the server that needs to monitor its logs . In this case ,Logstash Deployed in Apache Server , Used to collect Apache Log information of the server and send it to Elasticsearch.

Change host name

hostnamectl set-hostname apache

su

install Apahce service (httpd)

yum -y install httpd

systemctl start httpd

install Java Environmental Science

yum -y install java

java -version

install logstash

# Upload package logstash-5.5.1.rpm To /opt Under the table of contents

cd /opt

rpm -ivh logstash-5.5.1.rpm

systemctl start logstash.service

systemctl enable logstash.service

ln -s /usr/share/logstash/bin/logstash /usr/local/bin/

test Logstash

Logstash Command common options :

| -f | With this option you can specify Logstash Configuration file for , Configure according to the configuration file Logstash Input and output streams of . |

|---|---|

| -t | Test the configuration file for correctness , And then quit . |

| -e | Get... From the command line , Input 、 The output is followed by a string , This string can be treated as Logstash Configuration of ( If it's empty , It is used by default stdin As input ,stdout As the output ). |

Define input and output streams :

# The input is standard input , The output is standard output ( Similar pipe )

logstash -e 'input { stdin{} } output { stdout{} }'

......

www.baidu.com # Type in ( The standard input )

# Output results ( standard output )

www.sina.com.cn # Type in ( The standard input )

# Output results ( standard output )

// perform ctrl+c sign out

Definition logstash The configuration file

Logstash The configuration file basically consists of three parts :input、output as well as filter( Optional , Choose to use as needed ).

| input | Indicates collecting data from a data source , Common data sources such as Kafka、 Log files, etc |

|---|---|

| filter | Represents the data processing layer , Including formatting data 、 Data type conversion 、 Data filtering, etc , regular expression |

| output | It means that you will Logstash The collected data is processed by the filter and output to Elasticsearch. |

# The format is as follows :

input {...}

filter {...}

output {...}

# In each part , You can also specify multiple access methods . for example , To specify two log source files , The format is as follows :

input {

file {

path =>"/var/log/messages"

type"syslog"

file { path =>"/var/log/httpd/access.log"

type =>"apache"

}

}

modify Logstash The configuration file , Let it collect system logs /var/log/messages, And output it to elasticsearch in .

chmod +r /var/log/messages # Give Way Logstash You can read logs

vim /etc/logstash/conf.d/system.conf

input {

file{

path =>"/var/log/messages" # Specify the location of the logs to collect </span>

type =>"system" # Custom log type identification

start_position =>"beginning" # Means to collect... From the beginning

}

}

output {

elasticsearch { # Output to elasticsearch

hosts => ["192.168.52.110:9200","192.168.52.120:9200"] # Appoint elasticsearch The address and port of the server

index =>"system-%{+YYYY.MM.dd}" # Specify output to elasticsearch Index format

}

}

systemctl restart logstash

Browser access http://192.168.52.110:9100/ View index information

ELK Kiabana Deploy ( stay Node1 Operation on node )

install Kiabana

# Upload package kibana-5.5.1-x86_64.rpm To /opt Catalog

cd /opt

rpm -ivh kibana-5.5.1-x86_64.rpm

Set up Kibana Primary profile for

vim /etc/kibana/kibana.yml

--2-- uncomment ,Kiabana The default listening port of the service is 5601

server.port: 5601

--7-- uncomment , Set up Kiabana The monitoring address of ,0.0.0.0 For all addresses

server.host: "0.0.0.0"

--21-- uncomment , To configure es Server's ip, If it is a cluster, configure it master Node ip

elasticsearch.url: "http://192.168.52.110:9200","http://192.168.52.120:9200"

--30-- uncomment , Set in the elasticsearch Add .kibana Indexes

kibana.index: ".kibana"

--86-- uncomment , To configure kibana Log file path for ( You need to create... Manually ), Otherwise, the default is messages Keep a log in the library

logging.dest: /var/log/kibana.log

Create a log file , start-up Kibana service

touch /var/log/kibana.log

chown kibana:kibana /var/log/kibana.log

systemctl start kibana.service

systemctl enable kibana.service

netstat -natp | grep 5601

verification Kibana

Browser access http://192.168.52.110:5601

The first time you log in, you need to add a Elasticsearch Indexes :

Index name or pattern

// Input :system-* # Enter the previously configured... In the index name Output Prefix “system”

single click “create” Button to create , single click “Discover” Button to view chart information and log information .

Data display can be classified , stay “Available Fields” Medium “host”, And then click “add” Button , You can see the following “host” The result of screening

take Apache Log of the server ( Access to the 、 FALSE ) Add to Elasticsearch And pass Kibana Show

vim /etc/logstash/conf.d/apache_log.conf

input {

file{

path => "/etc/httpd/logs/access_log"> # Specify the exact directory location

type=> "access"

start_position => "beginning"

}

file{

path => "/etc/httpd/logs/error_log" # Specify the error log directory

type => "error"

start_position => "beginning"

}

output {

if[ type ] == "access" {

elasticsearch {

hosts => ["192.168.52.110:9200","192.168.52.120:9200"]

index => "apache_access-%{+YYYY.MM.dd}"

}

}

if [ type] == "error"{

elasticsearch {

hosts => ["192.168.52.110:9200","192.168.52.120:9200"]

index => "apache_access-%{+YYYY.MM.dd}

}

}

}

cd /etc/logstash/conf.d/

/usr/share/logstash/bin/logstash -f apache_log.confBrowser access http://192.168.52.110:9100 Check whether the index is created

Browser access http://192.168.52.110:5601 Sign in Kibana

single click “Index ->Create Index Pattern” Button to add an index , Enter the previously configured... In the index name Output

Prefix apache_access-*, And click “Create” Button .

Add... In the same way apache_error-* Indexes .

choice “Discover” tab , Select the newly added... From the middle drop-down list apache_access-* 、apache_error-* Indexes , You can view the corresponding charts and log information .

边栏推荐

- LeetCode 314. Binary tree vertical order traversal - Binary Tree Series Question 6

- 官宣!第三届云原生编程挑战赛正式启动!

- Using druid to connect to MySQL database reports the wrong type

- Android advanced interview question record in 2022

- RichView TRVUnits 图像显示单位

- 【LeetCode】222. The number of nodes of a complete binary tree (2 mistakes)

- [技术发展-26]:新型信息与通信网络的数据安全

- Codeforces Round #770 (Div. 2) ABC

- Introduce reflow & repaint, and how to optimize it?

- RichView TRVStyle MainRVStyle

猜你喜欢

Security level

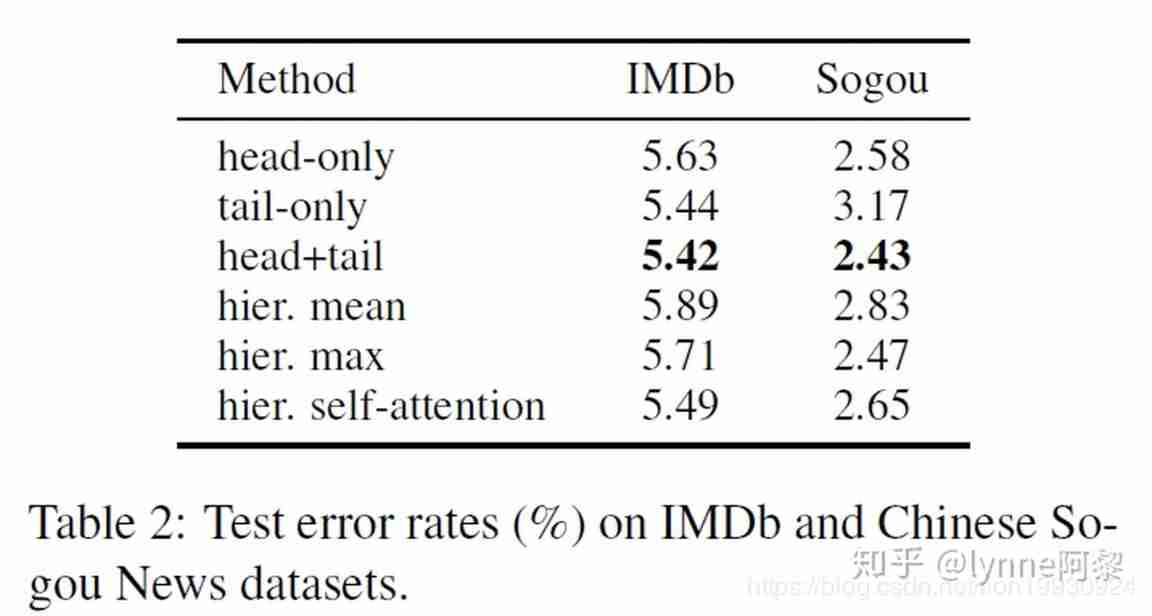

Bert fine tuning skills experiment

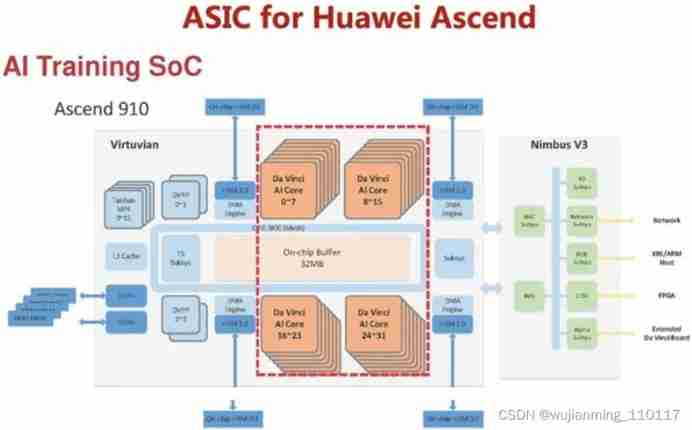

Traditional chips and AI chips

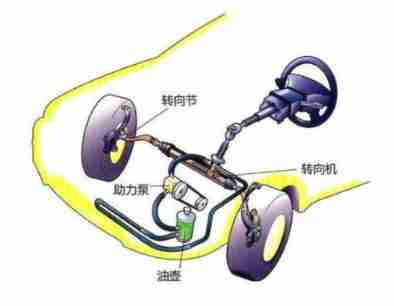

The steering wheel can be turned for one and a half turns. Is there any difference between it and two turns

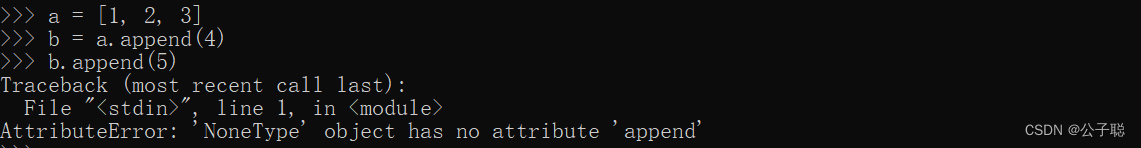

Problem solving: attributeerror: 'nonetype' object has no attribute 'append‘

How to build a technical team that will bring down the company?

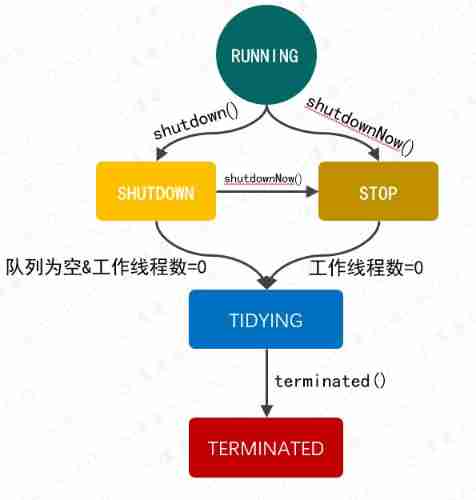

Write a thread pool by hand, and take you to learn the implementation principle of ThreadPoolExecutor thread pool

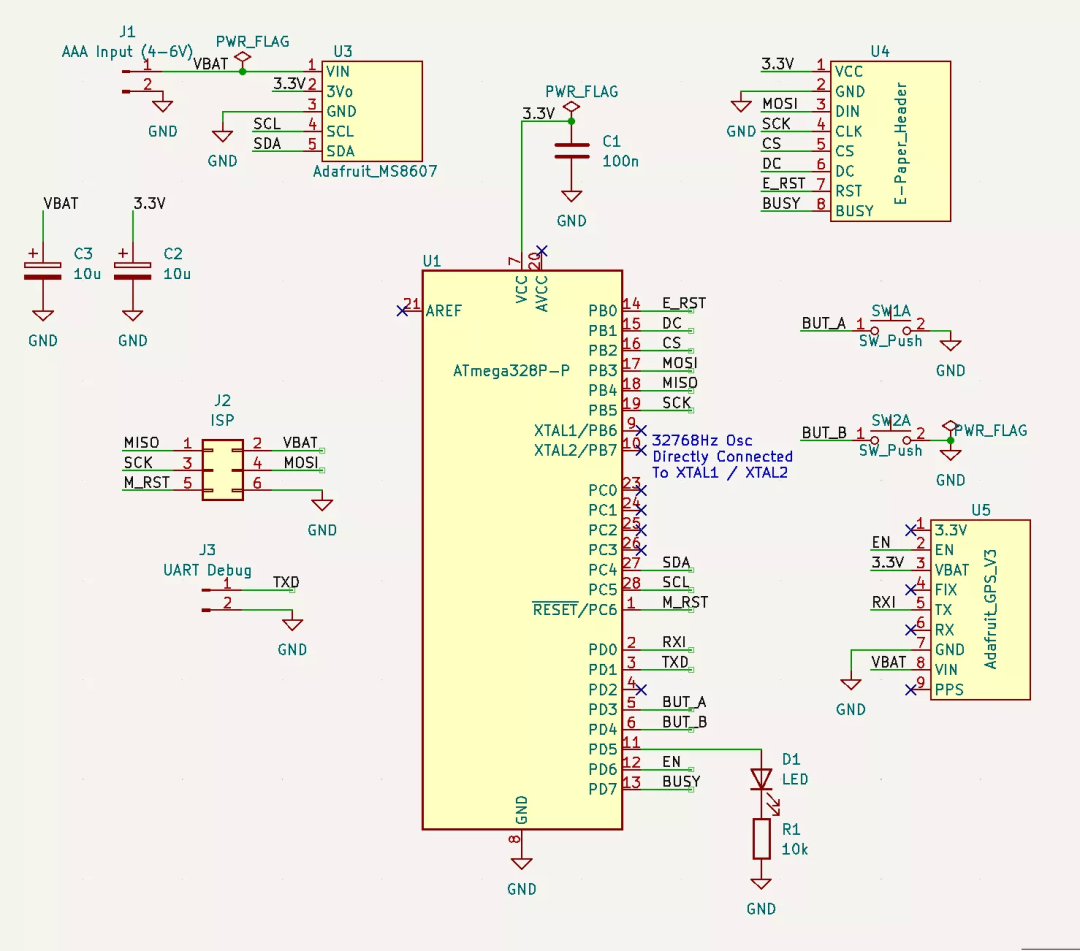

如何做一个炫酷的墨水屏电子钟?

Tucson will lose more than $400million in the next year

Visual explanation of Newton iteration method

随机推荐

Application and Optimization Practice of redis in vivo push platform

I use these six code comparison tools

Abacus mental arithmetic test

R language uses logistic regression and afrima, ARIMA time series models to predict world population

[uc/os-iii] chapter 1.2.3.4 understanding RTOS

When the low alcohol race track enters the reshuffle period, how can the new brand break the three major problems?

Character painting, I use characters to draw a Bing Dwen Dwen

Timescaledb 2.5.2 release, time series database based on PostgreSQL

Grub 2.12 will be released this year to continue to improve boot security

RichView TRVStyle MainRVStyle

How to build a technical team that will bring down the company?

【LeetCode】110. Balanced binary tree (2 brushes of wrong questions)

openresty ngx_lua執行階段

Last week's hot review (2.7-2.13)

【LeetCode】404. Sum of left leaves (2 brushes of wrong questions)

spoon插入更新oracle数据库,插了一部分提示报错Assertion botch: negative time

STL container

[source code attached] Intelligent Recommendation System Based on knowledge map -sylvie rabbit

Runc hang causes the kubernetes node notready

Traditional chips and AI chips