当前位置:网站首页>Exploration of short text analysis in the field of medical and health (II)

Exploration of short text analysis in the field of medical and health (II)

2022-07-05 02:02:00 【Necther】

Preface

Let's briefly review the previous content , Last one 《 Exploration of short text parsing in the field of medical and health ( One )》 We briefly introduce the commonly used concept/phrase Automatic extraction and evaluation methods , Then we introduced two methods of structured electronic medical record , First build MeSH Semantic tree , Split the symptom atomic words ; Second use multi-view attention based denoising auto encoder(MADAE) Calculate the similarity of the model , Related to the subject words that already exist in the Library . In this article, we continue to optimize the extraction results , On the one hand, let's talk about the pit left in the previous section , What is an existing theme word , How do we determine the subject words ?

appetizer

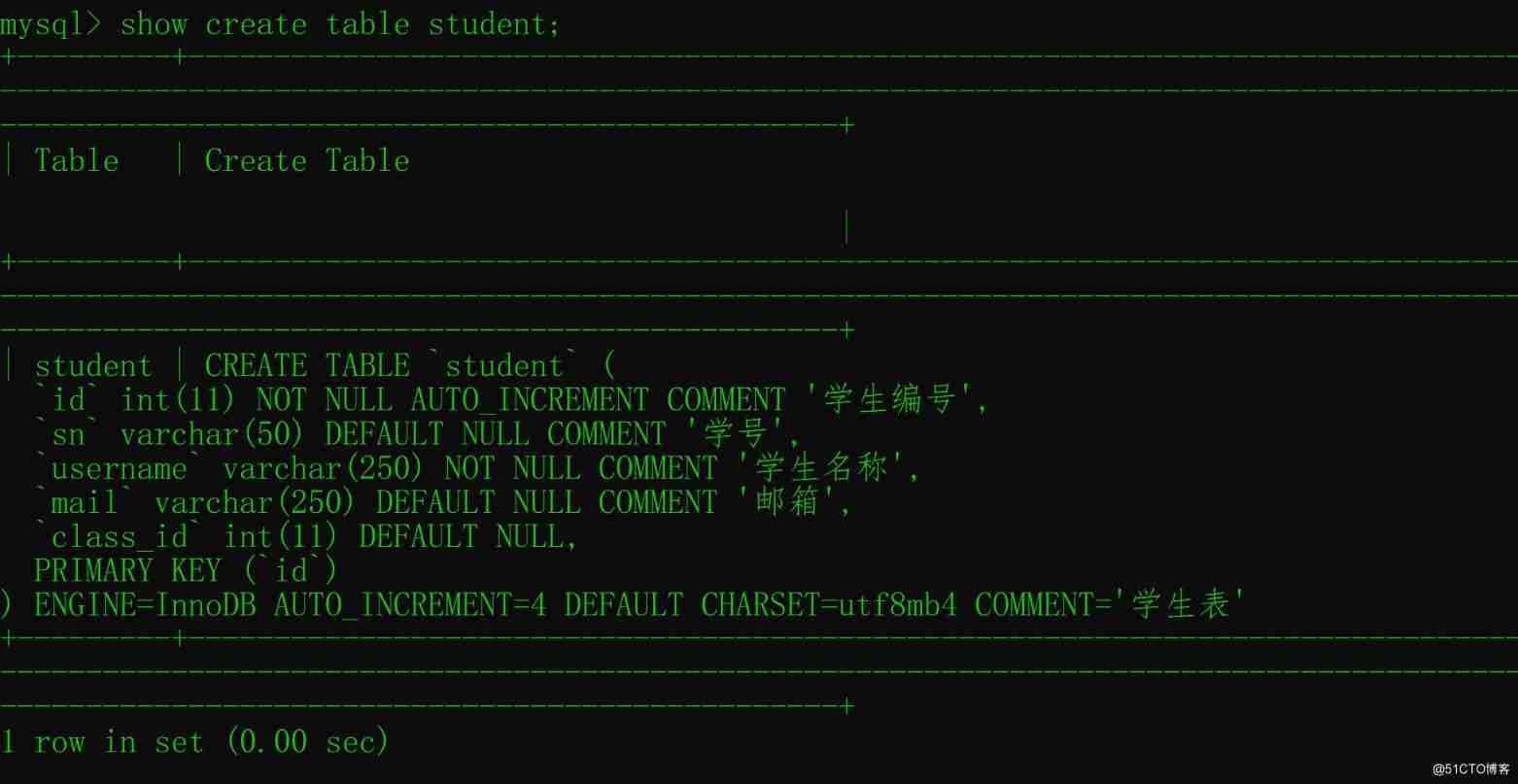

10 The 5th China health information processing Conference (CHIP2019) Released an evaluation task [1], Clinical terminology standardization task , It is similar to the standardization task we mentioned last , Give a surgery original word , It is required to give its corresponding surgical standard words . As shown in the figure below :

This is very consistent with what we are doing now , So take this data to practice . For information retrieval related tasks , General ideas are divided into Recall and Fine discharge ( Or rearrange Rerank) Two phases , Here we only use the past method to verify the recall stage . First, analyze the data , There are three relationships in the data , one-on-one , One to many , For one more , Many to many Mainly solve the one-to-one problem first , A brief review MADAE[2] Model .

First use simple Noise Function Data enhancement , On the third floor Multi-view Encoders, They are sound , word , Words in three ways embedding Layer for input . Because of the serious lack of context , There is no way to characterize contextual information , So just use Denoising Text Embedding After generating the vector Top-1 The only result is 73%. So we made two improvements , First of all, will Noise Function Replace with EDA[3] Build data set , And will Multi-view Encoder Layer to join bert Of , I found that the effect is much better , The results are shown in the table below :

Later, it was found that there were many one-to-many and many to one problems in the test set , My one to many approach is to origin Words with '+' No. is divided , As a result, the final accuracy rate drops a lot .ps: Just this Unilateral thyroidectomy with partial lobectomy On the test set 80 individual , Be sure to look at the data set carefully .

Let's briefly talk about the ideas of subsequent optimization , First, let's take a look at the result of calculating the similarity ,key It's the original word ,target Is the result of the validation set ,topK yes BERT+DAE The ranking result given by similarity calculation , We can use Top-3 The results construct positive and negative samples , In the picture '*' As a positive sample , In the picture 'x' As a negative sample , Then build a classifier model , It can be used origin and ^target( Predicted results ) The results of some similarity measures are used as features , For example jaccard,Edit Distance,word2vec wait . After that, the classification results are right topK Conduct rerank, Please develop all interesting gameplay by yourself .

I followed up with the top three ppt, The way to rank first is to use LCS The longest common subsequence is roughened , Finally, fine discharge can be used directly bert Building classifiers . Fine sorting process plays a very important role in the final effect of information retrieval , After fine discharge , The first few teams are interested in the data set top1 The accuracy can be improved to 90%+. If you're interested, you can [1] Email contact data .

Interesting main course

Named entity recognition is one of the most important technologies for structured text , It can effectively help us analyze users' intentions and optimize recommendation results , But there are two problems in practical application :

- How to obtain high-quality annotations in vertical or open fields .

- With the continuous accumulation of new data, it will continue to produce , How to use existing data , Mining new words and accurately corresponding to the corresponding category .

While ensuring quality, we are constantly acquiring new vocabulary , The easiest way is to find experts in this vertical field , Continuously annotate some unstructured text . But the cost of this method is relatively high , And the cycle is longer . Use remote supervision , According to a high-quality dictionary, the unstructured text is " Pseudo label ", But in the vertical field, due to the limited generalization ability , We can't guarantee the effectiveness of named entity recognition , for instance , Apples can be food , It can also be a company , When we label the text with remote supervision , Unable to distinguish effectively .

Biomedical field to solve the problem of low generalization , A hybrid classifier model based on rules and statistics is proposed [9]. Use prefixes to match , Spelling rules ,N-gram, Part of speech tagging and some context sensitive grammars are used as features , Use Bayesian Networks , Decision tree and naive Bayes classifier model are fused . The accuracy is improved on this data set 1.6 A little bit , The results are as follows :

Recently, I read some papers on named entity recognition using remote supervision , One of them 《Distantly Supervised Named Entity Recognition using Positive-Unlabeled Learning》[10-11], The author mainly uses unlabeled data and domain dictionaries NER Method , The author expressed the task as positive unmarked (PU, Positive-Unlabeled) Learning problems , Because a dictionary cannot contain all the entity words in a sentence , So the traditional BIO,BIESO Annotation mode , Instead, it turns into a binary classification problem , Map entity words as positive samples , Non entity words as negative samples . stay AdaSampling[12] While constantly expanding dictionary data, it is also constantly optimizing PU classifier .

Although this semi supervised method has some gap with supervised learning , But it also achieved relatively good results . What impressed me was this 《HAMNER: Headword Amplified Multi-span Distantly Supervised Method for Domain Specific Named Entity Recognition》 This article applies the text structuring method and noun phrase mining technology mentioned in the previous article , To provide for named entity recognition pseudo annotation.

The author puts forward a HAMNER:Headword Amplified Multi-span Named Entity Recognition Mark in the way of remote supervision . This article aims to reduce the gap between traditional supervised learning methods and remote supervision , The paper shows that , The remote monitoring method has two limitations , The first one is oov problem , New noun phrases discovered , There is no exact corresponding category , Let's say ,ROSAH syndrome, If this word doesn't exist in the dictionary , We can do it according to its headword syndrome To judge that it is actually a disease type . Another limitation is that the remote monitoring method is likely to bring many uncertain boundary problems , for instance , I want to buy an apple mobile phone , Apple is Food It can also be Company, Apple phones are Mobile Phone, So first make sure mention Then judge the type mention type, And give more accurate entity boundary according to its confidence .

See from the flow chart , There are two sources of dictionary data used in our remote supervision , High quality dictionaries with categories and extracted phrase( In this article, span), Generate training data Pseudo label data . that problem And then there is , How to get out of the dictionary Noun phrase, How to solve synonyms and spelling mistakes , Two methods are mentioned , One of them uses Auto Phrase, That is, the method of noun phrase mining we talked about in the previous article [4-7]. Extracted phrase When there is no category , And use headword and word embedding The way to calculate the similarity determines the extraction Noun Phrase .

Enter Dictionary D, High quality phrase,headword The threshold sum of frequency headword Similarity threshold , according to phrase Record the category with the maximum matching similarity ,phrase There are likely to be multiple category labels ,T by phrase Set of category labels . When constructing training data of pseudo tags , According to the positive maximum matching principle , Nesting or overlapping is not allowed . However, multiple categories are allowed for the same entity .

Not only can each entity have multiple category labels , When building data, we should also give a weight to each category of word entities w, This weight is based on Algorithm 1 Calculate the maximum similarity value in . Like the example shown in the figure , Will include granuloa cell tumors(phrase) As input layer ,ELMo, Character Representation and Word Embedding As embedding layer , Hidden layer is Bidirectional LSTM, Take the final result as Word Representation And according to phrase Of span Divide the sentence into three parts , Each part calculates a hidden representation , Finally, after splicing the three results, the final EntityType As this phrase Categories . And we can get phrase Weight under this category w.

The last step , According to the neural network model phrase And all types of probability estimates , Divide sentences , because phrase The positions between them do not overlap each other and there is no nesting , And in the sentence should be to retain a fixed category of information , Therefore, there are many phrase when , According to its minimum joint probability , As the best solution , Dynamic programming is used to optimize .

Dessert that is not sweet at all

In fact, the whole method is not difficult to understand , But the content is relatively rich , First of all, we need to have a custom dictionary of a certain scale in the vertical field , according to auto phrase And other noun phrase mining methods , Excavate a batch of high-quality noun phrases , And simply make some notes , Build the classifier model according to the context information , Determine its conditional probability value under each category , The sentence with the greatest probability phrase Categories , Then calculate the minimum joint probability result , Use dynamic programming , Find the best solution , As the final pseudo label training data . The advantage of this is , Reduce the cost of manual labeling , And in some fields, the effect is close to that of expert annotation .

This article is also recently seen , Some of these methods are also used , And is trying to make some improvements , The original text is drawn phrase Given the category , Use headword Calculate similarity with word vector . But there will be oov problem , Probably not phrase The corresponding headword when , Consider using some rules , Or according to [5] The method of standardizing symptom data mentioned in , Mark a batch of data , Build semantic trees and other ways to help us give phrase Final category .

summary

stay 2019CCKS See many marking systems made by medical research laboratories , But there is a problem , That is how to determine the source of high-quality labels , If you look for experts in the medical field to mark , Time costs are high , And the labeling process is quite boring , It will lead to missing information or wrong annotation .

The way of remote supervision can help us reduce the cost of a certain amount of manual annotation ,HAMNER The method proved , The effect of pseudo tagging using domain dictionary and mined noun phrases is better than using domain dictionary alone , And its F1 and Pre It is not much different from the manual annotation results in some field datasets .

We will try to use HAMNER, Semantic tree , Knowledge map entity alignment and some simple manual annotation methods to solve the problem of constructing training data . Use HAMNER Construct pseudo annotation data for unstructured text , Then manually review the pseudo labeled data phrase The boundary and category of category words in the sentence are correct .

quote

[1] http://www.cips-chip.org.cn/evaluation

[2] [IEEE 2018] Chinese Medical Concept Normalization by Using Text and Comorbidity Network Embedding

[3] [EMNLP 2019]EDA: Easy Data Augmentation Techniques for Boosting Performance on Text Classification Tasks

[4] HAMNER: Headword Amplified Multi-span Distantly Supervised Method for Domain Specific Named Entity Recognition

[5] Talk about... In the field of health care Phrase Mining - You know

[6] Exploration of short text parsing in the field of medical and health ( One ) - You know

[7] [IEEE 2019]An efficient method for high quality and cohesive topical phrase mining

[8] [IEEE 2018]Automated phrase mining from massive text corpora.

[9] [IJACSA 2017]Bio-NER: Biomedical Named Entity Recognition using Rule-Based and Statistical Learners

[10] [ACL 2019] Distantly Supervised Named Entity Recognition using Positive-Unlabeled Learning

[11] https://blog.csdn.net/a609640147/article/details/91048682

[12] [IJCAI 2017]Positive unlabeled learning via wrapper-based adaptive sampling

边栏推荐

- A tab Sina navigation bar

- Timescaledb 2.5.2 release, time series database based on PostgreSQL

- Is there a sudden failure on the line? How to make emergency diagnosis, troubleshooting and recovery

- es使用collapseBuilder去重和只返回某个字段

- Talk about the things that must be paid attention to when interviewing programmers

- 179. Maximum number - sort

- Kibana installation and configuration

- "2022" is a must know web security interview question for job hopping

- R语言用logistic逻辑回归和AFRIMA、ARIMA时间序列模型预测世界人口

- What sparks can applet container technology collide with IOT

猜你喜欢

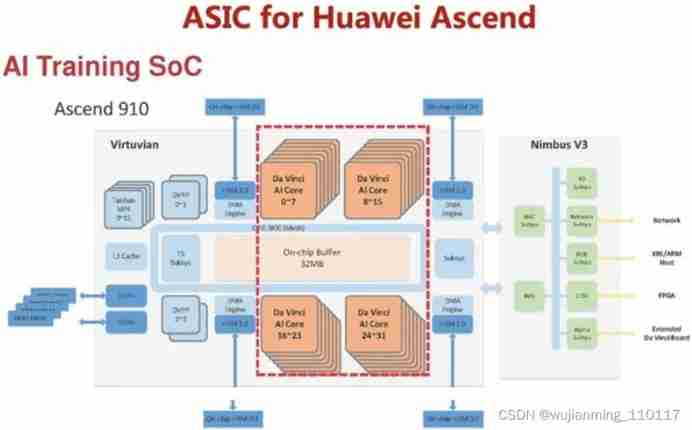

Traditional chips and AI chips

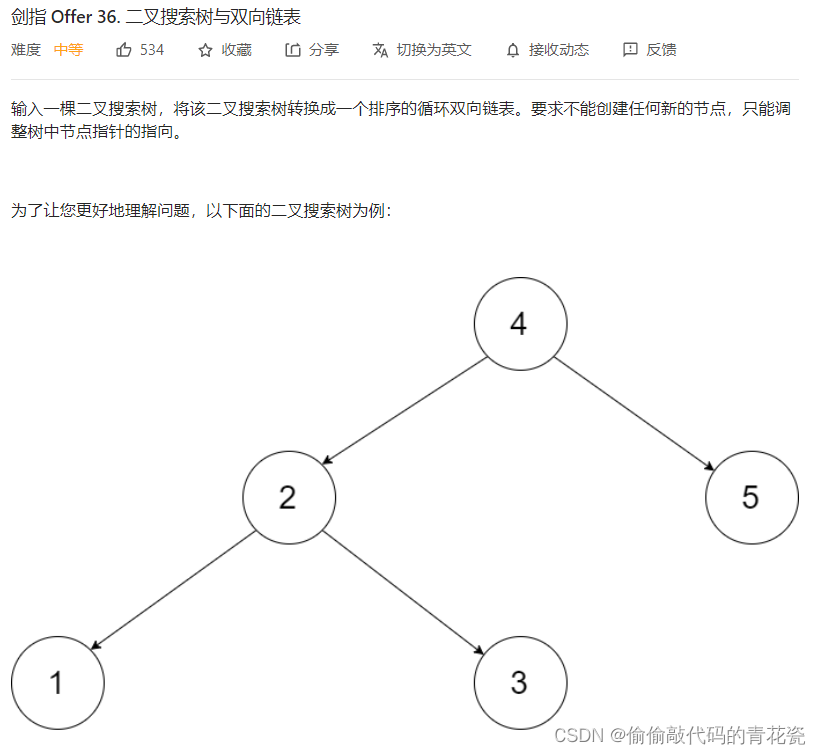

力扣剑指offer——二叉树篇

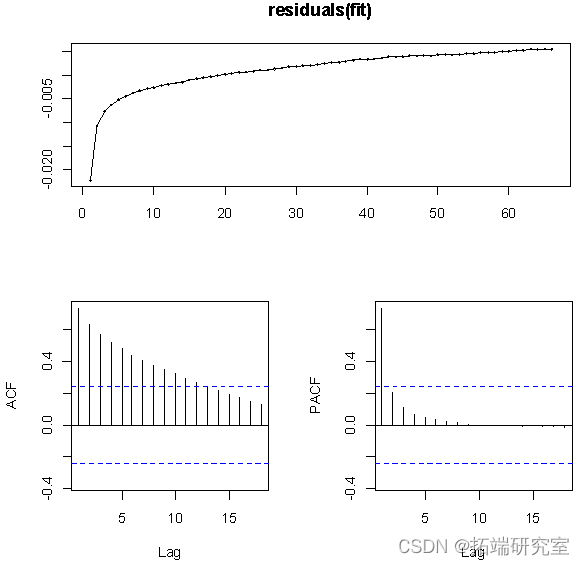

R语言用logistic逻辑回归和AFRIMA、ARIMA时间序列模型预测世界人口

Security level

![[source code attached] Intelligent Recommendation System Based on knowledge map -sylvie rabbit](/img/3e/ab14f3a0ddf31c7176629d891e44b4.png)

[source code attached] Intelligent Recommendation System Based on knowledge map -sylvie rabbit

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

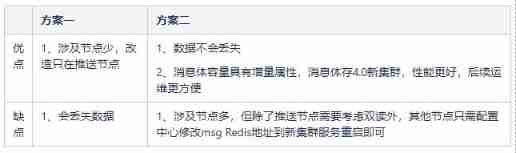

The application and Optimization Practice of redis in vivo push platform is transferred to the end of metadata by

Five ways to query MySQL field comments!

Kibana installation and configuration

Video display and hiding of imitation tudou.com

随机推荐

batchnorm.py这个文件单GPU运行报错解决

PowerShell: use PowerShell behind the proxy server

Action News

Wechat applet: wechat applet source code download new community system optimized version support agent member system function super high income

[OpenGL learning notes 8] texture

R language uses logistic regression and afrima, ARIMA time series models to predict world population

. Net starts again happy 20th birthday

Using druid to connect to MySQL database reports the wrong type

Interesting practice of robot programming 16 synchronous positioning and map building (SLAM)

Common bit operation skills of C speech

如何搭建一支搞垮公司的技术团队?

Win: enable and disable USB drives using group policy

[机缘参悟-38]:鬼谷子-第五飞箝篇 - 警示之一:有一种杀称为“捧杀”

Interesting practice of robot programming 15- autoavoidobstacles

Richview trvunits image display units

Grpc message sending of vertx

How to build a technical team that will bring down the company?

Stored procedure and stored function in Oracle

A tab Sina navigation bar

Interpretation of mask RCNN paper