
DropBlock: Regularization method and reproduction code for convolutional layers

2022-08-04 07:18:00 【hot-blooded chef】

Paper: https://arxiv.org/pdf/1810.12890.pdf

1. What is Dropout?

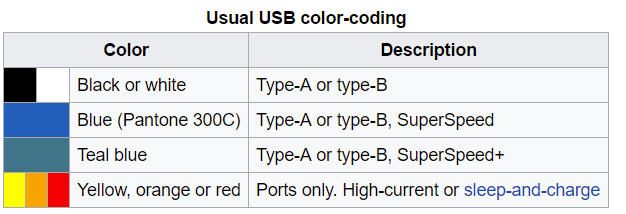

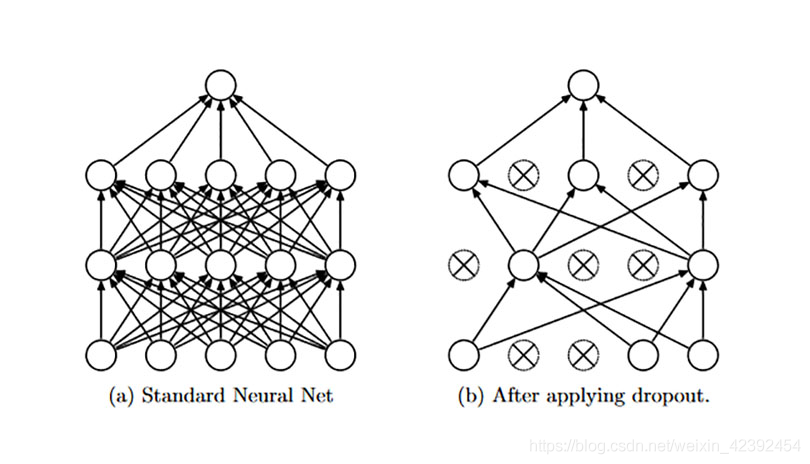

You are probably already familiar with Dropout. Dropout is a method for improving the generalization of neural networks, and it effectively reduces overfitting. Why does it work? Consider the figures above:

A standard neural network is shown in figure (a). The training data are limited (suppose they are face images), so the network can become heavily dependent on a few individual neurons while the others contribute almost nothing. For example, it may decide whether an image contains a face by looking only at the eyes. If we then feed it new test images, it will probably give the wrong answer whenever the eyes are occluded. This is a common form of overfitting.

Suppose instead that, as in figure (b), we randomly deactivate some of the neurons. The network is then forced to make more neurons useful. It learns to recognize not only the eyes but also the mouth, the nose, and other features. As a result, accuracy does not drop much on the test set, even when the test data differ from the training data.
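To make the idea concrete, here is a minimal sketch of the common "inverted" dropout variant in plain Python. It assumes a flat list of activations. The function name `dropout` and its parameters are illustrative and are not a framework API. Each unit is zeroed independently with probability `drop_prob`. The surviving units are scaled by `1 / keep_prob`, so the expected activation stays the same.

```python
import random


def dropout(activations, drop_prob, training=True, rng=None):
    """Inverted dropout on a flat list of activations (illustrative sketch)."""
    if not training or drop_prob == 0.0:
        return list(activations)  # inference: identity, no rescaling needed
    rng = rng or random.Random()
    keep_prob = 1.0 - drop_prob
    out = []
    for a in activations:
        # Keep each unit independently with probability keep_prob
        if rng.random() < keep_prob:
            out.append(a / keep_prob)  # rescale so the expected value is unchanged
        else:
            out.append(0.0)
    return out


print(dropout([1.0, 2.0, 3.0, 4.0], drop_prob=0.5, rng=random.Random(0)))
```

Because the rescaling happens during training, the input passes through untouched at inference time (`training=False`).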

2. DropBlock

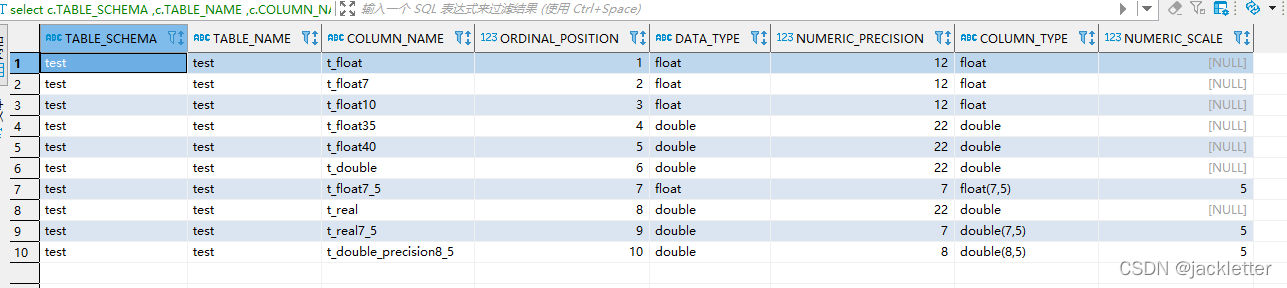

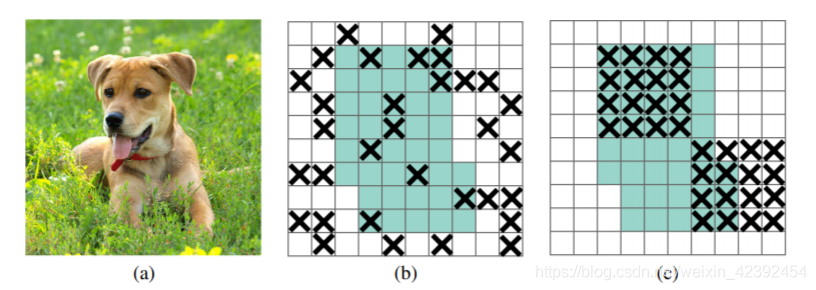

Dropout is widely used as a regularizer for fully connected layers and works well there, but it is much less effective for convolutional layers. The activations of a convolutional layer are spatially correlated. Randomly dropping individual units therefore only removes isolated pixels, and image features are usually much larger than a single pixel. The convolutional layer can still infer the corresponding feature from neighboring units in the receptive field that were not dropped. The information therefore still flows through the convolutional layer to the next layer.
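Below is a toy illustration of this argument. It assumes a perfectly smooth (linear) feature map and uses the average of the four neighbors as a stand-in for what a convolution could learn to infer. Real feature maps are noisier, so this only shows the intuition. `neighbor_average` is a hypothetical helper.

```python
def neighbor_average(fmap, i, j, dropped):
    """Average of the 4-connected neighbors of (i, j) that were not dropped."""
    vals = []
    for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        ni, nj = i + di, j + dj
        inside = 0 <= ni < len(fmap) and 0 <= nj < len(fmap[0])
        if inside and (ni, nj) not in dropped:
            vals.append(fmap[ni][nj])
    return sum(vals) / len(vals) if vals else None


# Smooth toy feature map: the value grows linearly across the image
fmap = [[float(i + j) for j in range(7)] for i in range(7)]

# Dropout-style: only the center unit is dropped, so its neighbors recover it
single = {(3, 3)}
print(neighbor_average(fmap, 3, 3, single), fmap[3][3])  # 6.0 6.0

# DropBlock-style: a 3x3 block around the center is dropped, no neighbor survives
block = {(i, j) for i in range(2, 5) for j in range(2, 5)}
print(neighbor_average(fmap, 3, 3, block))  # None
```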

The authors propose DropBlock, a structured form of dropout. Like dropout, it discards activations at random, but it drops whole contiguous blocks instead of individual units, as shown in figure (c) below. The units in a dropped region can no longer be inferred from their spatially correlated neighbors. The network is therefore forced to reason from other features, and this is what gives DropBlock its regularizing effect.

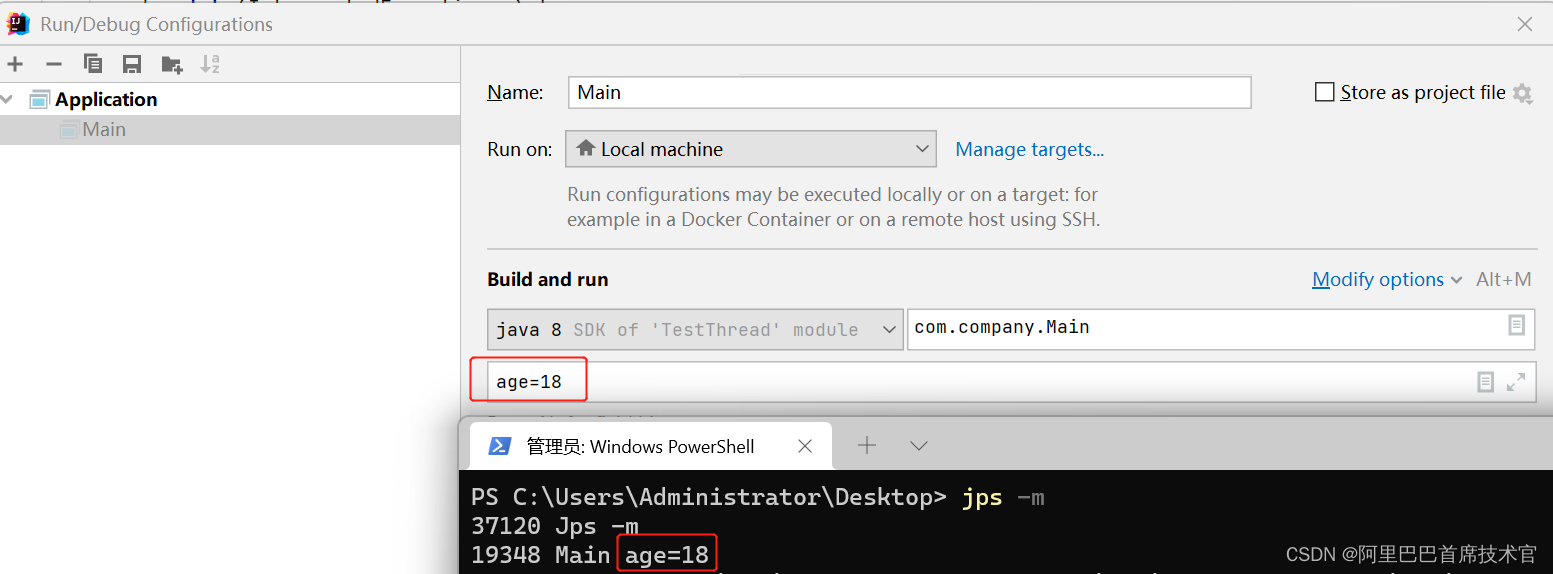

3. The DropBlock algorithm

In short, there are just two steps:

- Randomly select a number of seed points on the feature map (the same operation as in dropout)

- Expand each point into a square of fixed side length centered on it, then deactivate all the neurons inside that block
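The second step can be sketched as follows. The sketch assumes the seed coordinates are already chosen; here they are fixed by hand rather than sampled. Blocks are clipped at the map border. `expand_seeds` is a hypothetical helper, not code from the paper. For an even `block_size` the square cannot be exactly centered, so this sketch places the extra row and column above and to the left of the seed.

```python
def expand_seeds(seeds, height, width, block_size):
    """Build a 0/1 keep-mask by zeroing a block_size x block_size square per seed.

    Squares that would extend past the feature map are clipped at the border.
    """
    mask = [[1] * width for _ in range(height)]
    half = block_size // 2
    for ci, cj in seeds:
        for i in range(ci - half, ci - half + block_size):
            for j in range(cj - half, cj - half + block_size):
                if 0 <= i < height and 0 <= j < width:
                    mask[i][j] = 0  # this unit is dropped
    return mask


for row in expand_seeds([(1, 1), (4, 5)], height=6, width=7, block_size=3):
    print(" ".join(map(str, row)))
# 0 0 0 1 1 1 1
# 0 0 0 1 1 1 1
# 0 0 0 1 1 1 1
# 1 1 1 1 0 0 0
# 1 1 1 1 0 0 0
# 1 1 1 1 0 0 0
```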

However, a few hyperparameters need to be set so that a dropped block does not extend beyond the feature layer. The detailed algorithm is given as pseudocode in the figure below:

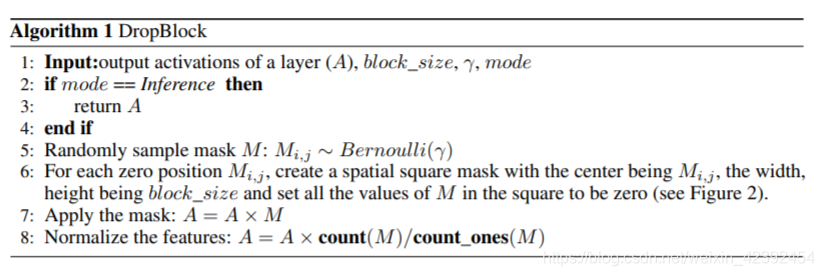

Algorithm steps

- Input: a feature layer A and three parameters, $block\_size$, $\gamma$, and $mode$

- Use $mode$ to check whether we are in the training or the inference stage; at inference time, return the feature layer A unchanged

- Using $\gamma$, draw a binary (0/1) mask M from a Bernoulli distribution

- Treat each zero entry of M as the center of a square of shape $block\_size \times block\_size$, and set every entry of M inside that square to 0

- Multiply A element-wise by M

- Normalize so that the activations keep the same overall scale: $A = A \times \frac{count(M)}{count\_ones(M)}$
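Putting these steps together, a minimal single-channel sketch in plain Python might look like the code below. It makes three assumptions:

- $\gamma$ is the probability that a position becomes a block center, which matches how $\gamma$ is derived from $keep\_prob$.
- Centers are only sampled where the whole block fits inside the map.
- The output is rescaled by count(M)/count_ones(M).

The name `drop_block` is illustrative. A real implementation would work on batched tensors, channel by channel.

```python
import random


def drop_block(fmap, block_size, gamma, training=True, rng=None):
    """Single-channel DropBlock on a 2-D list of floats (illustrative sketch).

    Assumes gamma is the probability that a position becomes a block center and
    that centers are only sampled where the whole block fits inside the map.
    """
    if not training:
        return [row[:] for row in fmap]  # inference mode: return A unchanged
    rng = rng or random.Random()
    h, w = len(fmap), len(fmap[0])
    half = block_size // 2
    mask = [[1] * w for _ in range(h)]  # M: 1 = keep, 0 = drop
    # Sample Bernoulli(gamma) seeds in the valid (h-bs+1) x (w-bs+1) region
    for ci in range(half, h - block_size + half + 1):
        for cj in range(half, w - block_size + half + 1):
            if rng.random() < gamma:
                # Zero a block_size x block_size square around the seed
                for i in range(ci - half, ci - half + block_size):
                    for j in range(cj - half, cj - half + block_size):
                        mask[i][j] = 0
    ones = sum(map(sum, mask))
    if ones == 0:
        return [[0.0] * w for _ in range(h)]  # everything was dropped
    scale = (h * w) / ones  # count(M) / count_ones(M)
    return [[fmap[i][j] * mask[i][j] * scale for j in range(w)]
            for i in range(h)]


feature_map = [[1.0] * 8 for _ in range(8)]
out = drop_block(feature_map, block_size=3, gamma=0.05, rng=random.Random(0))
for row in out:
    print(" ".join(f"{v:.2f}" for v in row))
```

The rescaling keeps the sum of the activations unchanged whenever at least one unit survives. This mirrors the normalization step in the listing.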

The parameter $\gamma$ is computed as:

$$\gamma = \frac{1 - keep\_prob}{block\_size^2} \cdot \frac{feat\_size^2}{(feat\_size - block\_size + 1)^2}$$

- $keep\_prob$ is the probability of keeping a unit, as in dropout; experiments found values between 0.75 and 0.95 to work well

- $feat\_size$ is the side length of the feature map

- $(feat\_size-block\_size+1)^2$ is the number of positions where a block can be centered while still lying entirely inside the feature map; it ensures that the dropped regions do not extend beyond the feature layer
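The following small helper evaluates the formula for a square feature map; the function name is hypothetical. Neighboring blocks can overlap, so the fraction of units actually dropped comes out somewhat below `1 - keep_prob`. The value of $\gamma$ is therefore only an approximation.

```python
def compute_gamma(keep_prob, block_size, feat_size):
    """Seed probability gamma for a square feat_size x feat_size feature map."""
    valid = feat_size - block_size + 1  # side of the region where a block fits
    if valid <= 0:
        raise ValueError("block_size must not exceed feat_size")
    return (1.0 - keep_prob) / block_size**2 * feat_size**2 / valid**2


print(compute_gamma(0.9, block_size=7, feat_size=14))
print(compute_gamma(0.9, block_size=1, feat_size=14))
```

For example, with $keep\_prob = 0.9$, $block\_size = 7$, and a 14 × 14 map, the formula gives $\gamma \approx 0.00625$.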

DropBlock scheduling

A fixed $keep\_prob$ does not work well during training, because a small $keep\_prob$ at the very start of training hurts learning. Gradually decreasing $keep\_prob$ from 1 to the target value makes the model more robust.
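Here is a minimal sketch of such a schedule. It assumes a linear ramp from 1.0 down to the target value over a chosen number of steps, after which the value is held constant. The paper describes a linear decrease. `total_steps` and the default target of 0.9 are illustrative choices, and `scheduled_keep_prob` is a hypothetical name.

```python
def scheduled_keep_prob(step, total_steps, final_keep_prob=0.9):
    """Linearly decrease keep_prob from 1.0 to final_keep_prob, then hold it."""
    if total_steps <= 0:
        return final_keep_prob
    frac = min(step / total_steps, 1.0)  # fraction of the ramp completed
    return 1.0 - frac * (1.0 - final_keep_prob)


for step in (0, 2500, 5000, 10000, 20000):
    print(step, round(scheduled_keep_prob(step, total_steps=10000), 4))
```

At each training step, the current value would be converted to $\gamma$ with the formula above before the mask is sampled.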

4. Experimental results

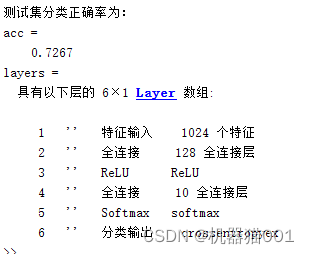

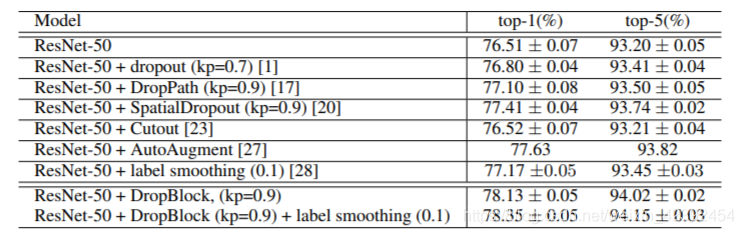

Accuracy of DropBlock

All experiments were run on the ImageNet dataset. Compared with dropout, DropBlock ($block\_size=7$) improves accuracy by about 1.13%.

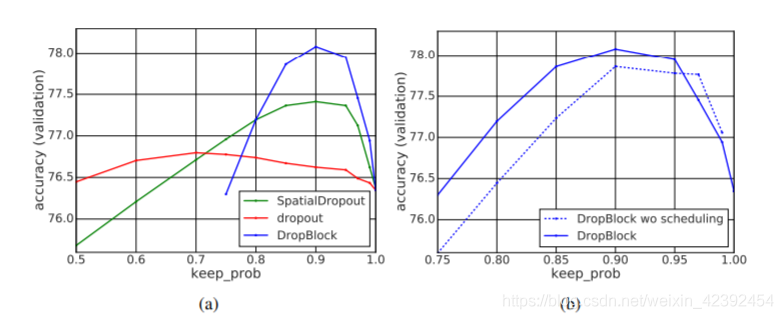

Choosing keep_prob

This experiment compares the effect of dropout and DropBlock at different $keep\_prob$ values. All drop methods were applied to groups 3 and 4 of ResNet. The results show the best performance at $keep\_prob=0.9$.

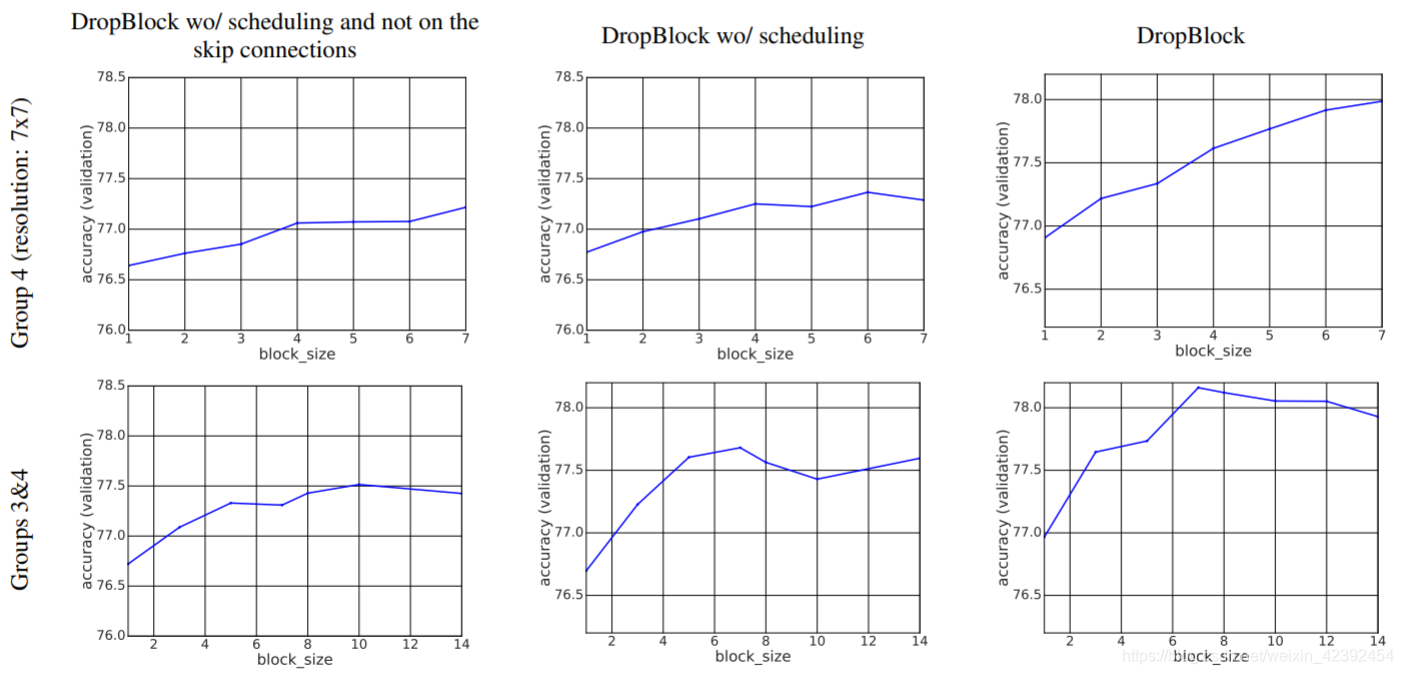

Choosing block_size

Likewise, all drop methods were applied to groups 3 and 4 of ResNet. The results show that $block\_size=7$ works best.

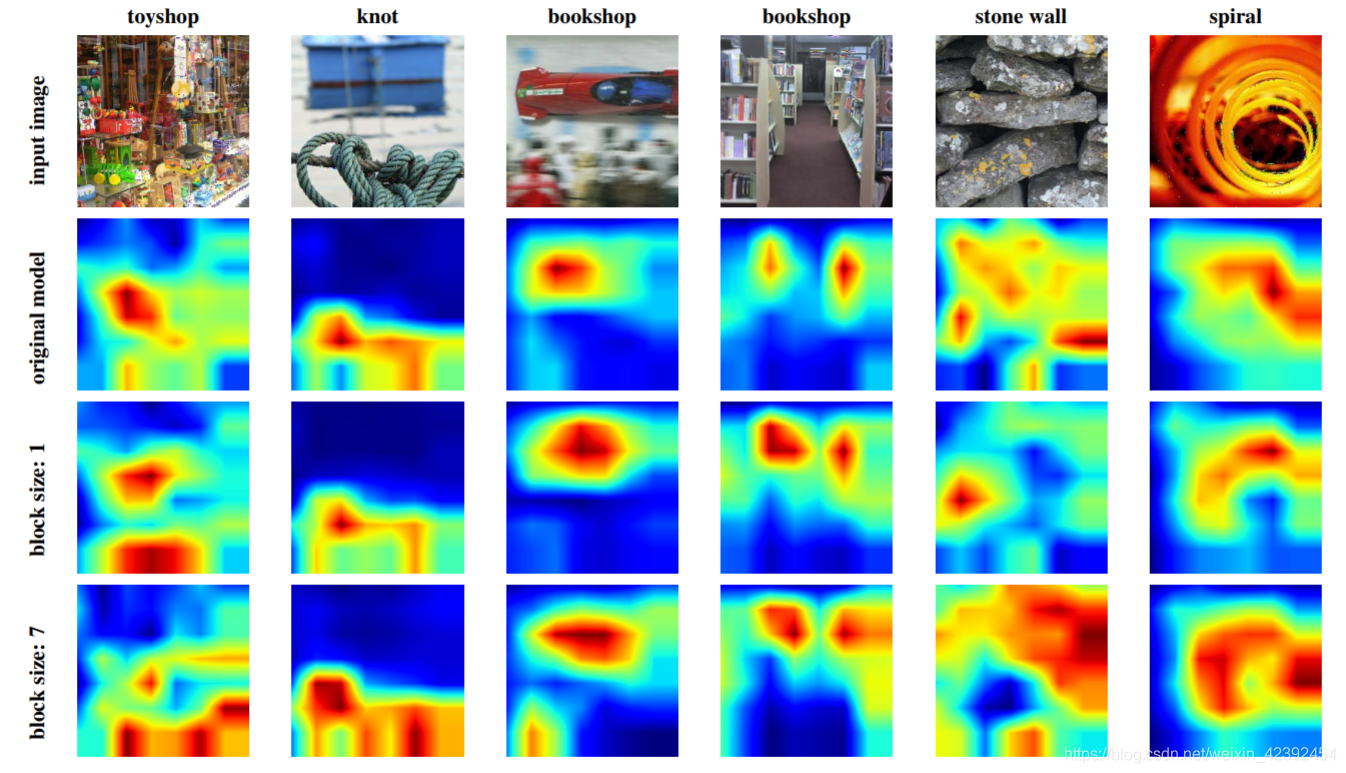

Visualization of results

The authors also compare activation heatmaps. With $block\_size=1$, the activated regions are sometimes larger than without DropBlock and sometimes worse. This is easy to understand, because with $block\_size=1$ DropBlock degenerates into dropout. With $block\_size=7$, the activated regions are clearly much larger.
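Plugging $block\_size=1$ into the formula for $\gamma$ from Section 3 makes the degeneration explicit. Each seed drops only itself and the valid region covers the whole map, so $\gamma$ reduces to dropout's drop rate:

$$
\gamma\Big|_{block\_size=1} = \frac{1-keep\_prob}{1^2}\cdot\frac{feat\_size^2}{(feat\_size-1+1)^2} = 1-keep\_prob
$$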

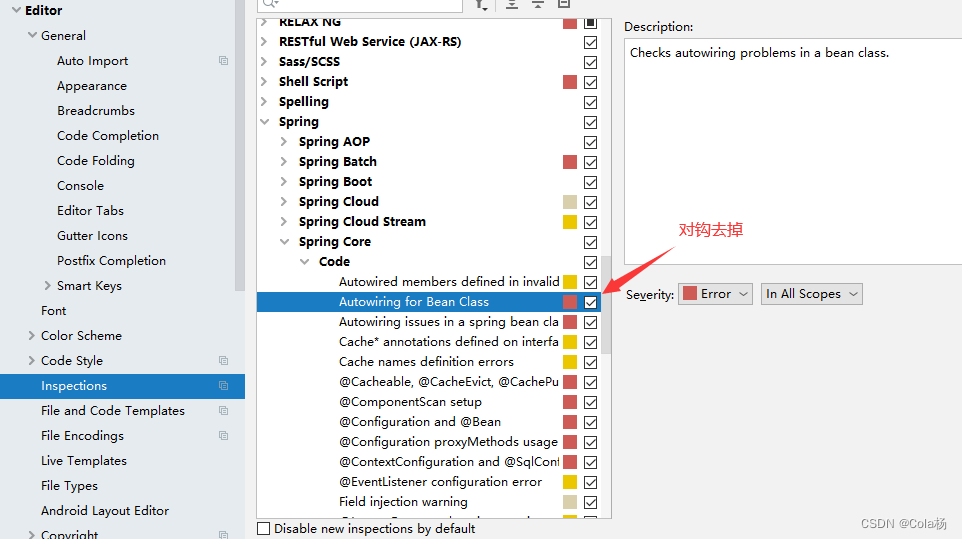

5. Algorithm reproduction

The authors of the paper did not release their code, perhaps because the method is easy to reproduce. The author of this post reproduced it as a custom Keras layer.
