
Feature generation

2022-07-07 21:00:00 【Full stack programmer webmaster】


Feature criteria

Distinctiveness: patterns from different categories should be separable in the feature space.

Invariance: patterns from the same category should vary little in the feature space under changes such as transformation, deformation, and noise. Choose features that are highly discriminative and also have a certain degree of invariance.

Some methods of feature generation

1 Time domain, frequency domain, joint time-frequency: correlation coefficient, FFT, DCT, wavelet, Gabor
2 Statistical, structural, hybrid: histogram, attributed graph
3 Low-level, mid-level, high-level: color, gradient (Roberts, Prewitt, Sobel, difference plus smoothing, HOG), texture (Haar-like, LBP), shape, semantics
4 Model-based: ARMA, LPC
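
As an illustration of one of the low-level gradient features listed above, here is a minimal sketch of the Sobel operator in plain Python. The function name `sobel_gradient` and the list-of-lists image format are assumptions made for this example; border pixels are simply left at zero.

```python
import math

# Sobel kernels for the horizontal (x) and vertical (y) derivatives.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_gradient(image):
    """Return (magnitude, orientation) maps for a 2-D list of gray values.

    Only interior pixels are computed; the one-pixel border stays at 0.
    """
    h, w = len(image), len(image[0])
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0.0
            for dr in range(3):
                for dc in range(3):
                    p = image[r + dr - 1][c + dc - 1]
                    gx += SOBEL_X[dr][dc] * p
                    gy += SOBEL_Y[dr][dc] * p
            mag[r][c] = math.hypot(gx, gy)   # gradient strength
            ang[r][c] = math.atan2(gy, gx)   # gradient direction in radians
    return mag, ang

# A vertical step edge: dark left half, bright right half.
step = [[0, 0, 1, 1] for _ in range(4)]
mag, ang = sobel_gradient(step)
```

Descriptors such as HOG start from magnitude and orientation maps like these and then accumulate orientation histograms over cells.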

Three examples

A SIFT

1 Build a Gaussian pyramid and subtract adjacent levels to obtain the DoG, an approximation of the LoG
2 Find the extrema, then refine each extremum using derivatives
3 Use the Hessian matrix (the autocorrelation function can also be used) to remove edge responses and unstable points
4 Compute the gradient-based descriptor
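
Step 1 above can be sketched as follows. This is a simplified, single-octave illustration in plain Python, not a full SIFT implementation: the names `blur` and `dog_stack`, the base scale `sigma0=1.0`, the factor `k=sqrt(2)`, and the number of levels are all assumptions chosen for the example, and no downsampling between octaves is done.

```python
import math

def gaussian_kernel(sigma):
    """1-D Gaussian kernel truncated at about 3 sigma, normalized to sum to 1."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def _clamp(index, size):
    """Replicate border pixels by clamping an index into [0, size - 1]."""
    return min(max(index, 0), size - 1)

def blur(image, sigma):
    """Separable Gaussian blur of a 2-D list of floats."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    h, w = len(image), len(image[0])
    # Horizontal pass first, then vertical pass on the result.
    rows = [[sum(wt * image[r][_clamp(c + i - radius, w)]
                 for i, wt in enumerate(kernel))
             for c in range(w)] for r in range(h)]
    return [[sum(wt * rows[_clamp(r + i - radius, h)][c]
                 for i, wt in enumerate(kernel))
             for c in range(w)] for r in range(h)]

def dog_stack(image, sigma0=1.0, k=math.sqrt(2), levels=4):
    """Blur at sigma0 * k**i, then subtract adjacent levels (coarser minus finer)."""
    blurred = [blur(image, sigma0 * k ** i) for i in range(levels)]
    return [[[b - a for a, b in zip(row_lo, row_hi)]
             for row_lo, row_hi in zip(lo, hi)]
            for lo, hi in zip(blurred, blurred[1:])]
```

Step 2 would then compare each DoG value with its neighbors in the same level and in the two adjacent levels; that search is left out here to keep the sketch short.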

For the detailed steps, see 《Image Local Invariance Features and Description》 and http://underthehood.blog.51cto.com/2531780/658350. For SIFT source code, please refer to

B Bag of Words

1 Clustering: build the dictionary
2 Map the features onto the dictionary, then train and classify with an SVM or another classifier

In more detail:

1 Feature extraction
2 Codebook generation
3 Coding (hard or soft)
4 Pooling (average or max)
5 Classification
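
The difference between hard and soft coding, and between average and max pooling, can be sketched as below. This is only an illustration: the function names, the tiny 2-D codebook, and the `beta` sharpness parameter of the soft assignment are assumptions for the example, and other soft-coding schemes exist.

```python
import math

def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def hard_code(desc, codebook):
    """Hard coding: 1 at the nearest codeword, 0 everywhere else."""
    nearest = min(range(len(codebook)), key=lambda j: sq_dist(desc, codebook[j]))
    return [1.0 if j == nearest else 0.0 for j in range(len(codebook))]

def soft_code(desc, codebook, beta=1.0):
    """Soft coding: weights proportional to exp(-beta * squared distance)."""
    weights = [math.exp(-beta * sq_dist(desc, word)) for word in codebook]
    total = sum(weights)
    return [w / total for w in weights]

def pool(codes, mode="average"):
    """Pool per-descriptor codes into a single image-level vector."""
    columns = list(zip(*codes))
    if mode == "max":
        return [max(col) for col in columns]
    return [sum(col) / len(col) for col in columns]

# Toy data: a 2-word codebook and three descriptors from one image.
codebook = [[0.0, 0.0], [1.0, 1.0]]
descs = [[0.1, 0.0], [0.9, 1.0], [1.0, 0.8]]
hard = [hard_code(d, codebook) for d in descs]
image_vector = pool(hard, "average")
```

With hard coding, average pooling yields the normalized word-count histogram, while max pooling only records whether each word occurred at all.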

“Bag of words is now also a very popular way to describe image features in computer vision.

The general idea is this. Suppose there are 5 classes of images, with 10 images in each class. Each image is divided into patches (either by rigid partitioning or by keypoint detection, as in SIFT), so that each image is represented by many patches and each patch by a feature vector. If we use SIFT, an image may have several hundred patches, and the feature vector of each patch has 128 dimensions.

The next step is to build the bag-of-words model. Suppose the dictionary size is 100, that is, it contains 100 words. We can then run the K-means algorithm on all patches with k=100. When k-means converges, we also obtain the final centroid of each cluster. These 100 centroids (each 128-dimensional) are the 100 words of the dictionary, and the dictionary is built.

How is the dictionary used once it is built? First initialize a histogram h with 100 bins, all set to 0. Each image has many patches. For each patch, compute its distance to every centroid, find the nearest centroid, and add 1 to the corresponding bin of h. After all patches of the image have been processed, we have a histogram with 100 bins. Normalize it, and use this 100-dimensional vector to represent the image.

Once all images have been processed, we can train and predict for classification or clustering.”
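
The dictionary-building and histogram steps described in the quote can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions: `build_codebook` is a basic k-means with random initialization (the names, the `iters` and `seed` parameters, and the 1-D toy descriptors are all made up for the example), whereas a real pipeline would use k=100 and 128-dimensional SIFT descriptors as in the text.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest_index(point, centers):
    """Index of the center closest to the given point."""
    return min(range(len(centers)), key=lambda j: sq_dist(point, centers[j]))

def build_codebook(descriptors, k, iters=20, seed=0):
    """Basic k-means: the final centroids are the words of the dictionary."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(descriptors, k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in descriptors:
            clusters[nearest_index(p, centers)].append(p)
        new_centers = []
        for j, members in enumerate(clusters):
            if members:
                dim = len(members[0])
                new_centers.append(
                    [sum(m[d] for m in members) / len(members) for d in range(dim)])
            else:
                new_centers.append(centers[j])  # keep the center of an empty cluster
        if new_centers == centers:  # assignments are stable, so k-means has converged
            break
        centers = new_centers
    return centers

def bow_histogram(descriptors, codebook):
    """Count the nearest word for each patch, then normalize the histogram."""
    hist = [0.0] * len(codebook)
    for d in descriptors:
        hist[nearest_index(d, codebook)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist

# Toy usage: descriptors from all training images, then one image to encode.
all_patches = [[0.0], [1.0], [2.0], [100.0], [101.0], [102.0]]
codebook = sorted(build_codebook(all_patches, k=2))
image_vector = bow_histogram([[0.5], [1.5], [99.0], [2.5]], codebook)
```

Each image ends up as one fixed-length vector regardless of how many patches it has, which is what lets a standard classifier such as an SVM be trained on the result.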

C Image saliency

1 Multi-scale contrast
2 Center-surround histogram
3 Color spatial distribution
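
The text only names these three cues, so the following is a hedged sketch of one common reading of the second one: the chi-square distance between the gray-level histogram of a center window and that of the ring surrounding it. The function names, the 4-bin histogram, and the `margin` parameter are assumptions for this example, not details from the original post.

```python
def histogram(values, bins=4, vmax=256):
    """Normalized histogram of gray values in the range [0, vmax)."""
    hist = [0.0] * bins
    for v in values:
        hist[min(int(v * bins / vmax), bins - 1)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist

def chi_square(p, q):
    """Chi-square distance between two normalized histograms (0 = identical)."""
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(p, q) if a + b > 0)

def center_surround_contrast(image, top, left, height, width, margin):
    """Compare the histogram of a window with that of its surrounding ring."""
    h, w = len(image), len(image[0])
    center, surround = [], []
    for r in range(max(0, top - margin), min(h, top + height + margin)):
        for c in range(max(0, left - margin), min(w, left + width + margin)):
            inside = top <= r < top + height and left <= c < left + width
            (center if inside else surround).append(image[r][c])
    return chi_square(histogram(center), histogram(surround))

# A bright 2x2 square on a dark 6x6 background.
img = [[0] * 6 for _ in range(6)]
for r in (2, 3):
    for c in (2, 3):
        img[r][c] = 255
salient = center_surround_contrast(img, 2, 2, 2, 2, margin=2)
```

A window that tightly encloses an object whose appearance differs from its surroundings scores close to 1, while a window over a uniform region scores close to 0.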

Publisher: Full stack programmer webmaster. When reprinting, please credit the source: https://javaforall.cn/116276.html Link to the original text: https://javaforall.cn

边栏推荐

- openGl超级宝典学习笔记 (1)第一个三角形「建议收藏」

- C language helps you understand pointers from multiple perspectives (1. Character pointers 2. Array pointers and pointer arrays, array parameter passing and pointer parameter passing 3. Function point

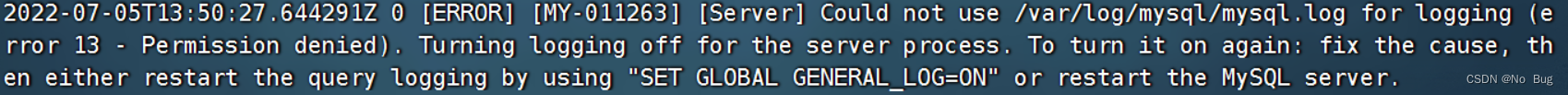

- ERROR: 1064 (42000): You have an error in your SQL syntax; check the manual that corresponds to your

- 软件缺陷静态分析 CodeSonar 5.2 新版发布

- Validutil, "Rethinking the setting of semi supervised learning on graphs"

- 【奖励公示】第22期 2022年6月奖励名单公示:社区明星评选 | 新人奖 | 博客同步 | 推荐奖

- Klocwork 代码静态分析工具

- Airiot helps the urban pipe gallery project, and smart IOT guards the lifeline of the city

- Cocos2d-x 游戏存档[通俗易懂]

- guava多线程,futurecallback线程调用不平均

猜你喜欢

I Basic concepts

Airiot helps the urban pipe gallery project, and smart IOT guards the lifeline of the city

Helix QAC 2020.2 new static test tool maximizes the coverage of standard compliance

C语言多角度帮助你深入理解指针(1. 字符指针2. 数组指针和 指针数组 、数组传参和指针传参3. 函数指针4. 函数指针数组5. 指向函数指针数组的指针6. 回调函数)

ERROR: 1064 (42000): You have an error in your SQL syntax; check the manual that corresponds to your

MySQL storage expression error

Ubuntu安装mysql8遇到的问题以及详细安装过程

Intelligent software analysis platform embold

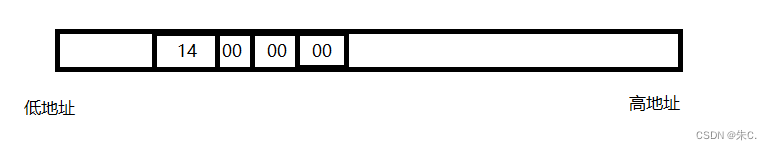

C语言 整型 和 浮点型 数据在内存中存储详解(内含原码反码补码,大小端存储等详解)

How does codesonar help UAVs find software defects?

随机推荐

H3C s7000/s7500e/10500 series post stack BFD detection configuration method

如何满足医疗设备对安全性和保密性的双重需求?

HOJ 2245 浮游三角胞(数学啊 )

使用高斯Redis实现二级索引

Cantata9.0 | new features

The latest version of codesonar has improved functional security and supports Misra, c++ parsing and visualization

恶魔奶爸 A1 语音听力初挑战

[concept of network principle]

SQL注入报错注入函数图文详解

寫一下跳錶

Mysql子查询关键字的使用方式(exists)

目标:不排斥 yaml 语法。争取快速上手

目前股票开户安全吗?可以直接网上开户吗。

How to meet the dual needs of security and confidentiality of medical devices?

openGl超级宝典学习笔记 (1)第一个三角形「建议收藏」

ISO 26262 - 基于需求测试以外的考虑因素

【网络原理的概念】

恶魔奶爸 B3 少量泛读,完成两万词汇量+

Differences and connections between MinGW, mingw-w64, tdm-gcc and other tool chains "suggestions collection"

How to choose fund products? What fund is suitable to buy in July 2022?