YYDS Practical Roundup: Embedding Matrix

2022-07-05 03:04:00 【LolitaAnn】

This article shares my notes. The content mainly comes from Andrew Ng's (Wu Enda's) deep learning course. [1]

Embedding matrix

Earlier we talked about word embeddings. In this section, let's look at the embedding matrix.

When you learn word embeddings, what you are actually doing is learning an embedding matrix that turns the pile of one-hot vectors into embeddings.

In other words, the end result is a single embedding matrix, not a separately stored vector for each word.

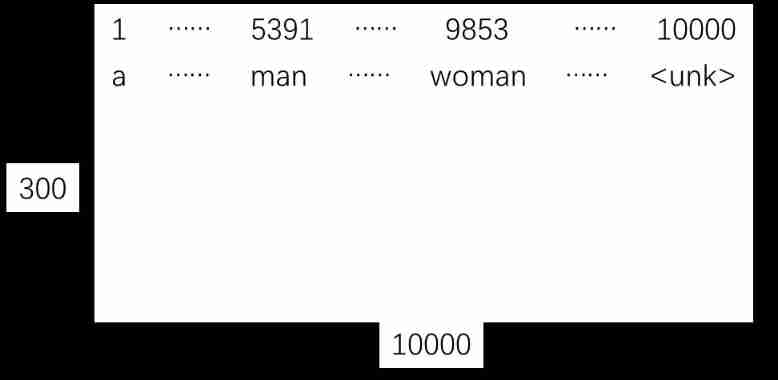

Continuing our previous example, suppose your vocabulary has 10,000 words, and you choose 300 features for the word embedding.

What you end up with is then a 300×10000 matrix.

We call this big matrix the word embedding matrix (denoted E in the course).
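A minimal NumPy sketch of the matrix's shape, assuming the convention used here (300 rows of features, one column per word). The variable names are illustrative, and the random values merely stand in for numbers that training would learn.

```python
import numpy as np

# Sizes from the running example: 10,000-word vocabulary, 300 features.
vocab_size = 10_000
n_features = 300

# Stand-in embedding matrix E; in practice these values are learned.
rng = np.random.default_rng(0)
E = rng.standard_normal((n_features, vocab_size))

print(E.shape)    # (300, 10000)
print(E.size)     # 3000000 numbers to learn

# Under this layout, column j holds the 300-dimensional embedding of word j.
e_5 = E[:, 5]
print(e_5.shape)  # (300,)
```

Even this modest vocabulary already gives three million entries, which is why how you read a single word's vector out of E matters.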

Getting a word's e vector from the embedding matrix

Method 1

Recall our one-hot vectors. The length of each vector equals the size of the vocabulary; the entry at the word's position in the vocabulary is 1, and all other entries are 0.

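So to get a word's embedding, we just multiply the word embedding matrix by that word's one-hot vector.

This gives the e vector of the word at the corresponding position. Writing E for the embedding matrix, o_j for the one-hot vector of word j, and e_j for its embedding:

$$E \, o_j = e_j, \qquad (300 \times 10000)\,(10000 \times 1) = (300 \times 1)$$

Anyone who has studied linear algebra should have no trouble with this formula: because o_j has a single 1 at position j, the product picks out exactly column j of E.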

Let's take a simple example .
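A small worked sketch with a toy vocabulary of 4 words and 3 features. The matrix entries are made-up numbers, and `one_hot` is a hypothetical helper written for this illustration; it shows that multiplying by a one-hot vector simply selects one column.

```python
import numpy as np

# Toy embedding matrix E: 3 features (rows) x 4 words (columns).
# The numbers are invented purely for illustration.
E = np.array([
    [0.1,  0.5, -0.3,  0.8],
    [0.2, -0.4,  0.7,  0.0],
    [0.9,  0.6,  0.1, -0.2],
])

def one_hot(index: int, size: int) -> np.ndarray:
    """Column vector of length `size` with a 1 at `index` and 0 elsewhere."""
    o = np.zeros((size, 1))
    o[index, 0] = 1.0
    return o

o_2 = one_hot(2, E.shape[1])  # one-hot vector for word 2, shape (4, 1)
e_2 = E @ o_2                 # (3 x 4) @ (4 x 1) -> (3 x 1)

print(e_2.shape)                          # (3, 1)
print(np.allclose(e_2.ravel(), E[:, 2]))  # True: the product is column 2 of E
```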

Method 2

In theory the method above works, but it is not how things are done in practice. In practice we usually use a dedicated function that looks up the word directly in the word embedding matrix and returns the vector at the corresponding position.

In practice, you will never use one 10000 Of length word embedding, Because it's really a little short . So you should be exposed to a very large matrix . If a large matrix is multiplied by a super long one-hot If the vectors of are multiplied , Its computational overhead is very large .

边栏推荐

- D3js notes

- Design and implementation of campus epidemic prevention and control system based on SSM

- El tree whether leaf node or not, the drop-down button is permanent

- 【LeetCode】111. Minimum depth of binary tree (2 brushes of wrong questions)

- [micro service SCG] 33 usages of filters

- Devtools的简单使用

- IPv6 experiment

- Port, domain name, protocol.

- Pat class a 1160 forever (class B 1104 forever)

- Moco V2 literature research [self supervised learning]

猜你喜欢

this+闭包+作用域 面试题

Zabbix

Design and implementation of community hospital information system

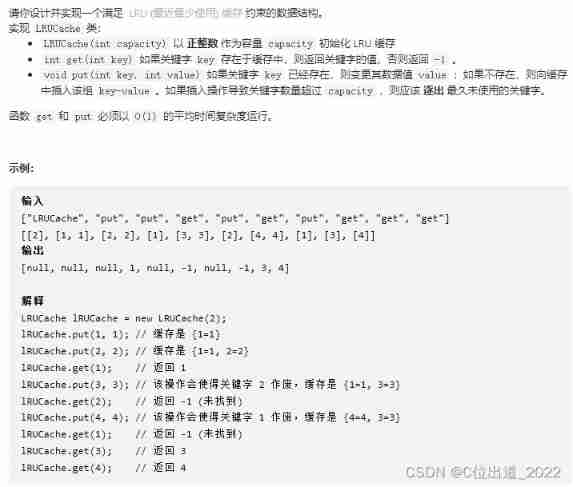

LeetCode146. LRU cache

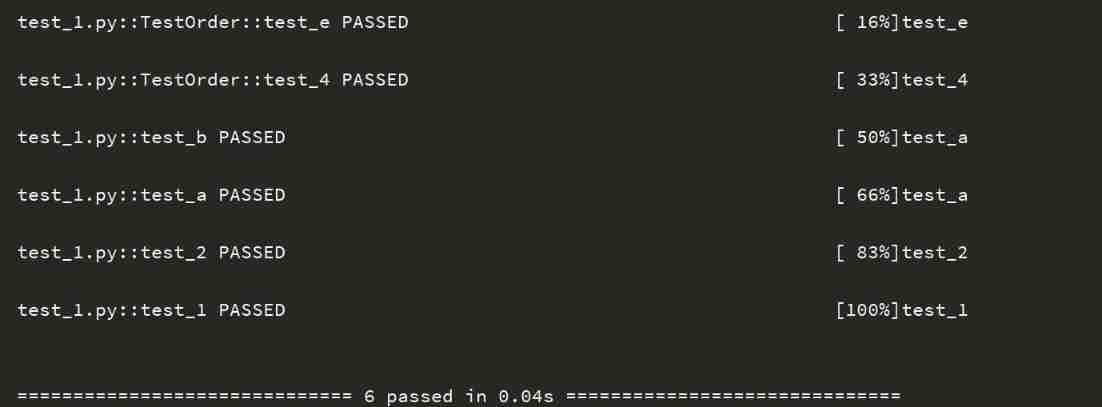

Pytest (4) - test case execution sequence

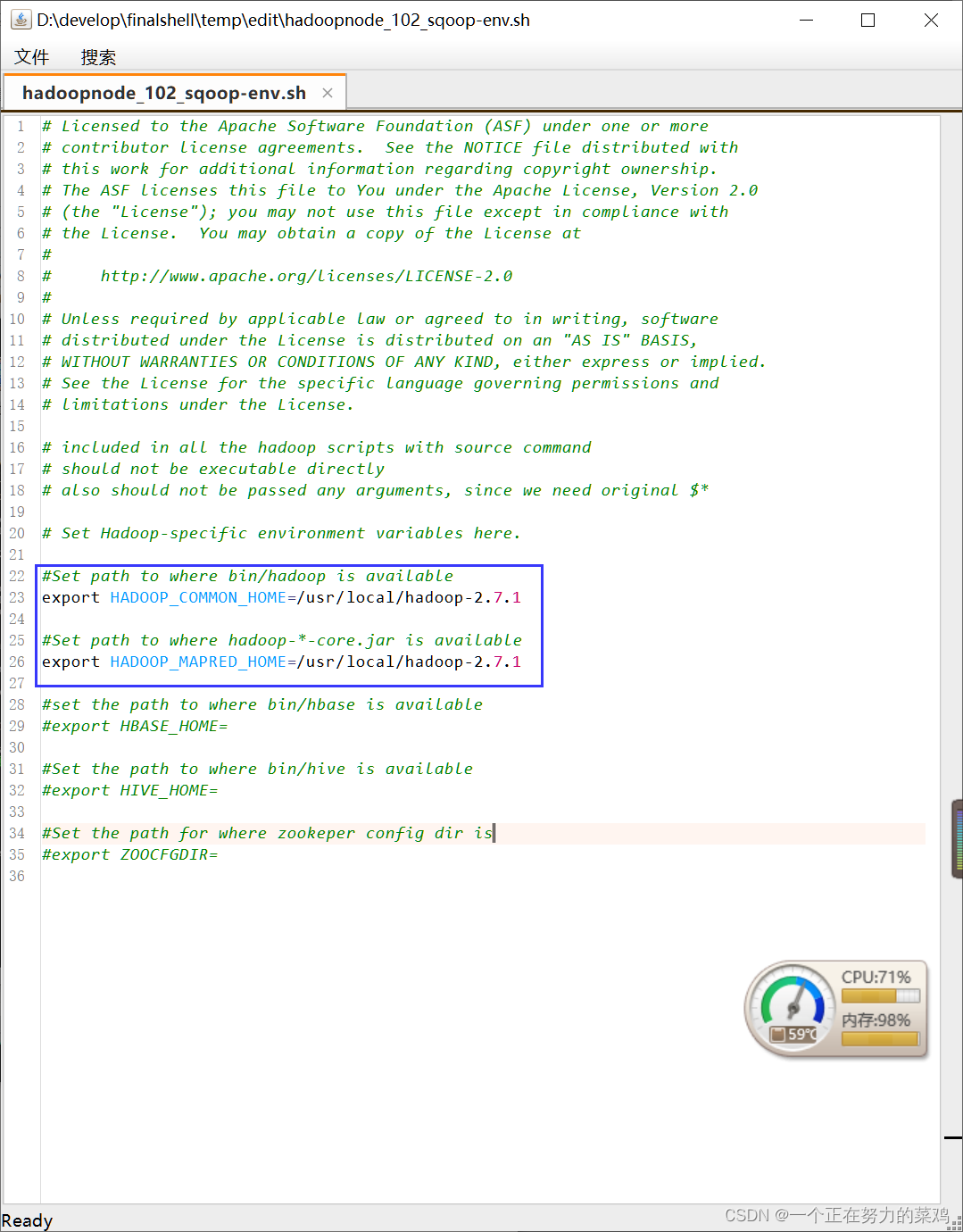

Sqoop installation

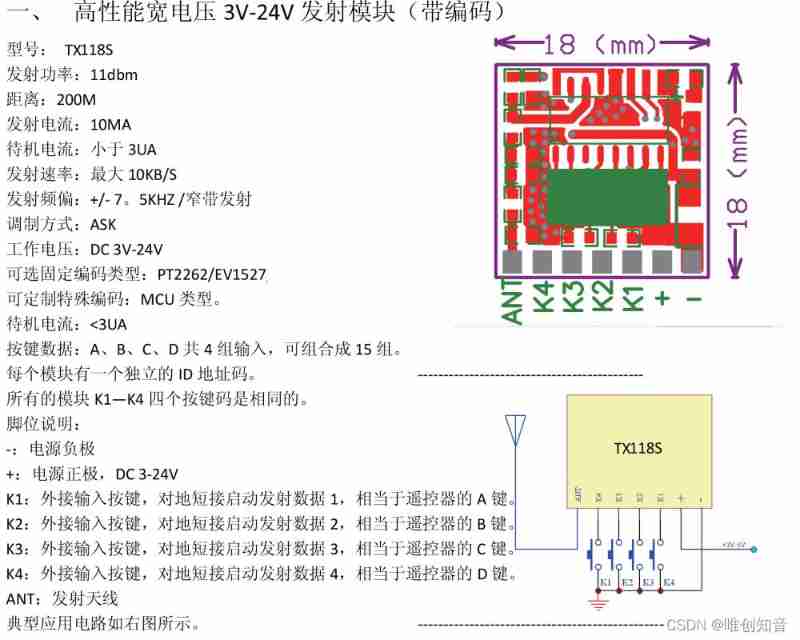

Voice chip wt2003h4 B008 single chip to realize the quick design of intelligent doorbell scheme

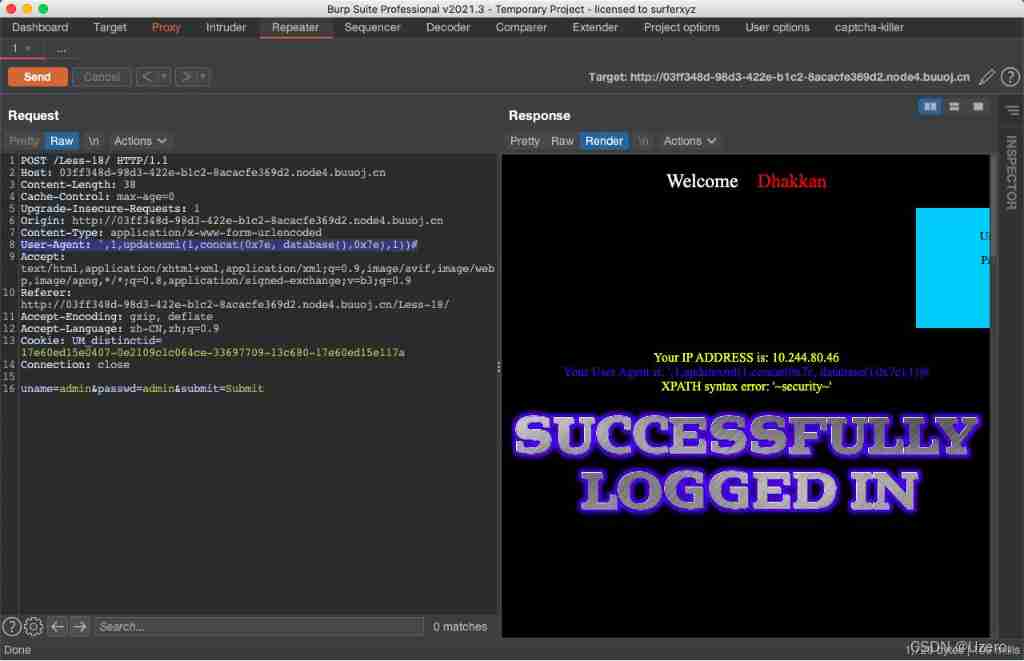

SQL injection exercise -- sqli Labs

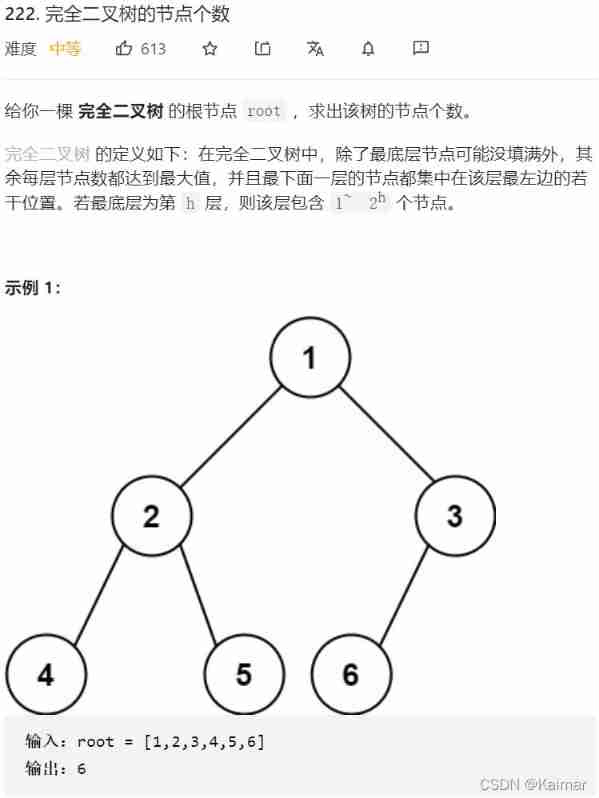

【LeetCode】222. The number of nodes of a complete binary tree (2 mistakes)

Design of kindergarten real-time monitoring and control system

随机推荐

ASP. Net core 6 framework unveiling example demonstration [01]: initial programming experience

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

Anchor free series network yolox source code line by line explanation Part 2 (a total of 10, ensure to explain line by line, after reading, you can change the network at will, not just as a participan

Idea inheritance relationship

Clean up PHP session files

Bumblebee: build, deliver, and run ebpf programs smoothly like silk

ASP. Net core 6 framework unveiling example demonstration [01]: initial programming experience

2022/02/13

LeetCode 237. Delete nodes in the linked list

C file in keil cannot be compiled

Cut! 39 year old Ali P9, saved 150million

Apache build web host

tuple and point

Elfk deployment

el-select,el-option下拉选择框

Design and practice of kubernetes cluster and application monitoring scheme

Good documentation

1.五层网络模型

Machine learning experiment report 1 - linear model, decision tree, neural network part

Last words record