
Sqoop: Everything You Want Is Here

2022-07-06 17:40:00 【Bald Second Senior brother】


Introduction to Sqoop:

Sqoop is an Apache tool for transferring data between Hadoop and relational database servers.

Data import:

Imports data from relational databases such as MySQL and Oracle into Hadoop storage systems such as HDFS, Hive, and HBase.

Data export:

Exports data from the Hadoop file system to relational databases such as MySQL.

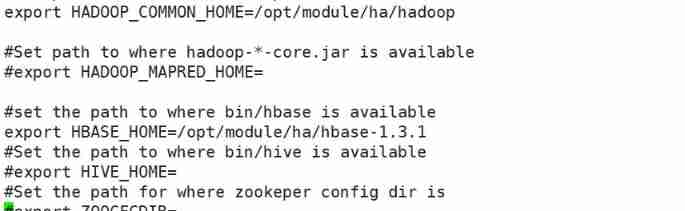

Configuration files:

1. Environment variable configuration

export SQOOP_HOME=/opt/module/ha/sqoop
export PATH=$PATH:$SQOOP_HOME/bin

2. Configure the sqoop-env.sh file in Sqoop's conf directory

Set the Hadoop, Hive, and HBase paths in it as needed.
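A minimal sketch of that file, assuming it lives at `$SQOOP_HOME/conf/sqoop-env.sh` and is created from the `conf/sqoop-env-template.sh` that ships with Sqoop. The variable names are the ones used in that template; every path below is a placeholder for your own installation, and lines for components you do not use can stay commented out.

```shell
# $SQOOP_HOME/conf/sqoop-env.sh
# Create it from the shipped template first:
#   cp $SQOOP_HOME/conf/sqoop-env-template.sh $SQOOP_HOME/conf/sqoop-env.sh

# Where bin/hadoop and the Hadoop MapReduce jars live (placeholder paths)
export HADOOP_COMMON_HOME=/opt/module/hadoop
export HADOOP_MAPRED_HOME=/opt/module/hadoop

# Optional: only needed for --hive-import and create-hive-table
export HIVE_HOME=/opt/module/hive

# Optional: only needed for imports with --hbase-table
export HBASE_HOME=/opt/module/hbase

# Optional: ZooKeeper config directory, used together with HBase
export ZOOCFGDIR=/opt/module/zookeeper/conf
```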

Using Sqoop

codegen

Purpose:

Generates code for interacting with database records: it maps a table in the relational database to a Java class that has one field for each column.

Example:

bin/sqoop codegen \
  --connect jdbc:mysql://ljx:3306/company \
  --username root \
  --password 123456 \
  --table staff \
  --bindir /opt/module/sqoop/staff \
  --class-name Staff \
  --fields-terminated-by "\t" \
  --outdir /opt/module/sqoop/staff

Note: the --bindir and --outdir directories must already exist.
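Since the output directories have to exist beforehand, a run can simply create them first. A sketch reusing the connection details above; `OUT_DIR` is a hypothetical variable name, and the exact set of generated files (typically `Staff.java` plus compiled classes and a `Staff.jar`) may vary by Sqoop version.

```shell
#!/usr/bin/env bash
OUT_DIR=/opt/module/sqoop/staff   # same directory as in the example

# mkdir -p is a no-op if the directory already exists
mkdir -p "$OUT_DIR"

bin/sqoop codegen \
  --connect jdbc:mysql://ljx:3306/company \
  --username root \
  --password 123456 \
  --table staff \
  --class-name Staff \
  --fields-terminated-by "\t" \
  --bindir "$OUT_DIR" \
  --outdir "$OUT_DIR"

# Inspect the generated source, classes, and jar
ls -l "$OUT_DIR"
```

The resulting jar is what the merge example later passes to `--jar-file`.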

create-hive-table

Purpose:

Imports a table definition into Hive: generates a Hive table whose structure matches that of the table in the relational database.

Example:

sqoop create-hive-table \
  --connect jdbc:mysql://ljx:3306/sample \
  --username root \
  --password 123456 \
  --table student \
  --hive-table hive_student

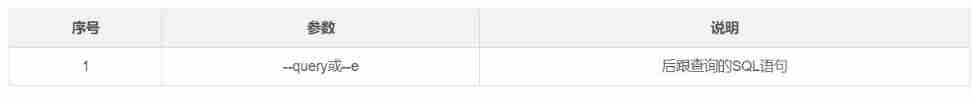

eval

Purpose:

Evaluates a SQL statement and displays the result on the console. It is often used before an import to check that the SQL statement is correct and that the data looks normal.

Example:

sqoop eval --connect jdbc:mysql://ljx:3306/mysql --username root --password 123456 --query "select * from user"
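As a pre-import sanity check, eval can also run an aggregate query so you see the row count before moving any data. A sketch against the `student` table in the `sample` database used elsewhere in this post; `-e` is the short form of `--query`. Note that eval executes whatever statement it is given, including INSERT or UPDATE, so it is not read-only.

```shell
#!/usr/bin/env bash
# How many rows would an import of sample.student move?
sqoop eval \
  --connect jdbc:mysql://ljx:3306/sample \
  --username root \
  --password 123456 \
  --query "SELECT COUNT(*) FROM student"

# Peek at a few records; -e is the short form of --query
sqoop eval \
  --connect jdbc:mysql://ljx:3306/sample \
  --username root \
  --password 123456 \
  -e "SELECT * FROM student LIMIT 5"
```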

export (very important)

Purpose:

Exports data from HDFS (including the directories that back Hive tables) to a relational database.

Example:

sqoop export \
  --connect jdbc:mysql://ljx:3306/sample \
  --username root \
  --password 123456 \
  --table student \
  --export-dir /user/hive/warehouse/jjj \
  --input-fields-terminated-by "\t" \
  -m 1
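Two details often matter in practice. First, sqoop export does not create the target table, so `student` must already exist in MySQL with compatible columns. Second, Hive writes NULL as the literal `\N` in text files, which export would otherwise load as the text `\N` (or fail to parse in non-string columns). A sketch with the NULL-handling options added, assuming the Hive table directory is `/user/hive/warehouse/jjj` under Hive's default warehouse location.

```shell
#!/usr/bin/env bash
# The target table sample.student must already exist in MySQL.
# '\\N' tells Sqoop that Hive's \N marker means SQL NULL.
sqoop export \
  --connect jdbc:mysql://ljx:3306/sample \
  --username root \
  --password 123456 \
  --table student \
  --export-dir /user/hive/warehouse/jjj \
  --input-fields-terminated-by "\t" \
  --input-null-string '\\N' \
  --input-null-non-string '\\N' \
  -m 1
```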

import (important)

Purpose:

Imports a table from the database into HDFS (including Hive and HBase). When importing into Hive, the corresponding Hive table is created automatically if it does not already exist.

Example: import the data of the user table in MySQL into HDFS

sqoop import --connect jdbc:mysql://ljx:3306/mysql --username root --password 123456 --table user
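By default the command above writes to an HDFS directory named after the table under the current user's HDFS home directory (for example `/user/<user>/user`) and uses 4 map tasks. A sketch of the same import with the output location, delimiter, and parallelism set explicitly; `/sqoop/mysql_user` is a hypothetical directory, and `part-m-00000` is the usual name of the first map task's output file. With `-m 1` a single mapper runs, so no split column is needed.

```shell
#!/usr/bin/env bash
TARGET=/sqoop/mysql_user   # hypothetical HDFS output directory

sqoop import \
  --connect jdbc:mysql://ljx:3306/mysql \
  --username root \
  --password 123456 \
  --table user \
  --target-dir "$TARGET" \
  --delete-target-dir \
  --fields-terminated-by '\t' \
  -m 1

# With a single map task, all rows land in one file
hdfs dfs -ls "$TARGET"
hdfs dfs -cat "$TARGET/part-m-00000" | head -n 5
```

`--delete-target-dir` removes the output directory of a previous run, so the command can be rerun without an "already exists" error.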

import-all-tables

Purpose:

Imports all tables in an RDBMS into HDFS, with each table stored in its own HDFS directory.

Restrictions:

Each table must have a single-column primary key, or the --autoreset-to-one-mapper option must be specified.

All columns of each table are imported; you cannot import only selected columns.

Example:

sqoop import-all-tables \
  --connect jdbc:mysql://ljx:3306/sample \
  --username root \
  --password 123456 \
  --warehouse-dir /all_table
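When some tables lack a single-column primary key, `--autoreset-to-one-mapper` makes Sqoop fall back to one mapper for just those tables, and `--exclude-tables` skips tables you do not want. A sketch, where `tmp_log` is a hypothetical table name in `sample`:

```shell
#!/usr/bin/env bash
# Import every table of "sample" into /all_table/<table_name>,
# using one mapper for tables without a primary key and skipping
# the hypothetical table tmp_log.
sqoop import-all-tables \
  --connect jdbc:mysql://ljx:3306/sample \
  --username root \
  --password 123456 \
  --warehouse-dir /all_table \
  --autoreset-to-one-mapper \
  --exclude-tables tmp_log

# One subdirectory per imported table
hdfs dfs -ls /all_table
```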

import-mainframe

Purpose:

Imports datasets directly from a mainframe into HDFS. This command is rather heavy-handed and not very different from import-all-tables; I will study it when I need it.

job

Purpose:

Creates a saved Sqoop job. The job is not executed when it is created; it has to be run manually.

Example:

Create a job:

sqoop job --create myjob -- import-all-tables \
  --connect jdbc:mysql://ljx:3306/mysql \
  --username root \
  --password 123456 \
  --warehouse-dir /user/all_user

Note: when defining a job, there must be a space between -- and the Sqoop tool to run (-- import-all-tables, not --import-all-tables).
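Once created, a saved job is managed through the same `job` tool. A sketch of the usual lifecycle for the `myjob` job defined above. By default the password is not stored with the job, so `--exec` may prompt for it; storing it requires setting `sqoop.metastore.client.record.password` to `true` in `sqoop-site.xml`.

```shell
#!/usr/bin/env bash
# List all saved jobs
sqoop job --list

# Show the stored parameters of myjob
sqoop job --show myjob

# Run the job manually (this is the step that actually transfers data)
sqoop job --exec myjob

# Delete the job definition when it is no longer needed
sqoop job --delete myjob
```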

list-databases

Purpose:

Lists the databases available on the server.

Example:

sqoop list-databases --connect jdbc:mysql://ljx:3306 --username root --password 123456

list-tables

Purpose:

Lists the tables available in the database.

Example:

sqoop list-tables --connect jdbc:mysql://ljx:3306/mysql --username root --password 123456

merge

Purpose:

Merges the datasets in two HDFS directories and writes the result to a specified directory. Records are matched on the merge key; where both datasets contain the same key, the record from the newer dataset (--new-data) replaces the one from the older dataset (--onto).

Example:

bin/sqoop merge \
  --new-data /tdata/newdata/ \
  --onto /tdata/olddata/ \
  --target-dir /tdata/merged \
  --jar-file /opt/module/sqoop/staff/Staff.jar \
  --class-name Staff \
  --merge-key id

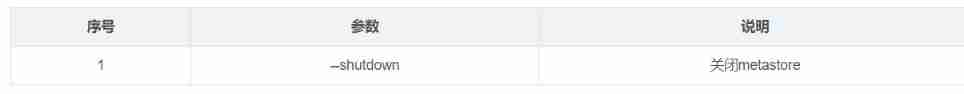
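Conceptually, merge keeps one row per `--merge-key` value and lets the row from `--new-data` win over the one from `--onto`; rows present in only one dataset are kept as they are. The sketch below imitates that rule locally with `awk` on two small, made-up tab-separated files, assuming the key is the first column. It only illustrates the semantics: Sqoop itself runs the merge as a MapReduce job using the class from `--jar-file`, so its output row order is not guaranteed.

```shell
#!/usr/bin/env bash
set -euo pipefail

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT

# Made-up sample data: id, name, age
printf '1\tzhangsan\t20\n2\tlisi\t21\n' > "$workdir/olddata.tsv"
printf '2\tlisi\t22\n3\twangwu\t23\n' > "$workdir/newdata.tsv"

# Read the old file first and the new file second, so a new row
# overwrites an old row with the same id (the "merge key").
awk -F'\t' '
  !($1 in row) { order[++n] = $1 }   # remember first-seen order of each key
  { row[$1] = $0 }                   # later input overwrites earlier rows
  END { for (i = 1; i <= n; i++) print row[order[i]] }
' "$workdir/olddata.tsv" "$workdir/newdata.tsv" > "$workdir/merged.tsv"

cat "$workdir/merged.tsv"
```

Running it prints three rows: id 1 unchanged from the old file, id 2 with the updated age 22 from the new file, and id 3 added from the new file.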

metastore

Purpose:

Runs a shared repository for Sqoop job metadata. If the service is not running, job metadata is stored by default in ~/.sqoop; this location can be changed in sqoop-site.xml.

Example:

Start the Sqoop metastore service:

sqoop metastore
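With the metastore service running, other clients can share saved jobs by pointing `--meta-connect` at it. A sketch assuming the service runs on host `ljx` with the default port 16000 (configurable via `sqoop.metastore.server.port` in `sqoop-site.xml`); `shared_student_import` is a hypothetical job name. To make clients use the shared metastore automatically, `sqoop.metastore.client.autoconnect.url` can be set in `sqoop-site.xml` instead of passing `--meta-connect` every time.

```shell
#!/usr/bin/env bash
# Assumes `sqoop metastore` is already running on host ljx (default port 16000)
META=jdbc:hsqldb:hsql://ljx:16000/sqoop

# Create a job in the shared metastore instead of the local ~/.sqoop
sqoop job --meta-connect "$META" \
  --create shared_student_import \
  -- import \
  --connect jdbc:mysql://ljx:3306/sample \
  --username root \
  --password 123456 \
  --table student \
  -m 1

# Any client using the same --meta-connect URL can list and run the job
sqoop job --meta-connect "$META" --list
sqoop job --meta-connect "$META" --exec shared_student_import

# On the metastore host: stop the service when it is no longer needed
sqoop metastore --shutdown
```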

version

Purpose:

Displays version information.

Example:

sqoop version

Transferring MySQL data to Hive with Sqoop

sqoop import --connect jdbc:mysql://ljx:3306/sample --username root --password 123456 --table student --hive-import -m 1

Compared with importing into HDFS, the only addition is --hive-import. To specify the target Hive database and table, use --hive-database and --hive-table.
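A sketch of the same import with the target Hive database and table named explicitly. Using `sample` and `student` as the Hive names is an assumption, and the Hive database must already exist. `--hive-overwrite` replaces any existing data in that Hive table, and `--null-string`/`--null-non-string '\\N'` write MySQL NULLs using Hive's `\N` marker so they read back as NULL.

```shell
#!/usr/bin/env bash
sqoop import \
  --connect jdbc:mysql://ljx:3306/sample \
  --username root \
  --password 123456 \
  --table student \
  --hive-import \
  --hive-database sample \
  --hive-table student \
  --hive-overwrite \
  --fields-terminated-by '\t' \
  --null-string '\\N' \
  --null-non-string '\\N' \
  -m 1

# Check the result from the Hive CLI
hive -e "SELECT * FROM sample.student LIMIT 5;"
```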

Transferring MySQL data to HBase with Sqoop

sqoop import --connect jdbc:mysql://ljx:3306/mysql --username root --password 123456 --table help_keyword --hbase-table new_help_keyword --column-family person --hbase-row-key help_keyword_id

Note: the target HBase table must already exist before the import, unless --hbase-create-table is also passed.
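The HBase table can be created from the HBase shell before running the import, with the same column family that is passed to `--column-family`. A sketch using the table and column-family names from the example; piping a command into `hbase shell` runs it non-interactively.

```shell
#!/usr/bin/env bash
# Create the target table with the column family used by --column-family
echo "create 'new_help_keyword', 'person'" | hbase shell

sqoop import \
  --connect jdbc:mysql://ljx:3306/mysql \
  --username root \
  --password 123456 \
  --table help_keyword \
  --hbase-table new_help_keyword \
  --column-family person \
  --hbase-row-key help_keyword_id

# Check a few imported rows
echo "scan 'new_help_keyword', {LIMIT => 5}" | hbase shell
```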

边栏推荐

- 【Elastic】Elastic缺少xpack无法创建模板 unknown setting index.lifecycle.name index.lifecycle.rollover_alias

- Program counter of JVM runtime data area

- Concept and basic knowledge of network layering

- Final review of information and network security (full version)

- Xin'an Second Edition: Chapter 12 network security audit technology principle and application learning notes

- 02 personal developed products and promotion - SMS platform

- [reverse] repair IAT and close ASLR after shelling

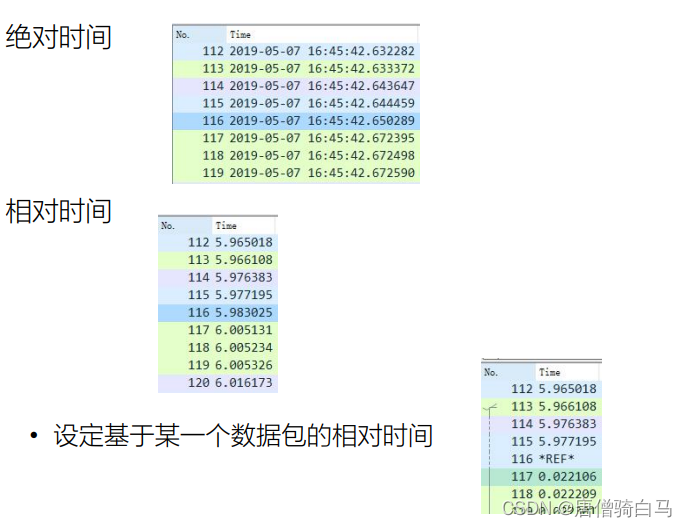

- 全网最全tcpdump和Wireshark抓包实践

- 当前系统缺少NTFS格式转换器(convert.exe)

- [VNCTF 2022]ezmath wp

猜你喜欢

Akamai anti confusion

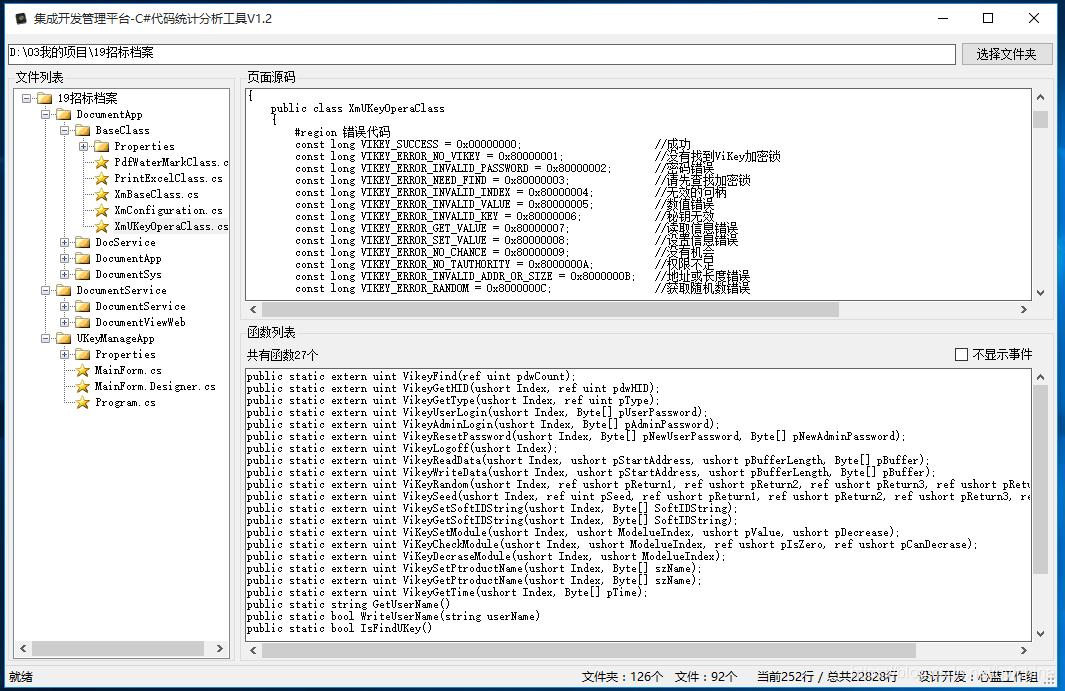

06 products and promotion developed by individuals - code statistical tools

BearPi-HM_ Nano development environment

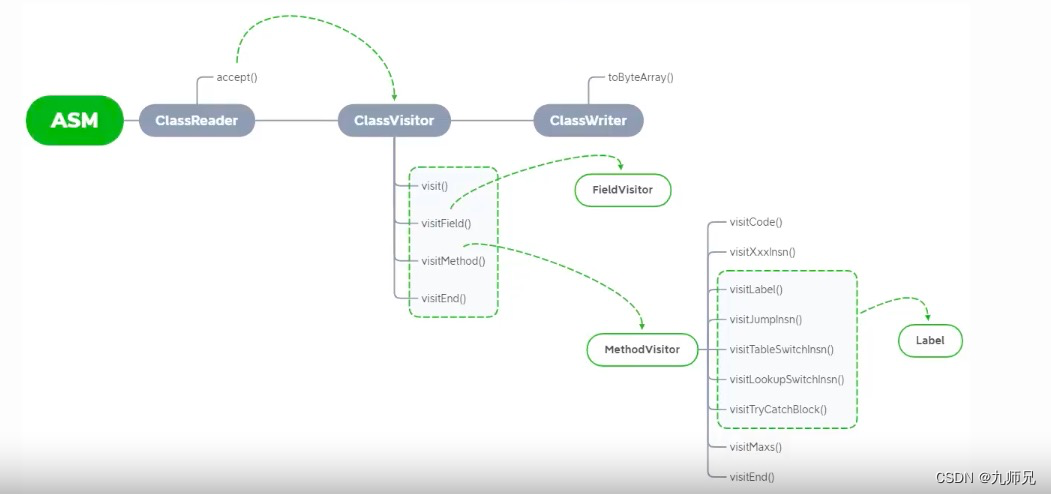

![[ASM] introduction and use of bytecode operation classwriter class](/img/0b/87c9851e577df8dcf8198a272b81bd.png)

[ASM] introduction and use of bytecode operation classwriter class

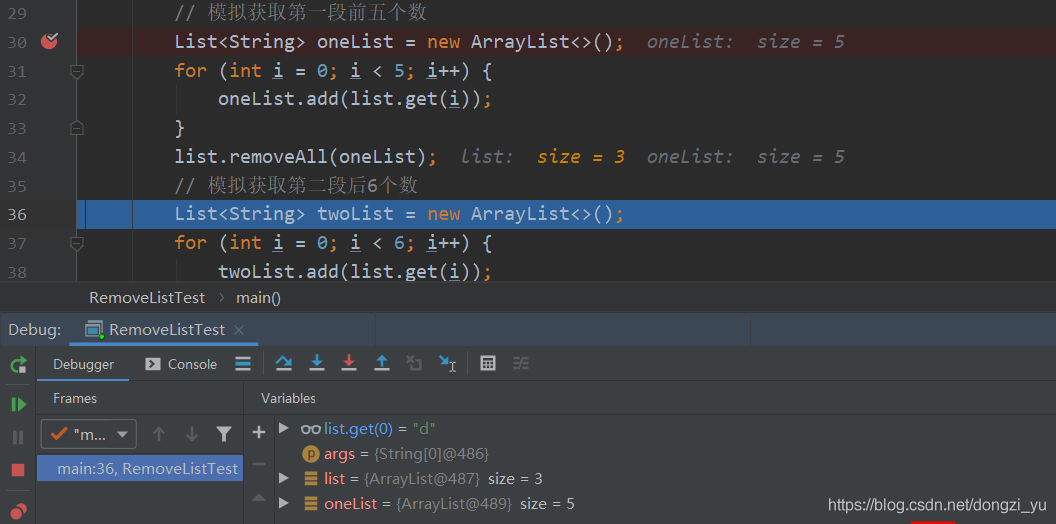

List set data removal (list.sublist.clear)

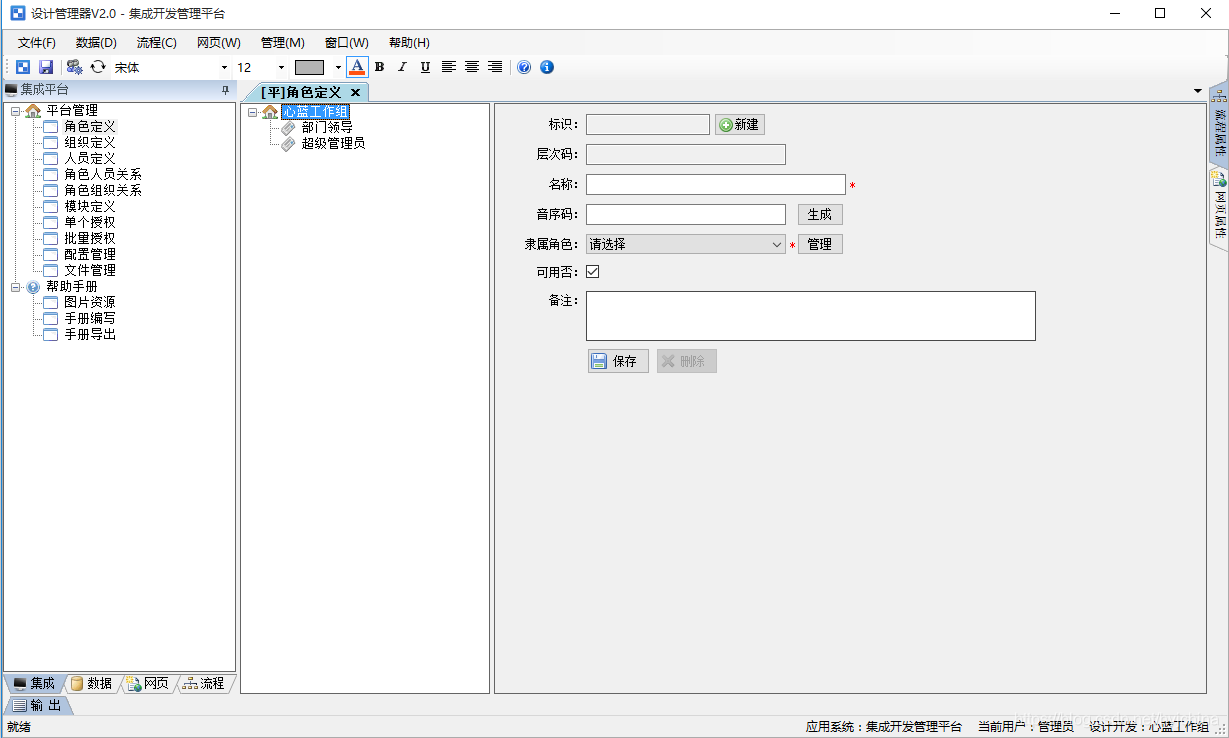

Integrated development management platform

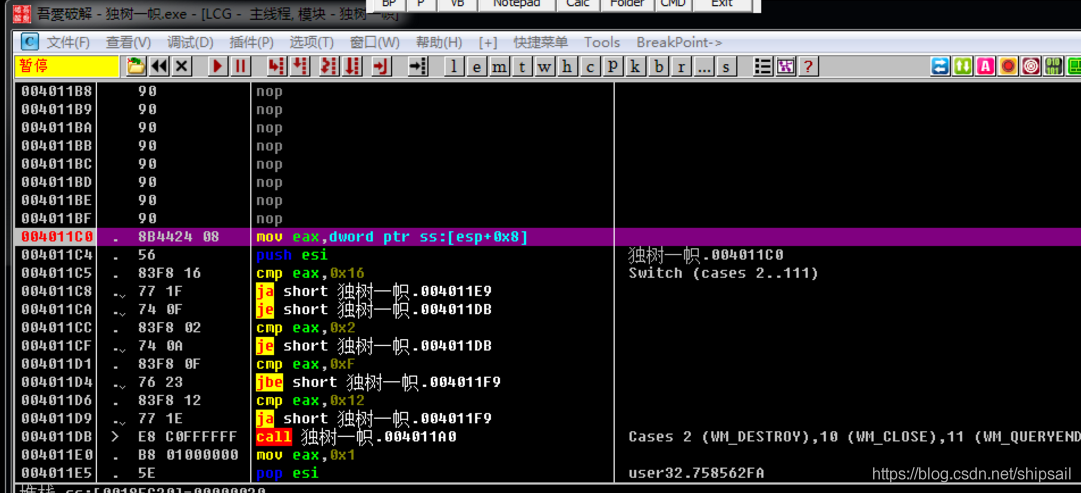

【逆向初级】独树一帜

【ASM】字节码操作 ClassWriter 类介绍与使用

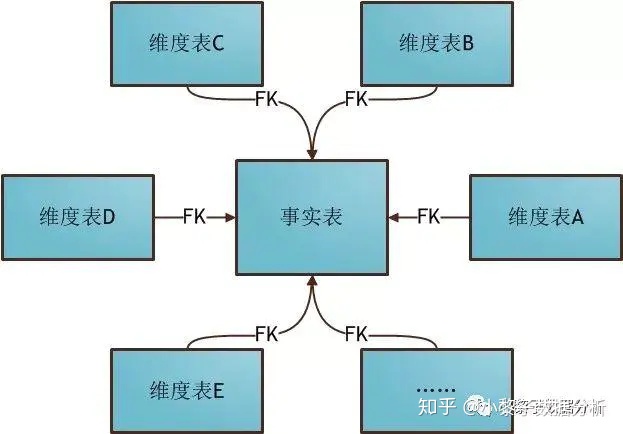

Models used in data warehouse modeling and layered introduction

全网最全tcpdump和Wireshark抓包实践

随机推荐

Interpretation of Flink source code (I): Interpretation of streamgraph source code

微信防撤回是怎么实现的?

The art of Engineering (3): do not rely on each other between functions of code robustness

Xin'an Second Edition: Chapter 24 industrial control safety demand analysis and safety protection engineering learning notes

Grafana 9 正式发布,更易用,更酷炫了!

轻量级计划服务工具研发与实践

【MySQL入门】第三话 · MySQL中常见的数据类型

Uipath browser performs actions in the new tab

Guidelines for preparing for the 2022 soft exam information security engineer exam

Final review of information and network security (based on the key points given by the teacher)

TCP connection is more than communicating with TCP protocol

Kernel link script parsing

Wordcloud colormap color set and custom colors

[VNCTF 2022]ezmath wp

Application service configurator (regular, database backup, file backup, remote backup)

C # nanoframework lighting and key esp32

Re signal writeup

Flink parsing (III): memory management

【MySQL入门】第一话 · 初入“数据库”大陆

Single responsibility principle