当前位置:网站首页>Engineering deployment (III): optimization of low computing power platform model performance

Engineering deployment (III): optimization of low computing power platform model performance

2022-07-08 02:20:00 【pogg_】

Preface : This article discusses how to improve the performance of the model on low-end mobile devices , The article aims at the model ( Do not change the original model op Under the circumstances , No need to retrain ) And post-processing optimization , If there is something wrong , Hope the criticism points out !

One 、 Model optimization

1.1 op The fusion

The model optimization here refers to the model convolution layer and bn Fusion of layers or conection,identity Isoparametric operation , Changing your mind comes from a discussion you didn't intend to participate in one day :

The boss thinks fuse It can be done , But it's not necessary ,fuse(conv+bn)=CB Its function lies in others , And it has little effect on speed increase , But I am more insistent on my point of view , because yolov5 The comparison is based on high computing power graphics cards , Low end card , Not even GPU,NPU The blessed equipment has an obvious speed-up effect .

Especially for too much reuse group conv or depthwise conv Model of , for instance ,shufflenetv2 Regarded as an efficient mobile network, it is often used on the end side backbone, We see a single shuffle block(stride=2) The component of uses two deep separable convolutions :

Just a whole set of network is used 25 Group depthwise conv( The reason lies in shufflenet The series is low computing power cpu Equipment design , It is inevitable to reuse a large number of deep separation convolutions )

So with this original intention , Made a set based on v5lite-s Model experiment , And post the test results for everyone to exchange :

The above test results are based on shuffle block All convolution sums of bn The result of layer fusion , extract coco val2017 Medium 1000 A picture to test , You can see , stay i5 On the core of ,fuse The later model is x86 cpu The previous single forward acceleration is obvious . If for arm End cpu, The effect will be more obvious .

The fusion script is as follows :

import torch

from thop import profile

from copy import deepcopy

from models.experimental import attempt_load

def model_print(model, img_size):

# Model information. img_size may be int or list, i.e. img_size=640 or img_size=[640, 320]

n_p = sum(x.numel() for x in model.parameters()) # number parameters

n_g = sum(x.numel() for x in model.parameters() if x.requires_grad) # number gradients

stride = max(int(model.stride.max()), 32) if hasattr(model, 'stride') else 32

img = torch.zeros((1, model.yaml.get('ch', 3), stride, stride), device=next(model.parameters()).device) # input

flops = profile(deepcopy(model), inputs=(img,), verbose=False)[0] / 1E9 * 2 # stride GFLOPS

img_size = img_size if isinstance(img_size, list) else [img_size, img_size] # expand if int/float

fs = ', %.6f GFLOPS' % (flops * img_size[0] / stride * img_size[1] / stride) # imh x imw GFLOPS

print(f"Model Summary: {

len(list(model.modules()))} layers, {

n_p} parameters, {

n_g} gradients{

fs}")

if __name__ == '__main__':

load = 'weights/v5lite-e.pt'

save = 'weights/repv5lite-e.pt'

test_size = 320

print(f'Done. Befrom weights:({

load})')

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = attempt_load(load, map_location=device) # load FP32 model

torch.save(model, save)

model_print(model, test_size)

print(model)

The fusion op The core code is as follows :

if type(m) is Shuffle_Block:

if hasattr(m, 'branch1'):

re_branch1 = nn.Sequential(

nn.Conv2d(m.branch1[0].in_channels, m.branch1[0].out_channels,

kernel_size=m.branch1[0].kernel_size, stride=m.branch1[0].stride,

padding=m.branch1[0].padding, groups=m.branch1[0].groups),

nn.Conv2d(m.branch1[2].in_channels, m.branch1[2].out_channels,

kernel_size=m.branch1[2].kernel_size, stride=m.branch1[2].stride,

padding=m.branch1[2].padding, bias=False),

nn.ReLU(inplace=True),

)

re_branch1[0] = fuse_conv_and_bn(m.branch1[0], m.branch1[1])

re_branch1[1] = fuse_conv_and_bn(m.branch1[2], m.branch1[3])

# pdb.set_trace()

# print(m.branch1[0])

m.branch1 = re_branch1

if hasattr(m, 'branch2'):

re_branch2 = nn.Sequential(

nn.Conv2d(m.branch2[0].in_channels, m.branch2[0].out_channels,

kernel_size=m.branch2[0].kernel_size, stride=m.branch2[0].stride,

padding=m.branch2[0].padding, groups=m.branch2[0].groups),

nn.ReLU(inplace=True),

nn.Conv2d(m.branch2[3].in_channels, m.branch2[3].out_channels,

kernel_size=m.branch2[3].kernel_size, stride=m.branch2[3].stride,

padding=m.branch2[3].padding, bias=False),

nn.Conv2d(m.branch2[5].in_channels, m.branch2[5].out_channels,

kernel_size=m.branch2[5].kernel_size, stride=m.branch2[5].stride,

padding=m.branch2[5].padding, groups=m.branch2[5].groups),

nn.ReLU(inplace=True),

)

re_branch2[0] = fuse_conv_and_bn(m.branch2[0], m.branch2[1])

re_branch2[2] = fuse_conv_and_bn(m.branch2[3], m.branch2[4])

re_branch2[3] = fuse_conv_and_bn(m.branch2[5], m.branch2[6])

# pdb.set_trace()

m.branch2 = re_branch2

# print(m.branch2)

self.info()

The following figure is not carried out fuse Model parameter quantity of , Amount of computation , And the individual shuffle block Structure , You can see the unmerged shuffle block In a single branch2 Branches contain 8 Height op.

The parameters of the fused model are reduced 0.5 ten thousand , The amount of calculation is less 0.6 ten thousand , Mainly from bn layer , And you can see individual branch2 In the branch op Three less , A complete set of backbone The network has been reduced 25 individual bn layer

1.2 Re parameterization

The repartition operation mentioned in the preface is more important than op The fusion , Introduce the previously mentioned g Model : Pursuit of perfection :Repvgg Reparameterization pairs YOLO Experiment and thinking of industrial landing (https://zhuanlan.zhihu.com/p/410874403), because g The model is high performance gpu involve ,backbone Used repvgg, Pass during training rbr_1x1 and identity Go up , But reasoning must be re parameterized into 3×3 Convolution , To have high cost performance , The most intuitionistic , Use the following code for each repvgg block Re parameterization and fusion :

if type(m) is RepVGGBlock:

if hasattr(m, 'rbr_1x1'):

# print(m)

kernel, bias = m.get_equivalent_kernel_bias()

rbr_reparam = nn.Conv2d(in_channels=m.rbr_dense.conv.in_channels,

out_channels=m.rbr_dense.conv.out_channels,

kernel_size=m.rbr_dense.conv.kernel_size,

stride=m.rbr_dense.conv.stride,

padding=m.rbr_dense.conv.padding, dilation=m.rbr_dense.conv.dilation,

groups=m.rbr_dense.conv.groups, bias=True)

rbr_reparam.weight.data = kernel

rbr_reparam.bias.data = bias

for para in self.parameters():

para.detach_()

m.rbr_dense = rbr_reparam

# m.__delattr__('rbr_dense')

m.__delattr__('rbr_1x1')

if hasattr(self, 'rbr_identity'):

m.__delattr__('rbr_identity')

if hasattr(self, 'id_tensor'):

m.__delattr__('id_tensor')

m.deploy = True

m.forward = m.fusevggforward # update forward

# continue

# print(m)

if type(m) is Conv and hasattr(m, 'bn'):

# print(m)

m.conv = fuse_conv_and_bn(m.conv, m.bn) # update conv

delattr(m, 'bn') # remove batchnorm

m.forward = m.fuseforward # update forward

""" Re parameterization is required before fuse operation , Otherwise, re parameterization will fail """

The following results can directly see the number of model layers 、 There are obvious changes in the amount of calculation and parameters , The following figure shows the model parameters and calculation amount before and after re parameterization 、 Model structure ::

Two 、 post-processing

2.1 Inverse function operation

The optimization of post-processing is also important , The purpose of post-processing optimization is to reduce inefficient loops or judgment statements , Avoid using expensive operators in large numbers .

We use yolov5 be based on ncnn demo Test and modify the code , But because the source code links too many libraries , Let's smoke alone general_poprosal function , imitation general_poprosal Write a paragraph using sigmoid Calculation confidence Compare again 80 class , Calculation bbox Coordinate operation .

float sigmoid(float x)

{

return static_cast<float>(1.f / (1.f + exp(-x)));

}

vector<float> ram_cls_num(int num)

{

std::vector<float> res;

float a = 10.0, b = 100.0;

srand(time(NULL));// Set random number seed , Make each random sequence different

cout<<"number class:"<<endl;

for (int i = 1; i <= num; i++)

{

float number = rand() % (N + 1) / (float)(N + 1);

res.push_back(number);

cout<<number<<' ';

}

cout<<endl;

return res;

}

int sig()

{

int num_anchors = 3;

int num_grid_y = 224;

int num_grid_x = 224;

float prob_threshold = 0.6;

std::vector<float> num_class = ram_cls_num(80);

clock_t start, ends;

start = clock();

for (int q = 0; q < num_anchors; q++)

{

for (int i = 0; i < num_grid_y; i++)

{

for (int j = 0; j < num_grid_x; j++)

{

float tmp = i * num_grid_x + j;

float box_score = rand() % (N + 1) / (float)(N + 1);

// find class index with max class score

int class_index = 0;

float class_score = 0;

for (int k = 0; k < num_class.size(); k++)

{

float score = num_class[k];

if (score > class_score)

{

class_index = k;

class_score = score;

}

}

float prob_threshold = 0.6;

float confidence = sigmoid(box_score) * sigmoid(class_score);

if (confidence >= prob_threshold)

{

float dx = sigmoid(1);

float dy = sigmoid(2);

float dw = sigmoid(3);

float dh = sigmoid(4);

}

}

}

}

ends = clock() - start;

cout << "sigmoid function cost time:" << ends << "ms" <<endl;

return 0;

}

It takes time here :

number class:

0.65 0.08 0.62 0.33 0.79 0.7 0.44 0 0.96 0.75 0.92 0.66 0.54 0.23 0.14 0.75 0.94 0.88 0.76 0.81 0.28 0.37 0.34 0.19 0.46 0.93 0.79 0.86 0.64 0.55 0.84 0.91 0.33 0.53 0.71 0.53 0.69 0.63 0.67 0.35 0.24 0.97 0.94 0.91 0.66 0.63 0.14 0.4 0.28 0.24 0.29 0.2 0.58 0.65 0.51 0.79 0.49 0.47 0.94 0.84 0.38 0.84 0.88 0.61 0.99 0.17 0.02 0.02 0.42 0.96 0.48 0.6 0.08 0.33 0.84 0.04 0.8 0.22 0.16 0.57

sigmoid function cost time:68ms

Modify the function , First use sigmoid The inverse function of unsigmoid Calculation prob_threshold, There is no need to traverse first 80 Categories find the category with the highest score , You won't encounter cutting into the third for After the cycle, it must be carried out twice sigmoid operation ( Calculation confidence) The problem of , Only when box_score > unsigmoid(prob_threshold) It's going to happen 80 Class max score lookup , Calculate again bbox coordinate ,confidence Etc .

float unsigmoid(float x)

{

return static_cast<float>(-1.0f * (float)log((1.0f / x) - 1.0f));

}

int unsig()

{

int num_anchors = 3;

int num_grid_y = 224;

int num_grid_x = 224;

float prob_threshold = 0.6;

std::vector<float> num_class = ram_cls_num(80);

un_prob = unsigmoid(prob_threshold)

clock_t start, ends;

start = clock();

for (int q = 0; q < num_anchors; q++)

{

for (int i = 0; i < num_grid_y; i++)

{

for (int j = 0; j < num_grid_x; j++)

{

float tmp = i * num_grid_x + j;

float box_score = rand() % (N + 1) / (float)(N + 1);

// find class index with max class score

if (box_score > un_prob )

// First use sigmoid The inverse function of bypasses twice sigmoid, At the same time, put the front of 80 Class comparison comes after judgment , If the conditions are not met, do not

{

int class_index = 0;

float class_score = 0;

for (int k = 0; k < num_class.size(); k++)

{

float score = num_class[k];

if (score > class_score)

{

class_index = k;

class_score = score;

}

}

float confidence = sigmoid(box_score) * sigmoid(class_score);

if (confidence >= prob_threshold)

{

float dx = sigmoid(1);

float dy = sigmoid(2);

float dw = sigmoid(3);

float dh = sigmoid(4);

}

}

}

}

}

ends = clock() - start;

cout << "unsigmoid function cost time:" << ends << "ms" <<endl;

return 0;

}

give the result as follows :

number class:

0.65 0.08 0.62 0.33 0.79 0.7 0.44 0 0.96 0.75 0.92 0.66 0.54 0.23 0.14 0.75 0.94 0.88 0.76 0.81 0.28 0.37 0.34 0.19 0.46 0.93 0.79 0.86 0.64 0.55 0.84 0.91 0.33 0.53 0.71 0.53 0.69 0.63 0.67 0.35 0.24 0.97 0.94 0.91 0.66 0.63 0.14 0.4 0.28 0.24 0.29 0.2 0.58 0.65 0.51 0.79 0.49 0.47 0.94 0.84 0.38 0.84 0.88 0.61 0.99 0.17 0.02 0.02 0.42 0.96 0.48 0.6 0.08 0.33 0.84 0.04 0.8 0.22 0.16 0.57

unsigmoid function cost time:77ms

It seems that the posture is wrong , Let's raise prob_threshold=0.6, Get new results :

sigmoid function cost time:69ms

unsigmoid function cost time:47ms

At this point, you can see the benefits , Keep raising the threshold ,unsigmoid The shorter the function takes , But instead, the targets are stuck by too high a threshold , The second half of the function cannot be . So we can see , Using inverse function calculation can bypass twice sigmoid Index operation of ( Calculation confidense), But whether to use this method still needs to be analyzed according to the actual business , If the target box_score All on the low side , Then this optimization will only become negative optimization .

2.2 omp Multi parallel

If there are a lot of after-treatment for loop , And the loop has no data dependency and function dependency , Consider using openml Library for multi-threaded parallel acceleration , Search for example 80 Class score The highest class :

#pragma omp parallel for num_threads(ncnn::get_big_cpu_count())

for (int k = 0; k < num_class; k++) {

float score = featptr[5 + k];

if (score > class_score) {

class_index = k;

class_score = score;

}

}

Or multithreading calculates the location information of each target :

#pragma omp parallel for num_threads(ncnn::get_big_cpu_count())

for (int i = 0; i < count; i++) {

objects[i] = proposals[picked[i]];

// adjust offset to original unpadded

float x0 = (objects[i].rect.x) / scale;

float y0 = (objects[i].rect.y) / scale;

float x1 = (objects[i].rect.x + objects[i].rect.width) / scale;

float y1 = (objects[i].rect.y + objects[i].rect.height) / scale;

// clip

x0 = std::max(std::min(x0, (float) (img_w - 1)), 0.f);

y0 = std::max(std::min(y0, (float) (img_h - 1)), 0.f);

x1 = std::max(std::min(x1, (float) (img_w - 1)), 0.f);

y1 = std::max(std::min(y1, (float) (img_h - 1)), 0.f);

objects[i].rect.x = x0;

objects[i].rect.y = y0;

objects[i].rect.width = x1 - x0;

objects[i].rect.height = y1 - y0;

}

but ncnn The underlying source code of has realized Parallel Computing , Therefore, there is no accelerating effect , But it can be recorded as a method for later use .

After the above modification, the model detection effect is as follows :

xiaomi 10+CPU(Snapdragon 865):

redmi K30+CPU(Snapdragon 730G):

Code link :https://github.com/ppogg/ncnn-android-v5lite

Welcome star and fork~

边栏推荐

- Yolo fast+dnn+flask realizes streaming and streaming on mobile terminals and displays them on the web

- Leetcode question brushing record | 27_ Removing Elements

- XMeter Newsletter 2022-06|企业版 v3.2.3 发布,错误日志与测试报告图表优化

- 银行需要搭建智能客服模块的中台能力,驱动全场景智能客服务升级

- 力争做到国内赛事应办尽办,国家体育总局明确安全有序恢复线下体育赛事

- Opengl/webgl shader development getting started guide

- 很多小夥伴不太了解ORM框架的底層原理,這不,冰河帶你10分鐘手擼一個極簡版ORM框架(趕快收藏吧)

- Force buckle 4_ 412. Fizz Buzz

- 2022年5月互联网医疗领域月度观察

- JVM memory and garbage collection-3-direct memory

猜你喜欢

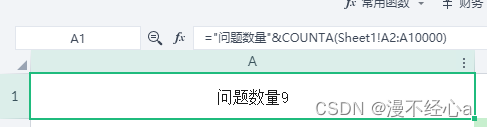

excel函数统计已存在数据的数量

Learn face detection from scratch: retinaface (including magic modified ghostnet+mbv2)

入侵检测——Uniscan

Unity 射线与碰撞范围检测【踩坑记录】

Thread deadlock -- conditions for deadlock generation

XMeter Newsletter 2022-06|企业版 v3.2.3 发布,错误日志与测试报告图表优化

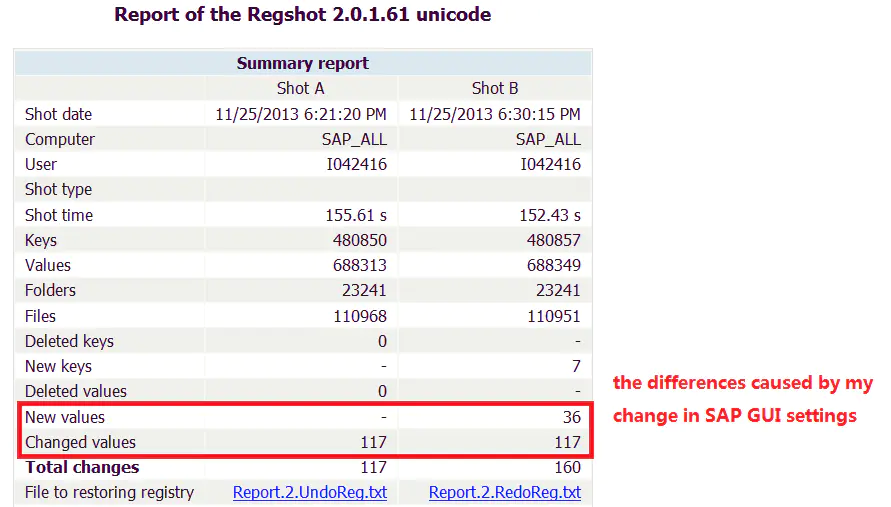

Talk about the realization of authority control and transaction record function of SAP system

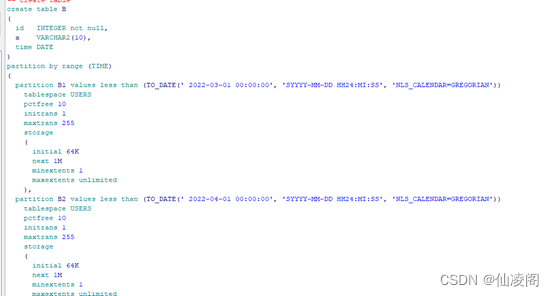

非分区表转换成分区表以及注意事项

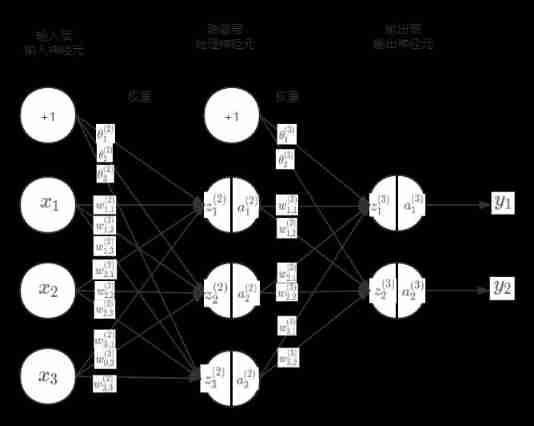

Ml backward propagation

谈谈 SAP iRPA Studio 创建的本地项目的云端部署问题

随机推荐

QT -- create QT program

The generosity of a pot fish

Key points of data link layer and network layer protocol

leetcode 865. Smallest Subtree with all the Deepest Nodes | 865. The smallest subtree with all the deepest nodes (BFs of the tree, parent reverse index map)

Industrial Development and technological realization of vr/ar

分布式定时任务之XXL-JOB

1331:【例1-2】后缀表达式的值

魚和蝦走的路

Opengl/webgl shader development getting started guide

Where to think

Gaussian filtering and bilateral filtering principle, matlab implementation and result comparison

企业培训解决方案——企业培训考试小程序

Unity 射线与碰撞范围检测【踩坑记录】

Neural network and deep learning-5-perceptron-pytorch

鱼和虾走的路

Ml backward propagation

leetcode 865. Smallest Subtree with all the Deepest Nodes | 865.具有所有最深节点的最小子树(树的BFS,parent反向索引map)

mysql报错ORDER BY clause is not in SELECT list, references column ‘‘which is not in SELECT list解决方案

Force buckle 4_ 412. Fizz Buzz

Nanny level tutorial: Azkaban executes jar package (with test samples and results)