
Learning Spark SQL, Part 2

2022-07-05 02:26:00 【Several storehouses of cabbage white】

I'm busy every day, but I don't know what I've done

Small talk

I've been a bit run-down lately, with no energy for studying, and my driving-test appointment is still hanging over me. Sob.

On to the topic

The previous post showed that DataFrame is at the heart of Spark SQL. This post introduces two ways of working with Spark SQL.

There are two options for data analysis with Spark SQL: the first is the DSL, and the second is SQL. Of course, you can also use Hive SQL.

Using the DSL

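First, create a SparkSession object:

import org.apache.spark.SparkConf

import org.apache.spark.sql.SparkSession

val sparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark_Sql")

val sparkSession = SparkSession.builder().config(sparkConf).getOrCreate()

// Brings the $"column" syntax and the implicit conversions into scope
import sparkSession.implicits._

Then use the DataFrame API for the analysis: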

// Read the JSON file; this returns a DataFrame

val frame = sparkSession.read.json("date/people.json")

// Show the people data as a table

frame.show()

show() prints the rows as a table.

Reading the JSON file creates a DataFrame object. DataFrame provides a flexible, powerful API that benefits from built-in optimization, with operators such as select, where, orderBy, groupBy, limit, and union. DataFrame wraps each component of a SQL SELECT statement in an API method of the same name, so those of us who are "SQL boys" can get familiar with Spark SQL more quickly. Data analysis is possible without touching RDDs at all.

Here are some examples of using the DataFrame API.

1. Print the DataFrame's structure (schema) in tree format
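The examples later in this post cover select, where, orderBy, and groupBy. As a supplement, here is a minimal sketch of the two remaining operators from that list, limit and union. The in-memory rows and the names people, more, all, and oldestTwo are made up for illustration; they are not the contents of people.json. The snippet is written as a script, for example to paste into spark-shell.

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().master("local[*]").appName("LimitUnionSketch").getOrCreate()
import spark.implicits._

// Hypothetical in-memory rows standing in for people.json
val people = Seq(("Ann", 31), ("Bob", 24), ("Cid", 28)).toDF("name", "age")
val more = Seq(("Dee", 40)).toDF("name", "age")

// union appends rows by column position and keeps duplicates (like SQL UNION ALL)
val all = people.union(more)

// limit keeps at most n rows; combined with orderBy it gives a "top n"
val oldestTwo = all.orderBy($"age".desc).limit(2)

oldestTwo.show()
```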

frame.printSchema()

As you can see, below the root node are the fields from the JSON file, the same fields that appear as columns in the table output. The type of each field is listed as well.

2. In SQL, the statement written most often is SELECT; it is fair to say that almost everything is a query. The DSL has select too. As mentioned above, the DataFrame API has a method for each corresponding SQL keyword. Here is select in action.

In SQL, the query is:

select name from table

In the DSL, it looks like this:
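The field types in that tree come from Spark SQL's schema inference. Here is a minimal sketch of it, assuming two made-up records in place of people.json (whose contents are not shown in this post) and assuming the file uses one JSON object per line. The names jsonLines, inferred, and fields are illustrative.

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().master("local[*]").appName("SchemaSketch").getOrCreate()
import spark.implicits._

// Two hypothetical JSON records, one per string
val jsonLines = Seq(
  """{"name":"Ann","age":31,"gender":"F"}""",
  """{"name":"Bob","age":24,"gender":"M"}"""
).toDS()

// read.json also accepts a Dataset[String]; the schema is inferred from the records
val inferred = spark.read.json(jsonLines)

// Tree-format output, as in the article
inferred.printSchema()

// The same information is available programmatically as (name, type) pairs
val fields = inferred.schema.fields.map(f => f.name -> f.dataType.typeName).toSeq
println(fields)
```

Whole numbers in JSON are inferred as long rather than int, which is worth remembering when reading values back out of a Row.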

frame.select("name").show()

Why call show? show displays the data as a table. What happens without it?

println(frame.select("name"))

This prints only the field's name and type rather than the data, similar to what printSchema showed at the beginning.

The select call above is just one form. Personally, I don't think it is the best one; I prefer the following:

frame.select($"name")

Take a look at the results
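One practical difference between the two forms, which may or may not be the author's reason for the preference: select("name") takes plain column names, while $"name" produces a Column object that can take part in expressions. A minimal sketch, assuming made-up rows and the hypothetical alias age_next_year:

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.col

val spark = SparkSession.builder().master("local[*]").appName("ColumnSketch").getOrCreate()
import spark.implicits._

// Hypothetical in-memory rows standing in for people.json
val people = Seq(("Ann", 31), ("Bob", 24)).toDF("name", "age")

// String overload: column names only, no expressions
val byName = people.select("name", "age")

// Column overload: $"age" is a Column, so arithmetic and aliases are possible
val nextYear = people.select($"name", ($"age" + 1).as("age_next_year"))

// col("...") builds the same kind of Column without needing the implicits import
val viaCol = people.select(col("name"), (col("age") + 1).as("age_next_year"))

nextYear.show()
```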

3. Combine DSL operations

Find the people older than 25 and show their name, their age next year, and their gender, sorted by age in ascending order:

frame.select($"name", $"age" + 1, $"gender")
  .where($"age" > 25)
  .orderBy(frame("age").asc)
  .show()

Take a look at the results

Of course, you can also group and aggregate. In SQL this is GROUP BY plus an aggregate function such as SUM; in the DSL, call groupBy first and then, for counting, call count directly on the result.

val frame = sparkSession.read.json("date/people.json")

// Group by age and count the rows in each group

frame.groupBy($"age").count().show()
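count is a shortcut for the most common case. For the "GROUP BY plus SUM" shape mentioned above, groupBy can be followed by agg with functions from org.apache.spark.sql.functions. A minimal sketch, assuming made-up rows; the aliases n, total_age, and avg_age are illustrative:

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{avg, count, lit, sum}

val spark = SparkSession.builder().master("local[*]").appName("AggSketch").getOrCreate()
import spark.implicits._

// Hypothetical in-memory rows standing in for people.json
val people = Seq(("Ann", 31, "F"), ("Bob", 24, "M"), ("Cid", 28, "M"))
  .toDF("name", "age", "gender")

// Roughly: select gender, count(1) as n, sum(age) as total_age, avg(age) as avg_age
//          from people group by gender
val stats = people.groupBy($"gender").agg(
  count(lit(1)).as("n"),
  sum($"age").as("total_age"),
  avg($"age").as("avg_age")
)

stats.show()
```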

The examples above use the DataFrame API to do the same things SQL does. With RDD programming, a grouped aggregation like this would need a groupByKey followed by a map transformation.

Not to mention that reading JSON through an RDD gives you an RDD[String], which then has to be converted into an RDD of some other type.

Spark SQL can parse JSON directly and infer its structure (schema).
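To make the comparison concrete, here is a minimal sketch of the same count-per-age aggregation written both ways. The list of ages is made up, and rddCounts and dfCounts are illustrative names.

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().master("local[*]").appName("RddVsDataFrame").getOrCreate()
import spark.implicits._

// Hypothetical ages standing in for the age field of people.json
val ages = Seq(31, 24, 31, 28)

// RDD style: build (key, value) pairs, group them, then map each group to its size
val rddCounts = spark.sparkContext.parallelize(ages)
  .map(age => (age, 1))
  .groupByKey()
  .map { case (age, ones) => (age, ones.size.toLong) }
  .collect()
  .toMap

// DataFrame style: one groupBy and one count
val dfCounts = ages.toDF("age")
  .groupBy($"age")
  .count()
  .collect()
  .map(r => r.getInt(0) -> r.getLong(1))
  .toMap

println(rddCounts == dfCounts)
```

In RDD code, reduceByKey is usually preferred over groupByKey for counting because it combines values before the shuffle; groupByKey is used here only because it is the approach the text describes.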

If you don't want to learn the DSL, that's fine. Here is how to query with SQL.

Running SQL queries

SparkSession provides a sql method for running SQL directly: the SQL statement is passed in as a string, and the object returned is a DataFrame. To query a DataFrame this way, you first need to register it as a temporary view (often called a temporary table); then you can query it.

val sparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark_Sql")

val sparkSession = SparkSession.builder().config(sparkConf).getOrCreate()

import sparkSession.implicits._

// Read the JSON file

val frame = sparkSession.read.json("date/people.json")

// Register as a temporary view

frame.createOrReplaceTempView("people")

// Call SparkSession's sql method to query the temporary view with SQL

sparkSession.sql("select age,count(*) from people group by age").show()

Take a look at the results
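Because sql returns an ordinary DataFrame, SQL and the DSL can be mixed freely. A minimal sketch, assuming made-up rows and the illustrative names fromSql and adults:

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().master("local[*]").appName("SqlPlusDsl").getOrCreate()
import spark.implicits._

// Hypothetical in-memory rows standing in for people.json
val people = Seq(("Ann", 31), ("Bob", 24), ("Cid", 28)).toDF("name", "age")
people.createOrReplaceTempView("people")

// Start with SQL ...
val fromSql = spark.sql("select name, age from people")

// ... and keep refining the result with DSL operators
val adults = fromSql.where($"age" > 25).orderBy($"age".asc)

adults.show()
```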

SQL queries only work after the DataFrame has been registered as a temporary view. But a temporary view has a limitation: it belongs to its SparkSession, so once that session ends the view can no longer be used. That is why there is also the global temporary view.

Global temporary view

A global temporary view is scoped to all sessions within one Spark application: it persists for the life of the application and is shared across all of its sessions. Let's demonstrate.
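The session scoping of a temporary view can be checked directly. This is a minimal sketch, assuming a made-up row and a hypothetical view name people_tmp; it wraps each query in Try so that a failed lookup shows up as a value instead of stopping the script.

```scala
import org.apache.spark.sql.SparkSession
import scala.util.Try

val spark = SparkSession.builder().master("local[*]").appName("TempViewScope").getOrCreate()
import spark.implicits._

// Hypothetical row and view name, for illustration only
Seq(("Ann", 31)).toDF("name", "age").createOrReplaceTempView("people_tmp")

// Same session: the view resolves
val sameSession = Try(spark.sql("select name from people_tmp").collect())

// A second session of the same application keeps its own temporary views,
// so the lookup fails there
val otherSession = Try(spark.newSession().sql("select name from people_tmp").collect())

println(s"same session ok: ${sameSession.isSuccess}, new session ok: ${otherSession.isSuccess}")
```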

val sparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark_Sql")

val sparkSession = SparkSession.builder().config(sparkConf).getOrCreate()

import sparkSession.implicits._

// Read the JSON file

val frame = sparkSession.read.json("date/people.json")

// Register as a global temporary view

frame.createOrReplaceGlobalTempView("people")

// Query it

sparkSession.sql("select name,age from people").show()

// Create a new session and query again

sparkSession.newSession().sql("select name,age from people").show()

Careful: this fails.

Look at the error message

The table people can't be found. We registered it, so why doesn't it exist?

A global temporary view has to be referenced with the global_temp qualifier. global_temp is effectively a system database, and global temporary views live in that database.

Now that the cause of the error is clear, let's look at the correct code and its results.

val sparkConf = new SparkConf().setMaster("local[*]").setAppName("Spark_Sql")

val sparkSession = SparkSession.builder().config(sparkConf).getOrCreate()

import sparkSession.implicits._

// Read the JSON file

val frame = sparkSession.read.json("date/people.json")

// Register as a global temporary view

frame.createOrReplaceGlobalTempView("people")

// Query it through the global_temp database

sparkSession.sql("select name,age from global_temp.people").show()

// Query it again from a new session

sparkSession.newSession().sql("select name,age from global_temp.people").show()

No error this time, and the results are returned.

Summary

Tomorrow's post will cover the relationships and conversions between RDDs, DataFrames, and Datasets.

I didn't write much today. Tomorrow I'll spend an afternoon writing a longer post.

My Subject 4 driving-test booking is still being processed today

Today is also a day to miss her

边栏推荐

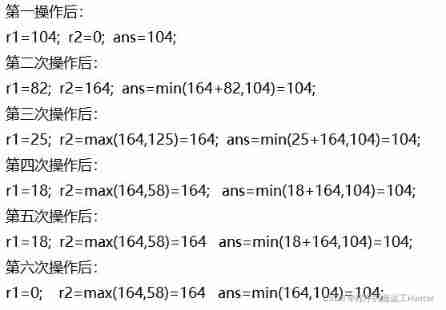

- Luo Gu Pardon prisoners of war

- Subject 3 how to turn on the high beam diagram? Is the high beam of section 3 up or down

- Marubeni Baidu applet detailed configuration tutorial, approved.

- Use the difference between "Chmod a + X" and "Chmod 755" [closed] - difference between using "Chmod a + X" and "Chmod 755" [closed]

- The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

- Practice of tdengine in TCL air conditioning energy management platform

- Spoon inserts and updates the Oracle database, and some prompts are inserted with errors. Assertion botch: negative time

- Exploration of short text analysis in the field of medical and health (II)

- 低度酒赛道进入洗牌期,新品牌如何破局三大难题?

- 官宣!第三届云原生编程挑战赛正式启动!

猜你喜欢

The most powerful new household god card of Bank of communications. Apply to earn 2100 yuan. Hurry up if you haven't applied!

Missile interception -- UPC winter vacation training match

Character painting, I use characters to draw a Bing Dwen Dwen

Scientific research: are women better than men?

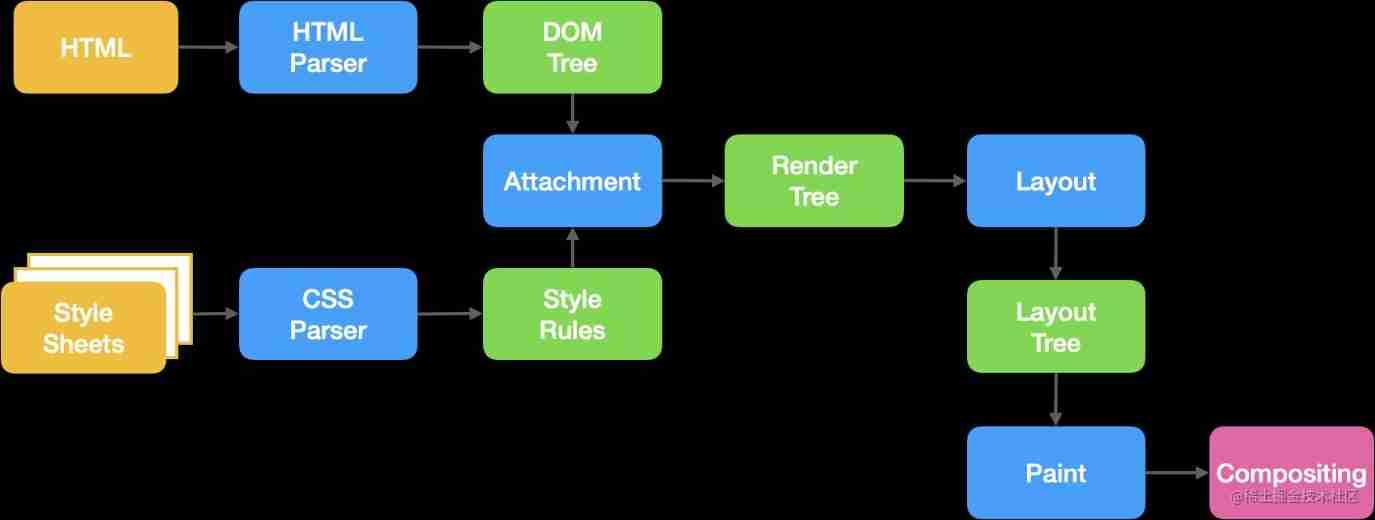

Introduce reflow & repaint, and how to optimize it?

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

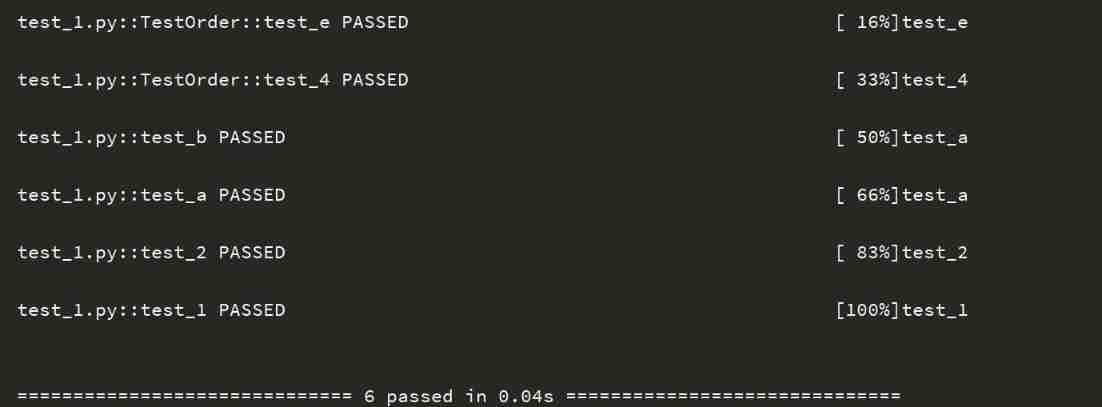

Pytest (4) - test case execution sequence

Single line function*

![ASP. Net core 6 framework unveiling example demonstration [01]: initial programming experience](/img/22/08617736a8b943bc9c254aac60c8cb.jpg)

ASP. Net core 6 framework unveiling example demonstration [01]: initial programming experience

【LeetCode】98. Verify the binary search tree (2 brushes of wrong questions)

随机推荐

Tucson will lose more than $400million in the next year

Android advanced interview question record in 2022

Summary of regularization methods

Word processing software

Yolov5 model training and detection

力扣剑指offer——二叉树篇

使用druid连接MySQL数据库报类型错误

Comment mettre en place une équipe technique pour détruire l'entreprise?

85.4% mIOU! NVIDIA: using multi-scale attention for semantic segmentation, the code is open source!

Li Kou Jianzhi offer -- binary tree chapter

丸子百度小程序详细配置教程,审核通过。

. Net starts again happy 20th birthday

【LeetCode】110. Balanced binary tree (2 brushes of wrong questions)

Unpool(nn.MaxUnpool2d)

JVM - when multiple threads initialize the same class, only one thread is allowed to initialize

Write a thread pool by hand, and take you to learn the implementation principle of ThreadPoolExecutor thread pool

Learn tla+ (XII) -- functions through examples

Action News

Bert fine tuning skills experiment

Icu4c 70 source code download and compilation (win10, vs2022)