
Group convolution and DW convolution, residuals and inverted residuals, bottleneck and linear bottleneck

2022-07-06 06:26:00 【jq_98】

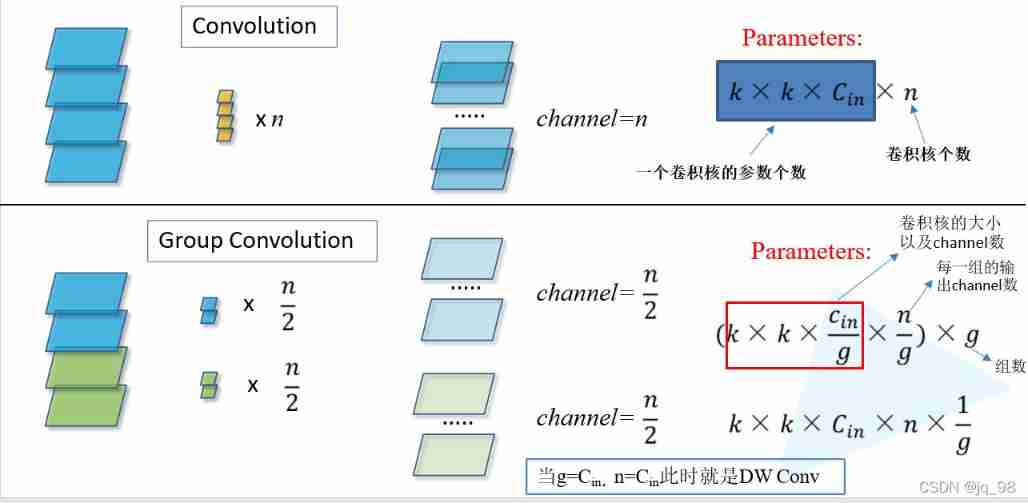

Group Convolution

Group convolution is used in ResNeXt.

First, recall the baseline.

The parameter count of a conventional convolution (ignoring bias) is:

K*K*C_in*n

where K is the kernel size, C_in is the number of input channels, and n is the number of kernels (i.e. the number of output channels).
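As a quick check of the formula, here is a minimal sketch in plain Python. The function name `conv_params` is hypothetical (made up for illustration), bias terms are ignored, and the layer sizes are arbitrary example values.

```python
def conv_params(k: int, c_in: int, n: int) -> int:
    """Weights of a conventional k x k convolution (bias ignored)."""
    # Each of the n kernels has shape k x k x c_in.
    return k * k * c_in * n


# Example: 3x3 kernels, 64 input channels, 128 output channels.
print(conv_params(3, 64, 128))  # 73728
```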

The parameter count of a group convolution is:

K*K*C_in*n*1/g

where K, C_in and n are as above and g is the number of groups. The input channels are split into g groups, and each group is convolved with n/g kernels of size K*K*(C_in/g), so the total is g*K*K*(C_in/g)*(n/g) = K*K*C_in*n/g.
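A minimal sketch of the group convolution count, under the same assumptions as before (hypothetical helper name, bias ignored). It builds the total group by group, which shows where the 1/g factor comes from; the sizes and g = 32 are arbitrary example values.

```python
def group_conv_params(k: int, c_in: int, n: int, g: int) -> int:
    """Weights of a k x k group convolution with g groups (bias ignored)."""
    if c_in % g != 0 or n % g != 0:
        raise ValueError("c_in and n must both be divisible by g")
    # Each group convolves c_in/g input channels with n/g kernels
    # of shape k x k x (c_in/g).
    per_group = k * k * (c_in // g) * (n // g)
    return per_group * g


full = 3 * 3 * 64 * 128  # conventional convolution: 73728 weights
grouped = group_conv_params(3, 64, 128, g=32)
print(grouped, full // grouped)  # 2304 32
```

With g = 1 the helper reduces to the conventional count, which is a convenient sanity check.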

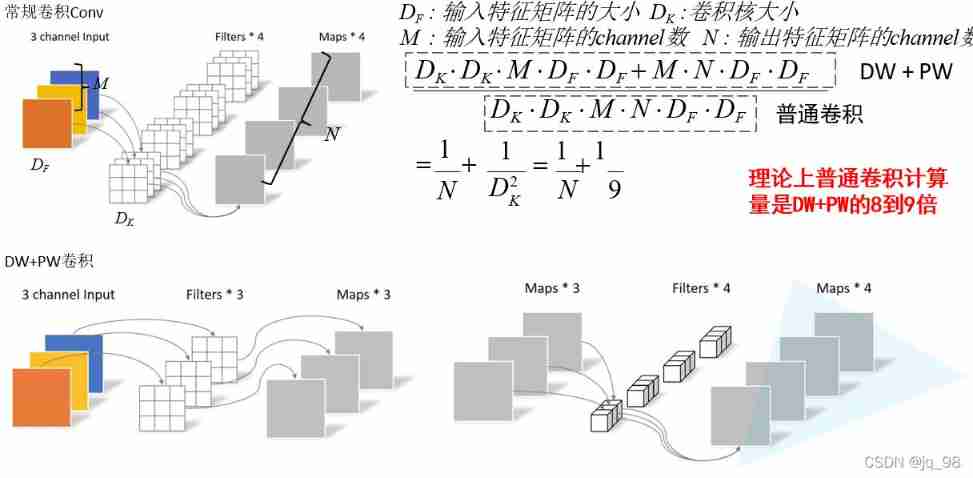

DW (Depthwise Conv) + PW (Pointwise Conv) Convolution

DW convolution is depthwise convolution. The combination DW + PW, called depthwise separable convolution, is used in MobileNet.

The parameter count of a DW convolution is:

K*K*C_in (here n = C_in)

where K is the kernel size and C_in is the number of input channels. In a DW convolution each kernel has a single channel and is applied to exactly one input channel, so the number of kernels equals the number of input channels.
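A sketch of the DW count (hypothetical helper name, bias ignored), compared with a conventional convolution that has n = C_in = 64 kernels. The sizes are arbitrary example values.

```python
def dw_params(k: int, c_in: int) -> int:
    """Weights of a k x k depthwise (DW) convolution (bias ignored)."""
    # One single-channel k x k kernel per input channel, so n == c_in.
    return k * k * c_in


dw = dw_params(3, 64)
full = 3 * 3 * 64 * 64  # conventional 3x3 conv with n = c_in = 64 kernels
print(dw, full // dw)   # 576 64
```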

The parameter count of a PW convolution is:

1*1*C_in*n

A PW convolution uses 1*1 kernels; C_in is the number of input channels and n is the number of kernels (the number of output channels).
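Putting DW and PW together gives the depthwise separable convolution. The sketch below (hypothetical helper names, bias ignored, arbitrary example sizes) compares it with a conventional convolution. Dividing the two counts, (K*K*C_in + C_in*n) / (K*K*C_in*n) simplifies to 1/n + 1/(K*K).

```python
def pw_params(c_in: int, n: int) -> int:
    """Weights of a 1x1 pointwise (PW) convolution: 1*1*c_in*n."""
    return c_in * n


def separable_params(k: int, c_in: int, n: int) -> int:
    """DW (k x k, one kernel per channel) followed by PW (1x1, n kernels)."""
    return k * k * c_in + pw_params(c_in, n)


k, c_in, n = 3, 64, 128
conv = k * k * c_in * n
sep = separable_params(k, c_in, n)
print(conv, sep, round(sep / conv, 4))  # 73728 8768 0.1189
print(round(1 / n + 1 / k**2, 4))       # 0.1189
```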

Summary

- The parameter count of a group convolution is 1/g that of a conventional convolution, where g is the number of groups.

- The parameter count of a DW convolution is 1/n that of a conventional convolution with the same K and C_in, where n is the number of kernels (output channels) of the conventional convolution.

- A group convolution with g = C_in and n = C_in is exactly a DW convolution.
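The last point can be checked numerically. The sketch below is a naive pure-Python convolution (stride 1, no padding, integer data, nested lists instead of tensors; all function names are hypothetical). It runs a group convolution with g = C_in and n = C_in, and a DW convolution with the same kernels, then compares the outputs.

```python
import random


def grouped_conv2d(x, w, g):
    """Group convolution, stride 1, no padding.
    x: C_in x H x W nested lists; w: n x (C_in // g) x K x K nested lists."""
    c_in, h, wd = len(x), len(x[0]), len(x[0][0])
    n, cpg, k = len(w), len(w[0]), len(w[0][0])
    if c_in % g or n % g or cpg != c_in // g:
        raise ValueError("shapes do not match the number of groups g")
    kernels_per_group = n // g
    out = []
    for o in range(n):
        # Kernel o only sees the cpg input channels of its own group.
        first = (o // kernels_per_group) * cpg
        out.append([[sum(w[o][c][di][dj] * x[first + c][i + di][j + dj]
                         for c in range(cpg) for di in range(k) for dj in range(k))
                     for j in range(wd - k + 1)]
                    for i in range(h - k + 1)])
    return out


def depthwise_conv2d(x, w):
    """DW convolution: w is C_in x K x K, one single-channel kernel per input channel."""
    k = len(w[0])
    return [[[sum(w[c][di][dj] * x[c][i + di][j + dj]
                  for di in range(k) for dj in range(k))
              for j in range(len(x[c][0]) - k + 1)]
             for i in range(len(x[c]) - k + 1)]
            for c in range(len(x))]


random.seed(0)
C, H, W, K = 4, 5, 5, 3
x = [[[random.randint(-3, 3) for _ in range(W)] for _ in range(H)] for _ in range(C)]
dw_w = [[[random.randint(-2, 2) for _ in range(K)] for _ in range(K)] for _ in range(C)]
# Group convolution with g = C_in and n = C_in: each kernel has one input channel.
gc_w = [[kernel] for kernel in dw_w]
print(grouped_conv2d(x, gc_w, g=C) == depthwise_conv2d(x, dw_w))  # True
```

Integer data keeps the comparison exact, so no floating-point tolerance is needed.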

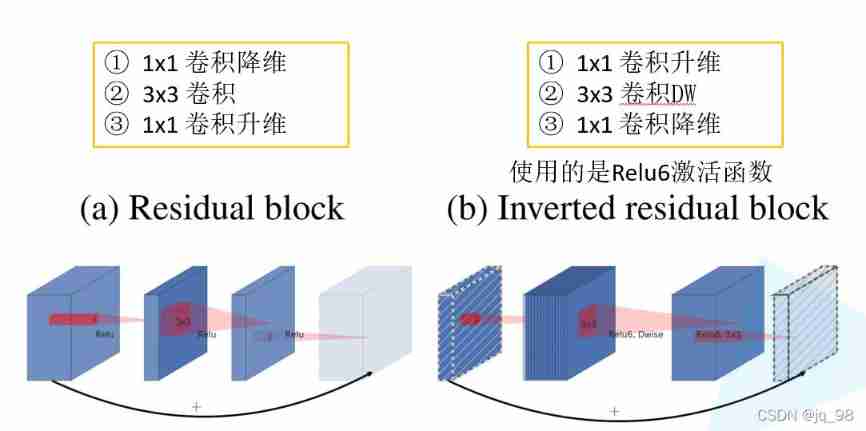

Residuals and Inverted Residuals

Bottleneck and Linear Bottleneck

Bottleneck refers to the bottleneck layer, and the purpose of the bottleneck structure is to reduce the number of parameters. It has three steps: first a PW convolution reduces the channel dimension, then a conventional convolution is applied, and finally a PW convolution raises the channel dimension again (like an hourglass).
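To see why the bottleneck saves parameters, here is a sketch counting weights for the 256 -> 64 -> 256 channel widths of the ResNet bottleneck block, against a single conventional 3*3 convolution at 256 channels. The helper name is hypothetical; bias and normalization layers are ignored.

```python
def bottleneck_params(c: int, mid: int, k: int = 3) -> int:
    """Weights of a bottleneck: 1x1 reduce -> k x k conv -> 1x1 expand."""
    down = 1 * 1 * c * mid  # PW: c -> mid channels
    conv = k * k * mid * mid  # conventional k x k conv at the narrow width
    up = 1 * 1 * mid * c    # PW: mid -> c channels
    return down + conv + up


plain = 3 * 3 * 256 * 256  # one conventional 3x3 conv, 256 -> 256
bottleneck = bottleneck_params(256, 64)
print(bottleneck, plain)   # 69632 589824
```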

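The focus here is the dimension-changing pattern inside the block (change the channel dimension -> convolution -> change it back), not the shortcut connection: a standard bottleneck reduces -> convolves -> expands, while an inverted residual expands -> convolves -> reduces.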
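The sketch below contrasts the channel widths of the two block types and counts the weights of an inverted residual block whose middle layer is a DW convolution, as in MobileNet v2. The sizes (c = 256 with reduction 4, c = 24 with expansion factor t = 6) are illustrative. The sketch assumes equal input and output widths (the case with a shortcut) and ignores bias, and the helper names are hypothetical.

```python
def bottleneck_widths(c: int, reduction: int = 4) -> list:
    """Standard bottleneck: 1x1 reduce -> 3x3 conv -> 1x1 expand."""
    mid = c // reduction
    return [c, mid, mid, c]


def inverted_residual_widths(c: int, t: int = 6) -> list:
    """Inverted residual: 1x1 expand -> 3x3 DW conv -> 1x1 reduce."""
    hidden = c * t
    return [c, hidden, hidden, c]


def inverted_residual_params(c: int, t: int = 6, k: int = 3) -> int:
    """Weights of an inverted residual block with c input and output channels."""
    hidden = c * t
    expand = c * hidden         # 1x1 PW, c -> hidden
    depthwise = k * k * hidden  # k x k DW, one kernel per channel
    project = hidden * c        # 1x1 PW, hidden -> c
    return expand + depthwise + project


print(bottleneck_widths(256))        # [256, 64, 64, 256] (narrow in the middle)
print(inverted_residual_widths(24))  # [24, 144, 144, 24] (wide in the middle)
print(inverted_residual_params(24))  # 8208
```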

Linear Bottleneck: in the inverted residual block of MobileNet v2, the last 1*1 convolution layer uses a linear activation (no nonlinearity) instead of ReLU. The other layers of the block use ReLU6.
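A minimal sketch of where the linear activation sits. The layer list is a hypothetical description of one inverted residual block, not a library API. It puts ReLU6 after the first two layers and no activation after the final 1*1 projection. The sample values are made up and only illustrate that a ReLU there would zero out the negative components of the low-dimensional output, whereas the linear layer keeps them.

```python
def relu6(v):
    """ReLU6: clamp each value to [0, 6]."""
    return [min(max(x, 0.0), 6.0) for x in v]


def linear(v):
    """Linear (identity) activation: values pass through unchanged."""
    return list(v)


def inverted_residual_layers(c_in: int, c_out: int, t: int = 6):
    """Hypothetical block description: (layer, in channels, out channels, activation)."""
    hidden = c_in * t
    return [
        ("1x1 expand", c_in, hidden, relu6),
        ("3x3 depthwise", hidden, hidden, relu6),
        ("1x1 project", hidden, c_out, linear),  # linear bottleneck: no ReLU here
    ]


for name, cin, cout, act in inverted_residual_layers(24, 24):
    print(f"{name:14s} {cin:4d} -> {cout:4d}  {act.__name__}")

projected = [-1.5, 0.3, 2.0]  # made-up outputs of the final 1x1 projection
print(relu6(projected))       # [0.0, 0.3, 2.0] (negative component lost)
print(linear(projected))      # [-1.5, 0.3, 2.0] (kept)
```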

边栏推荐

- [postman] the monitors monitoring API can run periodically

- 模拟卷Leetcode【普通】1062. 最长重复子串

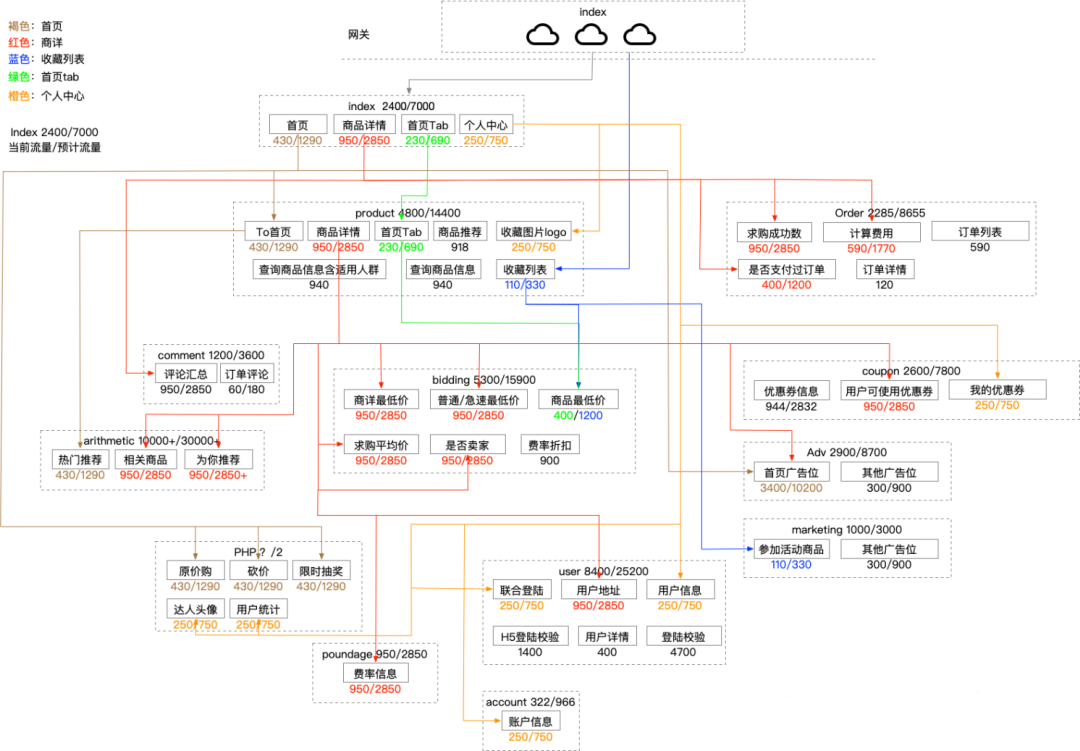

- Full link voltage measurement: building three models

- leetcode 24. Exchange the nodes in the linked list in pairs

- Engineering organisms containing artificial metalloenzymes perform unnatural biosynthesis

- D - How Many Answers Are Wrong

- [postman] collections - run the imported data file of the configuration

- E - food chain

- 专业论文翻译,英文摘要如何写比较好

- 还在为如何编写Web自动化测试用例而烦恼嘛?资深测试工程师手把手教你Selenium 测试用例编写

猜你喜欢

调用链监控Zipkin、sleuth搭建与整合

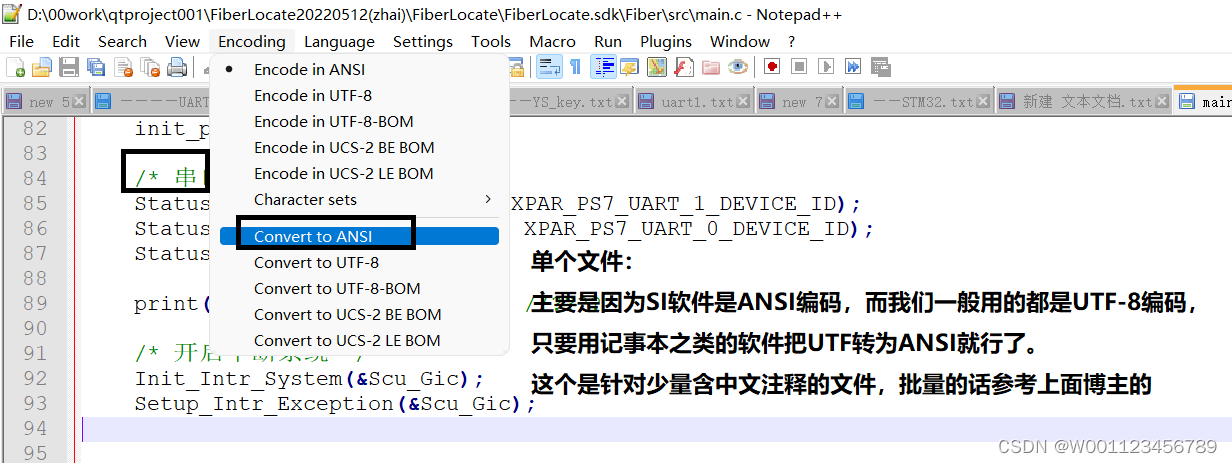

SourceInsight Chinese garbled

Full link voltage measurement: building three models

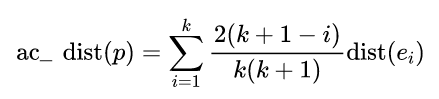

Summary of anomaly detection methods

Selenium source code read through · 9 | desiredcapabilities class analysis

Engineering organisms containing artificial metalloenzymes perform unnatural biosynthesis

基于JEECG-BOOT的list页面的地址栏参数传递

黑猫带你学UFS协议第4篇:UFS协议栈详解

MySQL之基础知识

Coordinatorlayout+nestedscrollview+recyclerview pull up the bottom display is incomplete

随机推荐

Simulation volume leetcode [general] 1091 The shortest path in binary matrix

[C language] string left rotation

基於JEECG-BOOT的list頁面的地址欄參數傳遞

联合索引的左匹配原则

【Tera Term】黑猫带你学TTL脚本——嵌入式开发中串口自动化神技能

JMeter做接口测试,如何提取登录Cookie

还在为如何编写Web自动化测试用例而烦恼嘛?资深测试工程师手把手教你Selenium 测试用例编写

生物医学英文合同翻译,关于词汇翻译的特点

JDBC requset corresponding content and function introduction

LeetCode 731. My schedule II

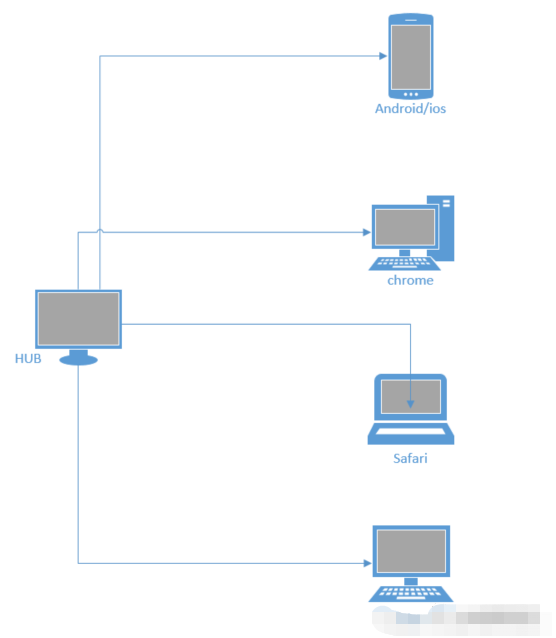

【无App Push 通用测试方案

Summary of the post of "Web Test Engineer"

Testing of web interface elements

Remember the implementation of a relatively complex addition, deletion and modification function based on jeecg-boot

org. activiti. bpmn. exceptions. XMLException: cvc-complex-type. 2.4. a: Invalid content beginning with element 'outgoing' was found

Black cat takes you to learn UFS Protocol Part 8: UFS initialization (boot operation)

Database - current read and snapshot read

Simulation volume leetcode [general] 1109 Flight reservation statistics

模拟卷Leetcode【普通】1219. 黄金矿工

Simulation volume leetcode [general] 1061 Arrange the smallest equivalent strings in dictionary order