1. Summary

In the previous article, we saw that each material corresponds to one draw call. Even when two 3D objects use the same material, they still cause two draw calls if their material parameters differ. The reason is that the graphics pipeline works as a state machine: whenever the state changes, a new draw call has to be issued.

GPU instancing is designed to solve this kind of problem. Objects such as grass and trees often appear in very large numbers, yet they differ only in minor ways such as position, orientation, and color. Rendering them as regular objects requires a large number of draw calls and consumes a lot of resources. A more reasonable strategy is to specify the one object to draw together with a large set of per-instance parameters, and then draw every instance in a single draw call according to those parameters. This is what is called GPU instancing.

2. Detailed discussion

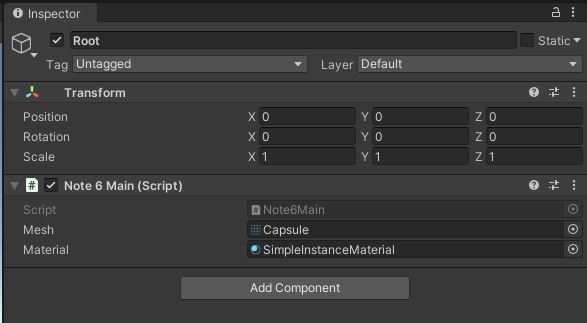

First, create an empty GameObject and attach the following script:

    using UnityEngine;

    // Per-instance parameters; the layout must match the InstanceParam struct in the shader
    public struct InstanceParam
    {
        public Color color;
        public Matrix4x4 instanceToObjectMatrix; // Instance-to-object matrix
    }

    [ExecuteInEditMode]
    public class Note6Main : MonoBehaviour
    {
        public Mesh mesh;
        public Material material;

        int instanceCount = 200;
        Bounds instanceBounds;
        ComputeBuffer bufferWithArgs = null;
        ComputeBuffer instanceParamBufferData = null;

        // Start is called before the first frame update
        void Start()
        {
            // Bounding volume that encloses all instances
            instanceBounds = new Bounds(new Vector3(0, 0, 0), new Vector3(100, 100, 100));

            // Indirect draw arguments: index count, instance count,
            // start index, base vertex, start instance
            uint[] args = new uint[5] { 0, 0, 0, 0, 0 };
            bufferWithArgs = new ComputeBuffer(1, args.Length * sizeof(uint), ComputeBufferType.IndirectArguments);
            int subMeshIndex = 0;
            args[0] = mesh.GetIndexCount(subMeshIndex);
            args[1] = (uint)instanceCount;
            args[2] = mesh.GetIndexStart(subMeshIndex);
            args[3] = mesh.GetBaseVertex(subMeshIndex);
            bufferWithArgs.SetData(args);

            // Random position, rotation, uniform scale and color for each instance
            InstanceParam[] instanceParam = new InstanceParam[instanceCount];
            for (int i = 0; i < instanceCount; i++)
            {
                Vector3 position = Random.insideUnitSphere * 5;
                Quaternion q = Quaternion.Euler(Random.Range(0.0f, 90.0f), Random.Range(0.0f, 90.0f), Random.Range(0.0f, 90.0f));
                float s = Random.value;
                Vector3 scale = new Vector3(s, s, s);

                instanceParam[i].instanceToObjectMatrix = Matrix4x4.TRS(position, q, scale);
                instanceParam[i].color = Random.ColorHSV();
            }

            // Upload the per-instance data and bind it to the material
            int stride = System.Runtime.InteropServices.Marshal.SizeOf(typeof(InstanceParam));
            instanceParamBufferData = new ComputeBuffer(instanceCount, stride);
            instanceParamBufferData.SetData(instanceParam);
            material.SetBuffer("dataBuffer", instanceParamBufferData);
            material.SetMatrix("ObjectToWorld", Matrix4x4.identity);
        }

        // Update is called once per frame
        void Update()
        {
            if (bufferWithArgs != null)
            {
                Graphics.DrawMeshInstancedIndirect(mesh, 0, material, instanceBounds, bufferWithArgs, 0);
            }
        }

        // Release the GPU buffers when the component is destroyed
        private void OnDestroy()
        {
            if (bufferWithArgs != null)
            {
                bufferWithArgs.Release();
            }

            if (instanceParamBufferData != null)
            {
                instanceParamBufferData.Release();
            }
        }
    }

This script takes a mesh and a material and renders multiple instances of the mesh using randomly generated per-instance parameters.
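The five values written into bufferWithArgs follow the argument layout that Graphics.DrawMeshInstancedIndirect() reads for an indexed draw. As a minimal sketch, they can be gathered in one helper; the names IndirectArgs and Build are hypothetical and not part of the original script.

```csharp
using UnityEngine;

// Hypothetical helper (not in the original script) that builds the
// five-element argument array for one sub-mesh.
public static class IndirectArgs
{
    public static uint[] Build(Mesh mesh, int subMeshIndex, int instanceCount)
    {
        return new uint[]
        {
            mesh.GetIndexCount(subMeshIndex), // [0] index count per instance
            (uint)instanceCount,              // [1] number of instances
            mesh.GetIndexStart(subMeshIndex), // [2] start index location
            mesh.GetBaseVertex(subMeshIndex), // [3] base vertex location
            0u                                // [4] start instance location
        };
    }
}
```

With this helper, the script's setup would reduce to something like `bufferWithArgs.SetData(IndirectArgs.Build(mesh, 0, instanceCount));`.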

The key interface for GPU instancing is Graphics.DrawMeshInstancedIndirect(). The interfaces of the Graphics class are among Unity's low-level APIs, and the draw has to be issued every frame. Graphics.DrawMeshInstanced() can also draw instances, but only up to 1023 per call, so Graphics.DrawMeshInstancedIndirect() is the better choice for large instance counts.
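To illustrate the 1023-instance limit, here is a rough sketch of what drawing a larger set with Graphics.DrawMeshInstanced() might look like: the instances are split into batches of at most 1023, and each batch costs one call per frame. The class name InstancedBatchDrawer and its fields are hypothetical. The sketch also assumes a material whose shader supports Unity's built-in instancing path with "Enable GPU Instancing" checked, which is not the custom shader used in this article.

```csharp
using System.Collections.Generic;
using UnityEngine;

// Hypothetical component, for illustration only.
public class InstancedBatchDrawer : MonoBehaviour
{
    const int MaxPerCall = 1023; // per-call limit of Graphics.DrawMeshInstanced

    public Mesh mesh;
    public Material material;    // assumed: instancing-capable shader, instancing enabled
    public int instanceCount = 3000;

    readonly List<Matrix4x4[]> batches = new List<Matrix4x4[]>();

    void Start()
    {
        // Split the instances into arrays of at most 1023 matrices each.
        for (int start = 0; start < instanceCount; start += MaxPerCall)
        {
            int n = Mathf.Min(MaxPerCall, instanceCount - start);
            var batch = new Matrix4x4[n];
            for (int i = 0; i < n; i++)
            {
                batch[i] = Matrix4x4.TRS(Random.insideUnitSphere * 5,
                                         Quaternion.identity,
                                         Vector3.one * 0.2f);
            }
            batches.Add(batch);
        }
    }

    void Update()
    {
        // One call per batch, every frame.
        foreach (var batch in batches)
        {
            Graphics.DrawMeshInstanced(mesh, 0, material, batch, batch.Length);
        }
    }
}
```

With 3000 instances this issues three calls per frame, whereas the indirect variant used in this article submits all instances in a single call.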

Both the per-instance parameters (InstanceParam) and the indirect draw arguments (bufferWithArgs) are stored in ComputeBuffer objects. A ComputeBuffer defines a GPU data buffer that can be mapped to a StructuredBuffer in a Unity shader:

    Shader "Custom/SimpleInstanceShader"
    {
        Properties
        {
        }

        SubShader
        {
            Tags{"Queue" = "Geometry"}

            Pass
            {
                CGPROGRAM
                #include "UnityCG.cginc"
                #pragma vertex vert
                #pragma fragment frag
                #pragma target 4.5

                sampler2D _MainTex;
                float4x4 ObjectToWorld; // Reference matrix set from the script

                // Must match the layout of the C# InstanceParam struct
                struct InstanceParam
                {
                    float4 color;
                    float4x4 instanceToObjectMatrix;
                };

            #if SHADER_TARGET >= 45
                StructuredBuffer<InstanceParam> dataBuffer;
            #endif

                // Vertex shader input
                struct a2v
                {
                    float4 position : POSITION;
                    float3 normal: NORMAL;
                    float2 texcoord : TEXCOORD0;
                };

                // Vertex shader output
                struct v2f
                {
                    float4 position: SV_POSITION;
                    float2 texcoord: TEXCOORD0;
                    float4 color: COLOR;
                };

                v2f vert(a2v v, uint instanceID : SV_InstanceID)
                {
                    // Fetch this instance's matrix and color; fall back to identity and white
                #if SHADER_TARGET >= 45
                    float4x4 instanceToObjectMatrix = dataBuffer[instanceID].instanceToObjectMatrix;
                    float4 color = dataBuffer[instanceID].color;
                #else
                    float4x4 instanceToObjectMatrix = float4x4(1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1);
                    float4 color = float4(1.0f, 1.0f, 1.0f, 1.0f);
                #endif

                    // Instance space -> object space -> world space
                    float4 localPosition = mul(instanceToObjectMatrix, v.position);
                    //float4 localPosition = v.position;
                    float4 worldPosition = mul(ObjectToWorld, localPosition);

                    v2f o;
                    //o.position = UnityObjectToClipPos(v.position);
                    o.position = mul(UNITY_MATRIX_VP, worldPosition);
                    o.texcoord = v.texcoord;
                    o.color = color;
                    return o;
                }

                fixed4 frag(v2f i) : SV_Target
                {
                    return i.color;
                }
                ENDCG
            }
        }

        Fallback "Diffuse"
    }
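Because the C# InstanceParam struct and the shader's InstanceParam struct describe the same bytes, the buffer stride computed on the C# side has to agree with what the shader reads: a float4 color (16 bytes) plus a float4x4 matrix (64 bytes). The following is a small sanity-check sketch; the class and method names are hypothetical and it relies on the InstanceParam struct defined in the script above.

```csharp
using System.Runtime.InteropServices;
using UnityEngine;

// Hypothetical helper (not in the original script).
public static class InstanceParamLayoutCheck
{
    // Shader side: float4 color (4 floats) + float4x4 matrix (16 floats).
    const int ShaderStride = sizeof(float) * 4 + sizeof(float) * 16;

    public static bool StrideMatchesShader()
    {
        // Same stride computation the script uses when creating the ComputeBuffer.
        int csharpStride = Marshal.SizeOf(typeof(InstanceParam));
        if (csharpStride != ShaderStride)
        {
            Debug.LogError($"InstanceParam stride is {csharpStride} bytes, " +
                           $"but the shader struct is {ShaderStride} bytes.");
            return false;
        }
        return true;
    }
}
```

Calling such a check once before creating instanceParamBufferData can catch a field added to only one of the two structs.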

This is a simple instancing shader adapted from the one in "Unity3D Learning Notes 3: Preliminary Use of Unity Shader". Instances are usually drawn at positions that are not fixed in advance, which means the model matrix UNITY_MATRIX_M available in the shader is generally not correct for them. The key to instanced rendering is therefore to recompute the model matrix; otherwise the instances are drawn in the wrong place. Because instances tend to be located close together, you can first pass in a reference position (the matrix ObjectToWorld) and then supply only a matrix relative to that position for each instance (instanceToObjectMatrix).
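The two-step transform in the vertex shader, first instanceToObjectMatrix and then ObjectToWorld, is equivalent to multiplying the two matrices. As a sketch of that relationship on the C# side (the class and method names are hypothetical):

```csharp
using UnityEngine;

// Hypothetical helper mirroring the vertex shader's transform chain.
public static class InstanceMatrixSketch
{
    public static Vector3 InstanceToWorld(Matrix4x4 objectToWorld,
                                          Matrix4x4 instanceToObject,
                                          Vector3 vertex)
    {
        // Same order as mul(ObjectToWorld, mul(instanceToObjectMatrix, v.position)).
        Matrix4x4 instanceToWorld = objectToWorld * instanceToObject;
        return instanceToWorld.MultiplyPoint3x4(vertex);
    }
}
```

For example, if the reference matrix is a translation by (10, 0, 0) and an instance's relative matrix is a translation by (0, 1, 0), a vertex at the origin ends up at (10, 1, 0). One possible variation, not used in the script above, is to pass `transform.localToWorldMatrix` as ObjectToWorld so the whole group follows the GameObject; the bounds passed to the draw call would then need to follow it as well.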

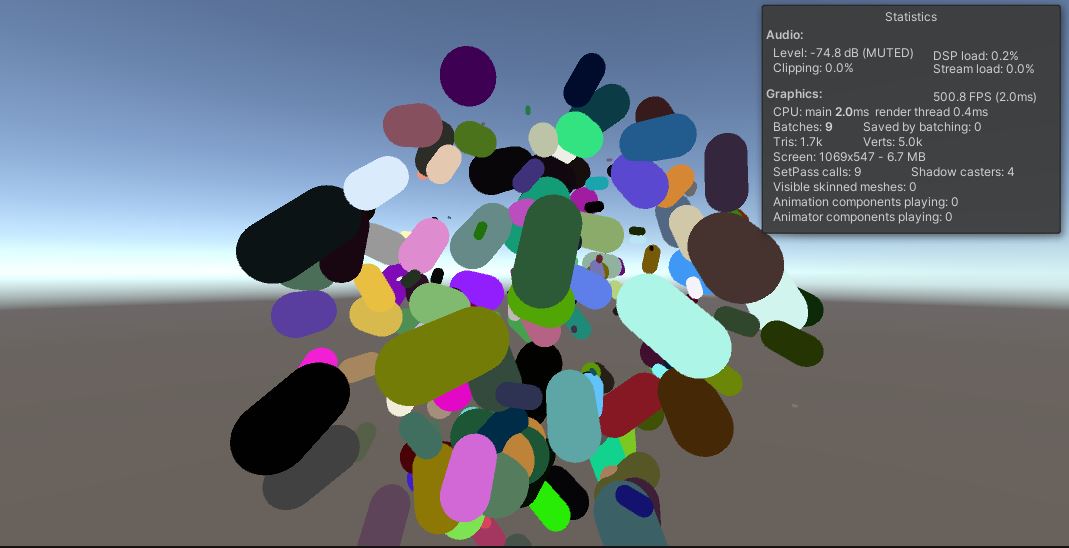

In the final result, many capsules with different positions, orientations, sizes, and colors are drawn, and performance is essentially unaffected.
