TL;DR

In this article, we isolate Pods at the network level using Kubernetes-native network policies and Cilium network policies, respectively. The difference is that the former only supports L3/L4 policies, while the latter supports both L3/L4 and L7 policies.

Using network policies to improve network security can greatly reduce implementation and maintenance costs, with little impact on the system.

Cilium in particular, built on eBPF, addresses the limited extensibility of the kernel and provides workloads with secure, reliable, and observable network connectivity from within the kernel.

Background

Why is there a security risk in Kubernetes networking? By default, Pods in a cluster are not isolated: the network between Pods is fully connected, and any Pod can communicate with any other.

This causes problems. For example, because service B handles sensitive data, only a specific service A should be allowed to access it, and service C must not. To prevent service C from accessing service B, there are several options:

- Provide a common solution in the SDK that implements an allowlist. Each request must carry an identifier of its source; the server then uses configured rules to accept requests from specific identities and reject all others.

- A cloud-native solution, using the RBAC and mTLS features of a service mesh. RBAC works on a principle similar to the application-layer SDK approach, but as a generic abstraction in the infrastructure layer. mTLS is more complex: authentication happens during the connection handshake and involves issuing and verifying certificates, among other operations.
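To make the SDK allowlist idea concrete, here is a minimal Python sketch of the server-side check. The `X-Source-Service` header, the `AllowlistGuard` class, and the service names are hypothetical illustrations, not part of any particular SDK.

```python
from dataclasses import dataclass, field

# Hypothetical header name; the article does not say how the source identity is carried.
SOURCE_HEADER = "X-Source-Service"


@dataclass
class Request:
    path: str
    headers: dict = field(default_factory=dict)


class AllowlistGuard:
    """Server-side allowlist: accept a request only if its declared source is trusted."""

    def __init__(self, allowed_sources):
        self.allowed_sources = frozenset(allowed_sources)

    def check(self, request: Request) -> bool:
        source = request.headers.get(SOURCE_HEADER)
        # Requests that carry no identity are rejected along with unknown identities.
        return source is not None and source in self.allowed_sources


# Service B trusts only service A.
guard_b = AllowlistGuard({"service-a"})
print(guard_b.check(Request("/data", {SOURCE_HEADER: "service-a"})))  # True
print(guard_b.check(Request("/data", {SOURCE_HEADER: "service-c"})))  # False
print(guard_b.check(Request("/data")))                                # False
```

In this sketch the identity is simply declared by the caller; the mesh option replaces that with an identity proven by an mTLS certificate.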

Each approach has its own advantages and disadvantages:

- The SDK approach is simple to implement, but large systems face difficulties rolling out upgrades and high costs supporting multiple languages.

- The service mesh approach is a general infrastructure-layer solution and is naturally language-agnostic. But for users who have not yet adopted a mesh, it requires major architectural changes at high cost. If the only goal is to solve this security problem, a mesh offers poor cost-effectiveness, not to mention that rolling a mesh out into an existing system is difficult and its ongoing operation and maintenance is expensive.

So let's look further down the infrastructure stack, starting at the network layer. Kubernetes provides network policies (NetworkPolicy), which can achieve network-level isolation.

The sample application

Before demonstrating the NetworkPolicy approach, let's introduce the sample application. We use the "Star Wars" scenario from Cilium's interactive tutorial, Cilium getting started.

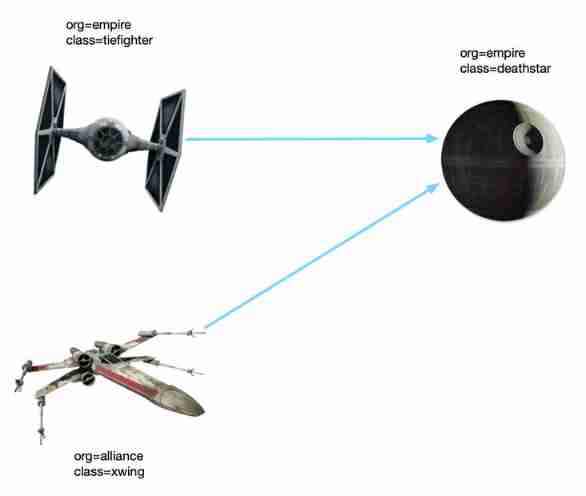

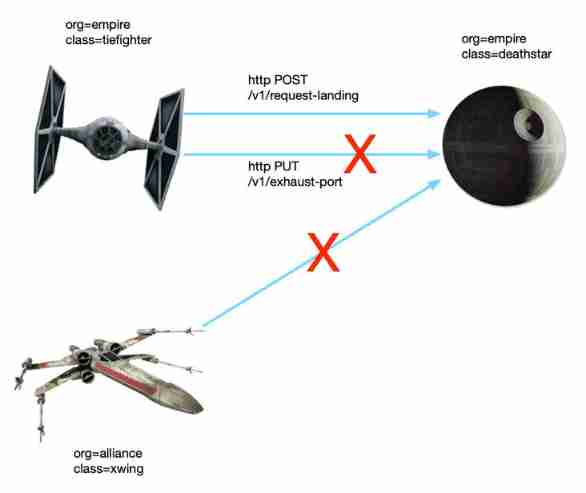

There are three applications, which Star Wars fans will find familiar:

- Death Star (deathstar): serves a web service on port 80 with 2 replicas, load-balanced through a Kubernetes Service, providing a "landing" service to Imperial fighters.

- TIE fighter (tiefighter): sends landing requests.

- X-wing fighter (xwing): sends landing requests.

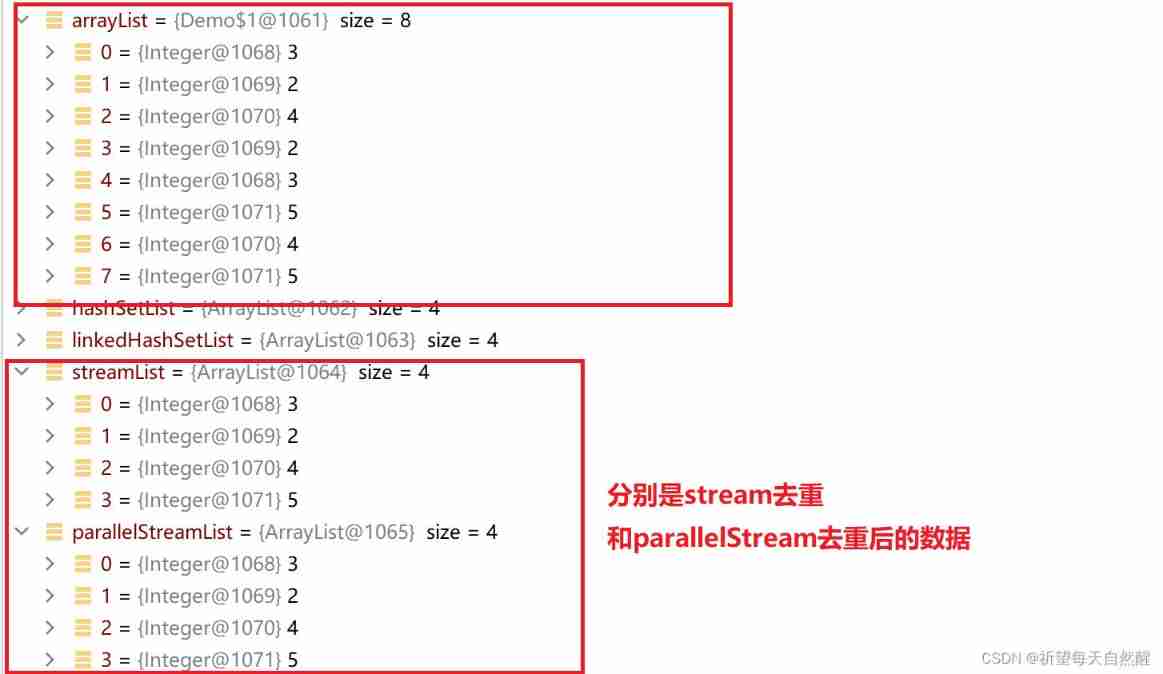

As shown in the figure, the three applications are identified with two labels: `org` and `class`. When enforcing network policies, we use these two labels to select workloads.
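A minimal sketch of how such label selectors pick workloads, assuming the `matchLabels` semantics Kubernetes uses (every key/value pair in the selector must be present on the workload). `WORKLOADS`, `match_labels`, and `select` are illustrative names; only the `org` and `class` labels from the manifest are modeled, and the two deathstar replicas are collapsed into one entry.

```python
# org/class labels from app.yaml; both deathstar replicas share one label set.
WORKLOADS = {
    "deathstar": {"org": "empire", "class": "deathstar"},
    "tiefighter": {"org": "empire", "class": "tiefighter"},
    "xwing": {"org": "alliance", "class": "xwing"},
}


def match_labels(selector: dict, labels: dict) -> bool:
    """matchLabels semantics: every key/value in the selector must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector: dict) -> list:
    return sorted(name for name, labels in WORKLOADS.items() if match_labels(selector, labels))


print(select({"org": "empire"}))                        # ['deathstar', 'tiefighter']
print(select({"org": "empire", "class": "deathstar"}))  # ['deathstar']
print(select({}))                                       # ['deathstar', 'tiefighter', 'xwing']
```

The empty selector matching everything mirrors how an empty `podSelector: {}` selects every Pod in the namespace.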

```yaml
# app.yaml
---
apiVersion: v1
kind: Service
metadata:
  name: deathstar
  labels:
    app.kubernetes.io/name: deathstar
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    org: empire
    class: deathstar
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deathstar
  labels:
    app.kubernetes.io/name: deathstar
spec:
  replicas: 2
  selector:
    matchLabels:
      org: empire
      class: deathstar
  template:
    metadata:
      labels:
        org: empire
        class: deathstar
        app.kubernetes.io/name: deathstar
    spec:
      containers:
      - name: deathstar
        image: docker.io/cilium/starwars
---
apiVersion: v1
kind: Pod
metadata:
  name: tiefighter
  labels:
    org: empire
    class: tiefighter
    app.kubernetes.io/name: tiefighter
spec:
  containers:
  - name: spaceship
    image: docker.io/tgraf/netperf
---
apiVersion: v1
kind: Pod
metadata:
  name: xwing
  labels:
    app.kubernetes.io/name: xwing
    org: alliance
    class: xwing
spec:
  containers:
  - name: spaceship
    image: docker.io/tgraf/netperf
```

Kubernetes network policy

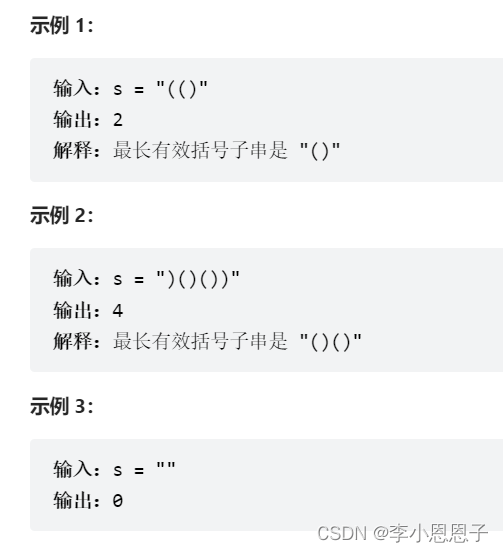

The official documentation has more details; here we go straight to the configuration:

```yaml
# native/networkpolicy.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      org: empire
      class: deathstar
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          org: empire
    ports:
    - protocol: TCP
      port: 80
```

- `podSelector`: selects the workloads the policy applies to; here the labels select the 2 deathstar Pods.
- `policyTypes`: the type of traffic, which can be `Ingress`, `Egress`, or both. `Ingress` here means the rules apply to inbound traffic to the selected deathstar Pods.
- `ingress.from`: the source workloads of the traffic, also chosen with a `podSelector` and labels; here it selects `org=empire`, i.e. all "Imperial fighters".
- `ingress.ports`: the destination port of the traffic; here, port 80 that deathstar listens on.
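These rules can be modeled in a few lines of Python. This is a simplified sketch, not the Kubernetes implementation: it assumes all Pods are in one namespace, handles only `podSelector.matchLabels` peers (no `namespaceSelector` or `ipBlock`), and covers only Ingress. It captures two documented behaviors: a Pod that no Ingress policy selects accepts all traffic, and once selected it accepts only traffic that some rule allows.

```python
from dataclasses import dataclass, field


def match_labels(selector: dict, labels: dict) -> bool:
    # matchLabels: every key/value pair in the selector must be on the Pod.
    return all(labels.get(key) == value for key, value in selector.items())


@dataclass
class IngressRule:
    from_pod_selector: dict                    # ingress[].from[].podSelector.matchLabels
    ports: list = field(default_factory=list)  # ingress[].ports as (protocol, port); empty = any port


@dataclass
class NetworkPolicy:
    pod_selector: dict                           # spec.podSelector.matchLabels
    ingress: list = field(default_factory=list)  # spec.ingress


def ingress_allowed(policies, dst: dict, src: dict, protocol: str, port: int) -> bool:
    selecting = [p for p in policies if match_labels(p.pod_selector, dst)]
    if not selecting:
        # No Ingress policy selects the destination Pod: it is not isolated.
        return True
    # Isolated Pod: allowed only if some rule of some selecting policy matches (rules are additive).
    return any(
        match_labels(rule.from_pod_selector, src)
        and (not rule.ports or (protocol, port) in rule.ports)
        for policy in selecting
        for rule in policy.ingress
    )


# native/networkpolicy.yaml expressed with the classes above.
policy = NetworkPolicy(
    pod_selector={"org": "empire", "class": "deathstar"},
    ingress=[IngressRule({"org": "empire"}, [("TCP", 80)])],
)
deathstar = {"org": "empire", "class": "deathstar"}
tiefighter = {"org": "empire", "class": "tiefighter"}
xwing = {"org": "alliance", "class": "xwing"}

print(ingress_allowed([policy], deathstar, tiefighter, "TCP", 80))  # True
print(ingress_allowed([policy], deathstar, xwing, "TCP", 80))       # False
print(ingress_allowed([], deathstar, xwing, "TCP", 80))             # True (no policy yet)
```

The last call is the default, non-isolated behavior described in the background section: without any policy, xwing reaches deathstar freely.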

Next, let's test it.

Test

First, prepare the environment. We use K3s as the Kubernetes environment. Because K3s's default CNI plugin, Flannel, does not support network policies, we need to switch plugins; here we choose Calico, i.e. a K3s + Calico setup.

First, create a single-node cluster:

```shell
curl -sfL https://get.k3s.io | K3S_KUBECONFIG_MODE="644" INSTALL_K3S_EXEC="--flannel-backend=none --cluster-cidr=10.42.0.0/16 --disable-network-policy --disable=traefik" sh -
```

At this point all Pods are in the Pending state, because Calico still needs to be installed:

```shell
kubectl apply -f https://projectcalico.docs.tigera.io/manifests/calico.yaml
```

Once Calico is running, all the other Pods start running as well.

Next, deploy the application:

```shell
kubectl apply -f app.yaml
```

Before applying the policy, run the following commands to see whether the fighters can land on the Death Star:

```shell
kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
Ship landed

kubectl exec xwing -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
Ship landed
```

The results show that both kinds of "ships" (Pod workloads) can access the deathstar service.

Now apply the network policy:

```shell
kubectl apply -f native/networkpolicy.yaml
```

Try landing again: this time xwing's landing request hangs (press Ctrl+C to exit, or add `--connect-timeout 2` to the request).
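When repeating this check, it can help to script it so that a blocked request fails fast instead of hanging. The sketch below is a hypothetical helper, not part of the article's setup: it builds the same `kubectl exec ... curl` command with `--connect-timeout 2` and interprets the result. It relies on curl's exit code 28 meaning "operation timed out" and assumes `kubectl exec` exits with the remote command's exit code.

```python
import subprocess

# Same endpoint as in the article's commands.
LANDING_URL = "deathstar.default.svc.cluster.local/v1/request-landing"
CURL_TIMEOUT_EXIT = 28  # curl's exit code for "operation timed out"


def landing_command(pod: str, timeout_s: int = 2) -> list:
    """The article's landing request, plus --connect-timeout so a dropped connection fails fast."""
    return ["kubectl", "exec", pod, "--", "curl", "-s",
            "--connect-timeout", str(timeout_s), "-XPOST", LANDING_URL]


def classify(returncode: int, stdout: str) -> str:
    if returncode == 0 and "Ship landed" in stdout:
        return "landed"
    if returncode == CURL_TIMEOUT_EXIT:
        return "timed out (likely dropped by the network policy)"
    return f"failed (exit code {returncode})"


def check(pod: str) -> str:
    # Needs kubectl configured for the test cluster; assumes kubectl exec
    # passes through curl's exit code.
    result = subprocess.run(landing_command(pod), capture_output=True, text=True)
    return classify(result.returncode, result.stdout)
```

With the policy applied, `check("tiefighter")` should return `"landed"` while `check("xwing")` should report a timeout after about two seconds.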

Reflection

The Kubernetes network policy achieves what we wanted: it adds an allowlist to the service at the network level, requires no changes to the applications, and has little impact on the system.

So is that the end, before Cilium even gets a turn? Let's keep going:

Services sometimes expose management endpoints that the system calls to perform administrative operations, such as hot updates or restarts. Ordinary services must not be allowed to call these endpoints, or the consequences can be serious.

In our example, tiefighter calls the deathstar management endpoint /exhaust-port:

```shell
kubectl exec tiefighter -- curl -s -XPUT deathstar.default.svc.cluster.local/v1/exhaust-port
Panic: deathstar exploded

goroutine 1 [running]:
main.HandleGarbage(0x2080c3f50, 0x2, 0x4, 0x425c0, 0x5, 0xa)
        /code/src/github.com/empire/deathstar/
        temp/main.go:9 +0x64
main.main()
        /code/src/github.com/empire/deathstar/
        temp/main.go:5 +0x85
```

The request triggers a panic; checking the Pods shows that deathstar has crashed.

Kubernetes network policies only work at L3/L4 and are powerless at L7.
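A small Python model makes this limitation concrete. It is an illustration, not how any CNI plugin works internally, and for brevity peers are identified by Pod name instead of labels. An L3/L4 policy decides on the flow (source, destination, protocol, port), while the HTTP method and path travel inside the payload. Since the landing request and the exhaust-port request use the same flow, any L3/L4 rule that admits one must admit the other.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    """What an L3/L4 policy can see: the peers, the protocol, and the port."""
    src: str
    dst: str
    protocol: str
    dst_port: int


class HttpRequest(NamedTuple):
    flow: Flow
    method: str  # carried in the TCP payload, invisible at L3/L4
    path: str    # likewise


def l4_allows(allowed_flows: set, request: HttpRequest) -> bool:
    # The decision can depend only on request.flow.
    return request.flow in allowed_flows


flow = Flow("tiefighter", "deathstar", "TCP", 80)
landing = HttpRequest(flow, "POST", "/v1/request-landing")
exhaust = HttpRequest(flow, "PUT", "/v1/exhaust-port")
allowed = {flow}  # what the native policy permits for tiefighter

print(l4_allows(allowed, landing), l4_allows(allowed, exhaust))  # True True
```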

Time to bring in Cilium.

Cilium network policy

Because Cilium touches on the Linux kernel, networking, and many other topics, explaining how it works would take a lot of space. So here I only include the introduction from the official website; I hope to find time later to write a separate article about its implementation.

A brief introduction to Cilium

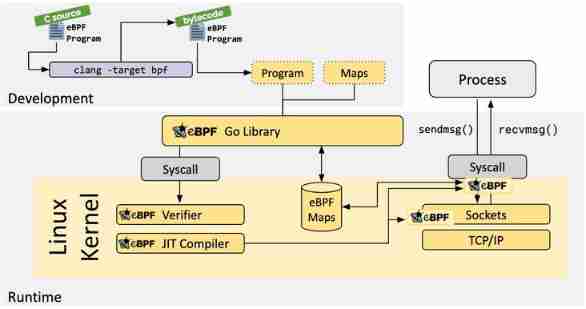

Cilium is open-source software for providing, securing, and observing network connectivity between container workloads (cloud native), powered by the revolutionary kernel technology eBPF.

What is eBPF?

The Linux kernel has always been an ideal place to implement monitoring/observability, networking, and security functionality. In many cases, however, this is not easy, because the work requires modifying kernel source code or loading kernel modules, and the end result is new abstractions stacked on top of existing layers of abstraction. eBPF is a revolutionary technology that can run sandboxed programs in the kernel without changing kernel source code or loading kernel modules.

By making the Linux kernel programmable, infrastructure software can build on existing (rather than new) abstraction layers to become more intelligent and feature-rich, without adding system complexity or sacrificing execution efficiency and security.

Now let's look at a Cilium network policy:

```yaml
# cilium/networkpolicy-L4.yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "rule1"
spec:
  description: "L3-L4 policy to restrict deathstar access to empire ships only"
  endpointSelector:
    matchLabels:
      org: empire
      class: deathstar
  ingress:
  - fromEndpoints:
    - matchLabels:
        org: empire
    toPorts:
    - ports:
      - port: "80"
        protocol: TCP
```

It differs little from the Kubernetes-native network policy: `endpointSelector` plays the role of `podSelector`, `fromEndpoints` corresponds to `ingress.from`, and `toPorts` to `ingress.ports`. With the earlier explanation in mind it should be easy to follow, so let's go straight to testing.
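To make the correspondence explicit, here is a hedged Python sketch that translates the native policy used earlier into the equivalent CiliumNetworkPolicy structure. It handles only the fields that appear in this article (`podSelector.matchLabels`, `ingress[].from[].podSelector.matchLabels`, and `ingress[].ports`) and assumes every ingress rule has both `from` and `ports`; `to_cilium` is an illustrative name, not a Cilium tool.

```python
def to_cilium(np: dict) -> dict:
    """Translate the NetworkPolicy subset used in this article into a CiliumNetworkPolicy dict."""
    spec = np["spec"]
    ingress = [
        {
            # ingress[].from[].podSelector  ->  ingress[].fromEndpoints[]
            "fromEndpoints": [
                {"matchLabels": peer["podSelector"]["matchLabels"]} for peer in rule["from"]
            ],
            # ingress[].ports  ->  ingress[].toPorts[].ports (ports written as strings)
            "toPorts": [{
                "ports": [
                    {"port": str(p["port"]), "protocol": p.get("protocol", "TCP")}
                    for p in rule["ports"]
                ]
            }],
        }
        for rule in spec.get("ingress", [])
    ]
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": np["metadata"]["name"],
                     "namespace": np["metadata"].get("namespace", "default")},
        "spec": {
            # spec.podSelector  ->  spec.endpointSelector
            "endpointSelector": {"matchLabels": spec["podSelector"]["matchLabels"]},
            "ingress": ingress,
        },
    }


# native/networkpolicy.yaml as a Python dict.
native = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "policy", "namespace": "default"},
    "spec": {
        "podSelector": {"matchLabels": {"org": "empire", "class": "deathstar"}},
        "policyTypes": ["Ingress"],
        "ingress": [{
            "from": [{"podSelector": {"matchLabels": {"org": "empire"}}}],
            "ports": [{"protocol": "TCP", "port": 80}],
        }],
    },
}
cnp = to_cilium(native)
print(cnp["spec"]["ingress"][0]["toPorts"])  # [{'ports': [{'port': '80', 'protocol': 'TCP'}]}]
```

Apart from the name, namespace, and description, the result has the same selectors and ports as cilium/networkpolicy-L4.yaml.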

Test

Because Cilium is itself a CNI plugin, we can't reuse the previous cluster. Uninstall it first:

```shell
k3s-uninstall.sh

# !!! Remember to clean up the previous CNI plugin's configuration
sudo rm -rf /etc/cni/net.d
```

Create a single-node cluster with the same command:

```shell
curl -sfL https://get.k3s.io | K3S_KUBECONFIG_MODE="644" INSTALL_K3S_EXEC="--flannel-backend=none --cluster-cidr=10.42.0.0/16 --disable-network-policy --disable=traefik" sh -

# The cilium CLI uses this variable
export KUBECONFIG=/etc/rancher/k3s/k3s.yaml
```

Next, install the Cilium CLI:

```shell
curl -L --remote-name-all https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz{,.sha256sum}
sha256sum --check cilium-linux-amd64.tar.gz.sha256sum
sudo tar xzvfC cilium-linux-amd64.tar.gz /usr/local/bin
rm cilium-linux-amd64.tar.gz{,.sha256sum}

cilium version
cilium-cli: v0.10.2 compiled with go1.17.6 on linux/amd64
cilium image (default): v1.11.1
cilium image (stable): v1.11.1
cilium image (running): unknown. Unable to obtain cilium version, no cilium pods found in namespace "kube-system"
```

Install Cilium into the cluster:

```shell
cilium install
```

Check that Cilium is running:

```shell
cilium status
    /¯¯\
 /¯¯\__/¯¯\    Cilium:         OK
 \__/¯¯\__/    Operator:       OK
 /¯¯\__/¯¯\    Hubble:         disabled
 \__/¯¯\__/    ClusterMesh:    disabled
    \__/

Deployment        cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
DaemonSet         cilium             Desired: 1, Ready: 1/1, Available: 1/1
Containers:       cilium             Running: 1
                  cilium-operator    Running: 1
Cluster Pods:     3/3 managed by Cilium
Image versions    cilium-operator    quay.io/cilium/operator-generic:v1.11.1@sha256:977240a4783c7be821e215ead515da3093a10f4a7baea9f803511a2c2b44a235: 1
                  cilium             quay.io/cilium/cilium:v1.11.1@sha256:251ff274acf22fd2067b29a31e9fda94253d2961c061577203621583d7e85bd2: 1
```

Deploy the application:

```shell
kubectl apply -f app.yaml
```

After the application starts, test the service calls:

```shell
kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
Ship landed

kubectl exec xwing -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
Ship landed
```

Apply the L4 network policy:

```shell
kubectl apply -f cilium/networkpolicy-L4.yaml
```

Try landing on the Death Star again: once more the xwing fighter cannot land, which shows the L4 rule is in effect.

Now let's try an L7 rule:

```yaml
# cilium/networkpolicy-L7.yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "rule1"
spec:
  description: "L7 policy to restrict access to specific HTTP call"
  endpointSelector:
    matchLabels:
      org: empire
      class: deathstar
  ingress:
  - fromEndpoints:
    - matchLabels:
        org: empire
    toPorts:
    - ports:
      - port: "80"
        protocol: TCP
      rules:
        http:
        - method: "POST"
          path: "/v1/request-landing"
```

Apply the rule:

```shell
kubectl apply -f cilium/networkpolicy-L7.yaml
```

This time, use tiefighter to call the Death Star's management endpoint:

```shell
kubectl exec tiefighter -- curl -s -XPUT deathstar.default.svc.cluster.local/v1/exhaust-port
Access denied

# The landing endpoint still works
kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
Ship landed
```

This time the request returns Access denied, which shows the L7 rule is in effect.
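The L7 check can be sketched the same way. Cilium's documentation describes `rules.http[].method` and `rules.http[].path` as extended POSIX regular expressions, with an omitted or empty field allowing everything; the sketch below approximates them with Python's `re.fullmatch` and assumes the whole method and path must match. It only models the HTTP step, which applies to traffic already admitted by `fromEndpoints` and `toPorts`; `HttpRule` and `l7_allowed` are illustrative names.

```python
import re
from dataclasses import dataclass


@dataclass
class HttpRule:
    method: str = ""  # regex for the HTTP method; empty means any method
    path: str = ""    # regex for the request path; empty means any path


def field_matches(pattern: str, value: str) -> bool:
    # Approximation: Python regex, anchored to the whole value.
    return not pattern or re.fullmatch(pattern, value) is not None


def l7_allowed(rules, method: str, path: str) -> bool:
    # A request is allowed if any rule matches both its method and its path.
    return any(field_matches(r.method, method) and field_matches(r.path, path) for r in rules)


# The rules section of cilium/networkpolicy-L7.yaml.
RULES = [HttpRule(method="POST", path="/v1/request-landing")]

print(l7_allowed(RULES, "POST", "/v1/request-landing"))  # True  ("Ship landed")
print(l7_allowed(RULES, "PUT", "/v1/exhaust-port"))      # False ("Access denied")
```

Because the rule is an allowlist, any endpoint not listed, including future management endpoints, is denied by default.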

This article was first published on the WeChat official account The cloud points north.
