Web Scraping in Practice (5): Crawling the Douban Top 250

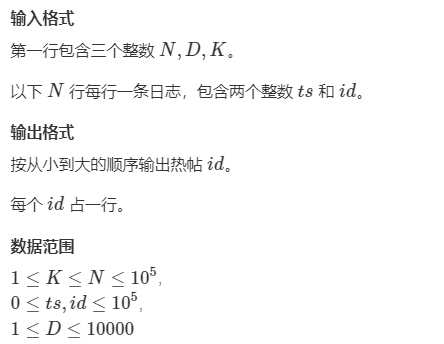

I. Website Analysis

1. Page analysis

Packet-capture analysis shows that the data is not loaded dynamically. It is already in the static HTML page, so we can request the page directly and get the data.
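You can confirm this yourself by downloading the raw HTML, with no JavaScript executed, and searching it for a title you can see on the rendered page, for example 肖申克的救赎 (*The Shawshank Redemption*). The sketch below assumes that check is sufficient. The helper names `fetch_raw_html` and `is_server_rendered` are hypothetical. The fetch uses the standard library's `urllib` instead of `requests` only so that the sketch has no third-party dependencies. It takes a User-Agent because Douban tends to reject requests that lack a browser-like one.

```python
import urllib.request


def fetch_raw_html(url: str, user_agent: str) -> str:
    """Download a page as plain HTML, without executing any JavaScript."""
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8")


def is_server_rendered(raw_html: str, marker: str) -> bool:
    """True if text visible in the browser is already present in the raw HTML.

    If the marker is missing, the data is probably filled in later by
    JavaScript (an XHR/fetch call), and you would look for that request
    in the browser's Network tab instead.
    """
    return marker in raw_html
```

Usage: `is_server_rendered(fetch_raw_html("https://movie.douban.com/top250?start=0", ua), "肖申克的救赎")`, where `ua` is any browser User-Agent string.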

2. Source code analysis

With the F12 developer tools we can see that all the data on this page is stored in an `ol` tag whose class is `grid_view`. This class name is unique on the page, so we can use this node to locate our data and then traverse its `li` tags to extract the content.
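You can try the same lookup offline on a hand-written fragment. The sample HTML below is a made-up, simplified stand-in for the real markup. The sketch uses the standard library's `xml.etree.ElementTree`, whose limited XPath subset accepts the `[@class='grid_view']` predicate. That parser only works here because the sample is well-formed. On the real page you would use lxml's forgiving HTML parser, as the code in this article does. `movie_items` and `movie_titles` are hypothetical helper names.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified fragment of a Top 250 list page (not the real markup).
SAMPLE = """
<div id="content">
  <ol class="grid_view">
    <li><div class="hd"><a href="#a">Movie A</a></div></li>
    <li><div class="hd"><a href="#b">Movie B</a></div></li>
  </ol>
  <ol class="other"><li>not a movie</li></ol>
</div>
""".strip()


def movie_items(html: str) -> list:
    """Return the <li> elements directly under <ol class="grid_view">."""
    root = ET.fromstring(html)
    return root.findall(".//ol[@class='grid_view']/li")


def movie_titles(html: str) -> list:
    """Traverse the <li> items and read each title link's text."""
    return [li.find(".//div[@class='hd']/a").text for li in movie_items(html)]
```

Because the class name is unique, the second `ol` is ignored, and only the two movie entries come back.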

3. Content analysis

4. Link analysis

"""

1. https://movie.douban.com/top250?start=0

2. https://movie.douban.com/top250?start=25

3. https://movie.douban.com/top250?start=50

n. https://movie.douban.com/top250?start=25*(n-1)

"""

urls = [https://movie.douban.com/top250?start=25*(i-1) for i in range(11)] # There is a total of 250 movie
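The same pattern works for any total. Below is a small sketch that assumes the 25-per-page layout stays the same. The function name `page_urls` is hypothetical.

```python
BASE = "https://movie.douban.com/top250?start={}"


def page_urls(total: int = 250, per_page: int = 25) -> list:
    """Build list-page URLs: page n (1-based) uses start = per_page * (n - 1)."""
    pages = -(-total // per_page)  # ceiling division, so a partial last page is included
    return [BASE.format(per_page * (n - 1)) for n in range(1, pages + 1)]
```

With the defaults this yields ten URLs, from `start=0` to `start=225`.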

So we have three options: use a for loop, generate all the links first and consume them as a stack, or crawl the pages recursively.
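All three strategies visit the pages in the same order. Only the control flow differs. In the sketch below, a hypothetical `fetch` callback stands in for the real request-and-parse step, so the traversal can run without touching the network. The recursive version computes the next offset from the URL as a simplification. A real recursive crawler would more likely follow the page's "next page" link.

```python
from typing import Callable, List

# Hypothetical stand-in for "request the page and parse it".
Fetch = Callable[[str], None]


def crawl_with_loop(urls: List[str], fetch: Fetch) -> None:
    """Plain for loop over the URLs in order."""
    for url in urls:
        fetch(url)


def crawl_with_stack(urls: List[str], fetch: Fetch) -> None:
    """Generate all links first, store them reversed, then pop until empty."""
    stack = list(reversed(urls))  # reversed so pop() returns the first page first
    while stack:
        fetch(stack.pop())


def crawl_recursively(url: str, fetch: Fetch, step: int = 25, last_start: int = 225) -> None:
    """Fetch one page, then recurse into the next start offset."""
    fetch(url)
    prefix, start = url.rsplit("=", 1)
    next_start = int(start) + step
    if next_start <= last_start:
        crawl_recursively(f"{prefix}={next_start}", fetch, step, last_start)
```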

II. Writing the Code

1. Get the URL of every page

```python
#!/usr/bin/python3
# -*- coding: UTF-8 -*-
__author__ = "A.L.Kun"
__file__ = "123.py"
__time__ = "2022/7/6 10:19"

import re  # regular expressions for cleaning text

import requests  # send HTTP requests
from lxml import etree  # XPath parsing
from fake_useragent import UserAgent  # random User-Agent strings
import pandas as pd  # tabular data handling and export

# All 10 page URLs (start=225, 200, ..., 0), stored as a global variable.
# They are in reverse order because the list is consumed as a stack:
# pop() takes from the end, so the first page (start=0) comes out first.
urls = [f'https://movie.douban.com/top250?start={25 * (i - 1)}' for i in range(10, 0, -1)]

headers = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    # No 'br': requests only decodes Brotli responses if the brotli package is installed
    'Accept-Encoding': 'gzip, deflate',
    'Accept-Language': 'zh-CN,zh;q=0.9',
    'Cache-Control': 'no-cache',
    'Connection': 'keep-alive',
    'Host': 'movie.douban.com',
    'Pragma': 'no-cache',
    'sec-ch-ua': '"Not A;Brand";v="99", "Chromium";v="102", "Google Chrome";v="102"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'Sec-Fetch-Dest': 'document',
    'Sec-Fetch-Mode': 'navigate',
    'Sec-Fetch-Site': 'none',
    'Sec-Fetch-User': '?1',
    'Upgrade-Insecure-Requests': '1',
    'user-agent': UserAgent().random,
}

lis_data = []  # container for the scraped records

# Test: pop the URLs off the stack, first page first
while urls:
    print(urls.pop())
```

2. Get the li tags inside the ol

```python
def get_tags(url):
    headers.update({'user-agent': UserAgent().random})  # pick a fresh random User-Agent for each request
    resp = requests.get(url, headers=headers, timeout=10)  # send the request
    resp.raise_for_status()  # stop on 403/418 instead of parsing an error page
    resp.encoding = "utf-8"  # set the encoding explicitly
    tree = etree.HTML(resp.text)  # hand the page source to etree
    lis = tree.xpath('//ol[@class="grid_view"]/li')  # every li inside the unique ol.grid_view
    for li in lis:
        print(li)


get_tags("https://movie.douban.com/top250?start=0")
```

3. Extract the data

```python
def get_data(li):
    # Poster link. The lookup can fail with IndexError when there is no <img>,
    # so it belongs inside the try block (replace() itself never raises here)
    try:
        imgSrc = li.xpath(".//img/@src")[0].replace("webp", "jpg")
    except IndexError:
        imgSrc = "Picture not found"
    title = li.xpath(".//img/@alt")[0]  # title
    detailUrl = li.xpath(".//div[@class='hd']/a/@href")[0]  # detail page link
    # Text nodes of the first <p>: director/cast first, then "year / country / genre".
    # We only need the year and the genre
    detail = li.xpath(".//div[@class='bd']/p[1]/text()")
    time = re.search(r"\d+", detail[1]).group()  # release year
    # Genre is the last "/"-separated field; matching Chinese characters
    # would also pick up the country, which is written in Chinese too
    type_ = detail[1].split("/")[-1].strip()
    score = li.xpath(".//span[@class='rating_num']/text()")[0]  # rating
    quote = li.xpath(".//span[@class='inq']/text()")  # one-line tagline; some films have none
    quote = quote[0] if quote else "No tagline yet!"
    # print(title, imgSrc, detailUrl, time, type_, score, quote)  # print the extracted fields
    lis_data.append({
        "Title": title,
        "Image link": imgSrc,
        "Detail page link": detailUrl,
        "Release year": time,
        "Genre": type_,
        "Score": score,
        "Quote": quote,
    })  # collect for pandas; you could also write each record to a database here


# Test on a single page
resp = requests.get("https://movie.douban.com/top250?start=25", headers=headers, timeout=10)
resp.encoding = "utf-8"
tree = etree.HTML(resp.text)  # hand the page source to etree
for li in tree.xpath('//ol[@class="grid_view"]/li'):  # every movie entry on the page
    get_data(li)
print(lis_data)
```
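The year and the genre both come from the second text node of the first `<p>`. On the list page it reads roughly `1994 / 美国 / 犯罪 剧情`, with non-breaking spaces around the slashes. Because the country is also written in Chinese, a pattern like `[\u4e00-\u9fa5]+` captures the country along with the genres. Splitting on `/` and taking the last field isolates the genre. The sample string in this sketch is an assumption about the markup. `parse_year_and_genre` and `first_or` are hypothetical helper names.

```python
import re
from typing import List, Tuple


def parse_year_and_genre(line: str) -> Tuple[str, str]:
    """Split a 'year / country / genre' line into (year, genre).

    Assumes the genre is always the last '/'-separated field; films with
    several release years just have more fields before it.
    """
    match = re.search(r"\d{4}", line)
    year = match.group() if match else ""
    genre = line.split("/")[-1].strip()  # str.strip() also removes \xa0
    return year, genre


def first_or(values: List[str], default: str) -> str:
    """Return the first XPath result, or a default when the node is missing."""
    return values[0] if values else default
```

`first_or` covers the same case as the tagline fallback: some entries have no `span.inq` at all.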

4. Data cleaning

```python
def parse_data():
    df = pd.DataFrame(lis_data)
    new_df = df.dropna()  # optionally drop rows with missing values
    # Charts and other analysis could also be done here; omitted
    # print(new_df)
    new_df.to_excel("./douban.xlsx", index=False)  # requires openpyxl to be installed


parse_data()
```
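`to_excel` needs an extra engine package (usually openpyxl). If you only need a file that opens in Excel, one dependency-free alternative is the standard library's `csv` module with the `utf-8-sig` encoding. This is an assumption, not the author's approach. The byte-order mark it writes helps Excel detect UTF-8, so the Chinese text is not garbled. The hypothetical helper `save_csv` also skips rows with empty fields, which is a rough stand-in for the `dropna()` step.

```python
import csv
from typing import Dict, List


def save_csv(records: List[Dict[str, str]], path: str) -> int:
    """Write records to CSV, skipping rows with any empty field; return rows written."""
    if not records:
        return 0
    fieldnames = list(records[0].keys())
    kept = [r for r in records if all(r.get(k) not in (None, "") for k in fieldnames)]
    # utf-8-sig prepends a BOM so Excel recognises the file as UTF-8
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(kept)
    return len(kept)
```

Usage: `save_csv(lis_data, "./douban.csv")`.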

III. Complete Code

```python
#!/usr/bin/python3
# -*- coding: UTF-8 -*-
__author__ = "A.L.Kun"
__file__ = "123.py"
__time__ = "2022/7/6 10:19"

import logging
import re  # regular expressions for cleaning text

import requests  # send HTTP requests
from lxml import etree  # XPath parsing
from fake_useragent import UserAgent  # random User-Agent strings
import pandas as pd  # tabular data handling and export

# getLogger + basicConfig, so that log.info() output is actually shown
logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger(__name__)

# All 10 page URLs in reverse order, consumed as a stack (pop() yields start=0 first)
urls = [f'https://movie.douban.com/top250?start={25 * (i - 1)}' for i in range(10, 0, -1)]

headers = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'Accept-Encoding': 'gzip, deflate',  # no 'br' unless the brotli package is installed
    'Accept-Language': 'zh-CN,zh;q=0.9',
    'Cache-Control': 'no-cache',
    'Connection': 'keep-alive',
    'Host': 'movie.douban.com',
    'Pragma': 'no-cache',
    'sec-ch-ua': '"Not A;Brand";v="99", "Chromium";v="102", "Google Chrome";v="102"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'Sec-Fetch-Dest': 'document',
    'Sec-Fetch-Mode': 'navigate',
    'Sec-Fetch-Site': 'none',
    'Sec-Fetch-User': '?1',
    'Upgrade-Insecure-Requests': '1',
    'user-agent': UserAgent().random,
}

lis_data = []  # container for the scraped records


def get_data(li):
    """Extract one movie's fields from an <li> node and append them to lis_data."""
    try:
        imgSrc = li.xpath(".//img/@src")[0].replace("webp", "jpg")  # poster link
    except IndexError:
        imgSrc = "Picture not found"
    title = li.xpath(".//img/@alt")[0]  # title
    detailUrl = li.xpath(".//div[@class='hd']/a/@href")[0]  # detail page link
    detail = li.xpath(".//div[@class='bd']/p[1]/text()")  # director/cast, then "year / country / genre"
    time = re.search(r"\d+", detail[1]).group()  # release year
    type_ = detail[1].split("/")[-1].strip()  # genre: the last "/"-separated field
    score = li.xpath(".//span[@class='rating_num']/text()")[0]  # rating
    quote = li.xpath(".//span[@class='inq']/text()")  # tagline; some films have none
    quote = quote[0] if quote else "No tagline yet!"
    lis_data.append({
        "Title": title,
        "Image link": imgSrc,
        "Detail page link": detailUrl,
        "Release year": time,
        "Genre": type_,
        "Score": score,
        "Quote": quote,
    })  # collect for pandas; you could also write each record to a database here


def get_tags(url):
    """Fetch one list page and parse every movie on it."""
    headers.update({'user-agent': UserAgent().random})  # fresh random User-Agent per request
    resp = requests.get(url, headers=headers, timeout=10)  # send the request
    resp.raise_for_status()  # stop on 403/418 instead of parsing an error page
    resp.encoding = "utf-8"  # set the encoding explicitly
    tree = etree.HTML(resp.text)  # hand the page source to etree
    for li in tree.xpath('//ol[@class="grid_view"]/li'):  # every movie entry on the page
        get_data(li)
    log.info(f"{url}: data acquisition complete")


def parse_data():
    """Clean the collected records and save them to Excel."""
    df = pd.DataFrame(lis_data)
    new_df = df.dropna()  # optionally drop rows with missing values
    # Charts and other analysis could also be done here; omitted
    # to_excel no longer accepts an encoding argument (removed in pandas 2.0); needs openpyxl
    new_df.to_excel("./douban.xlsx", index=False)


def main():
    while urls:
        get_tags(urls.pop())
    parse_data()


if __name__ == "__main__":
    main()
```
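The logger setup matters for the progress messages. A logger created directly with `logging.Logger(__name__)` is not registered in the logging hierarchy, so it has no parent and no handlers. Its INFO records fall through to the module's last-resort handler, which only prints WARNING and above, so the messages silently disappear. The sketch below shows the conventional pattern of `logging.getLogger` plus an explicit handler. Here it writes into any stream, such as an in-memory buffer, so the output can be checked. `make_logger` is a hypothetical helper name.

```python
import logging


def make_logger(name: str, stream=None) -> logging.Logger:
    """Return a named logger that emits INFO and above to `stream` (stderr by default)."""
    logger = logging.getLogger(name)  # registered in the hierarchy, unlike logging.Logger(name)
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s %(name)s: %(message)s"))
    logger.addHandler(handler)
    logger.propagate = False  # keep this demo's output out of the root logger
    return logger
```

Usage: `make_logger("douban").info("https://movie.douban.com/top250?start=0: data acquisition complete")`.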