New research from HKUST and MSRA: for image-to-image translation, fine-tuning is all you need

2022-07-07 06:22:00 【PaperWeekly】

Author | Machine center editorial department

Source | Almost Human

In natural language processing, fine-tuning pre-trained networks has made a lot of progress. The same idea is now being extended to image-to-image translation.

Many content creation projects need to turn simple sketches into realistic pictures. This is the task of image-to-image translation, which uses a deep generative model to learn the conditional distribution of natural images given an input.

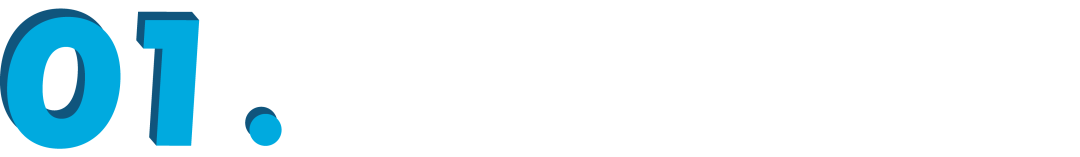

The basic idea of image-to-image translation is to use a pre-trained neural network to capture the natural image manifold. Translating an image then resembles traversing the manifold and locating the feasible point that matches the input semantics. The synthesis network is pre-trained on a large number of images so that any sample from its latent space produces a plausible output. With the pre-trained synthesis network in place, downstream training maps the user input to the model's latent representation.

In recent years, many task-specific methods have reached SOTA level, but current solutions still struggle to create high-fidelity images for practical use.

In a recent paper, researchers from the Hong Kong University of Science and Technology and Microsoft Research Asia argue that, for image-to-image translation, pre-training is all you need. Previous approaches required specialized architecture design and trained a separate translation model from scratch, which makes it hard to generate complex scenes with high quality, especially when paired training data is scarce.

The researchers therefore treat each image-to-image translation problem as a downstream task and introduce a simple, general framework that adapts a pre-trained diffusion model to various kinds of image-to-image translation. They call the proposed model PITI (pretraining-based image-to-image translation). In addition, they propose using adversarial training to enhance texture synthesis during diffusion model training, combined with normalized guided sampling to improve generation quality.
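The article does not spell out how normalized guided sampling works. As a rough sketch, assuming it means classifier-free guidance followed by rescaling the guided noise estimate to the mean and standard deviation of the conditional estimate, the idea could look like this in plain Python. The function names, the toy values, and the guidance scale are all hypothetical, and lists of floats stand in for the tensors a real diffusion model would produce.

```python
from statistics import fmean, pstdev


def guided_noise(eps_cond, eps_uncond, scale):
    """Classifier-free guidance: extrapolate from the unconditional
    noise estimate towards the conditional one by `scale`."""
    return [u + scale * (c - u) for c, u in zip(eps_cond, eps_uncond)]


def normalize_to(reference, values, tiny=1e-8):
    """Shift and rescale `values` so that its mean and standard
    deviation match those of `reference` (assumed normalization step)."""
    ref_mean, ref_std = fmean(reference), pstdev(reference)
    val_mean, val_std = fmean(values), pstdev(values)
    return [ref_mean + (v - val_mean) * ref_std / (val_std + tiny) for v in values]


def normalized_guided_noise(eps_cond, eps_uncond, scale):
    """Guidance followed by normalization against the conditional estimate."""
    return normalize_to(eps_cond, guided_noise(eps_cond, eps_uncond, scale))


# Toy noise estimates (made-up numbers, not model outputs).
eps_cond = [0.2, -0.4, 0.9, 0.1]
eps_uncond = [0.0, -0.1, 0.3, 0.2]
eps = normalized_guided_noise(eps_cond, eps_uncond, scale=3.0)
```

The point of the rescaling in this sketch is that a large guidance scale inflates the statistics of the noise estimate, and matching them back to the conditional estimate keeps the sampler's input in the range it was trained on.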

Finally, the researchers ran extensive empirical comparisons across tasks on challenging benchmarks such as ADE20K, COCO-Stuff, and DIODE, showing that the images synthesized by PITI exhibit unprecedented realism and fidelity.

Paper title:

Pretraining is All You Need for Image-to-Image Translation

Paper link:

https://arxiv.org/pdf/2205.12952.pdf

Project home page:

https://tengfei-wang.github.io/PITI/index.html

GAN is dead, the diffusion model lives on

First, rather than using the best GAN for a particular domain, the authors use a diffusion model, which can synthesize a wide variety of images. Second, the model should generate images from two types of latent code: one that describes visual semantics, and one that accounts for the remaining image variation. A semantic, low-dimensional latent is critical for downstream tasks. Without it, it would be impossible to map inputs from different modalities into a complex latent space. For this reason they use GLIDE as the pre-trained generative prior, a data-driven model that can generate diverse pictures. Because GLIDE is conditioned on text, it provides a semantic latent space.

Diffusion and score-based methods show strong generation quality across benchmarks. On class-conditional ImageNet, these models are comparable to GAN-based methods. Recently, diffusion models trained on large-scale text-image pairs have shown striking capability. A well-trained diffusion model can provide a general generative prior for synthesis.

Framework

The authors use a pretext task to pre-train on a large amount of data and build a highly semantic latent space that captures image statistics.

For downstream tasks, they conditionally fine-tune the model so that task-specific conditions are mapped into this semantic space. The model then renders plausible visuals based on what it learned during pre-training.

The authors propose pre-training the diffusion model with semantic input. They use the GLIDE model, which is trained on text-conditioned images. A Transformer network encodes the text input and outputs tokens for the diffusion model. By design, the text embedding space is semantically meaningful.

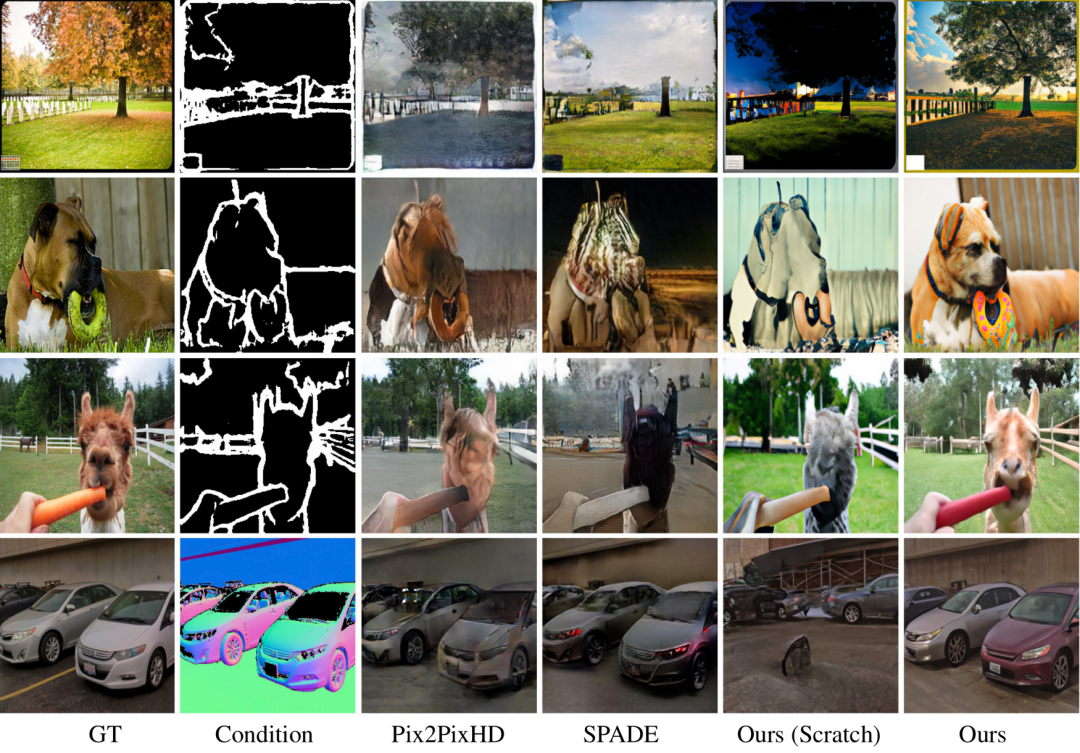

The figure above shows results from the authors' method. Compared with training from scratch, the pre-trained model improves image quality and diversity. Because the COCO dataset has many categories and combinations, baseline methods cannot produce visually appealing results with convincing structure. The authors' method creates rich details with precise semantics even in difficult cases. The figure illustrates the versatility of the approach.

Experiments and impact

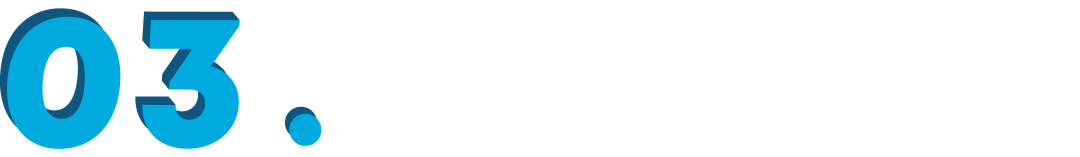

Table 1 shows that the proposed method consistently outperforms the other models. Compared with the leading method OASIS, PITI achieves a significant improvement in FID on mask-to-image synthesis. The method also performs well on sketch-to-image and geometry-to-image synthesis.
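For readers unfamiliar with the metric, FID (Fréchet Inception Distance) compares Gaussian fits to the Inception features of real and generated images, and lower values are better. The standard definition, given here for reference rather than taken from the article, uses the feature mean and covariance of the real images ($\mu_r$, $\Sigma_r$) and of the generated images ($\mu_g$, $\Sigma_g$):

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```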

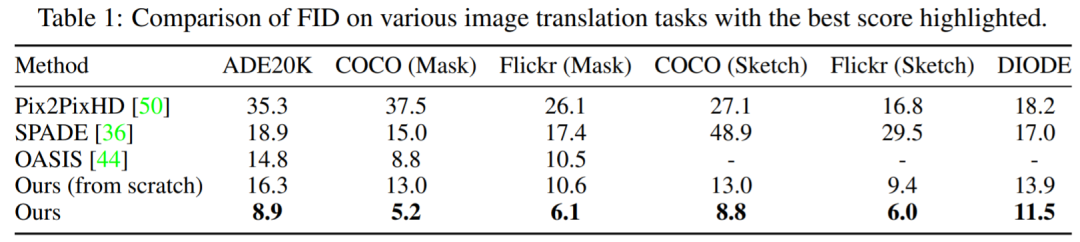

Figure 3 shows visual results of the study on different tasks. The experiments show that, compared with training from scratch, the pre-trained model significantly improves the quality and diversity of the generated images. The method produces vivid details and correct semantics, even on challenging generation tasks.

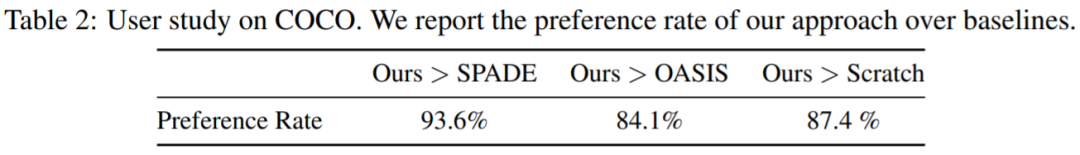

The authors also ran a user study on Amazon Mechanical Turk for mask-to-image synthesis on COCO-Stuff, collecting 3,000 votes from 20 participants. Participants were shown two images at a time and asked to vote for the more realistic one. As shown in Table 2, the proposed approach is preferred by a large margin over the model trained from scratch and the other baselines.
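To make the voting protocol concrete, here is a minimal sketch of how a pairwise preference rate could be tallied. The ballots below are made up for illustration and are not the study's data. The helper name `preference_rate` is hypothetical, and the even split of votes across participants is an assumption, since the article only gives the totals.

```python
from collections import Counter


def preference_rate(votes, method):
    """Return the fraction of pairwise votes won by `method`."""
    if not votes:
        raise ValueError("no votes recorded")
    return Counter(votes)[method] / len(votes)


# Totals reported in the article.
total_votes, participants = 3000, 20
# Assumes every participant cast the same number of votes.
votes_per_participant = total_votes // participants

# Made-up ballots: each entry names the image judged more realistic.
votes = ["PITI", "baseline", "PITI", "PITI"]
rate = preference_rate(votes, "PITI")
```

Under the even-split assumption, each participant would have compared 150 image pairs.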

Conditional image synthesis creates high-quality images that satisfy given conditions. Computer vision and graphics use it to create and manipulate content. Large-scale pre-training has improved image classification, object recognition, and semantic segmentation. What remained unknown was whether large-scale pre-training also benefits general generation tasks.

Energy use and carbon emissions are key concerns in image pre-training. Pre-training is energy-intensive, but it only has to be done once. Conditional fine-tuning lets downstream tasks reuse the same pre-trained model. Pre-training also lets the generative model be trained with less data, which can improve image synthesis when data is limited by privacy concerns or expensive annotation.

Original article: https://medium.com/mlearning-ai/finetuning-is-all-you-need-d1b8747a7a98#7015

Read more

# cast draft through Avenue #

Let your words be seen by more people

How to make more high-quality content reach the reader group in a shorter path , How about reducing the cost of finding quality content for readers ? The answer is : People you don't know .

There are always people you don't know , Know what you want to know .PaperWeekly Maybe it could be a bridge , Push different backgrounds 、 Scholars and academic inspiration in different directions collide with each other , There are more possibilities .

PaperWeekly Encourage university laboratories or individuals to , Share all kinds of quality content on our platform , It can be Interpretation of the latest paper , It can also be Analysis of academic hot spots 、 Scientific research experience or Competition experience explanation etc. . We have only one purpose , Let knowledge really flow .

The basic requirements of the manuscript :

• The article is really personal Original works , Not published in public channels , For example, articles published or to be published on other platforms , Please clearly mark

• It is suggested that markdown Format writing , The pictures are sent as attachments , The picture should be clear , No copyright issues

• PaperWeekly Respect the right of authorship , And will be adopted for each original first manuscript , Provide Competitive remuneration in the industry , Specifically, according to the amount of reading and the quality of the article, the ladder system is used for settlement

Contribution channel :

• Send email :[email protected]

• Please note your immediate contact information ( WeChat ), So that we can contact the author as soon as we choose the manuscript

• You can also directly add Xiaobian wechat (pwbot02) Quick contribution , remarks : full name - contribute

△ Long press add PaperWeekly Small make up

Now? , stay 「 You know 」 We can also be found

Go to Zhihu home page and search 「PaperWeekly」

Click on 「 Focus on 」 Subscribe to our column

边栏推荐

- Test the foundation of development, and teach you to prepare for a fully functional web platform environment

- 牛客小白月赛52 E.分组求对数和(二分&容斥)

- Career experience feedback to novice programmers

- ML's shap: Based on the adult census income binary prediction data set (whether the predicted annual income exceeds 50K), use the shap decision diagram combined with the lightgbm model to realize the

- VMware安装后打开就蓝屏

- 安装mongodb数据库

- 你不知道的互联网公司招聘黑话大全

- [FPGA tutorial case 14] design and implementation of FIR filter based on vivado core

- Handling hardfault in RT thread

- win系统下安装redis以及windows扩展方法

猜你喜欢

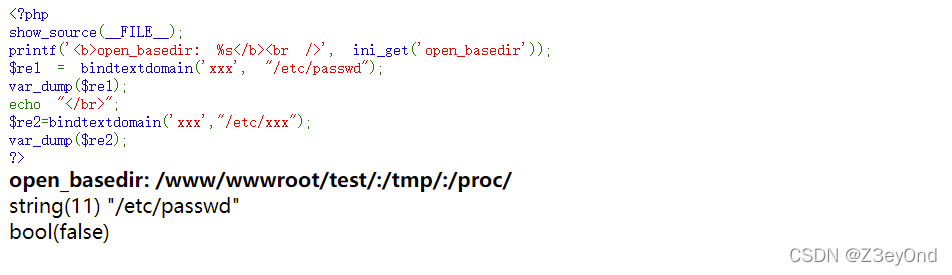

Bypass open_ basedir

Rk3399 platform development series explanation (WiFi) 5.53, hostapd (WiFi AP mode) configuration file description

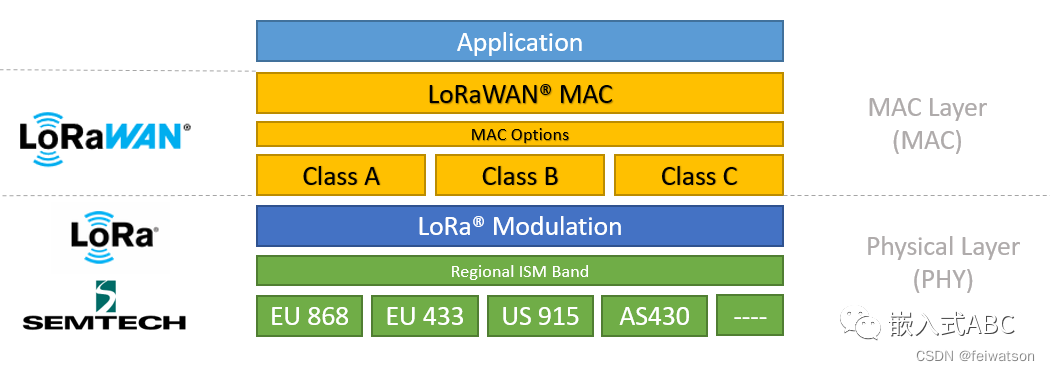

SubGHz, LoRaWAN, NB-IoT, 物联网

Vscode for code completion

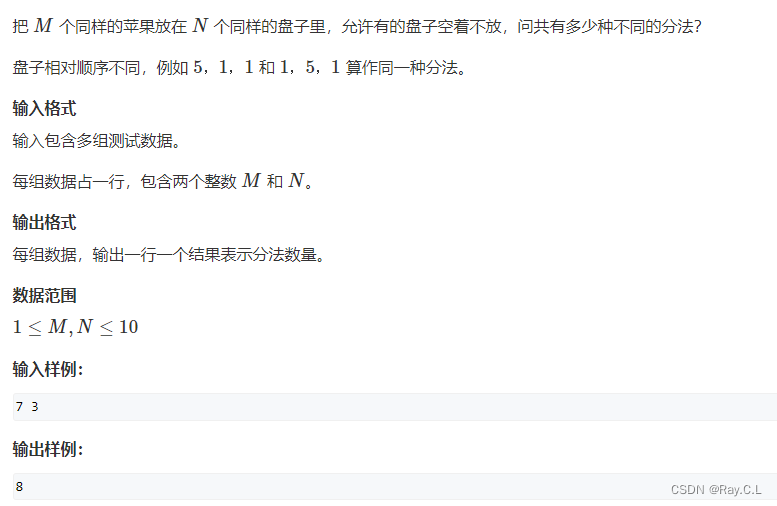

3428. 放苹果

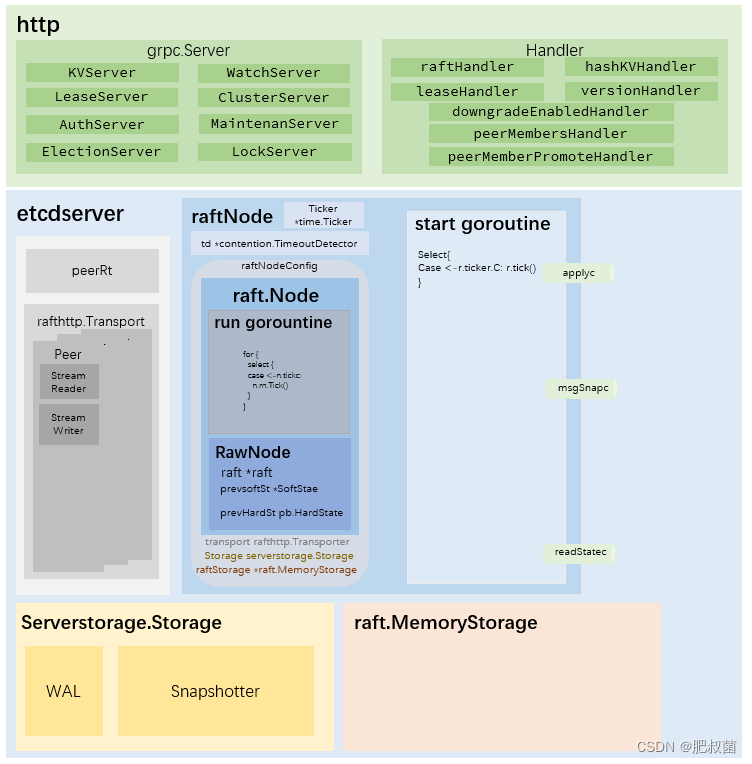

ETCD数据库源码分析——从raftNode的start函数说起

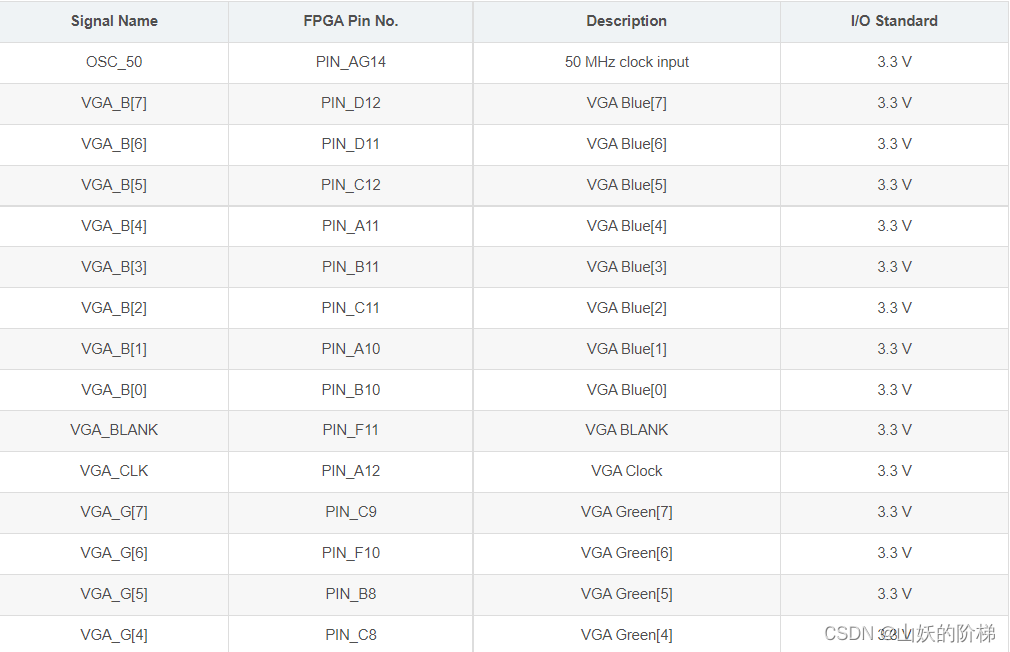

Implementation of VGA protocol based on FPGA

安装VMmare时候提示hyper-v / device defender 侧通道安全性

软件测试知识储备:关于「登录安全」的基础知识,你了解多少?

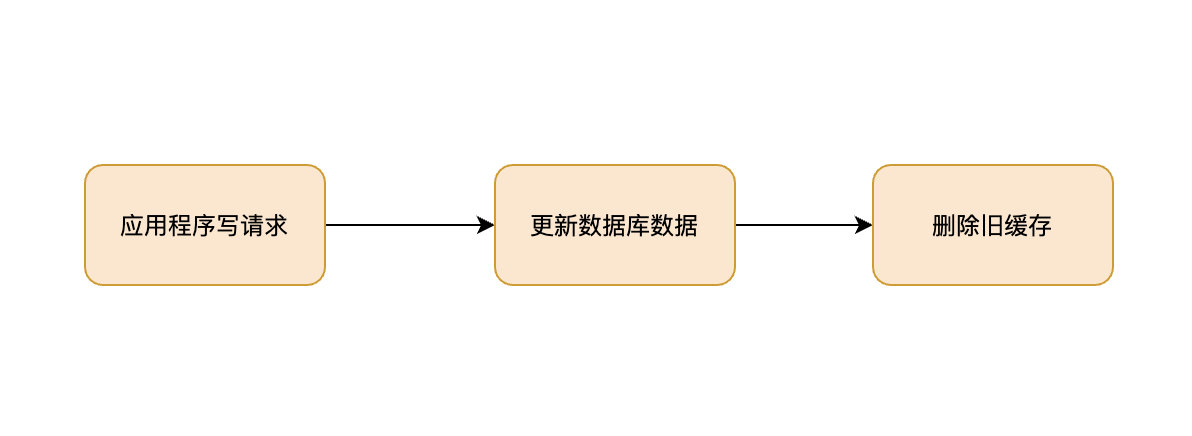

If you don't know these four caching modes, dare you say you understand caching?

随机推荐

基于ADAU1452的DSP及DAC音频失真分析

【OpenCV】形态学滤波(2):开运算、形态学梯度、顶帽、黑帽

蚂蚁庄园安全头盔 7.8蚂蚁庄园答案

JVM命令之 jstack:打印JVM中线程快照

The boss always asks me about my progress. Don't you trust me? (what do you think)

window下面如何安装swoole

k8s运行oracle

牛客小白月赛52 E.分组求对数和(二分&容斥)

Redisl garbled code and expiration time configuration

开发者别错过!飞桨黑客马拉松第三期链桨赛道报名开启

693. 行程排序

【GNN】图解GNN: A gentle introduction(含视频)

LM小型可编程控制器软件(基于CoDeSys)笔记二十三:伺服电机运行(步进电机)相对坐标转换为绝对坐标

如何解决数据库插入数据显示SQLSTATE[HY000]: General error: 1364 Field ‘xxxxx‘ doesn‘t have a default value错误

Jcmd of JVM command: multifunctional command line

On the discrimination of "fake death" state of STC single chip microcomputer

博士申请 | 上海交通大学自然科学研究院洪亮教授招收深度学习方向博士生

测试开发基础,教你做一个完整功能的Web平台之环境准备

Three updates to build applications for different types of devices | 2022 i/o key review

可极大提升编程思想与能力的书有哪些?