ECCV 2022 | Tencent Youtu proposes DisCo: rescuing small models in self-supervised learning

2022-07-04 23:17:00 【Zhiyuan community】

DisCo: Remedy Self-supervised Learning on Lightweight Models with Distilled Contrastive Learning

Paper: https://arxiv.org/abs/2104.09124 Code (open source): https://github.com/Yuting-Gao/DisCo-pytorch

Motivation

Self-supervised learning usually means that a model learns general representations from large-scale unlabeled data and then transfers them to related downstream tasks. Because the learned general representations can significantly improve downstream performance, self-supervised learning is widely used across many scenarios. In general, the larger the model capacity, the better self-supervised learning works [1,2]. Conversely, lightweight models (EfficientNet-B0, MobileNet-V3, EfficientNet-B1) benefit far less from self-supervised learning than relatively large-capacity models (ResNet50/101/152/50*2).

At present, the main way to improve lightweight models in self-supervised learning is distillation, which transfers the knowledge of a larger-capacity model to a student model. SEED [2] builds on the MoCo-V2 framework [3,4]: the large-capacity model serves as the Teacher and the lightweight model as the Student, and the two share the negative sample space (Queue) of MoCo-V2. A cross-entropy loss forces the distribution of the positive sample and the same negative samples in the Student's space to match the distribution in the Teacher's space as closely as possible. CompRess [1] additionally tried letting the Teacher and Student maintain their own negative sample spaces, and uses KL divergence to bring the distributions closer. Both methods effectively transfer the Teacher's knowledge to the Student and thereby improve the lightweight model (this article uses "Student" and "lightweight model" interchangeably).
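The shared-queue, cross-entropy idea described above can be sketched roughly as follows. This is a minimal NumPy illustration, not the SEED or CompRess implementation: the function names, the single shared `temperature`, and the choice of the teacher embedding as the positive anchor are all assumptions made for the example.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)

def log_softmax(logits):
    """Numerically stable log-softmax over each row."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def queue_similarity_logits(emb, teacher_emb, queue, temperature):
    """Similarity of each sample to its positive anchor and to the queued negatives."""
    emb, teacher_emb, queue = map(l2_normalize, (emb, teacher_emb, queue))
    positive = np.sum(emb * teacher_emb, axis=1, keepdims=True)  # shape (N, 1)
    negatives = emb @ queue.T                                    # shape (N, K)
    return np.concatenate([positive, negatives], axis=1) / temperature

def shared_queue_distillation_loss(student_emb, teacher_emb, queue, temperature=0.1):
    """Cross-entropy between teacher and student similarity distributions.

    Both distributions are computed over the same anchors (assumed here to be
    the teacher embedding plus one shared queue); temperature is a placeholder.
    """
    teacher_logp = log_softmax(
        queue_similarity_logits(teacher_emb, teacher_emb, queue, temperature))
    student_logp = log_softmax(
        queue_similarity_logits(student_emb, teacher_emb, queue, temperature))
    teacher_p = np.exp(teacher_logp)  # soft targets, treated as constants
    return float(-(teacher_p * student_logp).sum(axis=1).mean())
```

With a shared temperature, the loss is smallest when the Student reproduces the Teacher's similarity distribution over the queue, which is the sense in which the two distributions are forced to match.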

This paper proposes Distilled Contrastive Learning (DisCo), a simple and effective distillation-based self-supervised learning method for lightweight models. It significantly improves the Student, and some lightweight models come very close to the Teacher's performance. The method rests on the following observations:

- In distillation based on self-supervised learning, the last-layer representation contains the global absolute position and the local relative position of different samples in the whole representation space, and the Teacher captures this information better than the Student. So simply pulling the last-layer representations of the Teacher and Student closer together may be the best option.

- In CompRess [1], the gap between having the Teacher and Student share one negative sample queue (1q) and giving each its own negative sample queue (2q) is within 1%. When transferred to the downstream datasets CUB200 and Car192, separate queues can even significantly outperform the shared queue. This indicates that the Student does not learn enough useful knowledge from the Teacher's shared negative sample space, so the Student need not rely on the Teacher's negative sample space.

- One benefit of abandoning the shared queue is that the framework as a whole no longer depends on MoCo-V2 and becomes more concise. The Teacher/Student models can be combined with self-supervised/unsupervised representation learning methods that are more effective than MoCo-V2, further improving the final performance of the distilled lightweight model.

In current self-supervised methods, the low dimension of the MLP's hidden layer may be a bottleneck for distillation performance. Increasing the hidden-layer dimension of this structure during the self-supervised learning and distillation stages can further improve the final distilled lightweight model, with no extra cost in the deployment phase. Changing the hidden-layer dimension from 512 to 2048 improves ResNet-18 significantly, by 3.5%.
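To make the size of this change concrete, the sketch below counts the parameters of a two-layer MLP head at both hidden widths. It assumes a 512-dimensional ResNet-18 feature as input and a 128-dimensional output embedding as in MoCo-V2; the function name is hypothetical and the exact head layout in DisCo may differ.

```python
def mlp_head_param_count(in_dim, hidden_dim, out_dim):
    """Weights plus biases of a two-layer MLP head: in_dim -> hidden_dim -> out_dim."""
    first_layer = in_dim * hidden_dim + hidden_dim
    second_layer = hidden_dim * out_dim + out_dim
    return first_layer + second_layer

# Assumed dimensions: 512-d backbone feature, 128-d output embedding.
narrow = mlp_head_param_count(in_dim=512, hidden_dim=512, out_dim=128)
wide = mlp_head_param_count(in_dim=512, hidden_dim=2048, out_dim=128)
print(narrow, wide)  # 328320 1312896
```

Under these assumptions the wider head adds roughly one million parameters during pretraining and distillation. In MoCo-style pipelines the MLP head is typically discarded before the backbone is used downstream, which is one plausible reading of why the article reports no extra deployment cost.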

Method

This paper proposes a simple but effective framework, Distilled Contrastive Learning (DisCo). While performing self-supervised learning, the Student also learns the representation of the same sample in the Teacher's representation space.

DisCo Framework

As shown in the figure above, data augmentation generates two views of each image. In addition to the self-supervised learning itself, a self-supervised Teacher model is introduced. For the same view of the same sample, the final representations produced by the Student and by the fixed-parameter Teacher are required to be consistent. In the main experiments of this paper, self-supervised learning is based on MoCo-V2 (contrastive learning), and the similarity between the Teacher's and the Student's output representations of the same sample is maintained through consistency regularization. This paper uses mean squared error so that the Student learns the distribution of the samples in the corresponding Teacher's space.
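The combination described above, a contrastive term plus a mean-squared-error consistency term against a frozen Teacher, can be sketched as follows. This is a hedged NumPy illustration rather than the authors' code: the function names, the L2 normalization of embeddings, the `temperature` value, and the `weight` that balances the two terms are assumptions.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)

def contrastive_loss(query, key, queue, temperature=0.2):
    """MoCo-style InfoNCE: one positive key per query against queued negatives."""
    query, key, queue = map(l2_normalize, (query, key, queue))
    positive = np.sum(query * key, axis=1, keepdims=True)
    logits = np.concatenate([positive, query @ queue.T], axis=1) / temperature
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_prob[:, 0].mean())  # the positive sits in column 0

def consistency_loss(student_views, teacher_views):
    """Squared distance between Student and frozen-Teacher embeddings.

    Averaged over the batch and summed over the augmented views.
    """
    total = 0.0
    for student_emb, teacher_emb in zip(student_views, teacher_views):
        diff = l2_normalize(student_emb) - l2_normalize(teacher_emb)
        total += float(np.mean(np.sum(diff ** 2, axis=1)))
    return total

def disco_style_loss(query, key, queue, student_views, teacher_views, weight=1.0):
    """Consistency term plus a weighted contrastive term (weight is an assumption)."""
    return (consistency_loss(student_views, teacher_views)
            + weight * contrastive_loss(query, key, queue))
```

In a real training loop the Teacher embeddings would be computed without gradients, so only the Student is updated. Because the consistency term needs no queue, this part of the objective does not depend on the MoCo-V2 machinery.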

边栏推荐

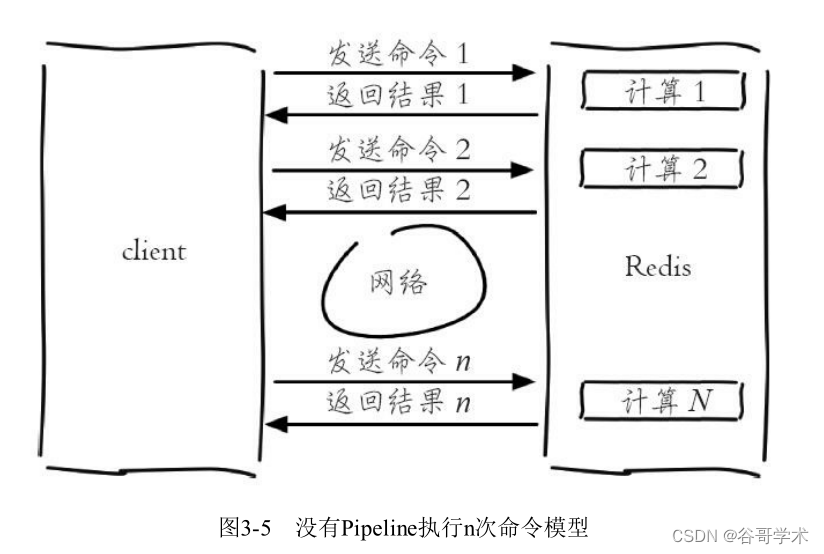

- Redis démarrer le tutoriel complet: Pipeline

- debug和release的区别

- P2181 diagonal and p1030 [noip2001 popularization group] arrange in order

- The initial trial is the cross device model upgrade version of Ruijie switch (taking rg-s2952g-e as an example)

- 【图论】拓扑排序

- ECS settings SSH key login

- The small program vant tab component solves the problem of too much text and incomplete display

- A complete tutorial for getting started with redis: transactions and Lua

- Redis入門完整教程:Pipeline

- HMS core unified scanning service

猜你喜欢

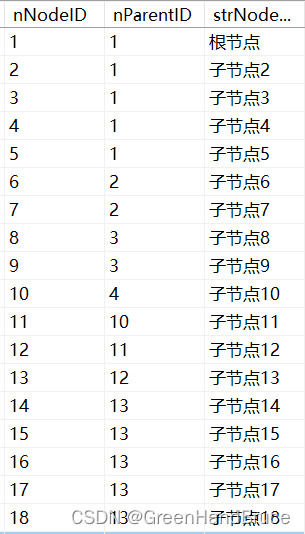

qt绘制网络拓补图(连接数据库,递归函数,无限绘制,可拖动节点)

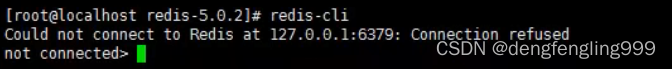

Redis: redis configuration file related configuration and redis persistence

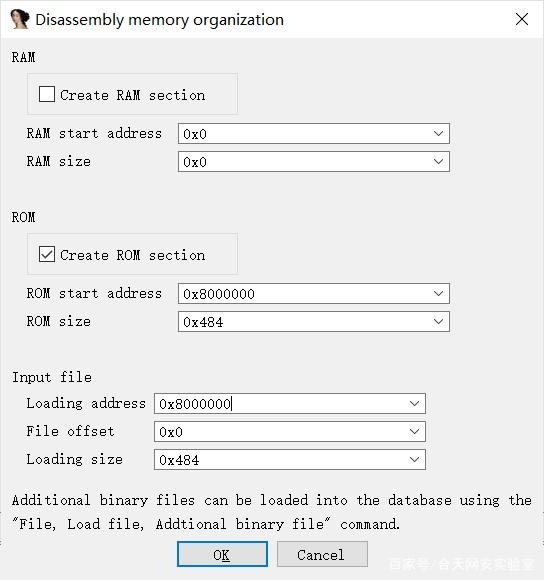

CTF competition problem solution STM32 reverse introduction

![[graph theory] topological sorting](/img/2c/238ab5813fb46a3f14d8de465b8999.png)

[graph theory] topological sorting

Redis入門完整教程:Pipeline

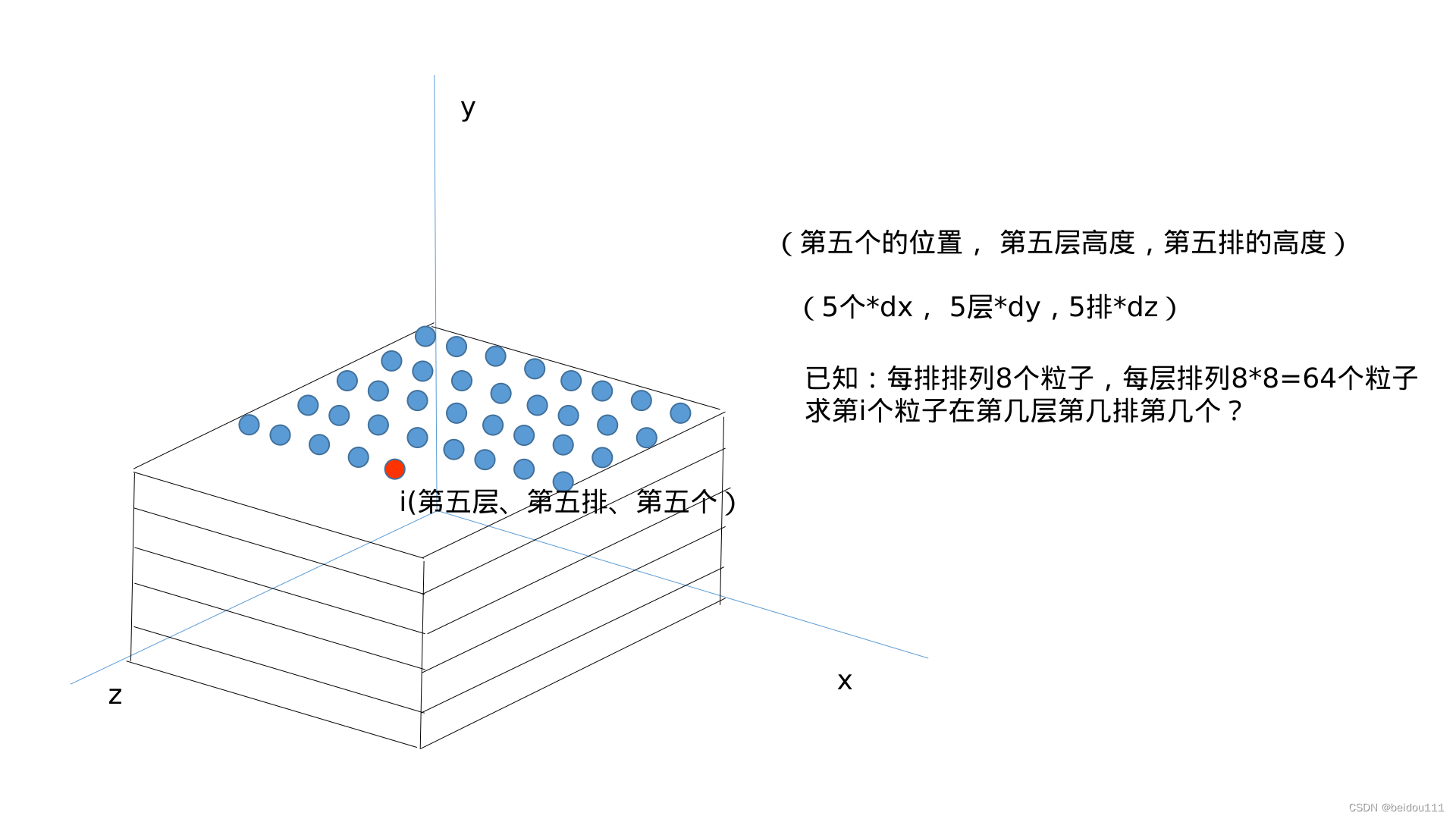

SPH中的粒子初始排列问题(两张图解决)

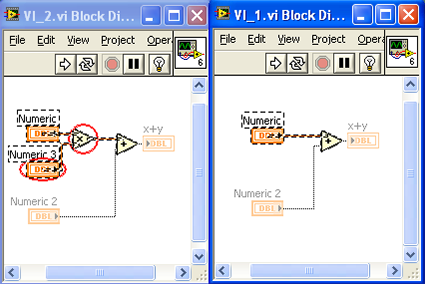

Compare two vis in LabVIEW

![P2181 对角线和P1030 [NOIP2001 普及组] 求先序排列](/img/79/36c46421bce08284838f68f11cda29.png)

P2181 对角线和P1030 [NOIP2001 普及组] 求先序排列

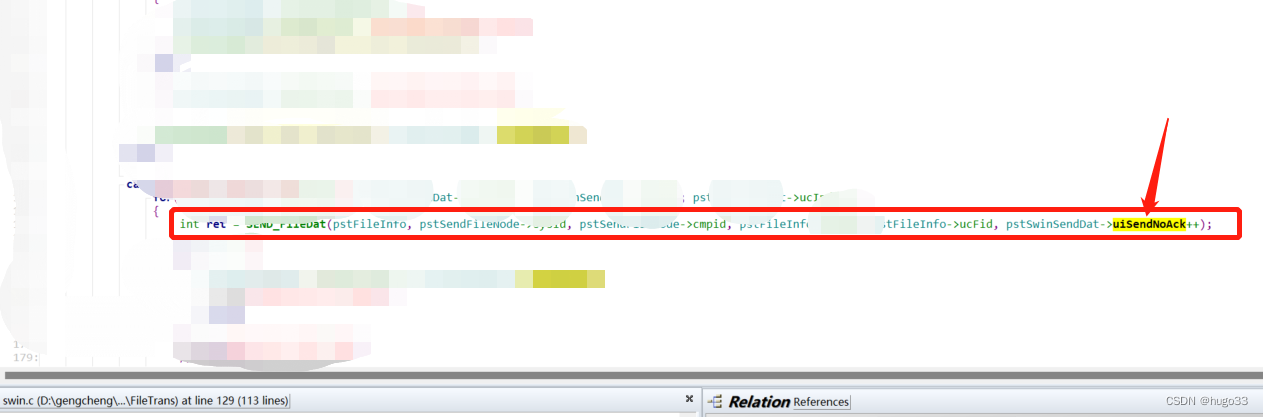

Analysis of the self increasing and self decreasing of C language function parameters

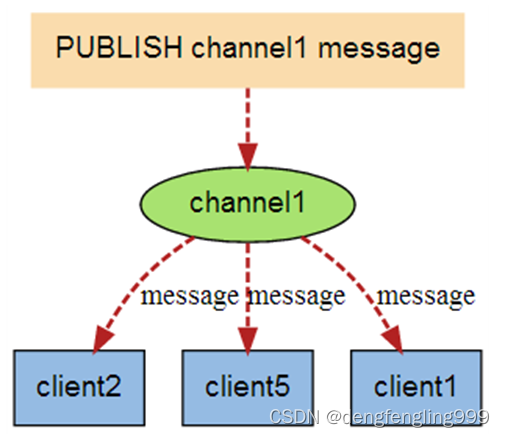

Redis: redis message publishing and subscription (understand)

随机推荐

机器学习在房屋价格预测上的应用

Redis introduction complete tutorial: detailed explanation of ordered collection

List related knowledge points to be sorted out

该如何去选择证券公司,手机上开户安不安全

LIst 相关待整理的知识点

Excel shortcut keys - always add

QT addition calculator (simple case)

MariaDB的Galera集群-双主双活安装设置

小程序vant tab组件解决文字过多显示不全的问题

Async await used in map

高通WLAN框架学习(30)-- 支持双STA的组件

位运算符讲解

The small program vant tab component solves the problem of too much text and incomplete display

Principle of lazy loading of pictures

Actual combat simulation │ JWT login authentication

Photoshop批量给不同的图片添加不同的编号

ICML 2022 || 3DLinker: 用于分子链接设计的E(3)等变变分自编码器

CTF競賽題解之stm32逆向入門

[Jianzhi offer] 6-10 questions

The caching feature of docker image and dockerfile