
【Mixed Pooling】《Mixed Pooling for Convolutional Neural Networks》

2022-07-02 07:44:00 【bryant_ meng】

RSKT-2014

International Conference on Rough Sets and Knowledge Technology


1 Background and Motivation

Roles of the pooling layer (from the article "9 pooling methods in deep learning, all in one read!"):

- Increases the network's receptive field

- Suppresses noise and reduces information redundancy

- Reduces the model's computation and the difficulty of optimization, and helps prevent overfitting

- Makes the model more robust to changes in the position of features in the input image

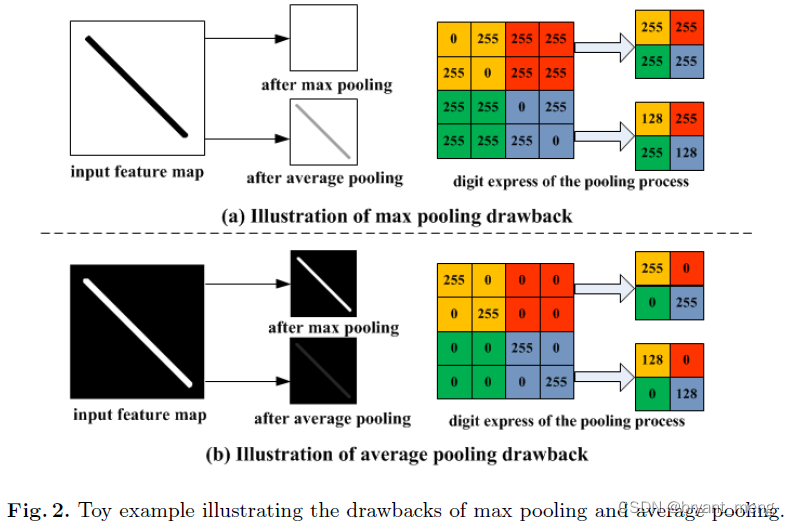

To address the shortcomings of max and average pooling, the authors propose mixed pooling, which "randomly employs the local max pooling and average pooling methods when training CNNs".

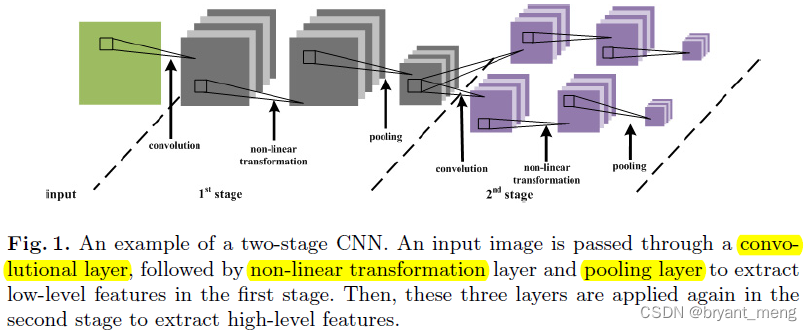

2 Review of Convolutional Neural Networks

- Convolutional layer, which includes the convolution operation and the activation function

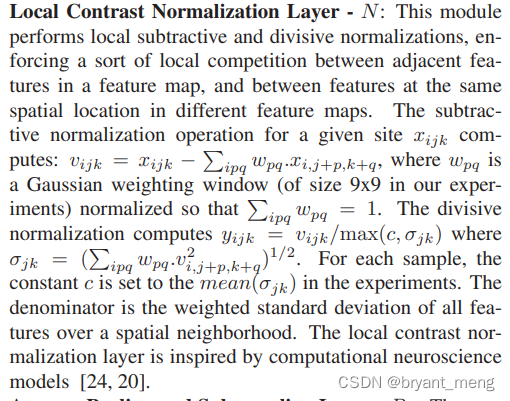

- Non-linear transformation layer, i.e. the normalization layer. BN and similar methods are the popular choice today; earlier options were LCN (local contrast normalization) and AlexNet's LRN (local response normalization). PS: the LCN formula in the paper contains an error, and the LRN formula differs in its details from the original paper, although its form is basically the same.

- Feature Pooling Layer

3 Advantages / Contributions

Inspired by dropout, the authors blend max and average pooling and propose mixed pooling.

4 Method

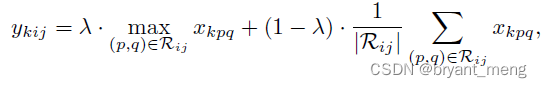

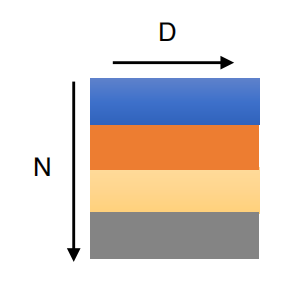

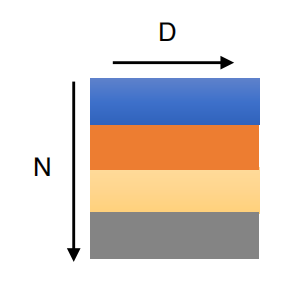

1)Mixed pooling formula

$\lambda$ is a random value being either 0 or 1

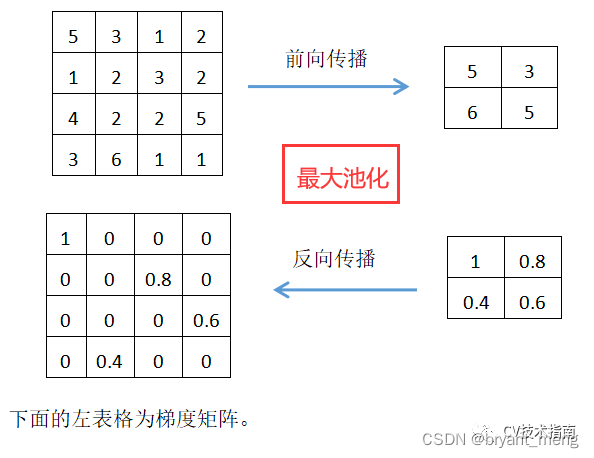
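As a rough illustration of the formula, the sketch below applies mixed pooling to a single 2-D feature map in plain Python. It assumes that the output for each pooling region is $\lambda \cdot \max + (1 - \lambda) \cdot \text{mean}$ over that region, and that one $\lambda$ is drawn per forward call. The function name `mixed_pool2d` and its parameters are made up for this note and are not taken from the paper.

```python
import random


def mixed_pool2d(feature_map, size=2, stride=2, lam=None):
    """Mixed pooling over one 2-D feature map given as a list of rows.

    lam = 1 selects max pooling, lam = 0 selects average pooling.
    If lam is None it is drawn at random (one draw per call, which is
    an assumption of this sketch). Returns (output, lam) so that the
    caller can record lam for the backward pass.
    """
    if lam is None:
        lam = random.randint(0, 1)
    rows, cols = len(feature_map), len(feature_map[0])
    output = []
    for i in range(0, rows - size + 1, stride):
        out_row = []
        for j in range(0, cols - size + 1, stride):
            # Collect the values of the current pooling region.
            region = [feature_map[p][q]
                      for p in range(i, i + size)
                      for q in range(j, j + size)]
            out_row.append(lam * max(region)
                           + (1 - lam) * sum(region) / len(region))
        output.append(out_row)
    return output, lam


x = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12],
     [13, 14, 15, 16]]
pooled_max, _ = mixed_pool2d(x, lam=1)  # behaves like max pooling
pooled_ave, _ = mixed_pool2d(x, lam=0)  # behaves like average pooling
```

Passing `lam` explicitly makes the two extreme cases easy to compare; leaving it as `None` gives the random behaviour used during training.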

2)Mixed pooling back propagation

First, a look at back propagation for max and average pooling

max pooling

(Image taken from the internet; it will be removed on request if it infringes.)

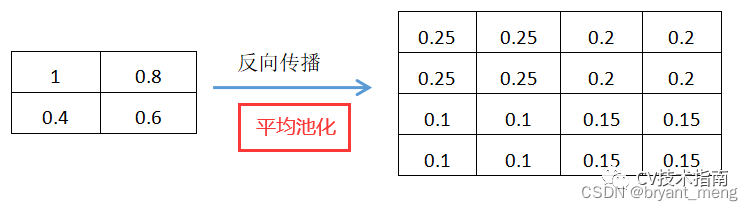

ave pooling

(Image taken from the internet; it will be removed on request if it infringes.)

mixed pooling

The value of $\lambda$ has to be recorded so that back propagation can be carried out correctly:

"the pooling history about the random value $\lambda$ in Eq. must be recorded during forward propagation."
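A minimal sketch of why the recorded $\lambda$ matters, in plain Python: with $\lambda = 1$ the gradient of each output goes only to the position of the maximum in its region, and with $\lambda = 0$ it is shared equally among all positions of the region. The function name `mixed_pool2d_backward` and its arguments are hypothetical, and ties in the maximum are resolved by taking the first maximal position, which is a choice of this sketch.

```python
def mixed_pool2d_backward(feature_map, grad_output, lam, size=2, stride=2):
    """Gradient of mixed pooling with respect to its input.

    feature_map: the input seen in the forward pass (list of rows).
    grad_output: gradient of the loss with respect to each pooled value.
    lam: the value recorded in the forward pass (1 = max, 0 = average).
    """
    rows, cols = len(feature_map), len(feature_map[0])
    grad_input = [[0.0] * cols for _ in range(rows)]
    for oi, i in enumerate(range(0, rows - size + 1, stride)):
        for oj, j in enumerate(range(0, cols - size + 1, stride)):
            g = grad_output[oi][oj]
            positions = [(p, q)
                         for p in range(i, i + size)
                         for q in range(j, j + size)]
            if lam == 1:
                # Max pooling: only the arg-max position receives gradient.
                p, q = max(positions,
                           key=lambda pq: feature_map[pq[0]][pq[1]])
                grad_input[p][q] += g
            else:
                # Average pooling: the gradient is split evenly.
                for p, q in positions:
                    grad_input[p][q] += g / len(positions)
    return grad_input


x = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12],
     [13, 14, 15, 16]]
ones = [[1.0, 1.0], [1.0, 1.0]]
grad_if_max = mixed_pool2d_backward(x, ones, lam=1)
grad_if_ave = mixed_pool2d_backward(x, ones, lam=0)
```

The same upstream gradient produces two different input gradients, so using the wrong $\lambda$ in the backward pass would update the network as if a different pooling had been applied.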

3)Pooling at Test Time

During training, count how often max and average pooling are used, giving the frequencies $F_{max}^{k}$ and $F_{ave}^{k}$. At test time, that pooling layer simply uses whichever one was used more often. This is starting to feel like black magic, haha.
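The test-time rule can be sketched as a simple count over the recorded $\lambda$ values of one pooling layer. The helper `choose_test_pooling` is a hypothetical name, and breaking ties in favour of max pooling is an assumption of this sketch.

```python
from collections import Counter


def choose_test_pooling(lambda_history):
    """Pick the pooling type for test time from recorded lambda values.

    lambda_history: iterable of 0/1 values recorded during training
    (1 = max pooling was used, 0 = average pooling was used).
    """
    counts = Counter(lambda_history)
    f_max, f_ave = counts[1], counts[0]
    # Assumption: a tie goes to max pooling.
    return "max" if f_max >= f_ave else "ave"


mostly_max = choose_test_pooling([1, 0, 1, 1, 0])
mostly_ave = choose_test_pooling([0, 0, 1])
```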

5 Experiments

5.1 Datasets

- CIFAR-10

- CIFAR-100

- SVHN

5.2 Experimental Results

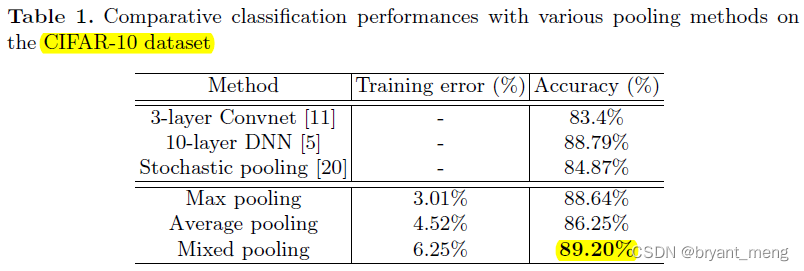

1)CIFAR-10

Mixed pooling has a higher training error, yet its accuracy is also higher.

The authors explain: "This indicates that the proposed mixed pooling outperforms max pooling and average pooling to address the over-fitting problem"

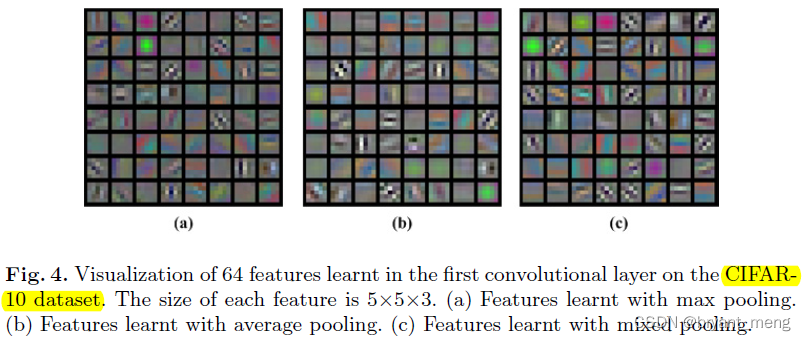

Visualization results

The visualization suggests that the mixed pooling features contain more information.

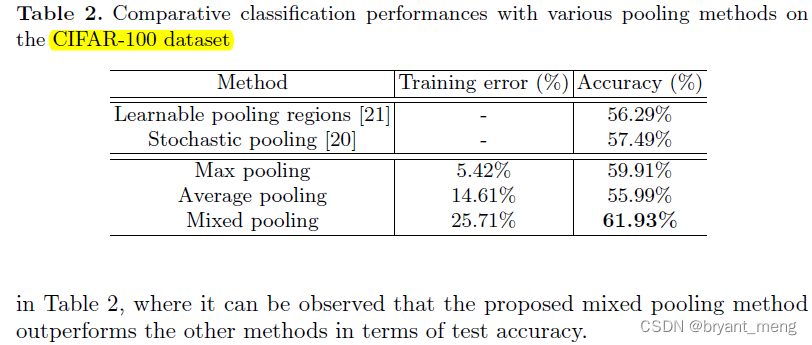

2)CIFAR-100

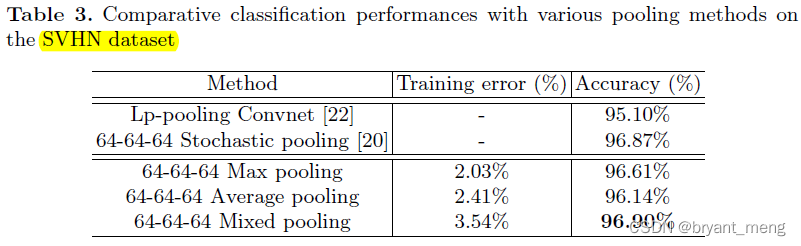

3)SVHN

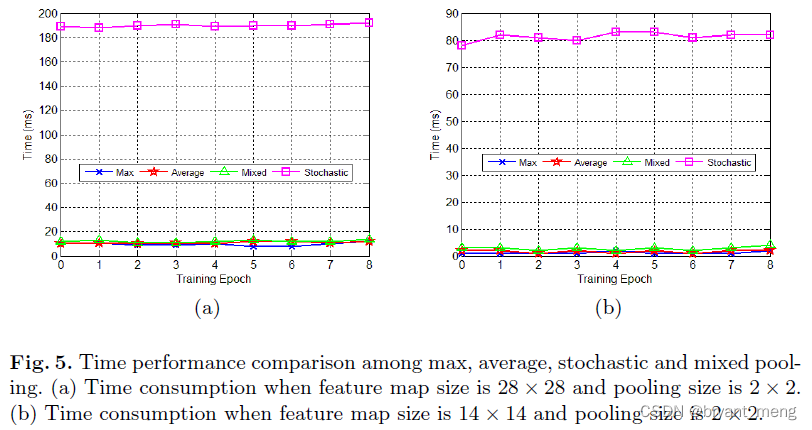

4)Time Performance

6 Conclusion (own) / Future work

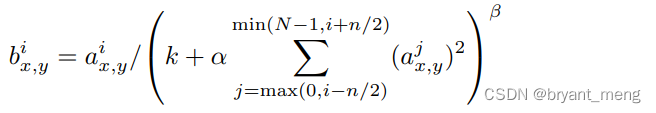

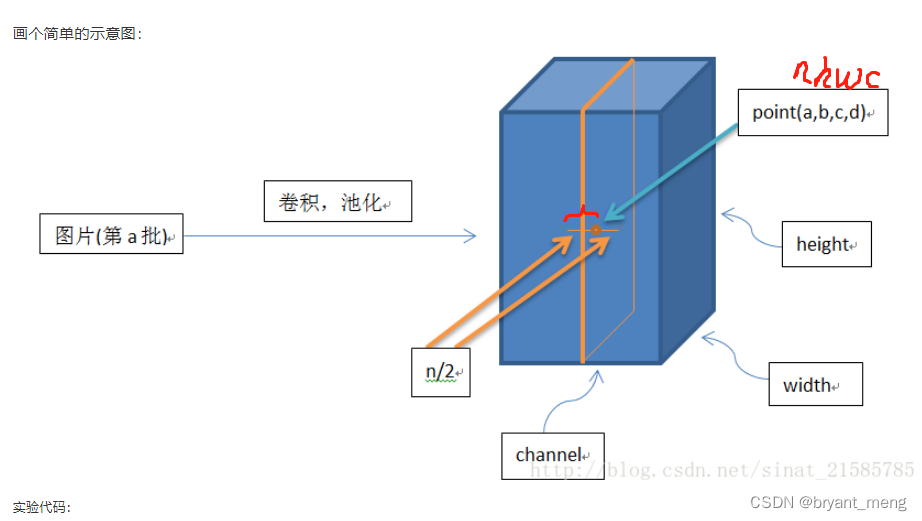

LRN

$k, n, \alpha, \beta$ are all hyperparameters; $a, b$ are the input and output feature maps; $x, y$ is the spatial position; $i$ is the channel index.

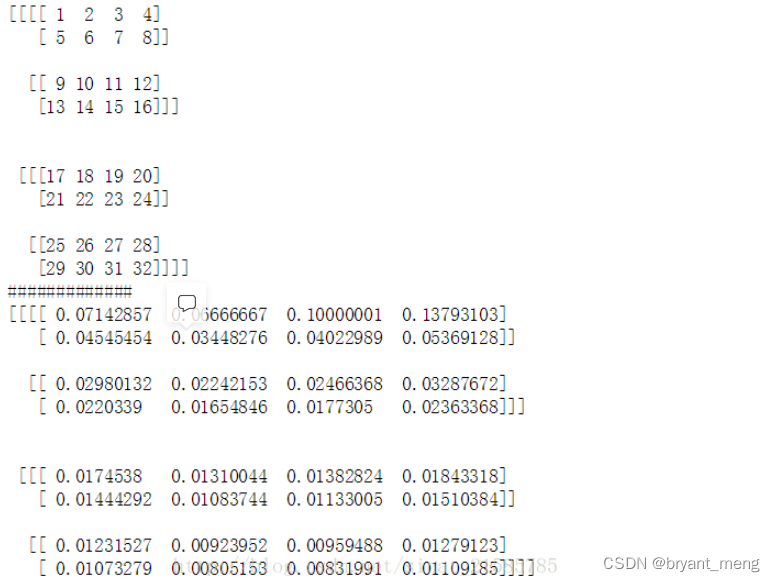

The following code is from the article "Understanding Local Response Normalization (LRN) in deep learning":

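```python
import numpy as np
import tensorflow as tf  # written for TensorFlow 1.x (uses tf.Session)

# NHWC input: batch=2, height=2, width=2, channels=4, holding the values 1..32.
# LRN works on floating-point tensors, so build the array as float32.
x = np.arange(1, 33, dtype=np.float32).reshape([2, 2, 2, 4])

# Normalize each value by the sum of squares of its neighbours along the channel axis.
y = tf.nn.lrn(input=x, depth_radius=2, bias=0, alpha=1, beta=1)

with tf.Session() as sess:
    print(x)
    print('#############')
    print(y.eval())
```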
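To see what the `tf.nn.lrn` call computes, here is a small pure-Python sketch for the channel values at a single spatial position. It follows the computation described in the TensorFlow documentation: each value is divided by `(bias + alpha * sqr_sum) ** beta`, where `sqr_sum` is the sum of squares over the channels within `depth_radius` of the current one. The helper name `lrn_channels` is made up for this note.

```python
def lrn_channels(values, depth_radius=2, bias=0.0, alpha=1.0, beta=1.0):
    """Local response normalization across the channels of one position."""
    out = []
    for d, v in enumerate(values):
        # Window of neighbouring channels, clipped at the borders.
        lo = max(0, d - depth_radius)
        hi = min(len(values), d + depth_radius + 1)
        sqr_sum = sum(u * u for u in values[lo:hi])
        out.append(v / (bias + alpha * sqr_sum) ** beta)
    return out


# Channels of the first spatial position in the example above: 1, 2, 3, 4.
first_position = lrn_channels([1.0, 2.0, 3.0, 4.0])
# first_position[0] is 1 / (1 + 4 + 9) = 1/14
# first_position[3] is 4 / (4 + 9 + 16) = 4/29
```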

LCN

《What is the best multi-stage architecture for object recognition?》

边栏推荐

- Find in laravel8_ in_ Usage of set and upsert

- How to efficiently develop a wechat applet

- Mmdetection model fine tuning

- Tencent machine test questions

- [model distillation] tinybert: distilling Bert for natural language understanding

- Huawei machine test questions

- 【Cutout】《Improved Regularization of Convolutional Neural Networks with Cutout》

- PHP returns the corresponding key value according to the value in the two-dimensional array

- Huawei machine test questions-20190417

- PHP uses the method of collecting to insert a value into the specified position in the array

猜你喜欢

Point cloud data understanding (step 3 of pointnet Implementation)

iOD及Detectron2搭建过程问题记录

How do vision transformer work?【论文解读】

![[introduction to information retrieval] Chapter 1 Boolean retrieval](/img/78/df4bcefd3307d7cdd25a9ee345f244.png)

[introduction to information retrieval] Chapter 1 Boolean retrieval

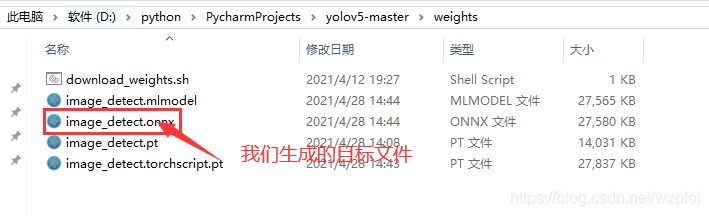

Implementation of yolov5 single image detection based on onnxruntime

Faster-ILOD、maskrcnn_ Benchmark installation process and problems encountered

【Paper Reading】

传统目标检测笔记1__ Viola Jones

![[medical] participants to medical ontologies: Content Selection for Clinical Abstract Summarization](/img/24/09ae6baee12edaea806962fc5b9a1e.png)

[medical] participants to medical ontologies: Content Selection for Clinical Abstract Summarization

点云数据理解(PointNet实现第3步)

随机推荐

One field in thinkphp5 corresponds to multiple fuzzy queries

label propagation 标签传播

[introduction to information retrieval] Chapter II vocabulary dictionary and inverted record table

A slide with two tables will help you quickly understand the target detection

Translation of the paper "written mathematical expression recognition with bidirectionally trained transformer"

Mmdetection trains its own data set -- export coco format of cvat annotation file and related operations

Common machine learning related evaluation indicators

常见的机器学习相关评价指标

[medical] participants to medical ontologies: Content Selection for Clinical Abstract Summarization

Classloader and parental delegation mechanism

传统目标检测笔记1__ Viola Jones

Calculate the total in the tree structure data in PHP

win10+vs2017+denseflow编译

What if a new window always pops up when opening a folder on a laptop

点云数据理解(PointNet实现第3步)

Drawing mechanism of view (3)

CONDA creates, replicates, and shares virtual environments

Regular expressions in MySQL

MMDetection模型微调

[torch] the most concise logging User Guide