
【FastDepth】《FastDepth:Fast Monocular Depth Estimation on Embedded Systems》

2022-07-02 06:26:00 【bryant_meng】

ICRA-2019


1 Background and Motivation

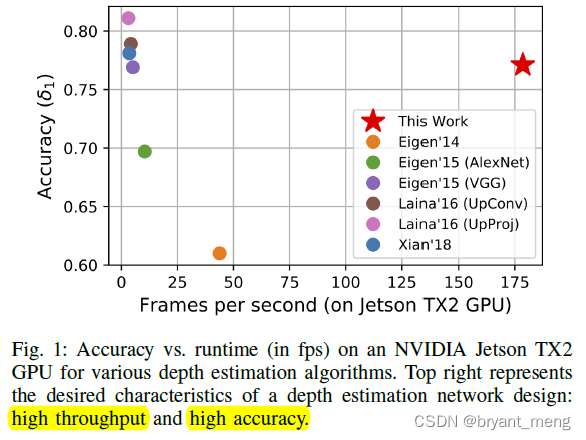

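Speed up existing monocular depth estimation models so that they run with low latency without losing much accuracy, making it possible to deploy them on a micro aerial vehicle to support robotic tasks such as mapping, localization, and obstacle avoidance.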

2 Related Work

- Monocular Depth Estimation

- Efficient Neural Networks

- Network Pruning

3 Advantages / Contributions

Accelerating monocular depth estimation models:

- a low-complexity and low-latency decoder design

- a state-of-the-art pruning algorithm (NetAdapt pruning)

- Hardware-specific compilation (deployment with TVM, optimizing DWConv)

4 Method

1) Overall architecture

A plain, no-frills U-Net structure. The skip connections use addition rather than concatenation, to avoid increasing the number of feature map channels.
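To make the channel-count argument concrete, here is a minimal pure-Python sketch. The feature-map shapes and the helper names (`make_feature_map`, `skip_add`, `skip_concat`) are made up for illustration and are not taken from the FastDepth code. It only shows that additive skips keep the channel count unchanged, while concatenation doubles it.

```python
# Toy feature maps stored as [channel][row][col] nested lists.
# Shapes and values are hypothetical, chosen only for illustration.

def make_feature_map(channels, height, width, value):
    """Build a constant feature map of the given shape."""
    return [[[value] * width for _ in range(height)] for _ in range(channels)]


def skip_add(decoder_feat, encoder_feat):
    """Element-wise addition: the output keeps the same channel count."""
    return [
        [[d + e for d, e in zip(d_row, e_row)] for d_row, e_row in zip(d_ch, e_ch)]
        for d_ch, e_ch in zip(decoder_feat, encoder_feat)
    ]


def skip_concat(decoder_feat, encoder_feat):
    """Channel-wise concatenation: the output channel count is the sum."""
    return decoder_feat + encoder_feat


decoder_feat = make_feature_map(4, 2, 2, 1.0)
encoder_feat = make_feature_map(4, 2, 2, 0.5)

added = skip_add(decoder_feat, encoder_feat)
concatenated = skip_concat(decoder_feat, encoder_feat)

print(len(added), len(concatenated))  # 4 8
```

With concatenation, the next decoder convolution would see twice as many input channels, which is the extra cost the additive design avoids.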

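Details of the upsample layer:

conv5 (a 5×5 depthwise separable convolution) + nearest-neighbor interpolation (simpler and more generic to implement at a low level than bilinear interpolation)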

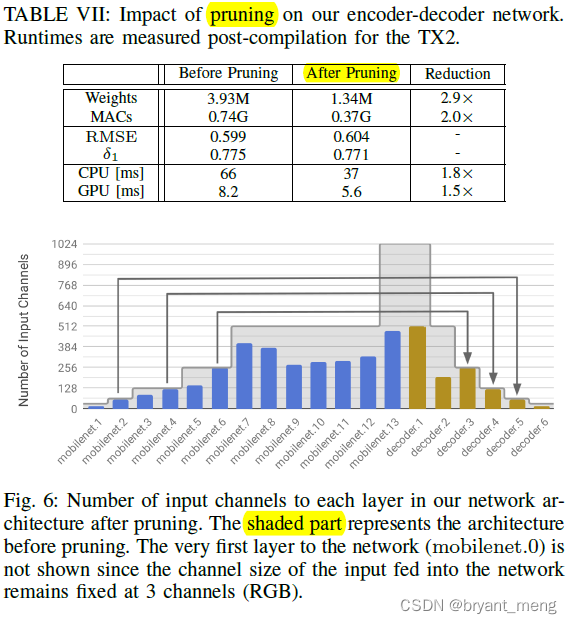
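The interpolation half of the upsample layer can be sketched in a few lines of pure Python. This assumes 2x nearest-neighbor upsampling (the variant the ablation section refers to as nearest neighbor interpolation). The function name and the tiny input are hypothetical, and the convolution step is left out.

```python
def nearest_upsample_2x(channel):
    """Upsample one 2-D channel by 2x: every pixel is copied into a 2x2 block."""
    out = []
    for row in channel:
        # Repeat each value twice along the width ...
        wide = [value for value in row for _ in range(2)]
        # ... then emit the widened row twice along the height.
        out.append(wide)
        out.append(list(wide))
    return out


feature = [[1, 2],
           [3, 4]]

upsampled = nearest_upsample_2x(feature)
for row in upsampled:
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

There is no arithmetic here, only copying, which is why this operation is easy to implement on almost any backend.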

2) Network Pruning

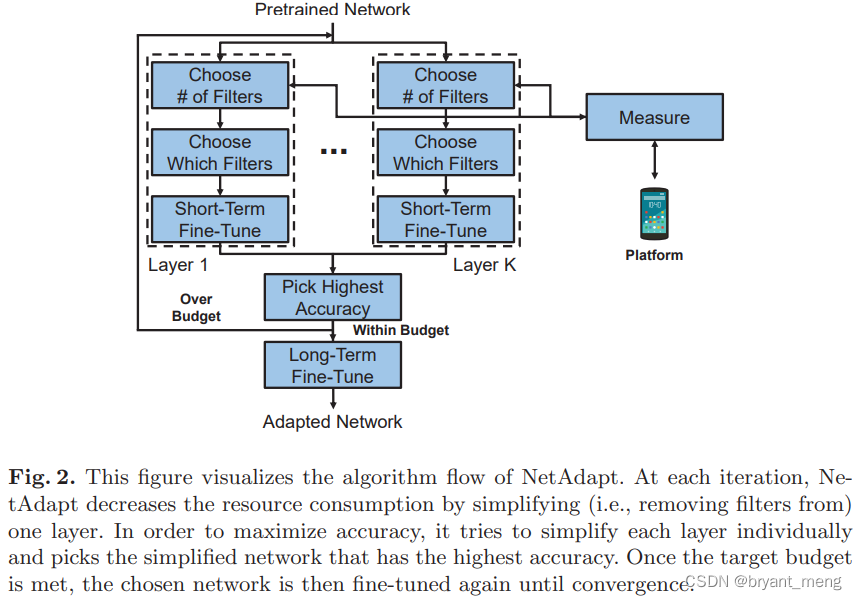

Pruning is done with the NetAdapt method:

《NetAdapt: Platform-Aware Neural Network Adaptation for Mobile Applications》

The approach is fairly brute-force and direct; the figure below makes it more intuitive.

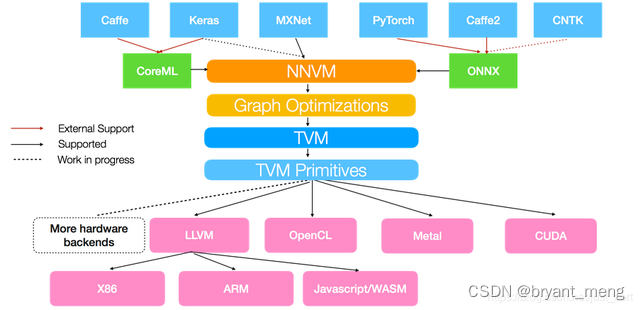
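The brute-force flavor of NetAdapt can be illustrated with a toy greedy loop. Everything numeric here is invented: the layer names, the per-filter latency costs, and the accuracy proxy are stand-ins for what the real method obtains from on-device measurements and short-term fine-tuning. The sketch only captures the outer loop: in each iteration, every layer proposes a pruned network that meets a slightly tighter latency budget, and the proposal with the best accuracy is kept.

```python
import math

# Hypothetical numbers, invented for illustration only.
ORIGINAL = {"conv1": 32, "conv2": 64, "conv3": 128}               # filters per layer
COST_PER_FILTER = {"conv1": 0.10, "conv2": 0.05, "conv3": 0.02}   # ms per filter
SENSITIVITY = {"conv1": 0.30, "conv2": 0.10, "conv3": 0.20}       # accuracy proxy weights
MIN_FILTERS = 1  # never prune a layer down to zero filters


def latency(filters):
    """Stand-in for an on-device measurement or latency lookup table."""
    return sum(COST_PER_FILTER[name] * n for name, n in filters.items())


def accuracy(filters, original):
    """Stand-in for short-term fine-tuning followed by evaluation."""
    loss = 0.0
    for name, n in filters.items():
        removed_fraction = (original[name] - n) / original[name]
        loss += SENSITIVITY[name] * removed_fraction ** 2
    return 1.0 - loss


def netadapt_sketch(original, target_latency, step):
    """Greedy loop: tighten the latency budget by `step` until the target is met."""
    current = dict(original)
    while latency(current) > target_latency:
        proposals = []
        for name in current:
            # Fewest filters to remove from this layer alone to save `step` ms.
            need = math.ceil(step / COST_PER_FILTER[name] - 1e-9)
            if current[name] - need < MIN_FILTERS:
                continue  # this layer cannot absorb the reduction by itself
            candidate = dict(current)
            candidate[name] -= need
            proposals.append(candidate)
        if not proposals:
            break  # no single layer can meet the budget any more
        # Keep the proposal that hurts the accuracy proxy the least.
        current = max(proposals, key=lambda f: accuracy(f, original))
    return current


pruned = netadapt_sketch(ORIGINAL, target_latency=6.0, step=0.5)
print(pruned, round(latency(pruned), 2))
```

In the actual method, the selection of which filters to drop within a layer and the final long-term fine-tuning also matter; both are omitted here.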

3) Network Compilation

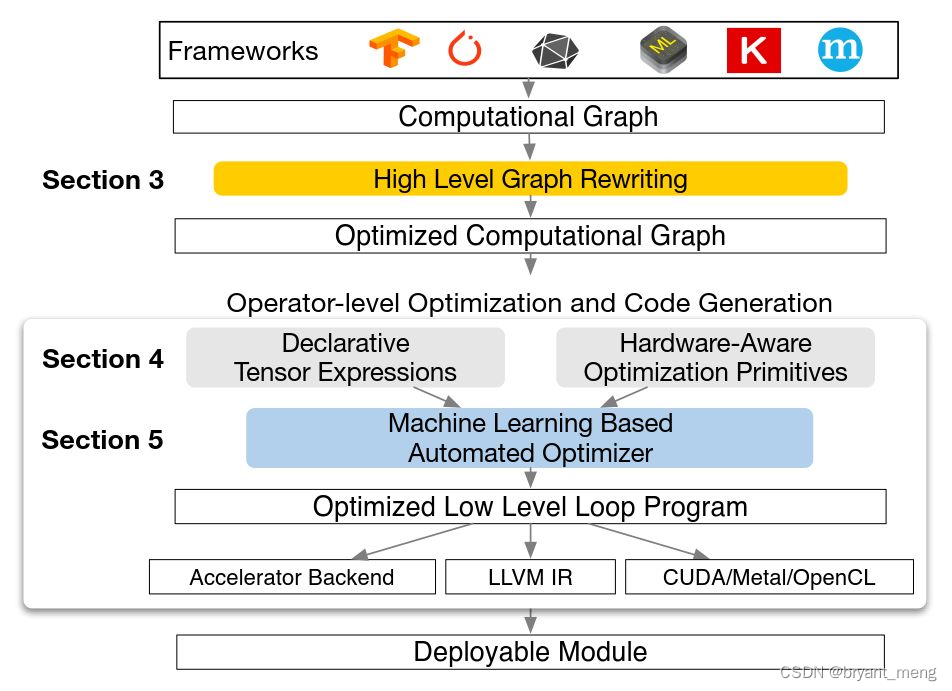

TVM is used to accelerate DWConv (depthwise convolution).

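For reference:

TVM is an open-source compiler framework that supports code generation for GPUs, CPUs, and FPGAs.

TVM's defining feature is that it optimizes code generation based on graph-level and operator-level structure to maximize hardware execution efficiency. Upward, it interfaces with deep learning frameworks such as TensorFlow and PyTorch; downward, it targets hardware such as GPUs, CPUs, ARM devices, and TPUs.

TVM is an end-to-end code generator: it takes a model from a deep learning framework as input, performs graph transformations and basic optimizations, and finally generates code for deployment on the hardware.

TVM has two main features:

- It compiles deep learning models from Keras, MXNet, PyTorch, TensorFlow, CoreML, and DarkNet into minimal deployable modules for a variety of hardware backends.

- It automatically generates and optimizes tensor operators for multiple backends to achieve better performance.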

(The original post shows three overview diagrams of the TVM framework here.)

5 Experiments

5.1 Datasets

Evaluation metrics:

$\delta_1$ (the percentage of predicted pixels where the relative error is within 25%): higher is better

RMSE (root mean squared error): lower is better
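Both metrics are easy to compute by hand. The sketch below uses the common definition of $\delta_1$ in the depth estimation literature, namely the fraction of pixels with $\max(\hat{d}/d,\ d/\hat{d}) < 1.25$, and treats a depth map as a flat list of positive values. The sample numbers are made up.

```python
import math


def delta1(pred, gt, threshold=1.25):
    """Fraction of pixels whose ratio max(pred/gt, gt/pred) is below the threshold."""
    hits = sum(1 for p, g in zip(pred, gt) if max(p / g, g / p) < threshold)
    return hits / len(gt)


def rmse(pred, gt):
    """Root mean squared error between predicted and ground-truth depth."""
    squared_error = sum((p - g) ** 2 for p, g in zip(pred, gt))
    return math.sqrt(squared_error / len(gt))


# Hypothetical depths in meters; the third pixel is off by 50%.
gt = [1.0, 2.0, 4.0, 8.0]
pred = [1.1, 2.0, 6.0, 8.0]

print(delta1(pred, gt))          # 0.75
print(round(rmse(pred, gt), 3))  # 1.001
```

Note that a single large error (6 m predicted vs. 4 m true) dominates the RMSE while costing only one pixel in $\delta_1$, which is why the two metrics are reported together.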

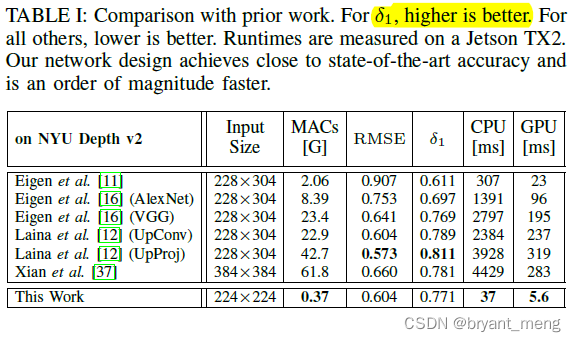

5.2 Final Results and Comparison With Prior Work

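Experimental platform:

The NVIDIA Jetson TX2 series of modules delivers strong speed and power efficiency for embedded AI computing devices. Each module is equipped with an NVIDIA Pascal GPU, up to 8 GB of memory, 59.7 GB/s of memory bandwidth, and a range of standard hardware interfaces, bringing AI computing to the edge.

Compared with the encoder, the decoder accounts for more of the runtime, so it is the main target for optimization.

Comparison with other methods on the Jetson TX2 in high performance (max-N) mode: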

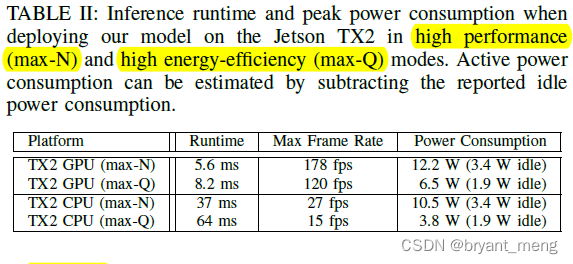

Results on the Jetson TX2 in high energy-efficiency (max-Q) mode:

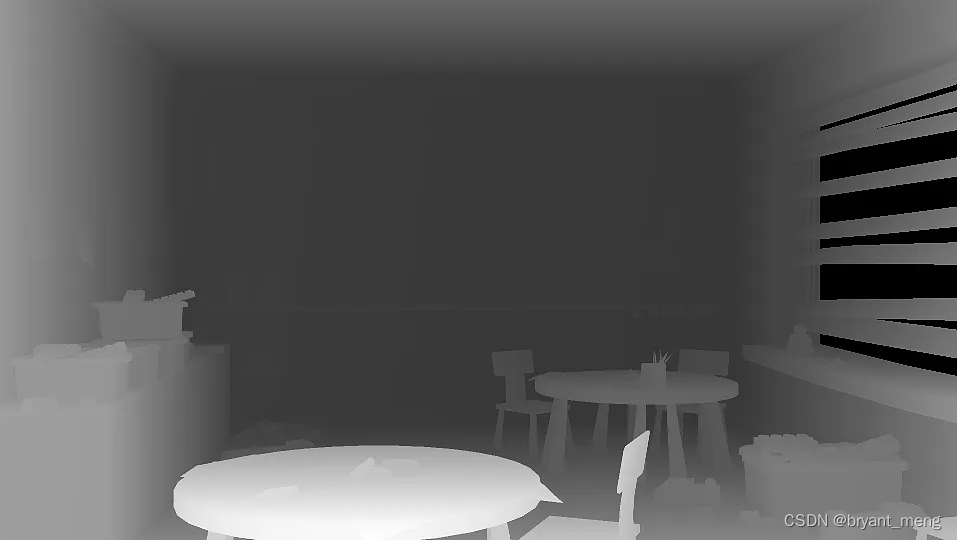

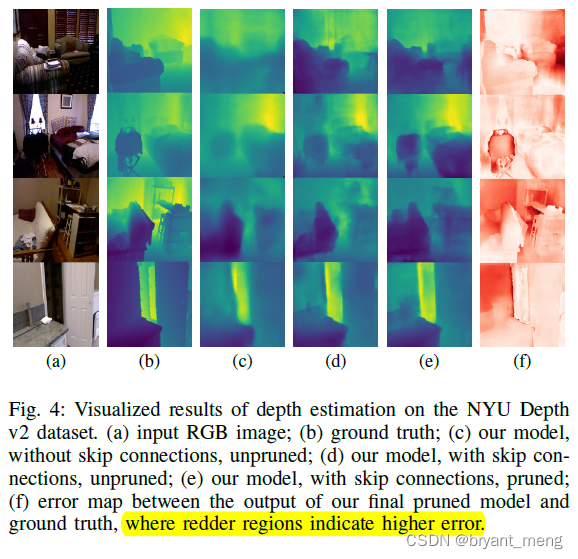

Visualization results are shown below; the error is highest at boundaries and at distant objects.

The difference between (c) and (d) is the skip connections; (d) is much more refined.

5.3 Ablation Study

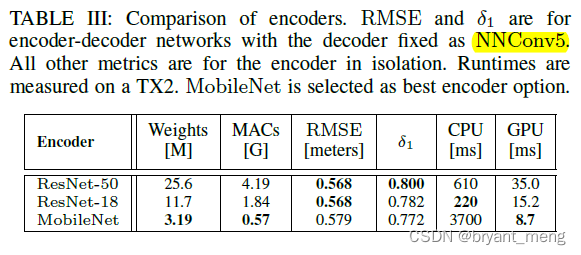

1) Encoder Design Space

MobileNet is chosen as the encoder, since it offers the best trade-off between speed and accuracy.

2) Decoder Design Space

Upsample Operation, i.e., the upsample layer in Figure 2

The upsample operations in (a) and (b) use zero-insertion, while (d) uses nearest neighbor interpolation.

Depthwise Separable Convolution and Skip Connections

3) Hardware-Specific Optimization

Hardware-specific compilation brings the measured speedup of DWConv closer to its theoretical reduction in computation.
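For a sense of what the theoretical reduction looks like, the multiply-accumulate (MAC) counts of a standard convolution and a depthwise separable one can be compared directly. The layer shape below is hypothetical, not taken from the paper; the ratio follows the well-known $1/C_{out} + 1/K^2$ formula.

```python
def standard_conv_macs(h, w, k, c_in, c_out):
    """MACs of a standard k x k convolution on an h x w output map."""
    return h * w * k * k * c_in * c_out


def depthwise_separable_macs(h, w, k, c_in, c_out):
    """MACs of a k x k depthwise convolution followed by a 1x1 pointwise one."""
    depthwise = h * w * k * k * c_in
    pointwise = h * w * c_in * c_out
    return depthwise + pointwise


# Hypothetical decoder layer: 14x14 output, 5x5 kernel, 512 -> 256 channels.
h, w, k, c_in, c_out = 14, 14, 5, 512, 256

standard = standard_conv_macs(h, w, k, c_in, c_out)
separable = depthwise_separable_macs(h, w, k, c_in, c_out)
ratio = separable / standard

print(f"MAC ratio: {ratio:.4f}, about {1 / ratio:.1f}x fewer MACs")
```

A MAC count is only an upper bound on the achievable speedup: if the depthwise operator is poorly implemented on the target device, the measured runtime falls well short of it, which is the gap the compilation step aims to close.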

4) Network Pruning

6 Conclusion(own) / Future work

It reads more like a technical report for a competition!

code:https://github.com/dwofk/fast-depth

边栏推荐

猜你喜欢

iOD及Detectron2搭建过程问题记录

mmdetection训练自己的数据集--CVAT标注文件导出coco格式及相关操作

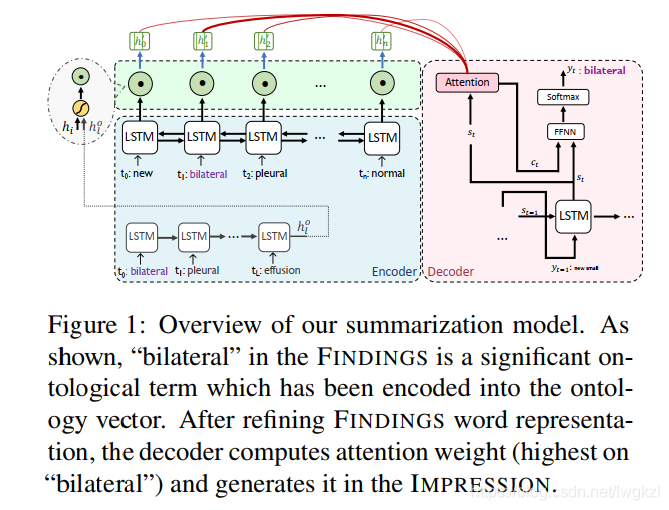

【MEDICAL】Attend to Medical Ontologies: Content Selection for Clinical Abstractive Summarization

【Programming】

Record of problems in the construction process of IOD and detectron2

常见的机器学习相关评价指标

Sparksql data skew

SSM laboratory equipment management

【信息检索导论】第七章搜索系统中的评分计算

腾讯机试题

随机推荐

【Ranking】Pre-trained Language Model based Ranking in Baidu Search

Translation of the paper "written mathematical expression recognition with bidirectionally trained transformer"

【Programming】

解决latex图片浮动的问题

【多模态】CLIP模型

生成模型与判别模型的区别与理解

Huawei machine test questions

Play online games with mame32k

Using MATLAB to realize: power method, inverse power method (origin displacement)

SSM student achievement information management system

Using MATLAB to realize: Jacobi, Gauss Seidel iteration

SSM garbage classification management system

[paper introduction] r-drop: regulated dropout for neural networks

Common CNN network innovations

Optimization method: meaning of common mathematical symbols

【Hide-and-Seek】《Hide-and-Seek: A Data Augmentation Technique for Weakly-Supervised Localization xxx》

latex公式正体和斜体

【MEDICAL】Attend to Medical Ontologies: Content Selection for Clinical Abstractive Summarization

Pointnet understanding (step 4 of pointnet Implementation)

《Handwritten Mathematical Expression Recognition with Bidirectionally Trained Transformer》论文翻译