Introduction to YARN (one article is enough)

2022-07-07 05:57:00 【Yang Linwei】

01 Introduction

Reference: 《Yarn [architecture, principles, multi-queue configuration]》

YARN is a resource scheduling platform. It provides server computing resources to computing programs, which makes it comparable to a distributed operating system; MapReduce and similar frameworks are then the applications that run on top of that operating system.

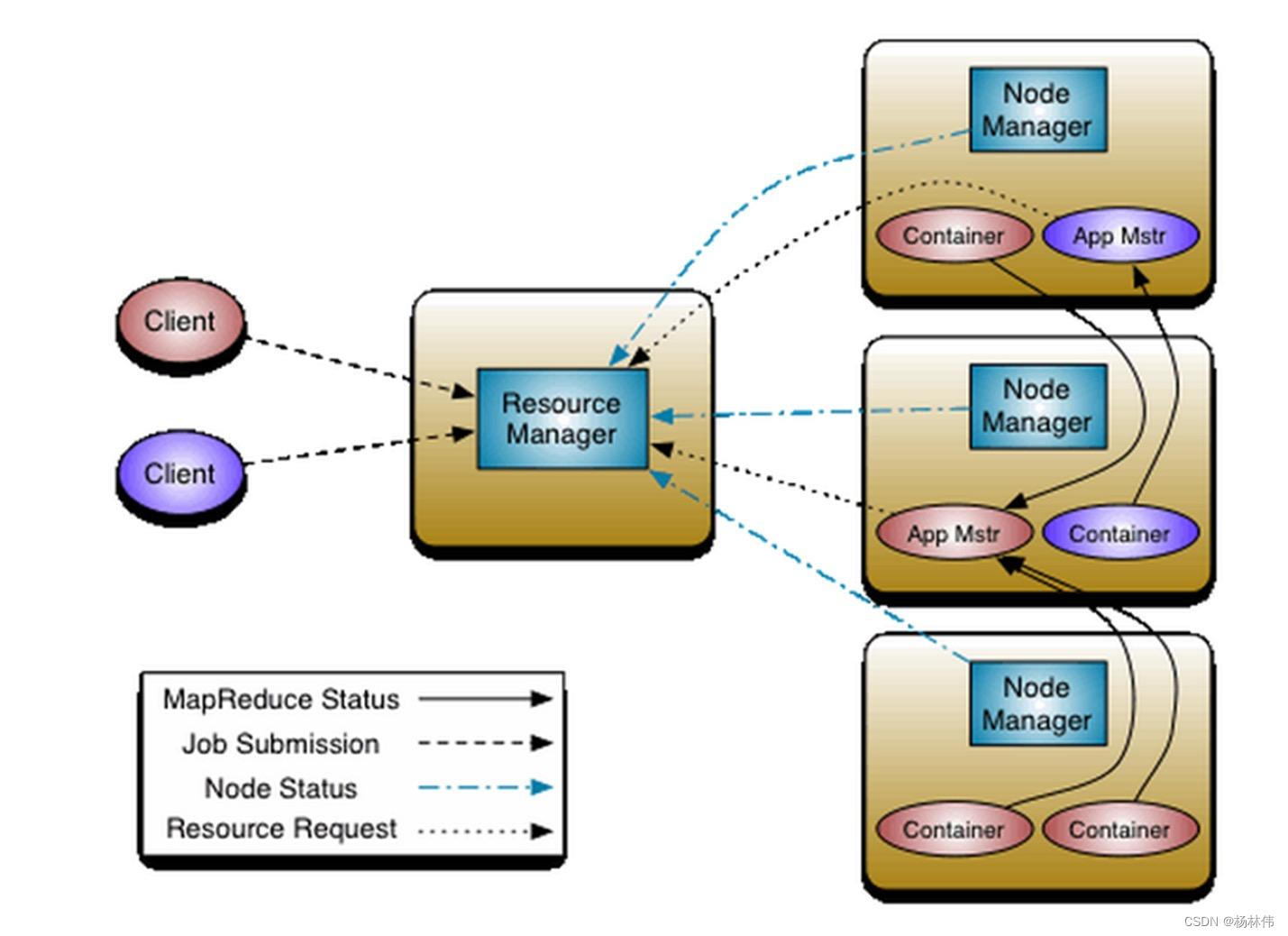

02 YARN architecture

YARN consists mainly of the ResourceManager, NodeManager, ApplicationMaster, and Container components, as shown in the figure:

2.1 ResourceManager

The main functions of the ResourceManager (RM) are:

- Handle client requests;

- Monitor the NodeManagers;

- Start and monitor the ApplicationMaster;

- Allocate and schedule resources.

2.2 NodeManager

The main functions of the NodeManager (NM) are:

- Manage the resources on a single node;

- Handle commands from the ResourceManager;

- Handle commands from the ApplicationMaster.

2.3 ApplicationMaster

The ApplicationMaster (AM) does the following:

- Splits the input data;

- Requests resources for the application and assigns them to its internal tasks;

- Monitors tasks and handles fault tolerance.

2.4 Container

A Container is YARN's resource abstraction. It encapsulates multi-dimensional resources on a node, such as memory, CPU, disk, and network.
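The idea of a Container as a bundle of node resources can be sketched in a few lines of Python. This is only an illustrative model, not YARN's implementation: the `Resource` and `Node` classes, their fields, and the `allocate` method are hypothetical names, and only memory and CPU are modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """A bundle of resources; only memory and CPU are modeled here."""
    memory_mb: int
    vcores: int

    def fits_in(self, other: "Resource") -> bool:
        """Return True if this request fits inside the `other` capacity."""
        return self.memory_mb <= other.memory_mb and self.vcores <= other.vcores

    def minus(self, other: "Resource") -> "Resource":
        """Return what is left after subtracting `other`."""
        return Resource(self.memory_mb - other.memory_mb, self.vcores - other.vcores)


@dataclass
class Node:
    """A hypothetical node that hands out containers from its free capacity."""
    name: str
    free: Resource

    def allocate(self, request: Resource):
        """Carve a container out of the free capacity, or return None."""
        if not request.fits_in(self.free):
            return None
        self.free = self.free.minus(request)
        return {"node": self.name, "resource": request}


node = Node("nm-1", Resource(memory_mb=8192, vcores=4))
first = node.allocate(Resource(memory_mb=4096, vcores=2))
second = node.allocate(Resource(memory_mb=6144, vcores=1))  # rejected: only 4096 MB remain
```

The second request is refused because a container can only be carved out of capacity that is still free on that node.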

03 How YARN works

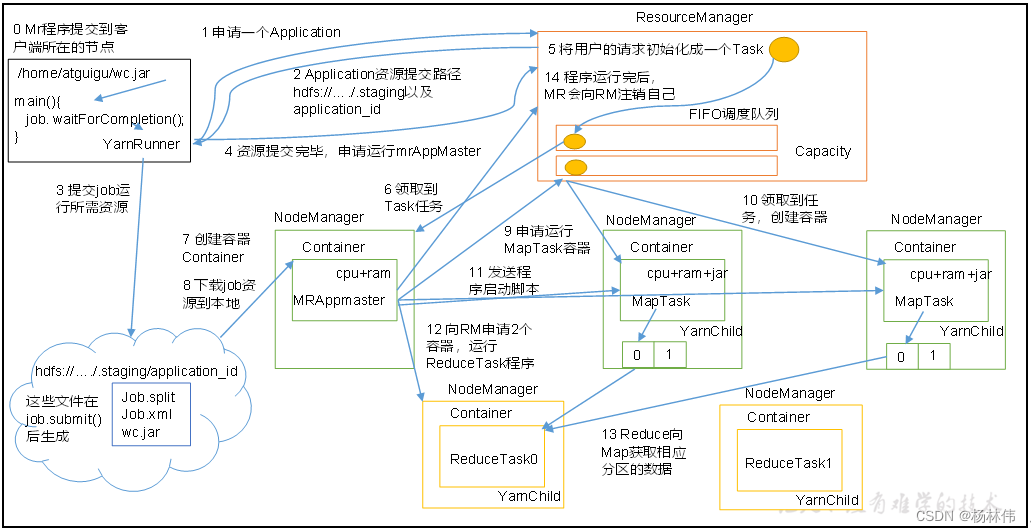

3.1 YARN working mechanism

The YARN working mechanism is shown in the figure (image from: https://www.cnblogs.com/wh984763176/p/13225690.html):

The process is as follows:

- The MR program is submitted on the node where the client runs.

- YarnRunner applies to the ResourceManager for an Application.

- The RM returns the application's resource path to YarnRunner.

- The program uploads the resources it needs to HDFS.

- Once the resources are uploaded, the program applies to run the MRAppMaster.

- The RM initializes the user's request as a Task.

- One of the NodeManagers picks up the Task.

- That NodeManager creates a Container and starts the MRAppMaster in it.

- The Container copies the resources from HDFS to the local node.

- The MRAppMaster applies to the RM for resources to run MapTasks.

- The RM assigns the MapTasks to two other NodeManagers, which each pick up a task and create a container.

- The MRAppMaster sends the program startup script to the two NodeManagers that received tasks. Each of them starts a MapTask, and the MapTasks partition and sort the data.

- After all MapTasks have finished, the MRAppMaster applies to the RM for containers to run the ReduceTasks.

- Each ReduceTask fetches the data of its partition from the MapTasks.

- When the program finishes, the MRAppMaster asks the RM to deregister it.
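The two data-related steps above (MapTasks partition and sort their output, and each ReduceTask fetches its own partition from every MapTask) can be illustrated with a small Python sketch. It is a simplified stand-in, not Hadoop code: the function names are hypothetical, `zlib.crc32` is used here only as a deterministic substitute for a hash partitioner, and the reduce step simply sums values per key.

```python
import zlib
from collections import defaultdict


def partition_of(key: str, num_reducers: int) -> int:
    """Deterministic stand-in for a hash partitioner (an assumption)."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def run_map_task(records, num_reducers):
    """Group one map task's (key, value) pairs by partition, sorted by key."""
    partitions = defaultdict(list)
    for key, value in records:
        partitions[partition_of(key, num_reducers)].append((key, value))
    return {p: sorted(pairs) for p, pairs in partitions.items()}


def run_reduce_task(partition_id, map_outputs):
    """Fetch this reducer's partition from every map output and sum per key."""
    totals = defaultdict(int)
    for output in map_outputs:
        for key, value in output.get(partition_id, []):
            totals[key] += value
    return dict(totals)


NUM_REDUCERS = 2
map_outputs = [
    run_map_task([("yarn", 1), ("hdfs", 1), ("yarn", 1)], NUM_REDUCERS),
    run_map_task([("hdfs", 1), ("spark", 1)], NUM_REDUCERS),
]
results = [run_reduce_task(p, map_outputs) for p in range(NUM_REDUCERS)]
merged = {k: v for part in results for k, v in part.items()}
```

Because the partition is a pure function of the key, all values for one key end up at the same reducer, regardless of which map task produced them.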

3.2 YARN job submission process

① Job submission

- Step 1: The client calls the job.waitForCompletion method to submit a MapReduce job to the cluster.

- Step 2: The client applies to the RM for a job ID.

- Step 3: The RM returns the resource submission path and the job ID to the client.

- Step 4: The client uploads the jar, the split information, and the configuration files to the specified resource submission path.

- Step 5: After uploading the resources, the client applies to the RM to run the MRAppMaster.

② Job initialization

- Step 6: When the RM receives the client's request, it adds the job to the capacity scheduler.

- Step 7: An idle NM picks up the job.

- Step 8: That NM creates a Container and starts the MRAppMaster in it.

- Step 9: The resources submitted by the client are downloaded to the local node.

③ Task assignment

- Step 10: The MRAppMaster applies to the RM for resources to run multiple MapTasks.

- Step 11: The RM assigns the MapTasks to two other NodeManagers, which each pick up a task and create a container.

④ Task execution

- Step 12: The MRAppMaster sends the program startup script to the two NodeManagers that received tasks. Each of them starts a MapTask, and the MapTasks partition and sort the data.

- Step 13: After all MapTasks have finished, the MRAppMaster applies to the RM for containers to run the ReduceTasks.

- Step 14: Each ReduceTask fetches the data of its partition from the MapTasks.

- Step 15: When the program finishes, the MRAppMaster asks the RM to deregister it.

⑤ Progress and status updates

- Tasks in YARN report their progress and status (including counters) to the application master. The client polls the application master for progress updates every second (configurable via mapreduce.client.progressmonitor.pollinterval) and displays them to the user.

⑥ Job completion

- In addition to requesting job progress from the application master, the client checks every 5 seconds whether the job has finished by calling waitForCompletion(). The interval can be set via mapreduce.client.completion.pollinterval. When the job completes, the application master and the Containers clean up their working state. The job information is stored by the job history server so that users can inspect it later.
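The two polling intervals described above can be pictured with a small simulation. This is a sketch under stated assumptions, not the Hadoop client: `FakeJob` and `monitor` are hypothetical, the clock is simulated rather than slept on, and the 1-second and 5-second intervals simply mirror the values quoted in the text.

```python
class FakeJob:
    """Hypothetical job that advances 25% per simulated second."""

    def __init__(self):
        self.progress = 0.0

    def tick(self, seconds: int) -> None:
        self.progress = min(1.0, self.progress + 0.25 * seconds)

    def is_complete(self) -> bool:
        return self.progress >= 1.0


def monitor(job, progress_interval=1, completion_interval=5, max_seconds=60):
    """Poll progress every `progress_interval` seconds and completion every
    `completion_interval` seconds, using a simulated clock instead of sleeping."""
    log = []
    for now in range(1, max_seconds + 1):
        job.tick(1)
        if now % progress_interval == 0:
            log.append((now, "progress", job.progress))
        if now % completion_interval == 0 and job.is_complete():
            log.append((now, "complete", job.progress))
            return log
    return log


events = monitor(FakeJob())
```

In this simulation the job reaches 100% at second 4, but the client only notices completion at second 5, when the next completion check fires. That lag is the trade-off behind a longer completion polling interval.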

04 YARN resource schedulers

Hadoop has three job schedulers: FIFO, Capacity Scheduler, and Fair Scheduler.

In Hadoop 3.1.3 the default resource scheduler is the Capacity Scheduler.

For the specific setting, see the yarn-default.xml file:

<property>
  <description>The class to use as the resource scheduler.</description>
  <name>yarn.resourcemanager.scheduler.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>
</property>
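To check which scheduler a configuration file selects, the property can be read with Python's standard XML parser. This is a minimal sketch: the `read_property` helper is hypothetical, and the `SAMPLE` string wraps the property above in a `<configuration>` root so that the example is self-contained rather than reading a real file.

```python
import xml.etree.ElementTree as ET

# Sample document: the property from yarn-default.xml inside a <configuration> root.
SAMPLE = """<configuration>
  <property>
    <description>The class to use as the resource scheduler.</description>
    <name>yarn.resourcemanager.scheduler.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>
  </property>
</configuration>"""


def read_property(xml_text: str, wanted: str):
    """Return the <value> of the <property> whose <name> matches, else None."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name") == wanted:
            return prop.findtext("value")
    return None


scheduler_class = read_property(SAMPLE, "yarn.resourcemanager.scheduler.class")
scheduler_name = scheduler_class.rsplit(".", 1)[-1]  # short class name only
```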

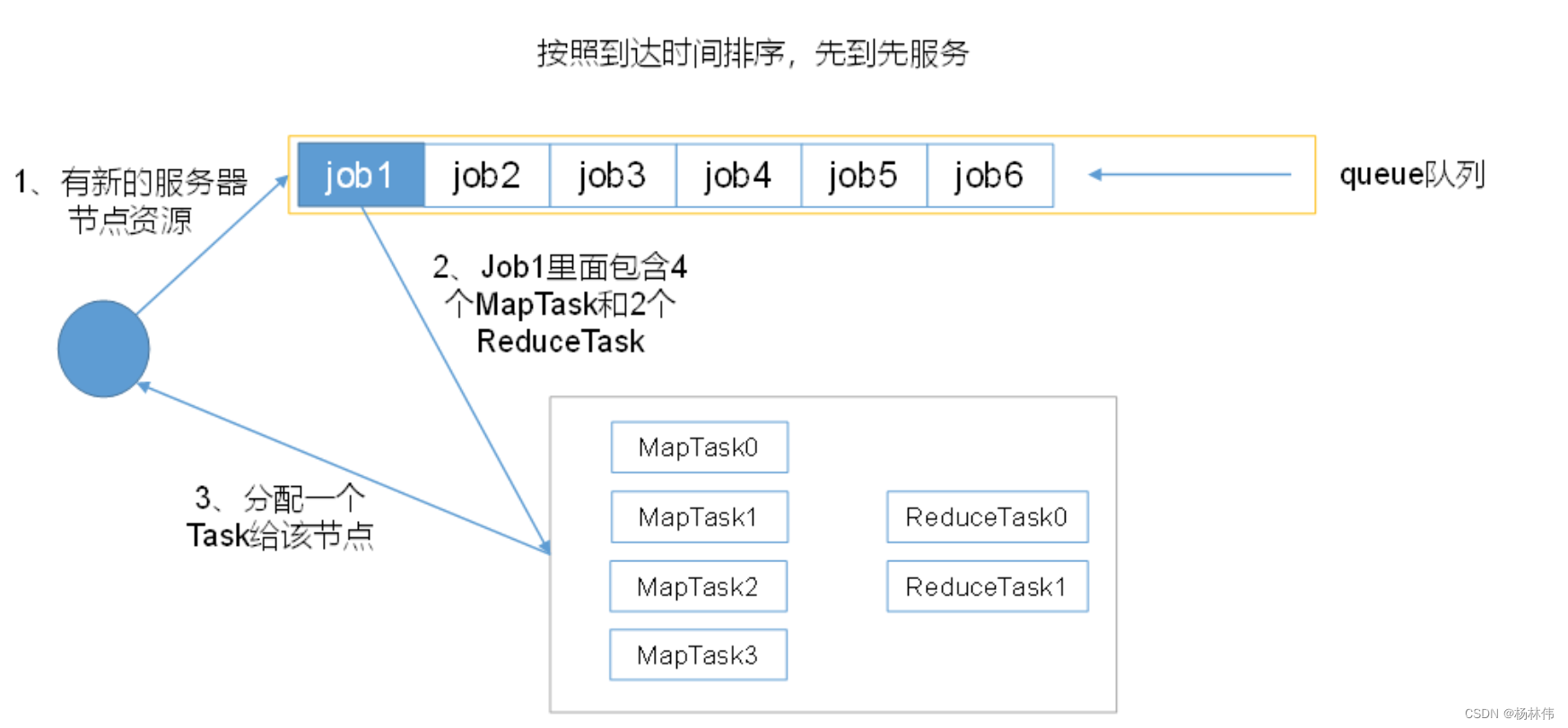

4.1 FIFO scheduler

First in, first out: only one job in the queue runs at any given time:

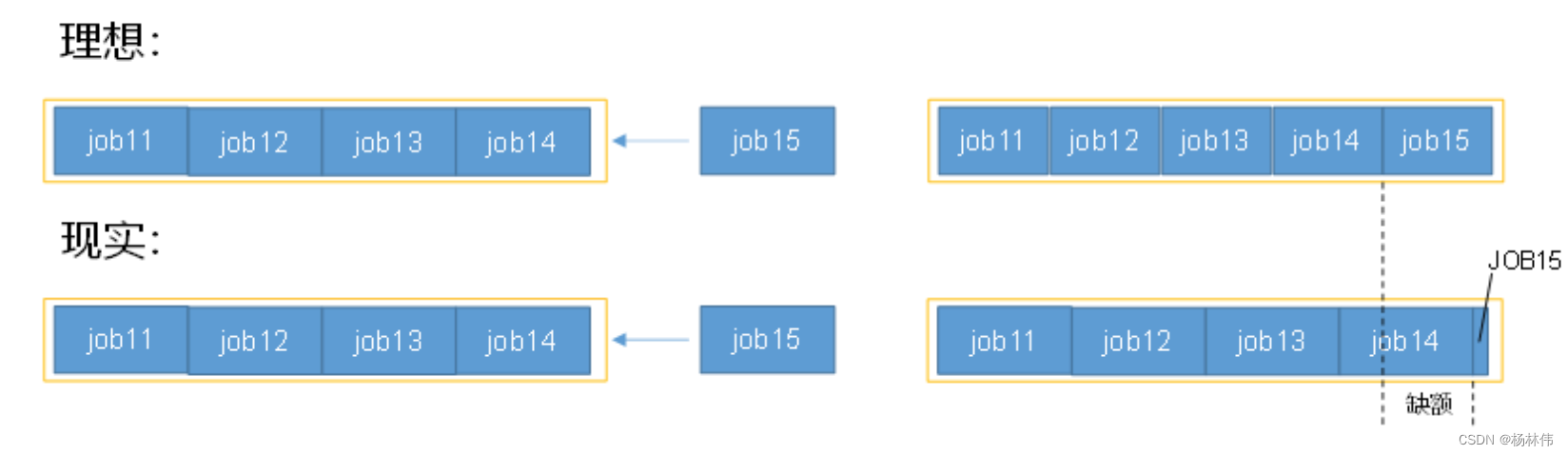
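The FIFO behavior can be sketched as a single queue drained in arrival order. The `run_fifo` function and the job durations are made up for illustration, and the clock is simulated.

```python
from collections import deque


def run_fifo(jobs):
    """Run (name, duration) jobs one at a time in arrival order.

    Returns (name, start, finish) tuples on a simulated clock.
    """
    queue = deque(jobs)
    clock = 0
    timeline = []
    while queue:
        name, duration = queue.popleft()
        timeline.append((name, clock, clock + duration))
        clock += duration
    return timeline


timeline = run_fifo([("job1", 5), ("job2", 1), ("job3", 2)])
```

Here the short job2 cannot start until job1 has finished at time 5, which is the main weakness of a single FIFO queue on a shared cluster.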

4.2 Capacity scheduler

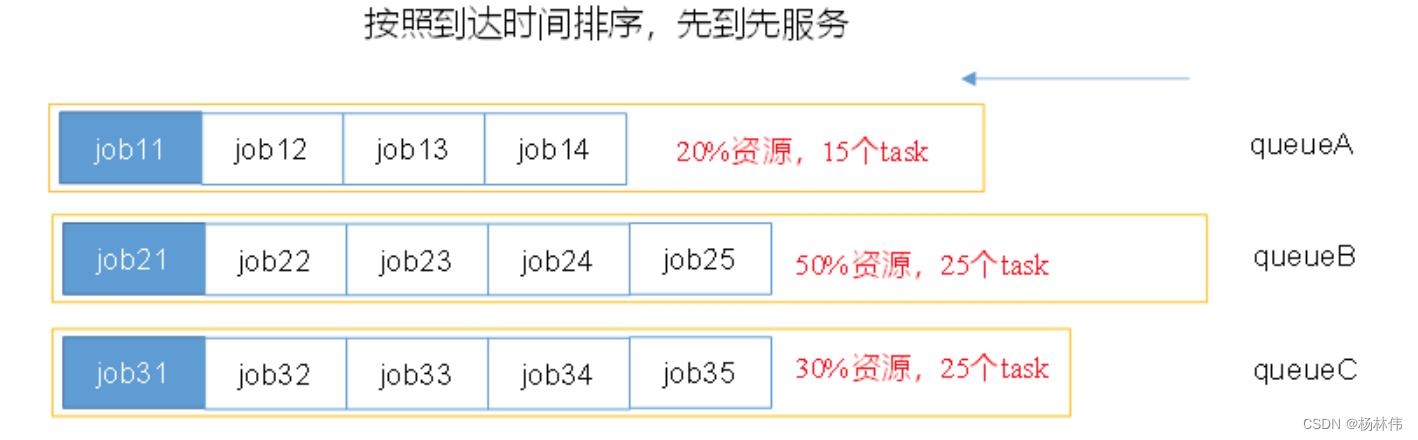

Multiple queues: each queue is FIFO and runs only one job at a time, so the overall parallelism equals the number of queues.

The capacity scheduler supports multiple queues. Each queue can be configured with a certain amount of resources, and each queue uses a FIFO scheduling policy.

To prevent one user's jobs from monopolizing the resources in a queue, the scheduler limits the resources that jobs submitted by the same user may occupy. When it allocates resources, it proceeds in two steps:

- First, it computes, for each queue, the ratio of the number of running tasks to the computing resources the queue is entitled to, and selects the queue with the lowest ratio (that is, the most idle one);

- Second, it orders the tasks within that queue by job priority and submission time, while also taking the per-user resource limit and memory limit into account.

As the figure above shows, the three queues process their jobs in order at the same time. For example, job11, job21, and job31 are at the head of their queues, so they run first and they run in parallel.
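The two-step selection can be sketched as follows. This is a simplified model, not the Capacity Scheduler's code: the `Queue` class, its fields, and `pick_next_job` are hypothetical, capacity is expressed as an integer percentage, and the per-user resource and memory limits are deliberately left out.

```python
from dataclasses import dataclass, field


@dataclass
class Queue:
    name: str
    capacity_percent: int          # share of the cluster this queue is entitled to
    running_tasks: int = 0
    pending: list = field(default_factory=list)  # (priority, submit_time, job)

    def load_ratio(self) -> float:
        """Running tasks relative to the queue's entitled share."""
        return self.running_tasks / self.capacity_percent


def pick_next_job(queues):
    """Pick the least-loaded queue that has pending work, then its best job."""
    candidates = [q for q in queues if q.pending]
    if not candidates:
        return None
    queue = min(candidates, key=Queue.load_ratio)
    # Higher priority first, then earlier submission time.
    queue.pending.sort(key=lambda item: (-item[0], item[1]))
    _priority, _submit_time, job = queue.pending.pop(0)
    queue.running_tasks += 1
    return queue.name, job


queues = [
    Queue("queueA", 20, running_tasks=2, pending=[(1, 10, "job12")]),
    Queue("queueB", 50, running_tasks=3, pending=[(1, 7, "job22"), (5, 9, "job23")]),
    Queue("queueC", 30, running_tasks=3, pending=[(1, 3, "job32")]),
]
first_pick = pick_next_job(queues)
second_pick = pick_next_job(queues)
```

queueB has the lowest ratio (3/50), so it is served first, and within it the higher-priority job23 goes ahead of job22 even though job22 was submitted earlier.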

4.3 Fair scheduler

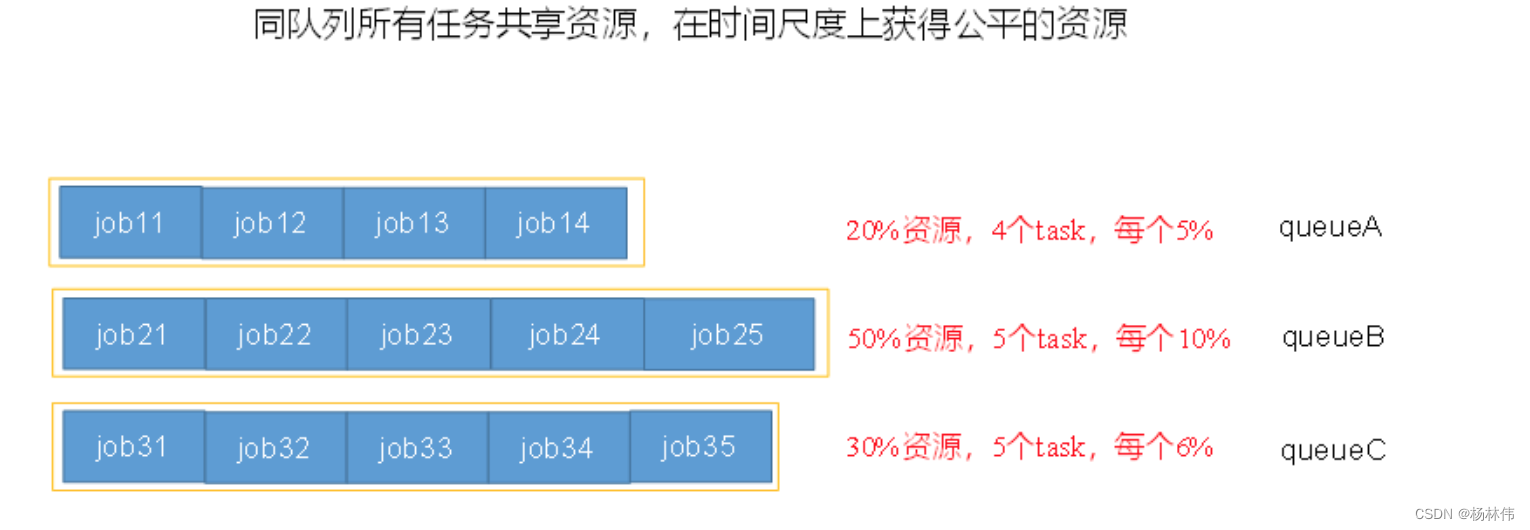

Multi queue : Each queue allocates resources to start tasks according to the size of the vacancy , There are multiple tasks in the same time queue . The parallelism of the queue is greater than or equal to the number of queues

The fair scheduler has the following characteristics :

- Support multiple queues and multiple jobs , Each queue can be configured separately ;

- Jobs in the same queue share the resources of the whole queue according to the priority of the queue , Concurrent execution ;

- Each job can set the minimum resource value , The scheduler will ensure that the job gets the above resources ;

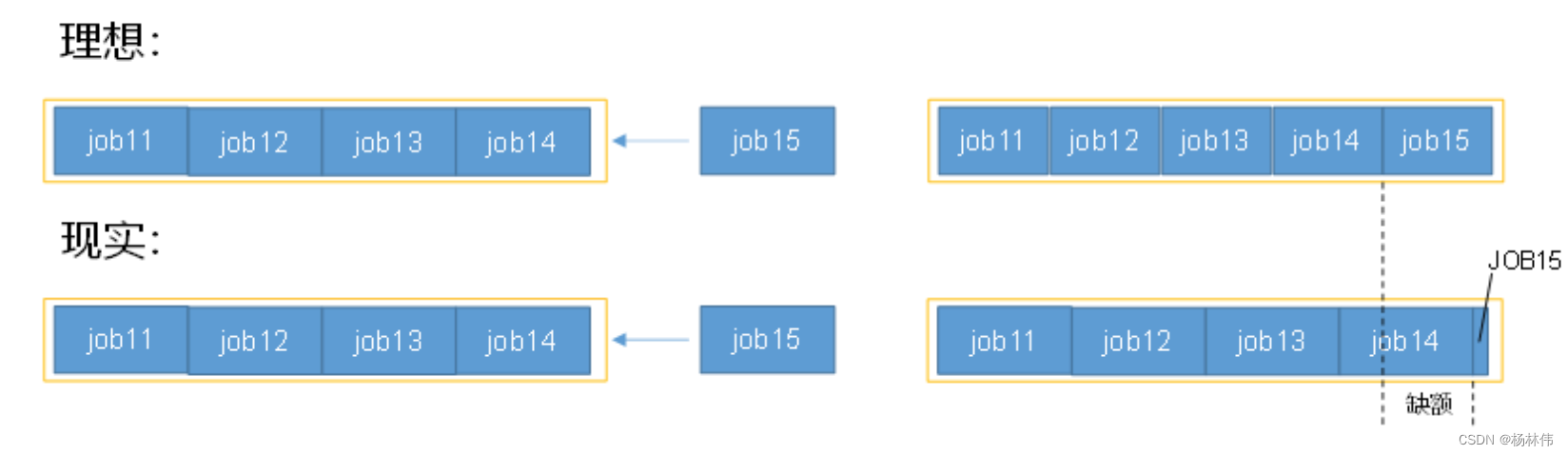

- The design goal is on a time scale , All operations receive fair resources . The gap between the resources that an operation should obtain and the resources actually obtained at a certain time is called “ A vacancy ”;

- The scheduler will give priority to allocating resources to jobs with large vacancies .
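Deficit-first allocation can be sketched like this. It is an illustrative model only: `allocate_by_deficit` is a hypothetical function, resources are counted in whole containers, and the fair shares are given as inputs rather than computed from queue weights.

```python
def allocate_by_deficit(fair_share, in_use, free_containers):
    """Hand out containers one at a time to the job with the largest deficit.

    `fair_share` and `in_use` map job name -> container count.
    """
    in_use = dict(in_use)  # work on a copy so the caller's dict is untouched
    grants = []
    for _ in range(free_containers):
        deficits = {job: fair_share[job] - in_use[job] for job in fair_share}
        job = max(deficits, key=deficits.get)
        if deficits[job] <= 0:
            break  # every job already holds its fair share
        in_use[job] += 1
        grants.append(job)
    return grants, in_use


fair_share = {"job1": 4, "job2": 4, "job3": 4}
in_use = {"job1": 4, "job2": 1, "job3": 2}
grants, final_use = allocate_by_deficit(fair_share, in_use, free_containers=4)
```

job1 already holds its full share, so it receives nothing, while job2, which starts with the largest deficit, is served first.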

05 Closing remarks

This article covered YARN's components, its working mechanism, and its three resource schedulers. Thank you for reading!

边栏推荐

- Interview skills of software testing

- Things about data storage 2

- How to get free traffic in pinduoduo new store and what links need to be optimized in order to effectively improve the free traffic in the store

- Web authentication API compatible version information

- Win configuration PM2 boot auto start node project

- 成为资深IC设计工程师的十个阶段,现在的你在哪个阶段 ?

- What EDA companies are there in China?

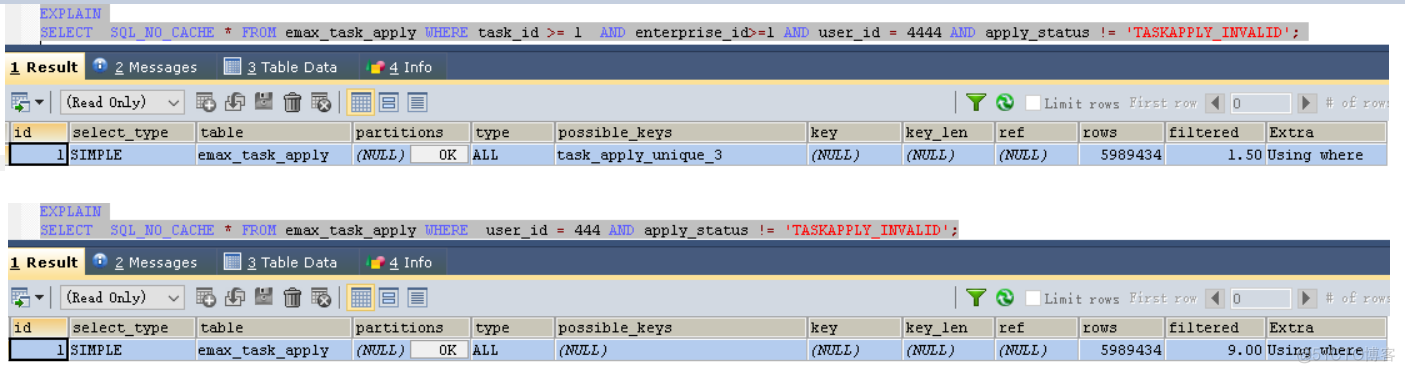

- 话说SQLyog欺骗了我!

- Pytorch builds neural network to predict temperature

- AI face editor makes Lena smile

猜你喜欢

数字IC面试总结(大厂面试经验分享)

Go语学习笔记 - gorm使用 - gorm处理错误 | Web框架Gin(十)

Get the way to optimize the one-stop worktable of customer service

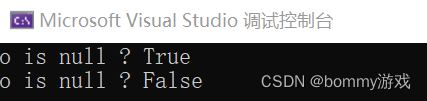

C nullable type

话说SQLyog欺骗了我!

Add salt and pepper noise or Gaussian noise to the picture

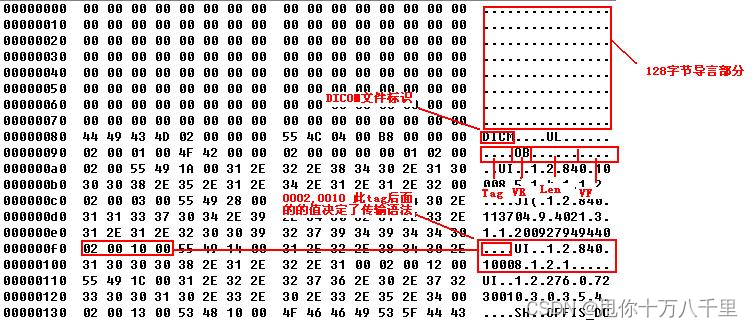

判断文件是否为DICOM文件

yarn入门(一篇就够了)

关于STC单片机“假死”状态的判别

Digital IC interview summary (interview experience sharing of large manufacturers)

随机推荐

《ClickHouse原理解析与应用实践》读书笔记(6)

How to improve website weight

Ten stages of becoming a Senior IC Design Engineer. What stage are you in now?

Five core elements of architecture design

数据中心为什么需要一套基础设施可视化管理系统

上海字节面试问题及薪资福利

EMMC print cqhci: timeout for tag 10 prompt analysis and solution

Dynamic memory management

Sidecar mode

I didn't know it until I graduated -- the principle of HowNet duplication check and examples of weight reduction

Detailed explanation of platform device driver architecture in driver development

《HarmonyOS实战—入门到开发,浅析原子化服务》

成为资深IC设计工程师的十个阶段,现在的你在哪个阶段 ?

zabbix_get测试数据库失败

What is make makefile cmake qmake and what is the difference?

Pytorch builds neural network to predict temperature

一个简单的代数问题的求解

Flask1.1.4 Werkzeug1.0.1 源碼分析:啟動流程

TCC of distributed transaction solutions

pytorch_ 01 automatic derivation mechanism