
Using a crawler to obtain real estate data

2022-07-06 22:19:00 【sunpro518】

This post crawls data published by the National Bureau of Statistics, using real estate data as an example. I ran into a few problems along the way but eventually solved them. The code was run on 2022-02-11.

The basic idea is to fetch the data with Python's requests library. Analyzing the web page [1] shows that its content is loaded dynamically. Inspecting the network requests reveals that the data is loaded by a JavaScript query request. (I don't know how the experts spot it; I simply went through the requests one by one and picked the one whose name looked plausible.)

Take real estate investment as an example.

Find the JS request:

https://data.stats.gov.cn/easyquery.htm?m=QueryData&dbcode=hgyd&rowcode=zb&colcode=sj&wds=%5B%5D&dfwds=%5B%7B%22wdcode%22%3A%22zb%22%2C%22valuecode%22%3A%22A0601%22%7D%5D&k1=1644573450992&h=1
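To see what this request actually sends, its query string can be decoded with Python's standard `urllib.parse`. The sketch below shows that `dfwds` is URL-encoded JSON selecting the indicator code `A0601`, and that `k1` looks like a millisecond timestamp (an interpretation inferred from its value, not documented by the site):

```python
import json
from urllib.parse import parse_qs, urlsplit

# The request URL captured in the browser's network panel
captured = (
    "https://data.stats.gov.cn/easyquery.htm?m=QueryData&dbcode=hgyd&rowcode=zb"
    "&colcode=sj&wds=%5B%5D"
    "&dfwds=%5B%7B%22wdcode%22%3A%22zb%22%2C%22valuecode%22%3A%22A0601%22%7D%5D"
    "&k1=1644573450992&h=1"
)

# parse_qs percent-decodes the values; keep the first value of each key
params = {key: values[0] for key, values in parse_qs(urlsplit(captured).query).items()}

print(params["dfwds"])                              # [{"wdcode":"zb","valuecode":"A0601"}]
print(json.loads(params["dfwds"])[0]["valuecode"])  # A0601
print(params["k1"])                                 # 1644573450992
```

Decoding the URL this way makes it easier to rebuild the same parameters as a dictionary for `requests`.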

In the browser you can see that this request returns a JSON result, which contains all the data we want.

Following this approach, you can basically write the crawler code [1]!

The reference blog above was written in 2018. When I tested it in 2022, I ran into two problems:

- The first access requires a dynamic verification code.

- The site's SSL certificate cannot be verified, so accessing it directly reports an error (400).

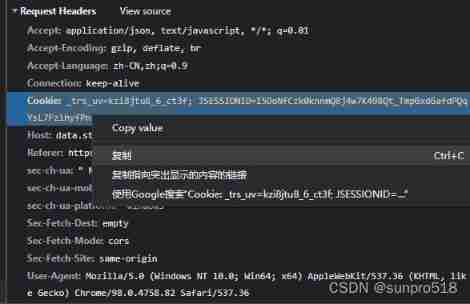

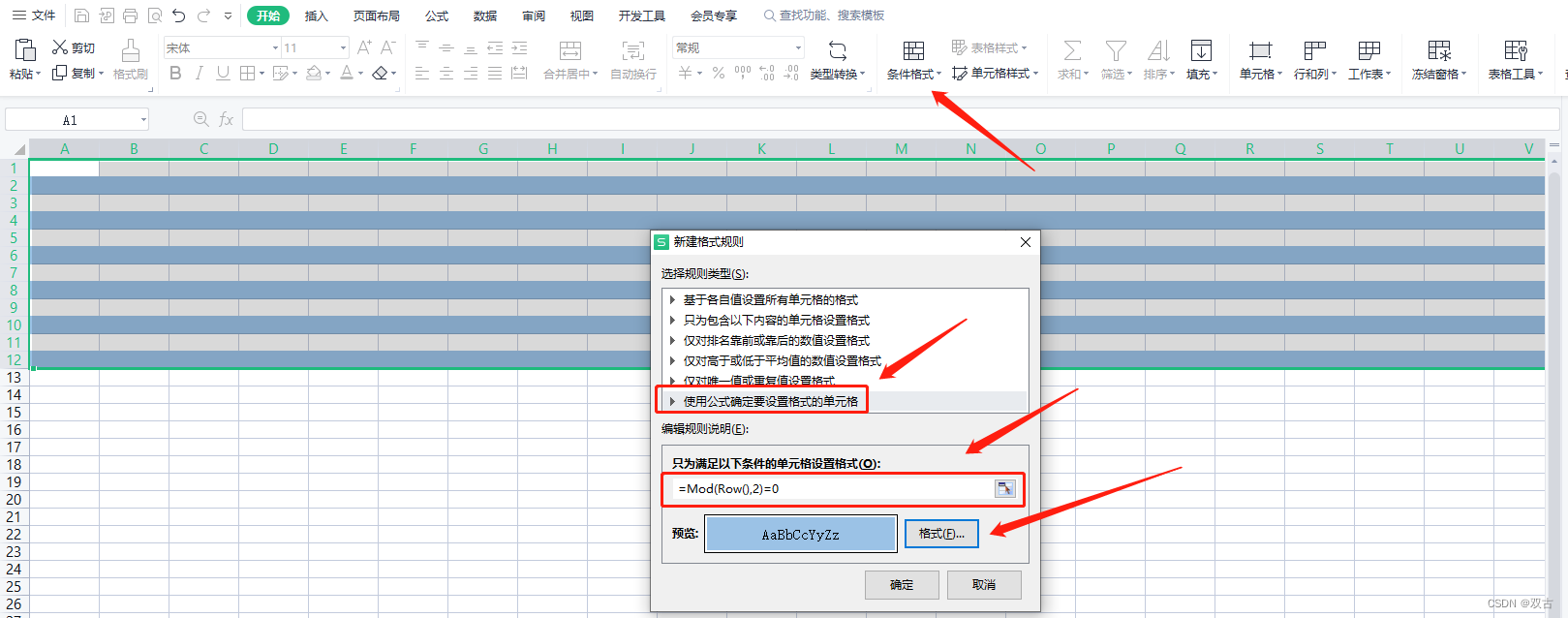

For the first problem, sending the request with a cookie through requests [2] solves it.

The cookie is the `Cookie` value under Request Headers. Note that in my tests, using "Copy value" caused problems, while selecting and copying the text directly worked. I'm not sure which detail I missed.
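Besides putting the raw string into a `Cookie` header, `requests` also accepts cookies as a dict through the `cookies=` argument of `requests.get`. A minimal sketch for splitting a copied `name1=value1; name2=value2` string into such a dict (the helper name `cookie_string_to_dict` is hypothetical, and the sample values are placeholders):

```python
def cookie_string_to_dict(raw):
    """Split a copied 'name1=value1; name2=value2' Cookie header into a dict."""
    cookies = {}
    for part in raw.split(";"):
        part = part.strip()
        if not part or "=" not in part:
            continue  # skip empty fragments and malformed pairs
        # partition splits on the first '=' only, so values containing '=' survive
        name, _, value = part.partition("=")
        cookies[name.strip()] = value.strip()
    return cookies


print(cookie_string_to_dict("_trs_uv=abc_6_xyz; JSESSIONID=TOKEN!123; u=6"))
# {'_trs_uv': 'abc_6_xyz', 'JSESSIONID': 'TOKEN!123', 'u': '6'}
```

Usage would look like `requests.get(url, cookies=cookie_string_to_dict(raw), ...)`. Either way, the session cookie expires, so it has to be copied again from a fresh browser session.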

For the second problem, disabling SSL certificate verification [3][4] bypasses the check, but requests then emits an `InsecureRequestWarning`. The fix for that is to suppress the warning [5].
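Calling `urllib3.disable_warnings()` silences the warning for the whole process. If you only want to hide `InsecureRequestWarning` around the unverified call, the standard `warnings` module can scope the filter. A minimal sketch (the context-manager name is hypothetical):

```python
import contextlib
import warnings

import urllib3


@contextlib.contextmanager
def insecure_warnings_suppressed():
    """Ignore urllib3's InsecureRequestWarning inside the with-block only."""
    with warnings.catch_warnings():
        # The filter is restored automatically when the block exits
        warnings.simplefilter("ignore", urllib3.exceptions.InsecureRequestWarning)
        yield
```

Usage: wrap the call as `with insecure_warnings_suppressed(): r = requests.get(url, ..., verify=False)`. Other requests made outside the block still warn, which keeps accidental unverified connections visible.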

Putting all of this together, the implementation is as follows:

```python
# Uses the requests library
import time

import requests
import urllib3


def gettime():
    """Return the current Unix timestamp in milliseconds (used for k1)."""
    return int(round(time.time() * 1000))


url = 'https://data.stats.gov.cn/easyquery.htm'

# Query parameters, passed to requests as a dictionary
keyvalue = {}
keyvalue['m'] = 'QueryData'
# The URL captured above uses 'hgyd' (monthly data); 'hgnd' queries the annual data
keyvalue['dbcode'] = 'hgnd'
keyvalue['rowcode'] = 'zb'
keyvalue['colcode'] = 'sj'
keyvalue['wds'] = '[]'
# keyvalue['dfwds'] = '[]'
# The line above was changed to the line below
keyvalue['dfwds'] = '[{"wdcode":"zb","valuecode":"A0301"}]'
keyvalue['k1'] = str(gettime())

# Cookie copied from the web page (Request Headers); replace it with your own session's value
cookie = '_trs_uv=kzi8jtu8_6_ct3f; JSESSIONID=I5DoNfCzk0knnmQ8j4w7K498Qt_TmpGxdGafdPQqYsL7FzlhyfPn!1909598655; u=6'

# Suppress the InsecureRequestWarning caused by skipping certificate verification
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

headers = {
    'Cookie': cookie,
    'Host': 'data.stats.gov.cn',
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36',
}

# headers carries the cookie, params is the query-parameter dictionary,
# and verify=False skips SSL certificate verification
r = requests.get(url, headers=headers, params=keyvalue, verify=False, timeout=30)

# Print the status code
print(r.status_code)

# Print the results
print(r.text)
```
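To query other indicators without hand-editing the JSON string, the parameter dictionary can be generated. A minimal sketch, where `build_query_params` is a hypothetical helper whose parameter names simply mirror the request captured in the browser; `json.dumps` with compact separators reproduces the exact `dfwds` string used in the script:

```python
import json
import time


def build_query_params(dbcode, valuecode):
    """Hypothetical helper: build easyquery.htm parameters for one indicator code."""
    dfwds = [{"wdcode": "zb", "valuecode": valuecode}]
    return {
        "m": "QueryData",
        "dbcode": dbcode,
        "rowcode": "zb",
        "colcode": "sj",
        "wds": "[]",
        # Compact separators give '[{"wdcode":"zb","valuecode":"..."}]'
        "dfwds": json.dumps(dfwds, separators=(",", ":")),
        # Millisecond timestamp, same idea as gettime() in the script
        "k1": str(int(round(time.time() * 1000))),
    }


params = build_query_params("hgnd", "A0301")
print(params["dfwds"])  # [{"wdcode":"zb","valuecode":"A0301"}]
```

The returned dict can be passed as `params=` to `requests.get` in place of `keyvalue`.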

Some screenshots of the results are as follows:

The result has not been parsed yet, but since it is JSON, decoding it with the json module (or `r.json()`) is all that's needed!
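After decoding, the nested payload still needs flattening. The sketch below assumes the response stores records under `returndata.datanodes`, each with a `data` cell and a `wds` list of dimension codes (`zb` for the indicator, `sj` for the period). That layout is an assumption to check against your own `r.json()` output, and the sample values are made up, not real statistics:

```python
import json


def extract_rows(payload):
    """Flatten returndata.datanodes into (indicator, period, value) tuples.

    ASSUMED layout: {"returndata": {"datanodes": [{"data": {"data": ..., "hasdata": ...},
                     "wds": [{"wdcode": "zb", "valuecode": ...},
                             {"wdcode": "sj", "valuecode": ...}]}]}}
    """
    rows = []
    for node in payload.get("returndata", {}).get("datanodes", []):
        # Map each dimension code (zb, sj) to its value code
        dims = {wd["wdcode"]: wd["valuecode"] for wd in node.get("wds", [])}
        cell = node.get("data", {})
        # Treat cells flagged as having no data as missing
        value = cell.get("data") if cell.get("hasdata") else None
        rows.append((dims.get("zb"), dims.get("sj"), value))
    return rows


# Made-up sample in the assumed shape (values are NOT real statistics)
sample = json.loads(
    '{"returndata": {"datanodes": ['
    '{"data": {"data": 1.5, "hasdata": true}, "wds": [{"wdcode": "zb", "valuecode": "A030101"}, {"wdcode": "sj", "valuecode": "2021"}]},'
    '{"data": {"data": 0, "hasdata": false}, "wds": [{"wdcode": "zb", "valuecode": "A030101"}, {"wdcode": "sj", "valuecode": "2020"}]}'
    ']}}'
)
print(extract_rows(sample))  # [('A030101', '2021', 1.5), ('A030101', '2020', None)]
```

With a live response this would be `rows = extract_rows(r.json())`.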

References:

[1] Crawling data from the National Bureau of Statistics with Python (original)

[2] Advanced usage of requests in crawlers (making data requests with cookies)

[3] Solutions to the "SSL: CERTIFICATE_VERIFY_FAILED" error in Python web crawlers

[4] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1076)

[5] Solution to "InsecureRequestWarning: Unverified HTTPS request is being made. Adding certificate verification is strongly advised."

边栏推荐

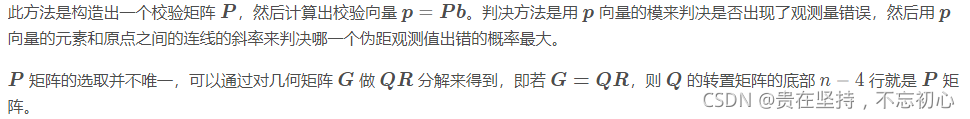

- GPS從入門到放弃(十三)、接收機自主完好性監測(RAIM)

- Four data streams of grpc

- [MySQL] online DDL details

- 【MySQL】Online DDL详解

- Method return value considerations

- Report on technological progress and development prospects of solid oxide fuel cells in China (2022 Edition)

- 解决项目跨域问题

- Yyds dry goods inventory C language recursive implementation of Hanoi Tower

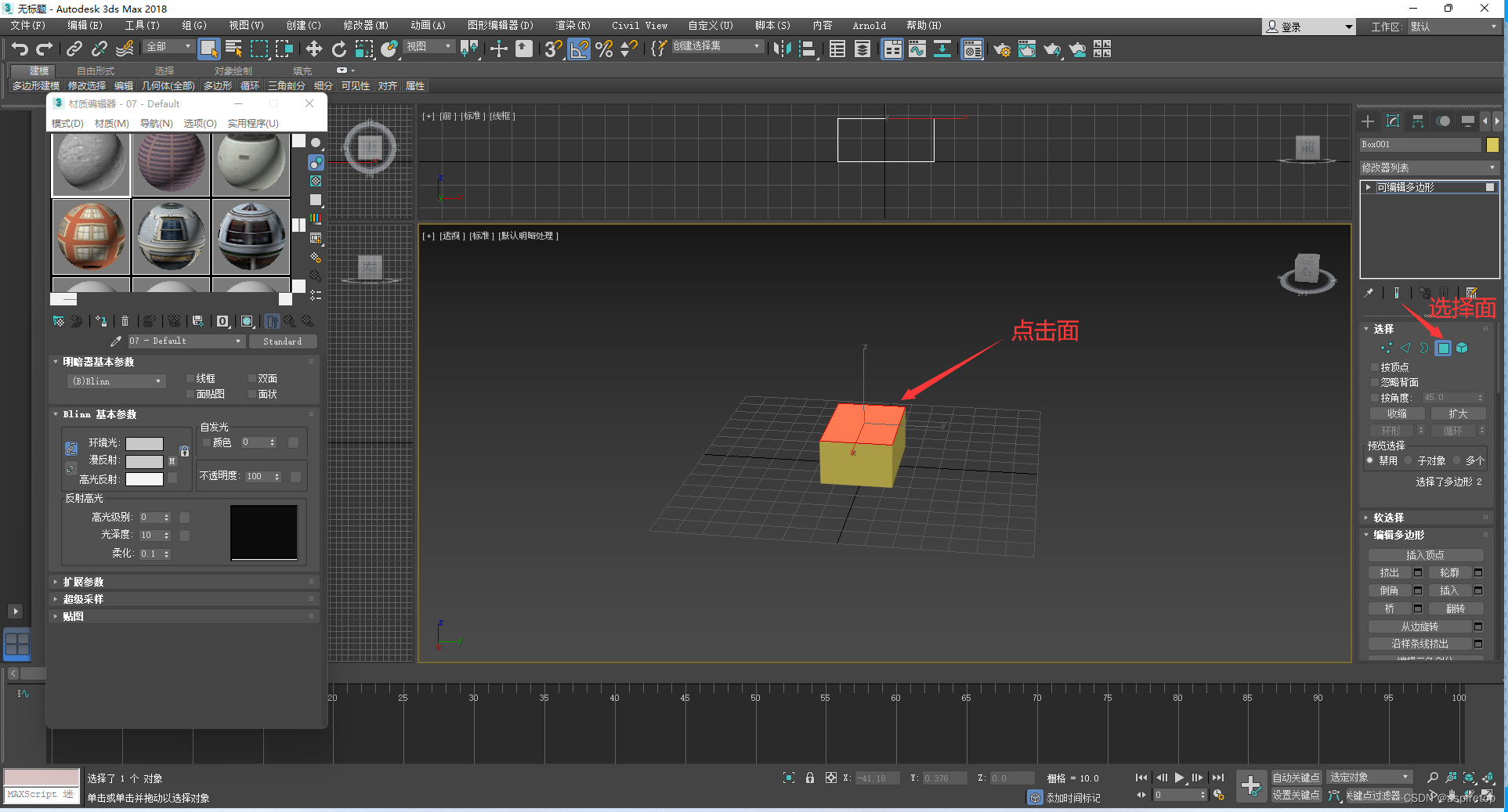

- 3DMAX assign face map

- 3DMax指定面贴图

猜你喜欢

搜素专题(DFS )

Oracle-控制文件及日志文件的管理

Embedded common computing artifact excel, welcome to recommend skills to keep the document constantly updated and provide convenience for others

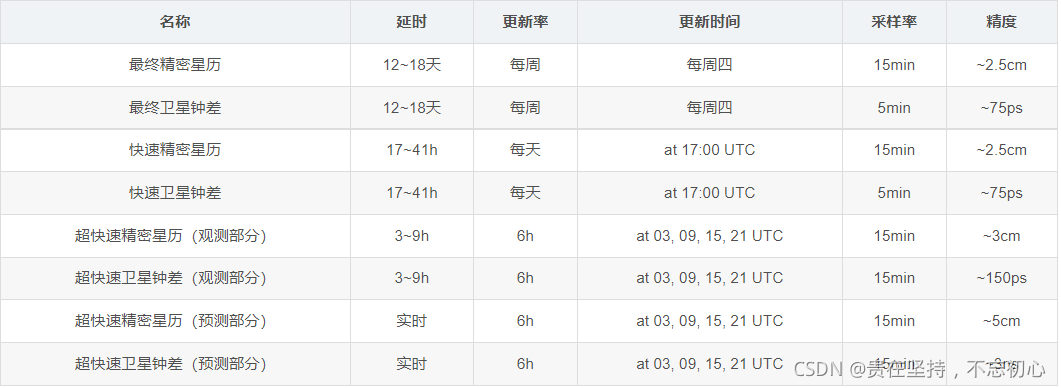

GPS从入门到放弃(十九)、精密星历(sp3格式)

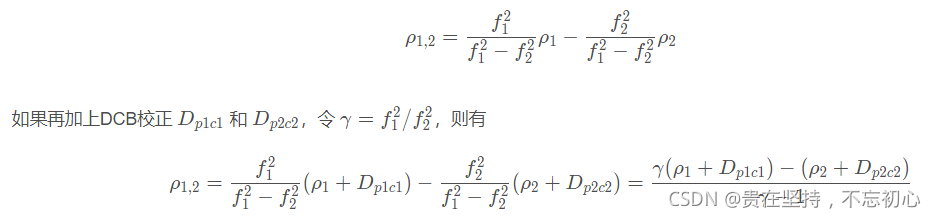

GPS从入门到放弃(十五)、DCB差分码偏差

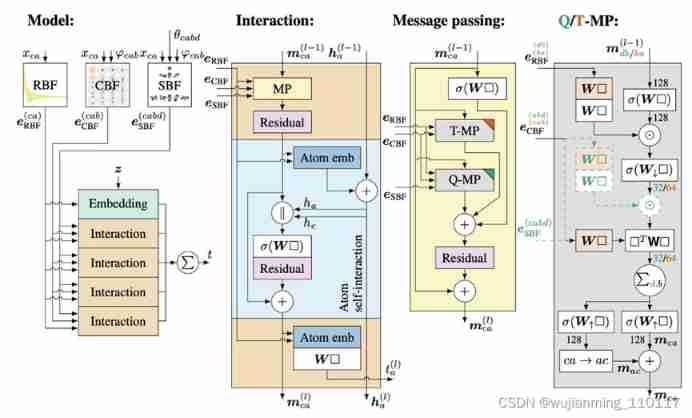

2021 geometry deep learning master Michael Bronstein long article analysis

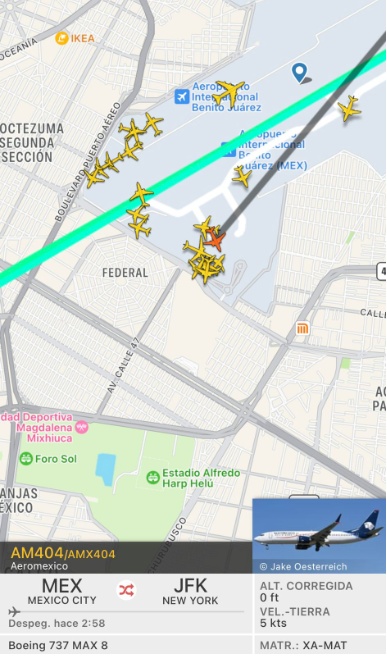

A Mexican airliner bound for the United States was struck by lightning after taking off and then returned safely

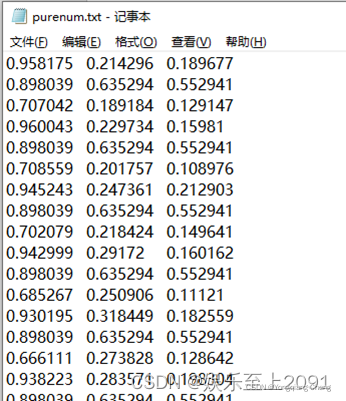

数据处理技巧(7):MATLAB 读取数字字符串混杂的文本文件txt中的数据

3DMax指定面贴图

GPS du début à l'abandon (XIII), surveillance autonome de l'intégrité du récepteur (raim)

随机推荐

Seata aggregates at, TCC, Saga and XA transaction modes to create a one-stop distributed transaction solution

HDR image reconstruction from a single exposure using deep CNNs阅读札记

HDR image reconstruction from a single exposure using deep CNN reading notes

Management background --5, sub classification

重磅新闻 | Softing FG-200获得中国3C防爆认证 为客户现场测试提供安全保障

解决项目跨域问题

Maximum product of three numbers in question 628 of Li Kou

中国1,4-环己烷二甲醇(CHDM)行业调研与投资决策报告(2022版)

Embedded common computing artifact excel, welcome to recommend skills to keep the document constantly updated and provide convenience for others

[sciter bug] multi line hiding

VIP case introduction and in-depth analysis of brokerage XX system node exceptions

C # realizes crystal report binding data and printing 4-bar code

Wechat red envelope cover applet source code - background independent version - source code with evaluation points function

ResNet-RS:谷歌领衔调优ResNet,性能全面超越EfficientNet系列 | 2021 arxiv

How does the uni admin basic framework close the creation of super administrator entries?

第3章:类的加载过程(类的生命周期)详解

Codeforces Round #274 (Div. 2) –A Expression

Classic sql50 questions

[daily] win10 system setting computer never sleeps

Solve project cross domain problems