当前位置:网站首页>A convolution substitution of attention mechanism

A convolution substitution of attention mechanism

2022-07-06 08:57:00 【cyz0202】

Reference from :fairseq

background

Common attention mechanisms are as follows

And generally use multi heads To perform the above calculation ;

time-consuming ;

Convolution has long been used in NLP in , It's just that the calculation amount of common use methods is not small , And the effect is not as good as Attention;

Is it possible to improve convolution in NLP The application of , Achieve both fast and good results ?

One idea is depthwise convolution( Reduce computation ), And imitate attention softmax Mechanism ( weighted mean );

The improved scheme

common depthwise convolution as follows

W by kernel, , The parameter size is : When d=1024,k=7,d*k=7168

, The parameter size is : When d=1024,k=7,d*k=7168

-------

In order to further reduce the amount of calculation , Can make  , In general

, In general  , Such as H=16,d=1024;

, Such as H=16,d=1024;

H The dimension becomes smaller , To keep right x Do a complete convolution calculation , It needs to be in the original d This dimension Repeated use W, That's different channel May use W Parameters in the same line ;

There are two ways to reuse , One is W stay d The translation of this dimension repeats , That is, every H Line is used once W;

Another way to consider W My line is d Dimensional repetition , That is, in the process of convolution calculation ,x stay d On this dimension Every time d/H That's ok Corresponding to W A line in , Such as x Of d Dimensionally [0-d/H) That's ok depthwise Convolution calculation uses W Of the 0 Line parameters ;( It's a little winding , Refer to the following formula 3, It's more intuitive )

The second way is used here , Immediate repetition ; Thinking about imitation Attention Of multi heads, Every head ( The size is d/H) Can calculate independently ; The second way is equivalent to x Every d/H That's ok (d Every head on the dimension ) Use separate kernel Parameters ;

Careful readers can find out , there H In fact, it's imitation Attention in muliti heads Of H;

Through the above design ,W The parameter quantity of is reduced to H*k( Such as H=16,k=7, Then the parameter quantity is only 112)

-------

In addition to reducing the amount of calculation , You can also let convolution imitate Attention Of softmax The process ;

Observation formula (1), Function and Attention softmax Output very similar , Namely weighted average ;

Function and Attention softmax Output very similar , Namely weighted average ;

So why don't we let  Also a distribution Well ?

Also a distribution Well ?

So make

-------

The final convolution is calculated as follows

notes :W Rounding on the bottom corner is the default ![c \in [1, d]](http://img.inotgo.com/imagesLocal/202207/06/202207060850360766_7.gif) , You can also change it to round down and make

, You can also change it to round down and make ![c \in [0, d-1]](http://img.inotgo.com/imagesLocal/202207/06/202207060850360766_2.gif)

Concrete realization

The above calculation method of line repetition cannot be directly calculated by using the existing convolution operator ;

In order to use matrix calculation , You can think of a compromise

Make  , Make

, Make  , perform BMM(Batch MatMul), Available

, perform BMM(Batch MatMul), Available

The above design can guarantee the result Out There is what we need right shape, So how to guarantee Out The value is also correct , That is, how to design W',x The specific value of ?

It's not hard to , Just according to the previous section Make W Row repeat With Yes x Conduct multi-head Calculation Just start with your thoughts ;

consider  , It's a simple one reshape, dimension 1 Of BH representative batch*H, hypothesis batch=1, That's it H Calculation header ; dimension 2 Of n Represents the length of the sequence n, namely n A place ; The last dimension ( namely channel) It represents a head, That is, the size of a calculation head ;

, It's a simple one reshape, dimension 1 Of BH representative batch*H, hypothesis batch=1, That's it H Calculation header ; dimension 2 Of n Represents the length of the sequence n, namely n A place ; The last dimension ( namely channel) It represents a head, That is, the size of a calculation head ;

In convolution calculation ,

Above x' Each head of ( The last dimension size d/H, common H individual ), Corresponding W Parameter is W in A row of parameters in order ( size k,H The head corresponds to W Of H That's ok ); meanwhile x' The first 2 Dimensions indicate n Convolution calculation shall be carried out for positions , And the calculation method of each position is the same as Window size is k And fixed value sequence To sum by weight ;

therefore W' dimension 1 Of BH representative And x' Of BH Convolution parameters corresponding to heads one by one (B=1 Time is H The head corresponds to H That's ok kernel Parameters );

W' dimension 2 Of n, representative n Convolution calculation shall be carried out for positions ;

W' dimension 3 Of n, It's right The current header corresponds to kernel Inner row Of k Parameters Extended to n Parameters , The extension method is 0 fill ;

The reason why we should be right now kernel The size is k Row parameters of Fill in n, The main reason is that the above convolution calculation is a fixed size of k The window of is... In length n Slide on the sequence ;

Because the number of convolution parameters is constant , But the position changes , It seems to be irregular calculation ; however Think about it , Although the position is sliding , But the length is n Slide on the sequence ; Can we construct a length of n Of fake kernel, In addition to the window where the real kerenl Parameter values , Other non window positions are filled 0 Well ? So we get a fixed length n Of fake kernel, It can be calculated in a unified form ;

Illustrate with examples , Suppose the calculation position is 10, Then the center of the window is also 10 This position , The specific position of the window may be [7,13], Then I'll remove Outside the window Other places All filled 0, You get a length of n Sequence of parameters , You can use unified n*n Dot product calculation method Go and x The length is n The input of ;

Of course , The above filling method Every time I get Fill sequence (fake kernel) It's all different , Because the current convolution line parameter value is unchanged , But the position is changing ;

Read about How does the deep learning framework implement convolution computation classmate You may be more familiar with this filling method ;

The above statement may still be a little misleading , Readers think  matrix multiplication , The first 1 individual n Express n A place , The first 2/3 individual n Indicates a current location Convolution calculation that occurs , The reason is n*n, In order to achieve Unified calculation form the 0 fill ;

matrix multiplication , The first 1 individual n Express n A place , The first 2/3 individual n Indicates a current location Convolution calculation that occurs , The reason is n*n, In order to achieve Unified calculation form the 0 fill ;

Wait for me to add a better legend ...

CUDA Realization

The above calculation method consumes resources , Consider customizing CUDA operator ;

I will write another article to talk about ; Custom convolution attention operator CUDA Realization

experimental result

To be continued

summary

- This paper introduces a convolution substitution of attention mechanism ;

- Through a certain design, the convolution can be lightweight , At the same time, imitate Attention To achieve better results ;

边栏推荐

- LeetCode:34. Find the first and last positions of elements in a sorted array

- After PCD is converted to ply, it cannot be opened in meshlab, prompting error details: ignored EOF

- To effectively improve the quality of software products, find a third-party software evaluation organization

- Leetcode: Sword Finger offer 42. Somme maximale des sous - tableaux consécutifs

- Tcp/ip protocol

- 项目连接数据库遇到的问题及解决

- 有效提高软件产品质量,就找第三方软件测评机构

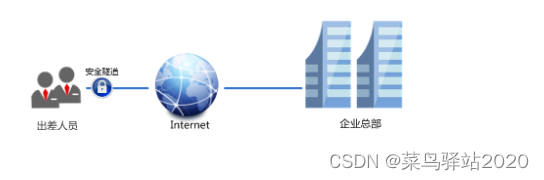

- TP-LINK enterprise router PPTP configuration

- 广州推进儿童友好城市建设,将探索学校周边200米设安全区域

- What are the common processes of software stress testing? Professional software test reports issued by companies to share

猜你喜欢

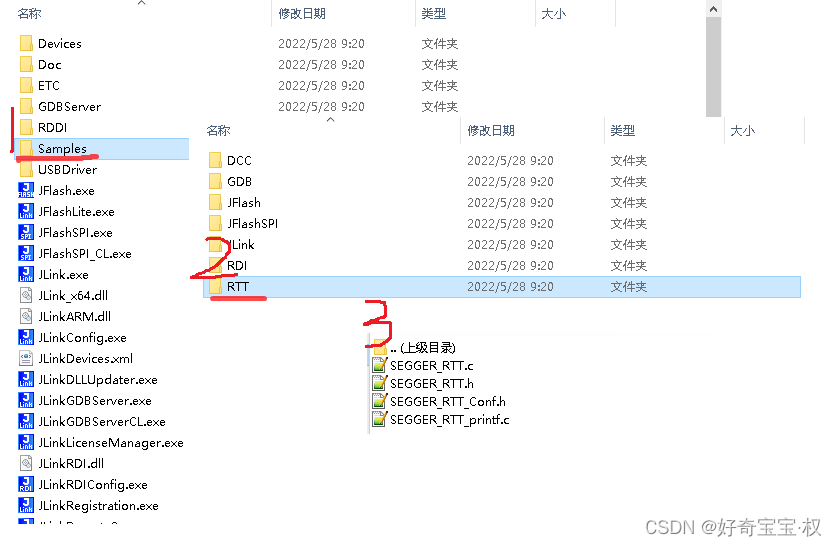

【嵌入式】使用JLINK RTT打印log

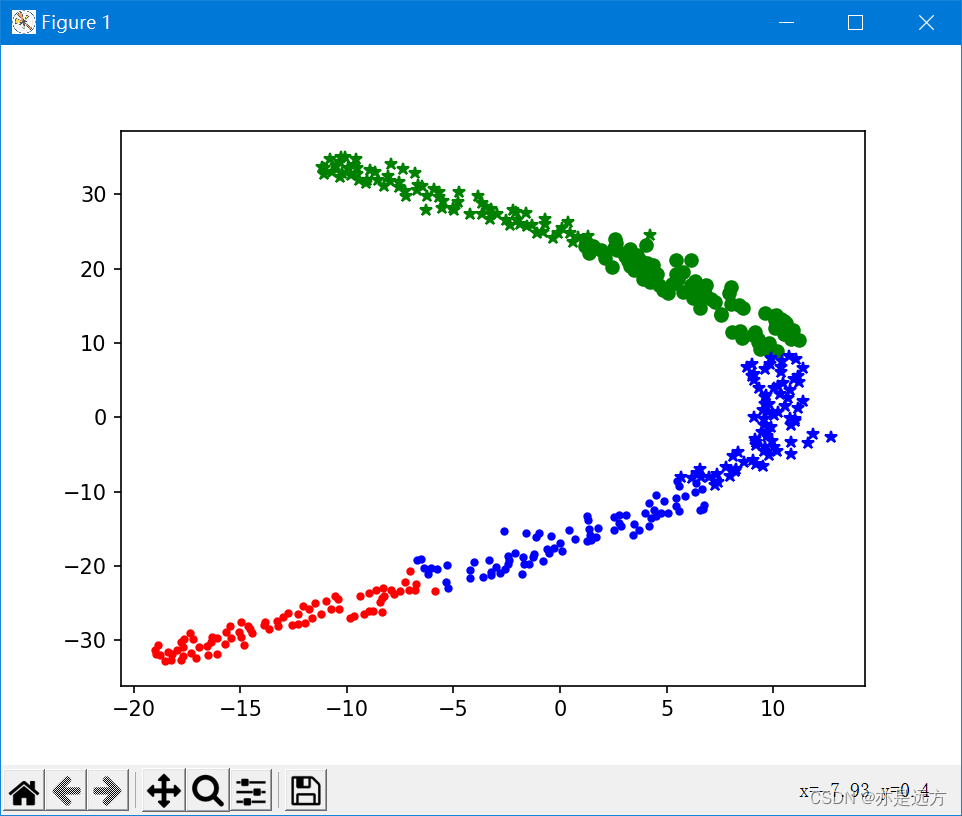

多元聚类分析

数字人主播618手语带货,便捷2780万名听障人士

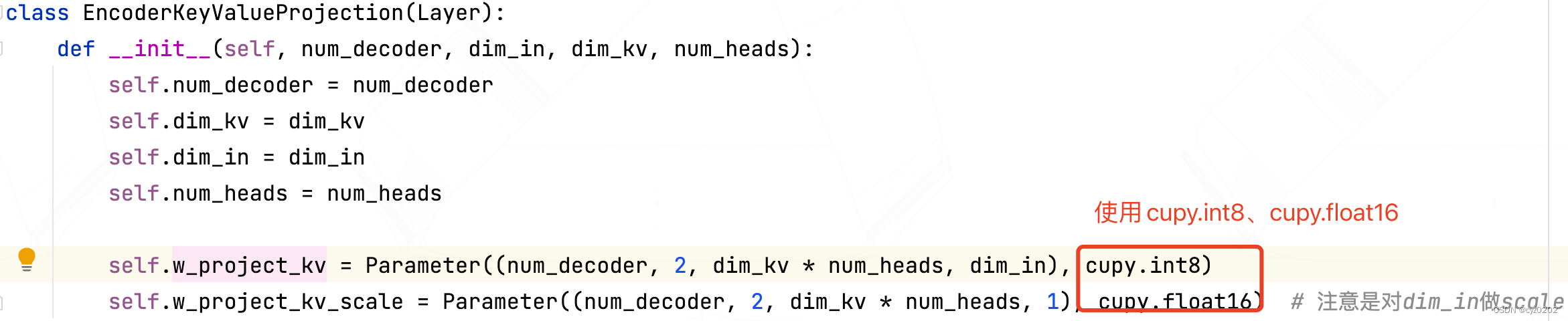

BMINF的后训练量化实现

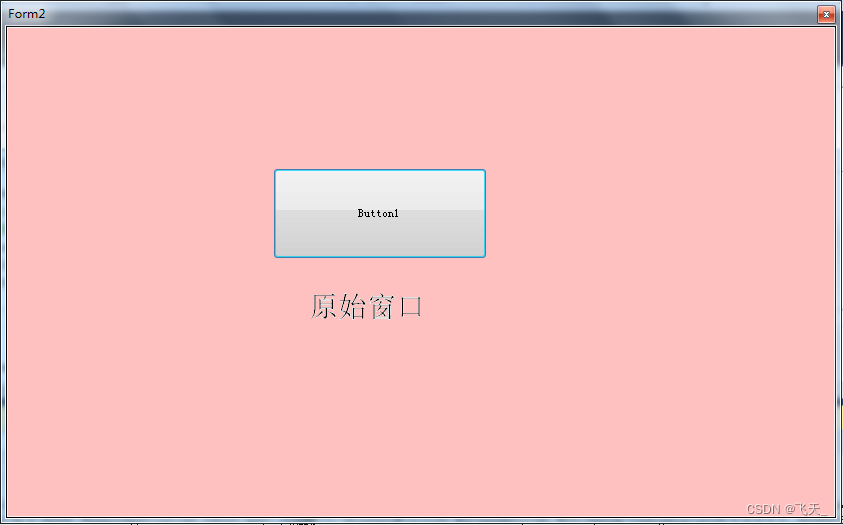

vb.net 随窗口改变,缩放控件大小以及保持相对位置

![[MySQL] limit implements paging](/img/94/2e84a3878e10636460aa0fe0adef67.jpg)

[MySQL] limit implements paging

![[OC]-<UI入门>--常用控件的学习](/img/2c/d317166e90e1efb142b11d4ed9acb7.png)

[OC]-<UI入门>--常用控件的学习

TP-LINK enterprise router PPTP configuration

![[embedded] print log using JLINK RTT](/img/22/c37f6e0f3fb76bab48a9a5a3bb3fe5.png)

[embedded] print log using JLINK RTT

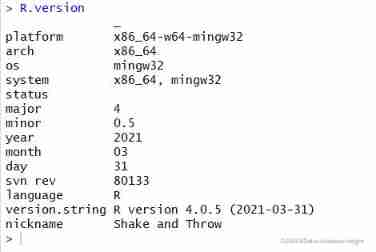

Using pkgbuild:: find in R language_ Rtools check whether rtools is available and use sys The which function checks whether make exists, installs it if not, and binds R and rtools with the writelines

随机推荐

LeetCode:26. Remove duplicates from an ordered array

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

Using C language to complete a simple calculator (function pointer array and callback function)

SAP ui5 date type sap ui. model. type. Analysis of the parsing format of date

LeetCode:221. Largest Square

R language uses the principal function of psych package to perform principal component analysis on the specified data set. PCA performs data dimensionality reduction (input as correlation matrix), cus

Fairguard game reinforcement: under the upsurge of game going to sea, game security is facing new challenges

CSP first week of question brushing

The network model established by torch is displayed by torch viz

LeetCode:剑指 Offer 42. 连续子数组的最大和

BN折叠及其量化

BMINF的后训练量化实现

可变长参数

Improved deep embedded clustering with local structure preservation (Idec)

Intel Distiller工具包-量化实现1

注意力机制的一种卷积替代方式

使用标签模板解决用户恶意输入的问题

Excellent software testers have these abilities

BMINF的後訓練量化實現

Leetcode刷题题解2.1.1