当前位置:网站首页>LeetCode每日一题(968. Binary Tree Cameras)

LeetCode每日一题(968. Binary Tree Cameras)

2022-07-03 09:01:00 【wangjun861205】

You are given the root of a binary tree. We install cameras on the tree nodes where each camera at a node can monitor its parent, itself, and its immediate children.

Return the minimum number of cameras needed to monitor all nodes of the tree.

Example 1:

Input: root = [0,0,null,0,0]

Output: 1

Explanation: One camera is enough to monitor all nodes if placed as shown.

Example 2:

Input: root = [0,0,null,0,null,0,null,null,0]

Output: 2

Explanation: At least two cameras are needed to monitor all nodes of the tree. The above image shows one of the valid configurations of camera placement.

Constraints:

- The number of nodes in the tree is in the range [1, 1000].

- Node.val == 0

为树中每个节点赋予一个编号, 这个不麻烦, left = curr * 2 + 1, right = curr * 2 + 2, 假设 dp[i][is_covered][need_place]代表的是树中第 i 个节点在是否被摄像头覆盖到(is_covered)和是不是一定要强制安装一个摄像头在该节点(need_place)的情况下以第 i 个节点为根节点的子树所需要的最小摄像头数量。这样我们就可以把情况分为三种, 一种是需要强制安装摄像头 need_place == true, 这样它的左右两个子节点都会被覆盖到(我们是自上而下, 不管父节点), 那结果就是 dp[i*2+1][true][false] + dp[i*2+2][true][false] + 1, 如果不需要强制安装, 那我们就看是否覆盖的情况(is_covered), 如果当前节点已经被覆盖了, 我们可以在当前节点放置摄像头, 那跟上面说的这种情况是一样的, dp[i*2+1][true][false] + dp[i*2+2][true][false] + 1, 也可以不放置, 因为当前节点已经被覆盖, 所以即使我们不在当前节点放置摄像头,那子节点也没有强制放置摄像头的必要, 那结果就是 dp[i*2+1][false][false] + dp[i*2+2][false][false], 这两种情况取小的返回。最后一种是既没有要求强制放置也没有被覆盖到, 那这样我们可以在当前节点放置摄像头(同上),也可以强制在左侧子节点或者右侧子节点放置摄像头, dp[i*2+1][false][true]或者 dp[i*2+2][false][true], 这三种情况取最小值

use std::cell::RefCell;

use std::collections::HashMap;

use std::rc::Rc;

impl Solution {

fn dp(root: &Option<Rc<RefCell<TreeNode>>>, val: i32, is_covered: bool, need_place: bool, cache: &mut HashMap<(i32, bool, bool), i32>) -> i32 {

if let Some(node) = root {

if need_place {

let left = if let Some(c) = cache.get(&(val * 2 + 1, true, false)) {

*c

} else {

Solution::dp(&node.borrow().left, val * 2 + 1, true, false, cache)

};

let right = if let Some(c) = cache.get(&(val * 2 + 2, true, false)) {

*c

} else {

Solution::dp(&node.borrow().right, val * 2 + 2, true, false, cache)

};

cache.insert((val, is_covered, need_place), left + right + 1);

return left + right + 1;

}

if is_covered {

let curr_place = {

let left = if let Some(c) = cache.get(&(val * 2 + 1, true, false)) {

*c

} else {

Solution::dp(&node.borrow().left, val * 2 + 1, true, false, cache)

};

let right = if let Some(c) = cache.get(&(val * 2 + 2, true, false)) {

*c

} else {

Solution::dp(&node.borrow().right, val * 2 + 2, true, false, cache)

};

left + right + 1

};

let curr_pass = {

let left = if let Some(c) = cache.get(&(val * 2 + 1, false, false)) {

*c

} else {

Solution::dp(&node.borrow().left, val * 2 + 1, false, false, cache)

};

let right = if let Some(c) = cache.get(&(val * 2 + 2, false, false)) {

*c

} else {

Solution::dp(&node.borrow().right, val * 2 + 2, false, false, cache)

};

left + right

};

let ans = curr_place.min(curr_pass);

cache.insert((val, is_covered, need_place), ans);

return ans;

}

let curr_place = {

let left = if let Some(c) = cache.get(&(val * 2 + 1, true, false)) {

*c

} else {

Solution::dp(&node.borrow().left, val * 2 + 1, true, false, cache)

};

let right = if let Some(c) = cache.get(&(val * 2 + 2, true, false)) {

*c

} else {

Solution::dp(&node.borrow().right, val * 2 + 2, true, false, cache)

};

left + right + 1

};

let left_place = {

if node.borrow().left.is_none() {

i32::MAX

} else {

let left = if let Some(c) = cache.get(&(val * 2 + 1, false, true)) {

*c

} else {

Solution::dp(&node.borrow().left, val * 2 + 1, false, true, cache)

};

let right = if let Some(c) = cache.get(&(val * 2 + 2, false, false)) {

*c

} else {

Solution::dp(&node.borrow().right, val * 2 + 2, false, false, cache)

};

left + right

}

};

let right_place = {

if node.borrow().right.is_none() {

i32::MAX

} else {

let left = if let Some(c) = cache.get(&(val * 2 + 1, false, false)) {

*c

} else {

Solution::dp(&node.borrow().left, val * 2 + 1, false, false, cache)

};

let right = if let Some(c) = cache.get(&(val * 2 + 2, false, true)) {

*c

} else {

Solution::dp(&node.borrow().right, val * 2 + 2, false, true, cache)

};

left + right

}

};

let ans = curr_place.min(left_place).min(right_place);

cache.insert((val, is_covered, need_place), ans);

return ans;

}

0

}

pub fn min_camera_cover(root: Option<Rc<RefCell<TreeNode>>>) -> i32 {

let mut cache = HashMap::new();

Solution::dp(&root, 0, false, false, &mut cache)

}

}

边栏推荐

- Hudi 数据管理和存储概述

- LeetCode 535. Encryption and decryption of tinyurl

- Flink学习笔记(八)多流转换

- Beego learning - Tencent cloud upload pictures

- 【点云处理之论文狂读经典版7】—— Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs

- LeetCode 871. Minimum refueling times

- [solution to the new version of Flink without bat startup file]

- Database execution error: SQL_ mode only_ full_ group_ by:

- Jenkins learning (II) -- setting up Chinese

- 【Kotlin疑惑】在Kotlin类中重载一个算术运算符,并把该运算符声明为扩展函数会发生什么?

猜你喜欢

Django operates Excel files through openpyxl to import data into the database in batches.

![[kotlin learning] classes, objects and interfaces - classes with non default construction methods or attributes, data classes and class delegates, object keywords](/img/ee/d982fd9e1f2283e09ad1a81d0b61b5.png)

[kotlin learning] classes, objects and interfaces - classes with non default construction methods or attributes, data classes and class delegates, object keywords

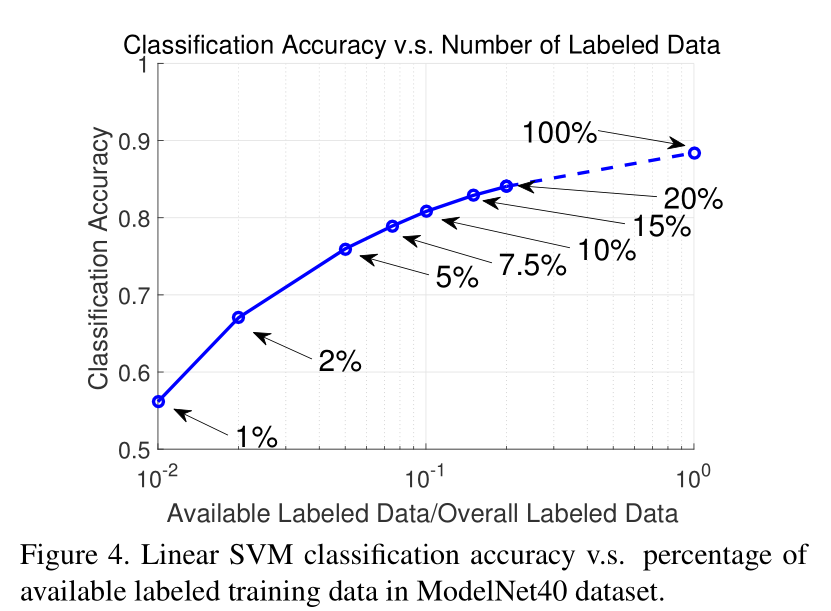

【点云处理之论文狂读经典版12】—— FoldingNet: Point Cloud Auto-encoder via Deep Grid Deformation

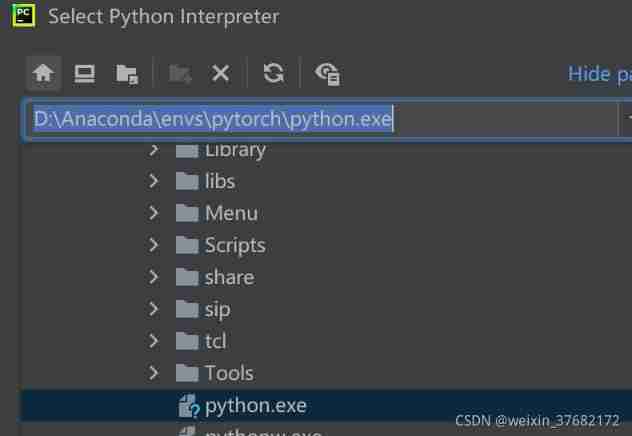

Move anaconda, pycharm and jupyter notebook to mobile hard disk

Hudi integrated spark data analysis example (including code flow and test results)

2022-2-14 learning xiangniuke project - Session Management

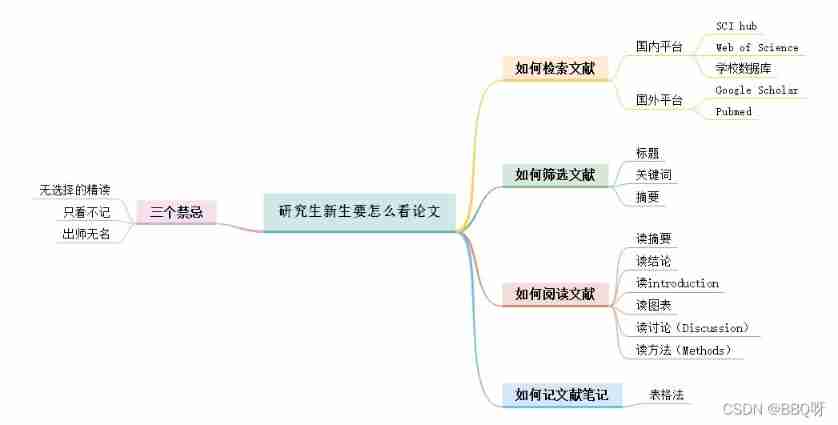

Just graduate student reading thesis

CATIA automation object architecture - detailed explanation of application objects (I) document/settingcontrollers

Alibaba cloud notes for the first time

State compression DP acwing 91 Shortest Hamilton path

随机推荐

npm install安装依赖包报错解决方法

What are the stages of traditional enterprise digital transformation?

Matlab dichotomy to find the optimal solution

【点云处理之论文狂读经典版8】—— O-CNN: Octree-based Convolutional Neural Networks for 3D Shape Analysis

2022-2-13 learning the imitation Niuke project - home page of the development community

Crawler career from scratch (I): crawl the photos of my little sister ① (the website has been disabled)

Use the interface colmap interface of openmvs to generate the pose file required by openmvs mvs

Win10 quick screenshot

Overview of image restoration methods -- paper notes

Spark 集群安装与部署

CATIA automation object architecture - detailed explanation of application objects (III) systemservice

The less successful implementation and lessons of RESNET

Spark 结构化流写入Hudi 实践

Crawler career from scratch (II): crawl the photos of my little sister ② (the website has been disabled)

图像修复方法研究综述----论文笔记

Hudi 快速体验使用(含操作详细步骤及截图)

Modify idea code

[point cloud processing paper crazy reading frontier version 10] - mvtn: multi view transformation network for 3D shape recognition

[point cloud processing paper crazy reading classic version 9] - pointwise revolutionary neural networks

Build a solo blog from scratch