
Spark cluster installation and deployment

2022-07-03 09:25:00 【Did Xiao Hu get stronger today】


Spark Cluster installation deployment

Cluster planning:

The three hosts are named hadoop102, hadoop103, and hadoop104. The cluster is planned as follows:

| hadoop102 | hadoop103 | hadoop104 |
|---|---|---|
| Master+Worker | Worker | Worker |

Upload and extract

Spark download address:

https://spark.apache.org/downloads.html

Upload the spark-3.0.0-bin-hadoop3.2.tgz file to the Linux host and extract it to the target location

tar -zxvf spark-3.0.0-bin-hadoop3.2.tgz -C /opt/module

cd /opt/module

mv spark-3.0.0-bin-hadoop3.2 spark

Modify the configuration file

Go to the conf directory under the extracted path and rename slaves.template to slaves

mv slaves.template slaves

Edit the slaves file and add the worker nodes

hadoop102
hadoop103
hadoop104

Rename spark-env.sh.template to spark-env.sh

mv spark-env.sh.template spark-env.sh

Edit spark-env.sh and add the JAVA_HOME environment variable and the cluster's master node settings

export JAVA_HOME=/opt/module/jdk1.8.0_144  # change this to your own JDK path
SPARK_MASTER_HOST=hadoop102
SPARK_MASTER_PORT=7077

Distribute the spark directory

xsync spark

Note: xsync and passwordless SSH login must be set up in advance. If they are not configured, see: https://blog.csdn.net/hshudoudou/article/details/123101151?spm=1001.2014.3001.5501
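If xsync is not yet available, the distribution step can be approximated with plain rsync over SSH. The following is a minimal sketch, not the xsync script itself: the function name `distribute_dir` is made up for illustration, and it assumes that rsync is installed on every host, that the parent directory (here /opt/module) already exists on the remote side, and that passwordless SSH to the other nodes already works.

```shell
# Hypothetical helper (not part of Spark or xsync): copy a directory to the
# same path on each listed host using rsync over SSH.
distribute_dir() {
  dir=$1
  shift
  for host in "$@"; do
    echo "Syncing $dir to $host"
    # -a preserves permissions and timestamps; the trailing slashes copy the
    # directory contents into the same directory on the remote host.
    rsync -a "${dir}/" "${host}:${dir}/"
  done
}

# Usage, run on hadoop102:
#   distribute_dir /opt/module/spark hadoop103 hadoop104
```

Because the target path is the same as the source path, every node ends up with Spark under /opt/module/spark, which is what the later steps rely on.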

Start cluster

Run the start script:

sbin/start-all.sh

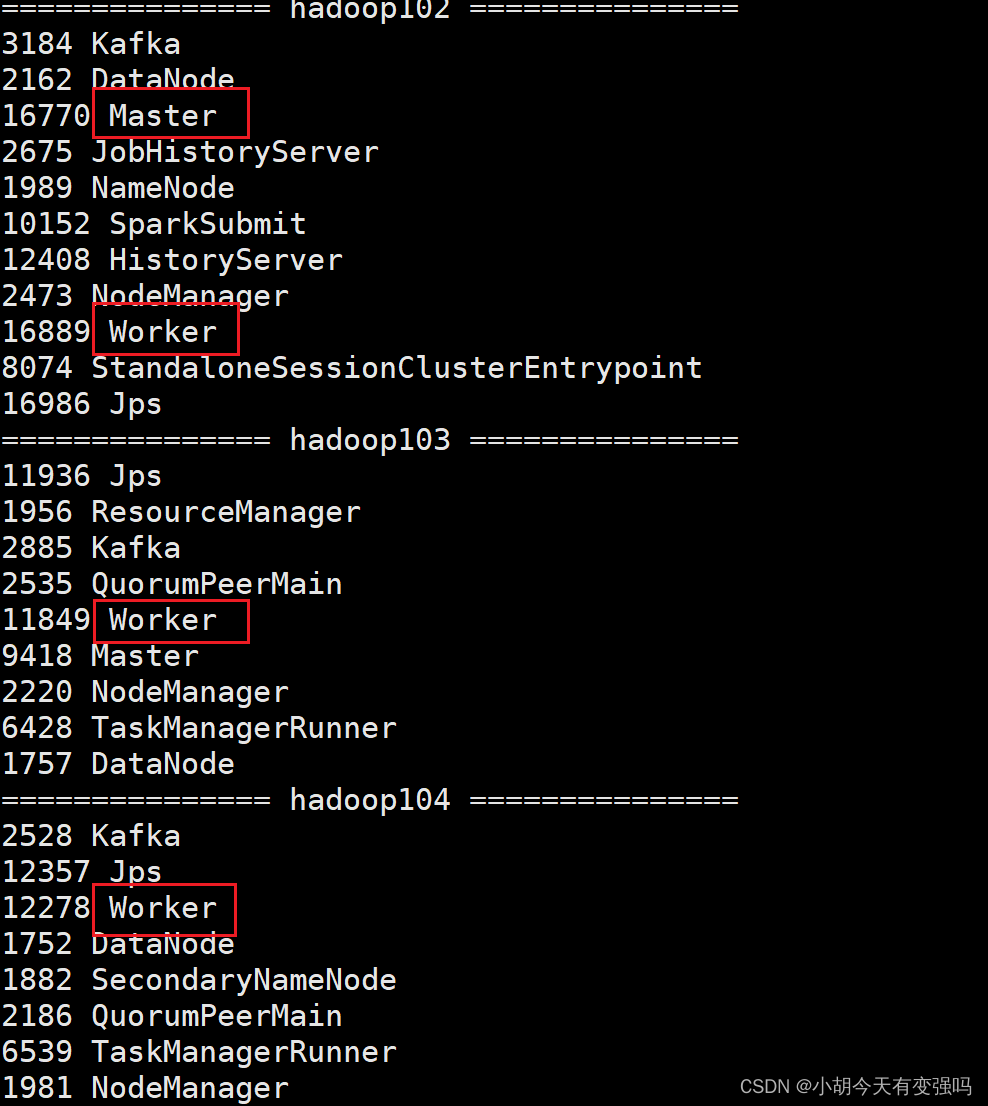

- Check the running processes on the three servers

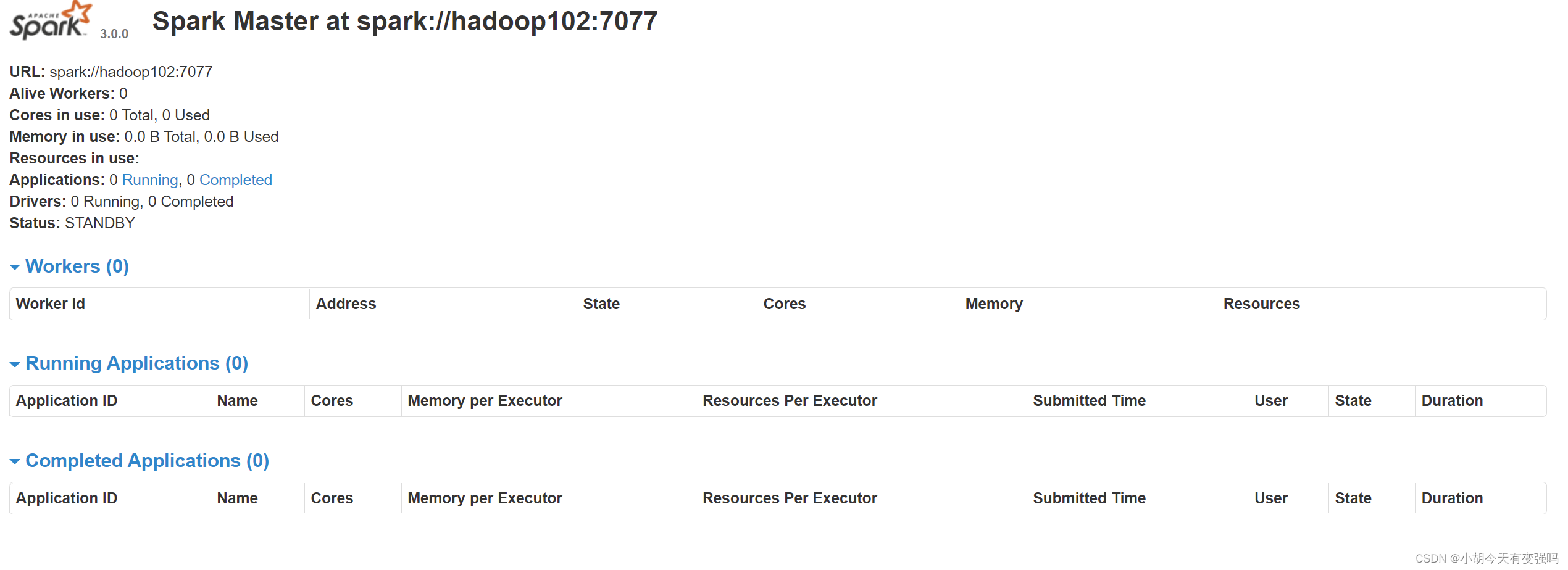

- View the Master resource monitoring Web UI: http://hadoop102:8080
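One way to check the processes on all three servers from a single terminal is to run `jps` (the JDK's Java process listing tool) on each host over SSH. This is only a sketch: `check_cluster` is an illustrative name, and it assumes passwordless SSH is configured and that `jps` is on the PATH of non-interactive SSH sessions. With the plan above, hadoop102 should list both a Master and a Worker process, and the other two hosts one Worker each.

```shell
# Hypothetical helper: list the Java processes on each host in turn.
check_cluster() {
  for host in "$@"; do
    echo "===== $host ====="
    ssh "$host" jps
  done
}

# Usage:
#   check_cluster hadoop102 hadoop103 hadoop104
```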

Submit an application

bin/spark-submit \
--class org.apache.spark.examples.SparkPi \
--master spark://hadoop102:7077 \
./examples/jars/spark-examples_2.12-3.0.0.jar \
10

--class specifies the main class of the program to run

--master spark://hadoop102:7077 selects standalone deployment mode and connects to the Spark cluster

spark-examples_2.12-3.0.0.jar is the jar package that contains the class to run

The number 10 is the program's input argument; here it sets the number of tasks for the application

Submit parameter description: when submitting an application, some additional parameters are usually passed as well

| Parameter | Description | Example values |
|---|---|---|
| --class | The class in the Spark program that contains the main function | |
| --master | The mode (environment) in which the Spark program runs | local[*], spark://linux1:7077, yarn |
| --executor-memory 1G | Sets the memory available to each executor to 1G | Adjust to suit your environment |
| --total-executor-cores 2 | Sets the total number of CPU cores used by all executors to 2 | |
| --executor-cores | Sets the number of CPU cores used by each executor | |
| application-jar | The packaged application jar, including its dependencies | |
| application-arguments | Arguments passed to the main() method | |

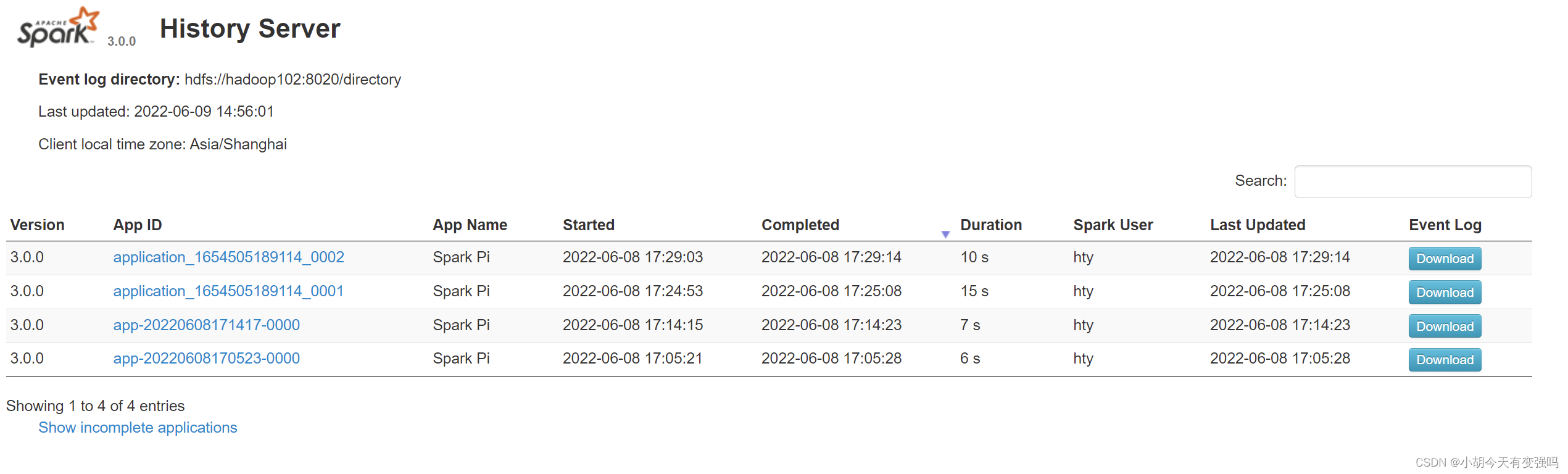
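To show how the resource parameters from the table combine with the basic command, here is a hedged sketch that submits the same SparkPi example with an explicit memory and core budget. The wrapper name `submit_pi` and the `SPARK_SUBMIT` variable are illustrative, and the values 1G and 2 are simply the ones used in the table, not tuning advice.

```shell
# Path to spark-submit; override SPARK_SUBMIT if Spark is installed elsewhere.
SPARK_SUBMIT=${SPARK_SUBMIT:-bin/spark-submit}

# Hypothetical wrapper: run SparkPi on the standalone cluster with resource limits.
# The first argument is passed to SparkPi (default 10).
submit_pi() {
  "$SPARK_SUBMIT" \
    --class org.apache.spark.examples.SparkPi \
    --master spark://hadoop102:7077 \
    --executor-memory 1G \
    --total-executor-cores 2 \
    ./examples/jars/spark-examples_2.12-3.0.0.jar \
    "${1:-10}"
}

# Usage, from /opt/module/spark:
#   submit_pi 10
```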

Configure the history server

Rename spark-defaults.conf.template to spark-defaults.conf

mv spark-defaults.conf.template spark-defaults.conf

Edit spark-defaults.conf and configure the event log storage path

spark.eventLog.enabled true
spark.eventLog.dir hdfs://hadoop102:8020/directory

The Hadoop cluster must be running, and the directory named directory must already exist on HDFS.

If it does not exist, create it:

sbin/start-dfs.sh

hadoop fs -mkdir /directory
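The check-then-create step can also be scripted so that it is safe to run more than once. The sketch below uses `hadoop fs -test -d`, which exits with 0 when the path exists and is a directory, and `hadoop fs -mkdir -p`; the function name `ensure_hdfs_dir` is illustrative, and the path to pass is whatever directory spark.eventLog.dir points to.

```shell
# Hypothetical helper: create an HDFS directory only if it is missing.
ensure_hdfs_dir() {
  dir=$1
  if hadoop fs -test -d "$dir"; then
    echo "$dir already exists"
  else
    hadoop fs -mkdir -p "$dir"
    echo "created $dir"
  fi
}

# Usage (HDFS must already be running):
#   ensure_hdfs_dir /directory
```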

Edit spark-env.sh and add the history log configuration

export SPARK_HISTORY_OPTS=" -Dspark.history.ui.port=18080 -Dspark.history.fs.logDirectory=hdfs://hadoop102:8020/directory -Dspark.history.retainedApplications=30"

Distribute the configuration files

xsync conf

Restart the cluster and start the history service

sbin/start-all.sh

sbin/start-history-server.sh

Run the task again

bin/spark-submit \
--class org.apache.spark.examples.SparkPi \
--master spark://hadoop102:7077 \
./examples/jars/spark-examples_2.12-3.0.0.jar \
10

View the history service: http://hadoop102:18080

Configure high availability (HA)

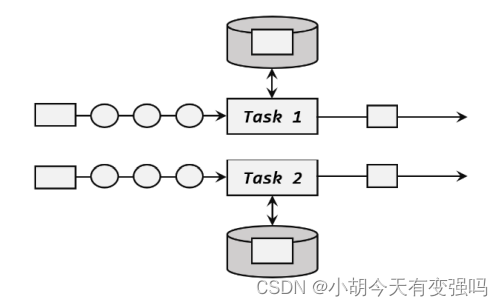

High availability is needed because the cluster has only one Master node, which makes it a single point of failure. To solve this, multiple Master nodes are configured in the cluster: if the active Master fails, a standby Master takes over and jobs can continue to run. High availability here is generally set up with Zookeeper.

Cluster planning:

| hadoop102 | hadoop103 | hadoop104 |
|---|---|---|
| Master Zookeeper Worker | Master Zookeeper Worker | Zookeeper Worker |

Stop the cluster

sbin/stop-all.sh

Start Zookeeper

zk.sh start

This starts Zookeeper with a custom script. If you do not have such a script, you can use the following command in the zookeeper directory:

xstart zk

Edit spark-env.sh and add the following configuration to the file

Comment out the following:

#SPARK_MASTER_HOST=hadoop102

#SPARK_MASTER_PORT=7077

Add the following:

# The Master monitoring page uses port 8080 by default, which may conflict with Zookeeper, so it is changed to 8989 here.
# You can choose another port; remember to use it when you open the monitoring UI.

SPARK_MASTER_WEBUI_PORT=8989

export SPARK_DAEMON_JAVA_OPTS=" -Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=hadoop102,hadoop103,hadoop104 -Dspark.deploy.zookeeper.dir=/spark"

Distribute the configuration files

xsync conf/
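With this configuration in place, a standby Master is typically started on the second Master host with Spark's `sbin/start-master.sh`, and applications list every Master in the `--master` URL, separated by commas, so that the client can find whichever one is currently active. The helper below only builds that URL. `ha_master_url` is an illustrative name, and using hadoop102 and hadoop103 follows the planning table above.

```shell
# Hypothetical helper: build a multi-Master URL such as
# spark://host1:port,host2:port from a port and a list of hosts.
ha_master_url() {
  port=$1
  shift
  url="spark://"
  sep=""
  for host in "$@"; do
    url="${url}${sep}${host}:${port}"
    sep=","
  done
  echo "$url"
}

# Usage sketch:
#   sbin/start-all.sh                                      # on hadoop102
#   ssh hadoop103 /opt/module/spark/sbin/start-master.sh   # standby Master
#   bin/spark-submit --master "$(ha_master_url 7077 hadoop102 hadoop103)" \
#     --class org.apache.spark.examples.SparkPi \
#     ./examples/jars/spark-examples_2.12-3.0.0.jar 10
```

For the two hosts above the helper prints `spark://hadoop102:7077,hadoop103:7077`.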

Yarn mode

The deployment described above is the standalone mode, in which Spark provides its own computing resources and needs no other framework to supply them. This reduces coupling with third-party resource frameworks and keeps Spark highly independent. However, Spark is primarily a computing framework rather than a resource scheduling framework, so resource scheduling is not its strength, and Yarn mode is used more often in practice.

- Edit the Hadoop configuration file /opt/module/hadoop/etc/hadoop/yarn-site.xml and distribute it

<!-- Whether to start a thread that checks how much physical memory each task is using; a task that exceeds its allocation is killed. Default is true. -->
<property>
  <name>yarn.nodemanager.pmem-check-enabled</name>
  <value>false</value>
</property>

<!-- Whether to start a thread that checks how much virtual memory each task is using; a task that exceeds its allocation is killed. Default is true. -->
<property>
  <name>yarn.nodemanager.vmem-check-enabled</name>
  <value>false</value>
</property>

Edit conf/spark-env.sh and add the JAVA_HOME and YARN_CONF_DIR settings

export JAVA_HOME=/opt/module/jdk1.8.0_144  # replace with your own JDK directory
YARN_CONF_DIR=/opt/module/hadoop/etc/hadoop  # replace with the configuration directory of your own Hadoop installation

- Start the HDFS and YARN clusters
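Once HDFS and YARN are running, an application is submitted with `--master yarn` instead of a `spark://` URL. The sketch below reuses the SparkPi example from the standalone section. The wrapper name `submit_pi_on_yarn` and the `SPARK_SUBMIT` variable are illustrative, and `--deploy-mode` accepts `client` (driver runs where the command is issued) or `cluster` (driver runs inside YARN).

```shell
# Path to spark-submit; override SPARK_SUBMIT if Spark is installed elsewhere.
SPARK_SUBMIT=${SPARK_SUBMIT:-bin/spark-submit}

# Hypothetical wrapper: run SparkPi on YARN.
# The first argument selects the deploy mode (default: client).
submit_pi_on_yarn() {
  "$SPARK_SUBMIT" \
    --class org.apache.spark.examples.SparkPi \
    --master yarn \
    --deploy-mode "${1:-client}" \
    ./examples/jars/spark-examples_2.12-3.0.0.jar \
    10
}

# Usage, from /opt/module/spark:
#   submit_pi_on_yarn client
#   submit_pi_on_yarn cluster
```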

边栏推荐

- Jenkins learning (II) -- setting up Chinese

- Django operates Excel files through openpyxl to import data into the database in batches.

- 2022-1-6 Niuke net brush sword finger offer

- WARNING: You are using pip version 21.3.1; however, version 22.0.3 is available. Prompt to upgrade pip

- [point cloud processing paper crazy reading classic version 7] - dynamic edge conditioned filters in revolutionary neural networks on Graphs

- Basic knowledge of database design

- Vs2019 configuration opencv3 detailed graphic tutorial and implementation of test code

- [point cloud processing paper crazy reading cutting-edge version 12] - adaptive graph revolution for point cloud analysis

- Windows安装Redis详细步骤

- PowerDesigner does not display table fields, only displays table names and references, which can be modified synchronously

猜你喜欢

What are the stages of traditional enterprise digital transformation?

Vs2019 configuration opencv3 detailed graphic tutorial and implementation of test code

LeetCode 57. Insert interval

MySQL installation and configuration (command line version)

AcWing 785. Quick sort (template)

2022-2-13 learning the imitation Niuke project - home page of the development community

![[point cloud processing paper crazy reading classic version 8] - o-cnn: octree based revolutionary neural networks for 3D shape analysis](/img/fa/36d28b754a9f380bfd86d4562268c3.png)

[point cloud processing paper crazy reading classic version 8] - o-cnn: octree based revolutionary neural networks for 3D shape analysis

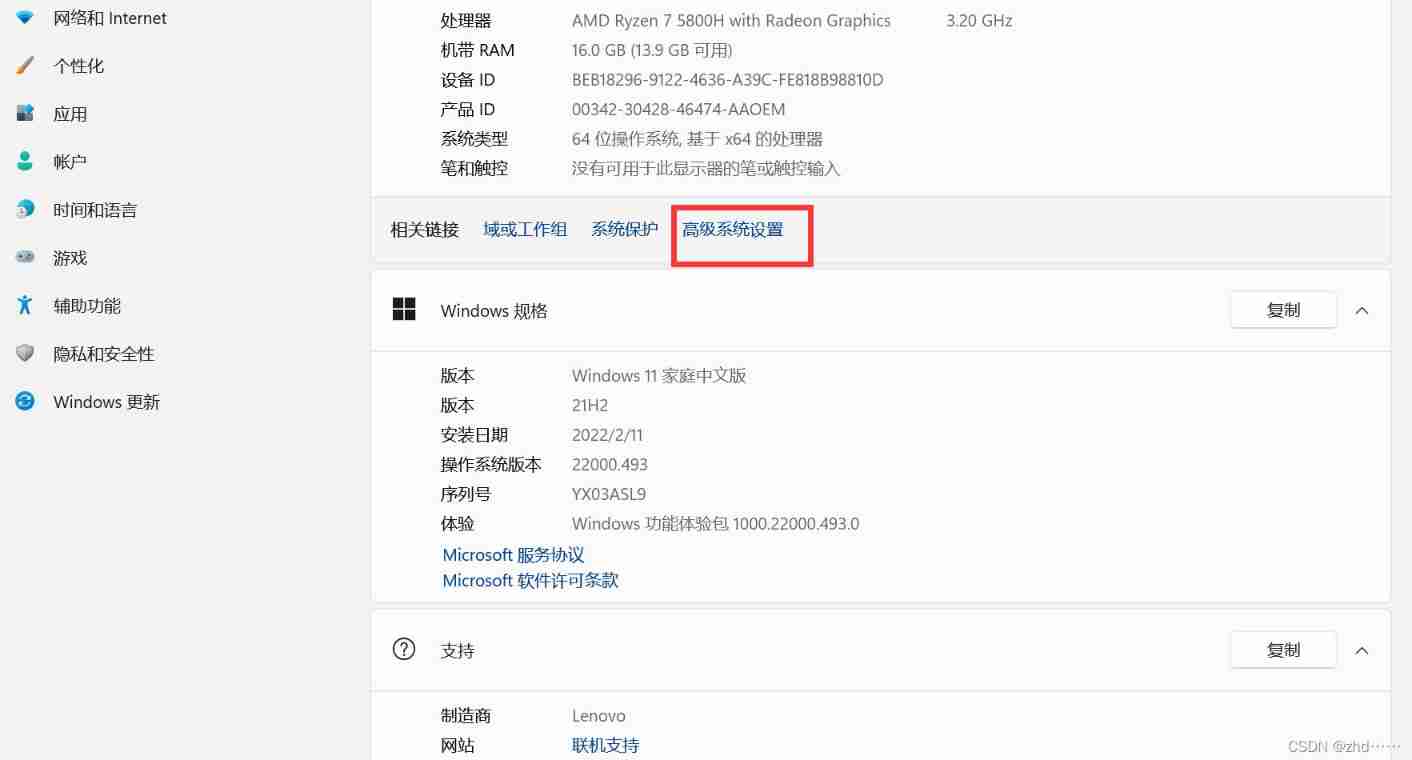

How to check whether the disk is in guid format (GPT) or MBR format? Judge whether UEFI mode starts or legacy mode starts?

Flink学习笔记(九)状态编程

AcWing 786. Number k

随机推荐

MySQL installation and configuration (command line version)

Basic knowledge of network security

Hudi 快速体验使用(含操作详细步骤及截图)

【Kotlin学习】类、对象和接口——定义类继承结构

【点云处理之论文狂读前沿版10】—— MVTN: Multi-View Transformation Network for 3D Shape Recognition

Notes on numerical analysis (II): numerical solution of linear equations

【点云处理之论文狂读前沿版12】—— Adaptive Graph Convolution for Point Cloud Analysis

Spark 集群安装与部署

Basic knowledge of database design

Crawler career from scratch (3): crawl the photos of my little sister ③ (the website has been disabled)

Sword finger offer II 029 Sorted circular linked list

NPM install installation dependency package error reporting solution

Flink-CDC实践(含实操步骤与截图)

AcWing 786. Number k

LeetCode 438. Find all letter ectopic words in the string

Hudi learning notes (III) analysis of core concepts

Hudi integrated spark data analysis example (including code flow and test results)

LeetCode 241. Design priorities for operational expressions

LeetCode每日一题(1024. Video Stitching)

Explanation of the answers to the three questions