ncnn + int8 + yolov4: model quantization and real-time inference

2022-07-08 02:18:00 【pogg_】

Note: This article is reposted from https://zhuanlan.zhihu.com/p/372278785. The author is pengtougu, a second-year master's student in computer science.

1. Preface

On May 7, 2021, Tencent Youtu Lab officially released a new version of ncnn. The contribution of this release is beyond doubt: it is another big push for inference on arm devices. First, here are the optimization points of the new ncnn, excerpted from nihui's blog:

Continued excellent interface stability and compatibility

- The API is completely unchanged

- The quantization calibration table is completely unchanged

- The int8 model quantization workflow is completely unchanged (this is the key point! I have never been fond of tensorflow, largely because every tensorflow update used to break the previous version's interfaces. This may have improved a lot since 2.0, but I still mostly train with torch)

New features of the ncnn int8 quantization tool (ncnn2table)

- Supports three quantization strategies: kl, aciq and easyquant

- Supports quantization of multi-input models

- Supports quantization of models with RGB/RGBA/BGR/BGRA/GRAY input

- Greatly improved multithreading efficiency

- Offline fusion of (dequantize - activation - quantize) into (requantize), enabling end-to-end quantized inference
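
To make the last bullet concrete, here is a minimal C++ sketch of what fusing dequantize, activation and quantize into a single requantize step means arithmetically. It is not ncnn's implementation: the function names, the choice of ReLU as the activation, and the rounding and saturation details are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Round to nearest and clamp into the symmetric int8 range [-127, 127].
inline int8_t saturate_int8(float v)
{
    const long r = std::lround(v);
    return static_cast<int8_t>(std::max(-127L, std::min(127L, r)));
}

// Unfused path: int32 accumulator -> float (dequantize) -> ReLU -> int8 (quantize).
inline int8_t dequant_relu_quant(int32_t acc, float dequant_scale, float quant_scale)
{
    assert(dequant_scale > 0.0f && quant_scale > 0.0f);
    float f = static_cast<float>(acc) * dequant_scale; // dequantize
    f = std::max(f, 0.0f);                             // activation (ReLU)
    return saturate_int8(f * quant_scale);             // quantize for the next layer
}

// Fused path: a single multiply by a scale precomputed offline,
// with no float activation stored in between.
inline int8_t requantize_relu(int32_t acc, float requant_scale)
{
    assert(requant_scale > 0.0f);
    // ReLU can be applied to the integer directly because the scale is positive.
    const int32_t a = std::max<int32_t>(acc, 0);
    return saturate_int8(static_cast<float>(a) * requant_scale);
}
```

With illustrative scales `dequant_scale = 1/64` and `quant_scale = 8`, the fused scale is `0.125`, and both `dequant_relu_quant(100, 1.0f / 64.0f, 8.0f)` and `requantize_relu(100, 0.125f)` return 13. Powers of two are used here so that both paths are bit-identical in floating point; with arbitrary scales the two paths can differ by one step at exact rounding boundaries.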

See nihui's blog for more details:

https://zhuanlan.zhihu.com/p/370689914

2. Exploring int8 quantization in the new ncnn

Striking while the iron is hot, let's try int8 quantization in the new ncnn (the more important reason is that my mid-term defense is at the end of this month and my graduation project is not finished yet, so I am leaning on the experts' library and learning along the way)

2.1 Installing and compiling ncnn

Without further ado: before using the library, install and compile the required environment. The installation and compilation process is covered in another blog post of mine:

https://zhuanlan.zhihu.com/p/368653551

2.2 Quantizing yolov4-tiny to int8

- Before quantizing, don't rush. First check the ncnn wiki to see what needs to be done beforehand:

https://github.com/Tencent/ncnn/wiki/quantized-int8-inference

From the wiki: to support deploying int8 models on mobile devices, ncnn provides a universal post-training quantization tool that converts a float32 model to an int8 model.
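
As a rough picture of what "converting float32 to int8" involves, the sketch below quantizes a float vector symmetrically and maps it back. It is an illustration, not the tool's code: the function names are hypothetical, and the threshold is simply the largest absolute value, whereas calibration methods such as kl choose a threshold from activation statistics.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Scale that maps [-threshold, threshold] onto [-127, 127].
// Assumption for illustration: threshold = largest absolute value.
inline float absmax_scale(const std::vector<float>& values)
{
    float absmax = 0.0f;
    for (float v : values)
        absmax = std::max(absmax, std::fabs(v));
    assert(absmax > 0.0f); // an all-zero tensor has no meaningful scale
    return 127.0f / absmax;
}

// float32 -> int8: multiply by the scale, round, and saturate.
inline std::vector<int8_t> quantize(const std::vector<float>& values, float scale)
{
    std::vector<int8_t> out;
    out.reserve(values.size());
    for (float v : values)
    {
        const long r = std::lround(v * scale);
        out.push_back(static_cast<int8_t>(std::max(-127L, std::min(127L, r))));
    }
    return out;
}

// int8 -> float32: divide by the same scale to get an approximation back.
inline float dequantize(int8_t q, float scale)
{
    return static_cast<float>(q) / scale;
}
```

For the input `{-2.0f, -0.5f, 0.0f, 1.0f, 2.0f}` the scale is 63.5 and the quantized values are -127, -32, 0, 64 and 127. Dequantizing 64 gives about 1.008 instead of 1.0, which is the kind of small rounding error that quantization trades for a smaller model and faster integer arithmetic.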

In other words, before quantizing we need the two model files yolov4-tiny.bin and yolov4-tiny.param. Because I wanted to test the performance of the int8 version quickly, I will not write out the steps for converting yolov4-tiny.weights to yolov4-tiny.bin and yolov4-tiny.param. Let's just grab the two opt files for free from the model zoo:

https://github.com/nihui/ncnn-assets/tree/master/models

- Next, use the compiled ncnn tools to optimize the two model files:

./ncnnoptimize yolov4-tiny.param yolov4-tiny.bin yolov4-tiny-opt.param yolov4-tiny-opt.bin 0

If you downloaded the two opt files directly from the model zoo, you can skip this step.

- Download the calibration images

First download the official set of 1000 ImageNet images. Many readers do not have a VPN and the download is slow, so you can use this link instead:

https://download.csdn.net/download/weixin_45829462/18704213

It is a free download for everyone. If the site later changes the required download points, there is nothing I can do about it (nice-guy smile.jpg)

- Make the calibration table file

On linux, switch to the directory that contains the images folder, then run:

find images/ -type f > imagelist.txt

On windows, open Git Bash (install it yourself if you do not have it; this tool is really handy), switch to the directory that contains the images folder, and run the same command as above.

This generates the required imagelist.txt, which lists one image path per line.
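
If neither find nor Git Bash is at hand, the same list can be produced with a few lines of C++17. This is a sketch under assumptions: the helper names are hypothetical, it relies on std::filesystem, and it sorts the paths for reproducibility, which find itself does not do.

```cpp
#include <algorithm>
#include <cassert>
#include <filesystem>
#include <fstream>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Collect every regular file under image_dir (recursively),
// similar to `find images/ -type f`.
inline std::vector<std::string> list_image_files(const fs::path& image_dir)
{
    assert(fs::is_directory(image_dir));
    std::vector<std::string> paths;
    for (const fs::directory_entry& entry : fs::recursive_directory_iterator(image_dir))
    {
        if (entry.is_regular_file())
            paths.push_back(entry.path().generic_string()); // forward slashes on every platform
    }
    std::sort(paths.begin(), paths.end());
    return paths;
}

// Write one path per line, the layout used for imagelist.txt.
inline bool write_image_list(const std::vector<std::string>& paths, const fs::path& list_file)
{
    std::ofstream out(list_file);
    if (!out)
        return false;
    for (const std::string& p : paths)
        out << p << '\n';
    return static_cast<bool>(out);
}
```

Calling `write_image_list(list_image_files("images"), "imagelist.txt")` from the directory that contains the images folder writes paths of the form `images/<file name>`, one per line.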

Then run the following command:

./ncnn2table yolov4-tiny-opt.param yolov4-tiny-opt.bin imagelist.txt yolov4-tiny.table mean=[104,117,123] norm=[0.017,0.017,0.017] shape=[224,224,3] pixel=BGR thread=8 method=kl

The parameters above have the following meanings:

- mean and norm are the values you pass to Mat::substract_mean_normalize()

- shape is the blob shape of the model

- pixel is the pixel format of the model; image pixels are converted to this type before Extractor::input()

- thread is the number of CPU threads that can be used for parallel inference (set this according to the performance of your computer or board)

- method is the post-training quantization algorithm; kl and aciq are currently supported
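
To show how mean and norm act on the calibration images, here is a small standalone sketch of the per-channel formula out = (pixel - mean) * norm. It is not ncnn code: the function is hypothetical and works on interleaved 3-channel bytes for simplicity, which is an assumption about layout made only for this example.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Per-channel preprocessing in the same form as mean/norm above:
// out = (pixel - mean[c]) * norm[c], on interleaved 3-channel data (e.g. BGRBGR...).
inline std::vector<float> subtract_mean_normalize(const std::vector<unsigned char>& pixels,
                                                  const std::array<float, 3>& mean,
                                                  const std::array<float, 3>& norm)
{
    assert(pixels.size() % 3 == 0);
    std::vector<float> out(pixels.size());
    for (std::size_t i = 0; i < pixels.size(); ++i)
    {
        const std::size_t c = i % 3; // channel index within the pixel
        out[i] = (static_cast<float>(pixels[i]) - mean[c]) * norm[c];
    }
    return out;
}
```

With mean=[104,117,123] and norm=[0.017,0.017,0.017], a BGR pixel (104, 117, 123) maps to zeros and (204, 217, 223) maps to roughly 1.7 in every channel. The values given to ncnn2table should match the preprocessing used at inference time, otherwise the calibration statistics are collected on differently scaled inputs.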

- Quantize the model

./ncnn2int8 yolov4-tiny-opt.param yolov4-tiny-opt.bin yolov4-tiny-int8.param yolov4-tiny-int8.bin yolov4-tiny.table

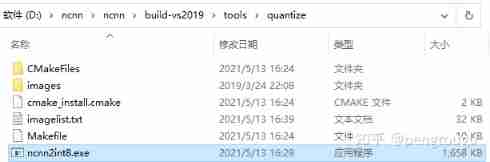

All the quantization tools are in the ncnn\build-vs2019\tools\quantize folder.

If you cannot find them, check whether something went wrong during compilation; a normal build produces these quantization tools.

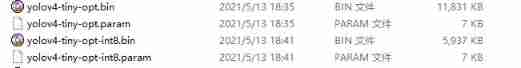

After a successful run, two int8 files are generated: yolov4-tiny-int8.param and yolov4-tiny-int8.bin.

Compared with the original two opt model files, they are about half the size!

3. A second look at int8 quantization in the new ncnn

Producing the int8 model is only half the job; the model files exist, but their internal parameters could still be completely scrambled...

- Run inference with the int8 model

Open vs2019 and create a new project. I described the configuration steps in detail in my previous blog post, so here is the link again:

https://zhuanlan.zhihu.com/p/368653551

Copy the yolov4.cpp code straight from the ncnn\examples folder (in a word: freeloading!)

But I ran into a problem here: I could not figure out what the main function was doing, and after reviewing my course material last night it had gotten late...

    int main(int argc, char** argv)
    {
        cv::Mat frame;
        std::vector<Object> objects;
        cv::VideoCapture cap;
        ncnn::Net yolov4;
        const char* devicepath;
        int target_size = 0;
        int is_streaming = 0;

        if (argc < 2)
        {
            fprintf(stderr, "Usage: %s [v4l input device or image]\n", argv[0]);
            return -1;
        }

        devicepath = argv[1];

    #ifdef NCNN_PROFILING
        double t_load_start = ncnn::get_current_time();
    #endif

        int ret = init_yolov4(&yolov4, &target_size); //We load model and param first!
        if (ret != 0)
        {
            fprintf(stderr, "Failed to load model or param, error %d", ret);
            return -1;
        }

    #ifdef NCNN_PROFILING
        double t_load_end = ncnn::get_current_time();
        fprintf(stdout, "NCNN Init time %.02lfms\n", t_load_end - t_load_start);
    #endif

        if (strstr(devicepath, "/dev/video") == NULL)
        {
            frame = cv::imread(argv[1], 1);
            if (frame.empty())
            {
                fprintf(stderr, "Failed to read image %s.\n", argv[1]);
                return -1;
            }
        }
        else
        {
            cap.open(devicepath);
            if (!cap.isOpened())
            {
                fprintf(stderr, "Failed to open %s", devicepath);
                return -1;
            }

            cap >> frame;
            if (frame.empty())
            {
                fprintf(stderr, "Failed to read from device %s.\n", devicepath);
                return -1;
            }

            is_streaming = 1;
        }

        while (1)
        {
            if (is_streaming)
            {
    #ifdef NCNN_PROFILING
                double t_capture_start = ncnn::get_current_time();
    #endif

                cap >> frame;

    #ifdef NCNN_PROFILING
                double t_capture_end = ncnn::get_current_time();
                fprintf(stdout, "NCNN OpenCV capture time %.02lfms\n", t_capture_end - t_capture_start);
    #endif

                if (frame.empty())
                {
                    fprintf(stderr, "OpenCV Failed to Capture from device %s\n", devicepath);
                    return -1;
                }
            }

    #ifdef NCNN_PROFILING
            double t_detect_start = ncnn::get_current_time();
    #endif

            detect_yolov4(frame, objects, target_size, &yolov4); //Create an extractor and run detection

    #ifdef NCNN_PROFILING
            double t_detect_end = ncnn::get_current_time();
            fprintf(stdout, "NCNN detection time %.02lfms\n", t_detect_end - t_detect_start);
    #endif

    #ifdef NCNN_PROFILING
            double t_draw_start = ncnn::get_current_time();
    #endif

            draw_objects(frame, objects, is_streaming); //Draw detection results on opencv image

    #ifdef NCNN_PROFILING
            double t_draw_end = ncnn::get_current_time();
            fprintf(stdout, "NCNN OpenCV draw result time %.02lfms\n", t_draw_end - t_draw_start);
    #endif

            if (!is_streaming)
            {
                //If it is a still image, exit!
                return 0;
            }
        }

        return 0;
    }

Sure enough, an expert is an expert. The code is inscrutable to a novice like me. This is hard.

So the next day I stopped trying to read it and wrote a new main function myself that calls the functions the expert had written:

    // Note: GetTickCount() is a Windows API and requires <windows.h>.
    int main(int argc, char** argv)
    {
        cv::Mat frame;
        ncnn::Net yolov4;
        int target_size = 160;

        /* Leftover single-image test (disabled):
        const char* imagepath = "E:/ncnn/yolov5/person.jpg";
        cv::Mat m = cv::imread(imagepath, 1);
        if (m.empty())
        {
            fprintf(stderr, "cv::imread %s failed\n", imagepath);
            return -1;
        }
        double start = GetTickCount();
        std::vector<Object> objects;
        detect_yolov5(m, objects);
        double end = GetTickCount();
        fprintf(stderr, "cost time: %.5f\n ms", (end - start)/1000);
        draw_objects(m, objects);
        */

        int ret = init_yolov4(&yolov4, &target_size); // Load the model and param first
        if (ret != 0)
        {
            fprintf(stderr, "Failed to load model or param, error %d\n", ret);
            return -1;
        }

        cv::VideoCapture capture;
        capture.open(0); // Modify this index to select the camera you want to use
        if (!capture.isOpened())
        {
            fprintf(stderr, "Failed to open camera 0\n");
            return -1;
        }

        while (true)
        {
            capture >> frame;
            if (frame.empty())
                break; // No frame was delivered, so stop instead of running detection on empty data

            cv::Mat m = frame;

            double start = GetTickCount();
            std::vector<Object> objects;
            detect_yolov4(frame, objects, 160, &yolov4);
            double end = GetTickCount();
            fprintf(stderr, "cost time: %.5f ms \n", (end - start));

            // imshow(" External camera ", m); // Remember, imshow() needs a window name as its first parameter
            draw_objects(m, objects, 8);

            if (cv::waitKey(30) >= 0)
                break;
        }

        return 0;
    }

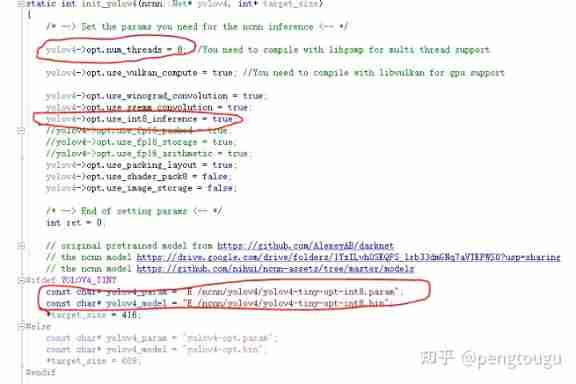
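
The loop above times each detection with GetTickCount(), which is Windows-only and, according to Microsoft's documentation, typically has a resolution in the range of 10 to 16 milliseconds. A portable alternative is std::chrono::steady_clock. The helper below is a sketch with a hypothetical name, not part of the original code.

```cpp
#include <cassert>
#include <chrono>
#include <utility>

// Run fn once and return the elapsed wall-clock time in milliseconds.
template <typename Fn>
double time_ms(Fn&& fn)
{
    const auto start = std::chrono::steady_clock::now();
    std::forward<Fn>(fn)();
    const auto end = std::chrono::steady_clock::now();
    const double ms = std::chrono::duration<double, std::milli>(end - start).count();
    assert(ms >= 0.0); // steady_clock is monotonic
    return ms;
}
```

Inside the capture loop, the measured section could then be written as `double ms = time_ms([&] { detect_yolov4(frame, objects, 160, &yolov4); });`, which keeps the timing code out of the way and works on both windows and linux.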

There are a few more points to note when running inference:

- Disable fp16; do not use it

- Switch to int8 inference

- Set the thread count to the one you used when generating the int8 model

- Replace the model files with the int8 ones as well

As follows:
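
A minimal sketch of these settings in code. The field names follow ncnn::Option as I understand it (use_fp16_packed, use_fp16_storage, use_fp16_arithmetic, use_int8_inference, num_threads); treat them as an assumption and check them against the ncnn headers you build with. The function name and the stand-in struct are hypothetical and exist only so the example compiles without ncnn.

```cpp
#include <cassert>

// Apply the changes listed above to any options object that exposes these fields.
template <typename Options>
void configure_int8_inference(Options& opt, int num_threads)
{
    assert(num_threads > 0);
    opt.use_fp16_packed = false;     // disable fp16
    opt.use_fp16_storage = false;
    opt.use_fp16_arithmetic = false;
    opt.use_int8_inference = true;   // switch to int8 inference
    opt.num_threads = num_threads;   // same thread count as used for ncnn2table
}

// Hypothetical stand-in so the sketch compiles without ncnn installed.
struct OptionsStandIn
{
    bool use_fp16_packed = true;
    bool use_fp16_storage = true;
    bool use_fp16_arithmetic = true;
    bool use_int8_inference = false;
    int num_threads = 1;
};
```

In a real project the call would presumably be `configure_int8_inference(yolov4->opt, 8);` inside init_yolov4, before loading yolov4-tiny-int8.param and yolov4-tiny-int8.bin in place of the original model files.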

At this point, you can happily run inference.

4. Summary

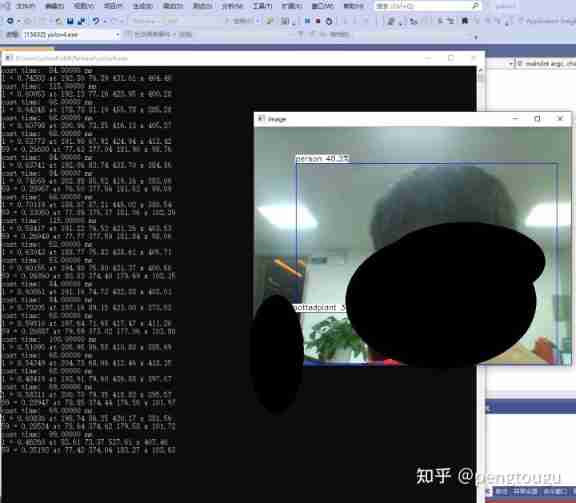

A word about my computer: a Hasee (Shenzhou) K650D-i5 laptop with an Intel Core i5-4210M processor. It is a fairly old machine; I bought it 6 years ago, and its performance has been declining.

The whole pipeline runs on the cpu. Why not the gpu? (Good question: with 2 GB of video memory, I'm afraid the computer would blow up.)

Compared with the previous fp16 model, the int8 model is clearly 40%-70% faster at the same input_size, with almost no loss in accuracy.

In conclusion, int8 quantized inference in the new ncnn is the real deal. I will try int8 inference on more models later and run comparative experiments to share with you.

All the files and the modified code are in this repository; feel free to grab them for free:

https://github.com/pengtougu/ncnn-yolov4-int8

Interested readers can git clone it and run it out of the box (provided ncnn is installed)~

边栏推荐

- Give some suggestions to friends who are just getting started or preparing to change careers as network engineers

- The generosity of a pot fish

- 关于TXE和TC标志位的小知识

- #797div3 A---C

- leetcode 865. Smallest Subtree with all the Deepest Nodes | 865. The smallest subtree with all the deepest nodes (BFs of the tree, parent reverse index map)

- Leetcode featured 200 -- linked list

- Random walk reasoning and learning in large-scale knowledge base

- The circuit is shown in the figure, r1=2k Ω, r2=2k Ω, r3=4k Ω, rf=4k Ω. Find the expression of the relationship between output and input.

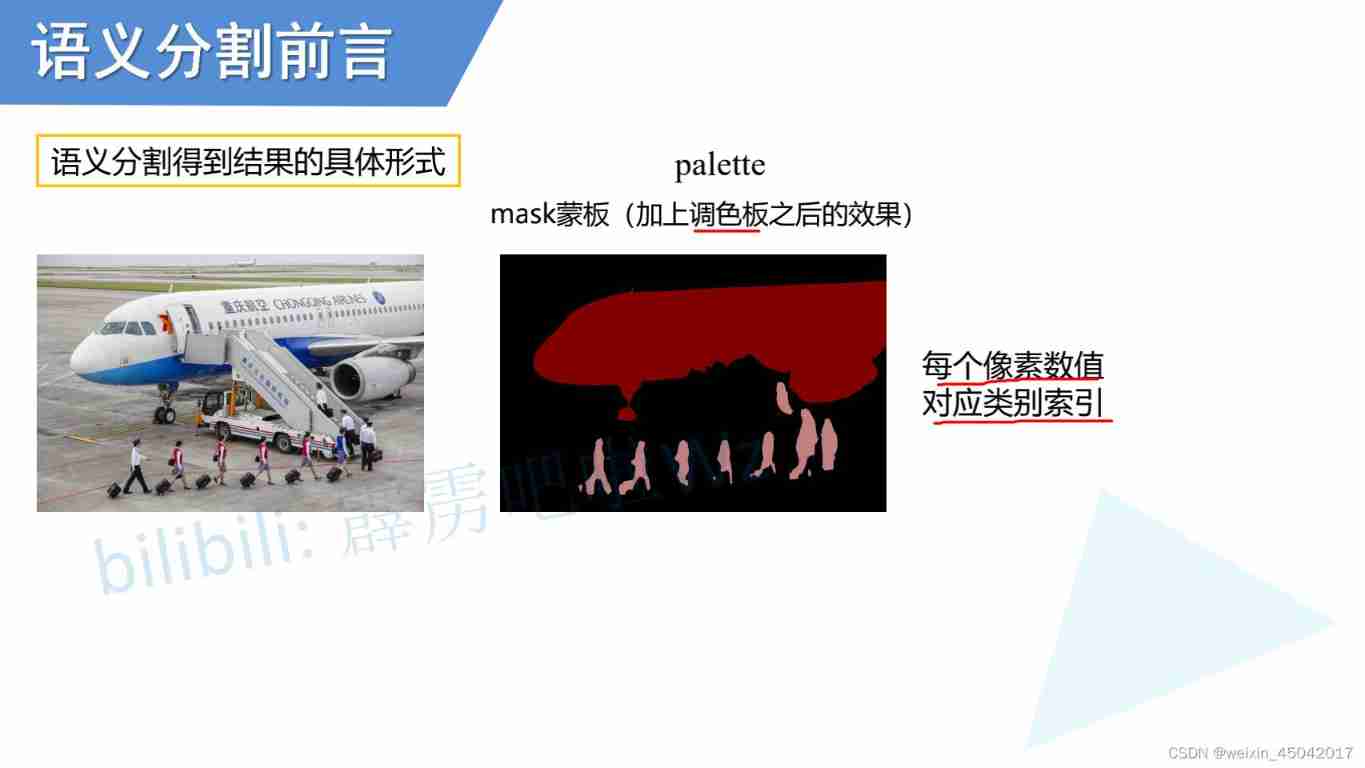

- Semantic segmentation | learning record (1) semantic segmentation Preface

- "Hands on learning in depth" Chapter 2 - preparatory knowledge_ 2.1 data operation_ Learning thinking and exercise answers

猜你喜欢

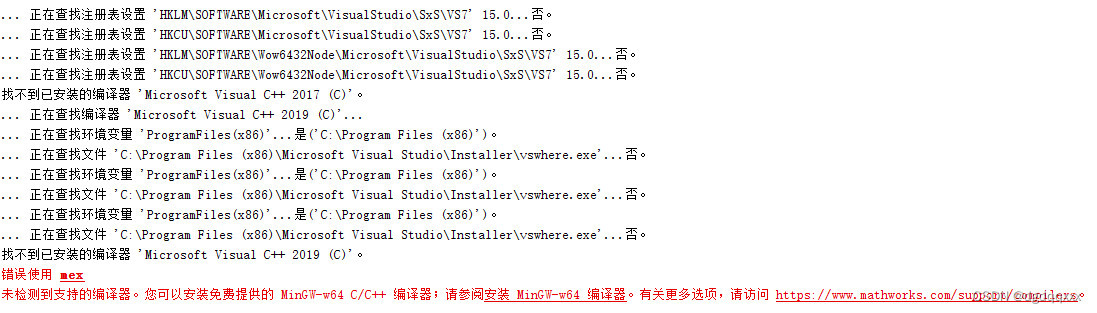

Matlab r2021b installing libsvm

Ml self realization / logistic regression / binary classification

Give some suggestions to friends who are just getting started or preparing to change careers as network engineers

![[knowledge map paper] attnpath: integrate the graph attention mechanism into knowledge graph reasoning based on deep reinforcement](/img/36/8aef4c9fcd9dd8a8227ac8ca57eb26.jpg)

[knowledge map paper] attnpath: integrate the graph attention mechanism into knowledge graph reasoning based on deep reinforcement

Semantic segmentation | learning record (1) semantic segmentation Preface

![[recommendation system paper reading] recommendation simulation user feedback based on Reinforcement Learning](/img/48/3366df75c397269574e9666fcd02ec.jpg)

[recommendation system paper reading] recommendation simulation user feedback based on Reinforcement Learning

![[knowledge map paper] r2d2: knowledge map reasoning based on debate dynamics](/img/e5/646ae977b8a0dc1b1ac2250602a2b9.jpg)

[knowledge map paper] r2d2: knowledge map reasoning based on debate dynamics

Nanny level tutorial: Azkaban executes jar package (with test samples and results)

A comprehensive and detailed explanation of static routing configuration, a quick start guide to static routing

![[knowledge map] interpretable recommendation based on knowledge map through deep reinforcement learning](/img/62/70741e5f289fcbd9a71d1aab189be1.jpg)

[knowledge map] interpretable recommendation based on knowledge map through deep reinforcement learning

随机推荐

文盘Rust -- 给程序加个日志

JVM memory and garbage collection -4-string

Unity 射线与碰撞范围检测【踩坑记录】

XXL job of distributed timed tasks

Little knowledge about TXE and TC flag bits

魚和蝦走的路

常见的磁盘格式以及它们之间的区别

What are the types of system tests? Let me introduce them to you

From starfish OS' continued deflationary consumption of SFO, the value of SFO in the long run

Gaussian filtering and bilateral filtering principle, matlab implementation and result comparison

需要思考的地方

nmap工具介绍及常用命令

[knowledge map paper] r2d2: knowledge map reasoning based on debate dynamics

Introduction à l'outil nmap et aux commandes communes

How to use diffusion models for interpolation—— Principle analysis and code practice

《通信软件开发与应用》课程结业报告

Vim 字符串替换

leetcode 866. Prime Palindrome | 866. prime palindromes

Semantic segmentation | learning record (4) expansion convolution (void convolution)

分布式定时任务之XXL-JOB