
Introduction to Neural Networks: Preliminaries (PyTorch)

2022-07-03 10:33:00 【-Plain heart to warm】

Preliminaries

Data manipulation

N-dimensional array examples

- The N-dimensional array is the main data structure of machine learning and neural networks.

- 0-d: scalar
- 1-d: vector
- 2-d: matrix
- 3-d: RGB image
- 4-d: a batch of RGB images
- 5-d: a batch of videos
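To make the list concrete, here is a minimal sketch that builds one zero-filled tensor per case and prints its number of dimensions (ndim) and shape. The concrete sizes and the channels-first layout (channels x height x width, with frames before channels for video) are assumptions chosen for illustration.

```python
import torch

scalar = torch.tensor(3.0)                    # 0-d: no axes
vector = torch.arange(4.0)                    # 1-d: shape (4,)
matrix = torch.zeros(3, 4)                    # 2-d: shape (3, 4)
image = torch.zeros(3, 32, 32)                # 3-d: channels x height x width (assumed layout)
image_batch = torch.zeros(8, 3, 32, 32)       # 4-d: a batch of 8 RGB images
video_batch = torch.zeros(2, 16, 3, 32, 32)   # 5-d: 2 videos of 16 frames each

tensors = [scalar, vector, matrix, image, image_batch, video_batch]
for t in tensors:
    # ndim is the number of axes; shape gives the length of each axis
    print(t.ndim, tuple(t.shape))
```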

Creating an array

Creating an array requires:

- The shape: for example, a 3×4 matrix

- The data type of each element: for example, 32-bit floating point

- The value of each element: for example, all zeros, or random numbers
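A minimal sketch of how the three requirements map onto PyTorch factory functions: the size tuple gives the shape, dtype gives the element type, and the choice of function (zeros versus randn) decides the values. The specific sizes are only examples.

```python
import torch

# Shape: 3 x 4; data type: 32-bit float; values: all zeros
a = torch.zeros((3, 4), dtype=torch.float32)

# Same shape and data type; values sampled from a standard normal distribution
b = torch.randn((3, 4), dtype=torch.float32)

print(a.shape, a.dtype)
print(b.shape, b.dtype)
```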

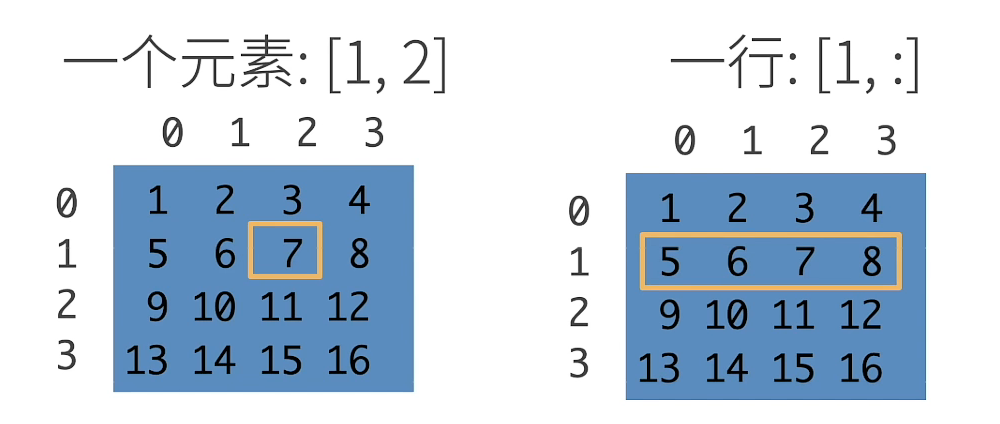

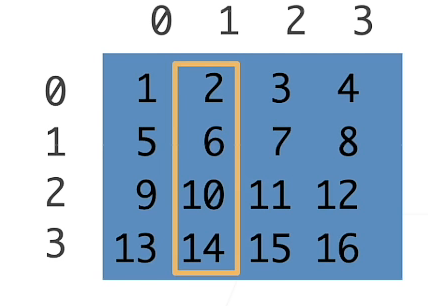

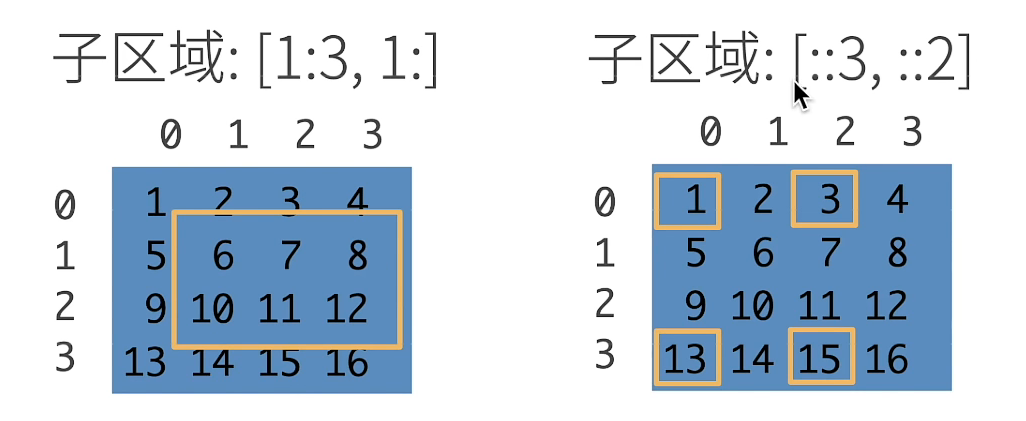

Accessing elements

A column: [:, 1]
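The column pattern above is one of several common indexing forms. The sketch below shows it alongside a single element, a row, a sub-region, and a strided selection; the 4×4 example matrix is an assumption made for illustration.

```python
import torch

X = torch.arange(16).reshape(4, 4)  # rows: 0-3, 4-7, 8-11, 12-15

element = X[1, 2]      # one element: row 1, column 2 (value 6)
row = X[1, :]          # one row: 4, 5, 6, 7
column = X[:, 1]       # one column: 1, 5, 9, 13
region = X[1:3, 1:]    # rows 1-2, columns 1 to the end
strided = X[::3, ::2]  # every 3rd row, every 2nd column

print(element, row, column, region, strided, sep="\n")
```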

Implementing data operations

First, we import torch. Note that although the library is called PyTorch, we import torch rather than pytorch.

import torch

A tensor represents an array of numerical values, which may have multiple dimensions. For example, arange(12) creates a row vector containing the integers 0 to 11:

x = torch.arange(12)

print(x)

tensor([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11])

We can access a tensor's shape (the length along each axis) through its shape attribute, and the total number of elements through numel().

x.shape

torch.Size([12])

x.numel()

12

To change the shape of a tensor without changing the number or values of its elements, we can call the reshape function.

X = x.reshape(3, 4)

X

tensor([[ 0, 1, 2, 3],

[ 4, 5, 6, 7],

[ 8, 9, 10, 11]])
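We do not have to spell out every dimension: passing -1 asks reshape to infer that dimension from the total number of elements. A minimal sketch:

```python
import torch

x = torch.arange(12)
A = x.reshape(-1, 4)  # 12 elements / 4 columns -> 3 rows inferred
B = x.reshape(3, -1)  # 12 elements / 3 rows -> 4 columns inferred

print(A.shape, B.shape)
print(torch.equal(A, B))
```

Both calls produce the same 3×4 tensor, so the -1 form mainly saves us from computing one size by hand.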

We can create tensors filled with all 0s, all 1s, other constants, or numbers randomly sampled from a particular distribution:

torch.zeros((2, 3, 4))

tensor([[[0., 0., 0., 0.],

[0., 0., 0., 0.],

[0., 0., 0., 0.]],

[[0., 0., 0., 0.],

[0., 0., 0., 0.],

[0., 0., 0., 0.]]])

torch.ones((2, 3, 4))

tensor([[[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.]],

[[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.]]])
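The zeros and ones cases are shown above; the sketch below covers the other two cases mentioned, a constant other than 0 or 1 and random sampling. The constant 7.0, the shapes, and the seed are arbitrary choices for illustration.

```python
import torch

torch.manual_seed(0)         # fix the seed so the random draws are reproducible

c = torch.full((2, 3), 7.0)  # every element is the constant 7.0
n = torch.randn(3, 4)        # standard normal distribution: mean 0, std 1
u = torch.rand(3, 4)         # uniform distribution on [0, 1)

print(c)
print(n.shape, u.shape)
```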

We can also specify the exact value of each element by supplying a Python list (or nested list) of numbers:

torch.tensor([[2, 1, 4, 3], [1, 2, 3, 4], [4, 3, 2, 1]])

tensor([[2, 1, 4, 3],

[1, 2, 3, 4],

[4, 3, 2, 1]])

torch.tensor([[[2, 1, 4, 3], [1, 2, 3, 4], [4, 3, 2, 1]]]).shape

torch.Size([1, 3, 4])

The common standard arithmetic operators (+, -, *, / and **) are all lifted to elementwise operations on tensors of the same shape:

x = torch.tensor([1.0, 2, 4, 8])

y = torch.tensor([2, 2, 2, 2])

x + y, x - y, x * y, x / y, x ** y  # ** is the exponentiation operator

(tensor([ 3., 4., 6., 10.]),

tensor([-1., 0., 2., 6.]),

tensor([ 2., 4., 8., 16.]),

tensor([0.5000, 1.0000, 2.0000, 4.0000]),

tensor([ 1., 4., 16., 64.]))

Many more operations can be applied elementwise, such as the exponential function:

torch.exp(x)

tensor([2.7183e+00, 7.3891e+00, 5.4598e+01, 2.9810e+03])

We can also concatenate multiple tensors along a chosen dimension:

X = torch.arange(12, dtype=torch.float32).reshape(3, 4)

Y = torch.tensor([[2.0, 1, 4, 3], [1, 2, 3, 4], [4, 3, 2, 1]])

torch.cat((X, Y), dim=0), torch.cat((X, Y), dim=1)

(tensor([[ 0., 1., 2., 3.],

[ 4., 5., 6., 7.],

[ 8., 9., 10., 11.],

[ 2., 1., 4., 3.],

[ 1., 2., 3., 4.],

[ 4., 3., 2., 1.]]),

tensor([[ 0., 1., 2., 3., 2., 1., 4., 3.],

[ 4., 5., 6., 7., 1., 2., 3., 4.],

[ 8., 9., 10., 11., 4., 3., 2., 1.]]))

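dim is the dimension along which the tensors are concatenated:

dim = 0 concatenates along rows (the result has more rows)

dim = 1 concatenates along columns (the result has more columns)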
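A quick way to check which dimension grows is to compare shapes. In this sketch Y is replaced by a tensor of ones (an assumption; only its 3×4 shape matters):

```python
import torch

X = torch.arange(12, dtype=torch.float32).reshape(3, 4)
Y = torch.ones(3, 4)

rows = torch.cat((X, Y), dim=0)  # along dim 0: 3 + 3 = 6 rows, still 4 columns
cols = torch.cat((X, Y), dim=1)  # along dim 1: still 3 rows, 4 + 4 = 8 columns

print(rows.shape, cols.shape)
```

All other dimensions must match; only the concatenation dimension may differ between the inputs.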

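We can construct a binary (Boolean) tensor with logical operators. X == Y is True at each position where the two elements are equal and False otherwise: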

X == Y

tensor([[False, True, False, True],

[False, False, False, False],

[False, False, False, False]])

Summing all the elements in a tensor produces a tensor with only one element.

X.sum()

tensor(66.)

Even when the shapes differ, we can still perform elementwise operations by invoking the broadcasting mechanism, which expands tensors along their size-1 dimensions so that both operands end up with the same shape:

a = torch.arange(3).reshape((3, 1))

b = torch.arange(2).reshape((1, 2))

a, b

(tensor([[0],

[1],

[2]]),

tensor([[0, 1]]))

a + b

tensor([[0, 1],

[1, 2],

[2, 3]])
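Conceptually, broadcasting copies a along its columns and b along its rows until both are 3×2, then adds elementwise. The sketch below reproduces that by hand with expand (which creates views rather than real copies) and confirms the result matches a + b:

```python
import torch

a = torch.arange(3).reshape((3, 1))
b = torch.arange(2).reshape((1, 2))

a_big = a.expand(3, 2)  # column repeated: [[0, 0], [1, 1], [2, 2]]
b_big = b.expand(3, 2)  # row repeated:    [[0, 1], [0, 1], [0, 1]]

print(torch.equal(a + b, a_big + b_big))
```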

For a matrix, [-1] selects the last row and [1:3] selects the second and third rows:

X[-1], X[1:3]

(tensor([ 8., 9., 10., 11.]),

tensor([[ 4., 5., 6., 7.],

[ 8., 9., 10., 11.]]))

Besides reading, we can also write elements of a matrix by specifying indices.

X[1, 2] = 9

X

tensor([[ 0., 1., 2., 3.],

[ 4., 5., 9., 7.],

[ 8., 9., 10., 11.]])

To assign the same value to multiple elements, we simply index all of them and then assign the value.

X[0:2, :] = 12

X

tensor([[12., 12., 12., 12.],

[12., 12., 12., 12.],

[ 8., 9., 10., 11.]])

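Running some operations may cause new memory to be allocated for the result. Python's id() function returns an object's identity (in CPython, its memory address), so we can check whether Y still refers to the same object after Y = Y + X: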

before = id(Y)

Y = Y + X

id(Y) == before

False

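Performing in-place operations: we can assign the result of an operation to a previously allocated tensor with slice notation, such as Z[:] = <expression>.

# Z has the same shape and dtype as Y, but all of its elements are 0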

Z = torch.zeros_like(Y)

print('id(Z):', id(Z))

# Assign X + Y to all elements of Z; Z keeps its existing memory

Z[:] = X + Y

print('id(Z):', id(Z))

id(Z): 2274712529312

id(Z): 2274712529312
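Z[:] = X + Y keeps Z's memory, but X + Y is still computed into a temporary tensor before being copied into Z. A minimal sketch of an alternative, the out= argument of torch.add, which writes the sum directly into an existing tensor; the small X and Y here are stand-ins chosen for illustration.

```python
import torch

X = torch.arange(12, dtype=torch.float32).reshape(3, 4)
Y = torch.ones(3, 4)
Z = torch.zeros_like(Y)

before = id(Z)
ptr = Z.data_ptr()      # address of Z's underlying storage
torch.add(X, Y, out=Z)  # write X + Y straight into Z

print(id(Z) == before, Z.data_ptr() == ptr)
```

Both checks print True: the Python object and its storage are unchanged.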

If X is not reused in subsequent computations, we can also use X[:] = X + Y or X += Y to reduce the memory overhead of the operation.

before = id(X)

X += Y

id(X) == before

True

Converting to and from NumPy arrays

A = X.numpy()

B = torch.tensor(A)

type(A), type(B)

(numpy.ndarray, torch.Tensor)
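Whether the result shares memory depends on the function used: for a CPU tensor, numpy() returns an array that shares memory with the tensor, torch.tensor always copies its input, and torch.from_numpy shares memory with the array. A minimal sketch that makes the difference visible:

```python
import torch

X = torch.zeros(2, 3)
A = X.numpy()            # shares memory with X (CPU tensors only)
B = torch.tensor(A)      # copies the data at this point
C = torch.from_numpy(A)  # shares memory with A, and therefore with X

X += 1                   # in-place update of X

print(A[0, 0], B[0, 0].item(), C[0, 0].item())
```

A and C see the update to X, while B keeps the zeros it copied.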

To convert a size-1 tensor into a Python scalar, we can call item() or use Python's built-in functions:

a = torch.tensor([3.5])

a, a.item(), float(a), int(a)

(tensor([3.5000]), 3.5, 3.5, 3)

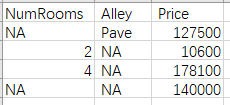

Data preprocessing

Create an artificial dataset and store it in a CSV (comma-separated values) file.

import os

os.makedirs(os.path.join('..', 'data'), exist_ok=True)

data_file = os.path.join('..', 'data', 'house_tiny.csv')

with open(data_file, 'w') as f:
    f.write('NumRooms, Alley, Price\n')  # column names
    f.write('NA, Pave, 127500\n')  # each row represents a data sample
    f.write('2, NA, 10600\n')
    f.write('4, NA, 178100\n')
    f.write('NA, NA, 140000\n')

Load the raw dataset from the CSV file we just created.

# If pandas is not installed, uncomment the following line

# !pip install pandas

import pandas as pd

data = pd.read_csv(data_file)

print(data)

NumRooms Alley Price

0 NaN Pave 127500

1 2.0 NA 10600

2 4.0 NA 178100

3 NaN NA 140000

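To handle missing data, typical methods include imputation and deletion: imputation replaces missing values with substituted ones, while deletion ignores them. Here we consider imputation, filling each missing numeric value with the mean of its column.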

inputs, outputs = data.iloc[:, 0:2], data.iloc[:, 2]

inputs = inputs.fillna(inputs.mean(numeric_only=True))  # newer pandas raises on non-numeric columns without numeric_only=True

print(inputs)

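data is a 4×3 table.

iloc (index location) selects rows and columns by integer position: inputs takes the first two columns and outputs takes the last one.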

NumRooms Alley

0 3.0 Pave

1 2.0 NA

2 4.0 NA

3 3.0 NA

For categorical or discrete values in inputs, we treat "NaN" as a category.

inputs = pd.get_dummies(inputs, dummy_na=True)

print(inputs)

NumRooms Alley_ NA Alley_ Pave Alley_nan

0 3.0 0 1 0

1 2.0 1 0 0

2 4.0 1 0 0

3 3.0 1 0 0
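Note that Alley_nan is all zeros above. Because each comma in house_tiny.csv is followed by a space, the Alley field holds the string " NA", which pandas does not treat as missing (its default markers include "NA" but not " NA"), so " NA" becomes an ordinary category. A minimal sketch of one fix, assuming the file itself stays unchanged: pass skipinitialspace=True to read_csv. io.StringIO stands in for the file so the example runs on its own.

```python
import io

import pandas as pd

# Same rows as house_tiny.csv, including the space after each comma
csv_text = (
    "NumRooms, Alley, Price\n"
    "NA, Pave, 127500\n"
    "2, NA, 10600\n"
    "4, NA, 178100\n"
    "NA, NA, 140000\n"
)

# skipinitialspace=True drops the space after each delimiter,
# so " NA" is read as "NA" and recognized as a missing value
data = pd.read_csv(io.StringIO(csv_text), skipinitialspace=True)

inputs = data.iloc[:, 0:2]
inputs = inputs.fillna(inputs.mean(numeric_only=True))
inputs = pd.get_dummies(inputs, dummy_na=True)
print(list(inputs.columns))
```

With this change the missing Alley values land in Alley_nan, and the column names no longer carry a leading space.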

Now that all entries in inputs and outputs are numeric, they can be converted to tensor format.

import torch

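# to_numpy(dtype=float) also handles the bool columns that newer pandas versions return from get_dummies
X, y = torch.tensor(inputs.to_numpy(dtype=float)), torch.tensor(outputs.to_numpy())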

X, y

(tensor([[3., 0., 1., 0.],

[2., 1., 0., 0.],

[4., 1., 0., 0.],

[3., 1., 0., 0.]], dtype=torch.float64),

tensor([127500, 10600, 178100, 140000]))

We have now converted the CSV file into pure tensors.

NumPy and pandas, like Python's built-in float, use 64-bit floating point (float64) by default, which is why X above has dtype torch.float64.

However, 64-bit floating-point arithmetic is relatively slow for deep learning, so we usually convert to 32-bit floats.
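The conversion itself is a single call. In this sketch a small float64 tensor stands in for X:

```python
import torch

X = torch.tensor([[3.0, 0.0, 1.0], [2.0, 1.0, 0.0]], dtype=torch.float64)
X32 = X.to(torch.float32)  # equivalently: X.float()

print(X.dtype, X32.dtype)
```

to() returns a new tensor with the requested dtype; the original X is left as float64.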

Data manipulation Q&A

a = torch.arange(12)

b = a.reshape((3, 4))

b[:] = 2

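a

tensor([2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2])

b is not a copy of a: because a is contiguous, reshape returns a view that shares a's memory, so writing into b also changes a.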
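If an independent copy is needed, clone the tensor before reshaping. A minimal sketch contrasting the view with a clone; data_ptr() returns the address of the first element, so equal values indicate shared storage here.

```python
import torch

a = torch.arange(12)
b = a.reshape((3, 4))          # view: shares a's storage
c = a.clone().reshape((3, 4))  # clone() copies the data first

print(b.data_ptr() == a.data_ptr())  # b starts at the same address as a

c[:] = 2                       # only the copy is modified
print(a[:3])                   # a still starts with 0, 1, 2
```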

"Tensor" is a mathematical concept.

"Array" is a concept from computer programming.

In practice, there is no essential difference between a tensor (as used here) and an array, so you don't need to worry about the mathematical definition.

3. There is no essential difference between reshape and reval.

边栏推荐

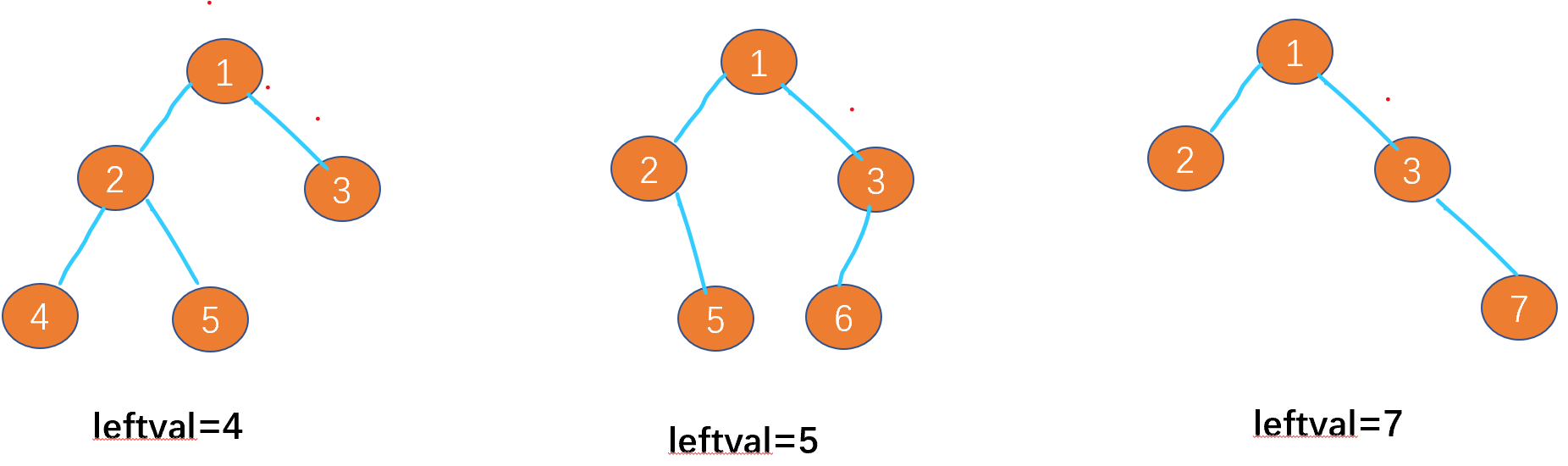

- Leetcode-513:找树的左下角值

- Deep Reinforcement learning with PyTorch

- Handwritten digit recognition: CNN alexnet

- I really want to be a girl. The first step of programming is to wear women's clothes

- [LZY learning notes -dive into deep learning] math preparation 2.1-2.4

- 一步教你溯源【钓鱼邮件】的IP地址

- Leetcode - 1172 plate stack (Design - list + small top pile + stack))

- LeetCode - 715. Range module (TreeSet)*****

- Leetcode - 5 longest palindrome substring

- Anaconda installation package reported an error packagesnotfounderror: the following packages are not available from current channels:

猜你喜欢

随机推荐

Leetcode-100:相同的树

Ut2012 learning notes

A super cool background permission management system

Hands on deep learning pytorch version exercise solution - 2.4 calculus

R language classification

GAOFAN Weibo app

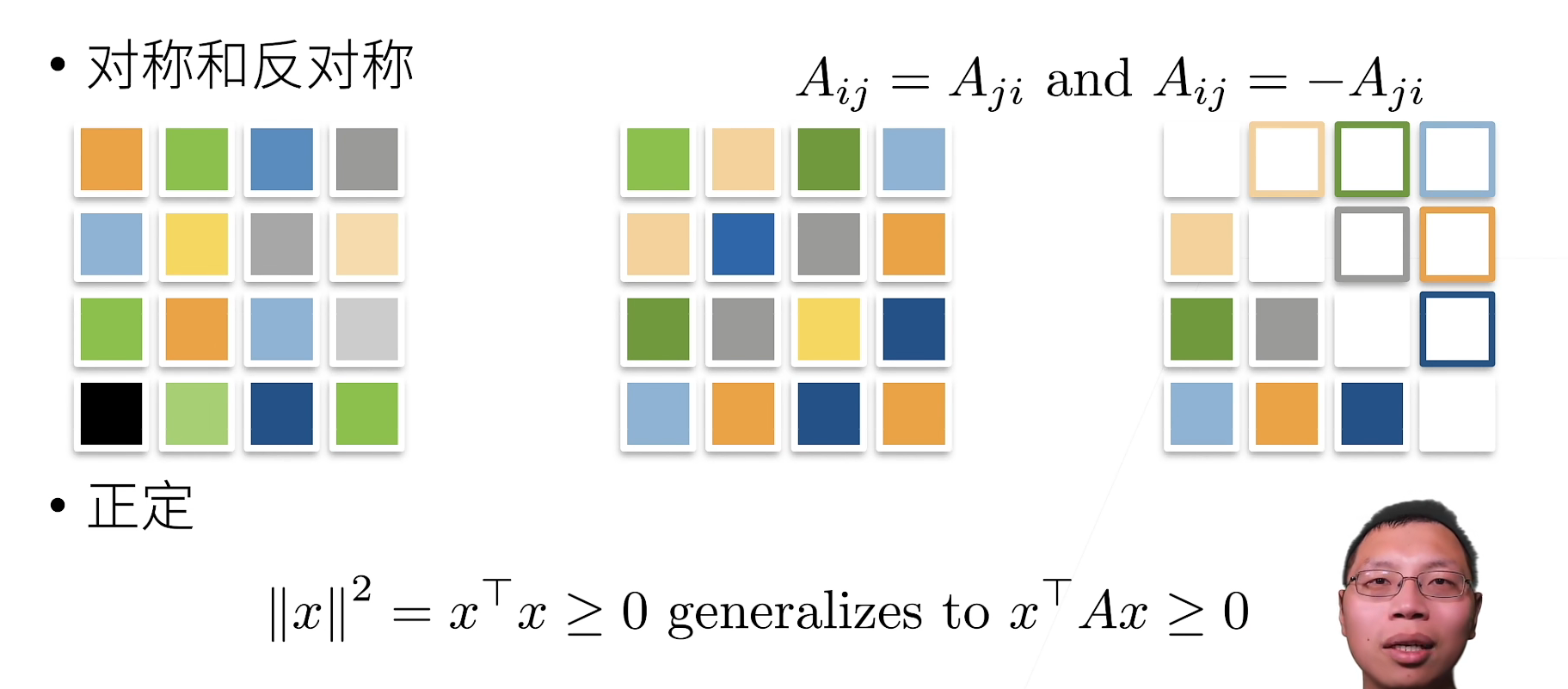

深度学习入门之线性代数(PyTorch)

Leetcode - 705 design hash set (Design)

2018 Lenovo y7000 black apple external display scheme

Tensorflow - tensorflow Foundation

Content type ‘application/x-www-form-urlencoded;charset=UTF-8‘ not supported

Julia1.0

Tensorflow—Neural Style Transfer

六、MySQL之数据定义语言(一)

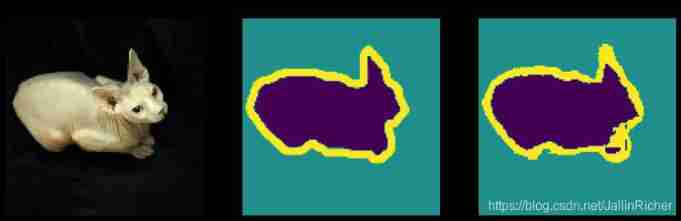

Convolutional neural network (CNN) learning notes (own understanding + own code) - deep learning

Step 1: teach you to trace the IP address of [phishing email]

多层感知机(PyTorch)

High imitation wechat

20220608其他:逆波兰表达式求值

Leetcode-513: find the lower left corner value of the tree