League of Legends | Honor of Kings | CrossFire: BGM AI soundtrack competition write-up

2022-07-07 00:33:00 【weixin_42001089】

Preface

Recently my colleagues and I entered a multimodal competition and ended up taking first place, so here is a write-up. One side story: we led the leaderboard throughout the preliminary round, were suddenly overtaken on the last day, and then turned it around in the defense round. The final score is computed from four dimensions: technical approach (30%), theoretical depth (30%), live presentation (10%), and accuracy (30%, converted from the preliminary-round score).

Enough preamble, on to the main topic.

The task

Simply put, the task is to automatically match BGM to videos. Three games are involved: Honor of Kings (HoK), League of Legends (LOL), and CrossFire (CF). Given a game video, the goal is to output its embedding together with the embeddings of every track in the candidate BGM library.
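To make the embedding-based matching concrete, here is a minimal sketch of how a video embedding could be compared against a BGM library. It assumes ranking by Euclidean distance, which is only the author's guess about the evaluation later in this post; the function names, ids, and toy vectors are all hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_bgm(video_emb, bgm_library):
    """Return BGM ids sorted from closest to farthest from the video embedding.

    bgm_library maps a (hypothetical) BGM id to its embedding.
    """
    return sorted(
        bgm_library,
        key=lambda bgm_id: euclidean(video_emb, bgm_library[bgm_id]),
    )


# Toy 3-dimensional embeddings, for illustration only.
video_emb = [0.9, 0.1, 0.0]
bgm_library = {
    "bgm_a": [1.0, 0.0, 0.0],
    "bgm_b": [0.0, 1.0, 0.0],
    "bgm_c": [0.5, 0.5, 0.0],
}

ranking = rank_bgm(video_emb, bgm_library)
print(ranking)
```

In a real system both towers would produce much higher-dimensional vectors, and the library embeddings would be computed once and reused for every query video.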

The evaluation metric is as follows:

Due to licensing restrictions, the dataset is not provided here, but I found a similar sample online (contact the author for removal in case of infringement) so you can get a feel for it. CSDN does not allow uploading it, so if you want to see it, it is on the author's Zhihu :)

EDA

We first took a thorough look at the data.

(1) Example video frames:

Frames from the same game are quite similar to each other.

(2) Duration

Training set: long-tailed distribution, median 67.6 s, mean 94.6 s. Test set: the duration is 32 s.

(3) Separated streamer-commentary audio is provided, but the separated BGM is too noisy.

(4) There is no text data (titles, ASR transcripts, etc.).

Method

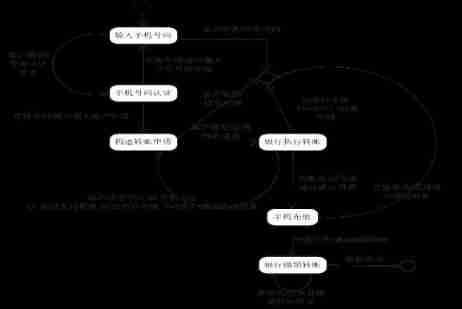

The overall framework

Overall, it is a simple two-tower model whose input side takes three modalities: text, audio, and video.

Based on our preliminary investigation, the video frames are all very similar: unless representative frames such as scene transitions can be extracted accurately, randomly sampled frames do not help much. The streamer's commentary, on the other hand, is critical. Its tone (audio) directly reflects moods such as excitement or humor, and the content of the commentary (text) matters as well.

Note that this does not mean video is unimportant. The point is cost-effectiveness: within a limited time, focusing on the latter two modalities yields larger gains more easily. For a deeper technical treatment, the video modality would certainly deserve proper exploration.

To keep things focused, only the strategies that actually helped are listed here.

(1) Audio

First, audio feature extraction. VGGish is the common choice, and as far as I could tell most participants used it too, but we adopted HuBERT, an audio pre-training model trained in the spirit of BERT. Its framework is as follows:

For more details, see: Light Sea: HuBERT: BERT-based self-supervised speech representation learning

(2) Data augmentation

The official dataset is very small, so making full use of it is a consideration in itself. We segment the videos: each video is cut at 20 s intervals, with the corresponding BGM cut in sync, as data augmentation. We also experimented with segment lengths of 5 s, 20 s, and 32 s; 20 s worked best.

Key point: use the same segment length for training and prediction so the strategy stays consistent; otherwise results are very poor.
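The synchronized cutting described above can be sketched roughly as follows. This is only an illustration: the helper names are hypothetical, and dropping the trailing remainder shorter than one segment is an assumption, since the post does not say how leftovers are handled.

```python
def segment_bounds(duration_s, segment_s=20.0, keep_remainder=False):
    """Return (start, end) windows in seconds covering a clip of duration_s."""
    bounds = []
    start = 0.0
    while start + segment_s <= duration_s:
        bounds.append((start, start + segment_s))
        start += segment_s
    # Assumption: the leftover tail is dropped unless explicitly requested.
    if keep_remainder and start < duration_s:
        bounds.append((start, duration_s))
    return bounds


def paired_segments(video_duration_s, bgm_duration_s, segment_s=20.0):
    """Cut video and BGM with identical windows so each pair stays aligned."""
    usable = min(video_duration_s, bgm_duration_s)
    windows = segment_bounds(usable, segment_s)
    return [{"video": w, "bgm": w} for w in windows]


# A clip of median training length (67.6 s) yields three aligned 20 s pairs.
pairs = paired_segments(67.6, 67.6)
for pair in pairs:
    print(pair)
```

Passing the same `segment_s` at training and prediction time is what keeps the two stages consistent, which is the key point made above.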

(3)loss

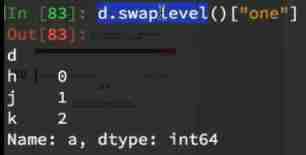

The final request is embedding, It can be guessed that the probability is based on the European similarity to judge the result , So we use the method based on comparative learning Triplet Loss
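For readers unfamiliar with it, the standard triplet loss over Euclidean distance can be sketched as below. This is the textbook formulation, not the team's code; the margin value and the toy vectors are assumptions chosen for illustration.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss: max(d(a, p) - d(a, n) + margin, 0).

    anchor   : video embedding
    positive : embedding of the BGM that belongs to the video
    negative : embedding of some other BGM
    margin   : assumed value, normally tuned as a hyperparameter
    """
    gap = euclidean(anchor, positive) - euclidean(anchor, negative)
    return max(gap + margin, 0.0)


video = [1.0, 0.0]
matched_bgm = [1.0, 0.0]

# A far-away negative already satisfies the margin, so the loss is zero.
easy = triplet_loss(video, matched_bgm, [4.0, 4.0])

# A negative closer than the margin still produces a positive loss.
hard = triplet_loss(video, matched_bgm, [1.0, 0.5])

print(easy, hard)
```

Minimizing this loss pulls a video towards its own BGM and pushes it away from other tracks, which matches a distance-based evaluation of the embeddings.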

Core code:

Final:

Takeaways

By this point the tricks are all covered. Feels simple, doesn't it? However! In the author's view, the above is not the most important part. What matters most comes next: the thinking and experimental details throughout the process!

About the video modality: we expected from the start that it would not bring much gain, and the experiment confirmed it. Extracting 10 frames from each video segment and fusing them with the commentary audio modality indeed gave no improvement.
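As a rough picture of what such an experiment involves, the sketch below samples 10 evenly spaced frames from a segment and fuses pooled frame features with an audio embedding by concatenation. Everything here is an assumption made for illustration: the post does not state how frames were chosen or how the modalities were fused, and the 25 fps figure is hypothetical.

```python
def uniform_frame_indices(num_frames, num_samples=10):
    """Pick indices spread evenly over a segment (centre of each equal bin)."""
    if num_frames <= 0:
        return []
    num_samples = min(num_samples, num_frames)
    step = num_frames / num_samples
    return [int(step * i + step / 2) for i in range(num_samples)]


def mean_pool(vectors):
    """Average a list of equal-length feature vectors into one vector."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def late_fusion(frame_features, audio_embedding):
    """Concatenate pooled frame features with the audio embedding."""
    return mean_pool(frame_features) + list(audio_embedding)


# A 20 s segment at an assumed 25 fps has 500 frames.
indices = uniform_frame_indices(500, 10)
print(indices)

# Toy features: two 2-dimensional frame vectors and a 1-dimensional audio vector.
fused = late_fusion([[1.0, 3.0], [3.0, 5.0]], [0.5])
print(fused)
```

Uniform sampling like this is exactly the kind of frame selection that is unlikely to capture transitions or special effects, which is consistent with the lack of gain reported above.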

In addition, running ASR on the commentary and extracting features with BERT did not improve results either. This was quite unexpected. The likely cause is poor ASR quality: we used an open-source model directly for recognition, and the resulting text has no punctuation. For lack of time we did not try further.

Adding game-category prediction as an auxiliary task did not improve results. One possible explanation is that BGM is universal across games.

Another side story: we were far ahead on the leaderboard early on, but on the last night one participant suddenly jumped 14 places to second, and then reached first in the final hour. That made us very curious about what the trick was, haha. We eventually learned that the key was using two strong audio backbones. Many participants designed small tricks, but these were eclipsed by outright stronger models. This shows both how important the commentary is for this task and how important the choice of baseline model is.

There are also a few smaller scattered thoughts that I won't go into; contact the author if you are interested.

Future directions

Here are some possible optimization points for the future.

(1) Text modality: if text such as titles and tags were provided, along with good ASR transcripts of the commentary, the ceiling here would still be very high.

(2) How to better capture visual features? For example, key frames at scene switches, game moves, special effects, and so on.

(3) Use separate backbones for the commentary and BGM audio. Our framework currently shares one, but in theory the distributions of commentary and BGM are different.

(4) How to make features from different modalities interact better?

If you are interested, feel free to get in touch ~

Follow

Thanks for reading, see you next time ~

GitHub: https://github.com/Mryangkaitong

边栏推荐

- Interesting wine culture

- 【向量检索研究系列】产品介绍

- Huawei mate8 battery price_ Huawei mate8 charges very slowly after replacing the battery

- What can the interactive slide screen demonstration bring to the enterprise exhibition hall

- 【CVPR 2022】半监督目标检测:Dense Learning based Semi-Supervised Object Detection

- 陀螺仪的工作原理

- 基于GO语言实现的X.509证书

- 沉浸式投影在线下展示中的三大应用特点

- The way of intelligent operation and maintenance application, bid farewell to the crisis of enterprise digital transformation

- Pdf document signature Guide

猜你喜欢

MySQL learning notes (mind map)

How engineers treat open source -- the heartfelt words of an old engineer

2021 SASE integration strategic roadmap (I)

准备好在CI/CD中自动化持续部署了吗?

Business process testing based on functional testing

Data analysis course notes (V) common statistical methods, data and spelling, index and composite index

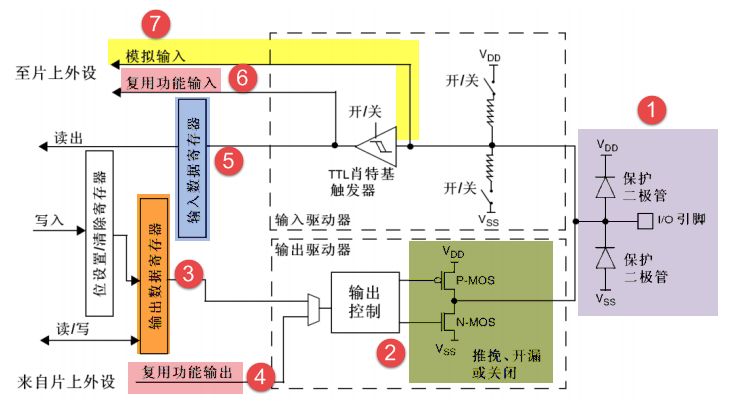

Introduction au GPIO

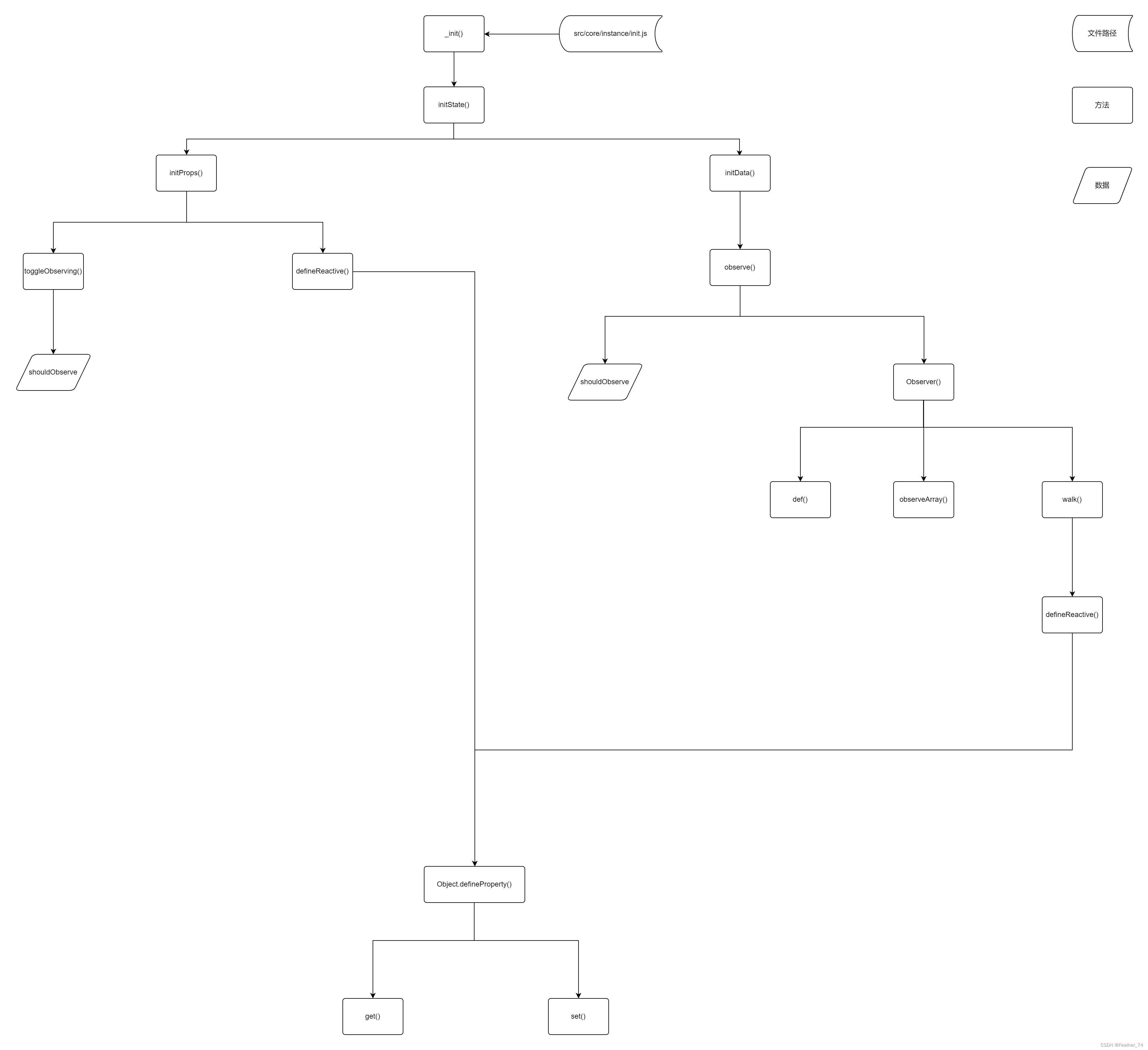

什么是响应式对象?响应式对象的创建过程?

37页数字乡村振兴智慧农业整体规划建设方案

After leaving a foreign company, I know what respect and compliance are

随机推荐

Oracle EMCC 13.5 environment in docker every minute

[CVPR 2022] semi supervised object detection: dense learning based semi supervised object detection

Compilation of kickstart file

Typescript incremental compilation

How can computers ensure data security in the quantum era? The United States announced four alternative encryption algorithms

《LaTex》LaTex数学公式简介「建议收藏」

MySQL master-slave multi-source replication (3 master and 1 slave) setup and synchronization test

Jenkins' user credentials plug-in installation

Cross-entrpy Method

How about the order management of okcc call center

智能运维应用之道,告别企业数字化转型危机

2022/2/11 summary

What is a responsive object? How to create a responsive object?

The way of intelligent operation and maintenance application, bid farewell to the crisis of enterprise digital transformation

华为mate8电池价格_华为mate8换电池后充电巨慢

How to use vector_ How to use vector pointer

Uniapp uploads and displays avatars locally, and converts avatars into Base64 format and stores them in MySQL database

Why should a complete knapsack be traversed in sequence? Briefly explain

JWT signature does not match locally computed signature. JWT validity cannot be asserted and should

Huawei mate8 battery price_ Huawei mate8 charges very slowly after replacing the battery