
6. Dropout application

2022-07-08 01:01:00 【booze-J】


I. Without Dropout

Starting from the network model built in the code of "4. Cross entropy", we add some hidden layers and also print the loss and accuracy on the training set.

In "4. Cross entropy", the model was defined as:

```python
# Create the model: 784 inputs, 10 outputs
model = Sequential([
    # Output layer: 10 units, 784 inputs, biases initialized to 1, softmax activation
    Dense(units=10, input_dim=784, bias_initializer='ones', activation="softmax"),
])
```

After adding hidden layers, it becomes:

```python
# Create the model: 784 inputs, 10 outputs
model = Sequential([
    # First hidden layer: 200 units, 784 inputs, biases initialized to 1, tanh activation
    Dense(units=200, input_dim=784, bias_initializer='ones', activation="tanh"),
    # Second hidden layer: 100 units
    Dense(units=100, bias_initializer='ones', activation="tanh"),
    # Output layer: 10 units with softmax
    Dense(units=10, bias_initializer='ones', activation="softmax")
])
```
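A quick way to see how much capacity the two hidden layers add is to count parameters. The sketch below assumes the usual Dense-layer formula (inputs × units weights plus one bias per unit), which is what Keras's `model.summary()` reports for such layers; the helper names `dense_params` and `count_params` are hypothetical.

```python
from typing import Sequence


def dense_params(in_units: int, out_units: int) -> int:
    """Weights (in_units * out_units) plus one bias per output unit."""
    return in_units * out_units + out_units


def count_params(layer_sizes: Sequence[int]) -> int:
    """layer_sizes = [input, hidden..., output] for a stack of Dense layers."""
    return sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


# Original model from "4. Cross entropy": 784 -> 10
print(count_params([784, 10]))            # 7850
# Model with two hidden layers: 784 -> 200 -> 100 -> 10
print(count_params([784, 200, 100, 10]))  # 178110
```

The deeper model has over 20 times as many parameters, which helps explain both the higher accuracy and the greater tendency to overfit.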

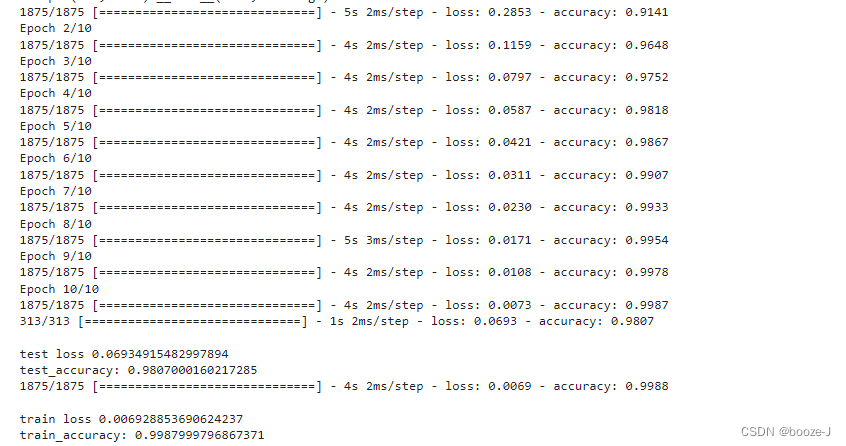

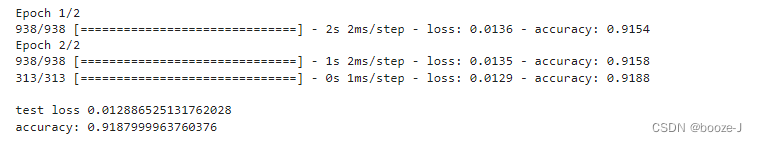

Output:

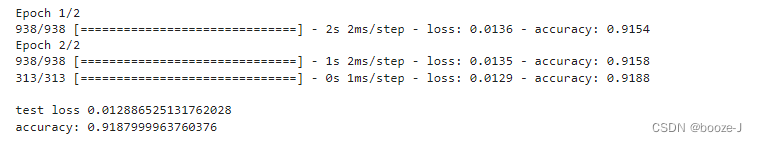

Compared with the results of "4. Cross entropy", adding more hidden layers greatly improves the test accuracy, but there is slight overfitting: the model does somewhat better on the training set than on the test set.

II. With Dropout

Add a Dropout layer after each hidden layer:

```python
# Create the model: 784 inputs, 10 outputs
model = Sequential([
    # First hidden layer: 200 units, 784 inputs, biases initialized to 1, tanh activation
    Dense(units=200, input_dim=784, bias_initializer='ones', activation="tanh"),
    # Randomly drop 40% of this layer's outputs during training
    Dropout(0.4),
    # Second hidden layer: 100 units
    Dense(units=100, bias_initializer='ones', activation="tanh"),
    # Randomly drop 40% of this layer's outputs during training
    Dropout(0.4),
    # Output layer: 10 units with softmax
    Dense(units=10, bias_initializer='ones', activation="softmax")
])
```

To use Dropout, import it with `from keras.layers import Dropout`.
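As a rough illustration of what `Dropout(0.4)` does inside the network: the argument is the drop rate, so during training each output of the preceding layer is set to 0 with probability 0.4, and Keras scales the surviving values by 1 / (1 - 0.4) so that their expected sum is unchanged. The NumPy sketch below reproduces that behavior outside Keras; `dropout_forward` is a hypothetical helper, not a Keras function.

```python
import numpy as np


def dropout_forward(x: np.ndarray, rate: float, training: bool,
                    rng: np.random.Generator) -> np.ndarray:
    """Inverted dropout (the scaling scheme Keras' Dropout layer uses).

    Training: zero each element with probability `rate`, scale survivors
    by 1 / (1 - rate). Inference: return the input unchanged.
    """
    if not training:
        return x
    keep_mask = rng.random(x.shape) >= rate   # True with probability 1 - rate
    return np.where(keep_mask, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(0)
activations = np.ones((1, 10))               # stand-in for one hidden layer's outputs
print(dropout_forward(activations, 0.4, training=True, rng=rng))   # mix of 0 and ~1.667
print(dropout_forward(activations, 0.4, training=False, rng=rng))  # unchanged ones
```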

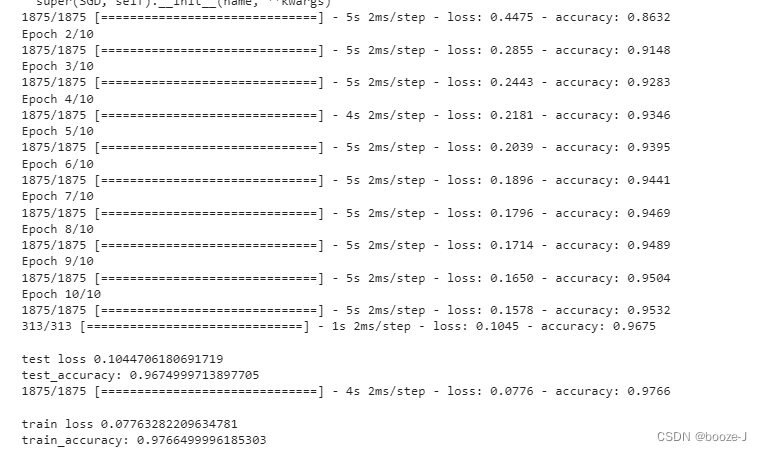

Results:

In this example, dropout does not necessarily give better results, but in some cases it can.

However, with dropout the test accuracy and the training accuracy are relatively close, so overfitting is much less noticeable. The training log also shows that the accuracy reported during training is lower than the accuracy the final model achieves when evaluated on the training set. This is because, with dropout, each training step uses only a random subset of the neurons, whereas at evaluation time dropout is switched off and all neurons are used, so the model performs better. (The per-epoch accuracy printed by `fit()` is also an average over all batches of that epoch, computed while the weights are still being updated.)
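The point that the full network can be used at test time without changes can be checked numerically. Because of the 1 / (1 - rate) scaling, averaging many random training-time passes gives back the deterministic inference-time output. The activations below are random stand-ins, not values from the MNIST model.

```python
import numpy as np

rate = 0.4
rng = np.random.default_rng(42)
hidden = rng.random(100)                      # hypothetical hidden-layer activations

n_trials = 20_000
masks = rng.random((n_trials, hidden.size)) >= rate        # keep each unit with prob 0.6
train_outputs = np.where(masks, hidden / (1.0 - rate), 0.0)

# Fraction of units active per training pass (close to 0.6).
print(masks.mean())
# Largest gap between the averaged training output and the inference output (small).
print(np.abs(train_outputs.mean(axis=0) - hidden).max())
```

Each individual training pass is noisy and uses only about 60% of the units, but on average it matches what the complete network computes at evaluation time.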

Complete code

1. Complete code without Dropout

The code was run in Jupyter Notebook. The code blocks below follow the order of the notebook cells; to run them, paste each block into Jupyter Notebook.

1. Import third-party libraries

```python
import numpy as np
from keras.datasets import mnist
from keras.utils import to_categorical   # np_utils was removed in newer Keras versions
from keras.models import Sequential
from keras.layers import Dense, Dropout
from keras.optimizers import SGD
```

2. Load and preprocess the data

```python
# Load data
(x_train, y_train), (x_test, y_test) = mnist.load_data()
# (60000, 28, 28)
print("x_shape:\n", x_train.shape)
# (60000,): labels are not one-hot encoded yet; they are converted below
print("y_shape:\n", y_train.shape)
# (60000, 28, 28) -> (60000, 784); passing -1 to reshape() lets NumPy infer that dimension.
# Dividing by 255.0 normalizes pixel values to [0, 1]
x_train = x_train.reshape(x_train.shape[0], -1) / 255.0
x_test = x_test.reshape(x_test.shape[0], -1) / 255.0
# Convert labels to one-hot format
y_train = to_categorical(y_train, num_classes=10)
y_test = to_categorical(y_test, num_classes=10)
```
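The preprocessing steps can be tried without downloading MNIST. This sketch substitutes random `uint8` arrays with MNIST's image shape for the real data and uses `np.eye(10)[labels]` as a NumPy equivalent of `to_categorical` (which returns float32 rather than float64, but the same 0/1 values).

```python
import numpy as np

# Hypothetical stand-in for mnist.load_data(): 5 random 28x28 uint8 "images"
rng = np.random.default_rng(0)
x_fake = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)
y_fake = np.array([3, 0, 9, 3, 7])

# Flatten each 28x28 image into a 784-vector (-1 lets NumPy infer 784), scale to [0, 1]
x_flat = x_fake.reshape(x_fake.shape[0], -1) / 255.0
# One-hot encode: row i of the 10x10 identity matrix represents class i
y_onehot = np.eye(10)[y_fake]

print(x_flat.shape, x_flat.min() >= 0.0, x_flat.max() <= 1.0)
print(y_onehot[0])
```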

3. Train the model

```python
# Create the model: 784 inputs, 10 outputs
model = Sequential([
    # First hidden layer: 200 units, 784 inputs, biases initialized to 1, tanh activation
    Dense(units=200, input_dim=784, bias_initializer='ones', activation="tanh"),
    # Second hidden layer: 100 units
    Dense(units=100, bias_initializer='ones', activation="tanh"),
    # Output layer: 10 units with softmax
    Dense(units=10, bias_initializer='ones', activation="softmax")
])

# Define the optimizer (older versions accepted `lr`; current Keras expects `learning_rate`)
sgd = SGD(learning_rate=0.2)

# Set the optimizer and loss function, and track accuracy during training
model.compile(
    optimizer=sgd,
    loss="categorical_crossentropy",
    metrics=['accuracy']
)

# Train the model
model.fit(x_train, y_train, batch_size=32, epochs=10)

# Evaluate the model
# Loss and accuracy on the test set
loss, accuracy = model.evaluate(x_test, y_test)
print("\ntest loss", loss)
print("test_accuracy:", accuracy)

# Loss and accuracy on the training set
loss, accuracy = model.evaluate(x_train, y_train)
print("\ntrain loss", loss)
print("train_accuracy:", accuracy)
```
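`model.evaluate` returns the loss plus the metrics passed to `compile`. As a sketch of what those two numbers mean for this model, the NumPy functions below compute categorical cross-entropy and argmax accuracy on a few hypothetical softmax outputs. Keras's own implementation differs in details (it renormalizes and clips predictions and works batch by batch), so treat this as an approximation.

```python
import numpy as np


def categorical_crossentropy(y_true: np.ndarray, y_pred: np.ndarray,
                             eps: float = 1e-7) -> float:
    """Mean over samples of -sum_k y_k * log(p_k), with p clipped away from 0 and 1."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))


def accuracy(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Fraction of samples whose highest-probability class is the true class."""
    return float(np.mean(np.argmax(y_true, axis=1) == np.argmax(y_pred, axis=1)))


# Hypothetical softmax outputs for 3 samples over 3 classes
y_true = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
y_pred = np.array([[0.7, 0.2, 0.1],
                   [0.1, 0.8, 0.1],
                   [0.5, 0.3, 0.2]])   # third sample is misclassified

print(categorical_crossentropy(y_true, y_pred))  # about 0.7298
print(accuracy(y_true, y_pred))                  # 2 of 3 correct
```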

2. Complete code with Dropout


1. Import third-party libraries

```python
import numpy as np
from keras.datasets import mnist
from keras.utils import to_categorical   # np_utils was removed in newer Keras versions
from keras.models import Sequential
from keras.layers import Dense, Dropout
from keras.optimizers import SGD
```

2. Load and preprocess the data

```python
# Load data
(x_train, y_train), (x_test, y_test) = mnist.load_data()
# (60000, 28, 28)
print("x_shape:\n", x_train.shape)
# (60000,): labels are not one-hot encoded yet; they are converted below
print("y_shape:\n", y_train.shape)
# (60000, 28, 28) -> (60000, 784); passing -1 to reshape() lets NumPy infer that dimension.
# Dividing by 255.0 normalizes pixel values to [0, 1]
x_train = x_train.reshape(x_train.shape[0], -1) / 255.0
x_test = x_test.reshape(x_test.shape[0], -1) / 255.0
# Convert labels to one-hot format
y_train = to_categorical(y_train, num_classes=10)
y_test = to_categorical(y_test, num_classes=10)
```

3. Train the model

```python
# Create the model: 784 inputs, 10 outputs
model = Sequential([
    # First hidden layer: 200 units, 784 inputs, biases initialized to 1, tanh activation
    Dense(units=200, input_dim=784, bias_initializer='ones', activation="tanh"),
    # Randomly drop 40% of this layer's outputs during training
    Dropout(0.4),
    # Second hidden layer: 100 units
    Dense(units=100, bias_initializer='ones', activation="tanh"),
    # Randomly drop 40% of this layer's outputs during training
    Dropout(0.4),
    # Output layer: 10 units with softmax
    Dense(units=10, bias_initializer='ones', activation="softmax")
])

# Define the optimizer (older versions accepted `lr`; current Keras expects `learning_rate`)
sgd = SGD(learning_rate=0.2)

# Set the optimizer and loss function, and track accuracy during training
model.compile(
    optimizer=sgd,
    loss="categorical_crossentropy",
    metrics=['accuracy']
)

# Train the model
model.fit(x_train, y_train, batch_size=32, epochs=10)

# Evaluate the model
# Loss and accuracy on the test set
loss, accuracy = model.evaluate(x_test, y_test)
print("\ntest loss", loss)
print("test_accuracy:", accuracy)

# Loss and accuracy on the training set
loss, accuracy = model.evaluate(x_train, y_train)
print("\ntrain loss", loss)
print("train_accuracy:", accuracy)
```
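Both listings train with `SGD` at a learning rate of 0.2 and `batch_size=32`. With Keras's default `momentum=0.0`, each step updates every parameter as w = w - learning_rate * gradient. The sketch below applies that rule to a single softmax layer with categorical cross-entropy on a hypothetical 32-sample batch with 4 features; it is a toy stand-in, not the MNIST network, and `loss_and_grads` is a hypothetical helper.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)          # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def loss_and_grads(W, b, x, y):
    """Categorical cross-entropy of a softmax layer and its gradients w.r.t. W and b."""
    p = softmax(x @ W + b)
    loss = -np.mean(np.sum(y * np.log(p + 1e-12), axis=1))
    d_logits = (p - y) / x.shape[0]               # dLoss/dlogits for softmax + cross-entropy
    return loss, x.T @ d_logits, d_logits.sum(axis=0)


rng = np.random.default_rng(0)
x = rng.random((32, 4))                           # one batch: 32 samples, 4 features
y = np.eye(3)[rng.integers(0, 3, size=32)]        # one-hot targets for 3 classes
W, b = np.zeros((4, 3)), np.ones(3)               # biases start at 1, like bias_initializer='ones'
learning_rate = 0.2

losses = []
for step in range(20):
    loss, dW, db = loss_and_grads(W, b, x, y)
    losses.append(loss)
    W -= learning_rate * dW                       # plain SGD: parameter -= lr * gradient
    b -= learning_rate * db

print(losses[0], losses[-1])                      # loss starts at ln(3) and decreases
```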

边栏推荐

- 串口接收一包数据

- They gathered at the 2022 ecug con just for "China's technological power"

- German prime minister says Ukraine will not receive "NATO style" security guarantee

- Introduction to paddle - using lenet to realize image classification method II in MNIST

- Implementation of adjacency table of SQLite database storage directory structure 2-construction of directory tree

- Summary of the third course of weidongshan

- FOFA-攻防挑战记录

- 《因果性Causality》教程,哥本哈根大学Jonas Peters讲授

- ABAP ALV LVC template

- Codeforces Round #804 (Div. 2)(A~D)

猜你喜欢

Codeforces Round #804 (Div. 2)(A~D)

13.模型的保存和载入

Image data preprocessing

第四期SFO销毁,Starfish OS如何对SFO价值赋能?

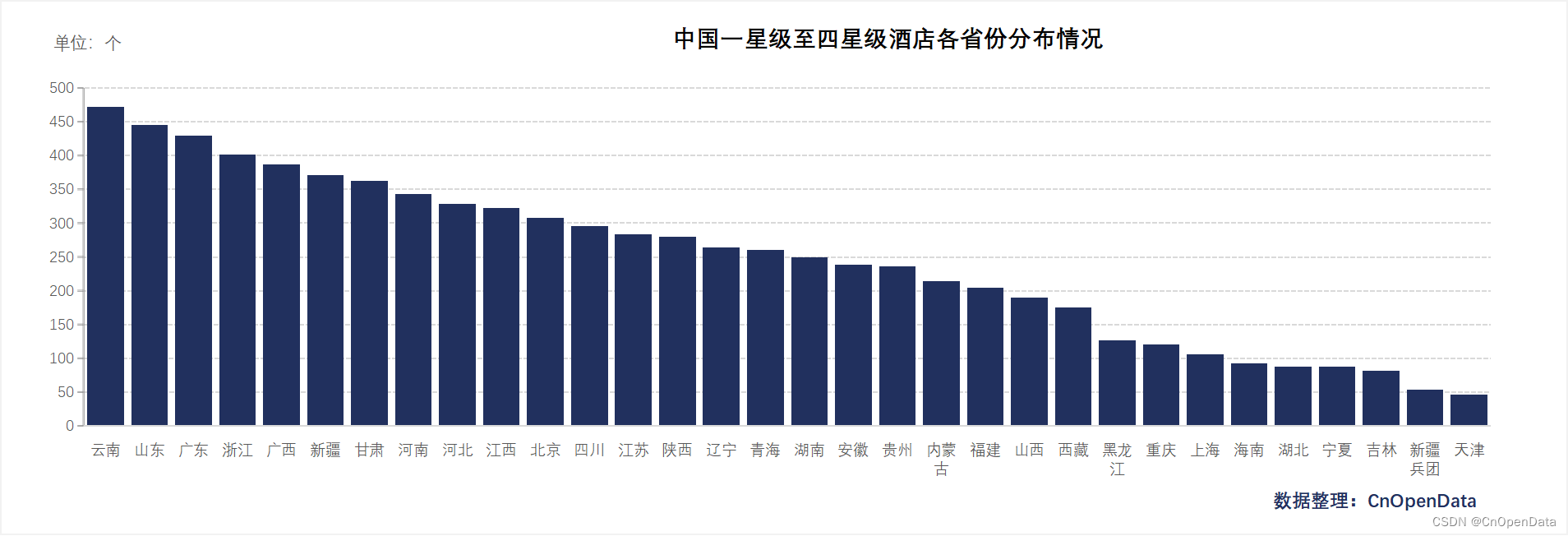

新库上线 | CnOpenData中国星级酒店数据

13. Enregistrement et chargement des modèles

![[Yugong series] go teaching course 006 in July 2022 - automatic derivation of types and input and output](/img/79/f5cffe62d5d1e4a69b6143aef561d9.png)

[Yugong series] go teaching course 006 in July 2022 - automatic derivation of types and input and output

fabulous! How does idea open multiple projects in a single window?

Invalid V-for traversal element style

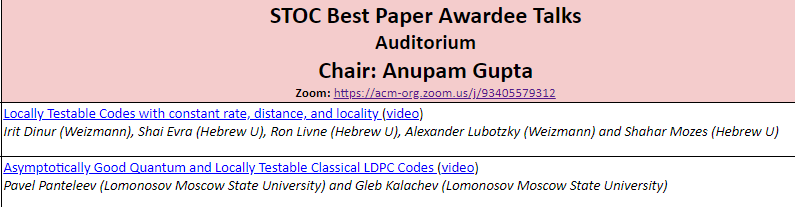

国内首次,3位清华姚班本科生斩获STOC最佳学生论文奖

随机推荐

Jemter distributed

AI遮天传 ML-回归分析入门

Fofa attack and defense challenge record

Service mesh introduction, istio overview

10.CNN应用于手写数字识别

攻防演练中沙盘推演的4个阶段

炒股开户怎么最方便,手机上开户安全吗

jemter分布式

5.过拟合,dropout,正则化

ABAP ALV LVC template

[Yugong series] go teaching course 006 in July 2022 - automatic derivation of types and input and output

Is it safe to open an account on the official website of Huatai Securities?

【GO记录】从零开始GO语言——用GO语言做一个示波器(一)GO语言基础

[go record] start go language from scratch -- make an oscilloscope with go language (I) go language foundation

Analysis of 8 classic C language pointer written test questions

Lecture 1: the entry node of the link in the linked list

Su embedded training - Day5

Class head up rate detection based on face recognition

Cascade-LSTM: A Tree-Structured Neural Classifier for Detecting Misinformation Cascades(KDD20)

Application practice | the efficiency of the data warehouse system has been comprehensively improved! Data warehouse construction based on Apache Doris in Tongcheng digital Department