当前位置:网站首页>[necessary for R & D personnel] how to make your own dataset and display it.

[necessary for R & D personnel] how to make your own dataset and display it.

2022-07-08 00:52:00 【Vertira】

2022.7.5, newest .paddle.fluid Will be eliminated by the official website , Although there are many books on the market , It's better not to use it ,. It is recommended that R & D personnel get started paddle Must from API Starting with .

Here I will introduce the use of paddle api How to make your own training data set ( Follow VOC Data set and COCO Data sets are not the same thing , Learn to make COCO and VOC Please find my previous blog for data set , It 's been written clearly , Relatively simple . This is only for R & D personnel , Not for developers or users ).

In fact, the official website is also very clear , But due to the version update , yes , we have api The calling method has changed , Resulting in several lines of official code , No hair use . Here I personally test , Fill up the hole , Share with you .

Most of them are official website content , Key code I have debugged . my paddle It's the latest of this year 2.3,2022 year 7 month .

Let's talk about the key points ( Some Mandarin , It's better said by the official website , I copied )

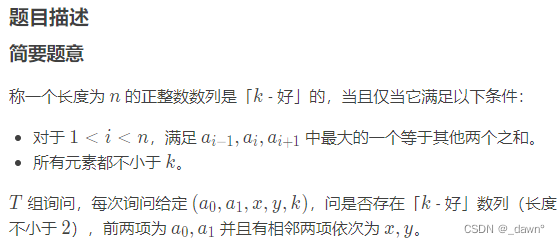

Data set definition and loading

Deep learning models require a lot of data to complete training and evaluation , These data samples may be pictures (image)、 Text (text)、 voice (audio) Etc , The model training process is actually a mathematical calculation process , Therefore, data samples need to go through a series of processing before being sent into the model , Such as converting data format 、 Divide the data set 、 Transform data shape (shape)、 Make data Iterative readers for batch training, etc .

In the propeller frame , The definition and loading of data sets can be completed through the following two core steps :

Define datasets : The original picture saved on disk 、 Samples such as text and corresponding tags are mapped to Dataset, To facilitate subsequent indexing (index) Reading data , stay Dataset Some data transformation can also be carried out in 、 Data augmentation and other preprocessing operations . Recommended for use in the propeller frame paddle.io.Dataset Custom datasets , In addition to paddle.vision.datasets and paddle.text Some classic data sets are built into the propeller under the directory to facilitate direct calling .

Iteratively read data sets : Automatically batch samples from datasets (batch)、 Disorder (shuffle) Wait for the operation , Facilitate iterative reading during training , At the same time, it also supports multi process asynchronous reading function, which can speed up data reading . It can be used in the propeller frame paddle.io.DataLoader Iteratively read data sets .

This paper takes image data set as an example , Text datasets can be referenced NLP Application practice .

One 、 Define datasets

1.1 It is a data set defined by the official website ( I'm not interested in this part , A little )

1.2 Use paddle.io.Dataset Custom datasets

In the actual scene , Generally, you need to use your own data to define the data set , You can pass paddle.io.Dataset Base classes to implement custom datasets .

You can build a subclass that inherits from paddle.io.Dataset , And implement the following three functions :

__init__: Complete dataset initialization , Map the sample file path and the corresponding label in the disk to a list .__getitem__: Define the specified index (index) How to obtain the sample data , Finally, the corresponding index A single piece of data ( Sample data 、 Corresponding label ).__len__: Returns the total number of samples in the dataset .

Here 's how to download MNIST After the original dataset file , use paddle.io.Dataset Code example for defining a dataset .

# Download the original MNIST Dataset and decompress

! wget https://paddle-imagenet-models-name.bj.bcebos.com/data/mnist.tar

! tar -xf mnist.tar

Download the data set , If you are window Direct violence

https://paddle-imagenet-models-name.bj.bcebos.com/data/mnist.tarThen unzip it .

The following is the code for making data sets ( File name : Custom datasets .py):

import os

import cv2

import numpy as np

from paddle.io import Dataset

import paddle.vision.transforms as T

import matplotlib.pyplot as plt

class MyDataset(Dataset):

"""

Step one : Inherit paddle.io.Dataset class

"""

def __init__(self, data_dir, label_path, transform=None):

"""

Step two : Realization __init__ function , Initialize the dataset , Map samples and labels to the list

"""

super(MyDataset, self).__init__()

self.data_list = []

with open(label_path,encoding='utf-8') as f:

for line in f.readlines():

image_path, label = line.strip().split('\t')

image_path = os.path.join(data_dir, image_path)

self.data_list.append([image_path, label])

# Pass in the defined data processing method , As an attribute of the custom dataset class

self.transform = transform

def __getitem__(self, index):

"""

Step three : Realization __getitem__ function , The definition specifies index How to get data when , And return a single piece of data ( Sample data 、 Corresponding label )

"""

# Index based , Take an image from the list

image_path, label = self.data_list[index]

# Read grayscale

image = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE)

# The default internal data format for propeller training is float32, Convert the image data format to float32

image = image.astype('float32')

# Apply data processing methods to images

if self.transform is not None:

image = self.transform(image)

# CrossEntropyLoss requirement label The format is int, take Label Format conversion to int

label = int(label)

# Returns the image and the corresponding label

return image, label

def __len__(self):

"""

Step four : Realization __len__ function , Returns the total number of samples in the dataset

"""

return len(self.data_list)

# Define the image normalization processing method , there CHW Means that the image format must be [C The channel number ,H Height of the image ,W The width of the image ]

transform = T.Normalize(mean=[127.5], std=[127.5], data_format='CHW')

# Print the number of data set samples

train_custom_dataset = MyDataset('mnist/train','mnist/train/label.txt', transform)

test_custom_dataset = MyDataset('mnist/val','mnist/val/label.txt', transform)

print('train_custom_dataset images: ',len(train_custom_dataset), 'test_custom_dataset images: ',len(test_custom_dataset))

The code above , It should be the same as the downloaded file The same path . otherwise Run failed

And then after running , Output such a sentence The size of the dataset And length

train_custom_dataset images: 60000 test_custom_dataset images: 10000 In the code above , Customize a dataset class MyDataset,MyDataset Inherited from paddle.io.Dataset Base class , And implemented __init__,__getitem__ and __len__ Three functions .

stay

__init__Function completes the reading and parsing of the label file , And all the image pathsimage_pathAnd the corresponding labellabelSave to a listdata_listin .stay

__getitem__The specified... Is defined in the function index Method for acquiring corresponding image data , Finished reading the image 、 Preprocessing and image label format conversion , Finally, the image and corresponding label are returnedimage, label.stay

__len__Function__init__The list of data sets initialized in thedata_listlength .

in addition , stay __init__ Functions and __getitem__ Function can also implement some data preprocessing operations , Such as flipping the image 、 tailoring 、 Normalization and other operations , Finally, a single piece of processed data is returned ( Sample data 、 Corresponding label ), This operation can increase the diversity of image data , It helps to enhance the generalization ability of the model . The propeller frame is paddle.vision.transforms There are dozens of image data processing methods built in , Please refer to Data preprocessing chapter .

The following is Try reading a data set ( One of the pictures ), Show me

Directly in the above program Add later ;

for data in train_custom_dataset:

image, label = data

print('shape of image: ',image.shape)

plt.title(str(label))

plt.imshow(image[0])

plt.show()

breakYou can display a picture

Come here , The production and reading of the data set are over .

If it works for you , Welcome to thumb up Collection Pay more attention to .

Two 、 Iteratively read data sets

2.1 Use paddle.io.DataLoader Define data readers ¶

Read through the direct iteration described above Dataset Although the method can realize the access to the data set , However, this access method can only be performed by a single thread and requires manual batching (batch). In the propeller frame , Recommended paddle.io.DataLoader API Read data sets in multiple processes , And can automatically complete the division batch The job of .

# Define and initialize the data reader

train_loader = paddle.io.DataLoader(train_custom_dataset, batch_size=64, shuffle=True, num_workers=1, drop_last=True)

# call DataLoader Read data iteratively

for batch_id, data in enumerate(train_loader()):

images, labels = data

print("batch_id: {}, Training data shape: {}, Tag data shape: {}".format(batch_id, images.shape, labels.shape))

break

batch_id: 0, Training data shape: [64, 1, 28, 28], Tag data shape: [64]

By the above methods , Initialized a data reader train_loader, Used to load training data sets custom_dataset. Several commonly used fields in data readers are as follows :

batch_size: Number of samples read per batch , Examplebatch_size=64Indicates that each batch reads 64 Samples .shuffle: Sample disorder , Exampleshuffle=TrueIndicates that the sample sequence is disturbed when taking data , To reduce the possibility of over fitting .drop_last: Discard incomplete batch samples , Exampledrop_last=TrueIndicates that the number of data set samples cannot be discarded batch_size The last incomplete result of division batch sample .num_workers: Sync / Reading data asynchronously , adoptnum_workersTo set the number of child processes that load data ,num_workers The value of is set to be greater than 0 when , That is, start the multi process mode to load data asynchronously , It can improve the data reading speed .

After defining the data reader , Ready to use for The loop easily iterates through the batch data , Used for model training . It is worth noting that , If you use high-level API Of paddle.Model.fit Read the data set for training , Then just define the dataset Dataset that will do , There is no need to define it separately DataLoader, because paddle.Model.fit Actually, some of them have been encapsulated DataLoader The function of , Details available model training 、 Evaluation and reasoning chapter .

notes : DataLoader In fact, it is through the batch sampler BatchSampler The resulting batch index list , And get... According to the index Dataset Corresponding sample data in , To load batch data .DataLoader The batch size for sampling is defined in 、 Sequence and so on , The corresponding fields include batch_size、shuffle、drop_last. These three fields can also be used as one batch_sampler Fields instead of , And in batch_sampler Import a customized batch sampler instance in . Choose one of the above two methods , The same effect can be achieved . In the following section, the usage of the latter custom sampler is introduced , This usage provides more flexibility in defining sampling rules .

2.2 ( Optional ) Custom sampler

The sampler defines the sampling behavior from the dataset , Such as sequential sampling 、 Batch sampling 、 Random sampling 、 Distributed sampling, etc . The sampler will follow the set sampling rules , Returns the list of indexes in the dataset , Then the data reader Dataloader You can take the corresponding samples from the data set according to the index list .

The propeller frame is paddle.io A variety of samplers are available under the directory , Such as batch sampler BatchSampler、 Distributed batch sampler DistributedBatchSampler、 Sequential sampler SequenceSampler、 Random sampler RandomSampler etc. .

Here are two sample codes , Introduce the usage of sampler .

First , With BatchSampler For example , Introduced in DataLoader Use in BatchSampler Method of obtaining sampling data .

from paddle.io import BatchSampler

# Define a batch sampler , And set the sampling data set source 、 Sampling batch size 、 Whether the order is disordered

bs = BatchSampler(train_custom_dataset, batch_size=8, shuffle=True, drop_last=True)

print("BatchSampler Each iteration returns an index list ")

for batch_indices in bs:

print(batch_indices)

break

# stay DataLoader Use in BatchSampler Get sampling data

train_loader = paddle.io.DataLoader(train_custom_dataset, batch_sampler=bs, num_workers=1)

print(" stay DataLoader Use in BatchSampler, Return a set of sample and label data corresponding to the index ")

for batch_id, data in enumerate(train_loader()):

images, labels = data

print("batch_id: {}, Training data shape: {}, Tag data shape: {}".format(batch_id, images.shape, labels.shape))

break

BatchSampler Each iteration returns an index list

[53486, 39208, 42267, 46762, 33087, 54705, 55986, 20736]

stay DataLoader Use in BatchSampler, Return a set of sample and label data corresponding to the index

batch_id: 0, Training data shape: [8, 1, 28, 28], Tag data shape: [8]

In the above example code , A batch sampler instance is defined bs, Each iteration will return one batch_size Index list of sizes ( In the example, a round of iteration returns 8 Index values ), Data readers train_loader adopt batch_sampler=bs Field is passed into the batch sampler , You can get a corresponding set of sample data according to these indexes . In addition, you can see ,batch_size、shuffle、drop_last These three parameters are only in BatchSampler Set the .

Here is another code example , Compare the sampling behavior of several different samplers .

from paddle.io import SequenceSampler, RandomSampler, BatchSampler, DistributedBatchSampler

class RandomDataset(paddle.io.Dataset):

def __init__(self, num_samples):

self.num_samples = num_samples

def __getitem__(self, idx):

image = np.random.random([784]).astype('float32')

label = np.random.randint(0, 9, (1, )).astype('int64')

return image, label

def __len__(self):

return self.num_samples

train_dataset = RandomDataset(100)

print('----------------- Sequential sampling ----------------')

sampler = SequenceSampler(train_dataset)

batch_sampler = BatchSampler(sampler=sampler, batch_size=10)

for index in batch_sampler:

print(index)

print('----------------- Random sampling ----------------')

sampler = RandomSampler(train_dataset)

batch_sampler = BatchSampler(sampler=sampler, batch_size=10)

for index in batch_sampler:

print(index)

print('----------------- Distributed sampling ----------------')

batch_sampler = DistributedBatchSampler(train_dataset, num_replicas=2, batch_size=10)

for index in batch_sampler:

print(index)

----------------- Sequential sampling ----------------

[0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

[10, 11, 12, 13, 14, 15, 16, 17, 18, 19]

[20, 21, 22, 23, 24, 25, 26, 27, 28, 29]

[30, 31, 32, 33, 34, 35, 36, 37, 38, 39]

[40, 41, 42, 43, 44, 45, 46, 47, 48, 49]

[50, 51, 52, 53, 54, 55, 56, 57, 58, 59]

[60, 61, 62, 63, 64, 65, 66, 67, 68, 69]

[70, 71, 72, 73, 74, 75, 76, 77, 78, 79]

[80, 81, 82, 83, 84, 85, 86, 87, 88, 89]

[90, 91, 92, 93, 94, 95, 96, 97, 98, 99]

----------------- Random sampling ----------------

[44, 29, 37, 11, 21, 53, 65, 3, 26, 23]

[17, 4, 48, 84, 86, 90, 92, 76, 97, 69]

[35, 51, 71, 45, 25, 38, 32, 83, 22, 57]

[47, 55, 39, 46, 78, 61, 68, 66, 18, 41]

[77, 81, 15, 63, 91, 54, 24, 75, 59, 99]

[73, 88, 20, 43, 93, 56, 95, 60, 87, 72]

[70, 98, 1, 64, 0, 16, 33, 14, 80, 89]

[36, 40, 62, 50, 9, 34, 8, 19, 82, 6]

[74, 27, 30, 58, 31, 28, 12, 13, 7, 49]

[10, 52, 2, 94, 67, 96, 79, 42, 5, 85]

----------------- Distributed sampling ----------------

[0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

[20, 21, 22, 23, 24, 25, 26, 27, 28, 29]

[40, 41, 42, 43, 44, 45, 46, 47, 48, 49]

[60, 61, 62, 63, 64, 65, 66, 67, 68, 69]

[80, 81, 82, 83, 84, 85, 86, 87, 88, 89]

From the output of the code, we can see :

Sequential sampling : Output the index of each sample in a sequential manner .

Random sampling : First, disrupt the sequence of samples , Then output the sample index after disorder .

Distributed sampling : Commonly used in distributed training scenarios , Cut the sample data into multiple parts , Put them on different cards for training . In the example

num_replicas=2, The sample will be divided into two cards , So here we only output the index of half the samples .

3、 ... and 、 summary

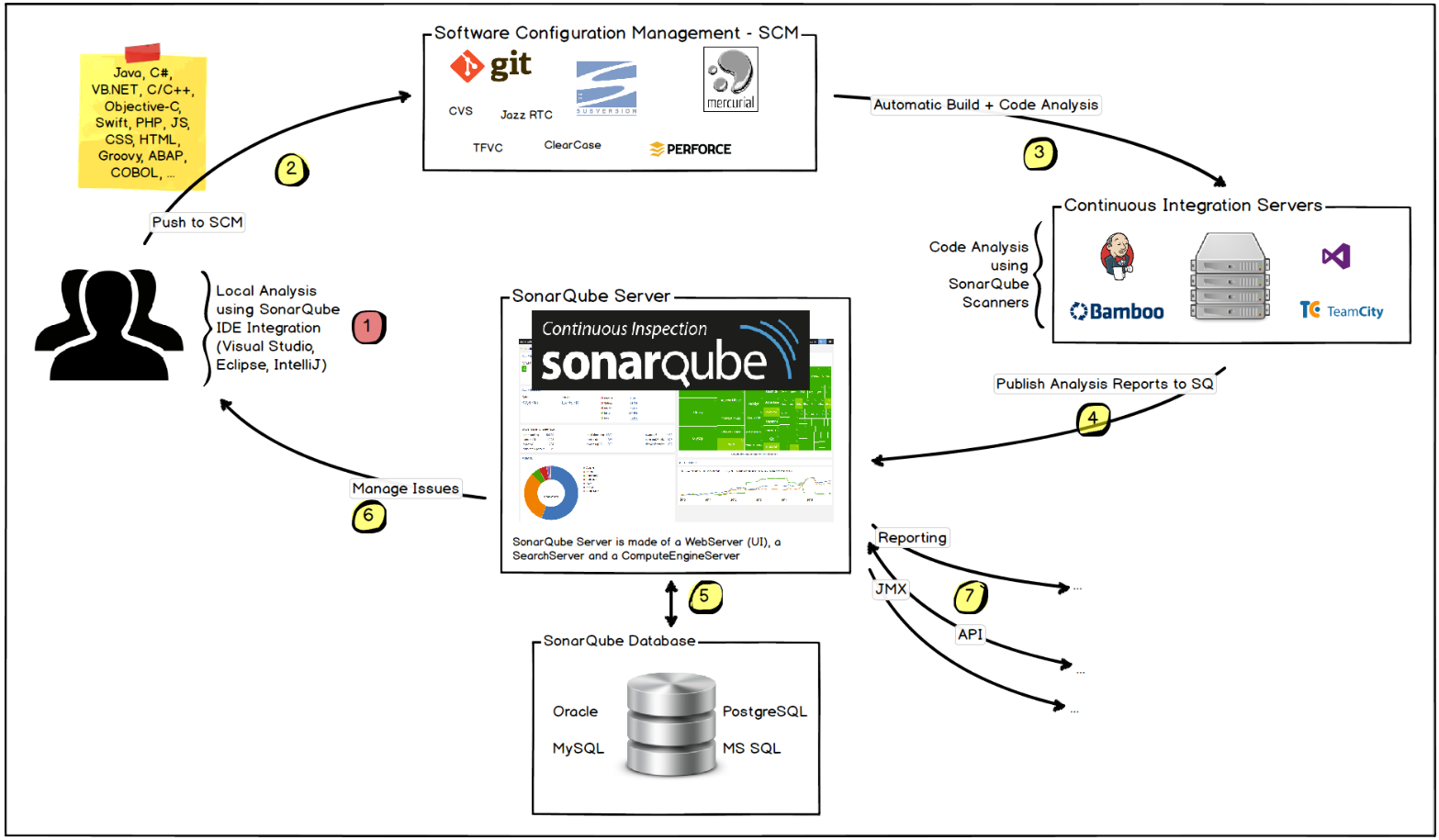

This section introduces the processing flow before the data is sent into the model training in the propeller frame , Summarize the whole process and the key used API As shown in the figure below .

chart 1: Data set definition and loading process

It mainly includes two steps: defining a data set and defining a data reader , In addition, the sampler can be called in the data reader to realize more flexible sampling . among , When defining a dataset , This section only normalizes the data set , To learn more about data enhancement related operations , You can refer to Data preprocessing .

After all the above data processing work is completed , You can enter the next task : model training 、 Evaluation and reasoning .

Refer to the following :

Data set definition and loading - Using document -PaddlePaddle Deep learning platform

Reference resources : Data set definition and loading 、 Data preprocessing

paddle.io.Dataset and paddle.io.DataLoader : Custom datasets and loading functions API

边栏推荐

- 爬虫实战(八):爬表情包

- jemter分布式

- 华为交换机S5735S-L24T4S-QA2无法telnet远程访问

- 韦东山第三期课程内容概要

- 浪潮云溪分布式数据库 Tracing(二)—— 源码解析

- How to learn a new technology (programming language)

- Analysis of 8 classic C language pointer written test questions

- Password recovery vulnerability of foreign public testing

- 基于微信小程序开发的我最在行的小游戏

- A brief history of information by James Gleick

猜你喜欢

玩轉Sonar

ReentrantLock 公平锁源码 第0篇

How to learn a new technology (programming language)

Interface test advanced interface script use - apipost (pre / post execution script)

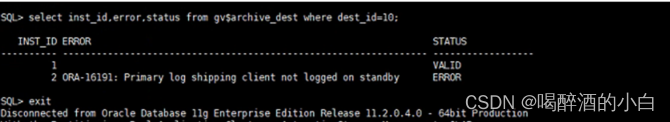

备库一直有延迟,查看mrp为wait_for_log,重启mrp后为apply_log但过一会又wait_for_log

The standby database has been delayed. Check that the MRP is wait_ for_ Log, apply after restarting MRP_ Log but wait again later_ for_ log

SDNU_ ACM_ ICPC_ 2022_ Summer_ Practice(1~2)

How does the markdown editor of CSDN input mathematical formulas--- Latex syntax summary

QT establish signal slots between different classes and transfer parameters

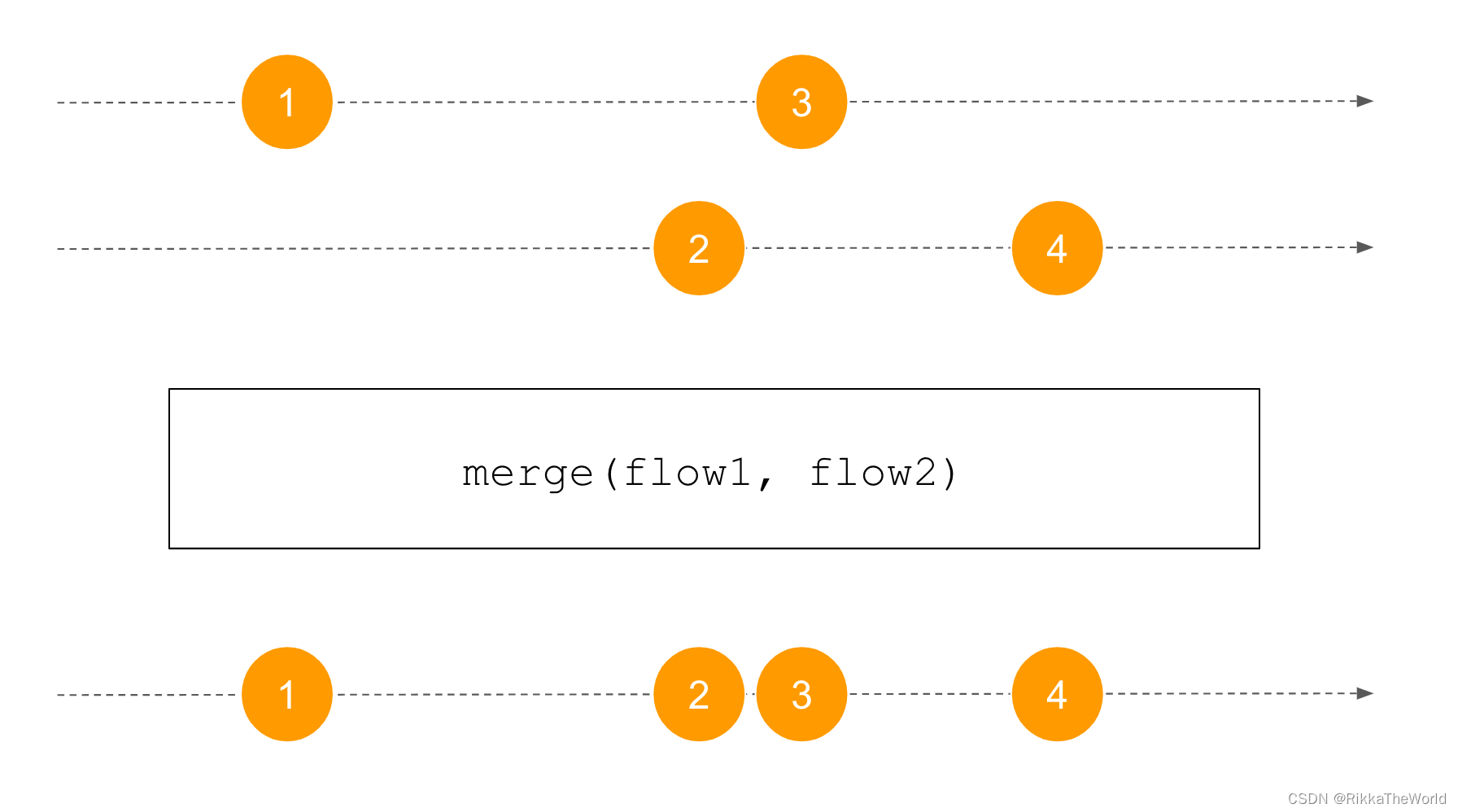

Deep dive kotlin synergy (XXII): flow treatment

随机推荐

攻防世界Web进阶区unserialize3题解

从服务器到云托管,到底经历了什么?

[Yugong series] go teaching course 006 in July 2022 - automatic derivation of types and input and output

Reentrantlock fair lock source code Chapter 0

【obs】Impossible to find entrance point CreateDirect3D11DeviceFromDXGIDevice

ThinkPHP kernel work order system source code commercial open source version multi user + multi customer service + SMS + email notification

Is Zhou Hongyi, 52, still young?

基于人脸识别实现课堂抬头率检测

大二级分类产品页权重低,不收录怎么办?

Cancel the down arrow of the default style of select and set the default word of select

Four stages of sand table deduction in attack and defense drill

LeetCode刷题

Course of causality, taught by Jonas Peters, University of Copenhagen

Cascade-LSTM: A Tree-Structured Neural Classifier for Detecting Misinformation Cascades(KDD20)

哪个券商公司开户佣金低又安全,又靠谱

基于卷积神经网络的恶意软件检测方法

【obs】Impossible to find entrance point CreateDirect3D11DeviceFromDXGIDevice

丸子官网小程序配置教程来了(附详细步骤)

Cascade-LSTM: A Tree-Structured Neural Classifier for Detecting Misinformation Cascades(KDD20)

Smart regulation enters the market, where will meituan and other Internet service platforms go