Single machine high concurrency model design

2022-07-08 00:10:00 【Abbot's temple】

Background

In a microservices architecture, we are used to relying on multiple machines, distributed storage, and caches to support a high-concurrency request model, and we tend to overlook how high concurrency works on a single machine. This article breaks down how a client and a server establish a connection and transfer data, and explains how to design a single-machine high-concurrency model.

The classic C10K problem

The C10K problem asks how a single physical machine can serve 10K users, that is 10,000 users, at the same time. For a Java programmer this is not difficult: with Netty you can build a server that supports more than 10,000 concurrent connections. So how does Netty do it? Let's forget Netty for now and analyze the problem from the beginning. With one connection per user, the server has two things to do:

Manage these 10,000 connections

Handle data transmission on these 10,000 connections

TCP connection and data transmission

Connection establishment

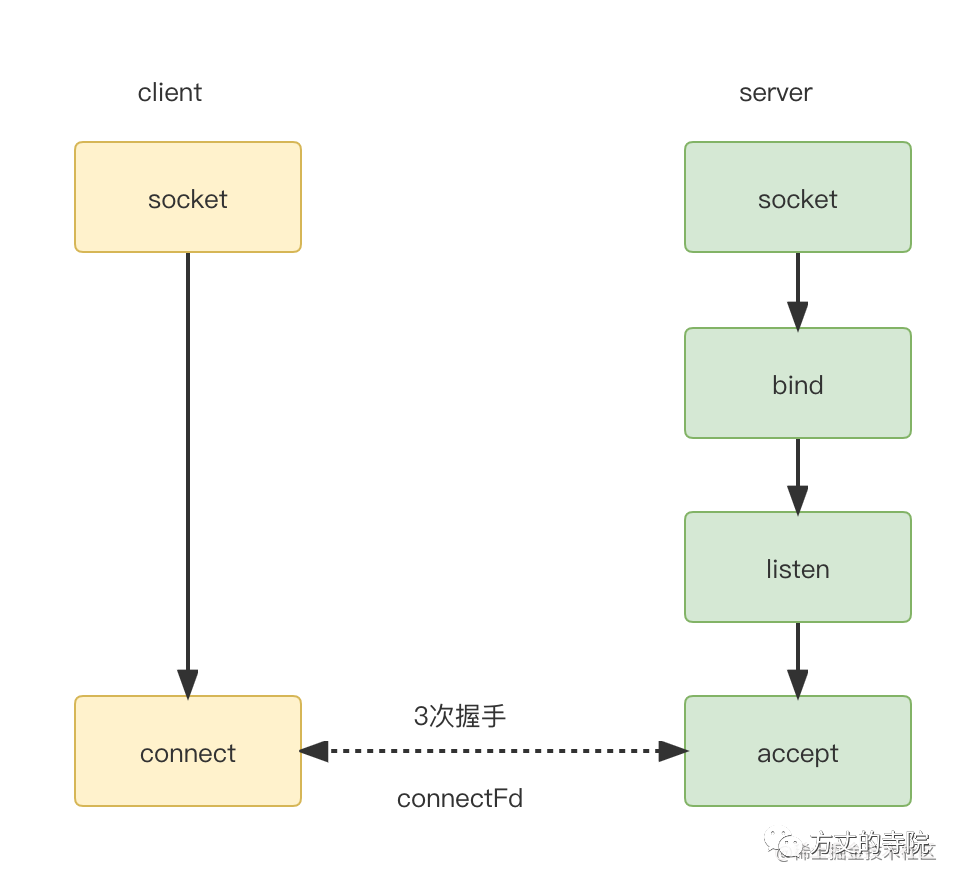

We take a common TCP connection as an example.

This is a familiar picture. This article focuses on the server, so we ignore the client details for now. The server creates a socket, binds it to a port, and listens on it. Finally, accept establishes the connection with the client and returns a connectFd, the connection socket (in Linux, a file descriptor), which uniquely identifies the connection. All subsequent data transmission is based on it.
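The socket, bind, listen, accept sequence can be sketched in Java as follows. This is a minimal illustration, not the article's own code: the class name `AcceptDemo`, the backlog of 128, and the use of the loopback address with an OS-chosen port are assumptions made for the example. In Java, `ServerSocket.bind` performs both the bind and the listen steps, and the `Socket` returned by `accept` plays the role of connectFd.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.ServerSocket;
import java.net.Socket;

public class AcceptDemo {
    /** Runs socket/bind/listen/accept once; returns true if accept() yields the client's connection. */
    static boolean acceptOnce() throws IOException {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (ServerSocket server = new ServerSocket()) {             // create the listening socket
            // bind() in Java also starts listening; port 0 asks the OS for any free port.
            server.bind(new InetSocketAddress(loopback, 0), 128);
            try (Socket client = new Socket(loopback, server.getLocalPort()); // client connects
                 Socket conn = server.accept()) {                     // the connection socket
                // The accepted socket identifies exactly this client connection.
                return conn.getPort() == client.getLocalPort();
            }
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println("accepted the client's connection: " + acceptOnce());
    }
}
```

The client can connect before the server calls `accept` because the kernel completes the handshake for a listening socket and queues the connection until the application accepts it.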

Data transfer

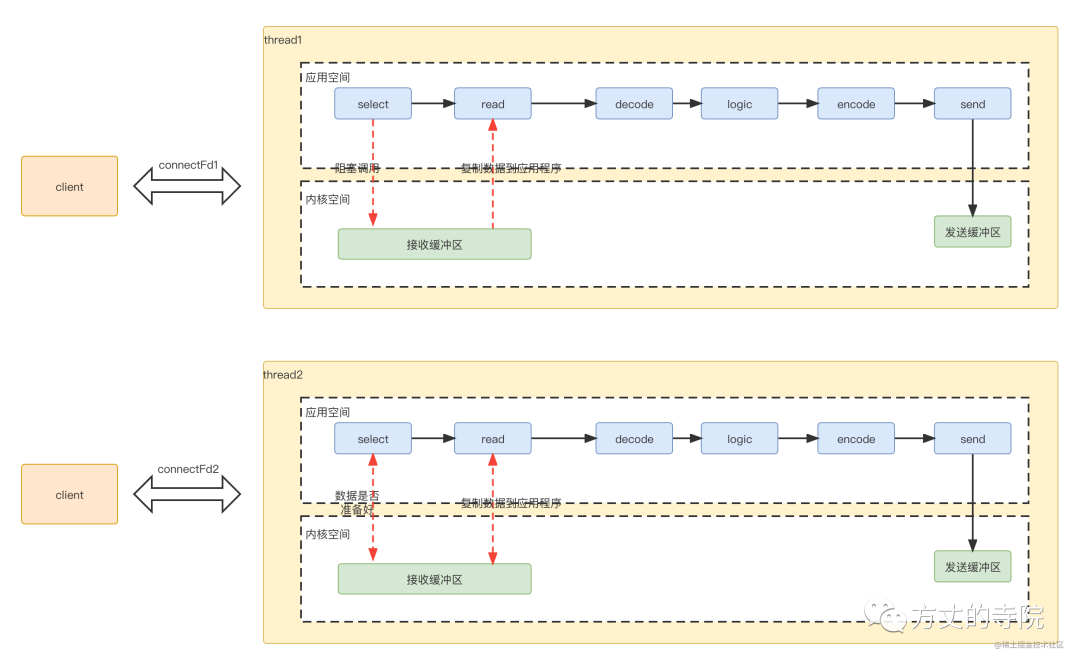

For data transmission, the server starts a thread to process the data. The process is as follows:

select: the application asks the kernel whether the data is ready (because of the window size limit, data is not always available to read). If the data is not ready, the application blocks and waits for an answer.

read: once the kernel determines that the data is ready, it copies the data from kernel space to the application and returns successfully when the copy completes.

The application then decodes the data, runs the business logic, encodes the result, and sends it back to the client.

Because each thread processes the data of exactly one connection, the corresponding threading model is one thread per connection.
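A thread-per-connection server might look like the sketch below. Everything specific here is an assumption for illustration: the class name `ThreadPerConnectionServer`, the line-based protocol, and the upper-casing step that stands in for business logic. The point is only that every accepted connection gets its own thread, which blocks in `readLine` until data arrives.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.Writer;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

public class ThreadPerConnectionServer {
    /** Serves one connection on its own thread: blocking read, decode, logic, encode, send. */
    static void handle(Socket conn) {
        try (Socket c = conn;
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(c.getInputStream(), StandardCharsets.UTF_8));
             Writer out = new OutputStreamWriter(c.getOutputStream(), StandardCharsets.UTF_8)) {
            String line;
            while ((line = in.readLine()) != null) {               // blocks until a full line arrives
                out.write(line.toUpperCase(Locale.ROOT) + "\n");    // placeholder business logic
                out.flush();
            }
        } catch (IOException e) {
            // Connection dropped; the thread simply ends.
        }
    }

    /** Starts the accept loop on a background thread and returns the listening socket. */
    static ServerSocket start() throws IOException {
        ServerSocket server = new ServerSocket(0, 128, InetAddress.getLoopbackAddress());
        Thread acceptLoop = new Thread(() -> {
            try {
                while (true) {
                    Socket conn = server.accept();                  // blocks until a client connects
                    Thread worker = new Thread(() -> handle(conn)); // one new thread per connection
                    worker.setDaemon(true);
                    worker.start();
                }
            } catch (IOException e) {
                // accept() fails once the server socket is closed; stop accepting.
            }
        });
        acceptLoop.setDaemon(true);
        acceptLoop.start();
        return server;
    }

    /** Usage example: connects as a client, sends one line, and returns the server's reply. */
    static String roundTrip(String line) throws IOException {
        try (ServerSocket server = start();
             Socket client = new Socket(InetAddress.getLoopbackAddress(), server.getLocalPort());
             Writer out = new OutputStreamWriter(client.getOutputStream(), StandardCharsets.UTF_8);
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(client.getInputStream(), StandardCharsets.UTF_8))) {
            out.write(line + "\n");
            out.flush();
            return in.readLine();
        }
    }
}
```

With 10,000 connections this design needs 10,000 threads, most of them parked in a blocking read, which is the cost the following sections try to remove.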

Multiplexing

Blocking vs non-blocking

Using one thread per connection requires too many threads and takes up a lot of resources. In addition, when a connection ends its resources are destroyed, and they have to be created again for the next connection. A natural idea is therefore to reuse threads, so that multiple connections share the same thread. This raises a problem with select, the entry point of data transmission. Suppose the thread is handling one connection whose data never becomes ready. Because select blocks, the thread cannot read from other connections even when they have readable data. So the call must not block, otherwise multiple connections cannot share a thread. It has to be non-blocking.
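The difference is easy to observe with Java NIO. In the sketch below (the class name `NonBlockingReadDemo` and the loopback setup are assumptions for the example), the accepted connection is switched to non-blocking mode, so a read with no data pending returns 0 immediately instead of parking the thread.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

public class NonBlockingReadDemo {
    /** Returns the result of read() on a non-blocking connection that has no data pending. */
    static int readWithoutData() throws IOException {
        InetSocketAddress any = new InetSocketAddress(InetAddress.getLoopbackAddress(), 0);
        try (ServerSocketChannel server = ServerSocketChannel.open().bind(any);
             SocketChannel client = SocketChannel.open(server.getLocalAddress());
             SocketChannel conn = server.accept()) {
            conn.configureBlocking(false);               // switch the connection to non-blocking mode
            // The client has sent nothing, so a blocking read would wait here forever.
            return conn.read(ByteBuffer.allocate(1024)); // returns 0 immediately
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println("bytes read: " + readWithoutData());
    }
}
```

Since the call returns right away, the same thread is free to go and check another connection.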

Polling vs event notification

After switching to non-blocking calls, the application has to keep polling the kernel to determine whether each connection is ready.

    for (connectfd fd : connectFds) {
        if (fd.ready) {
            process();
        }
    }

Polling is inefficient and burns a lot of CPU, so the common practice is for the callee to notify the caller with an event instead of having the caller poll. This is IO multiplexing, where the multiple "ways" being multiplexed are inputs such as standard input and connection sockets. A batch of sockets is registered into a group in advance, and when an IO event occurs on any member of the group, the blocked caller is notified that it is ready.

select/poll/epoll

The common implementations of IO multiplexing are select and poll. The two do not differ much; the main difference is that poll has no limit on the maximum number of file descriptors.

Moving from polling to event notification with IO multiplexing means the application no longer has to poll the kernel all the time. However, when it receives an event notification from the kernel, the application does not know which connection the event belongs to, so it has to traverse all registered connections:

    onEvent() {
        // Listening for events
        for (connectfd fd : registerConnectFds) {
            if (fd.ready) {
                process();
            }
        }
    }

Predictably, as the number of connections grows, the time spent grows in proportion. Whereas poll returns only the number of events, epoll returns the array of connectFds that actually have events, which spares the application the traversal.

    onEvent() {
        // Listening for events
        for (connectfd fd : readyConnectFds) {
            process();
        }
    }

Of course, epoll's high performance comes from more than this. There is also edge triggering (edge-triggered), which this article does not cover in detail.
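Java exposes the "only the ready connections" idea through `java.nio.channels.Selector`, whose `selectedKeys()` set corresponds to readyConnectFds in the pseudocode above. To my knowledge the default Selector in OpenJDK on Linux is built on epoll, but the sketch does not depend on that. The class name `ReadySetDemo` and the labels "A" and "B" are assumptions for the example: two connections are registered, only B receives data, and the loop visits only B.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

public class ReadySetDemo {
    /** Registers two connections, makes only "B" readable, and returns the labels the selector reports. */
    static List<String> readyLabels() throws IOException {
        InetSocketAddress any = new InetSocketAddress(InetAddress.getLoopbackAddress(), 0);
        try (Selector selector = Selector.open();
             ServerSocketChannel server = ServerSocketChannel.open().bind(any);
             SocketChannel clientA = SocketChannel.open(server.getLocalAddress());
             SocketChannel connA = server.accept();
             SocketChannel clientB = SocketChannel.open(server.getLocalAddress());
             SocketChannel connB = server.accept()) {
            connA.configureBlocking(false);
            connB.configureBlocking(false);
            // Register both connections in one group, each with a label attached.
            connA.register(selector, SelectionKey.OP_READ, "A");
            connB.register(selector, SelectionKey.OP_READ, "B");

            clientB.write(ByteBuffer.wrap(new byte[] {42})); // only B gets data
            selector.select();                               // blocks until some connection is ready

            List<String> ready = new ArrayList<>();
            Iterator<SelectionKey> it = selector.selectedKeys().iterator();
            while (it.hasNext()) {                           // iterates ready connections only
                SelectionKey key = it.next();
                it.remove();
                ((SocketChannel) key.channel()).read(ByteBuffer.allocate(16)); // consume the data
                ready.add((String) key.attachment());
            }
            return ready;
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println("ready connections: " + readyLabels());
    }
}
```

Connection A is registered but never appears in the loop, so the work per notification scales with the number of ready connections rather than the number of registered ones.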

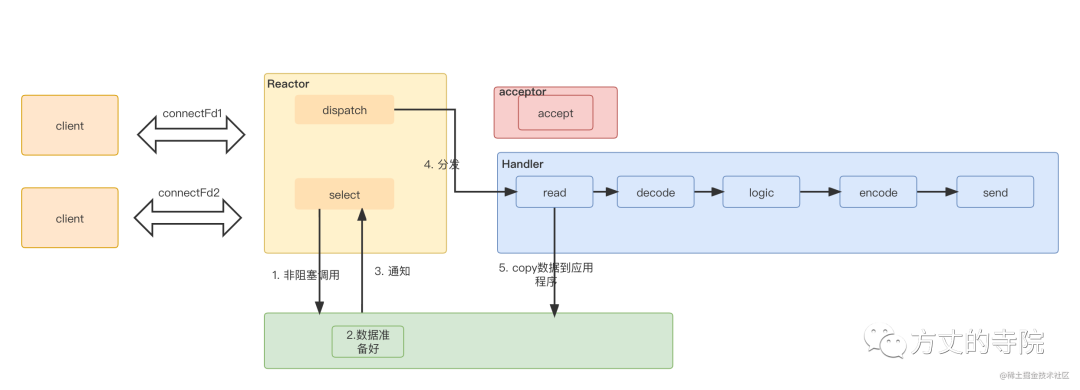

The non-blocking IO + multiplexing process is as follows:

select: the application asks the kernel whether the data is ready (because of the window size limit, data is not always available to read). The call is non-blocking and returns immediately.

When the data is ready in kernel space, the kernel sends a ready-read notification to the application.

The application reads the data, decodes it, runs the business logic, encodes the result, and sends it back to the client.

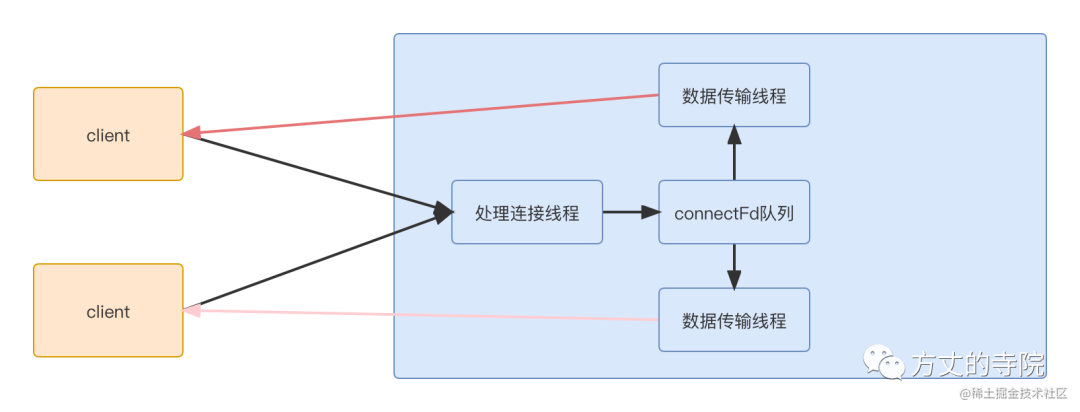

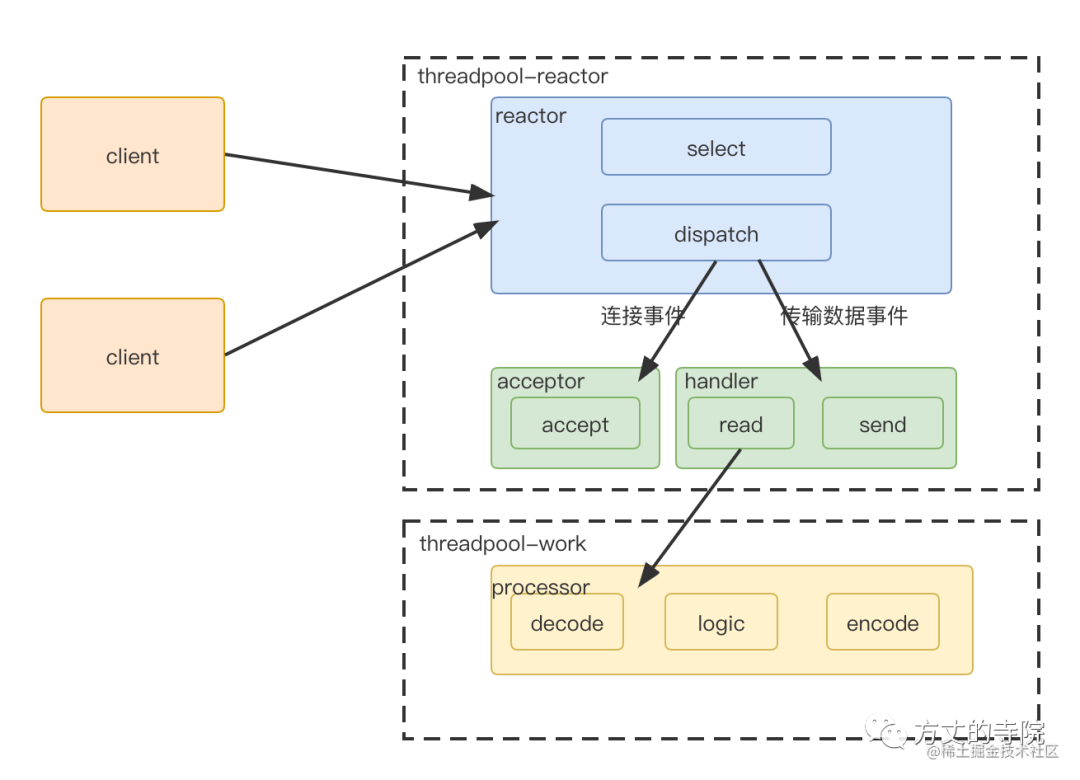

Thread pool division

Above, we used non-blocking calls plus IO multiplexing to solve the local select and read problems. Now let's go over the overall flow again and see how the data processing can be grouped into stages, each handled by its own thread pool to improve efficiency. First of all, there are two kinds of events:

Connection events: handled by the accept action.

Transport events: handled by the select, read, and send actions.

The processing flow for connection events is relatively fixed, with no additional logic, so it needs no further splitting. For transport events, read and send are relatively fixed and the processing logic is similar for every connection, so they can be handled in one thread pool. The concrete logic, that is decode, logic, and encode, differs from connection to connection and can be handled together in a thread pool of its own.

The server is split into 3 parts:

1. The reactor handles all events in one place and dispatches them by type.

2. Connection events are dispatched to the acceptor, and data transmission events are dispatched to the handler.

3. For a data transmission event, the handler reads the data and then hands it to the processor.

Because 1 and 2 are fast to process, they are handled in one thread pool, while the business logic is handled in a separate thread pool.

The above is the famous reactor high-concurrency model.
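The three parts can be sketched with Java NIO as below. This is an illustration under stated assumptions, not Netty's implementation: the class name `MiniReactor`, the pool size of 4, the 1024-byte buffer, and the upper-casing "business logic" are all made up for the example. For brevity the reactor, acceptor, and handler share a single thread here rather than a pool, each read is treated as one complete message, and the processor writes in a simple loop where a fuller server would register write interest with the selector.

```java
import java.io.IOException;
import java.net.*;
import java.nio.ByteBuffer;
import java.nio.channels.*;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;
import java.util.Locale;
import java.util.concurrent.*;

public class MiniReactor implements Runnable, AutoCloseable {
    private final Selector selector;
    private final ServerSocketChannel server;
    private final ExecutorService processors = Executors.newFixedThreadPool(4); // business-logic pool

    public MiniReactor() throws IOException {
        selector = Selector.open();
        server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
        server.configureBlocking(false);
        server.register(selector, SelectionKey.OP_ACCEPT);
    }

    /** Reactor: waits for events and dispatches each one by type. */
    @Override public void run() {
        try {
            while (selector.isOpen()) {
                selector.select();
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();
                    if (!key.isValid()) continue;
                    if (key.isAcceptable()) accept();                                  // connection event
                    else if (key.isReadable()) handle((SocketChannel) key.channel()); // transport event
                }
            }
        } catch (IOException | ClosedSelectorException e) {
            // The selector or server was closed: the reactor is shutting down.
        }
    }

    /** Acceptor: turns a connection event into a registered non-blocking connection. */
    private void accept() throws IOException {
        SocketChannel conn = server.accept();
        if (conn == null) return;
        conn.configureBlocking(false);
        conn.register(selector, SelectionKey.OP_READ);
    }

    /** Handler: reads the bytes, then hands them to the processor pool. */
    private void handle(SocketChannel conn) throws IOException {
        ByteBuffer buf = ByteBuffer.allocate(1024);
        int n;
        try { n = conn.read(buf); } catch (IOException e) { n = -1; } // treat a reset as end of stream
        if (n <= 0) { if (n < 0) conn.close(); return; }
        buf.flip();
        processors.execute(() -> process(conn, buf));
    }

    /** Processor: decode, business logic, encode, send. */
    private void process(SocketChannel conn, ByteBuffer request) {
        String text = StandardCharsets.UTF_8.decode(request).toString();
        ByteBuffer reply = StandardCharsets.UTF_8.encode(text.toUpperCase(Locale.ROOT));
        try {
            while (reply.hasRemaining()) conn.write(reply); // simplification: no OP_WRITE handling
        } catch (IOException e) {
            // The peer went away before the reply was sent.
        }
    }

    @Override public void close() throws IOException {
        processors.shutdown();
        selector.close();
        server.close();
    }

    /** Usage example: starts a reactor, sends one message as a client, and returns the reply. */
    static String roundTrip(String msg) throws IOException {
        try (MiniReactor reactor = new MiniReactor()) {
            Thread loop = new Thread(reactor, "reactor");
            loop.setDaemon(true);
            loop.start();
            int port = reactor.server.socket().getLocalPort();
            InetSocketAddress addr = new InetSocketAddress(InetAddress.getLoopbackAddress(), port);
            try (SocketChannel client = SocketChannel.open(addr)) {
                ByteBuffer out = StandardCharsets.UTF_8.encode(msg);
                ByteBuffer in = ByteBuffer.allocate(out.remaining());
                client.write(out);
                while (in.hasRemaining() && client.read(in) >= 0) { } // blocking client read
                in.flip();
                return StandardCharsets.UTF_8.decode(in).toString();
            }
        }
    }
}
```

Keeping the event loop free of business logic is the design point: a slow `process` call occupies one pool thread, while the reactor thread keeps accepting connections and reading from the others.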

边栏推荐

- Emotional post station 010: things that contemporary college students should understand

- 数据库查询——第几高的数据?

- 手写一个模拟的ReentrantLock

- Uic564-2 Appendix 4 - flame retardant fire test: flame diffusion

- When creating body middleware, express Is there any difference between setting extended to true and false in urlencoded?

- The result of innovation in professional courses such as robotics (Automation)

- Scrapy framework

- QT creator add custom new file / Project Template Wizard

- 关于组织2021-2022全国青少年电子信息智能创新大赛西南赛区(四川)复赛的通知

- 【编程题】【Scratch二级】2019.12 飞翔的小鸟

猜你喜欢

35岁真就成了职业危机?不,我的技术在积累,我还越吃越香了

Connect diodes in series to improve voltage withstand

QT creator add JSON based Wizard

【编程题】【Scratch二级】2019.09 制作蝙蝠冲关游戏

BSS 7230 flame retardant performance test of aviation interior materials

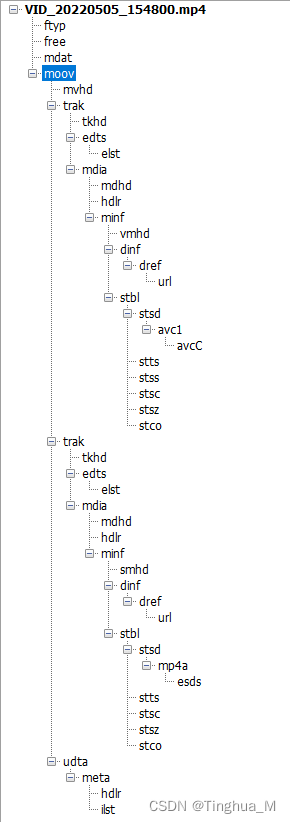

An example analysis of MP4 file format parsing

![[question de programmation] [scratch niveau 2] oiseaux volants en décembre 2019](/img/5e/a105f8615f3991635c9ffd3a8e5836.png)

[question de programmation] [scratch niveau 2] oiseaux volants en décembre 2019

FFA and ICGA angiography

![[basis of recommendation system] sampling and construction of positive and negative samples](/img/4b/753a61b583cf38826b597fd31e5d20.png)

[basis of recommendation system] sampling and construction of positive and negative samples

Kubectl 好用的命令行工具:oh-my-zsh 技巧和窍门

随机推荐

Basic learning of SQL Server -- creating databases and tables with code

Visual Studio Deployment Project - Create shortcut to deployed executable

[programming problem] [scratch Level 2] March 2019 draw a square spiral

ROS from entry to mastery (IX) initial experience of visual simulation: turtlebot3

35岁那年,我做了一个面临失业的决定

Opengl3.3 mouse picking up objects

QT creator add JSON based Wizard

Detailed explanation of interview questions: the history of blood and tears in implementing distributed locks with redis

Solutions to problems in sqlserver deleting data in tables

Database interview questions + analysis

全自动化处理每月缺卡数据,输出缺卡人员信息

Tools for debugging makefiles - tool for debugging makefiles

Redis caching tool class, worth owning~

串联二极管,提高耐压

Anaconda+pycharm+pyqt5 configuration problem: pyuic5 cannot be found exe

自动化测试:Robot FrameWork框架90%的人都想知道的实用技巧

Kubectl 好用的命令行工具:oh-my-zsh 技巧和窍门

52岁的周鸿祎,还年轻吗?

Restricted linear table

Database query - what is the highest data?