A detailed introduction to the semantic segmentation model library segmentation_models_pytorch

2022-07-08 00:45:00 【Ten thousand miles and a bright future arrived in an instant】

segmentation_models_pytorch (hereafter smp) is a high-level model library for semantic segmentation. It supports 9 segmentation architectures and more than 400 encoders. At first I was not interested in a library that supports only a handful of networks. However, the top solutions in several competitions all used Unet++ with an EfficientNet encoder, and that got my attention.

If you want a larger and more comprehensive set of semantic segmentation models, MMSegmentation is recommended. To use MMSegmentation as a plain model library, see my earlier post "pytorch 24: Using MMSegmentation as a PyTorch semantic segmentation model library (model training and deployment implemented)": https://hpg123.blog.csdn.net/article/details/124459439

MMSegmentation is a semantic segmentation toolkit released by SenseTime as part of the OpenMMLab project, and it contains many segmentation models. That post was motivated by two points. First, MMSegmentation is very heavily wrapped: although it depends on PyTorch, training works differently and leaves users little freedom (custom losses, custom learning rate schedulers and custom data loaders all have to be learned again), which I found hard to accept. Second, the other PyTorch model libraries on GitHub are too limited: they support few models, and most only cover models from before 2020.

Back to the point. The rest of this post describes how to use smp and how to build models with it. The smp project address is: https://github.com/qubvel/segmentation_models.pytorch

Install smp:

pip install segmentation-models-pytorch

1、 Basic introduction

9 model architectures (including the well-known Unet):

- Unet [paper] [docs]

- Unet++ [paper] [docs]

- MAnet [paper] [docs]

- Linknet [paper] [docs]

- FPN [paper] [docs]

- PSPNet [paper] [docs]

- PAN [paper] [docs]

- DeepLabV3 [paper] [docs]

- DeepLabV3+ [paper] [docs]

113 available encoders (plus more than 400 encoders from timm; all encoders have pre-trained weights for faster and better convergence). They are mainly variants of the following networks:

ResNet

ResNeXt

ResNeSt

Res2Ne(X)t

RegNet(x/y)

GERNet

SE-Net

SK-ResNe(X)t

DenseNet

Inception

EfficientNet

MobileNet

DPN

VGG

Common losses for training routines. The supported losses are listed below (in segmentation_models_pytorch.losses):

from .jaccard import JaccardLoss

from .dice import DiceLoss

from .focal import FocalLoss

from .lovasz import LovaszLoss

from .soft_bce import SoftBCEWithLogitsLoss

from .soft_ce import SoftCrossEntropyLoss

from .tversky import TverskyLoss

from .mcc import MCCLoss

Common metrics for training routines (in segmentation_models_pytorch.metrics):

from .functional import (

get_stats,

fbeta_score,

f1_score,

iou_score,

accuracy,

precision,

recall,

sensitivity,

specificity,

balanced_accuracy,

positive_predictive_value,

negative_predictive_value,

false_negative_rate,

false_positive_rate,

false_discovery_rate,

false_omission_rate,

positive_likelihood_ratio,

negative_likelihood_ratio,

)

2、 Model construction

Building a model in smp is very convenient: specify the encoder name, the pre-trained weights, the number of input channels and the number of output channels, and that is enough.

import segmentation_models_pytorch as smp

model = smp.Unet(

encoder_name="resnet34", # choose encoder, e.g. mobilenet_v2 or efficientnet-b7

encoder_weights="imagenet", # use `imagenet` pre-trained weights for encoder initialization

in_channels=1, # model input channels (1 for gray-scale images, 3 for RGB, etc.)

classes=3, # model output channels (number of classes in your dataset)

)

But sometimes the network structure needs to be modified, and more detailed parameters can be specified (such as the depth of the network, or whether an auxiliary head is needed; by default the auxiliary head here is a classification head). Note that in a Unet the number of encoder stages and the number of decoder stages must be the same (the number of filters in each stage can be adjusted as needed).
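A minimal sketch of those more detailed parameters, assuming the encoder_depth, decoder_channels and aux_params keyword arguments of smp.Unet. The helpers unet_kwargs and build_unet and the chosen channel widths are illustrative names and values, not part of smp. The smp import is kept inside build_unet so the arguments can be inspected without the library installed.

```python
def unet_kwargs(encoder_depth=4, decoder_channels=(256, 128, 64, 32), classes=3):
    """Collect keyword arguments for smp.Unet (hypothetical helper, not part of smp)."""
    # A Unet needs exactly one decoder stage per encoder stage.
    if encoder_depth != len(decoder_channels):
        raise ValueError(
            f"encoder_depth={encoder_depth}, but {len(decoder_channels)} "
            "decoder stages were given"
        )
    return dict(
        encoder_name="resnet34",
        encoder_weights=None,               # no pre-trained weights in this sketch
        encoder_depth=encoder_depth,        # number of encoder stages
        decoder_channels=decoder_channels,  # filter count of each decoder stage
        in_channels=1,
        classes=classes,
        # Optional auxiliary classification head on top of the encoder output.
        aux_params=dict(pooling="avg", dropout=0.2, classes=classes),
    )


def build_unet(**overrides):
    """Build the model; requires segmentation_models_pytorch to be installed."""
    import segmentation_models_pytorch as smp

    return smp.Unet(**unet_kwargs(**overrides))


kwargs = unet_kwargs()
```

When aux_params is set, the model should return both a mask and a class label from its forward call; leave aux_params out to get only the mask.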

3、 The concrete structure of the model

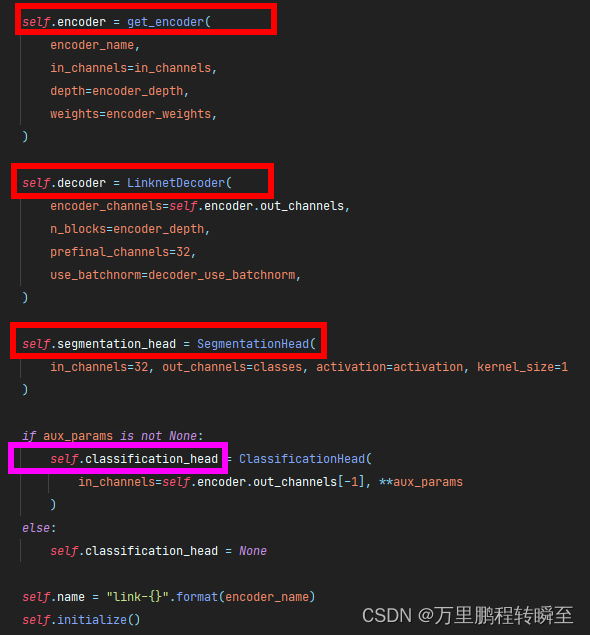

In smp, all models share the following structure: encoder, decoder and segmentation_head. The encoder is selected by the arguments passed in. The decoder is determined by the specific model class (the decoder of every smp model outputs a single tensor, never a list; for example, the multi-scale features of PSPNet are fused by a conv inside the decoder before output). The segmentation_head is determined by the classes argument passed in (it is just a simple conv layer).

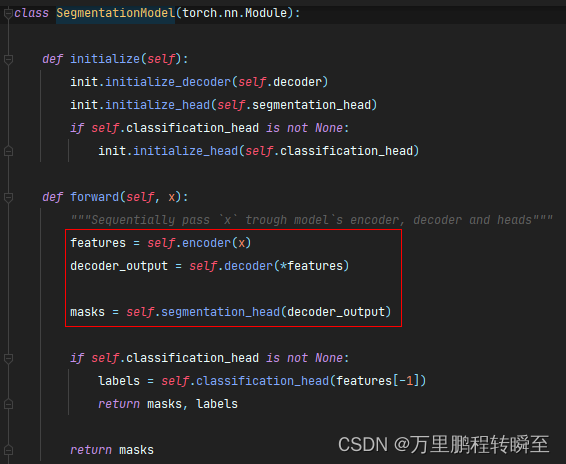

The forward process of all smp models is shown in the figure below.
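In code form, the encoder, decoder and head pipeline described above can be sketched as follows. This is a plain-Python stand-in, not smp code: ToySegmentationModel, toy_encoder, toy_decoder and make_head are hypothetical names, and numbers stand in for tensors so the data flow is easy to follow.

```python
class ToySegmentationModel:
    """Stand-in for the encoder -> decoder -> head structure (not smp code)."""

    def __init__(self, encoder, decoder, segmentation_head, classification_head=None):
        self.encoder = encoder
        self.decoder = decoder
        self.segmentation_head = segmentation_head
        self.classification_head = classification_head  # optional auxiliary head

    def forward(self, x):
        features = self.encoder(x)               # list of multi-scale features
        decoder_output = self.decoder(features)  # fused into a single value
        masks = self.segmentation_head(decoder_output)
        if self.classification_head is not None:
            # The auxiliary head only looks at the deepest encoder feature.
            labels = self.classification_head(features[-1])
            return masks, labels
        return masks


def toy_encoder(x):
    # Three "stages", each halving the previous value.
    return [x, x / 2, x / 4]


def toy_decoder(features):
    # Fuse all scales into one output, as a real decoder would with convs.
    return sum(features)


def make_head(classes):
    # One output channel per class.
    return lambda value: [value] * classes


model = ToySegmentationModel(toy_encoder, toy_decoder, make_head(classes=3))
output = model.forward(8.0)
```

The point of the sketch is the shape of the data: the encoder returns a list, the decoder collapses it into one output, and the head only changes the number of channels.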

边栏推荐

- 3年经验,面试测试岗20K都拿不到了吗?这么坑?

- Cascade-LSTM: A Tree-Structured Neural Classifier for Detecting Misinformation Cascades(KDD20)

- 基于微信小程序开发的我最在行的小游戏

- 接口测试要测试什么?

- [programming problem] [scratch Level 2] 2019.09 make bat Challenge Game

- 8道经典C语言指针笔试题解析

- RPA cloud computer, let RPA out of the box with unlimited computing power?

- paddle一个由三个卷积层组成的网络完成cifar10数据集的图像分类任务

- 玩转Sonar

- 从服务器到云托管,到底经历了什么?

猜你喜欢

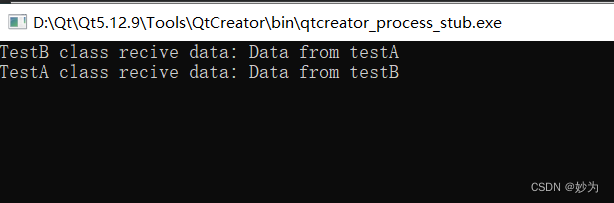

QT establish signal slots between different classes and transfer parameters

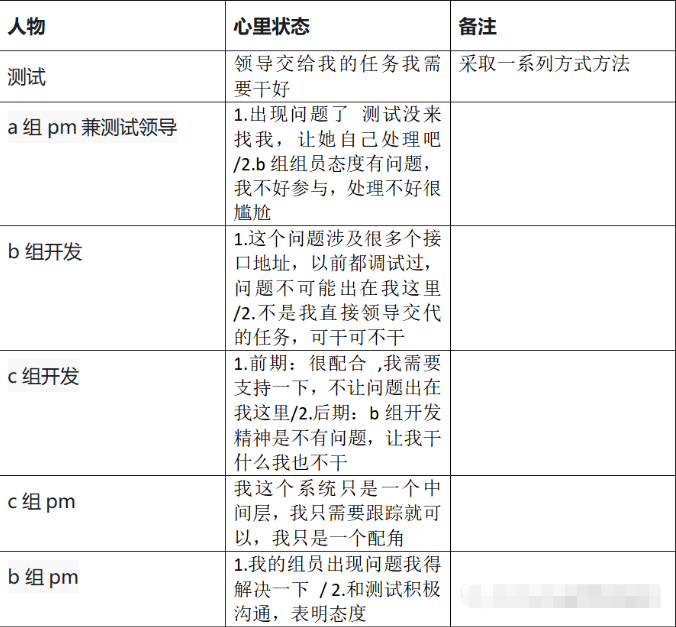

What if the testing process is not perfect and the development is not active?

![[programming problem] [scratch Level 2] 2019.09 make bat Challenge Game](/img/81/c84432a7d7c2fe8ef377d8c13991d6.png)

[programming problem] [scratch Level 2] 2019.09 make bat Challenge Game

DNS 系列(一):为什么更新了 DNS 记录不生效?

Qt不同类之间建立信号槽,并传递参数

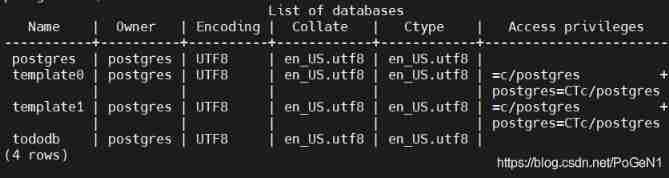

SQL knowledge summary 004: Postgres terminal command summary

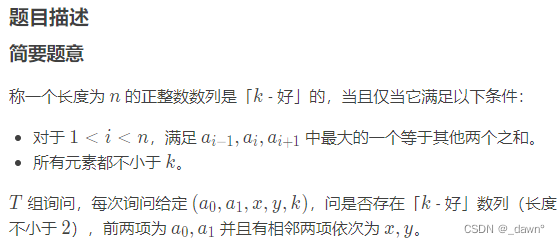

SDNU_ACM_ICPC_2022_Summer_Practice(1~2)

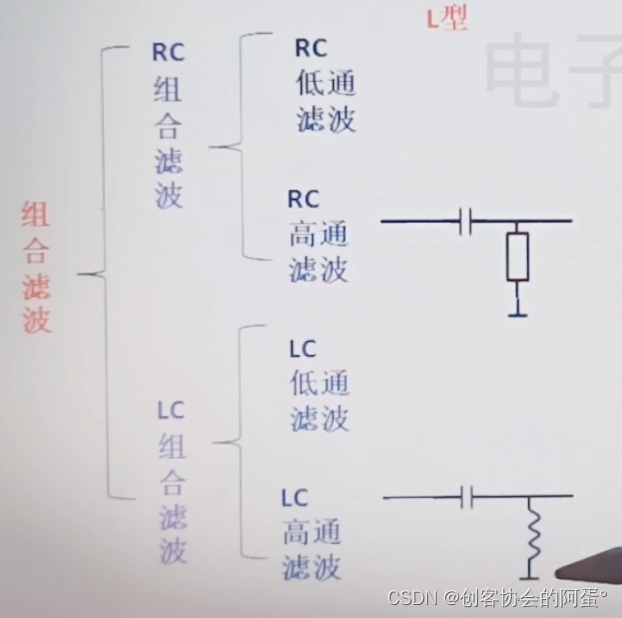

【笔记】常见组合滤波电路

RPA云电脑,让RPA开箱即用算力无限?

5G NR 系统消息

随机推荐

商品的设计等整个生命周期,都可以将其纳入到产业互联网的范畴内

深潜Kotlin协程(二十三 完结篇):SharedFlow 和 StateFlow

Course of causality, taught by Jonas Peters, University of Copenhagen

5g NR system messages

How to add automatic sorting titles in typora software?

C language 001: download, install, create the first C project and execute the first C language program of CodeBlocks

ThinkPHP kernel work order system source code commercial open source version multi user + multi customer service + SMS + email notification

"An excellent programmer is worth five ordinary programmers", and the gap lies in these seven key points

Basic mode of service mesh

ABAP ALV LVC template

The method of server defense against DDoS, Hangzhou advanced anti DDoS IP section 103.219.39 x

Smart regulation enters the market, where will meituan and other Internet service platforms go

【obs】Impossible to find entrance point CreateDirect3D11DeviceFromDXGIDevice

Lecture 1: the entry node of the link in the linked list

接口测试进阶接口脚本使用—apipost(预/后执行脚本)

Emotional post station 010: things that contemporary college students should understand

How to learn a new technology (programming language)

[OBS] the official configuration is use_ GPU_ Priority effect is true

Reentrantlock fair lock source code Chapter 0

Prompt configure: error: required tool not found: libtool solution when configuring and installing crosstool ng tool