
【Sparse-to-Dense】《Sparse-to-Dense:Depth Prediction from Sparse Depth Samples and a Single Image》

2022-07-02 07:43:00 【bryant_meng】

ICRA-2018


1 Background and Motivation

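Depth sensing and depth estimation are critical in engineering applications such as robotics, autonomous driving, augmented reality (AR), and 3D mapping.

However, each of the existing depth-sensing approaches has limitations when deployed in practice: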

1)3D LiDARs are cost-prohibitive

2)Structured-light-based depth sensors (e.g. Kinect) are sunlight-sensitive and power-consuming

3)Stereo cameras require a large baseline and careful calibration for accurate triangulation, and usually fail in featureless regions

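Monocular cameras are small, low-cost, energy-efficient, and ubiquitous in consumer electronics, so monocular depth estimation has become an active area of interest.

However, the accuracy and reliability of such methods are still far from practical (despite significant improvements in recent years).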

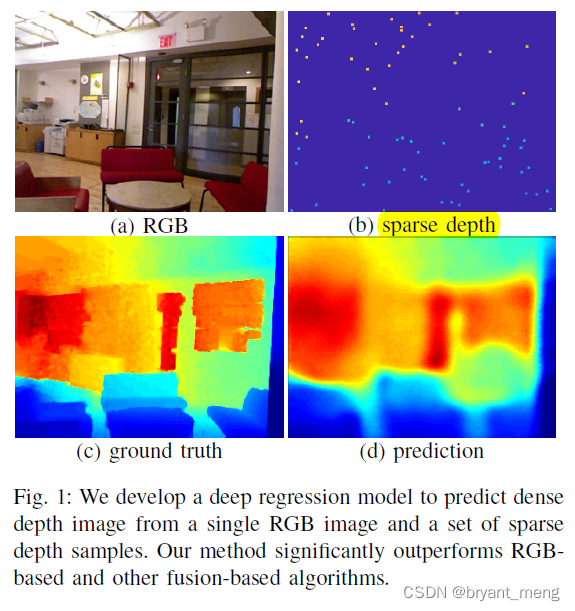

On top of the RGB image, the authors add sparse depth measurements for depth estimation: a few sparse depth samples drastically improve depth reconstruction performance.

2 Related Work

- RGB-based depth prediction

- hand-crafted features

- probabilistic graphical models

- Non-parametric approaches

- Semi-supervised learning

- unsupervised learning

- Depth reconstruction from sparse samples

- Sensor fusion

3 Advantages / Contributions

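RGB + sparse depth for depth prediction from a single image.

PS: the network architecture is not really novel, and the sparse-depth multi-modal input also borrows from other people's ideas (though the sampling scheme is different).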

4 Method

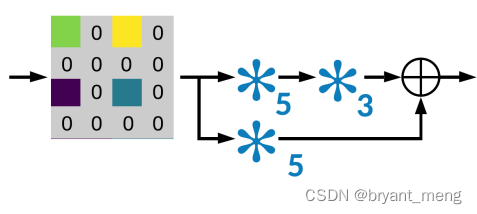

1)Overall architecture

The network takes an encoder-decoder form.

The UpProj module has the following form:

2)Depth Sampling

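Depth samples are drawn with a Bernoulli probability (e.g., a coin toss, where each outcome is independent of the others): $p = \frac{m}{n}$, where $m$ is the target number of depth samples and $n$ is the number of valid depth pixels in $D^*$.

A Bernoulli trial is a random experiment that is repeated independently under identical conditions and has only two possible outcomes: the event either occurs or it does not. If the experiment is repeated independently n times, the sequence of trials is called an n-fold Bernoulli trial (or Bernoulli scheme).

$D^*$: the complete depth map (dense depth map)

$D$: the sparse depth map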
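The sampling step described above can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the authors' code: the function name `sample_sparse_depth`, the nested-list depth map, and the convention that a depth of 0 marks an invalid pixel are all assumptions made for this example.

```python
import random


def sample_sparse_depth(dense, m, rng):
    """Return a sparse copy of `dense`, keeping each valid pixel with p = m / n."""
    # Assumption: a depth of 0 marks an invalid (missing) pixel.
    valid = [(i, j) for i, row in enumerate(dense)
             for j, d in enumerate(row) if d > 0]
    n = len(valid)
    p = min(1.0, m / n) if n else 0.0
    sparse = [[0.0] * len(row) for row in dense]
    for i, j in valid:
        # One independent Bernoulli trial per valid pixel.
        if rng.random() < p:
            sparse[i][j] = dense[i][j]
    return sparse


rng = random.Random(0)
# Toy 20x20 dense depth map with one invalid pixel.
dense = [[1.0 + 0.1 * (i + j) for j in range(20)] for i in range(20)]
dense[0][0] = 0.0
sparse = sample_sparse_depth(dense, m=40, rng=rng)
kept = sum(d > 0 for row in sparse for d in row)
print(kept)
```

Because every pixel is an independent trial, the number of samples actually kept equals m only in expectation and varies from draw to draw.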

3)Data Augmentation

Scale / Rotation / Color Jitter / Color Normalization / Flips

For scale and rotation, nearest-neighbor interpolation is used to avoid creating spurious sparse depth points.
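A toy 1-D example may help show why nearest-neighbor interpolation matters for a sparse depth map. The sketch below is an assumption-laden illustration (the helper names `resize_nearest` and `resize_linear` are hypothetical, and it resizes a single row rather than a full image): linear interpolation blends real samples with the zeros around them and produces depth values that were never measured, while nearest-neighbor only copies existing values.

```python
def resize_nearest(row, new_len):
    """Nearest-neighbor resize: each output copies exactly one input sample."""
    n = len(row)
    # Integer arithmetic maps the centre of output pixel k to an input index.
    return [row[((2 * k + 1) * n) // (2 * new_len)] for k in range(new_len)]


def resize_linear(row, new_len):
    """Linear resize: each output blends the two neighbouring input samples."""
    n = len(row)
    out = []
    for k in range(new_len):
        x = (k + 0.5) * n / new_len - 0.5
        x = min(max(x, 0.0), n - 1.0)
        lo = int(x)
        hi = min(lo + 1, n - 1)
        t = x - lo
        out.append((1 - t) * row[lo] + t * row[hi])
    return out


# Sparse depth row: 0 means "no measurement", 4.0 and 2.0 are real samples.
sparse_row = [0.0, 0.0, 4.0, 0.0, 0.0, 0.0, 2.0, 0.0]
nearest = resize_nearest(sparse_row, 12)
linear = resize_linear(sparse_row, 12)
print(nearest)
print(linear)
```

In the nearest-neighbor output every value is still 0, 2.0, or 4.0, whereas the linear output contains in-between depths that correspond to no real measurement.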

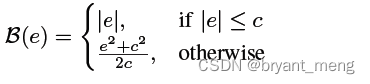

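4)Loss Function

- l1

- l2: sensitive to outliers; yields over-smooth boundaries instead of sharp transitions

- berHu

berHu combines l1 and l2: it behaves like l1 for small errors and like l2 beyond a threshold.

Letting the results speak for themselves, the authors use l1.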
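To make the three candidate losses concrete, here is a minimal sketch in plain Python. It uses the common definition of berHu (reverse Huber): |e| when |e| ≤ c, and (e² + c²) / (2c) otherwise. Setting c to 20% of the largest absolute error in the batch is an assumption of this sketch, as are the function names `berhu` and `mean_loss`; it is not the authors' training code.

```python
def berhu(e, c):
    """Reverse Huber: l1 for small errors, scaled l2 beyond the threshold c."""
    a = abs(e)
    return a if a <= c else (a * a + c * c) / (2 * c)


def mean_loss(pred, target, kind="l1"):
    """Mean l1, l2, or berHu loss over paired predictions and targets."""
    errors = [p - t for p, t in zip(pred, target)]
    if kind == "l1":
        return sum(abs(e) for e in errors) / len(errors)
    if kind == "l2":
        return sum(e * e for e in errors) / len(errors)
    if kind == "berhu":
        # Assumption: threshold is 20% of the largest absolute error in the batch.
        c = 0.2 * max(abs(e) for e in errors)
        if c == 0:
            return 0.0
        return sum(berhu(e, c) for e in errors) / len(errors)
    raise ValueError(f"unknown loss: {kind}")


pred = [1.0, 2.5, 3.0, 9.0]
target = [1.0, 2.0, 3.5, 4.0]
for kind in ("l1", "l2", "berhu"):
    print(kind, mean_loss(pred, target, kind))
```

The single large error (9.0 vs 4.0) dominates the l2 value far more than the l1 value, which illustrates the outlier sensitivity noted in the list above.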

5 Experiments

5.1 Datasets

NYU-Depth-v2

464 different indoor scenes: 249 for training and 215 for testing

The small labeled test dataset with 654 images is used for evaluating the final performance

KITTI Odometry Dataset

The KITTI dataset is more challenging for depth prediction, since the maximum distance is 100 meters as opposed to only 10 meters in the NYU-Depth-v2 dataset.

Evaluation metrics

RMSE: root mean squared error

REL: mean absolute relative error

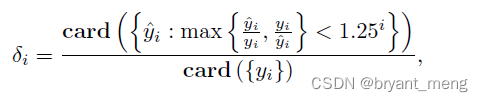

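$\delta_i$: the percentage of predicted pixels whose relative error is within a threshold:

$$\delta_i = \frac{\mathrm{card}\left(\left\{\hat{y}_i : \max\left\{\frac{\hat{y}_i}{y_i}, \frac{y_i}{\hat{y}_i}\right\} < 1.25^i\right\}\right)}{\mathrm{card}\left(\left\{y_i\right\}\right)}$$

where

- card: the cardinality of a set (simply put, a count of its elements)

- $\hat{y}$: prediction

- $y$: ground truth (GT)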
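The three metrics can be computed as in the sketch below, written in plain Python over flat lists of depth values. RMSE is the root mean squared error, REL is the mean of |ŷ − y| / y, and δ_i is the fraction of pixels with max(ŷ / y, y / ŷ) < 1.25^i. The function name `depth_metrics` and the rule that pixels with ground truth 0 are skipped as invalid are assumptions of this example.

```python
import math


def depth_metrics(pred, gt):
    """Return (rmse, rel, deltas) over pixels with valid ground truth."""
    # Assumption: ground-truth depth of 0 marks an invalid pixel and is skipped.
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    n = len(pairs)
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    rel = sum(abs(p - g) / g for p, g in pairs) / n
    deltas = {}
    for i in (1, 2, 3):
        thr = 1.25 ** i
        # A non-positive prediction can never satisfy the ratio test.
        hits = sum(1 for p, g in pairs if p > 0 and max(p / g, g / p) < thr)
        deltas[i] = hits / n
    return rmse, rel, deltas


pred = [1.0, 2.0, 3.0, 5.0]
gt = [1.0, 2.0, 2.0, 0.0]  # last pixel has no ground truth
rmse, rel, deltas = depth_metrics(pred, gt)
print(rmse, rel, deltas)
```

Lower is better for RMSE and REL, while higher is better for each δ_i.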

For more related metrics, see "Monocular depth estimation metrics: SILog, SqRel, AbsRel, RMSE, RMSE(log)"

5.2 RESULTS

1)Architecture Evaluation

DeConv3 outperforms DeConv2,

and UpProj outperforms DeConv3 (with an even larger receptive field of 4x4, the UpProj module outperforms the others)

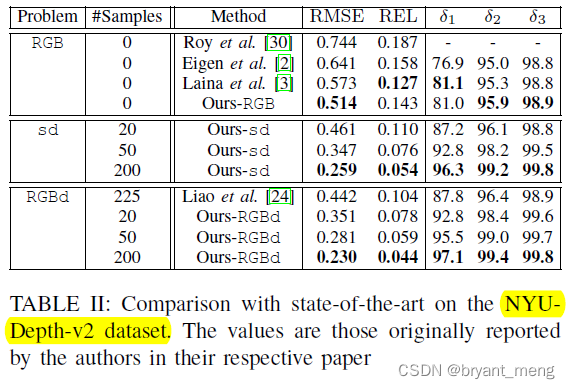

2)Comparison with the State-of-the-Art

NYU-Depth-v2 Dataset

sd is short for sparse-depth, i.e., the input contains no RGB.

Now for some visualizations:

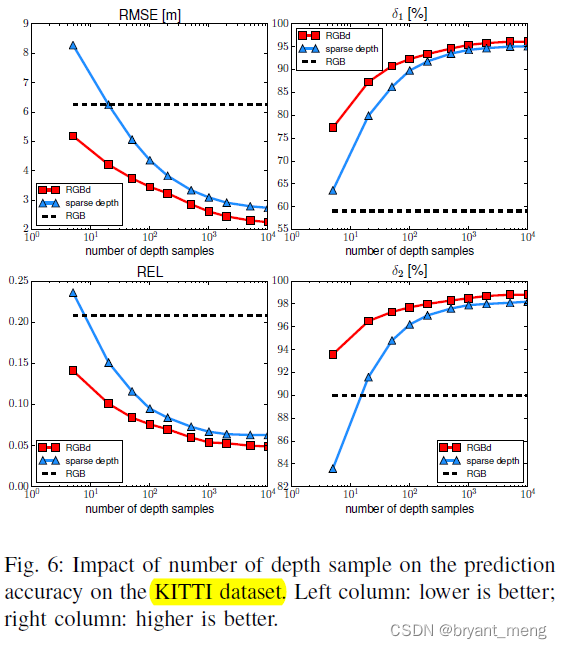

KITTI Dataset

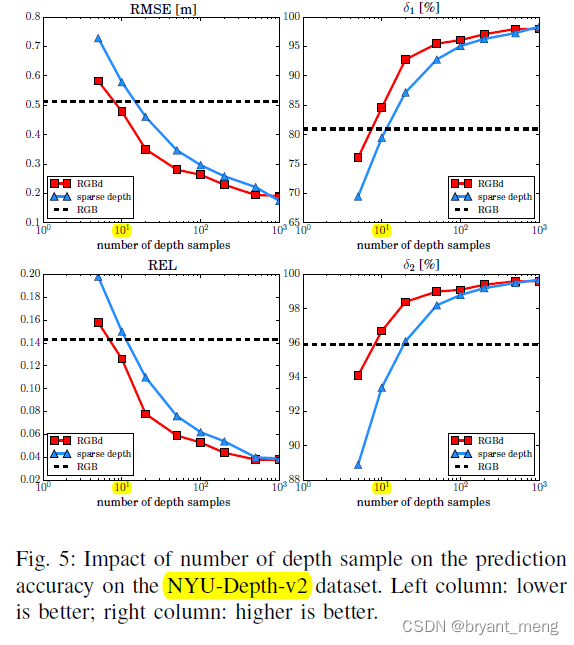

3)On Number of Depth Samples

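With sparse depth alone, on the order of $10^1$ samples is already comparable to RGB, and at $10^2$ there is a big leap.

The more samples there are, the less RGB matters (the performance gap between RGBd and sd shrinks as the sample size increases), haha.

This observation indicates that the information extracted from the sparse sample set dominates the prediction when the sample size is sufficiently large, and in this case the color cue becomes almost irrelevant. (With full sampling, the network can simply output whatever it was given as input; never mind RGB, even the neural network hardly matters at that point, haha.)

Now the effect on KITTI:

Much the same.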

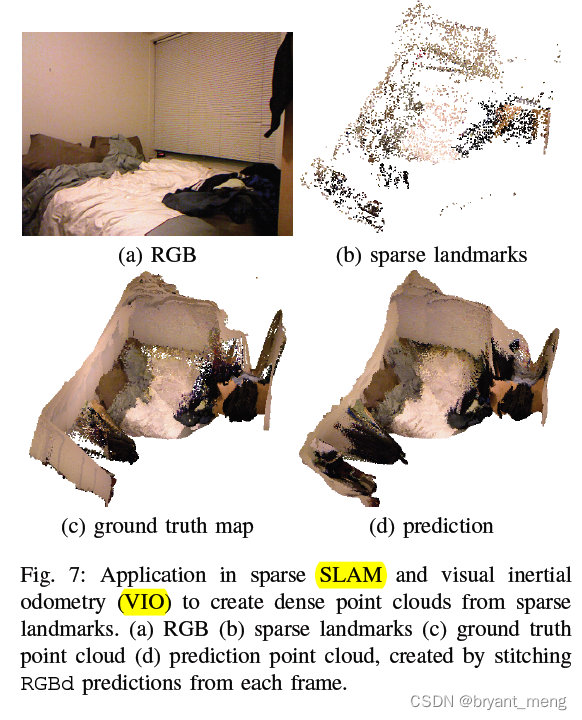

4)Application: Dense Map from Visual Odometry Features

5)Application: LiDAR Super-Resolution

6 Conclusion(own) / Future work

presentation

https://www.bilibili.com/video/av66343637/

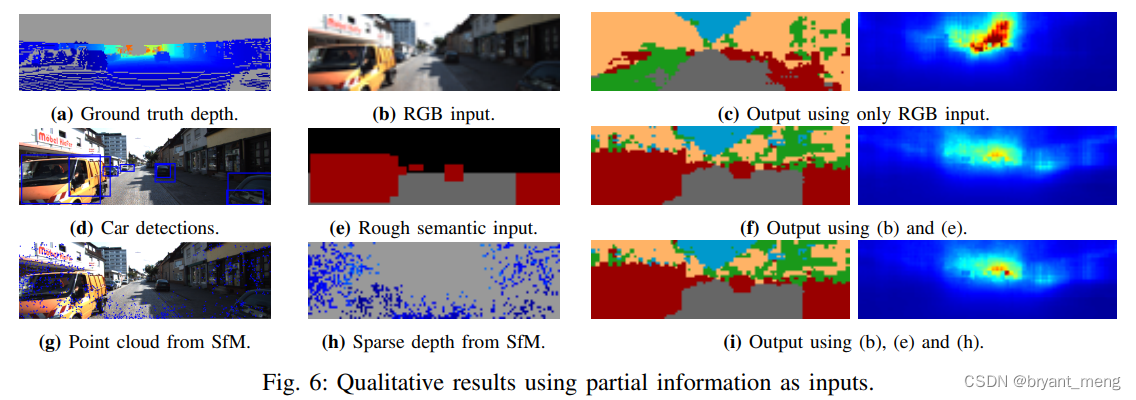

Below are some other multi-modal approaches to monocular depth prediction.

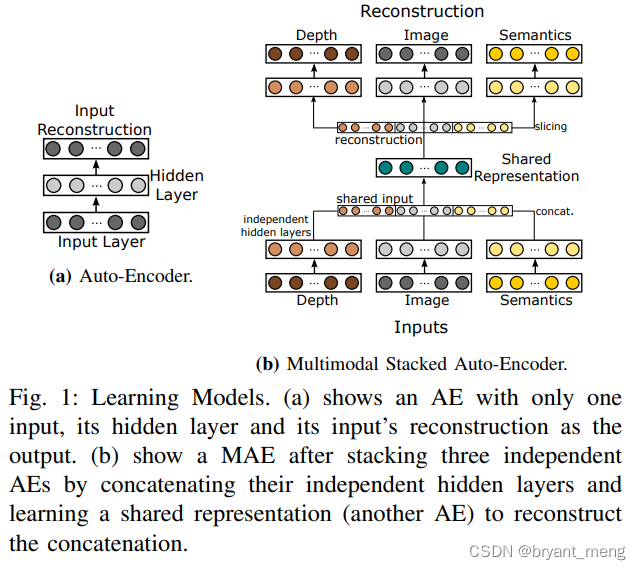

《Multi-modal Auto-Encoders as Joint Estimators for Robotics Scene Understanding》

Robotics: Science and Systems-2016

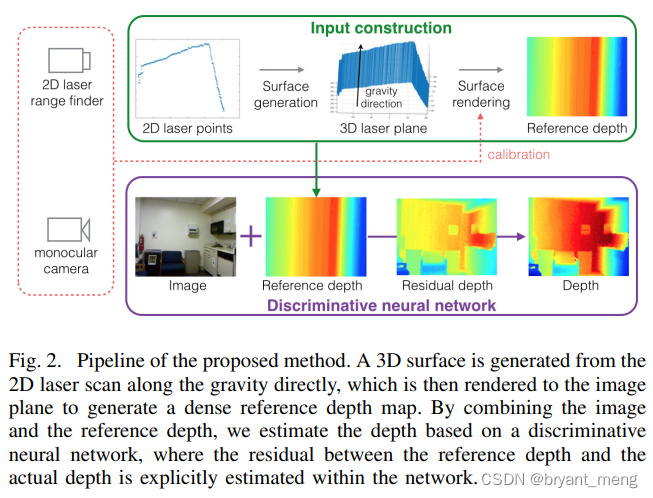

《Parse Geometry from a Line: Monocular Depth Estimation with Partial Laser Observation》

ICRA-2017

This one seems cheaper to deploy than the authors' approach.

边栏推荐

- PHP uses the method of collecting to insert a value into the specified position in the array

- TimeCLR: A self-supervised contrastive learning framework for univariate time series representation

- ABM论文翻译

- Optimization method: meaning of common mathematical symbols

- Jordan decomposition example of matrix

- 使用百度网盘上传数据到服务器上

- CPU的寄存器

- Faster-ILOD、maskrcnn_benchmark安装过程及遇到问题

- Feeling after reading "agile and tidy way: return to origin"

- 半监督之mixmatch

猜你喜欢

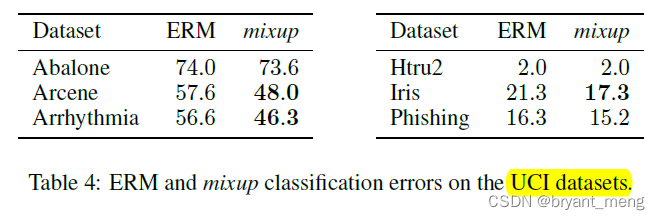

【Mixup】《Mixup:Beyond Empirical Risk Minimization》

![[CVPR‘22 Oral2] TAN: Temporal Alignment Networks for Long-term Video](/img/bc/c54f1f12867dc22592cadd5a43df60.png)

[CVPR‘22 Oral2] TAN: Temporal Alignment Networks for Long-term Video

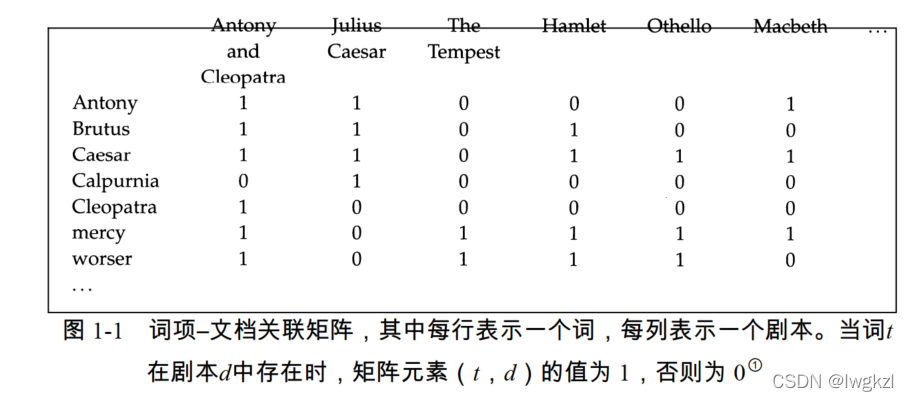

【信息检索导论】第一章 布尔检索

Mmdetection trains its own data set -- export coco format of cvat annotation file and related operations

Faster-ILOD、maskrcnn_ Benchmark training coco data set and problem summary

![[introduction to information retrieval] Chapter 6 term weight and vector space model](/img/42/bc54da40a878198118648291e2e762.png)

[introduction to information retrieval] Chapter 6 term weight and vector space model

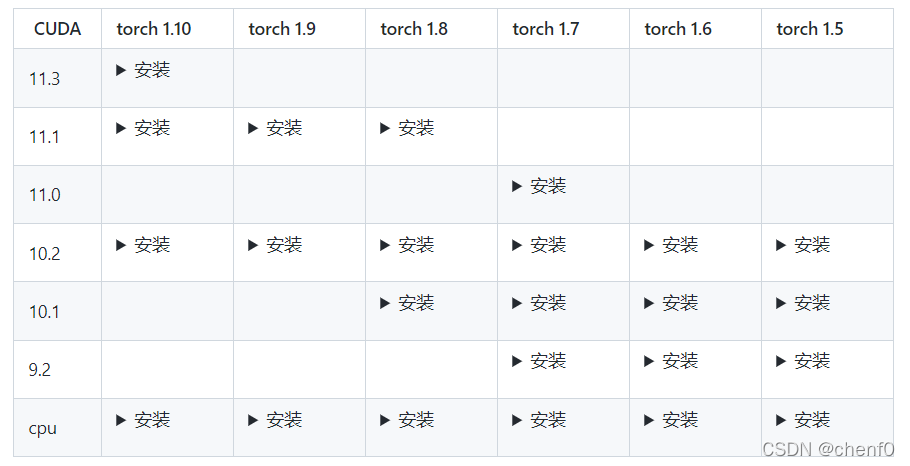

MMDetection安装问题

SSM laboratory equipment management

【Sparse-to-Dense】《Sparse-to-Dense:Depth Prediction from Sparse Depth Samples and a Single Image》

Ding Dong, here comes the redis om object mapping framework

随机推荐

Sorting out dialectics of nature

One field in thinkphp5 corresponds to multiple fuzzy queries

CPU register

Jordan decomposition example of matrix

【Programming】

一份Slide两张表格带你快速了解目标检测

How to efficiently develop a wechat applet

Mmdetection installation problem

Huawei machine test questions

【AutoAugment】《AutoAugment:Learning Augmentation Policies from Data》

parser. parse_ Args boolean type resolves false to true

mmdetection训练自己的数据集--CVAT标注文件导出coco格式及相关操作

Open failed: enoent (no such file or directory) / (operation not permitted)

Translation of the paper "written mathematical expression recognition with bidirectionally trained transformer"

[introduction to information retrieval] Chapter 1 Boolean retrieval

程序的内存模型

【深度学习系列(八)】:Transoform原理及实战之原理篇

Implementation of purchase, sales and inventory system with ssm+mysql

Cognitive science popularization of middle-aged people

win10解决IE浏览器安装不上的问题