当前位置:网站首页>ucore lab 6

ucore lab 6

2022-07-06 15:25:00 【Hu Da jinshengyu】

lab 6

The experiment purpose

- Understand the scheduling management mechanism of the operating system

- be familiar with ucore System scheduler framework , And the default Round-Robin Scheduling algorithm

- Implement a based on the scheduler framework (Stride Scheduling) Scheduling algorithm to replace the default Scheduling Algorithm

Experimental content

Experiment 5 completed the user process management , Multiple processes can be run in user mode . But so far , The scheduling strategy adopted is very simple FIFO Scheduling strategy . This experiment , Mainly familiar ucore System scheduler framework , And based on this framework Round-Robin(RR) Scheduling algorithm . Then refer to RR Implementation of scheduling algorithm , complete Stride Scheduling Scheduling algorithm .

practice

Requirements for experimental report :

- be based on markdown Format to complete , Text based

- Fill in the report required in each basic exercise

- After finishing the experiment , Please analyze ucore_lab Reference answers provided in , Please explain the difference between your implementation and the reference answer in the experiment report

- List the knowledge points you think are important in this experiment , And corresponding OS Knowledge points in principle , And briefly explain your meaning of the two , Relationship , Understanding of differences and other aspects ( It may also appear that the knowledge points in the experiment have no corresponding principle knowledge points )

- List what you think OS Principle is very important , But there is no corresponding knowledge point in the experiment

practice 0: Fill in the existing experiment

This experiment relies on experiments 1/2/3/4/5. Please take your experiment 2/3/4/5 Fill in the code in this experiment. There are “LAB1”/“LAB2”/“LAB3”/“LAB4”“LAB5” The corresponding part of the notes . And ensure that the compilation passes . Be careful : In order to execute correctly lab6 Test application for , It may be necessary to test the completed experiments 1/2/3/4/5 Code for further improvements .

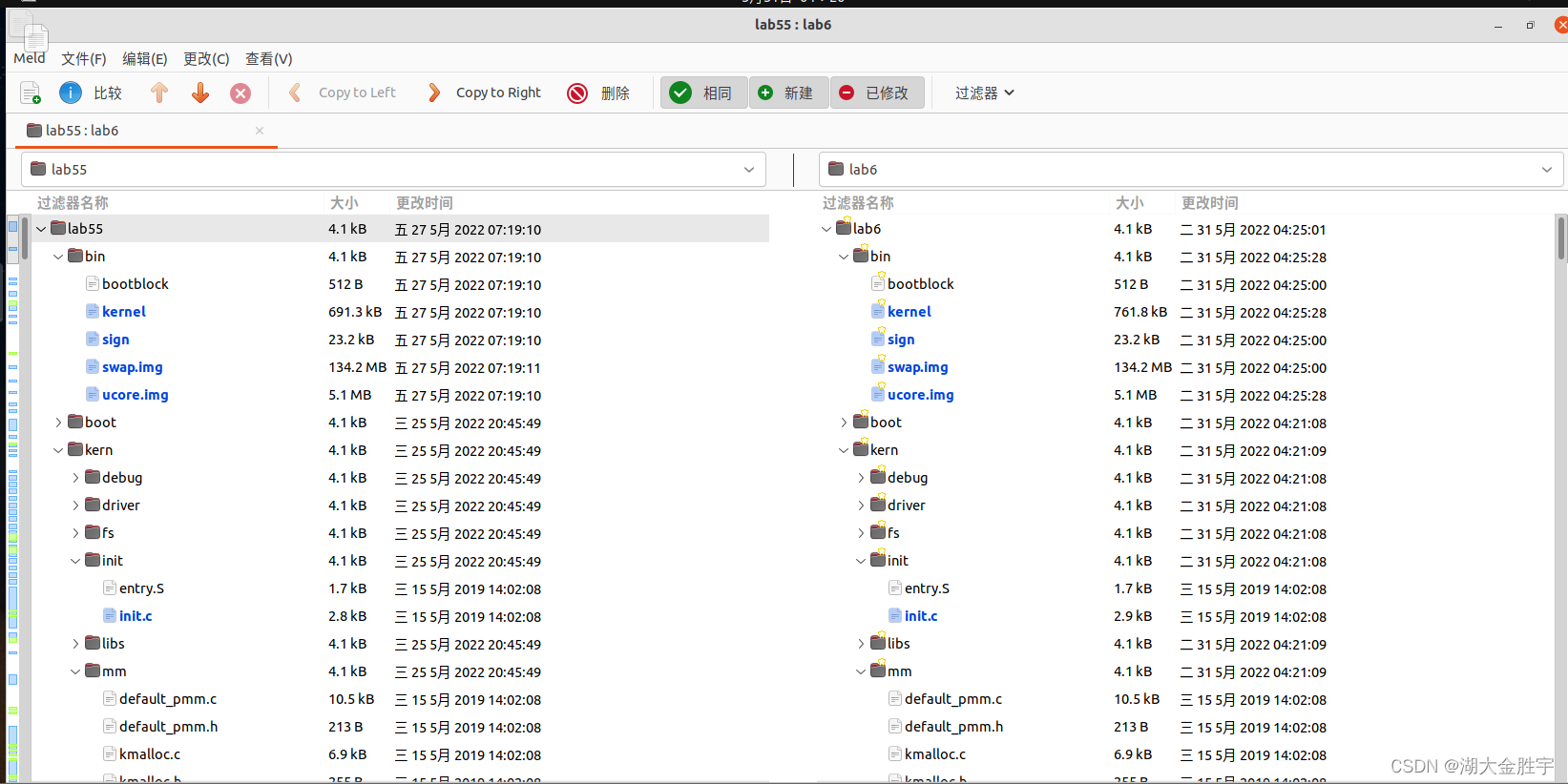

Use meld To compare directories , We can know that the following documents are different :

- proc.c

- default_pmm.c

- pmm.c

- swap_fifo.c

- vmm.c

- trap.c

The parts to be modified are as follows :

1、alloc_proc Function needs to add new members

Change the part to 120——125 That's ok :

proc->rq = NULL; // The initialization run queue is empty

list_init(&(proc->run_link)); // Initialize the pointer of the run queue

proc->time_slice = 0; // Initialize time slice

proc->lab6_run_pool.left = proc->lab6_run_pool.right proc->lab6_run_pool.parent = NULL; // Initialize all kinds of pointers to null , Including the parent process waiting

proc->lab6_stride = 0; // Process running progress initialization ( Aim at stride Scheduling algorithm , The same below )

proc->lab6_priority = 0; // Initialization priority

Relevant explanations and definitions are as follows :

struct run_queue *rq; // The pointer of the current process in the queue ;

list_entry_t run_link; // Pointer to the run queue

int time_slice; // Time slice

skew_heap_entry_t lab6_run_pool; // Where has the representative implemented it now (stride Scheduling algorithm , The same below )

uint32_t lab6_stride; // Process priority

uint32_t lab6_priority;

// FOR LAB6 ONLY: the priority of process, set by lab6_set_priority(uint32_t)

2、trap_dispatch function , You need to change the initialization of the timer , The revised part is as follows :

static void

trap_dispatch(struct trapframe *tf) {

......

......

ticks ++;

assert(current != NULL);

run_timer_list(); // Update timer , And call the scheduling algorithm according to the parameters

break;

......

......

}

run_timer_list The function is defined as follows :

void run_timer_list(void) {

bool intr_flag;

local_intr_save(intr_flag);

{

list_entry_t *le = list_next(&timer_list);

if (le != &timer_list) {

timer_t *timer = le2timer(le, timer_link);

assert(timer->expires != 0);

timer->expires --;

while (timer->expires == 0) {

le = list_next(le);

struct proc_struct *proc = timer->proc;

if (proc->wait_state != 0) {

assert(proc->wait_state & WT_INTERRUPTED);

}

else {

warn("process %d's wait_state == 0.\n", proc->pid);

}

wakeup_proc(proc);

del_timer(timer);

if (le == &timer_list) {

break;

}

timer = le2timer(le, timer_link);

}

}

sched_class_proc_tick(current);

}

local_intr_restore(intr_flag);

}

3、alloc_proc function , The revised part is as follows :

static struct proc_struct *

alloc_proc(void) {

....

proc->rq = NULL; // The initialization run queue is empty

list_init(&(proc->run_link));

proc->time_slice = 0; // Initialize time slice

// The initialization pointer is null

proc->lab6_run_pool.left = proc->lab6_run_pool.right = proc->lab6_run_pool.parent = NULL;

proc->lab6_stride = 0; // Set step size to 0

proc->lab6_priority = 0; // Set the priority to 0

}

practice 1: Use Round Robin Scheduling algorithm ( No coding required )

Finish the exercise 0 after , I suggest you compare ( You can use kdiff3 Wait for file comparison software ) Completed by individuals lab5 And practice 0 Just revised after completion lab6 The difference between , Analysis and understanding lab6 use RR The execution process after Scheduling Algorithm . perform make grade, Most test cases should pass . But enforcement priority.c Should not pass .

Please complete in the experiment report :

- Please understand and analyze sched_calss Usage of function pointers in , And join Round Robin Scheduling algorithm description ucore Scheduling execution process

- Please briefly explain how to design and implement in the experiment report ” Multilevel feedback queue scheduling algorithm “, Give the outline design , Encourage detailed design

Prepare knowledge :

RR The basic implementation idea of the algorithm :

R I didn't think , Take your time ( Sleep out ) I just found that we are really not suitable ound Robin Scheduling algorithm ( abbreviation RR, round-robin scheduling ) The scheduling idea is to make all runnable State processes are used in time-sharing and rotation CPU Time . The scheduler maintains the current runnable The orderly running queue of processes . After the time slice of the current process runs out , The scheduler places the current process at the end of the run queue , Then take out the process from its head for scheduling .

The scheduling in this experiment is based on the member function of scheduling class , Several member functions are defined .

struct sched_class {

// the name of sched_class

const char *name;

// Init the run queue

void (*init)(struct run_queue *rq);

// put the proc into runqueue, and this function must be called with rq_lock

void (*enqueue)(struct run_queue *rq, struct proc_struct *proc);

// get the proc out runqueue, and this function must be called with rq_lock

void (*dequeue)(struct run_queue *rq, struct proc_struct *proc);

// choose the next runnable task

struct proc_struct *(*pick_next)(struct run_queue *rq);

// dealer of the time-tick

void (*proc_tick)(struct run_queue *rq, struct proc_struct *proc);

/* for SMP support in the future * load_balance * void (*load_balance)(struct rq* rq); * get some proc from this rq, used in load_balance, * return value is the num of gotten proc * int (*get_proc)(struct rq* rq, struct proc* procs_moved[]); */

};

Implementation of a scheduling algorithm , You must have these functions , To meet the scheduling class .

Implementation process :

1. Initialize the process queue (RR_init function )

static void

RR_init(struct run_queue *rq) {

list_init(&(rq->run_list)); // Initialize the run queue

rq->proc_num = 0; // The number of initialization processes is 0

}

This part is mainly about initialization , initialization rq Process queue for , And set the number of processes to zero .

among ,struct run_queue Is defined as follows :

struct run_queue {

list_entry_t run_list; // Process queue

unsigned int proc_num; // Number of processes

int max_time_slice; // Maximum time slice length (RR)

skew_heap_entry_t *lab6_run_pool;

// stay stride In the scheduling algorithm , in order to “ Slant pile ” A special process queue created by data structure , The essence is the process queue .

};

In structure skew_heap_entry Structure :

struct skew_heap_entry {

// Tree structured process container

struct skew_heap_entry *parent, *left, *right;

};

typedef struct skew_heap_entry skew_heap_entry_t;

As you can see from the code RR_init function , The function is relatively simple , Finished initializing the process queue .

2. Add the process to the ready queue (RR_enqueue function )

static void RR_enqueue(struct run_queue *rq, struct proc_struct *proc) {

assert(list_empty(&(proc->run_link)));

list_add_before(&(rq->run_list), &(proc->run_link));

if (proc->time_slice == 0 || proc->time_slice > rq->max_time_slice) {

proc->time_slice = rq->max_time_slice;

}

proc->rq = rq;

rq->proc_num ++;

}

This part is a process queue operation : The process queue is a two-way linked list , When a process joins the queue , It will be added to the first place in the queue , And give it the initial number of time slices ; And update the number of processes in the queue .

The specific implementation is as follows :

Look at the code , First , It puts the process control block pointer of the process into rq End of queue , And if the time slice of the process control block is 0 Or the time slice of the process is larger than the maximum time slice allocated to the process , You need to reset it to max_time_slice. Then adjust in turn rq and rq The number of processes plus one .

3. Remove the process from the ready queue (RR_dequeue function )

static void

RR_dequeue(struct run_queue *rq, struct proc_struct *proc) {

// The process control block pointer is not null and the process is in the ready queue

assert(!list_empty(&(proc->run_link)) && proc->rq == rq);

// Remove the process control block pointer from the ready queue

list_del_init(&(proc->run_link));

rq->proc_num --; // Reduce the number of ready processes by one

}

Remove the process from the ready queue , And call it list_del_init Delete . meanwhile , Reduce the number of processes by one .

4. Select the next scheduling process (RR_pick_next function )

static struct proc_struct *RR_pick_next(struct run_queue *rq) {

// Select the ready process queue rq Queue head queue element in

list_entry_t *le = list_next(&(rq->run_list));

if (le != &(rq->run_list)) {

// Get ready process

return le2proc(le, run_link);// Return the process control block pointer

}

return NULL;

}

First select the ready process queue rq Queue head queue element in , And convert the queue elements into process control block pointers . Finally, return to the ready process .

5. Time slice (RR_proc_tick function )

static void RR_proc_tick(struct run_queue *rq, struct proc_struct *proc) {

if (proc->time_slice > 0) {

// Arrival time slice

proc->time_slice --; // Time slice of the execution process time_slice Minus one

}

if (proc->time_slice == 0) {

// The time slice is 0

// Set this process member variable need_resched The label is 1, The process needs to be scheduled

proc->need_resched = 1;

}

}

This part is when the clock interrupt is generated , Will trigger tick Function call , Corresponding to the sixth case of the dispatching point in the above figure .

Each time a clock interrupt is generated , Represents the number of time slices minus one ( Because of the relationship between interruption and time slice , In practice 0 In the interrupt handling function of , Become relevant ).

Once the time slice is used up , Then we need to put the process PCB Medium need_resched Set as 1, It means that it must give up for CPU Possession of , You need to schedule other processes to execute , The current process needs to wait .

6.sched_class

struct sched_class default_sched_class = {

.name = "RR_scheduler",

.init = RR_init,

.enqueue = RR_enqueue,

.dequeue = RR_dequeue,

.pick_next = RR_pick_next,

.proc_tick = RR_proc_tick,

};

stay schedule When initializing , You need to fill in an initialization information , Then fill in the class function we implemented , Then the system can execute in this way .

7. Scheduling initialized functions

void sched_init(void) {

list_init(&timer_list);

sched_class = &default_sched_class;

rq = &__rq;

rq->max_time_slice = MAX_TIME_SLICE;

sched_class->init(rq);

cprintf("sched class: %s\n", sched_class->name);

}

As shown above , take sched_class Set to the class name just defined , You can complete the initialization binding .

Question answering :

Please understand and analyze sched_calss Usage of function pointers in , And join Round Robin Scheduling algorithm description ucore Scheduling execution process .

The code definition of the scheduling class is as follows :

struct sched_class {

// The name of the scheduler

const char *name;

// Initialize the run queue

void (*init) (struct run_queue *rq);

// Process p Insert queue rq

void (*enqueue) (struct run_queue *rq, struct proc_struct *p);

// Process p From the queue rq Delete in

void (*dequeue) (struct run_queue *rq, struct proc_struct *p);

// return Run the queue The next executable process in

struct proc_struct* (*pick_next) (struct run_queue *rq);

// timetick Processing function

void (*proc_tick)(struct run_queue* rq, struct proc_struct* p);

};

Scheduling execution process :

RR The ready queue of the scheduling algorithm is also a two-way linked list , Just add a member variable , Indicates the maximum execution time slice in this ready process queue . And in the process control block proc_struct A member variable is added in time_slice, Used to record the current runnable time segment of the process . This is because RR The scheduling algorithm needs to consider that the running time of the execution process cannot be too long . At every timer By then , The operating system will decrement the current execution process time_slice, When time_slice by 0 when , It means that the process has been running for a period of time ( This time slice is called the time slice of the process ), Need to put CPU Let other processes execute , Then the operating system needs to make this process return to rq End of queue , And reset the time slice of this process to the maximum time slice of the member variable of the ready queue max_time_slice value , And then from rq The queue header of takes out a new process to execute .

Please briefly explain how to design and implement in the experiment report ” Multilevel feedback queue scheduling algorithm “, Give the outline design , Encourage detailed design .

Multilevel feedback scheduling is a scheduling method that uses multiple scheduling queues , The biggest difference between it and multi-level queue scheduling is that processes can move between different scheduling queues , In other words, some strategies can be formulated to determine whether a process can be upgraded to a higher priority queue or downgraded to a lower priority queue . Through this algorithm, both high priority jobs and short jobs can be responded ( process ) make short shrift of sth . The core idea of multi-level feedback queue designed during implementation is : The time slice size increases as the priority level increases . meanwhile , The process is not completed in the current time slice Then it will be reduced to the next priority . Multiple queues can be maintained during implementation , Each new process joins the first queue , When you need to select a process to call in and execute , Search backwards from the first queue , Encountered a queue that is not empty , Then take a process out of this queue and call it in for execution . If the process transferred in from a queue still does not end after the time slice runs out , Then add this process to a queue after the queue in which it was transferred , And the time slice doubles .

- First, schedule the processes in the queue with high priority . If there is no scheduled process in the high priority queue , Then the processes in the secondary priority queue are scheduled

- For each process in the same queue , Schedule according to the time slice rotation method . such as Q1 The time slice of the queue is N, that Q1 The homework in experienced N If it hasn't been completed after a time slice , entering Q2 Wait in line , if Q2 The job cannot be completed after the time slice of is used up , All the way to the next level of queue , Until completion .

- A process in a low priority queue at run time , There are new assignments , So after running this time slice ,CPU Immediately assign to the newly arrived homework .

practice 2: Realization Stride Scheduling Scheduling algorithm ( Need to code )

First of all, it needs to be replaced RR Implementation of scheduler , The box default_sched_stride_c Cover default_sched.c. Then according to this file and subsequent documents Stride Relevant description of the calibrator , complete Stride Implementation of scheduling algorithm .

The following experimental documents give Stride General description of scheduling algorithm . Here is given Stride Some related information about scheduling algorithm ( At present, there is a lack of Chinese information on the Internet ).

- strid-shed paper location1

- strid-shed paper location2

- Can also be GOOGLE “Stride Scheduling” To find relevant information

perform :make grade. If the displayed application tests all output ok, Is basically correct . If it's just priority.c Can't get through , Executable make run-priority Command to debug it separately . See the appendix for the general implementation results .( It uses qemu-1.0.1 ).

Please briefly describe your design and implementation process in the experimental report .

Stride Scheduling algorithm :

Investigate round-robin Scheduler , Assuming that all processes make full use of what they have CPU In the case of time resources , All processes get CPU Time should be equal . But sometimes we want the scheduler to allocate reasonable for each process more intelligently CPU resources . Suppose we assign different priorities to different processes , Then we may hope that the time resources obtained by each process are proportional to their priority .Stride Scheduling is a typical and simple algorithm based on this idea .

Besides being simple and easy to implement , It also has the following features :

- Controllability : As we hoped before , Can prove that Stride Scheduling The number of times a process is scheduled is proportional to its priority .

- deterministic : Regardless of timer events , The whole scheduling mechanism is predictable and reproducible .

The basic idea of this algorithm can be considered as follows :

For each runnable Set a current state for the process stride( Implementation progress ), Indicates the current scheduling right of the process . In addition, define its corresponding pass( step ) value , Indicates that the corresponding process is after scheduling ,stride The cumulative value needed .

Every time you need to schedule , From the current runnable State in the process of choosing stride Minimal process scheduling .

For processes that get scheduled P, The corresponding stride Add its corresponding step pass( It is only related to the priority of the process ).

After a fixed period of time , go back to 2. step , Reschedule the current stride The smallest process .

such as , For the above process , Now we need to choose scheduling stride The smallest P1,P1 Execute a step 16, here stride by 116, Next, I will choose stride The smallest P3(112) To carry out .

Whose? pass The smaller the value. , The more times someone is scheduled .

Implementation process :

Compared with RR Dispatch ,Stride Scheduling The function defines a comparator :

static int

proc_stride_comp_f(void *a, void *b)

{

// Get the process through the process control block pointer a

struct proc_struct *p = le2proc(a, lab6_run_pool);

// Get the process through the process control block pointer b

struct proc_struct *q = le2proc(b, lab6_run_pool);

// Subtract steps , Compare the size relationship through positive and negative

int32_t c = p->lab6_stride - q->lab6_stride;

if (c > 0) return 1;

else if (c == 0) return 0;

else return -1;

}

among ,a and b Is a pointer to two processes , Call out these two processes from the queue through the location pointed by the pointer and copy them to p individual q, Use p and q Directly compare their stride value , And according to the return value , Adjust the inclined stack .

1. Formal scheduling

Formal scheduling requires practice 1 As mentioned in schedule class , It contains a five tuple :

init、enqueue、dequeue、pick_next、tick.

2. Initialize the run queue (stride_init function )

static void

stride_init(struct run_queue *rq) {

/* LAB6: YOUR CODE */

list_init(&(rq->run_list)); // Initialize the scheduler class

rq->lab6_run_pool = NULL; // Initialize that the current process running queue is empty

rq->proc_num = 0; // Set the running queue to empty

}

Initialization function stride_init. Start initializing the run queue , And initialize the current operation team , Then set the number of processes in the current running queue to 0.

3. Add the process to the ready queue (stride_enqueue function )

static void

stride_enqueue(struct run_queue *rq, struct proc_struct *proc) {

/* LAB6: YOUR CODE */

#if USE_SKEW_HEAP

rq->lab6_run_pool =

skew_heap_insert(rq->lab6_run_pool, &(proc->lab6_run_pool), proc_stride_comp_f);

#else

assert(list_empty(&(proc->run_link)));

list_add_before(&(rq->run_list), &(proc->run_link));

#endif

if (proc->time_slice == 0 || proc->time_slice > rq->max_time_slice) {

proc->time_slice = rq->max_time_slice;

}

proc->rq = rq;

rq->proc_num ++;

}

There is a conditional compilation :

#if USE_SKEW_HEAP

...

#else

...

#endif

stay ucore in USE_SKEW_HEAP Defined as 1 , therefore # else And # endif The code between will be ignored .

Its

static inline skew_heap_entry_t *

skew_heap_insert(skew_heap_entry_t *a, skew_heap_entry_t *b,

compare_f comp)

{

skew_heap_init(b); // Initialization process b

return skew_heap_merge(a, b, comp);// return a And b The result of process integration

}

If you use a skewed heap data structure , Then you should call the insert function of the skew heap , This library is similar to the one mentioned above list.h, Belong to linux Kernel part , The improved version is also used here , The specific definition is lib/skew_heap.h in .

skew_heap_entry_t *skew_heap_insert The function is implemented as follows :

static inline skew_heap_entry_t *skew_heap_insert(

skew_heap_entry_t *a, skew_heap_entry_t *b,

compare_f comp) __attribute__((always_inline));

The first and second parameters are elements in the heap , The third is the law of comparison , Because the skew heap data structure is self-organizing , You can sort yourself , So after inserting it, you need to sort .

Other treatments , and RR The scheduling algorithm is the same , Get the process at the head of the queue for scheduling , And allocate time slices .

4. Remove the process from the ready queue (stride_dequeue function )

static void

stride_dequeue(struct run_queue *rq, struct proc_struct *proc) {

/* LAB6: YOUR CODE */

#if USE_SKEW_HEAP

rq->lab6_run_pool =

skew_heap_remove(rq->lab6_run_pool, &(proc->lab6_run_pool), proc_stride_comp_f);

#else

assert(!list_empty(&(proc->run_link)) && proc->rq == rq);

list_del_init(&(proc->run_link));

#endif

rq->proc_num --;

}

and enqueue equally , Data structures that use skew heaps must be matched with their related functions .

The code is relatively simple , There is only one main function :skew_heap_remove:

static inline skew_heap_entry_t *

skew_heap_remove(skew_heap_entry_t *a, skew_heap_entry_t *b,

compare_f comp)

{

skew_heap_entry_t *p = b->parent;

skew_heap_entry_t *rep = skew_heap_merge(b->left, b->right, comp);

if (rep) rep->parent = p;

if (p)

{

if (p->left == b)

p->left = rep;

else p->right = rep;

return a;

}

else return rep;

}

Complete the function of removing a process from the queue , Priority queues are used here . Finally, the number of running queues is reduced by one .

5. Select process scheduling (stride_pick_next function )

static struct proc_struct *

stride_pick_next(struct run_queue *rq) {

/* LAB6: YOUR CODE */

#if USE_SKEW_HEAP

if (rq->lab6_run_pool == NULL) return NULL;

struct proc_struct *p = le2proc(rq->lab6_run_pool, lab6_run_pool);

#else

list_entry_t *le = list_next(&(rq->run_list));

if (le == &rq->run_list)

return NULL;

struct proc_struct *p = le2proc(le, run_link);

le = list_next(le);

while (le != &rq->run_list)

{

struct proc_struct *q = le2proc(le, run_link);

if ((int32_t)(p->lab6_stride - q->lab6_stride) > 0)

p = q;

le = list_next(le);

}

#endif

if (p->lab6_priority == 0) // The priority for 0

p->lab6_stride += BIG_STRIDE; // Set the step size to the maximum

// The step size is set to the reciprocal of priority

else p->lab6_stride += BIG_STRIDE / p->lab6_priority;

return p;

}

First scan the entire running queue , Return to it stride The corresponding process with the smallest value , Then update the corresponding process stride value , Set the step size to the reciprocal of priority , If 0 Then set to the maximum step .

6. Time slice (stride_proc_tick function )

static void

stride_proc_tick(struct run_queue *rq, struct proc_struct *proc) {

/* LAB6: YOUR CODE */

if (proc->time_slice > 0) {

// Arrival time slice

proc->time_slice --; // Time slice of the execution process time_slice Minus one

}

if (proc->time_slice == 0) {

// The time slice is 0

// Set this process member variable need_resched The label is 1, The process needs to be scheduled

proc->need_resched = 1;

}

}

The main work is to detect whether the current process has used up the allocated time slice . If the time slice runs out , You should correctly set the relevant flags of the process structure to cause process switching . and RR It doesn't make any difference .

After finishing the function , The original default_sched_class notes . use stride Scheduling class of Algorithm :

struct sched_class default_sched_class = {

.name = "stride_scheduler",

.init = stride_init,

.enqueue = stride_enqueue,

.dequeue = stride_dequeue,

.pick_next = stride_pick_next,

.proc_tick = stride_proc_tick,

};

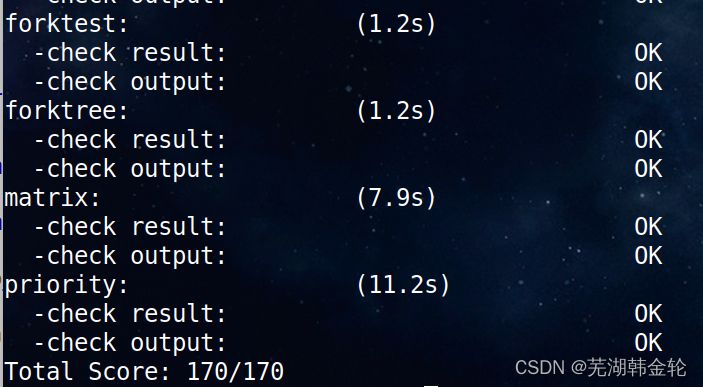

Running results :

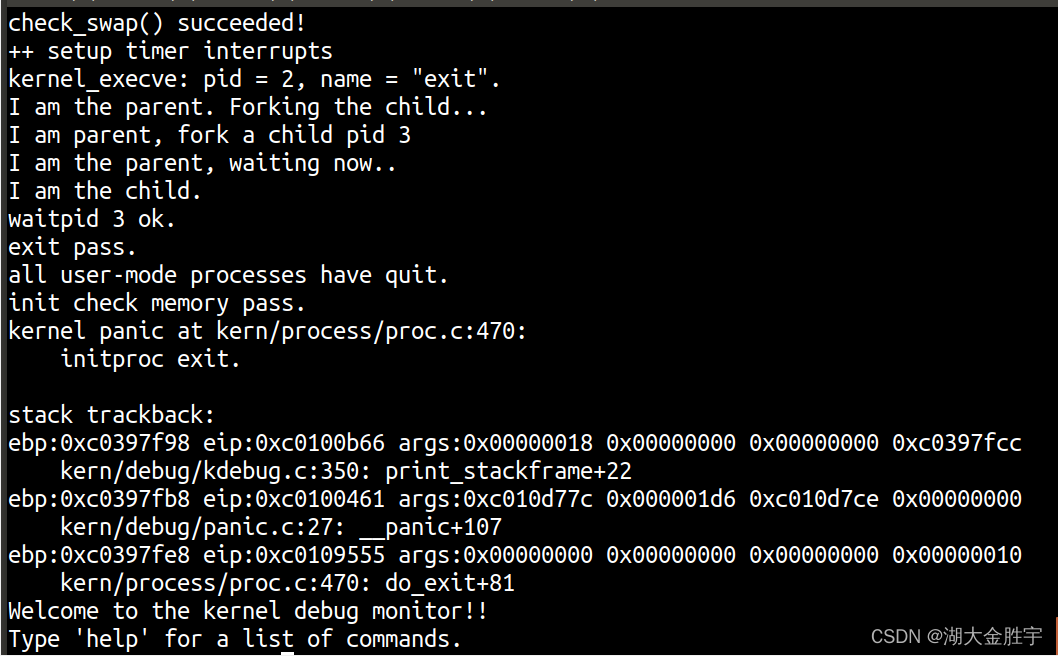

Input make qemu The results are as follows :

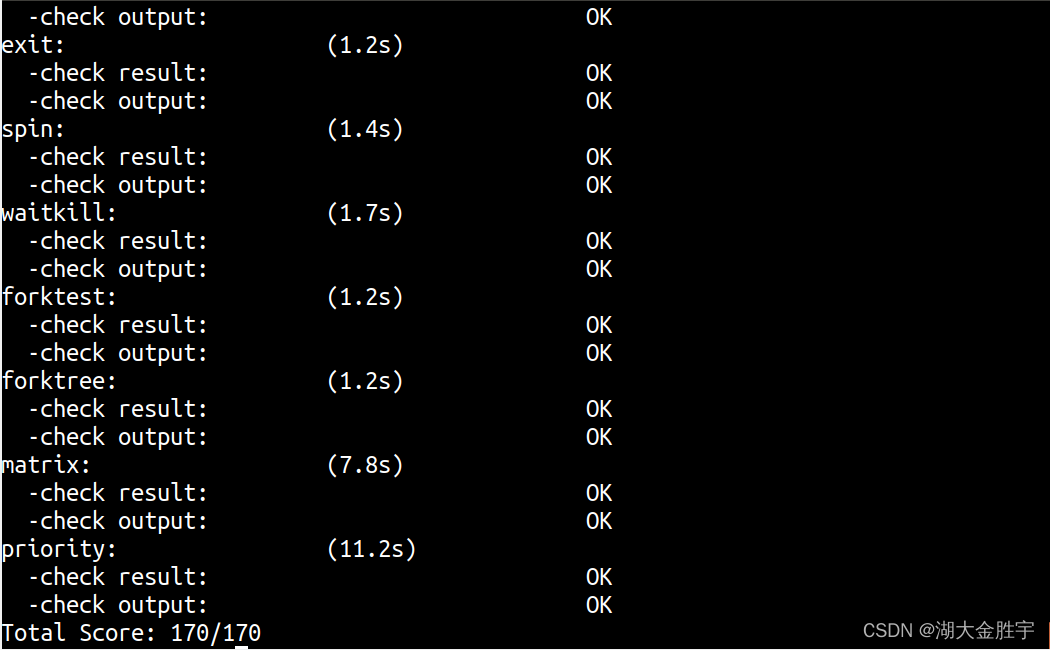

Input make grade The results are as follows :

Extended exercises Challenge 1 : Realization Linux Of CFS Scheduling algorithm

stay ucore Under the scheduler framework of Linux Of CFS Scheduling algorithm . Can read related Linux Kernel books or query online materials , Can understand CFS The details of the , Then it is roughly realized in ucore in .

Let's start the experiment :

CFS The algorithm is to make the running time of each process as same as possible , Then record the running time of each process . Add an attribute to the process control block structure to indicate the running time . Every time you need to schedule , Select the process that has run the least time to call CPU perform .CFS Red black tree is widely used in the implementation of the algorithm , However, the implementation of red black tree is too complex and the heap has been implemented and can meet the requirements , Therefore, heap will be used here .

stay run_queue Add a heap , practice 2 Already used in skew_heap_entry_t * lab6_run_pool; Continue to use .

struct run_queue {

list_entry_t run_list;

unsigned int proc_num;

int max_time_slice;

// For LAB6 ONLY

skew_heap_entry_t *lab6_run_pool;

}

stay proc_struct Several variables are added to help complete the algorithm :

struct proc_struct {

....

//--------------- be used for CFS Algorithm --------------------------------------------------

int fair_run_time; // Virtual runtime

int fair_priority; // Priority factor : from 1 Start , The greater the numerical , The faster time goes

skew_heap_entry_t fair_run_pool; // Run the process pool

}

take proc During initialization, the three added variables are initialized together :

skew_heap_init(&(proc->fair_run_pool));

proc->fair_run_time = 0;

proc->fair_priority = 1;

The algorithm is realized :

Comparison function :proc_fair_comp_f, In fact, this function and exercise 2 Of stride In the algorithm comp The idea of function is consistent .

// Be similar to stride Algorithm , Here we need to compare the two processes fair_run_time

static int proc_fair_comp_f(void *a, void *b)

{

struct proc_struct *p = le2proc(a, fair_run_pool);

struct proc_struct *q = le2proc(b, fair_run_pool);

int c = p->fair_run_time - q->fair_run_time;

if (c > 0) return 1;

else if (c == 0) return 0;

else return -1;

}

initialization :

//init function

static void fair_init(struct run_queue *rq) {

// The process pool is empty , The process for 0

rq->lab6_run_pool = NULL;

rq->proc_num = 0;

}

Join the queue :

// Be similar to stride Dispatch

static void fair_enqueue(struct run_queue *rq, struct proc_struct *proc)

{

// take proc The corresponding heap is inserted into rq in

rq->lab6_run_pool = skew_heap_insert(rq->lab6_run_pool, &(proc->fair_run_pool), proc_fair_comp_f);

// Update proc Time slice of

if (proc->time_slice == 0 || proc->time_slice > rq->max_time_slice)

proc->time_slice = rq->max_time_slice;

proc->rq = rq;

rq->proc_num ++;

}

Out of line :

static void fair_dequeue(struct run_queue *rq, struct proc_struct *proc) {

rq->lab6_run_pool = skew_heap_remove(rq->lab6_run_pool, &(proc->fair_run_pool), proc_fair_comp_f);

rq->proc_num --;

}

Select the next process :

//pick_next, Select the top element

static struct proc_struct * fair_pick_next(struct run_queue *rq) {

if (rq->lab6_run_pool == NULL)

return NULL;

skew_heap_entry_t *le = rq->lab6_run_pool;

struct proc_struct * p = le2proc(le, fair_run_pool);

return p;

}

Need to update the virtual runtime , The amount of increase is the priority coefficient

// On every update , Update the virtual runtime to a priority related coefficient

static void

fair_proc_tick(struct run_queue *rq, struct proc_struct *proc) {

if (proc->time_slice > 0) {

proc->time_slice --;

proc->fair_run_time += proc->fair_priority;

}

if (proc->time_slice == 0) {

proc->need_resched = 1;

}

}

Extended exercises Challenge 2 : stay ucore Realize as many basic scheduling algorithms as possible (FIFO, SJF,…), And design various test cases , It can quantitatively analyze the differences of various scheduling algorithms in various indicators , Explain the scope of application of the scheduling algorithm .

Refer to the answer analysis

Next, the implementation of the reference answer is compared with that in this experiment :

- About updating the previous LAB The implementation part of the code , It is found that the reference answer is not called when processing the time interrupt sched_class_proc_tick function , This is obviously a mistake ;

- Next, compare the reference answers stride Implementation of algorithm , Finding the reference answer realizes the completion of the ready queue by using the linked list and the inclined heap at the same time stride Algorithm , In the implementation of this experiment , Only the inclined stack with higher time efficiency is used ; In the specific use of inclined heap stride The algorithm part , There is hardly much difference , But the reference answer is BigStride It happens to be the maximum value that can be selected 2^31-1, In this implementation, we directly use our student ID as BigStride The value of ;

The knowledge points involved in the experiment are listed

- The knowledge points involved in this experiment are as follows :

- Object oriented programming idea ;

- Round-Robin Scheduling algorithm ;

- Stride Scheduling algorithm ;

- Multilevel feedback queue scheduling algorithm ;

- Implementation of scheduling algorithm framework ;

- Corresponding OS The knowledge points in are as follows :

- ucore The specific encapsulation of scheduling algorithm in ;

- ucore Implementation of three scheduling algorithms in ;

- The relationship between them is :

- The abstract algorithm of the former provides the foundation for the realization of the specific function of the latter ;

- The object-oriented knowledge in the former is conducive to simplifying the specific implementation process of the latter ;

List the knowledge points not involved in the experiment

The knowledge points not involved in this experiment are listed below :

- The startup process of the operating system ;

- Specific theoretical analysis and experimental analysis and comparison between various scheduling algorithms ;

lenge 2 : stay ucore Realize as many basic scheduling algorithms as possible (FIFO, SJF,…), And design various test cases , It can quantitatively analyze the differences of various scheduling algorithms in various indicators , Explain the scope of application of the scheduling algorithm .

边栏推荐

- Crawler series of learning while tapping (3): URL de duplication strategy and Implementation

- Nest and merge new videos, and preset new video titles

- Mysql database (IV) transactions and functions

- LeetCode#2062. Count vowel substrings in strings

- ucore lab 6

- JDBC introduction

- Flex --- detailed explanation of flex layout attributes

- Mysql database (II) DML data operation statements and basic DQL statements

- Preface to the foundations of Hilbert geometry

- CSAPP家庭作業答案7 8 9章

猜你喜欢

遇到程序员不修改bug时怎么办?我教你

The maximum number of words in the sentence of leetcode simple question

UCORE LaB6 scheduler experiment report

Es6---es6 content details

![Cadence physical library lef file syntax learning [continuous update]](/img/0b/75a4ac2649508857468d9b37703a27.jpg)

Cadence physical library lef file syntax learning [continuous update]

Interview answering skills for software testing

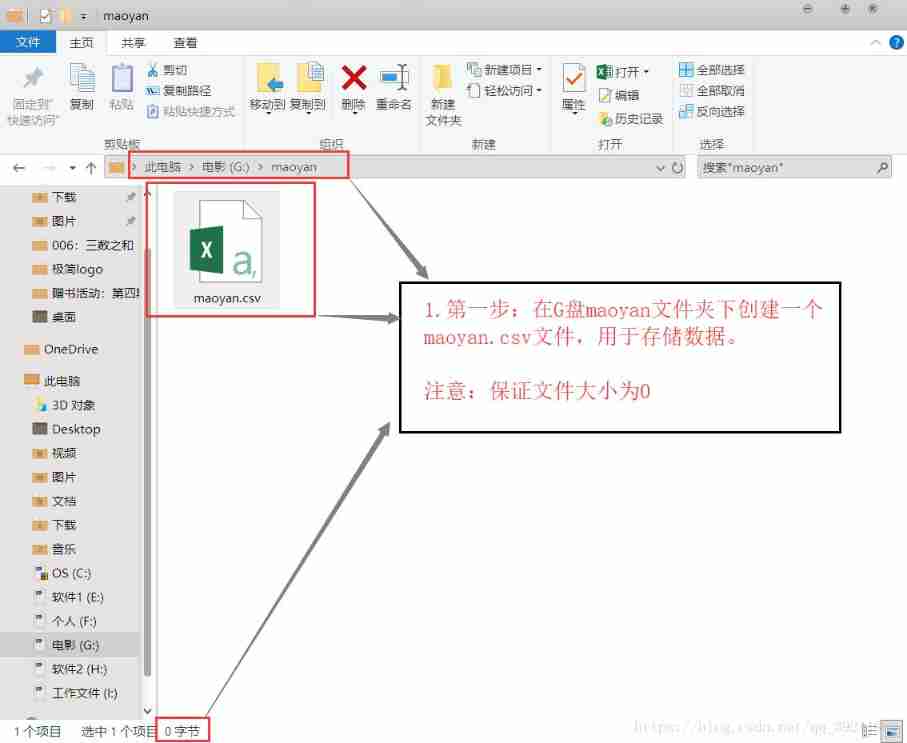

Crawling cat's eye movie review, data visualization analysis source code operation instructions

The wechat red envelope cover designed by the object is free! 16888

软件测试有哪些常用的SQL语句?

Jupyter installation and use tutorial

随机推荐

Winter vacation daily question - maximum number of balloons

Thinking about three cups of tea

MySQL development - advanced query - take a good look at how it suits you

[200 opencv routines] 98 Statistical sorting filter

全网最详细的postman接口测试教程,一篇文章满足你

A method and implementation of using VSTO to prohibit excel cell editing

How to rename multiple folders and add unified new content to folder names

csapp shell lab

Introduction to safety testing

LeetCode#198. raid homes and plunder houses

LeetCode#36. Effective Sudoku

The number of reversing twice in leetcode simple question

How to write the bug report of software test?

Automated testing problems you must understand, boutique summary

ucore lab5

Should wildcard import be avoided- Should wildcard import be avoided?

Preface to the foundations of Hilbert geometry

Programmers, how to avoid invalid meetings?

Collection collection and map collection

LeetCode#19. Delete the penultimate node of the linked list