OPPO Xiaobu Launches OBERT, a Large Pre-trained Model, and Tops the KgCLUE Leaderboard

2022-07-05 12:41:00 [Zhiyuan Community]

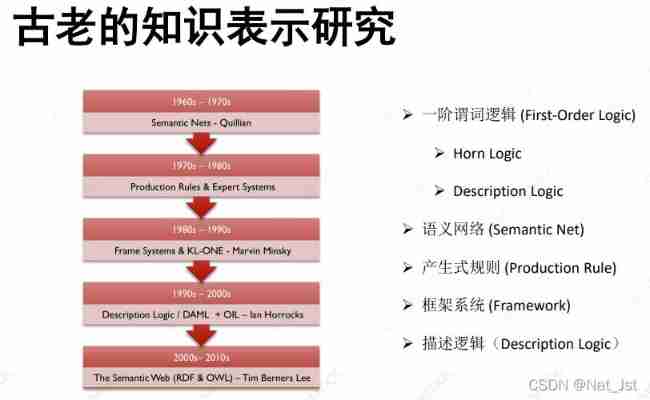

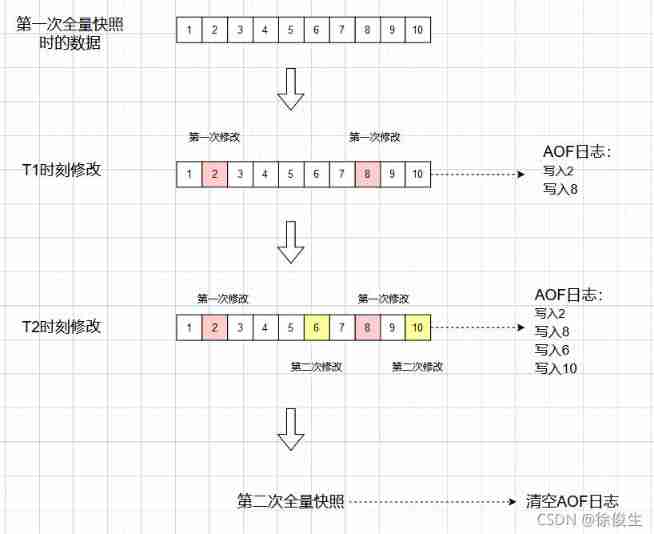

Recently, the OPPO Xiaobu Assistant team and the Machine Learning Department jointly completed pre-training of OBERT, a billion-parameter model, which has improved business metrics by more than 4%. In industry benchmark evaluations, OBERT jumped to fifth place on the overall leaderboard of CLUE 1.1 (the Chinese Language Understanding Evaluation benchmark) and to first place on KgCLUE 1.0 (the large-scale knowledge-graph question-answering leaderboard), putting it in the first tier of billion-parameter models. On several subtasks its scores come very close to those of the top-3 ten-billion-parameter models, while it has only one tenth of their parameters, which makes it better suited to large-scale industrial deployment.

CLUE 1.1 overall leaderboard (9 subtasks in total)

KgCLUE 1.0 knowledge-graph Q&A leaderboard

Fully in-house technology: Xiaobu brings a billion-parameter pre-trained model into production

The emergence of large-scale pre-trained models has brought a new solution paradigm to natural language processing tasks and has significantly raised benchmark results across all kinds of NLP tasks. Since 2020, the OPPO Xiaobu Assistant team has been exploring and applying pre-trained models. With a focus on what can be industrialized at scale, it has successively developed OBERT pre-trained models with 100 million, 300 million and 1 billion parameters.

Pre-trained model development & application roadmap

Thanks to the low cost of acquiring data and the strong transfer ability of language models, the main pre-training task in NLP today is language modeling based on the distributional hypothesis. For this, the Xiaobu Assistant team chose MLM (masked language modeling), which performs better on downstream natural language understanding (NLU) tasks, and adopted curriculum learning as the main pre-training strategy, progressing step by step from easy to hard to improve training stability. The team first verified the effectiveness of these masking strategies on the 100-million-parameter model: its zero-shot performance was significantly better than that of open-source base-size models, and it also delivered gains in downstream applications. The strategies were then applied to training the billion-parameter model.
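To make MLM and an easy-to-hard curriculum more concrete, here is a minimal Python sketch. The article does not describe OBERT's actual schedule or masking ratios, so everything below is an assumption: the curriculum is modelled as a linear increase of the masking ratio (the `start=0.10` and `end=0.20` values are hypothetical), and the replacement rule is the 80/10/10 scheme from the original BERT recipe. The function names `curriculum_mask_ratio` and `mask_tokens` are illustrative only.

```python
import random
from typing import List, Optional, Sequence, Tuple

MASK_TOKEN = "[MASK]"


def curriculum_mask_ratio(step: int, total_steps: int,
                          start: float = 0.10, end: float = 0.20) -> float:
    """Linearly raise the masking ratio from `start` to `end` over training.

    Hypothetical schedule: masking more tokens makes prediction harder,
    so the task moves from easy to hard as training progresses.
    """
    progress = min(max(step / total_steps, 0.0), 1.0)  # clamp to [0, 1]
    return start + (end - start) * progress


def mask_tokens(tokens: Sequence[str], ratio: float, vocab: Sequence[str],
                rng: random.Random) -> Tuple[List[str], List[Optional[str]]]:
    """BERT-style MLM masking.

    Picks round(len * ratio) target positions (at least one). Each target is
    replaced by [MASK] with probability 0.8, by a random vocab token with
    probability 0.1, and left unchanged otherwise. Labels hold the original
    token at target positions and None everywhere else.
    """
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    n_targets = max(1, round(len(tokens) * ratio))
    for i in rng.sample(range(len(tokens)), n_targets):
        labels[i] = tokens[i]
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK_TOKEN
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)
        # else: keep the original token so the model cannot rely on [MASK]
    return inputs, labels


if __name__ == "__main__":
    rng = random.Random(0)
    tokens = "the quick brown fox jumps over the lazy dog".split()
    for step in (0, 5_000, 10_000):
        ratio = curriculum_mask_ratio(step, total_steps=10_000)
        inputs, labels = mask_tokens(tokens, ratio, vocab=tokens, rng=rng)
        print(f"step={step} ratio={ratio:.2f} inputs={inputs}")
```

A rising masking ratio is only one way to express "from easy to hard"; a curriculum could just as well order training samples by length or by domain.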

ZeroCLUE leaderboard

Notably, results from open-source work show that the larger the corpus and the more diverse its content, the better downstream tasks perform. Building on this earlier exploration, the team collected and cleaned a corpus on the order of 1.6 TB for the billion-parameter OBERT model and used five kinds of masking mechanisms to learn linguistic knowledge from it. The content includes encyclopedias, community Q&A, news and more, and the scenarios cover NLP tasks such as intent understanding, multi-turn chat and text matching.
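The article says OBERT uses five masking mechanisms but does not name them. As a hedged illustration of how masking mechanisms can differ, the Python sketch below implements three granularities that are common in published pre-training work: token-level masking, whole-word masking (all characters of a segmented Chinese word are masked together) and contiguous span masking. Whether OBERT uses any of these is an assumption; the function names, the example ratios and `max_span` are hypothetical.

```python
import random
from typing import List, Sequence, Set

MASK_TOKEN = "[MASK]"


def token_positions(n_tokens: int, ratio: float, rng: random.Random) -> Set[int]:
    """Token-level masking: sample individual positions without replacement."""
    k = max(1, round(n_tokens * ratio))
    return set(rng.sample(range(n_tokens), k))


def whole_word_positions(word_lengths: Sequence[int], ratio: float,
                         rng: random.Random) -> Set[int]:
    """Whole-word masking: a chosen word has all of its characters masked.

    `word_lengths` is the character count of each word from a word segmenter.
    Words are added until the budget is met, so the result may overshoot it.
    """
    starts: List[int] = []
    offset = 0
    for length in word_lengths:
        starts.append(offset)
        offset += length
    budget = max(1, round(offset * ratio))
    positions: Set[int] = set()
    for w in rng.sample(range(len(word_lengths)), len(word_lengths)):  # shuffled
        if len(positions) >= budget:
            break
        positions.update(range(starts[w], starts[w] + word_lengths[w]))
    return positions


def span_positions(n_tokens: int, ratio: float, max_span: int,
                   rng: random.Random) -> Set[int]:
    """Span masking: mask contiguous runs of 1..max_span tokens.

    Expects 0 < ratio <= 1. Spans are added until the budget is met,
    so the result may slightly overshoot it.
    """
    budget = max(1, round(n_tokens * ratio))
    positions: Set[int] = set()
    while len(positions) < budget:
        span = min(rng.randint(1, max_span), n_tokens)
        start = rng.randrange(0, n_tokens - span + 1)
        positions.update(range(start, start + span))
    return positions


def apply_mask(tokens: Sequence[str], positions: Set[int]) -> List[str]:
    """Replace every selected position with the mask token."""
    return [MASK_TOKEN if i in positions else t for i, t in enumerate(tokens)]


if __name__ == "__main__":
    rng = random.Random(0)
    words = ["小布", "助手", "是", "智能", "助手"]  # pre-segmented example
    chars = [c for w in words for c in w]
    lengths = [len(w) for w in words]
    print(apply_mask(chars, token_positions(len(chars), 0.15, rng)))
    print(apply_mask(chars, whole_word_positions(lengths, 0.25, rng)))
    print(apply_mask(chars, span_positions(len(chars), 0.25, 3, rng)))
```

Whole-word and span masking hide units larger than a single character, so the model cannot simply reconstruct a masked character from its unmasked neighbours in the same word, which is one common motivation for mixing several mechanisms.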

Strengthening application innovation: Xiaobu keeps digging deep into NLP technology

The CLUE (Chinese Language Understanding Evaluation) leaderboard is one of the most authoritative natural language understanding leaderboards for Chinese. It comprises 10 subtasks, including classification, text similarity, reading comprehension and contextual reasoning, and aims to drive continued progress and breakthroughs in NLP pre-trained model technology.

NLP (natural language processing) is known as the crown jewel of artificial intelligence. As the core of AI's cognitive capabilities, NLP is one of the most challenging tracks in the field. Its goal is to give computers human abilities such as listening, speaking, reading and writing, and to let them reason and make decisions using knowledge and common sense.

Xiaobu Assistant was released in 2019. By the end of 2021 it had been installed on a cumulative 250 million devices, its monthly active users exceeded 130 million, and its monthly interactions reached 2 billion. This made it the first mobile phone voice assistant in China with more than 100 million monthly active users and a representative of China's new generation of intelligent assistants.

On the NLP side, Xiaobu Assistant has gone through several stages: from rule engines and simple models to powerful deep learning, and then to pre-trained models. After three years of development, Xiaobu Assistant has reached an industry-leading level in NLP, and OBERT's entry into the top five of CLUE 1.1 and first place on KgCLUE 1.0 is the best evidence of the technology it has accumulated.

Reaching the top five of CLUE 1.1 and topping the KgCLUE 1.0 leaderboard came mainly from three factors. First, the team used the massive data accumulated by Xiaobu Assistant to obtain spoken-language data, helping the models better understand the language of intelligent-assistant scenarios. Second, it kept an open, growth-oriented mindset, following the latest developments in academia and industry and putting them into practice. Third, it invested steadily in cutting-edge pre-trained models, building up technology bit by bit and repeatedly exploring production applications.

Going forward, the Xiaobu Assistant team will build on the characteristics of intelligent-assistant scenarios to keep optimizing pre-training techniques and dig deeper into NLP, use techniques such as model compression to speed up the deployment of large models, and continue exploring how to combine AI with proactive emotion to make intelligence more human. In an era where everything is connected, the team aims to help AI shine and to let it quietly enrich people's future digital lives.

边栏推荐

- Kotlin函数

- Pytoch through datasets Imagefolder loads datasets directly from files

- MySQL splits strings for conditional queries

- MySQL multi table operation

- Introduction to relational model theory

- Learning JVM garbage collection 06 - memory set and card table (hotspot)

- ZABBIX 5.0 - LNMP environment compilation and installation

- Third party payment interface design

- How to recover the information server and how to recover the server data [easy to understand]

- About cache exceptions: solutions for cache avalanche, breakdown, and penetration

猜你喜欢

Knowledge representation (KR)

OPPO小布推出预训练大模型OBERT,晋升KgCLUE榜首

Detailed steps for upgrading window mysql5.5 to 5.7.36

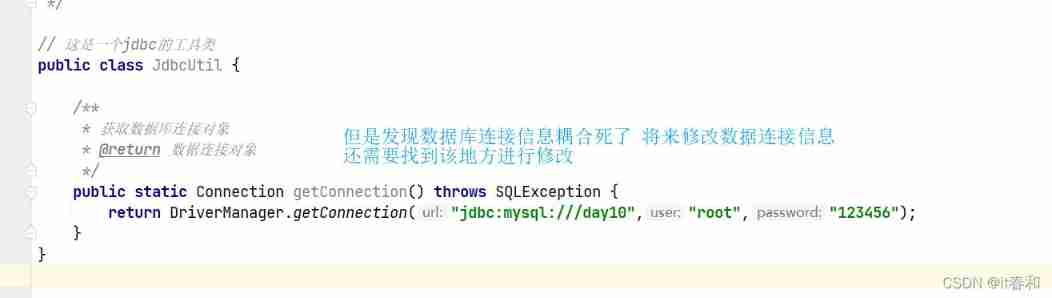

JDBC -- extract JDBC tool classes

Pytorch two-layer loop to realize the segmentation of large pictures

Understand redis persistence mechanism in one article

Learn memory management of JVM 01 - first memory

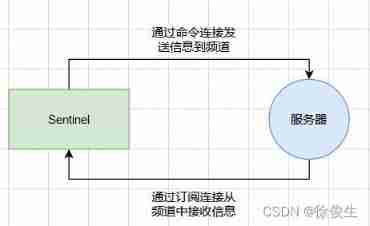

Redis highly available sentinel cluster

Constructing expression binary tree with prefix expression

Automated test lifecycle

随机推荐

MySQL index (1)

Solve the problem of cache and database double write data consistency

How can labels/legends be added for all chart types in chart. js (chartjs.org)?

[figure neural network] GNN from entry to mastery

Preliminary exploration of basic knowledge of MySQL

GPS data format conversion [easy to understand]

JDBC -- use JDBC connection to operate MySQL database

MySQL data table operation DDL & data type

POJ-2499 Binary Tree

MySQL splits strings for conditional queries

Redis master-slave configuration and sentinel mode

Add a new cloud disk to Huawei virtual machine

Understand redis persistence mechanism in one article

Simple production of wechat applet cloud development authorization login

在家庭智能照明中应用的测距传感芯片4530A

Get all stock data of big a

Two minutes will take you to quickly master the project structure, resources, dependencies and localization of flutter

Keras implements verification code identification

JDBC exercise - query data encapsulated into object return & simple login demo

Redis clean cache