Paper reading: Medical NLP model: EMBERT

2022-07-05 17:41:00 【xieyan0811】

English title: EMBERT: A Pre-trained Language Model for Chinese Medical Text Mining

Chinese title: A pre-trained language model for Chinese medical text mining

Paper URL: https://chywang.github.io/papers/apweb2021.pdf

Field: natural language processing, knowledge graphs, biomedicine

Published: 2021

Authors: Zerui Cai et al., East China Normal University

Venue: APWEB/WAIM

Citations: 1

Date read: 22.06.22

Reading notes

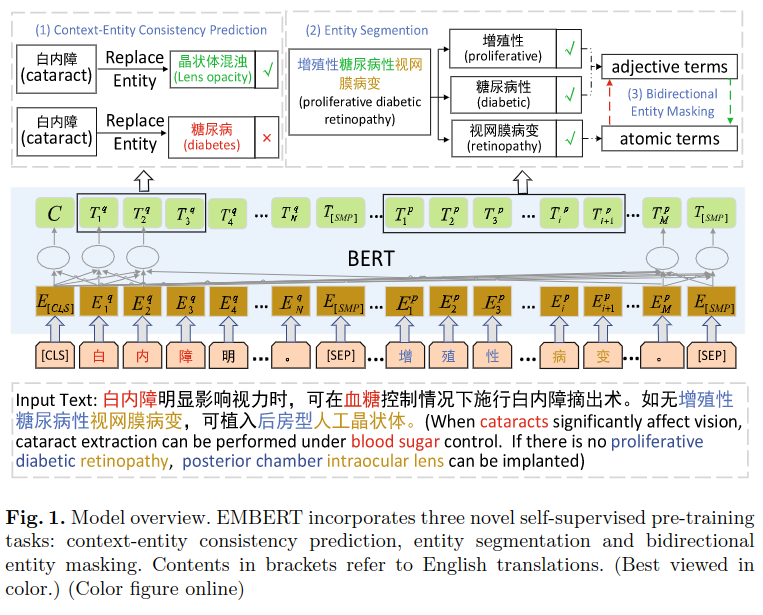

The model targets the medical domain. It uses synonyms from a knowledge graph (only as a dictionary; no graph computation is involved) to train a BERT-like language representation model. Its strength lies in how this knowledge is injected: three self-supervised learning tasks are designed specifically to capture fine-grained relationships between entities. The experimental results are slightly better than those of existing models. I found no corresponding code, and the paper does not describe the procedure in much detail, so the main takeaway is the general idea.

What is worth borrowing: the Chinese medical knowledge graph that is used, the way its synonyms are exploited, automatic phrase recognition with AutoPhrase, and the segmentation method based on high-frequency boundary words.

Introduction

The method in this paper aims to: make better use of large amounts of unlabeled data and of pre-trained models; use entity-level knowledge for enhancement; and capture fine-grained semantic relationships. Compared with MC-BERT, this model pays more attention to the relationships between entities.

The authors mainly target three problems:

- Synonyms with different surface forms: for example, "tuberculosis" and "consumption" refer to the same disease, but the text differs.

- Entity nesting: for example, "novel coronavirus pneumonia" contains the entity "pneumonia" and the entity "novel coronavirus", and is itself an entity. Previous methods focused only on the whole entity.

- Misreading of long entities: for example, in "diabetic ketoacidosis", parsing has to take into account the relationship between the main entity and the other entities.

The contributions of the paper are as follows:

- A Chinese medical pre-trained model, EMBERT (Entity-rich Medical BERT), is proposed, which can learn the characteristics of medical terms.

- Three self-supervised tasks are proposed to capture semantic relevance at the entity level.

- Six Chinese medical datasets are used for evaluation, and the experiments show better results than previous methods.

Method

Entity context consistency prediction

A dictionary is built from the SameAs relations in the knowledge graph from http://www.openkg.cn/. Words in the dataset are replaced with their synonyms to construct more training data, and the model then predicts whether the replaced entity is consistent with its context, which improves the model. In principle, the replaced entity should fit the context just as well as the original entity does.
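The synonym replacement step can be sketched roughly as follows. This is a minimal illustration and not the authors' code (none was found): the `SAME_AS` dictionary, the function name `replace_entity`, and the whitespace-tokenized English sentence are all assumptions made for the example; real Chinese text would need its own tokenization.

```python
import random

# Hypothetical SameAs dictionary: each entity maps to its known synonyms.
SAME_AS = {
    "tuberculosis": ["consumption"],
    "consumption": ["tuberculosis"],
}

def replace_entity(tokens, start, end, same_as, rng=random):
    """Replace the entity tokens[start..end] (inclusive) with a synonym.

    Returns (new_tokens, new_start, new_end), or None when the entity
    has no entry in the dictionary.
    """
    entity = " ".join(tokens[start:end + 1])
    synonyms = same_as.get(entity)
    if not synonyms:
        return None
    synonym_tokens = rng.choice(synonyms).split()
    new_tokens = tokens[:start] + synonym_tokens + tokens[end + 1:]
    return new_tokens, start, start + len(synonym_tokens) - 1

tokens = "the patient was diagnosed with tuberculosis last year".split()
result = replace_entity(tokens, 5, 5, SAME_AS)
```

The returned span marks where the replaced entity sits in the new sentence, which is what a consistency-prediction objective would need in order to separate the entity from its context.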

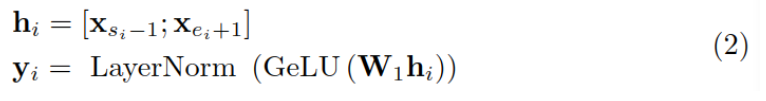

Suppose a sentence contains the tokens $x_1, \dots, x_n$, and the $i$-th entity $x_{s_i}, \dots, x_{e_i}$ is replaced, where $s_i$ and $e_i$ denote the start and end positions of the replacement. Its context is the text before $s_i$ and after $e_i$, denoted $c_i$.
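To make the span notation concrete, here is a small sketch that splits a token list into the replaced entity and its context. The function name `split_context` and the use of 0-based inclusive indices are assumptions for illustration only.

```python
def split_context(tokens, start, end):
    """Split tokens into (left_context, entity, right_context).

    `start` and `end` are 0-based inclusive positions of the entity,
    playing the role of s_i and e_i in the text.
    """
    if not 0 <= start <= end < len(tokens):
        raise ValueError("entity span is out of range")
    return tokens[:start], tokens[start:end + 1], tokens[end + 1:]

left, entity, right = split_context(
    ["chronic", "novel", "coronavirus", "pneumonia", "case"], 1, 3
)
```

Here `left + right` corresponds to the context of the entity, and `entity` to the replaced span.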

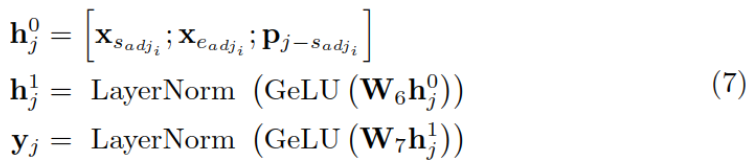

First, the replaced entity is encoded as a vector $y_i$:

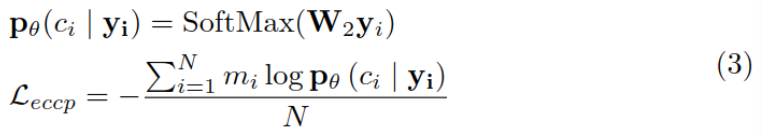

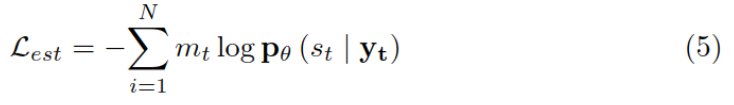

Then $y_i$ is used to predict the context $c_i$, and the loss function is computed:

Entity segmentation

A rule-based system is used to cut long entities into several semantic parts and label them, and the model is then trained on the labeled data.

The approach is to build an entity vocabulary: a set of high-quality medical entities is obtained from the training set and combined with the entities in the knowledge graph. AutoPhrase is first used to generate the raw segmentation results. The frequency of the words at the start and end positions of each segment is then counted, and the top-100 high-frequency words are checked manually and used as the set of segmentation points.
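The boundary-frequency counting described above might look like the sketch below. It assumes the phrase segmenter's output has already been loaded as lists of segment strings (the `segmented` data here is made up), and it does not call AutoPhrase itself. Merging start and end counts into one ranking is also an assumption; the paper may treat them separately.

```python
from collections import Counter

def boundary_counts(segmented_entities):
    """Count how often each word starts or ends a segment."""
    starts, ends = Counter(), Counter()
    for segments in segmented_entities:
        for segment in segments:
            words = segment.split()
            if words:
                starts[words[0]] += 1
                ends[words[-1]] += 1
    return starts, ends

def top_boundary_candidates(segmented_entities, k=100):
    """Return the k most frequent boundary words for manual checking."""
    starts, ends = boundary_counts(segmented_entities)
    combined = starts + ends  # assumption: rank start and end counts together
    return [word for word, _ in combined.most_common(k)]

# Hypothetical segmentation results, one list of segments per long entity.
segmented = [
    ["novel coronavirus", "pneumonia"],
    ["bacterial", "pneumonia"],
    ["novel coronavirus", "infection"],
]
starts, ends = boundary_counts(segmented)
candidates = top_boundary_candidates(segmented, k=1)
```

The resulting candidate list is only a shortlist; according to the notes, the final set of segmentation points comes from a manual check of these words.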

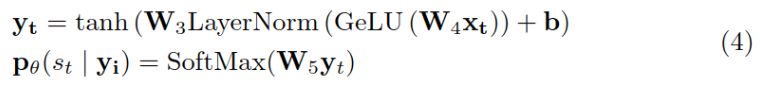

Let a long entity be $x_{s_i}, \dots, x_{e_i}$. It is further cut into segments $x_{s_{ij}}, \dots, x_{e_{ij}}$, and the last position of each segment, $x_{e_{ij}}$, is labeled 1 as a segmentation point, while all other positions are labeled 0. The model is trained to predict this label, which makes the task a binary classification problem. In the formula, $y$ is the vector representation of the token at that position.
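The labeling scheme can be illustrated with a short sketch. The function name `segmentation_labels` and the choice to describe segments by their token lengths are assumptions made for the example.

```python
def segmentation_labels(segment_lengths):
    """Label the last token of each segment 1 and every other token 0."""
    labels = []
    for length in segment_lengths:
        if length <= 0:
            raise ValueError("segment lengths must be positive")
        labels.extend([0] * (length - 1) + [1])
    return labels

# "novel coronavirus" (2 tokens) followed by "pneumonia" (1 token)
labels = segmentation_labels([2, 1])
```

Each position then carries one 0/1 target, which matches the binary classification framing in the text.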

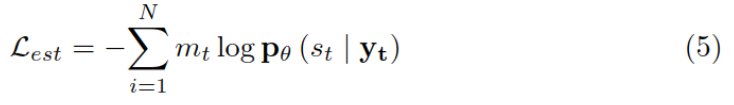

The loss function is calculated as follows :

Bidirectional entity masking

Using the method from the previous step, a long entity can be divided into adjectives and a meta-entity (the main entity). The adjectives are masked and predicted from the main entity; conversely, the main entity is masked and predicted from the adjectives.

Taking the masking of the meta-entity as an example, the adjectives and the relative position $p$ are used to compute the representation of the meta-entity:

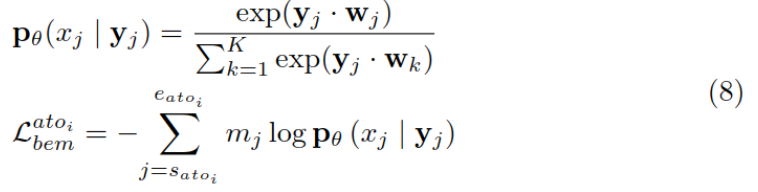

Then $y_j$ is used to predict $x_j$, and the cross-entropy is computed as the loss function:

The same applies to predicting the adjectives from the meta-entity. The final loss function $L_{ben}$ is the sum of the two losses.
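The two masking directions can be sketched as a data-preparation step. This only builds the masked inputs and their targets; it does not reproduce the paper's representation formula or its use of the relative position $p$. The `[MASK]` token, the function name `bidirectional_masks`, and the example spans are assumptions.

```python
MASK = "[MASK]"  # assumption: a BERT-style mask token

def bidirectional_masks(tokens, modifier_span, head_span):
    """Build one training pair per masking direction.

    Spans are 0-based inclusive (start, end) pairs. Returns
    ((masked_tokens, targets) with the modifier hidden,
     (masked_tokens, targets) with the main entity hidden).
    """
    def mask(span):
        start, end = span
        masked = list(tokens)
        masked[start:end + 1] = [MASK] * (end - start + 1)
        return masked, tokens[start:end + 1]

    return mask(modifier_span), mask(head_span)

entity_tokens = ["novel", "coronavirus", "pneumonia"]
(mod_masked, mod_target), (head_masked, head_target) = bidirectional_masks(
    entity_tokens, modifier_span=(0, 1), head_span=(2, 2)
)
```

A loss in the spirit of $L_{ben}$ would then add the prediction loss on `mod_target` to the prediction loss on `head_target`.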

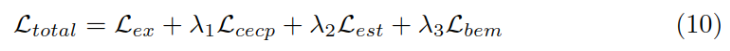

Loss function

The final loss function comprises the BERT loss $L_{ex}$ and the losses of the three methods above; $\lambda$ is a hyperparameter.
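One plausible way to combine the losses is a weighted sum, sketched below. The notes only say that $\lambda$ is a hyperparameter, so giving each auxiliary task its own weight is an assumption, as are the function name `total_loss` and the example values.

```python
def total_loss(l_ex, task_losses, lambdas):
    """Combine the BERT loss with weighted auxiliary task losses.

    l_ex: the BERT loss (L_ex in the text).
    task_losses: losses of the three self-supervised tasks.
    lambdas: one weight per task (assumed; the paper may share a single weight).
    """
    if len(task_losses) != len(lambdas):
        raise ValueError("need exactly one weight per task loss")
    return l_ex + sum(w * loss for w, loss in zip(lambdas, task_losses))

# Made-up loss values, equal weights of 0.5 for all three tasks.
combined = total_loss(1.0, [0.5, 0.2, 0.3], [0.5, 0.5, 0.5])
```

The same function works unchanged with framework tensors in place of floats, since it only uses addition and multiplication.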

Experiments

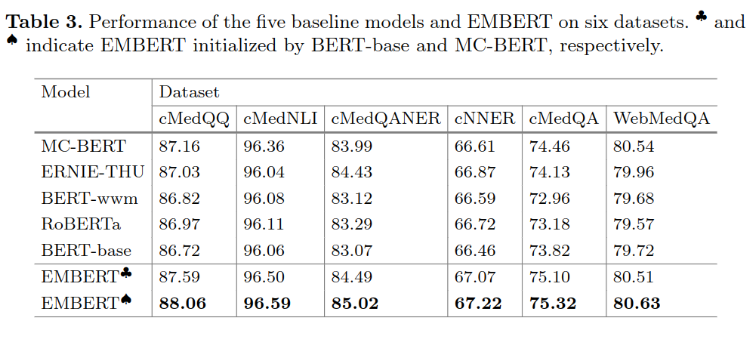

The model is trained on Q&A and BBS data from the Dingxiangyuan medical community, about 5 GB in total. The training data used in this paper is clearly smaller than that of MC-BERT, yet the results are comparable.

The main experimental results are as follows :

边栏推荐

- C # mixed graphics and text, written to the database in binary mode

- 这个17岁的黑客天才,破解了第一代iPhone!

- Force deduction solution summary 1200 minimum absolute difference

- Read the history of it development in one breath

- Which is more cost-effective, haqu K1 or haqu H1? Who is more worth starting with?

- Machine learning 01: Introduction

- 漫画:有趣的海盗问题 (完整版)

- ICML 2022 | Meta提出鲁棒的多目标贝叶斯优化方法,有效应对输入噪声

- stirring! 2022 open atom global open source summit registration is hot!

- VBA drives SAP GUI to realize office automation (II): judge whether elements exist

猜你喜欢

企业数字化发展中的六个安全陋习,每一个都很危险!

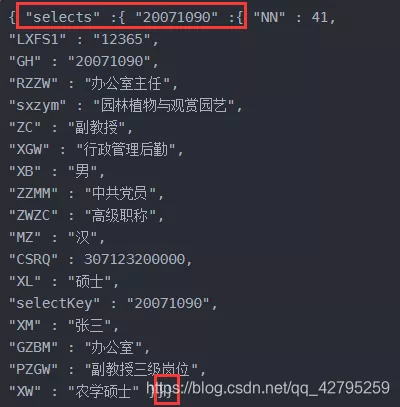

mysql中取出json字段的小技巧

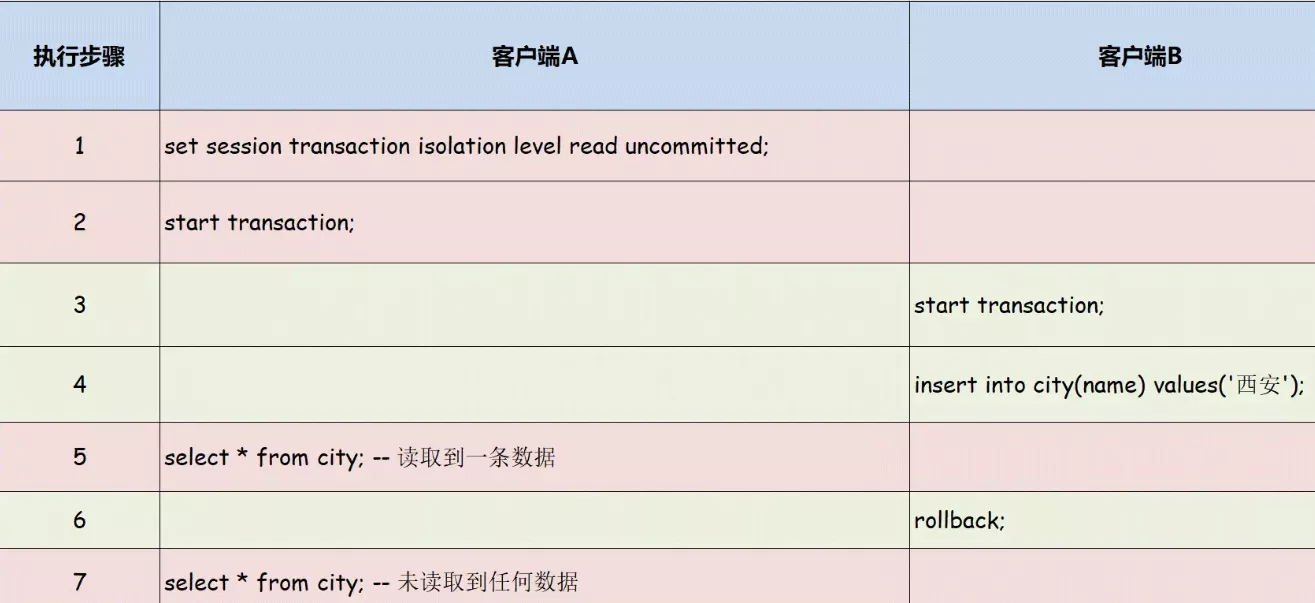

一文了解MySQL事务隔离级别

论文阅读_医疗NLP模型_ EMBERT

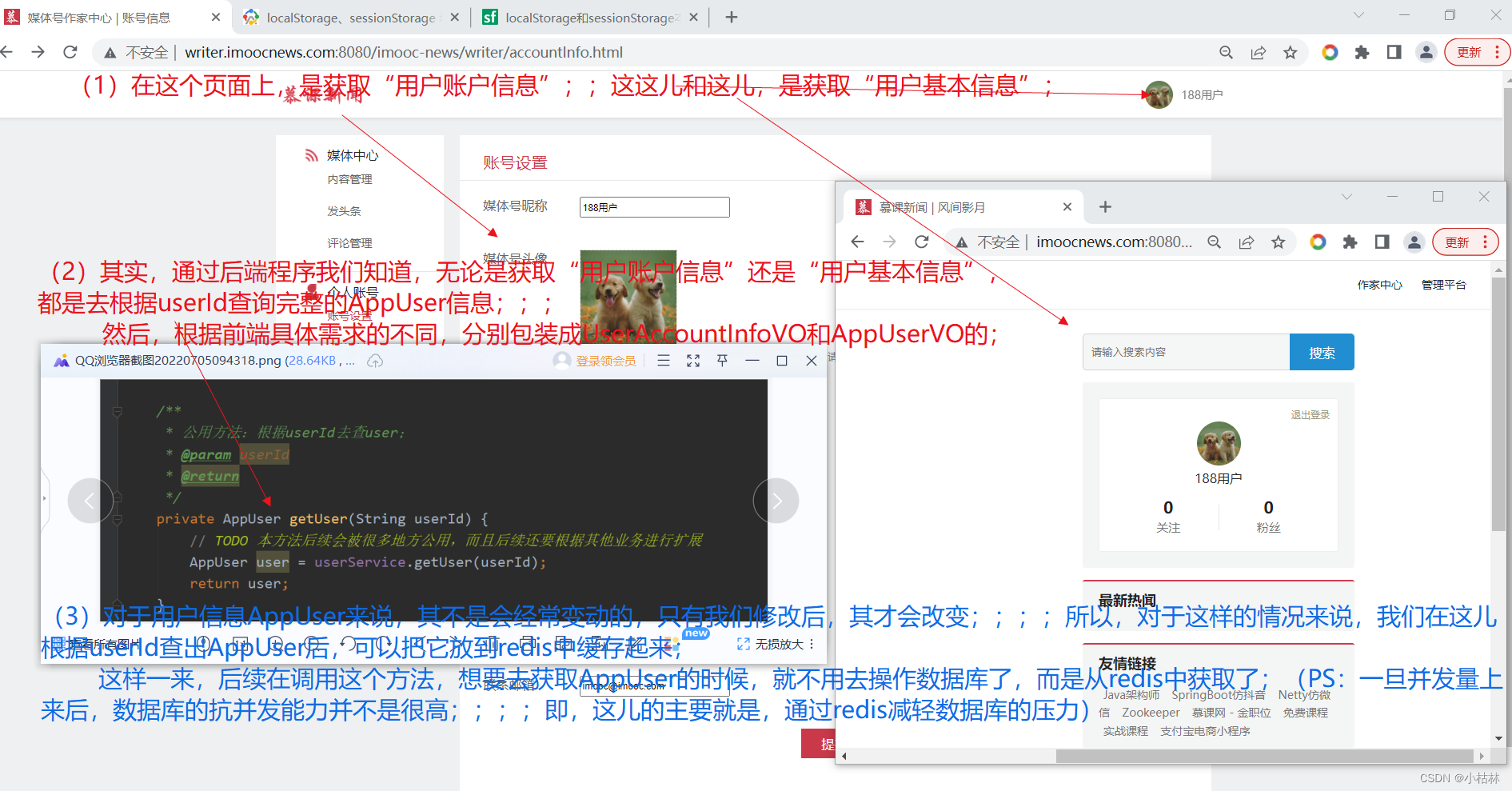

33:第三章:开发通行证服务:16:使用Redis缓存用户信息;(以减轻数据库的压力)

Which is more cost-effective, haqu K1 or haqu H1? Who is more worth starting with?

北京内推 | 微软亚洲研究院机器学习组招聘NLP/语音合成等方向全职研究员

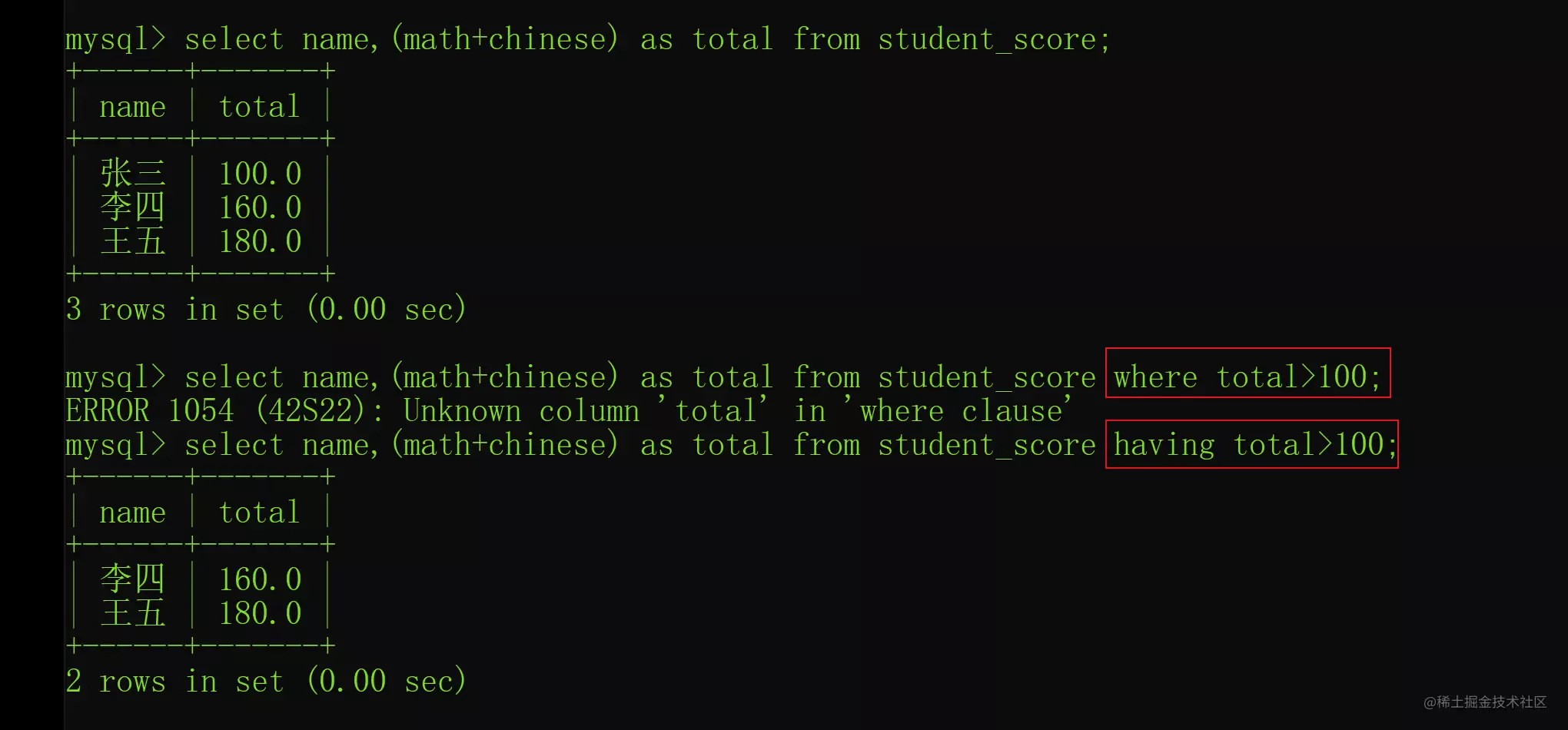

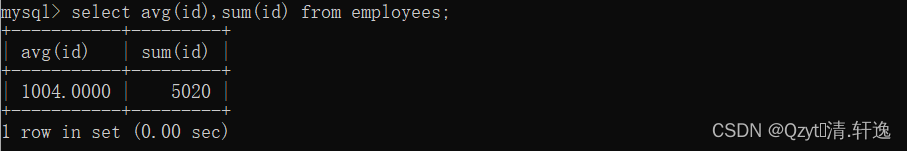

MySQL之知识点(七)

What are the precautions for MySQL group by

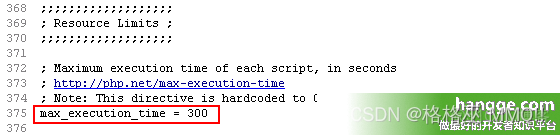

Count the running time of PHP program and set the maximum running time of PHP

随机推荐

Seven Devops practices to improve application performance

Tips for extracting JSON fields from MySQL

普通程序员看代码,顶级程序员看趋势

Flask solves the problem of CORS err

网络威胁分析师应该具备的十种能力

QT控制台打印输出

Cartoon: a bloody case caused by a math problem

Debug kernel code through proc interface

WebApp开发-Google官方教程

mysql如何使用JSON_EXTRACT()取json值

thinkphp3.2.3

深入理解Redis内存淘汰策略

基于Redis实现延时队列的优化方案小结

mysql5.6解析JSON字符串方式(支持复杂的嵌套格式)

蚂蚁金服的暴富还未开始,Zoom的神话却仍在继续!

漫画:寻找股票买入卖出的最佳时机

How to write a full score project document | acquisition technology

Humi analysis: the integrated application of industrial Internet identity analysis and enterprise information system

数据访问 - EntityFramework集成

C # mixed graphics and text, written to the database in binary mode