Sqoop command

2022-07-05 02:46:00 【A vegetable chicken that is working hard】

Data import

- This data exists only to make the commands below easy to test

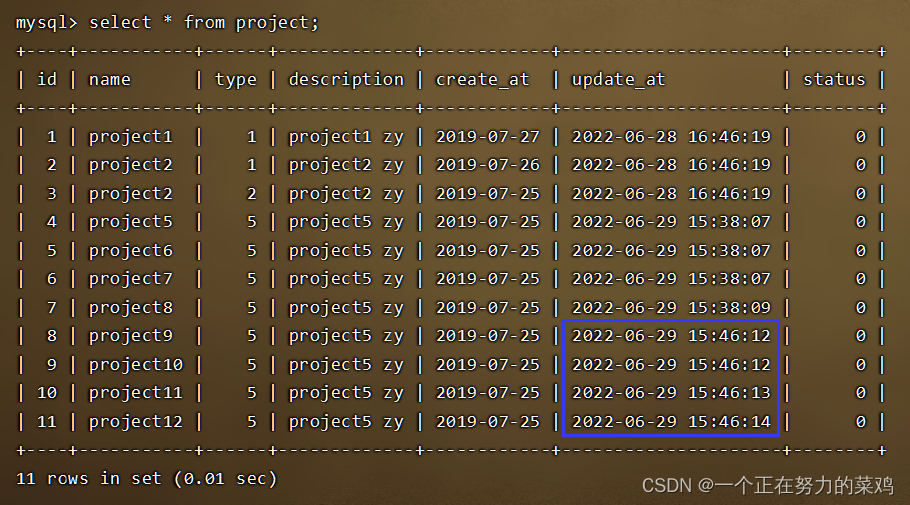

1. Data for the project table

- Database

1.create database sqooptest1;

2.use sqooptest1;

3.create table project(

id int not null auto_increment primary key,

name varchar(100) not null,

type tinyint(4) not null default 0,

description varchar(500) default null,

create_at date default null,

update_at timestamp not null default current_timestamp on update current_timestamp,

status tinyint(4) not null default 0

);

4.insert into project( name,type,description,create_at,status)

values( 'project1',1,'project1 zy','2019-07-27',0);

insert into project( name,type,description,create_at,status)

values( 'project2',1,'project2 zy','2019-07-26',0);

insert into project( name,type,description,create_at,status)

values( 'project2',2,'project2 zy','2019-07-25',0);

- Sqoop command

sqoop import --connect jdbc:mysql://node3:3306/sqooptest1 --username root --password a --table project

- result

2. Data for the students table

- Database

1.create database if not exists sqooptest1;

2.use sqooptest1;

3.create table students(

id int not null primary key,

name varchar(100) not null,

age varchar(100) not null

);

- Data location

E:\JAVA Course \...\11.Hadoop\12.Sqoop\a.txt

- Insert data into the database

import java.io.BufferedReader;

import java.io.File;

import java.io.FileInputStream;

import java.io.InputStreamReader;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.SQLException;

import java.util.ArrayList;

import java.util.List;

import java.util.Scanner;

public class AddBatchMysql {

public static void main(String[] args) {

// 1. Read the file location from the user

Scanner sc = new Scanner(System.in);

System.out.println("File location:");

String path = sc.nextLine();

// 2. Read the file as a stream, line by line; each line is later split on \t into id, name, age

List<String> list = new ArrayList<String>();

try (BufferedReader br = new BufferedReader(new InputStreamReader(new FileInputStream(new File(path))))){

String str;

while((str=br.readLine())!=null){

list.add(str);

}

} catch (Exception e) {

e.printStackTrace();

}

// 3. Insert the data in batches

System.out.println("Total number of rows read: "+list.size());

String sql = "insert into students values(?,?,?)";

Connection con=null;

PreparedStatement pstmt=null;

try {

con = DriverManager.getConnection("jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC","root","a");

con.setAutoCommit(false);// Set to manually commit transactions

pstmt = con.prepareStatement(sql);

int total = 0;

String s;

String[] ss;

for(int i=0;i<list.size();i++){

s = list.get(i);

ss = s.split("\t");

pstmt.setString(1, ss[0]);

pstmt.setString(2, ss[1]);

pstmt.setString(3, ss[2]);

// Add the current row to the batch buffer

pstmt.addBatch();

if((i+1)%1000==0){

// Execute and commit once every 1000 rows

int[] res = pstmt.executeBatch();

total+=sum(res);

con.commit();

pstmt.clearBatch();

}

}

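// Execute whatever is left over after the last full batch of 1000

int[] res = pstmt.executeBatch();

// Printing the array itself would only show its object reference

System.out.println("Rows in the final batch: "+res.length);

total+=sum(res);

con.commit();

pstmt.clearBatch();

System.out.println("Number of rows actually inserted: "+total);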

} catch (Exception e) {

e.printStackTrace();

// con is still null if getConnection() itself failed

if(con!=null){

try {

con.rollback();

} catch (SQLException e1) {

e1.printStackTrace();

}

}

}finally {

if(con!=null){

try {

con.setAutoCommit(true);

} catch (SQLException e) {

e.printStackTrace();

}

try {

con.close();

} catch (SQLException e) {

e.printStackTrace();

}

}

}

}

private static int sum(int[] res){

int total = 0;

if(res==null||res.length==0){

return 0;

}

for(int i=0;i<res.length;i++){

total+=res[i];

}

return total;

}

}

- Wait until the data insertion is completed ...

Command official website

sqoop-list-

1. List databases

- command

sqoop-list-databases --connect jdbc:mysql://localhost:3306/mysql?serverTimezone=UTC --username root --password a --verbose

--verbose: print more information while running

2. List tables

sqoop-list-tables --connect jdbc:mysql://localhost:3306/mysql?serverTimezone=UTC --username root --password a --verbose

sqoop import-

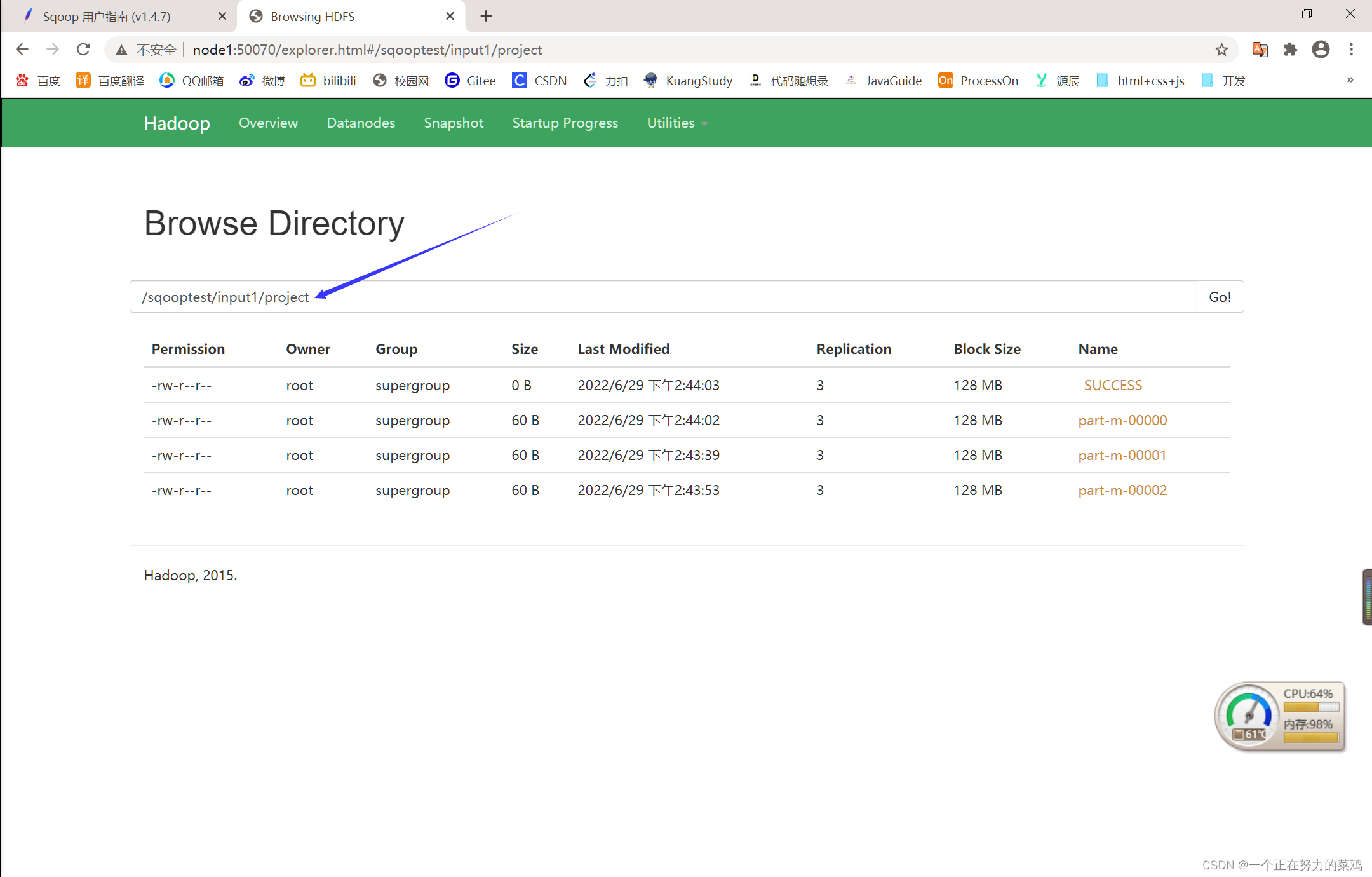

1. Specify the output path: --target-dir

- --target-dir

sqoop import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password a --table project --target-dir /sqooptest/input1/project

Specified directory: /sqooptest/input1/project

Actual directory: /sqooptest/input1/project

- result

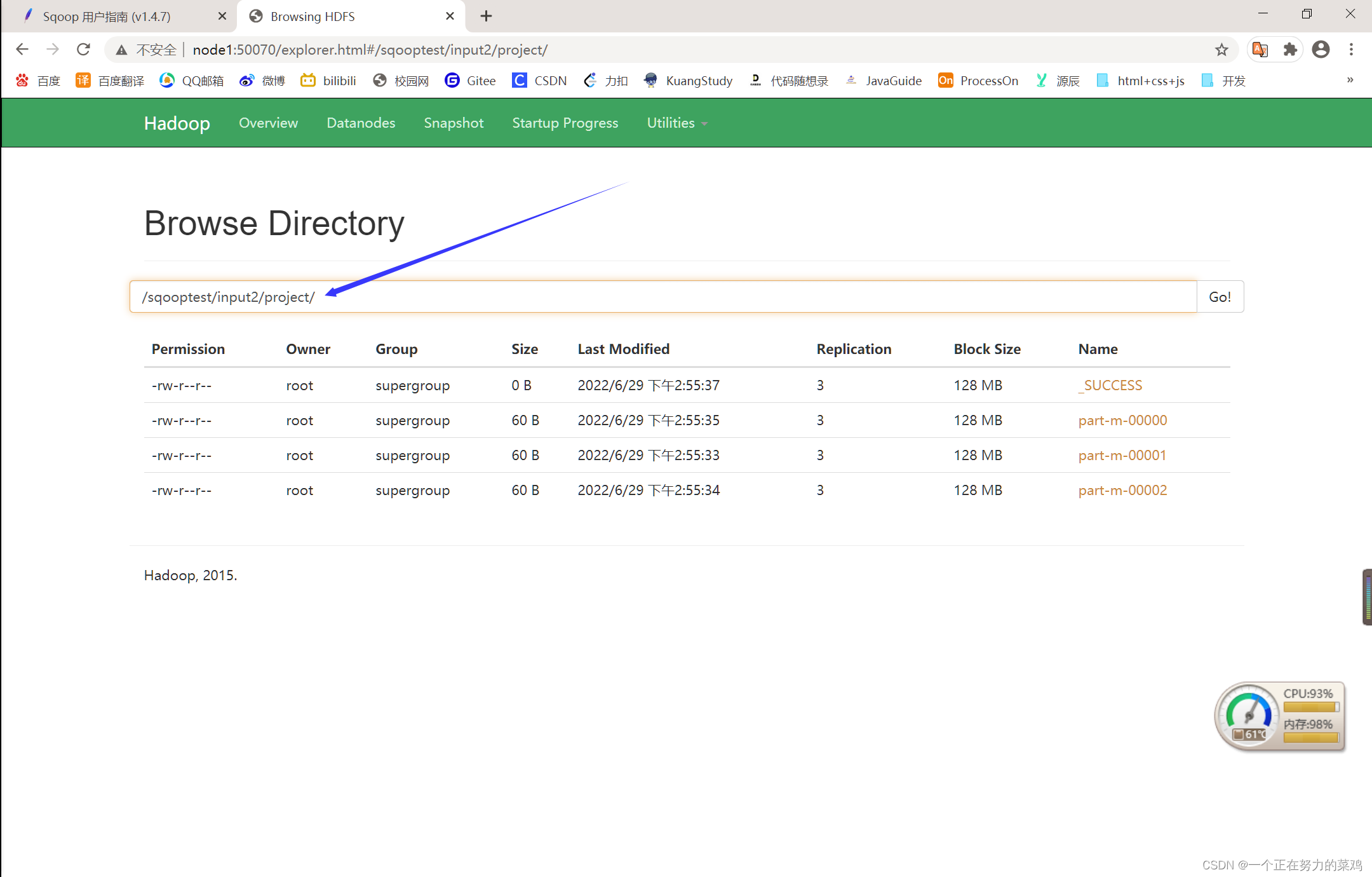

2. Import into a subdirectory named after the table: --warehouse-dir

- --warehouse-dir

sqoop import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password a --table project --warehouse-dir /sqooptest/input2

Specified directory: /sqooptest/input2

Actual directory: /sqooptest/input2/project

Analysis: Sqoop creates a subdirectory named after the table under the specified directory, so the specified directory acts as a parent "warehouse" directory for table imports.

- Result

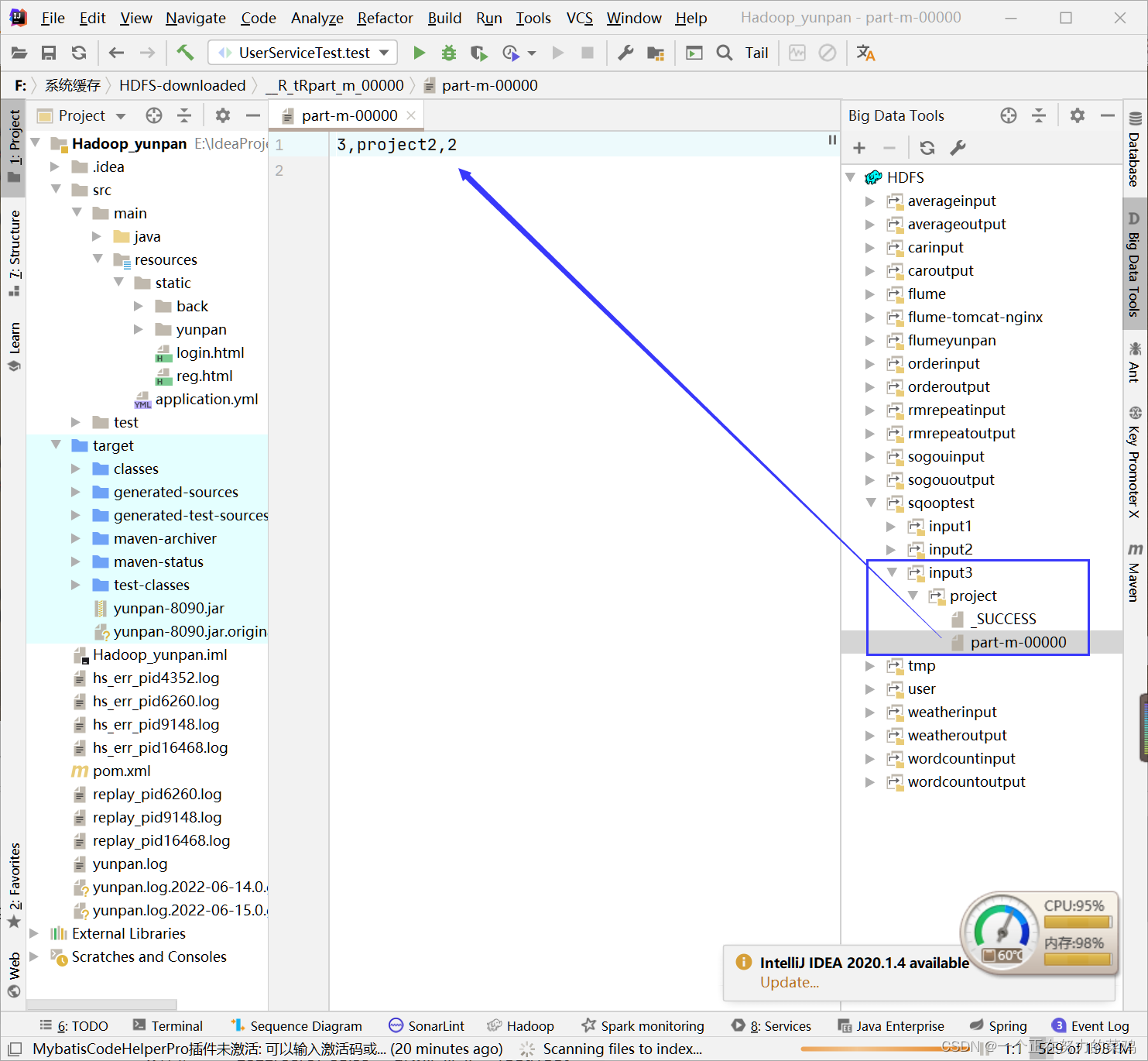

3. Specify the columns and the filter condition

sqoop import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password a --table project --warehouse-dir /sqooptest/input3 --columns 'id,name,type' --where 'id>2' -m 1

--table: the table to import

--columns: the columns to import

--where: the filter condition applied to the rows

-m 1: run with a single mapper; one mapper processes one split and produces one output file

Because --table is given, Sqoop assembles the SQL statement automatically from the options above. --table cannot be combined with -e or --query.

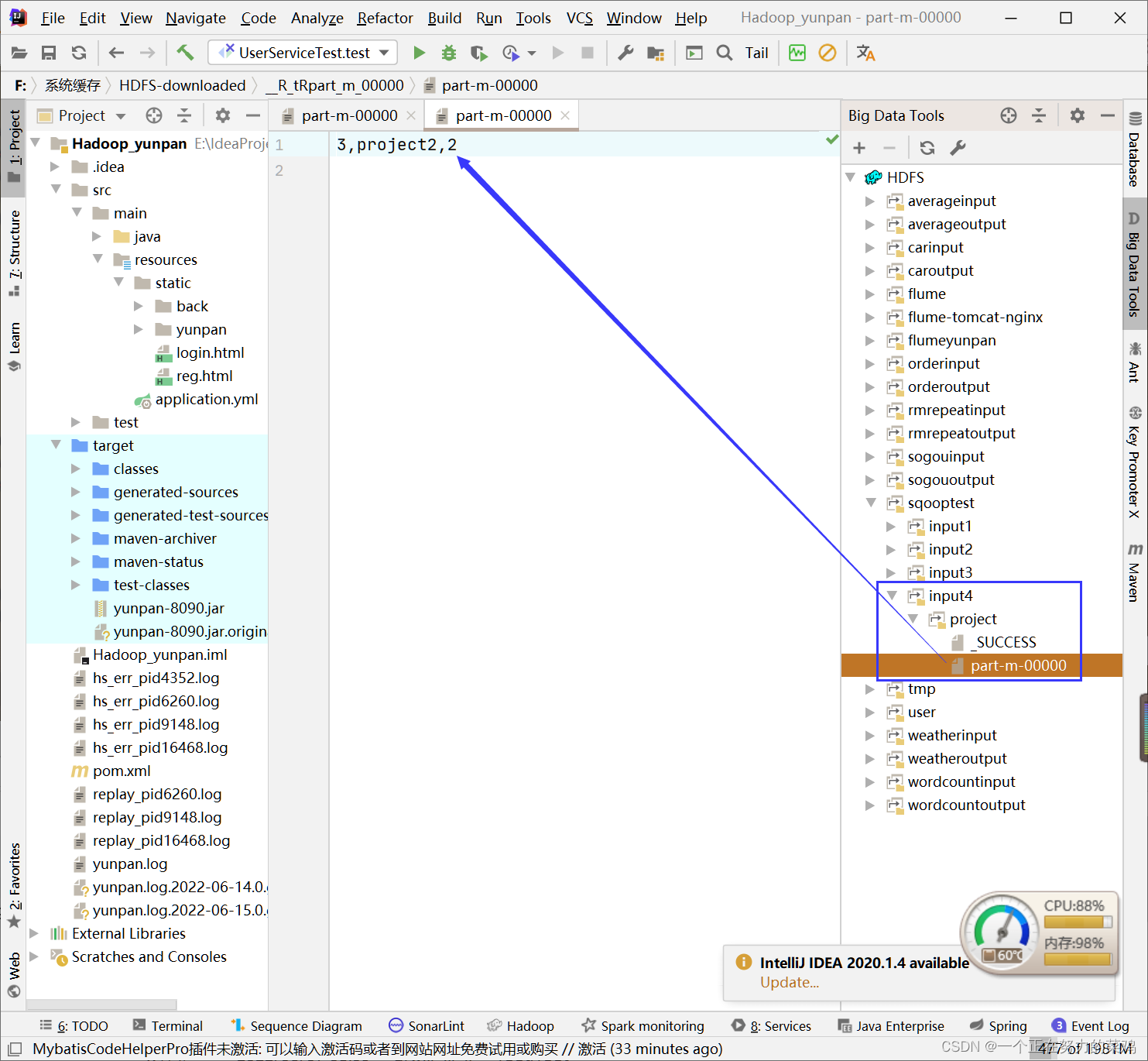
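As a rough illustration of what "assembled automatically" means, the sketch below builds the kind of SELECT statement that --table, --columns, and --where describe. The names ImportQuerySketch and buildQuery are hypothetical and this is not Sqoop's actual code; the statement Sqoop really runs may also carry per-mapper split conditions.

```java
public class ImportQuerySketch {

    // Hypothetical helper: combine the three option values into one SELECT.
    // A missing column list means "all columns"; a missing filter means no WHERE clause.
    public static String buildQuery(String table, String columns, String where) {
        String cols = (columns == null || columns.isEmpty()) ? "*" : columns;
        StringBuilder sql = new StringBuilder("SELECT ").append(cols).append(" FROM ").append(table);
        if (where != null && !where.isEmpty()) {
            sql.append(" WHERE ").append(where);
        }
        return sql.toString();
    }

    public static void main(String[] args) {
        // Same option values as the command in this step
        System.out.println(buildQuery("project", "id,name,type", "id>2"));
    }
}
```

With the values from this step, the sketch prints `SELECT id,name,type FROM project WHERE id>2`, which matches the statement written by hand in the --query variant.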

4. Specify a SQL statement

sqoop import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password a --target-dir /sqooptest/input4/project --query 'select id,name,type from project where id>2 and $CONDITIONS' --split-by project.id -m 1

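--query: cannot be used together with --table or --columns

$CONDITIONS: a placeholder that must appear in the WHERE clause of the query; Sqoop replaces it with the split condition for each mapper. Keep the query in single quotes so the shell does not expand it; inside double quotes, write \$CONDITIONS.

--split-by: the table column used to split the work into units; cannot be used together with the --autoreset-to-one-mapper option

-m 1: run with a single mapper; one mapper processes one split and produces one output file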
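To make the role of $CONDITIONS more concrete: as the Sqoop documentation describes it, Sqoop looks up the minimum and maximum of the --split-by column, divides that range among the mappers, and substitutes each mapper's range for $CONDITIONS. The sketch below imitates this under simplifying assumptions (an integer column and evenly sized ranges). ConditionsSketch, splitConditions, and substitute are hypothetical names, and the exact predicates Sqoop emits may differ.

```java
import java.util.ArrayList;
import java.util.List;

public class ConditionsSketch {

    // Divide [min, max] into at most `mappers` half-open ranges and
    // express each range as a predicate on the split column.
    public static List<String> splitConditions(String column, long min, long max, int mappers) {
        List<String> conditions = new ArrayList<>();
        if (mappers <= 1) {
            // A single mapper reads everything, so an always-true predicate is enough.
            conditions.add("(1 = 1)");
            return conditions;
        }
        long span = max - min + 1;
        long lo = min;
        for (int i = 1; i <= mappers; i++) {
            long hi = min + span * i / mappers; // exclusive upper bound of range i
            if (hi > lo) {
                conditions.add(column + " >= " + lo + " AND " + column + " < " + hi);
            }
            lo = hi;
        }
        return conditions;
    }

    // Plain text replacement of the placeholder in the user's query.
    public static String substitute(String query, String condition) {
        return query.replace("$CONDITIONS", condition);
    }

    public static void main(String[] args) {
        String query = "select id,name,type from project where id>2 and $CONDITIONS";
        // Assumed id range 3..12, split across 2 mappers
        for (String condition : splitConditions("id", 3, 12, 2)) {
            System.out.println(substitute(query, condition));
        }
    }
}
```

With an assumed id range of 3 to 12 and two mappers, the sketch produces one query restricted to `id >= 3 AND id < 8` and one restricted to `id >= 8 AND id < 13`. With -m 1, as in the command above, there is nothing to split and the placeholder only needs a condition that is always true.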

5. --direct

- This failed. It is a pitfall!

sqoop import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password a --table project --warehouse-dir /sqooptest/input5 --direct -m 1

--direct uses the mysqldump command to perform the import. Because this is a cluster and the map tasks are assigned to individual nodes, every node must have the mysqldump command installed.

6. Incremental import

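- Used to retrieve only rows that are newer than a previously imported set of rows

- Parameters

--check-column: the column to examine when deciding which rows to import

--incremental: how Sqoop determines which rows are new; the mode is one of the following

append: new rows are detected by a check column whose value keeps increasing, such as an auto-increment id

lastmodified: new or updated rows are detected by a last-modified timestamp column

--last-value: the maximum value of the check column from the previous import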
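The append mode can be pictured as a filter on the check column: rows whose value is greater than --last-value are imported, and the largest imported value becomes the next --last-value. Below is a minimal sketch of that rule, assuming an integer id column. The class and method names are hypothetical, and the list merely stands in for the table.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

public class IncrementalAppendSketch {

    // Rows that an append-mode import would pick up: check column > last value.
    public static List<Long> rowsToImport(List<Long> ids, long lastValue) {
        return ids.stream()
                .filter(id -> id > lastValue)
                .collect(Collectors.toList());
    }

    // The value to pass as --last-value next time; unchanged if nothing was new.
    public static long nextLastValue(List<Long> ids, long lastValue) {
        return ids.stream()
                .mapToLong(Long::longValue)
                .filter(id -> id > lastValue)
                .max()
                .orElse(lastValue);
    }

    public static void main(String[] args) {
        // Assumed table contents: ids 1..7, of which 1..3 were imported earlier
        List<Long> ids = Arrays.asList(1L, 2L, 3L, 4L, 5L, 6L, 7L);
        System.out.println(rowsToImport(ids, 3));
        System.out.println(nextLastValue(ids, 3));
    }
}
```

With ids 1 to 7 and a last value of 3, the sketch selects ids 4 to 7 and reports 7 as the next last value, which is the progression used by the two commands in this section (--last-value 3, then --last-value 7).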

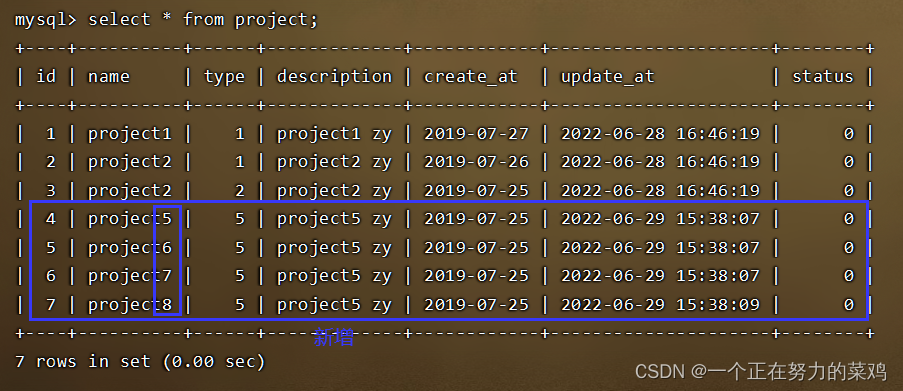

- insert data

insert into project( name,type,description,create_at,status)

values( 'project5',5,'project5 zy','2019-07-25',0);

insert into project( name,type,description,create_at,status)

values( 'project6',5,'project5 zy','2019-07-25',0);

insert into project( name,type,description,create_at,status)

values( 'project7',5,'project5 zy','2019-07-25',0);

insert into project( name,type,description,create_at,status)

values( 'project8',5,'project5 zy','2019-07-25',0);

- command

sqoop import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password a --table project --warehouse-dir /sqooptest/input6 -m 1 --check-column id --incremental append --last-value 3

- result

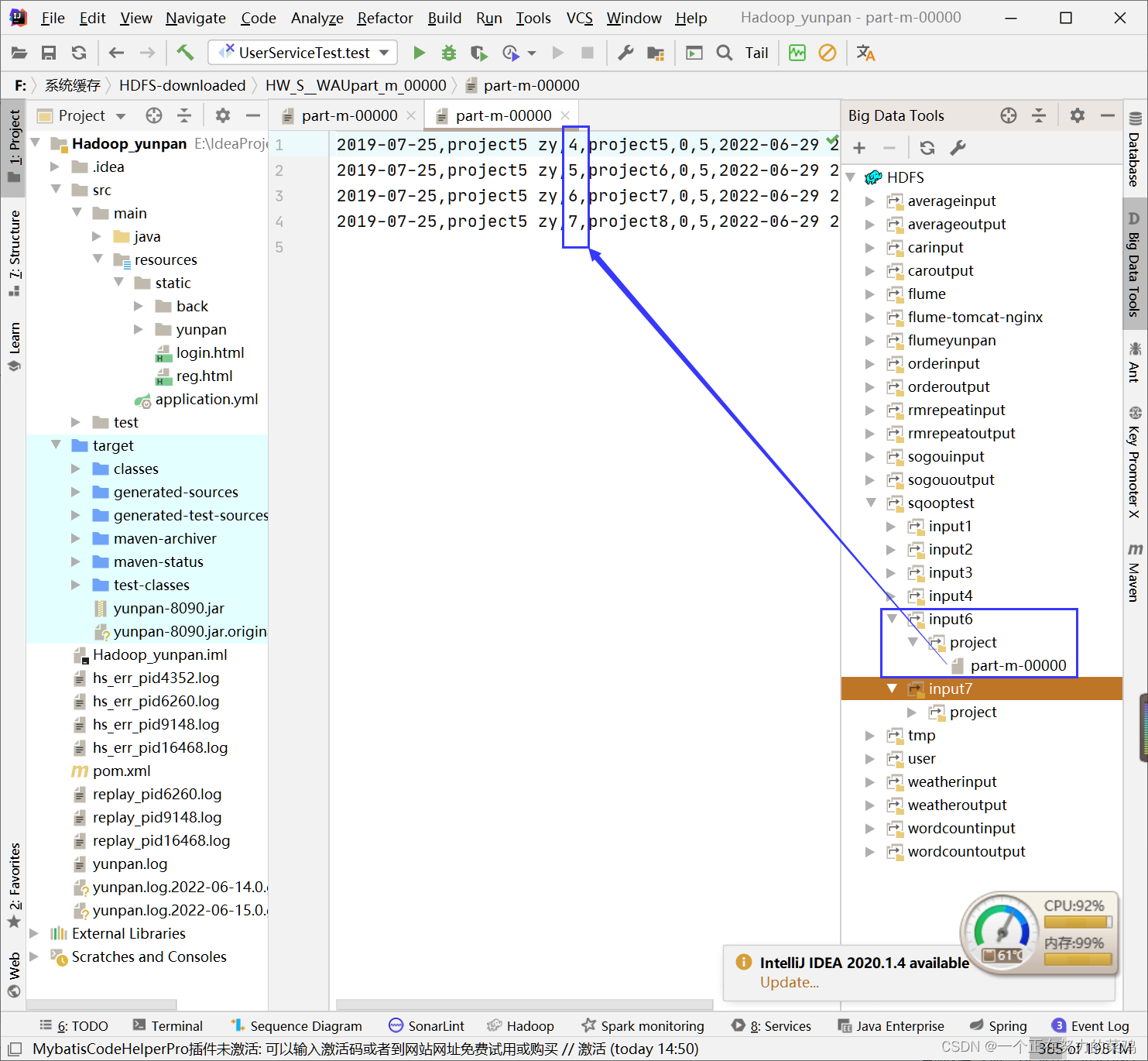

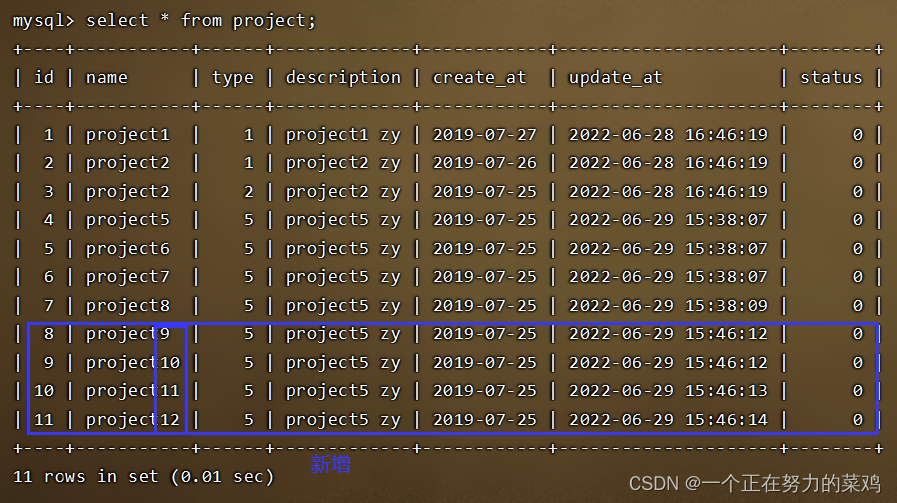

- insert data

insert into project( name,type,description,create_at,status)

values( 'project9',5,'project5 zy','2019-07-25',0);

insert into project( name,type,description,create_at,status)

values( 'project10',5,'project5 zy','2019-07-25',0);

insert into project( name,type,description,create_at,status)

values( 'project11',5,'project5 zy','2019-07-25',0);

insert into project( name,type,description,create_at,status)

values( 'project12',5,'project5 zy','2019-07-25',0);

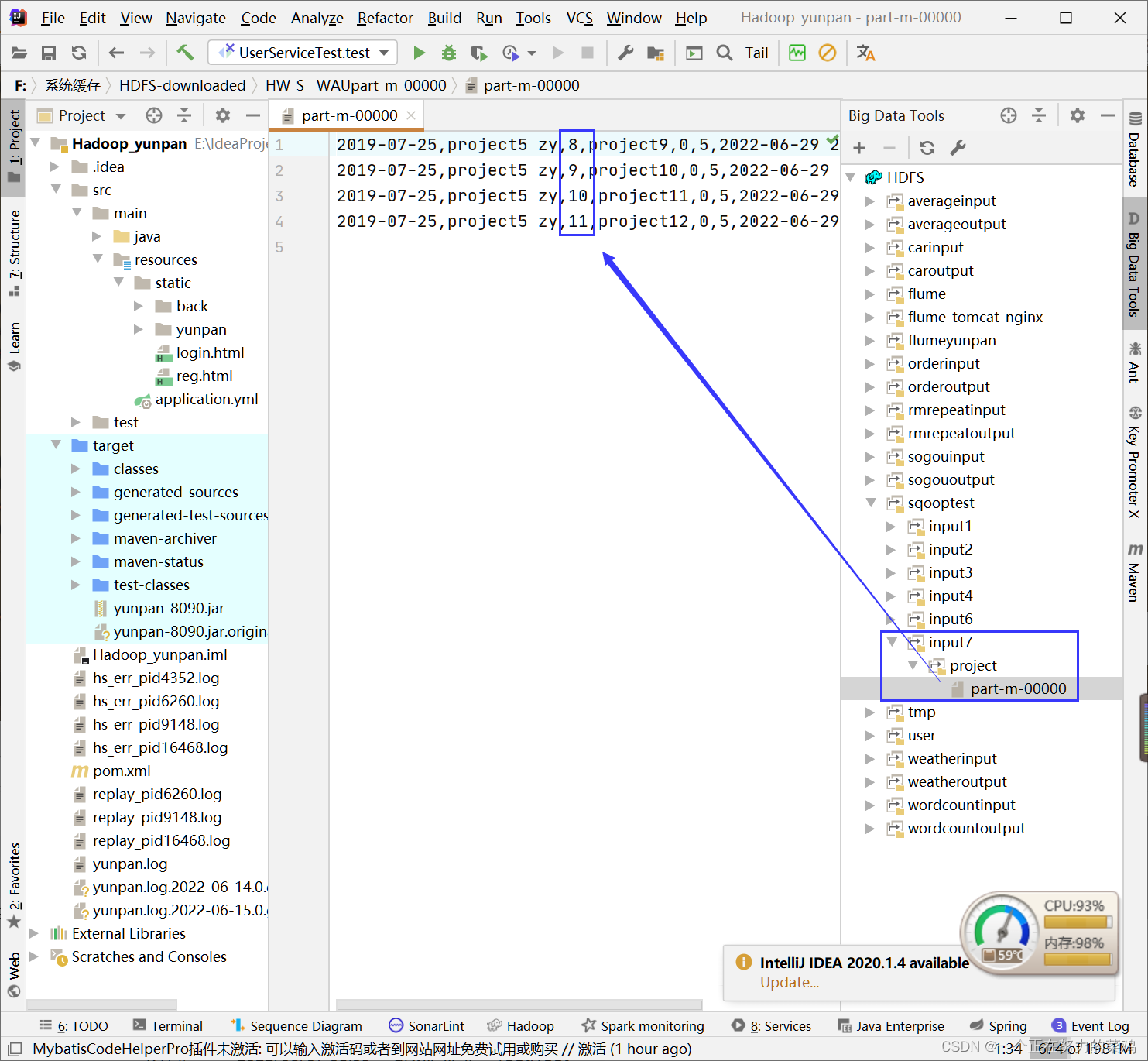

- Command (note that the output directory has not changed)

sqoop import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password a --table project --warehouse-dir /sqooptest/input6 -m 1 --check-column id --incremental append --last-value 7

- Result: the output directory shown in the figure should be input6

- Import by last-modified time

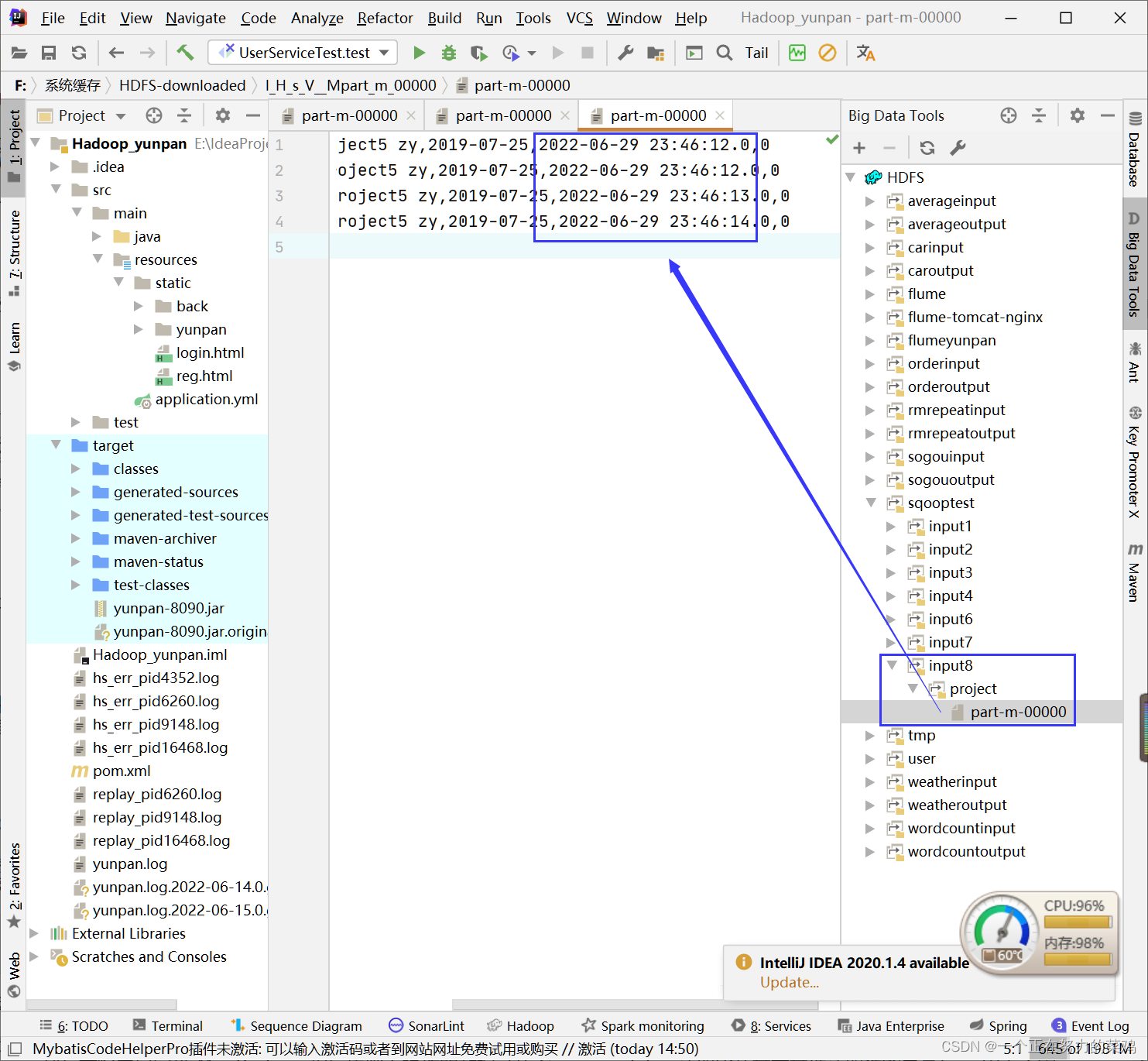

sqoop import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password a --table project --warehouse-dir /sqooptest/input8 -m 1 --check-column update_at --incremental lastmodified --last-value "2022-06-29 15:46:12" --append

--append: append the data to an existing dataset in HDFS

- result

sqoop job-

1. Syntax

sqoop job (generic-args) (job-args) [-- [subtool-name] (subtool-args)]

Note the space after --

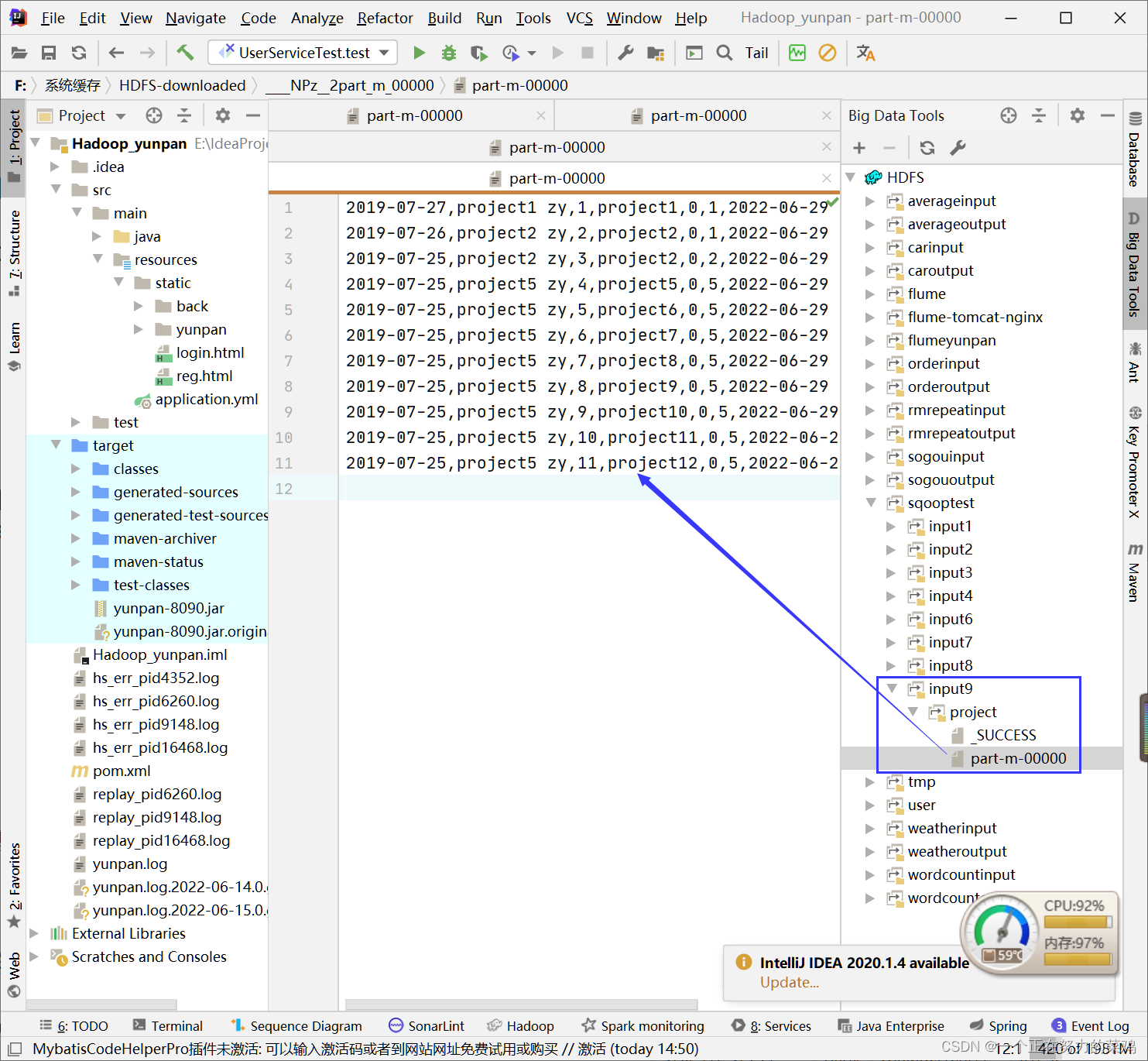

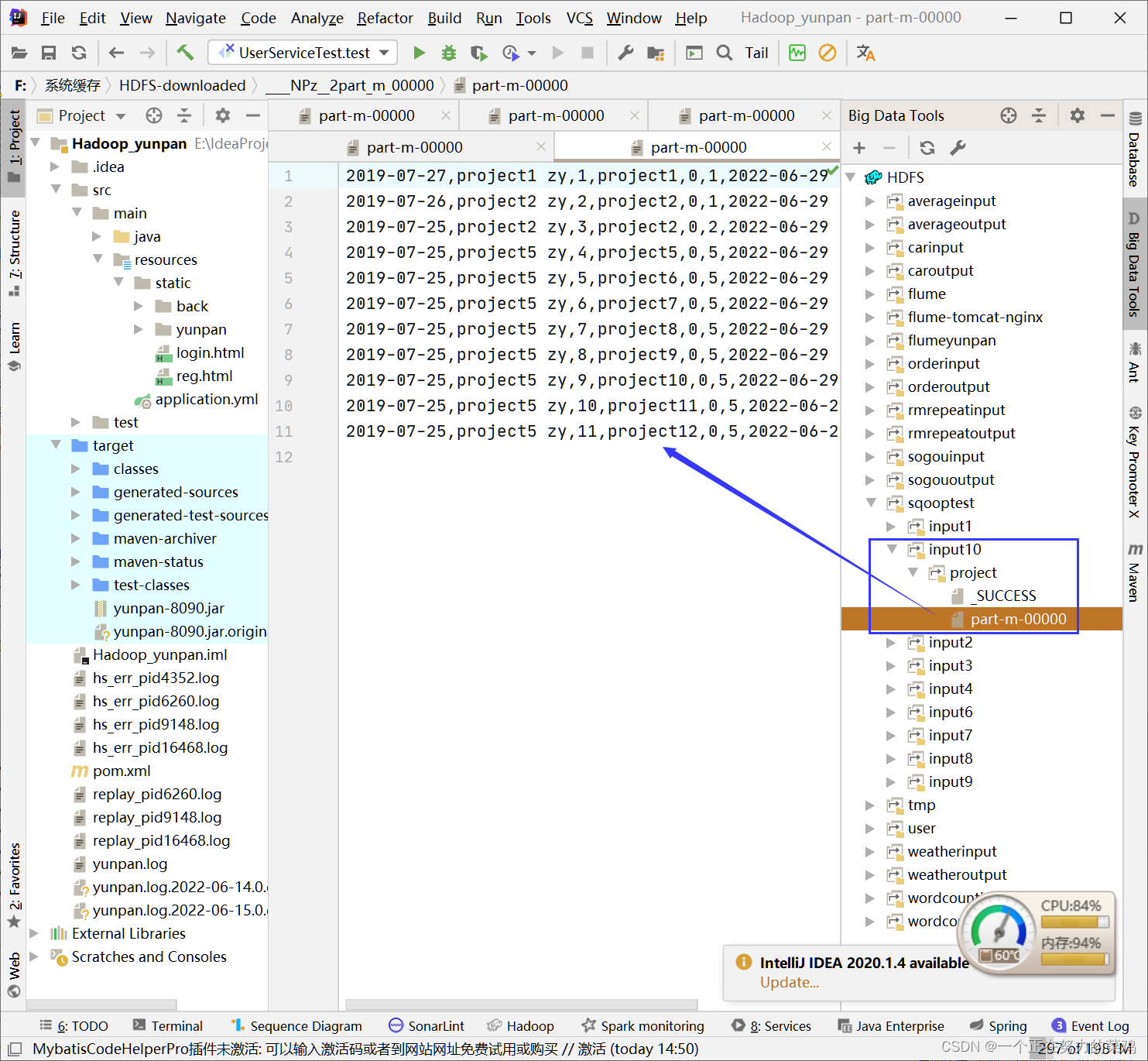

2. Create a job that imports the contents of the project table in the sqooptest1 database into Hadoop

- The original command

sqoop import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password a --table project --target-dir /sqooptest/input9/project -m 1

- Create task command

sqoop job --create yc-job1 -- import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password a --table project --target-dir /sqooptest/input10/project -m 1

- Possible problems

1. The job already exists. Choose a different job name or delete the existing job.

2.Caused by: java.lang.ClassNotFoundException: org.json.JSONObject

The org.json jar is missing. Upload java-json.jar to the sqoop/lib directory.

- Two ways to view the created job

sqoop job --list

sqoop job --show yc-job1

- Perform tasks

sqoop job --exec yc-job1

- View the execution results

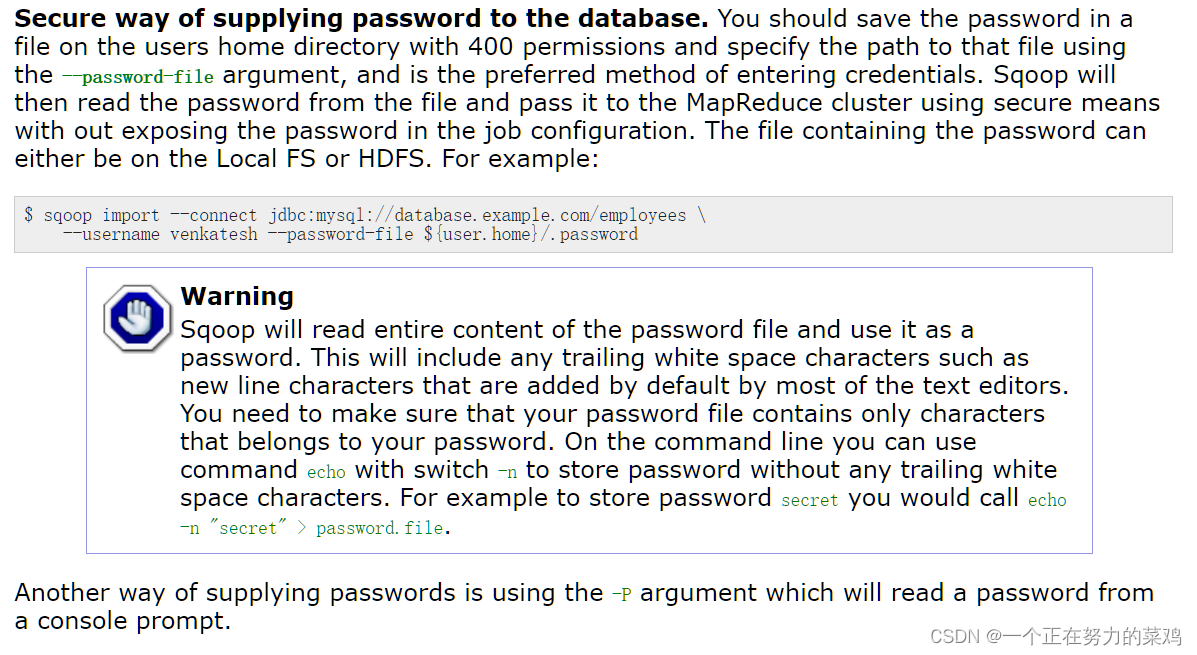

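3. Log in to the database with a password file

- With the job created above, Sqoop prompts for the MySQL password, which blocks unattended runs

- Section 7.2.1 of the official documentation suggests configuring a password file

- Create a hidden password file (echo -n avoids a trailing newline, which Sqoop would otherwise read as part of the password)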

echo -n "a" >/root/.mysql.password

chmod 400 /root/.mysql.password

- List all files, including hidden ones

ls -al

- Command to create the job

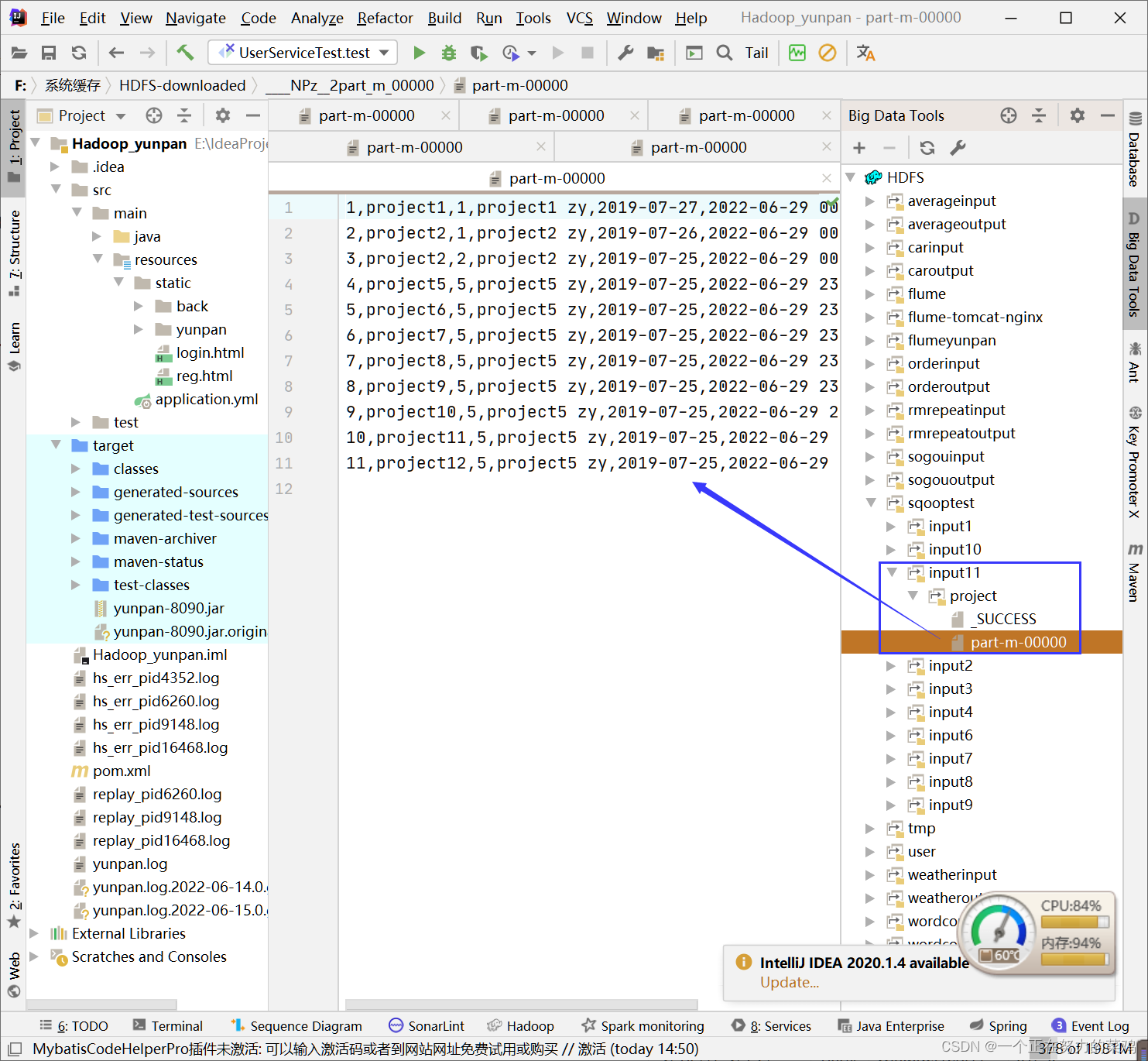

sqoop job --create yc-job2 -- import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password-file file:////root/.mysql.password --table project --target-dir /sqooptest/input11/project -m 1

- View the created task

sqoop job --list

sqoop job --show yc-job2

- Perform tasks

sqoop job --exec yc-job2

- View the execution results

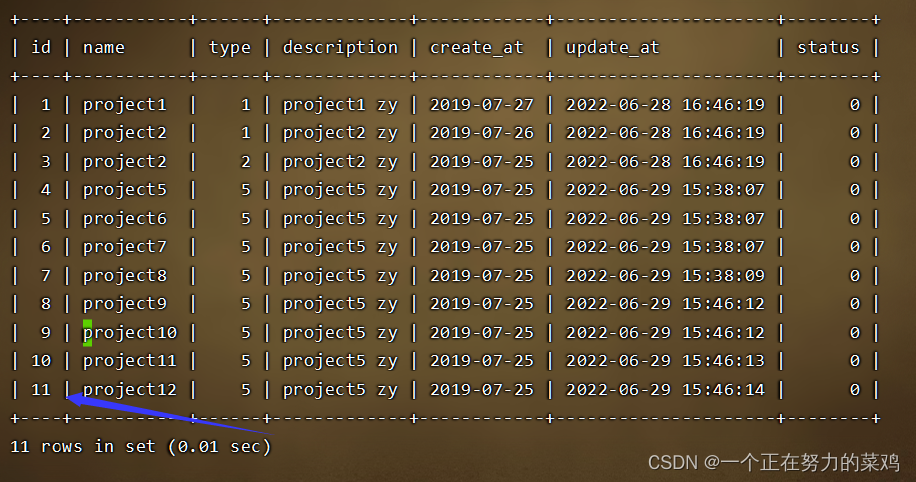

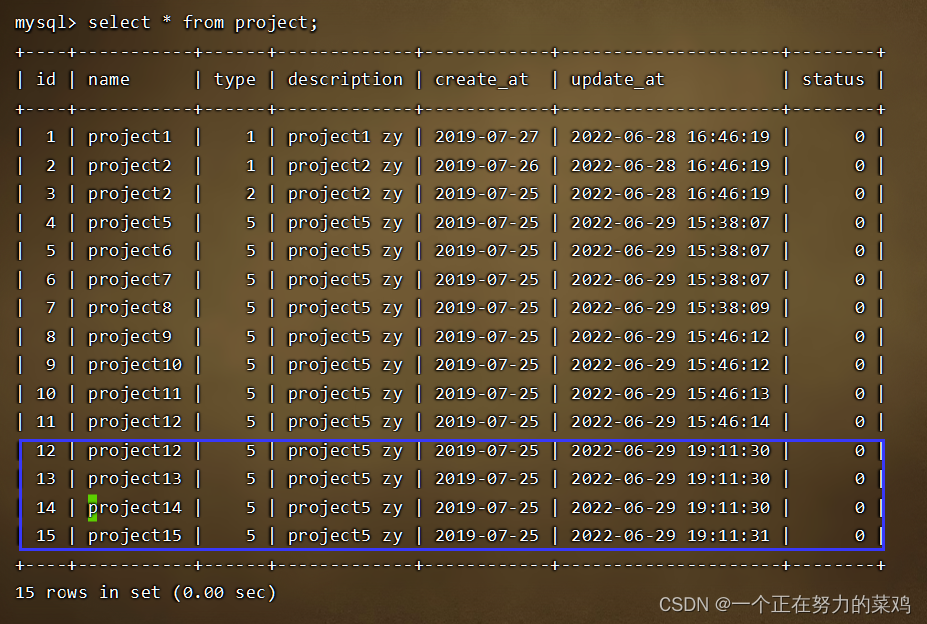

4. Create an incremental (append) import job

- In MySQL, check the maximum id in the project table

- Insert some new rows

insert into project( name,type,description,create_at,status)

values( 'project12',5,'project5 zy','2019-07-25',0);

insert into project( name,type,description,create_at,status)

values( 'project13',5,'project5 zy','2019-07-25',0);

insert into project( name,type,description,create_at,status)

values( 'project14',5,'project5 zy','2019-07-25',0);

insert into project( name,type,description,create_at,status)

values( 'project15',5,'project5 zy','2019-07-25',0);

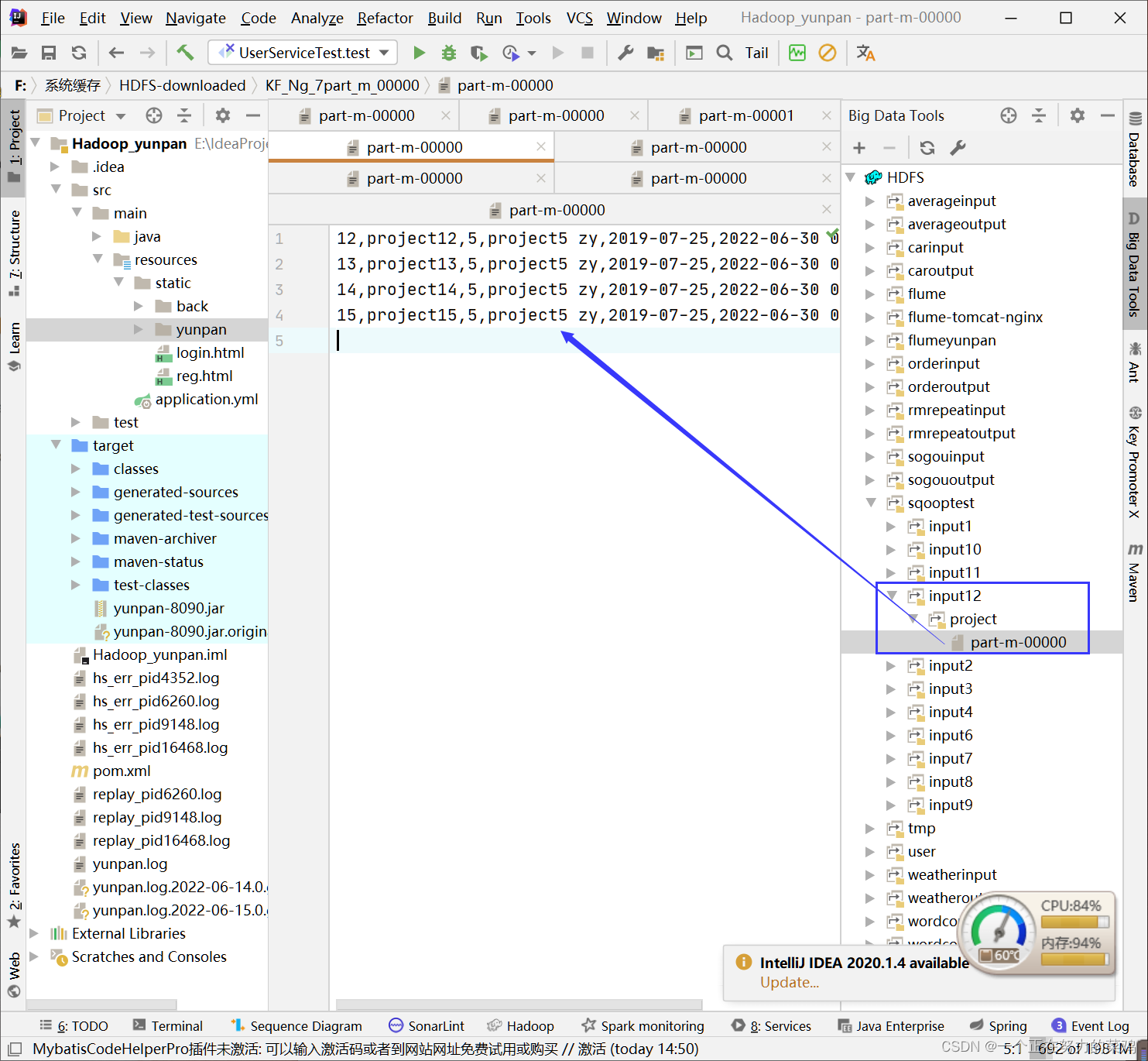

- Command to create the job

sqoop job --create yc-job3 -- import --connect jdbc:mysql://node3:3306/sqooptest1?serverTimezone=UTC --username root --password-file file:////root/.mysql.password --table project --target-dir /sqooptest/input12/project -m 1 --check-column id --incremental append --last-value 11

- View the created task

sqoop job --list

- Perform tasks

sqoop job --exec yc-job3

- View the execution results

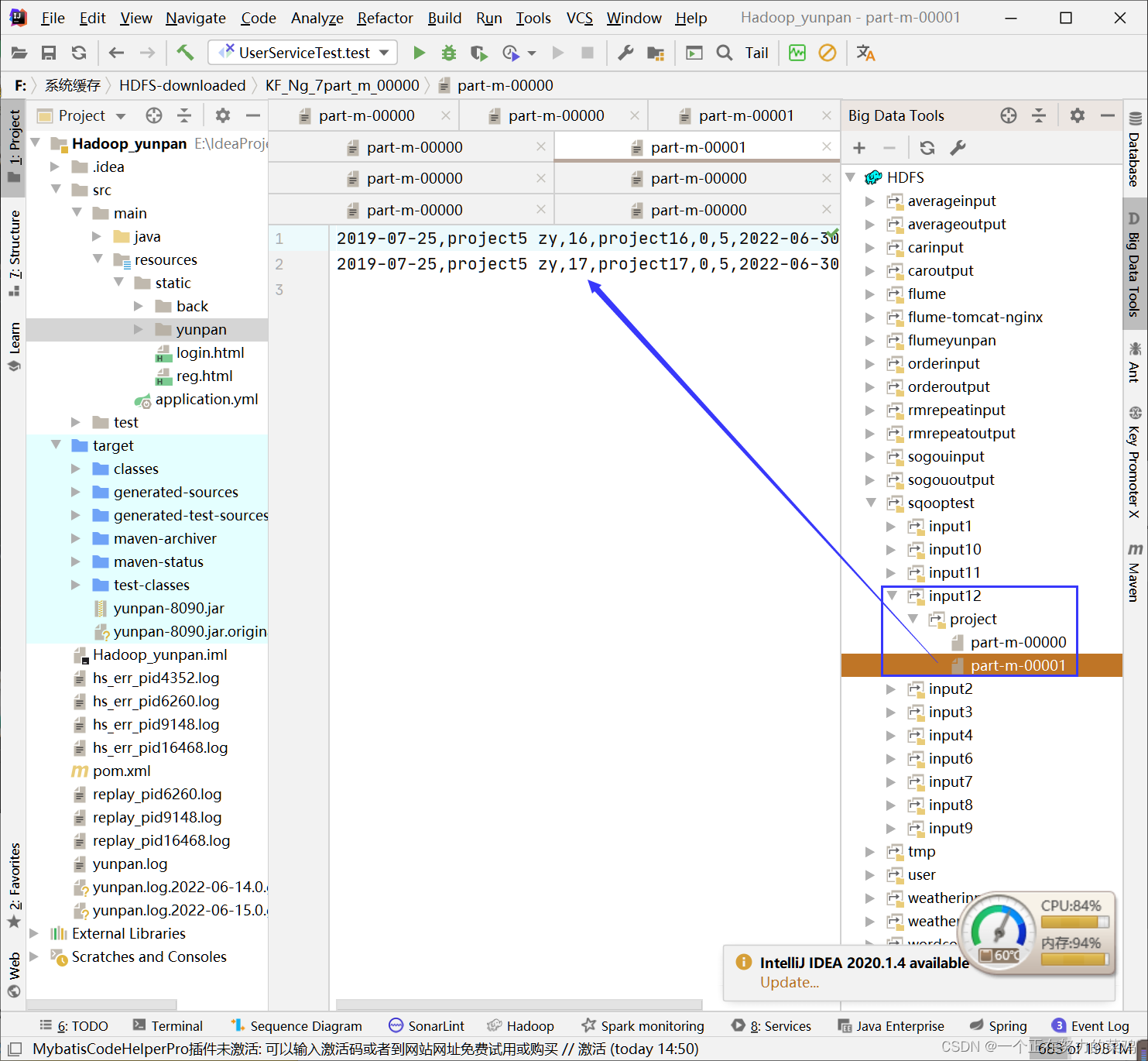

- Insert some new data

insert into project( name,type,description,create_at,status)

values( 'project16',5,'project5 zy','2019-07-25',0);

insert into project( name,type,description,create_at,status)

values( 'project17',5,'project5 zy','2019-07-25',0);

- Perform tasks

sqoop job --exec yc-job3

- View the execution results

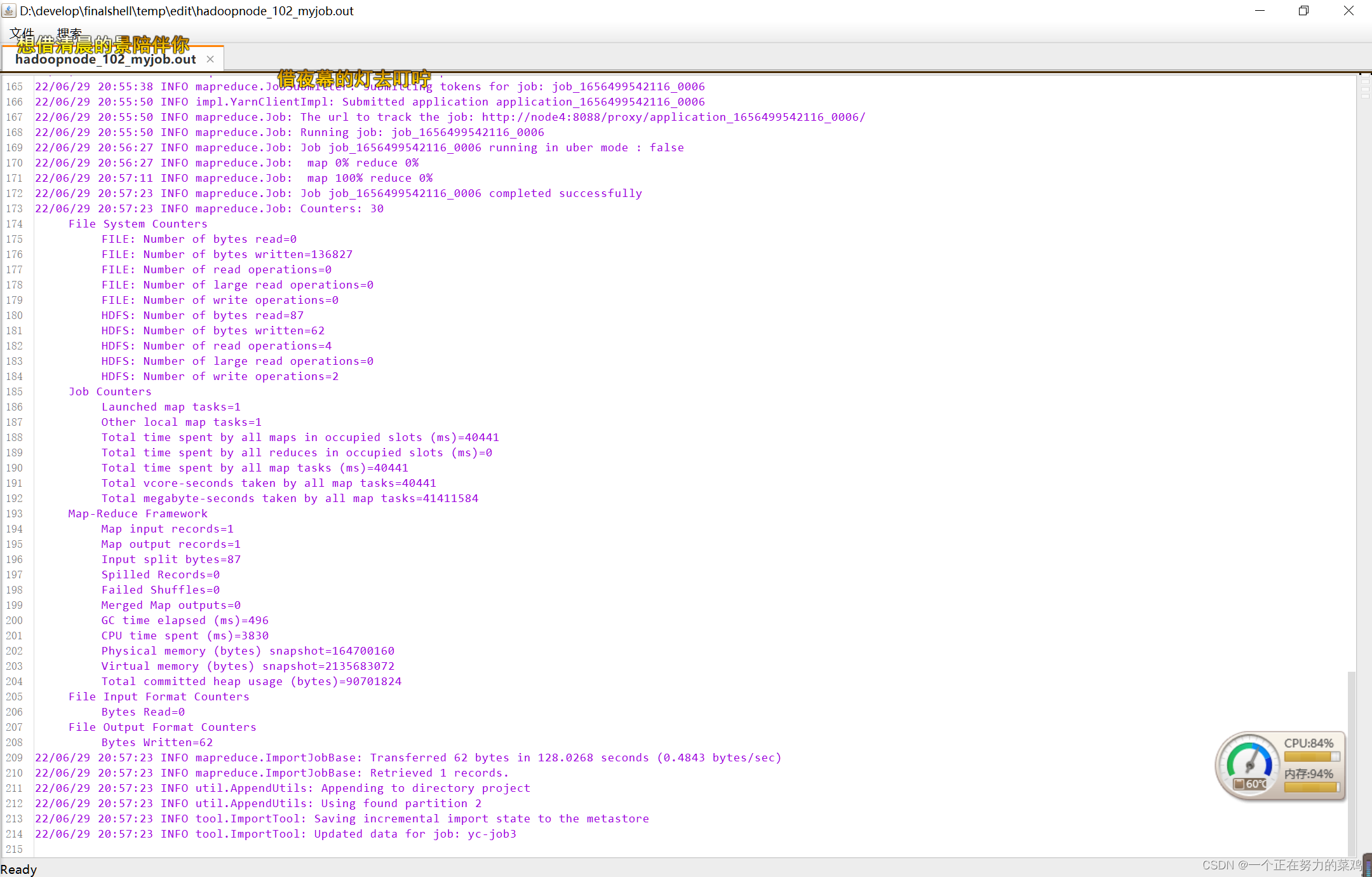

- **The output shows that the job is backed by a metadata store called the metastore (by default an HSQLDB database, not SQLite), which saves the latest id value so that the next run continues from there. This makes scheduled runs convenient, because nobody has to record the last imported value by hand.**
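The bookkeeping that a saved job does between runs can be imitated in a few lines. This is only a sketch of the idea: SavedJobSketch and its methods are hypothetical names, the field stands in for whatever the metastore persists, and the real job stores its state on disk rather than in memory.

```java
import java.util.ArrayList;
import java.util.List;

public class SavedJobSketch {

    // Stands in for the last value that the metastore remembers for the job.
    private long lastValue;

    public SavedJobSketch(long initialLastValue) {
        this.lastValue = initialLastValue;
    }

    // One run of the job: pick up ids above the stored value,
    // then remember the largest id that was picked up.
    public List<Long> exec(List<Long> tableIds) {
        List<Long> imported = new ArrayList<>();
        for (long id : tableIds) {
            if (id > lastValue) {
                imported.add(id);
            }
        }
        for (long id : imported) {
            lastValue = Math.max(lastValue, id);
        }
        return imported;
    }

    public long getLastValue() {
        return lastValue;
    }

    public static void main(String[] args) {
        SavedJobSketch job = new SavedJobSketch(11); // created with --last-value 11
        List<Long> table = new ArrayList<>();
        for (long id = 1; id <= 15; id++) {
            table.add(id);
        }
        System.out.println(job.exec(table)); // first run
        table.add(16L);
        table.add(17L);
        System.out.println(job.exec(table)); // second run, no new --last-value supplied
    }
}
```

Assuming the table holds ids 1 to 15 at the first run, that run picks up ids 12 to 15. After two more rows are added, the second run picks up only 16 and 17, without anyone passing a new last value.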

5. Scheduled jobs

- Three options

1. A workflow framework such as Oozie or Azkaban ***

2. Write your own timer (the Thread class, the java.util.TimerTask class, or the Quartz scheduling framework with cron expressions)

3. The crontab that ships with CentOS ****

- Implementing the third option

- Create the scheduled-task script sqoop_incremental.sh in /usr/local/bin

cd /usr/local/bin

vim sqoop_incremental.sh

#!/bin/bash

/usr/local/sqoop147/bin/sqoop job --exec yc-job3>>/usr/local/sqoop147/myjob.out 2>&1 &

# Notes

# /usr/local/sqoop147/bin/sqoop: full path to the sqoop command, so that it is found even if it is not on cron's PATH

# /usr/local/sqoop147/myjob.out: the command's output is appended to myjob.out

# 2>&1: send error output (stderr) to the same file as standard output

# &: run the process in the background

- Create the crontab entry

crontab -e

# Run once every 5 minutes

*/5 * * * * /usr/bin/bash /usr/local/bin/sqoop_incremental.sh

# Format: minute hour day-of-month month day-of-week command

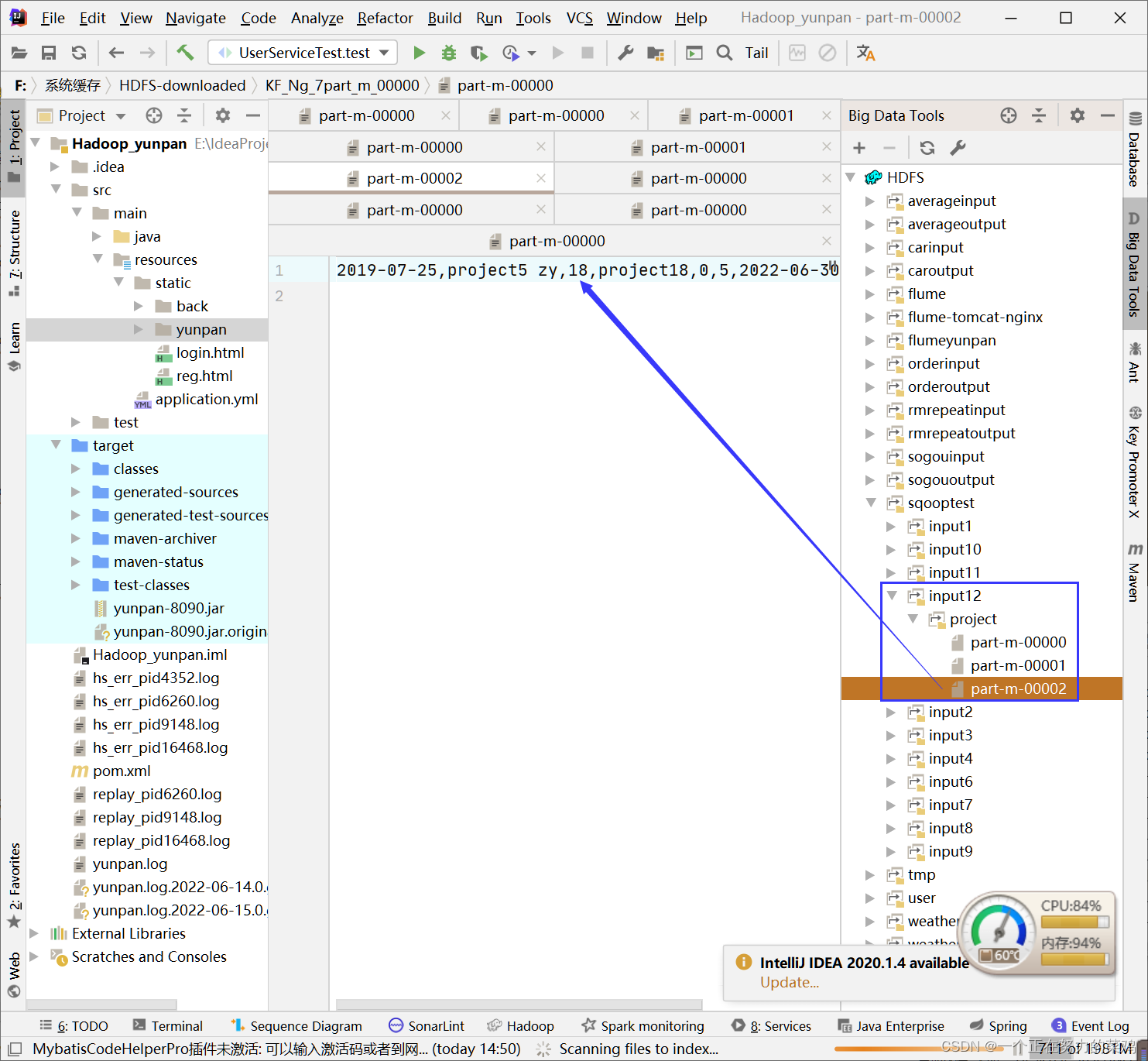
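To see how the five time fields of a crontab line are read, the sketch below splits an entry into its fields and expands the minute field. It is a deliberately small illustration, not a cron implementation: CronMinuteSketch and minutes are hypothetical names, only the forms `*`, `*/n`, and a single number are handled, and `some-command` is a placeholder.

```java
import java.util.ArrayList;
import java.util.List;

public class CronMinuteSketch {

    // Expand a minute field into the minutes of the hour on which it fires.
    // Supported forms in this sketch: "*", "*/n", or a single number.
    public static List<Integer> minutes(String field) {
        List<Integer> result = new ArrayList<>();
        if (field.equals("*")) {
            for (int m = 0; m < 60; m++) {
                result.add(m);
            }
        } else if (field.startsWith("*/")) {
            int step = Integer.parseInt(field.substring(2));
            if (step <= 0) {
                throw new IllegalArgumentException("step must be positive: " + field);
            }
            for (int m = 0; m < 60; m += step) {
                result.add(m);
            }
        } else {
            result.add(Integer.parseInt(field));
        }
        return result;
    }

    public static void main(String[] args) {
        String entry = "*/5 * * * * some-command";
        // Five time fields followed by the command, which may itself contain spaces
        String[] parts = entry.split("\\s+", 6);
        System.out.println("minute=" + parts[0] + " hour=" + parts[1]
                + " day-of-month=" + parts[2] + " month=" + parts[3]
                + " day-of-week=" + parts[4] + " command=" + parts[5]);
        System.out.println(minutes(parts[0]));
    }
}
```

For `*/5` the expansion is minutes 0, 5, 10, and so on up to 55, so the entry fires twelve times an hour.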

- Insert a new row

insert into project( name,type,description,create_at,status)

values( 'project18',5,'project5 zy','2019-07-25',0);

- Wait about five minutes

- View the log file: /usr/local/sqoop147/myjob.out

- View the execution results

边栏推荐

猜你喜欢

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

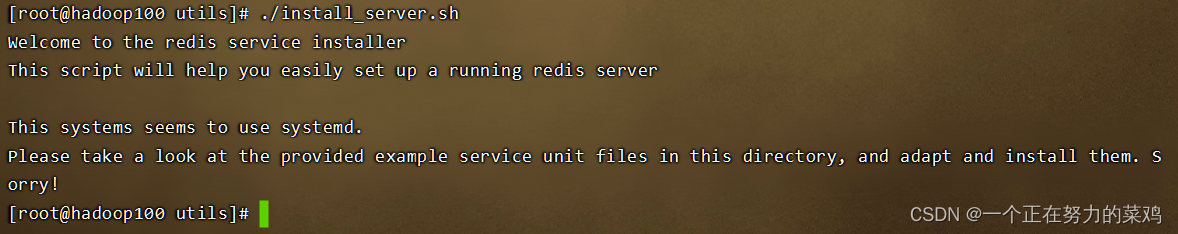

Linux安装Redis

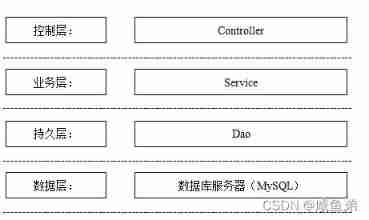

Design and implementation of community hospital information system

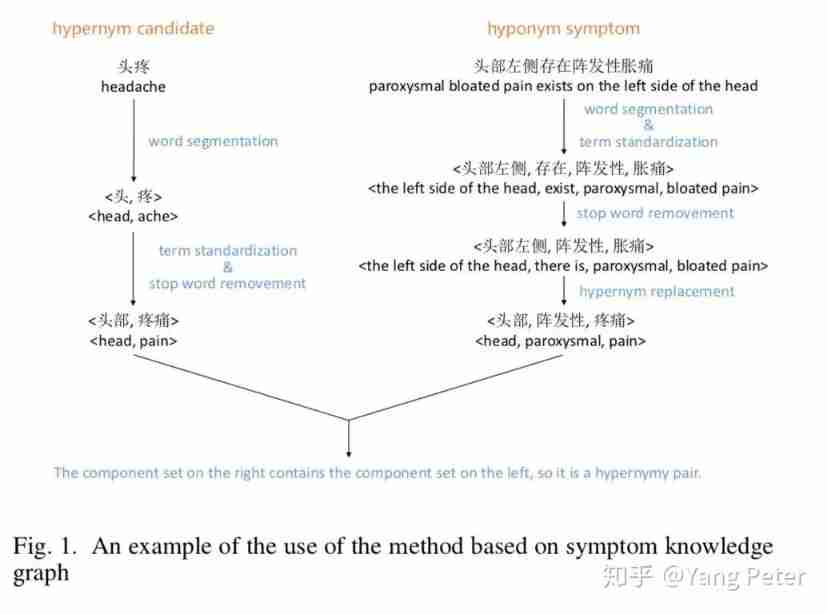

Exploration of short text analysis in the field of medical and health (I)

Summary and practice of knowledge map construction technology

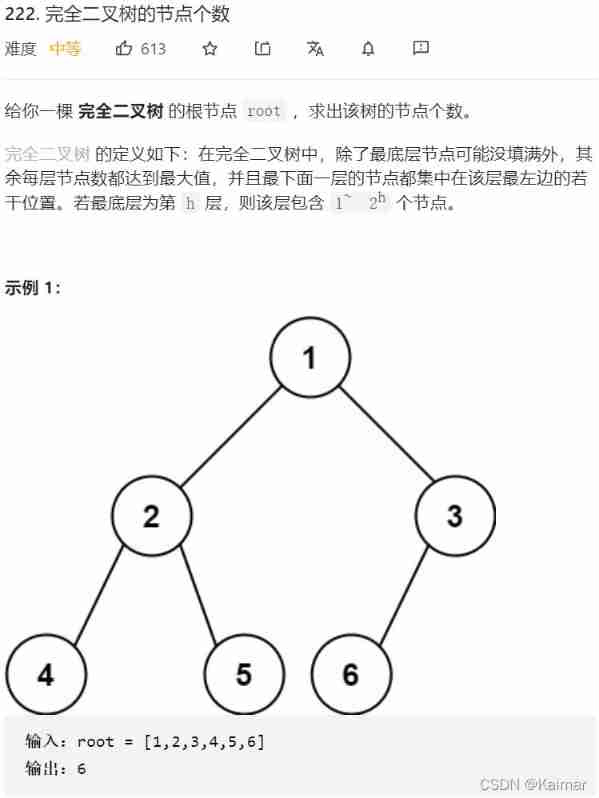

【LeetCode】222. The number of nodes of a complete binary tree (2 mistakes)

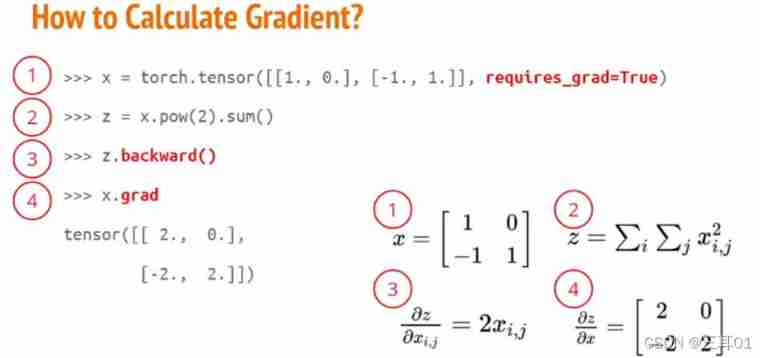

2021 Li Hongyi machine learning (2): pytorch

Cut! 39 year old Ali P9, saved 150million

![ASP. Net core 6 framework unveiling example demonstration [01]: initial programming experience](/img/22/08617736a8b943bc9c254aac60c8cb.jpg)

ASP. Net core 6 framework unveiling example demonstration [01]: initial programming experience

随机推荐

Open source SPL optimized report application coping endlessly

Design and practice of kubernetes cluster and application monitoring scheme

单项框 复选框

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

openresty ngx_ Lua execution phase

Android advanced interview question record in 2022

[Yu Yue education] National Open University autumn 2018 8109-22t (1) monetary and banking reference questions

Exploration of short text analysis in the field of medical and health (I)

Write a thread pool by hand, and take you to learn the implementation principle of ThreadPoolExecutor thread pool

D3js notes

【LeetCode】106. Construct binary tree from middle order and post order traversal sequence (wrong question 2)

PHP cli getting input from user and then dumping into variable possible?

Spark SQL learning bullet 2

Eight days of learning C language - while loop (embedded) (single chip microcomputer)

Hmi-30- [motion mode] the module on the right side of the instrument starts to write

Comparison of advantages and disadvantages between platform entry and independent deployment

Scientific research: are women better than men?

数据库和充值都没有了

Elk log analysis system

返回二叉树中两个节点的最低公共祖先