当前位置:网站首页>Neon Optimization: an optimization case of log10 function

Neon Optimization: an optimization case of log10 function

2022-07-07 01:14:00 【To know】

NEON Optimize :log10 Optimization case of function

NEON Optimization series :

- NEON Optimize 1: Software performance optimization 、 How to reduce power consumption ?link

- NEON Optimize 2:ARM Summary of optimized high frequency instructions , link

- NEON Optimize 3: Matrix transpose instruction optimization case ,link

- NEON Optimize 4:floor/ceil Optimization case of function ,link

- NEON Optimize 5:log10 Optimization case of function ,link

- NEON Optimize 6: About cross access and reverse cross access ,link

- NEON Optimize 7: Performance optimization experience summary ,link

- NEON Optimize 8: Performance optimization FAQs QA,link

background

In the calculation of audio samples , It is often necessary to convert the amplitude value to the logarithmic domain , It involves a lot of log operation , Here is a summary of improvement log Some optimization methods of operation speed .

log10 Function description

- Input :x>0, Floating point numbers

- Output :log10(x), Floating point numbers

- Peak error (Peak Error):~0.000465339053%

- Root mean square error (RMS Error):~0.000008%

An optimization method

Yes log10 The operation is carried out NEON The basic basis of optimization is :log10 The operation can be expanded into polynomials by Taylor approximation , And then according to IEEE Floating point storage format , Perform table lookup operation on relevant data , Into multiplication and addition , Speed up the calculation .

The polynomial coefficients are expanded as follows :

const float LOG10_TABLE[8] = {

// 8 length

-0.99697286229624F, // a0

-1.07301643912502F, // a4

-2.46980061535534F, // a2

-0.07176870463131F, // a6

2.247870219989470F, // a1

0.366547581117400F, // a5

1.991005185100089F, // a3

0.006135635201050F, // a7

};

Optimized version 1

The optimization method is from logarithmic operation to look-up table and polynomial fitting , The calculation process is analyzed as follows :

- step1

- A = a4 * x + a0

- B = a5 * x + a1

- C = a6 * x + a2

- D = a7 * x + a3

- step2

- A := A + B * x^2

- C := C + D * x^2

- step3

- F = A + C * x^4 = a0 + a4 * x + a2 * x^2 + a6 * x^3 + a1 * x^4 + a5 * x^5 + a3 * x^6 + a7 * x^7

- Common operator :

x, x^2, x^4

From principle to code implementation , The code is as follows :

float Log10FloatNeonOpt(float fVarin)

{

float fTmpA, fTmpB, fTmpC, fTmpD, fTmpxx;

int32_t iTmpM;

union {

float fTmp;

int32_t iTmp;

} aVarTmp1;

// extract exponent

aVarTmp1.fTmp = fVarin;

iTmpM = (int32_t)((uint32_t)aVarTmp1.iTmp >> 23); // ride 2^-23 narrow , take 32-9=23 The height of 9 position

iTmpM = iTmpM - 127; // reduce 2^8-1=127, Take the inverse

aVarTmp1.iTmp = aVarTmp1.iTmp - (int32_t)((uint32_t)iTmpM << 23); // Subtract multiply 2^23 Restored value , Take out the index

// Taylor Polynomial (Estrins)

fTmpxx = aVarTmp1.fTmp * aVarTmp1.fTmp;

fTmpA = (LOG10_TABLE[4] * aVarTmp1.fTmp) + (LOG10_TABLE[0]); // 4: a1 0: a0

fTmpB = (LOG10_TABLE[6] * aVarTmp1.fTmp) + (LOG10_TABLE[2]); // 6: a3 2: a2

fTmpC = (LOG10_TABLE[5] * aVarTmp1.fTmp) + (LOG10_TABLE[1]); // 5: a5 1: a4

fTmpD = (LOG10_TABLE[7] * aVarTmp1.fTmp) + (LOG10_TABLE[3]); // 7: a7 3: a6

fTmpA = fTmpA + fTmpB * fTmpxx;

fTmpC = fTmpC + fTmpD * fTmpxx;

fTmpxx = fTmpxx * fTmpxx;

aVarTmp1.fTmp = fTmpA + fTmpC * fTmpxx;

// add exponent

aVarTmp1.fTmp = aVarTmp1.fTmp + ((float)iTmpM) * (0.3010299957f);

return aVarTmp1.fTmp;

}

Optimized version 2

The optimization method is to version 1 The internal multiplication and addition operations in are done in parallel .

float Log10FloatNeonOpt(float fVarin)

{

float fTmpxx;

int32_t iTmpM;

union {

float fTmp;

int32_t iTmp;

} aVarTmp1;

// extract exponent

aVarTmp1.fTmp = fVarin;

iTmpM = (int32_t)((uint32_t)aVarTmp1.iTmp >> 23); // ride 2^-23 narrow , take 32-9=23 The height of 9 position

iTmpM = iTmpM - 127; // reduce 2^8-1=127, Take the inverse

aVarTmp1.iTmp = aVarTmp1.iTmp - (int32_t)((uint32_t)iTmpM << 23); // Subtract multiply 2^23 Restored value , Take out the index

// Taylor Polynomial (Estrins)

fTmpxx = aVarTmp1.fTmp * aVarTmp1.fTmp;

/* origin code fTmpA = (LOG10_TABLE[4] * aVarTmp1.fTmp) + (LOG10_TABLE[0]); // 4: a1 0: a0 fTmpB = (LOG10_TABLE[6] * aVarTmp1.fTmp) + (LOG10_TABLE[2]); // 6: a3 2: a2 fTmpC = (LOG10_TABLE[5] * aVarTmp1.fTmp) + (LOG10_TABLE[1]); // 5: a5 1: a4 fTmpD = (LOG10_TABLE[7] * aVarTmp1.fTmp) + (LOG10_TABLE[3]); // 7: a7 3: a6 fTmpA = fTmpA + fTmpB * fTmpxx; fTmpC = fTmpC + fTmpD * fTmpxx; */

// optcode: A C B D

float32x4_t vf32x4fTmpACBD, vf32x4fTmp1, vf32x4fTmp2;

vf32x4fTmp1 = vld1q_f32(&LOG10_TABLE[0]);

vf32x4fTmp2 = vld1q_f32(&LOG10_TABLE[4]); // 4 is for sth

vf32x4fTmpACBD = vmlaq_n_f32(vf32x4fTmp1, vf32x4fTmp2, aVarTmp1.fTmp); // fTmp1 + fTmp2 * fTmp

float32x2_t vf32x2fTmpAC, vf32x2fTmpBD;

vf32x2fTmpAC = vget_low_f32(vf32x4fTmpACBD);

vf32x2fTmpBD = vget_high_f32(vf32x4fTmpACBD);

vf32x2fTmpAC = vmla_n_f32(vf32x2fTmpAC, vf32x2fTmpBD, fTmpxx); // fTmpAC + fTmpBD * fTmpxx

float tmpAC[2]; // 2 is for sth

vst1_f32(tmpAC, vf32x2fTmpAC);

fTmpxx = fTmpxx * fTmpxx;

aVarTmp1.fTmp = tmpAC[0] + tmpAC[1] * fTmpxx;

// add exponent

aVarTmp1.fTmp = aVarTmp1.fTmp + ((float)iTmpM) * (0.3010299957f);

return aVarTmp1.fTmp;

}

Optimized version 3

For the original function Log10FloatNeonOpt() Do interface extension , Make it possible to input 4 individual x value , Calculation 4 individual log10 The result of the operation .

In the code , Assume that the input / All outputs are NEON Read and write in register , Are all 4 Floating point register values .

#define PARALLEL_NUM (4)

#define EXP_SHIFT_NUM (23)

float32x4_t Log10FloatX4NeonOpt(float32x4_t vf32x4Varin)

{

int32x4_t vs32x4VarTmpI = vreinterpretq_s32_f32(vf32x4Varin); // aVarTmp1.iTmp

uint32x4_t vu32x4VarTmpU = vreinterpretq_u32_s32(vs32x4VarTmpI); // (uint32_t)aVarTmp1.iTmp

vu32x4VarTmpU = vshrq_n_u32(vu32x4VarTmpU, EXP_SHIFT_NUM); // (uint32_t)aVarTmp1.iTmp >> 23

int32x4_t vs32x4TmpM = vreinterpretq_s32_u32(vu32x4VarTmpU); // (int32_t)((uint32_t)aVarTmp1.iTmp >> 23)

int32x4_t vs32x4RevsCode = vdupq_n_s32(-127); // -127 is shift

vs32x4TmpM = vaddq_s32(vs32x4TmpM, vs32x4RevsCode); // iTmpM = iTmpM - 127, used later

vu32x4VarTmpU = vreinterpretq_u32_s32(vs32x4TmpM);

vu32x4VarTmpU = vshlq_n_u32(vu32x4VarTmpU, EXP_SHIFT_NUM); // (uint32_t)iTmpM << 23

int32x4_t vs32x4SubVal = vreinterpretq_s32_u32(vu32x4VarTmpU);

vs32x4VarTmpI = vsubq_s32(vs32x4VarTmpI, vs32x4SubVal); // aVarTmp1.iTmp-(int32_t)((uint32_t)iTmpM<<23)

float32x4_t vf32x4TVarTmpF = vreinterpretq_f32_s32(vs32x4VarTmpI);

float32x4_t vf32x4TmpXX = vmulq_f32(vf32x4TVarTmpF, vf32x4TVarTmpF);

float32x4_t vf32x4TmpXXXX = vmulq_f32(vf32x4TmpXX, vf32x4TmpXX);

// input: vf32x4TVarTmpF vs32x4TmpM vf32x4TmpXX vf32x4TmpXXXX

float32x4x4_t vf32x4x4fTmpACBD;

float32x4_t vf32x4fTmp1, vf32x4fTmp2;

float fTmp[PARALLEL_NUM];

vst1q_f32(fTmp, vf32x4TVarTmpF);

vf32x4fTmp1 = vld1q_f32(&LOG10_TABLE[0]);

vf32x4fTmp2 = vld1q_f32(&LOG10_TABLE[4]); // 4 is for sth

vf32x4x4fTmpACBD.val[0] = vmlaq_n_f32(vf32x4fTmp1, vf32x4fTmp2, fTmp[0]); // fTmp1 + fTmp2 * fTmp

vf32x4x4fTmpACBD.val[1] = vmlaq_n_f32(vf32x4fTmp1, vf32x4fTmp2, fTmp[1]);

vf32x4x4fTmpACBD.val[2] = vmlaq_n_f32(vf32x4fTmp1, vf32x4fTmp2, fTmp[2]); // 2 is ACBD[2]

vf32x4x4fTmpACBD.val[3] = vmlaq_n_f32(vf32x4fTmp1, vf32x4fTmp2, fTmp[3]); // 3 is ACBD[3]

// transpose

// a1 b1 c1 d1 => a1 a2 a3 a4

// a2 b2 c2 d2 => b1 b2 b3 b4

// ...

// a4 b4 c4 d4 => d1 d2 d3 d4

float32x4x2_t vf32x4x2fTmpACBD01 = vtrnq_f32(vf32x4x4fTmpACBD.val[0], vf32x4x4fTmpACBD.val[1]);

float32x4x2_t vf32x4x2fTmpACBD23 = vtrnq_f32(vf32x4x4fTmpACBD.val[2], vf32x4x4fTmpACBD.val[3]); // 2, 3 is row b/d

float32x4x2_t vf32x4x2fTmpACBD02 = vuzpq_f32(vf32x4x2fTmpACBD01.val[0], vf32x4x2fTmpACBD23.val[0]); // row02

float32x4x2_t vf32x4x2fTmpACBD13 = vuzpq_f32(vf32x4x2fTmpACBD01.val[1], vf32x4x2fTmpACBD23.val[1]); // row13

vf32x4x2fTmpACBD02 = vtrnq_f32(vf32x4x2fTmpACBD02.val[0], vf32x4x2fTmpACBD02.val[1]);

vf32x4x2fTmpACBD13 = vtrnq_f32(vf32x4x2fTmpACBD13.val[0], vf32x4x2fTmpACBD13.val[1]);

vf32x4x4fTmpACBD.val[0] = vf32x4x2fTmpACBD02.val[0]; // a0 a1 a2 a3

vf32x4x4fTmpACBD.val[2] = vf32x4x2fTmpACBD02.val[1]; // 2 is row c

vf32x4x4fTmpACBD.val[1] = vf32x4x2fTmpACBD13.val[0];

vf32x4x4fTmpACBD.val[3] = vf32x4x2fTmpACBD13.val[1]; // d0 d1 d2 d3, row d

// A = A + B * xx, C = C + D * xx

float32x4_t vf32x4fTmpA = vmlaq_f32(vf32x4x4fTmpACBD.val[0], vf32x4x4fTmpACBD.val[2], vf32x4TmpXX); // 2 row b

float32x4_t vf32x4fTmpC = vmlaq_f32(vf32x4x4fTmpACBD.val[1], vf32x4x4fTmpACBD.val[3], vf32x4TmpXX); // 3 row d

vf32x4TVarTmpF = vmlaq_f32(vf32x4fTmpA, vf32x4fTmpC, vf32x4TmpXXXX);

// add exponent

float32x4_t vf32x4fTmpM = vcvtq_f32_s32(vs32x4TmpM);

vf32x4TVarTmpF = vmlaq_n_f32(vf32x4TVarTmpF, vf32x4fTmpM, (0.3010299957f));

return vf32x4TVarTmpF;

}

Summary

This article takes log10 Functional NEON Take optimization as an example , Three different optimization methods are summarized , It can be combined according to specific scenarios . among , Register 4x4 Transpose operation of matrix , Used blog 《NEON Optimize 3: Matrix transpose instruction optimization case 》 Methods mentioned .

It is worth noting that ,log10/log2 There is no difference in computing power , Similar principle , Just look up the different coefficients in the table , if necessary log2 Result , It can be converted with the bottom changing formula .

If you want to draw inferences from one instance , Similarly , This method can also be used to optimize powf Calculation of function .

边栏推荐

- C# 计算农历日期方法 2022

- 【JVM调优实战100例】04——方法区调优实战(上)

- Dynamic planning idea "from getting started to giving up"

- Realize incremental data synchronization between MySQL and ES

- [Niuke classic question 01] bit operation

- 再聊聊我常用的15个数据源网站

- Installation of torch and torch vision in pytorch

- JTAG debugging experience of arm bare board debugging

- STM32开发资料链接分享

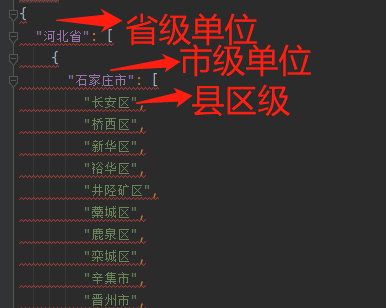

- 批量获取中国所有行政区域经边界纬度坐标(到县区级别)

猜你喜欢

![[case sharing] basic function configuration of network loop detection](/img/d8/a367c26b51d9dbaf53bf4fe2a13917.png)

[case sharing] basic function configuration of network loop detection

![[hfctf2020]babyupload session parsing engine](/img/db/6003129bc16f943ad9868561a2d5dc.png)

[hfctf2020]babyupload session parsing engine

Build your own website (17)

批量获取中国所有行政区域经边界纬度坐标(到县区级别)

![[Niuke] [noip2015] jumping stone](/img/9f/b48f3c504e511e79935a481b15045e.png)

[Niuke] [noip2015] jumping stone

迈动互联中标北京人寿保险,助推客户提升品牌价值

【案例分享】网络环路检测基本功能配置

![[HFCTF2020]BabyUpload session解析引擎](/img/db/6003129bc16f943ad9868561a2d5dc.png)

[HFCTF2020]BabyUpload session解析引擎

![[牛客] B-完全平方数](/img/bd/0812b4fb1c4f6217ad5a0f3f3b8d5e.png)

[牛客] B-完全平方数

Analysis of mutex principle in golang

随机推荐

第五篇,STM32系统定时器和通用定时器编程

[Niuke] b-complete square

What are the differences between Oracle Linux and CentOS?

【js】获取当前时间的前后n天或前后n个月(时分秒年月日都可)

Build your own website (17)

Taro中添加小程序 “lazyCodeLoading“: “requiredComponents“,

Telerik UI 2022 R2 SP1 Retail-Not Crack

JTAG principle of arm bare board debugging

省市区三级坐标边界数据csv转JSON

Let's see through the network i/o model from beginning to end

Informatics Orsay Ibn YBT 1172: find the factorial of n within 10000 | 1.6 14: find the factorial of n within 10000

UI control telerik UI for WinForms new theme - vs2022 heuristic theme

实现mysql与ES的增量数据同步

pyflink的安装和测试

线段树(SegmentTree)

NEON优化:关于交叉存取与反向交叉存取

C# 计算农历日期方法 2022

ZABBIX 5.0: automatically monitor Alibaba cloud RDS through LLD

A brief history of deep learning (I)

pyflink的安装和测试